Resource-Constrained Edge AI with Early Exit Prediction

2022-06-29

Rongkang Dong,Yuyi Mao,Jun Zhang

Abstract—By leveraging the data sample diversity,the early-exit network recently emerges as a prominent neural network architecture to accelerate the deep learning inference process.However,intermediate classifiers of the early exits introduce additional computation overhead,which is unfavorable for resource-constrained edge artificial intelligence (AI).In this paper,we propose an early exit prediction mechanism to reduce the on-device computation overhead in a device-edge co-inference system supported by early-exit networks.Specifically,we design a low-complexity module,namely the exit predictor,to guide some distinctly“hard”samples to bypass the computation of the early exits.Besides,considering the varying communication bandwidth,we extend the early exit prediction mechanism for latency-aware edge inference,which adapts the prediction thresholds of the exit predictor and the confidence thresholds of the early-exit network via a few simple regression models.Extensive experiment results demonstrate the effectiveness of the exit predictor in achieving a better tradeoff between accuracy and on-device computation overhead for early-exit networks.Besides,compared with the baseline methods,the proposed method for latency-aware edge inference attains higher inference accuracy under different bandwidth conditions.

Keywords—artificial intelligence (AI), edge AI, device-edge cooperative inference, early-exit network, early exit prediction

I.INTRODUCTION

With the great demand for Internet of things (IoT) applications to embrace the fifth generation(5G)era,the number of connected devices(e.g.,smartphones,surveillance cameras,and unmanned vehicles)has seen a dramatic increase over the past decade,which results in a vast volume of data being generated at the wireless networks[1].Such massive data along with the recent success of deep learning has hastened numerous intelligent mobile applications such as vehicle detection and virtual reality[2,3].However,in order to advance the state-of-the-art accuracy performance,a deep neural network (DNN) typically has tens or even hundreds of network layers,leading to significant computational cost and memory consumption.As a result,it is hard to deploy the whole DNN on resource-constrained devices.Although the high transmission rate offered by the next generation wireless networks allows mobile devices to offload inference tasks to a powerful cloud server,the long communication latency caused by data transmission in the public network prohibits real-time responsiveness[4].

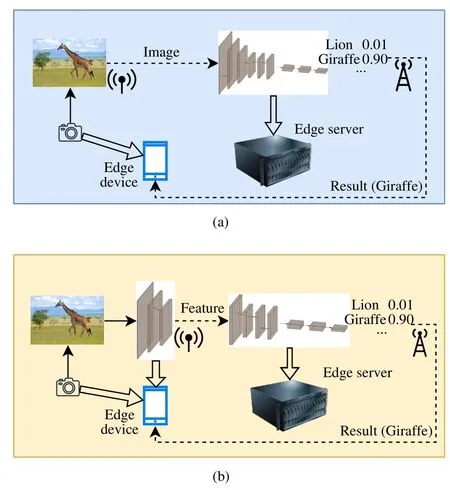

Edge inference,which deploys mini-servers for computation at the edge of wireless networks (a.k.a.edge servers),is a complementary solution of cloud inference to provide low-latency artificial intelligence (AI) services[4,5].Two typical edge inference modes are depicted in Fig.1.In particular,edge-only inference refers to the scenario that an edge device (e.g.,a smartphone) transfers the raw inference input data to an edge server for remote inference and retrieves the inference results thereafter (Fig.1(a)).Due to the limited wireless resources and the potentially large size of the inference input,the transmission latency may be too high,especially for applications with tight latency budgets (e.g.,autonomous driving[6]).Device-edge co-inference is a more agile paradigm that can alleviate this problem.In this edge inference mode,an edge device first extracts a compact intermediate feature by executing the front layers of a backbone DNN,which is then offloaded to the edge server for further processing of the remaining layers[7](Fig.1(b)).By making the most use of the computational resources at both the edge device and edge server,device-edge co-inference effectively trims the communication overhead,thus becoming an ideal solution for resource-constrained edge inference[8-11].

Fig.1 Two typical edge inference modes:(a) Edge-only inference;(b)device-edge co-inference

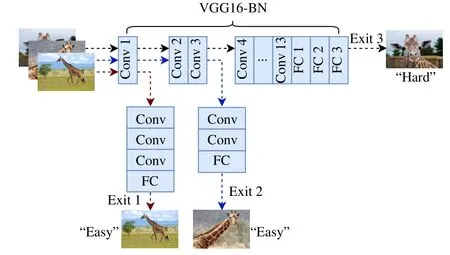

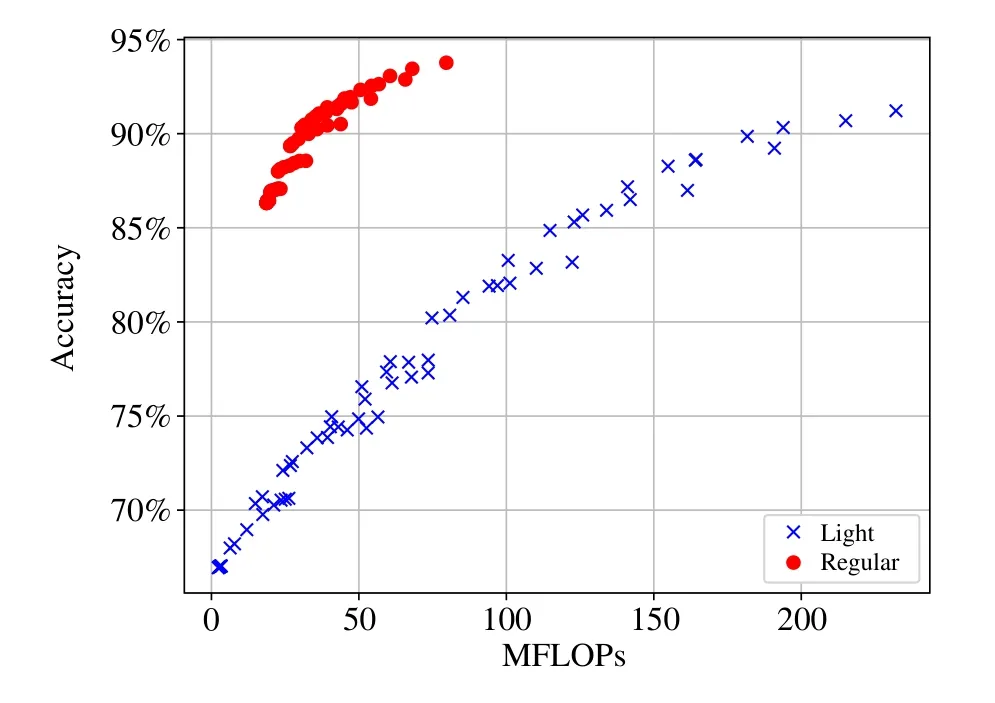

To enable more efficient device-edge co-inference, the early-exit (EE) network, which takes advantage of the data sample diversity, has gained increasing interest[8,12-14]. Specifically, an early-exit network can be derived by inserting a few intermediate classifiers along the depth of a backbone network, e.g., VGG16-BN[15] as shown in Fig.2. As such, the “easy” data samples can be inferred by the intermediate classifiers with sufficient confidence, leaving the “hard” data samples to be processed by all the layers in the main branch. It was shown in Ref.[12] that the early exit mechanism can achieve a 5.4×/1.5×/1.9× CPU speedup for LeNet/AlexNet/ResNet. Early-exit networks can also reduce the communication overhead in device-edge co-inference systems because the “easy” samples can be inferred from the early exits deployed on-device without being processed by the server-based network. However, depending on the backbone architecture and the insertion position, an intermediate classifier normally contains at least one convolutional layer and one fully-connected (FC) layer[12,14,16], which introduces substantial computation overhead. Although the computation complexity of early-exit networks can be reduced by using light intermediate classifiers (such as those in Ref.[17]), this comes at the price of severe accuracy degradation, especially for data samples whose inference processes are terminated early. To illustrate this issue, we show the relationship between the inference accuracy and computation complexity of a VGG16-BN-based early-exit network in Fig.3. The result shows that the early-exit network with regular-size intermediate classifiers generally requires a lower computation cost for a given accuracy requirement, compared to that with light intermediate classifiers (except with a very low accuracy requirement, i.e., <70%). This is because the light intermediate classifiers fail to terminate the inference process with enough confidence and thus more samples have to be processed by all the backbone layers in order to attain high accuracy.

Fig.2 An early-exit network with the VGG16-BN[15] as backbone.For conciseness,some convolutional layers shown in the figure may also contain a batch normalization(BN)layer,a nonlinear layer,and a pooling layer

Fig.3 The relationship between the inference accuracy and computation complexity(measured by million floating-point operations(MFLOPs))of the early-exit network shown in Fig.2 with regular-size and light intermediate classifiers on the CIFAR10 dataset.The regular-size intermediate classifiers follow the design in Fig.2 and the light intermediate classifiers consist of a fully-connected layer after the pooling operation for each exit[17].The inference accuracy and computation complexity tradeoff is adjusted by tuning the confidence thresholds of the early-exit network,as will be elaborated in section II,i.e.,λ1,λ2 ∈[0.2,0.9]with a step size of 0.1

To reduce the computation overhead brought by the early exits,in this paper,we advocate developing a low-complexity early exit prediction mechanism,named exit predictor (EP),for resource-constrained edge AI.Our basic idea is that notable on-device computation can be saved by skipping appropriate early exits for some distinctly“hard”data samples.

A.Related Works and Motivations

Collaborative inference, as shown in Fig.1(b), is an effective inference mode for resource-constrained edge AI[7]. In particular, NeuroSurgeon[10] first proposed to partition a DNN model between an edge device and a cloud server, and it attempted to find an optimal model partition point considering both the latency and energy performance. To reduce the storage and accelerate the co-inference process, mixed-precision DNN models are adopted for collaborative inference in Ref.[18]. However, DNN partitioning may result in increased communication overhead because of the in-layer data amplification phenomenon[19], and therefore intermediate feature compression techniques were also exploited to achieve communication-efficient device-edge co-inference[19-21].

The early-exit network architecture can reduce both the computation and transmission cost of device-edge co-inference[12,22], since it avoids having all inference data processed by every backbone layer. Early-exit networks can also be used in device-edge-cloud collaborative inference systems[8], where the inference model is divided into three partitions over the distributed computing hierarchy. Besides, Li et al. introduced the Edgent framework in Ref.[14], which optimizes the latency of device-edge co-inference under static and dynamic bandwidth conditions through adaptive DNN partitioning and right-sizing. Similarly, synergistic progressive inference of neural network (SPINN)[23] optimizes the early-exit policy and model partition point of an early-exit network for cooperative edge inference under dynamic network conditions. Nevertheless, while many prior investigations on early-exit networks focused on improving the model accuracy[24,25] and the efficiency when deployed in edge computing environments[14,23], few studies tackled the additional computational overhead brought by intermediate classifiers[16]. Although the significant computation overhead of intermediate classifiers has recently received some attention, existing solutions either use light intermediate classifiers with severe performance degradation[17], or reuse the predictions of the preceding intermediate classifiers for later ones to gain a better accuracy-time tradeoff[26]. However, the “hard” samples may still need to be processed by all the intermediate classifiers.

B.Contributions

In this paper, we consider a device-edge co-inference system supported by early-exit networks and propose a low-complexity early exit prediction mechanism to improve the on-device computation efficiency. Our major contributions are summarized as follows:

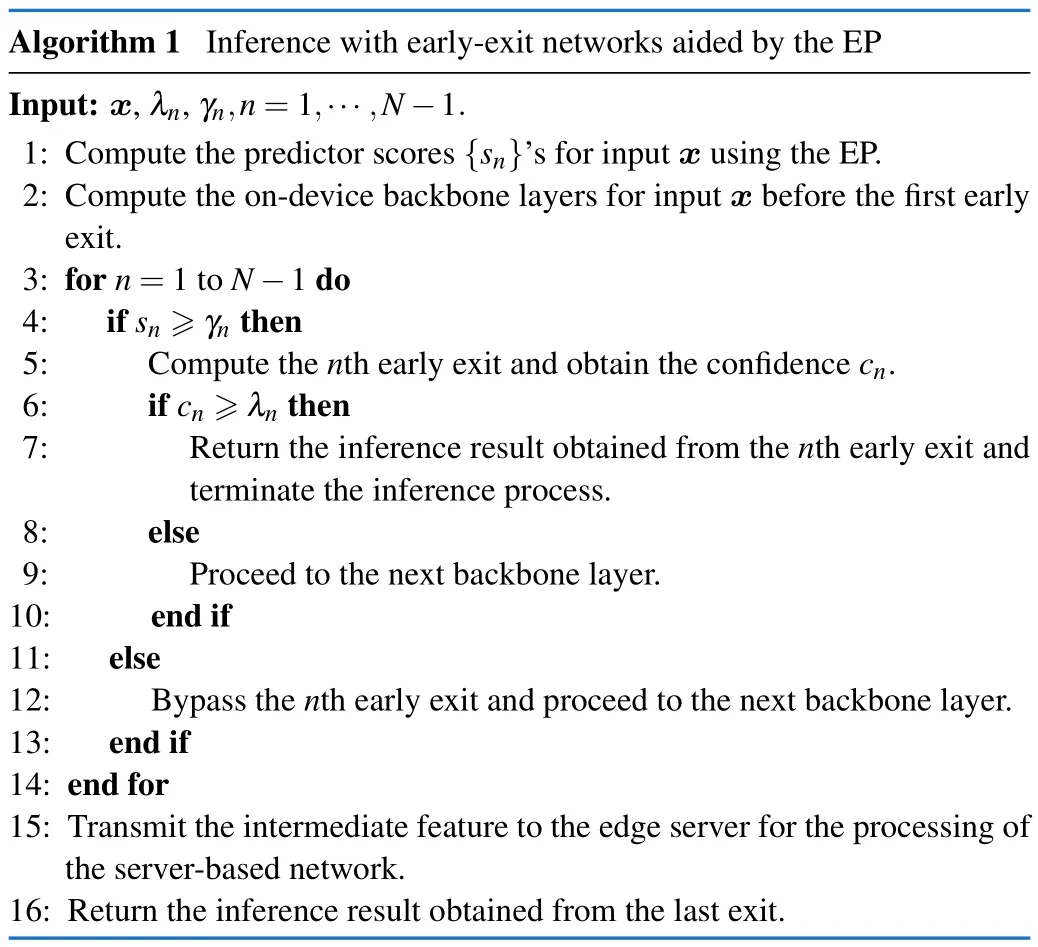

·We design an innovative early exit prediction mechanism, named EP, as an effective means to reduce the on-device computation overhead in device-edge co-inference systems supported by early-exit networks. To keep the computation cost of the EP low, depthwise separable convolution is applied in its network architecture, while the squeeze-and-excitation and channel concatenation operations are adopted to improve the performance.

·We extend the EP for latency-aware edge inference under different communication bandwidths. To avoid training a separate EP for each bandwidth condition, we propose to retain just one EP, and adapt its hyper-parameters, namely the prediction thresholds, together with the confidence thresholds of the early-exit network via a few simple regression models.

·We conduct an extensive performance evaluation for the proposed methods on image classification tasks. The experiment results show that the EP helps reduce the on-device computation overhead remarkably with little or no accuracy loss. For latency-aware edge inference, the proposed method achieves better accuracy compared with other baselines under different bandwidth conditions.

C.Organization

The rest of this paper is organized as follows.In section II,we introduce a device-edge co-inference system supported by early-exit networks and define the design problem for on-device computation overhead reduction.We develop an early exit prediction mechanism in section III and extend our investigation to latency-aware edge inference with varying communication bandwidth in section IV.Experimental results are presented in section V and conclusions are drawn in section VI.

II.SYSTEM MODEL AND DESIGN PROBLEM

In this section, we first introduce the device-edge co-inference system supported by early-exit networks. Then, we define the design problem to reduce the on-device computation overhead of the considered edge inference system.

A.Device-edge Co-inference with Early-exit Networks

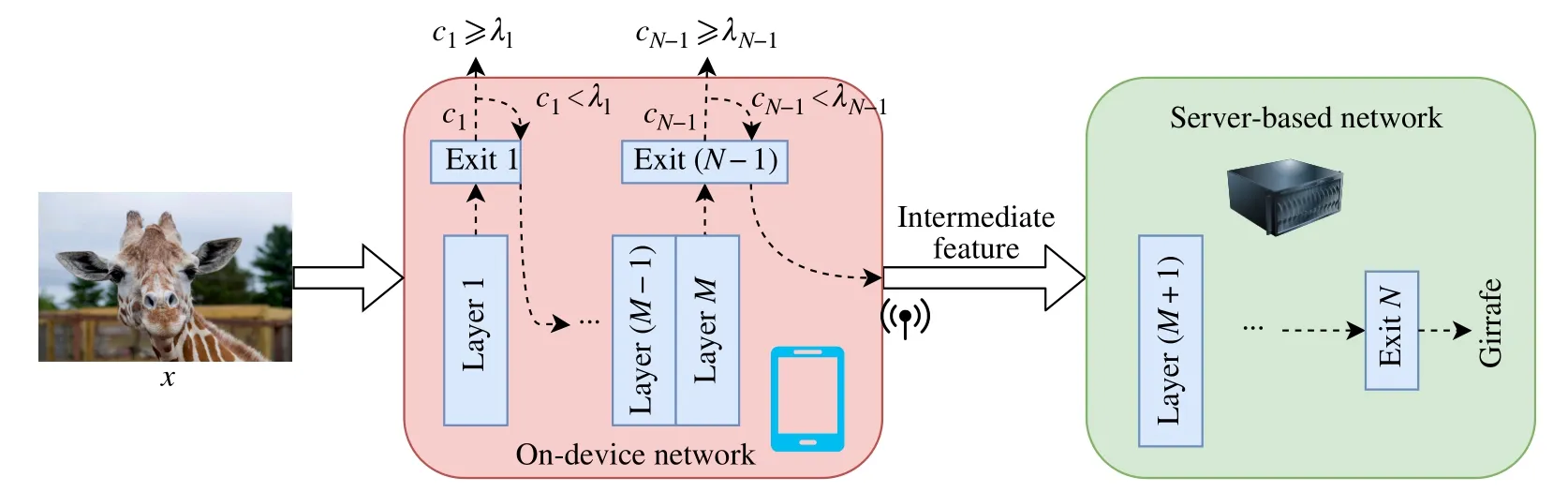

We consider a device-edge co-inference system empowered by an early-exit network as shown in Fig.4. The early-exit network is derived from a backbone network by inserting $N-1$ early exits (i.e., $N$ exits in total), and it is partitioned between an edge device and the edge server as the on-device and server-based networks, respectively. Each early exit contains an intermediate classifier, which may consist of multiple network layers, followed by a fully-connected layer and a soft-max layer[12]. For convenience, the term “intermediate classifier” is used interchangeably with “early exit” in the remainder of this paper. We assume that the edge server is well-resourced, i.e., the computation latency at the edge server is negligible compared with those of on-device computation and intermediate feature transmission, and thus all the $N-1$ early exits are deployed on the edge device. Accordingly, the model partition point is chosen as the insertion position of the last early exit.

Fig.4 A device-edge co-inference system empowered by an early-exit network with N-1 early exits

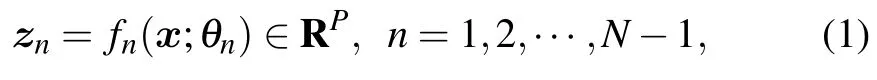

Each inference data sample (e.g., an image) is processed sequentially by the $N-1$ early exits until an inference result with sufficient confidence is obtained. It is also possible that none of the early exits is able to obtain a reliable inference result, and in this case, the intermediate feature computed by the on-device network is forwarded to the edge server and the inference result is then determined by the last ($N$th) exit. We denote the feedforward operation from the input layer to the $n$th exit as $f_n(\cdot)$, and the output of the $n$th intermediate classifier before the soft-max layer can thus be expressed as follows:

$$z_n = f_n(x;\theta_n) \in \mathbb{R}^{P}, \qquad (1)$$

where $x$ is the input data, $\theta_n$ encapsulates the network parameters from the input layer to the layer before the soft-max operation in the $n$th exit, and $P$ represents the number of categories in the classification task (e.g., $P = 10$ in the CIFAR10 image classification task). Following most prior studies on early-exit networks, we use the top-1 probability[23] as the confidence score of the intermediate inference results, which can be written as follows:

$$c_n = \max\big(\operatorname{softmax}(z_n)\big), \qquad (2)$$

where $\operatorname{softmax}(z_n) \triangleq \exp(z_n) / \sum_{p=1}^{P} \exp\big(z_n^{(p)}\big)$ and $z_n^{(p)}$ denotes the $p$th dimension in vector $z_n$. If the confidence score $c_n$ is no smaller than a pre-determined confidence threshold $\lambda_n \in (0,1)$, the intermediate inference result is deemed sufficiently confident and the inference process of $x$ is terminated at the $n$th early exit. Otherwise, the feature computed by the backbone layer right before the $n$th early exit is fed to the next backbone layer for further processing. We note that there is no need to set a confidence threshold for the last exit, as the inference process has to be terminated at the edge server if none of the $N-1$ early exits satisfies $c_n \geq \lambda_n$, $n = 1, 2, \cdots, N-1$.
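The confidence-based exit rule described above can be illustrated with a minimal sketch. It assumes that the logits of all exits are already available as plain Python lists (a real system would compute each exit lazily), and the function names `softmax`, `confidence`, and `early_exit_inference` are hypothetical names chosen for illustration.

```python
import math


def softmax(logits):
    """Numerically stable soft-max over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence(logits):
    """Top-1 probability, used as the confidence score c_n."""
    return max(softmax(logits))


def early_exit_inference(exit_logits, thresholds):
    """Return (exit_index, predicted_class), with exits numbered from 1.

    exit_logits: logit vectors z_1..z_N, one per exit (assumed precomputed).
    thresholds:  confidence thresholds lambda_1..lambda_{N-1}.
    """
    # zip stops after the N-1 early exits because thresholds has N-1 entries.
    for n, (z, lam) in enumerate(zip(exit_logits, thresholds), start=1):
        if confidence(z) >= lam:
            return n, z.index(max(z))
    # No early exit was confident enough: the last exit decides.
    z_last = exit_logits[-1]
    return len(exit_logits), z_last.index(max(z_last))
```

For instance, with three exits and both thresholds set to 0.9, a sample whose second exit yields logits `[5.0, 0.0, 0.0]` (top-1 probability of about 0.987) is terminated at the second exit.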

B.Design Problem

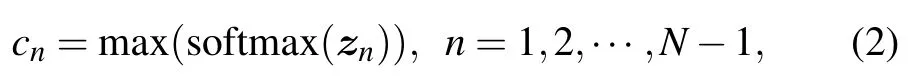

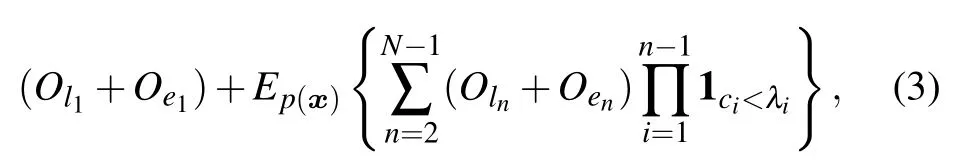

In the considered device-edge co-inference system, the average on-device computation, which is measured by the number of FLOPs (the FLOPs of different neural network models can be conveniently obtained in the PyTorch environment by using the “FLOPs counter for convolutional networks in PyTorch framework” package), can be expressed as follows:

where $p(x)$ denotes the input data distribution and $\mathbb{1}_E$ is an indicator function that equals 1 if event $E$ happens and 0 otherwise. Besides, the expression involves two per-exit costs: the number of FLOPs required to compute the $n$th intermediate classifier, and the number of FLOPs required to compute the backbone layers between the $(n-1)$th and $n$th early exits ($n = 0$ represents the input layer). For the example in Fig.2, the backbone cost for $n = 2$ is the number of FLOPs required to compute the “Conv 2” and “Conv 3” layers, and the classifier cost for $n = 2$ is the number of FLOPs required to compute the two convolutional layers and one fully-connected layer in Exit 2.
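As a rough illustration of how the average on-device computation accumulates, the following sketch replaces the expectation over $p(x)$ by an empirical mean over a list of samples. The argument names (`backbone_flops`, `classifier_flops`) are assumptions introduced for this example and are not the notation of the original expression.

```python
def on_device_flops(confidences, thresholds, backbone_flops, classifier_flops):
    """FLOPs spent on the device for one sample.

    confidences:      c_1..c_{N-1}, the confidence score at each early exit.
    thresholds:       lambda_1..lambda_{N-1}.
    backbone_flops:   cost of the backbone layers leading up to each early exit.
    classifier_flops: cost of each intermediate classifier.
    """
    total = 0.0
    for c, lam, bb, ic in zip(confidences, thresholds,
                              backbone_flops, classifier_flops):
        total += bb + ic      # the sample reached this exit, so both parts run
        if c >= lam:          # confident enough: inference stops here
            break
    return total


def average_on_device_flops(samples, thresholds, backbone_flops, classifier_flops):
    """Empirical mean of the per-sample on-device FLOPs."""
    costs = [on_device_flops(c, thresholds, backbone_flops, classifier_flops)
             for c in samples]
    return sum(costs) / len(costs)
```

A sample that is only terminated at a deeper exit pays for every preceding intermediate classifier, which is the waste that the EP aims to remove.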

It is clear from (3) that those samples being terminated by the $n$th exit need to be processed by all the $n-1$ preceding intermediate classifiers. Therefore, many of the on-device computations spent on the first few early exits are wasted if a large number of input samples are terminated by the deeper exits. In the next section, we develop an early exit prediction mechanism to reduce the on-device computation overhead by wisely skipping the processing of some early exits.

III.THE PROPOSED EARLY EXIT PREDICTION MECHANISM

In this section,we develop an early exit prediction mechanism,named EP,to save the on-device computation for resource-constrained edge inference.To justify our motivation,we consider an instance of the early-exit network shown in Fig.2 with both of the two confidence thresholds set as 0.9,corresponding to the point in Fig.3 with the highest accuracy.For the CIFAR10 test set with 10 000 images,66.62%,19.81%,and 13.57%of the input samples are output from the three exits,respectively,leading to 79.64 MFLOPs(including 42.44 MFLOPs on-device).Ideally,if there is an oracle to direct the samples output from the appropriate exit,the required number of FLOPs to process this test set can be reduced to 72.13 MFLOPs(including 34.93 MFLOPs on-device).

The EP shall determine whether some early exits can be skipped without being computed for the particular input samples.Our idea is actually similar to those in Refs.[27,28],which aim at selecting the inference network for each input sample via a lightweight neural network,so that some“easy”samples can be processed by the low-complexity models.However,the methods developed in Refs.[27,28] are not readily applicable to early-exit networks.On one hand,the lightweight neural network developed in Ref.[27]directly learns the top-1 probabilities for inference model selection.But as this is a complex multivariate regression task,large discrepancies between the predicted and ground-truth values may be incurred.Also,Ref.[28] proposed to learn the sample complexity for selection between two inference models with mild and aggressive pruning,respectively,which,however,cannot be used for early-exit networks with three or even more early exits.

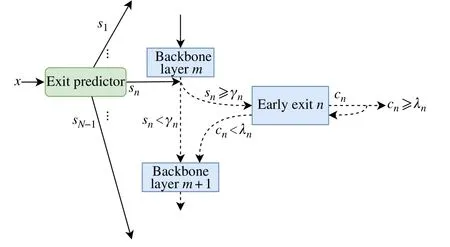

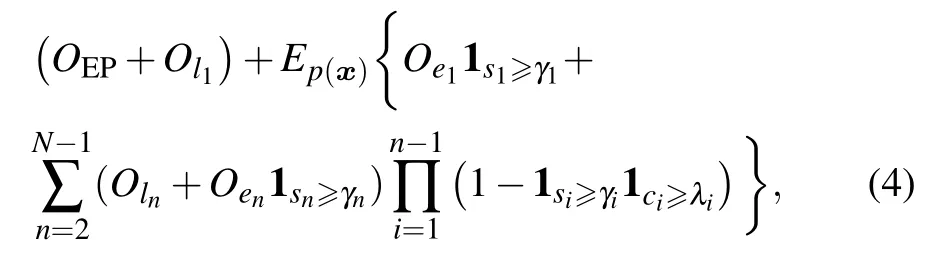

A.Early Exit Prediction Mechanism

Since the inference results are obtained from the last exit if all the intermediate classifiers fail to derive sufficiently confident results, the proposed EP derives $N-1$ prediction scores $s_n$, $n = 1, \cdots, N-1$, as indicators of whether an early exit should be computed or not. Fig.5 shows the working mechanism of the EP, which is also deployed on the device. Specifically, before being fed to the early-exit network, each input sample $x$ is first processed by the EP to generate the prediction scores $\{s_n\}$ for all the $N-1$ early exits. During the inference process, if $s_n$ is no smaller than a predefined prediction threshold $\gamma_n$, the intermediate feature computed by the backbone layers is processed by the intermediate classifier in the $n$th early exit. Otherwise, the $n$th early exit is skipped and the intermediate feature is passed to the next backbone layer. Nonetheless, for a sample computed by the $n$th early exit, whether the inference process is actually terminated is still governed by the relationship between the confidence score $c_n$ and the confidence threshold $\lambda_n$, as elaborated in section II.A. The inference procedures of early-exit networks aided by the EP are summarized in Algorithm 1.
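The control flow described above can be sketched as follows. This is an illustrative approximation rather than a reproduction of Algorithm 1: it assumes that the prediction scores and the confidence scores are given as lists, and `ep_guided_inference` is a hypothetical name.

```python
def ep_guided_inference(pred_scores, pred_thresholds, conf_scores, conf_thresholds):
    """Return (exit_index, visited) for one sample, with exits numbered from 1.

    pred_scores:     s_1..s_{N-1} produced by the exit predictor.
    pred_thresholds: gamma_1..gamma_{N-1}.
    conf_scores:     c_1..c_{N-1}; an entry is only consulted if that exit
                     is actually computed (assumed precomputed here).
    conf_thresholds: lambda_1..lambda_{N-1}.
    visited lists the early exits whose intermediate classifier was computed.
    """
    visited = []
    exits = zip(pred_scores, pred_thresholds, conf_scores, conf_thresholds)
    for n, (s, gamma, c, lam) in enumerate(exits, start=1):
        if s < gamma:
            continue              # EP says "skip": go to the next backbone layer
        visited.append(n)         # intermediate classifier n is computed
        if c >= lam:
            return n, visited     # confident result: terminate on the device
    # No early exit terminated the sample: the last (Nth) exit decides.
    return len(pred_scores) + 1, visited
```

Note that a high prediction score only triggers the computation of an early exit; termination still requires the confidence score to reach its threshold.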

Fig.5 Working mechanism of the EP

As a result,the average on-device computation with the proposed early exit prediction mechanism can be written in the following expression:

where $O_{\mathrm{EP}}$ denotes the number of FLOPs required to compute the EP. Since the processing of the EP is compulsory for every sample, the EP should have a low computational complexity in order not to compromise its advantages of skipping the early exits.
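To make the accounting concrete, the sketch below computes the on-device FLOPs of a single sample when the EP is used. It assumes that the backbone layers before each early exit are always executed until the sample terminates, that an intermediate classifier is only paid for when it is not skipped, and that the EP cost is added for every sample; all argument names are hypothetical.

```python
def on_device_flops_with_ep(pred_scores, pred_thresholds,
                            conf_scores, conf_thresholds,
                            backbone_flops, classifier_flops, ep_flops):
    """On-device FLOPs for one sample under EP-guided inference."""
    total = ep_flops                      # the EP runs for every sample
    exits = zip(pred_scores, pred_thresholds, conf_scores, conf_thresholds,
                backbone_flops, classifier_flops)
    for s, gamma, c, lam, bb, ic in exits:
        total += bb                       # backbone layers up to this exit
        if s < gamma:
            continue                      # exit skipped: classifier cost saved
        total += ic                       # intermediate classifier computed
        if c >= lam:
            break                         # inference terminated on the device
    return total
```

With backbone costs `[10, 20]`, classifier costs `[3, 2]`, and an EP cost of 1, a “hard” sample that skips both early exits costs 31 instead of 35, so the EP pays off only when its own cost is smaller than the classifier cost it saves.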

B.Network Architecture

To implement the EP with a lightweight neural network, we formulate the task of generating the $N-1$ prediction scores $\{s_n\}$ as $N-1$ binary classification tasks, where $s_n$ can be interpreted as the likelihood that the confidence score $c_n$ is no smaller than the pre-defined confidence threshold $\lambda_n$.

Fig.6 shows the proposed network architecture of the EP,and its computational cost and storage requirement mainly come from the convolutional layers and FC layers.In order to reduce the size of convolutional layers,we replace the traditional convolution with the depth-wise separable convolution that consists of sequential depth-wise convolution and point-wise convolution[29-31],except for the first convolutional layer.In our experimental results as shown in section V,the strides of the first convolutional layer and the depth-wise convolutional layers are chosen as 2,which reduce the height and width of input features by half.In this way,the computational complexity of the EP can be significantly reduced.To boost the performance of the EP,we concatenate the input and output feature channels[32]of the depth-wise convolutional layers before the squeeze-and-excitation operation[33],and max pooling is adopted as the downsample operation for channel concatenation.The number of channels output from the first convolutional layer is 16 and those output from the three point-wise convolutional layers are 32,64,and 128,respectively.The last FC layer is cascaded with a Sigmoid function to convert each output dimension to a prediction score from 0 to 1.
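The saving from depth-wise separable convolution can be estimated with a short calculation. The sketch below counts multiply-accumulate operations (MACs) for a single layer, ignoring biases, channel concatenation, and the squeeze-and-excitation operation; the 3×3 kernel size used in the example is an assumption, since the kernel size is not stated in this section.

```python
def standard_conv_macs(k, c_in, c_out, h_out, w_out):
    """MACs of a standard k x k convolution."""
    return k * k * c_in * c_out * h_out * w_out


def depthwise_separable_macs(k, c_in, c_out, h_out, w_out):
    """MACs of a k x k depth-wise convolution followed by a 1 x 1 point-wise one."""
    depthwise = k * k * c_in * h_out * w_out   # one k x k filter per input channel
    pointwise = c_in * c_out * h_out * w_out   # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Example: 16 -> 32 channels on an 8 x 8 output map with an assumed 3 x 3 kernel.
standard = standard_conv_macs(3, 16, 32, 8, 8)
separable = depthwise_separable_macs(3, 16, 32, 8, 8)
ratio = separable / standard   # equals 1/c_out + 1/k**2 for this layer
```

In this example the separable layer needs 41 984 MACs against 294 912 for the standard layer, i.e., roughly 14% of the cost.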

Fig.6 Network architecture of the EP(“Cat.”stands for channel concatenation and“SE”refers to the squeeze-and-excitation operation.“Dw”and“Pw”are the abbreviations of“depth-wise”and“point-wise”respectively.The blue blocks are layers with stride 1 and the yellow blocks are layers with stride 2.The green blocks are the fully-connected layers)

C.Training Procedures

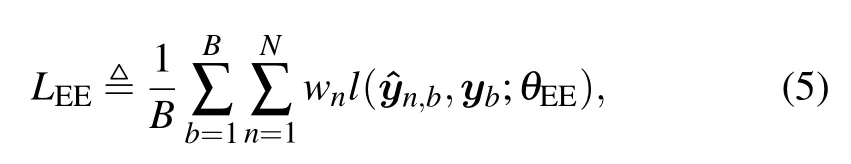

The EP imitates the behavior of a pretrained early-exit network. The confidence thresholds for the $N-1$ early exits also need to be determined before training the EP. In particular, the early-exit network is trained by optimizing the following loss function on a training dataset:

where $\theta_{\mathrm{EE}}$ denotes the network parameters, $w_n$ is the weight of the $n$th exit, the predicted class distribution of the $n$th exit is given by $\operatorname{softmax}(z_{n,b})$, and $z_{n,b}$ is the output of the $n$th exit for the $b$th training sample before the soft-max layer as given by (1). Besides, the labels $\{y_b\}$ are encoded in one-hot format and $l$ denotes the cross entropy function.
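A weighted multi-exit loss of this kind can be sketched in a few lines. The example below assumes that the loss is averaged over the samples of a batch (the normalization is not visible in this section) and represents each one-hot label by its class index; the function names are hypothetical.

```python
import math


def cross_entropy(logits, label):
    """Cross entropy between softmax(logits) and a one-hot label (class index)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[label]


def early_exit_loss(batch_logits, labels, weights):
    """Weighted sum of per-exit cross entropies, averaged over the batch.

    batch_logits[b][n] is the logit vector z_{n,b} of exit n for sample b.
    weights[n] is the weight w_n of exit n.
    """
    total = 0.0
    for per_exit_logits, y in zip(batch_logits, labels):
        for w, z in zip(weights, per_exit_logits):
            total += w * cross_entropy(z, y)
    return total / len(labels)
```

With exit weights such as 0.2, 0.3, and 0.5, the deepest exit contributes the most to the gradient, while the early exits are still trained jointly with the backbone.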

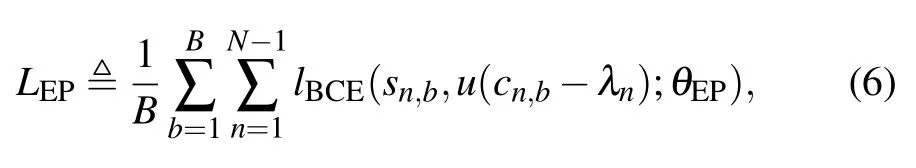

Once the early-exit network is trained,we can collect the data to train the EP.Therefore,the training objective of the EP is designed as follows:

where the loss applied to each prediction score is the binary cross entropy loss and $\theta_{\mathrm{EP}}$ represents the network parameters of the EP. The value of $s_{n,b}$ is constrained within 0 to 1 by a Sigmoid function, i.e., the last layer of the EP, as follows:

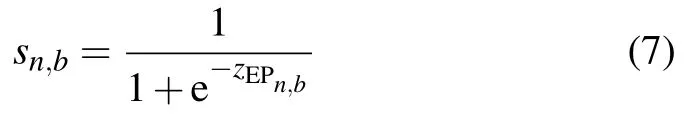

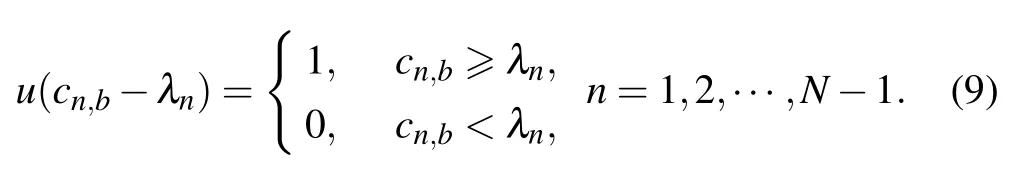

as the output of the EP for the training sample $x_b$ before the Sigmoid function. We note that in (6), $u(\cdot)$ is the unit step function and thus $u(c_{n,b}-\lambda_n)$, given below, is the target binary label of sample $x_b$:

When training the EP, each sample is first processed by the early-exit network and the confidence scores $\{c_{n,b}\}$ corresponding to the $N-1$ early exits are generated. Then, the pre-defined confidence thresholds are applied to obtain binary labels as shown in (9). Hence, the training objective can be optimized via the back-propagation algorithm[34].
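The label generation and the per-sample training loss of the EP can be sketched as follows. The code assumes that the unit step function returns 1 at zero (consistent with terminating when the confidence score is no smaller than the threshold) and that the per-exit losses are simply summed; `ep_targets` and `ep_loss` are hypothetical names.

```python
import math


def unit_step(v):
    """u(v) = 1 if v >= 0, else 0 (the value at zero is an assumption)."""
    return 1.0 if v >= 0 else 0.0


def ep_targets(conf_scores, conf_thresholds):
    """Binary labels u(c_n - lambda_n) for the N-1 early exits of one sample."""
    return [unit_step(c - lam) for c, lam in zip(conf_scores, conf_thresholds)]


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def binary_cross_entropy(s, t, eps=1e-12):
    """BCE between a prediction score s in (0, 1) and a binary target t."""
    s = min(max(s, eps), 1.0 - eps)      # clamp to avoid log(0)
    return -(t * math.log(s) + (1.0 - t) * math.log(1.0 - s))


def ep_loss(ep_outputs, conf_scores, conf_thresholds):
    """Sum of BCE losses over the N-1 exits for one training sample.

    ep_outputs are the EP outputs before the Sigmoid function.
    """
    targets = ep_targets(conf_scores, conf_thresholds)
    return sum(binary_cross_entropy(sigmoid(a), t)
               for a, t in zip(ep_outputs, targets))
```

Because the targets depend on the confidence thresholds, an EP trained this way is tied to the set of thresholds used when collecting its training data.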

IV.LATENCY-AWARE EARLY EXIT PREDICTION

Although the EP developed in section III is able to reduce the amount of on-device computations,it fails to optimize the critical end-to-end inference latency,which consists of not only the on-device computation latency,but also the transmission latency.This section extends the EP to scenarios with varying communication bandwidth,striving for the best tradeoff between the accuracy and latency performance.

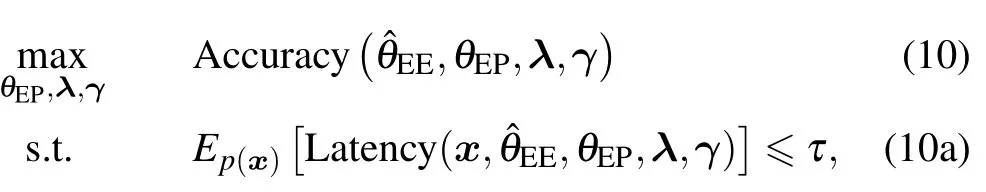

To reduce the transmission latency,we compress the intermediate feature prior to transmission[9].Also,when the communication bandwidth is low,it is desirable to keep more samples with their inference processes being terminated by the early exits on the device,which can be achieved by tuning the confidence thresholds[23,35].Therefore,the device-edge co-inference system seeks to maximize the inference accuracy subject to an average inference latency requirementτ,which can be formulated as follows:

where $O_{\mathrm{device}}(\cdot)$ denotes the on-device computation and $D(\cdot)$ is the compressed feature size. Specifically, we follow the idea of Bottlenet++[19] by adopting an autoencoder to compress the intermediate feature before transmission, which is decompressed at the edge server before being processed by the server-based network. We also truncate the feature bitwidth to attain further communication overhead reduction. The training procedures of the early-exit network with the autoencoder and quantizer are summarized below.

·We first pretrain the early-exit network $\theta_{\mathrm{EE}}$ in an end-to-end manner as discussed in section III.C.

·An autoencoder is inserted at the selected model partition point,and it is trained with the server-based network while freezing the on-device network.In this way,the autoencoder causes no effect on the early exits.

·We fine-tune the server-based network after adding the quantization module to increase the accuracy of the last exit. Since gradients cannot be back-propagated through the quantization module, the parameters of the on-device network and the feature encoder are frozen in this step.
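The latency requirement in (10) combines on-device computation and feature transmission. The sketch below shows one plausible way to evaluate it, assuming that the computation latency equals the on-device FLOPs divided by the device throughput, that the transmission latency equals the compressed feature size divided by the bandwidth, and that only samples not terminated on the device are transmitted. These modeling choices and all names are assumptions made for illustration.

```python
def inference_latency(on_device_flops, offloaded, feature_bits,
                      device_flops_per_s, bandwidth_bps):
    """End-to-end latency (seconds) of one sample under the assumed model."""
    compute = on_device_flops / device_flops_per_s
    # Only samples forwarded to the edge server pay the transmission latency.
    transmit = feature_bits / bandwidth_bps if offloaded else 0.0
    return compute + transmit


def average_latency(samples, feature_bits, device_flops_per_s, bandwidth_bps):
    """samples: list of (on_device_flops, offloaded) pairs."""
    latencies = [inference_latency(flops, offloaded, feature_bits,
                                   device_flops_per_s, bandwidth_bps)
                 for flops, offloaded in samples]
    return sum(latencies) / len(latencies)
```

Under this model, lowering the confidence thresholds keeps more samples on the device and removes their transmission term, which is why the thresholds are adapted to the bandwidth.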

When the confidence thresholds are adjusted according to the communication bandwidth, the EP needs to be adapted accordingly as it is trained with a given set of $\{\lambda_n\}$. A straightforward solution is to train an EP for each possible communication bandwidth. However, deploying a large number of EPs results in a significant memory footprint, which is not desirable for resource-constrained edge AI. As a result, we propose to retain just one EP, which imitates the early-exit network with the highest accuracy, and to adapt the prediction thresholds and confidence thresholds to the communication bandwidth. Specifically, we perform a multi-dimensional grid search on the confidence thresholds to obtain an early-exit network with the highest accuracy on the test set, and train the EP using the procedures in section III.C on the training set. To determine the threshold values under different bandwidth conditions, a few simple regression models (each made up of two FC layers) are trained, which is facilitated by recording the optimal combinations of the threshold values, i.e., $\lambda$ and $\gamma$, for (10) with both $\theta_{\mathrm{EE}}$ and $\theta_{\mathrm{EP}}$ fixed on multiple small sets of discrete communication bandwidth values of the test set. The number of regression models to be trained and the sets of bandwidth values used for training the regression models are hyper-parameters that need to be tuned in practice.
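Recording the optimal threshold combination for one bandwidth value can be sketched as an exhaustive search over candidate thresholds. The sketch assumes a hypothetical callback `evaluate(lam, gamma)` that returns the accuracy and average latency measured with the early-exit network and the EP fixed; how these quantities are measured is outside the scope of the example.

```python
from itertools import product


def best_thresholds(candidates_lambda, candidates_gamma, evaluate, tau):
    """Return (accuracy, lam, gamma) with the highest accuracy whose latency
    does not exceed tau, or None if no combination is feasible.

    evaluate(lam, gamma) -> (accuracy, average_latency) is a hypothetical callback.
    """
    best = None
    for lam, gamma in product(candidates_lambda, candidates_gamma):
        accuracy, latency = evaluate(lam, gamma)
        if latency > tau:
            continue                      # violates the latency requirement
        if best is None or accuracy > best[0]:
            best = (accuracy, lam, gamma)
    return best
```

Repeating this search for several bandwidth values yields (bandwidth, thresholds) pairs that can serve as training data for the regression models.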

V.EXPERIMENTAL RESULTS

In this section,we evaluate the performance of the proposed early exit prediction mechanism through numerical experiments.

A.Experimental Setup

We conduct the experiments with three backbone DNN architectures, including AlexNet, VGG16-BN[15], and ResNet44[37], on the CIFAR10 and CIFAR100 datasets[38]. Considering that the kernel size and stride of the original AlexNet[36] may be too large for 32×32 images, we use a modified AlexNet architecture with smaller kernel size and stride. Each of the two datasets contains 50 000 training samples and 10 000 test samples, which are 32×32 color images from 10 and 100 categories, respectively. All our experiments are implemented with the PyTorch library[39]. Tab.1 shows the model accuracy and the computation complexity of the three backbone networks on the two datasets.

Tab.1 Backbone model accuracy and computation complexity

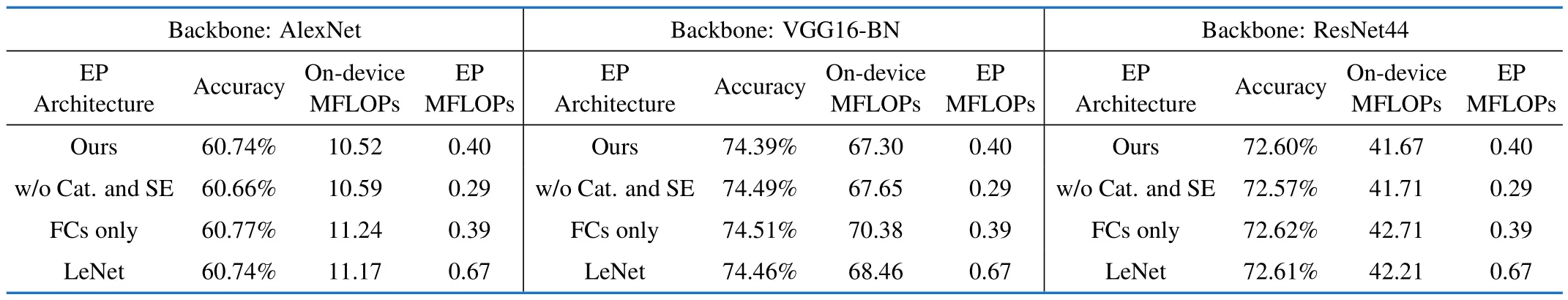

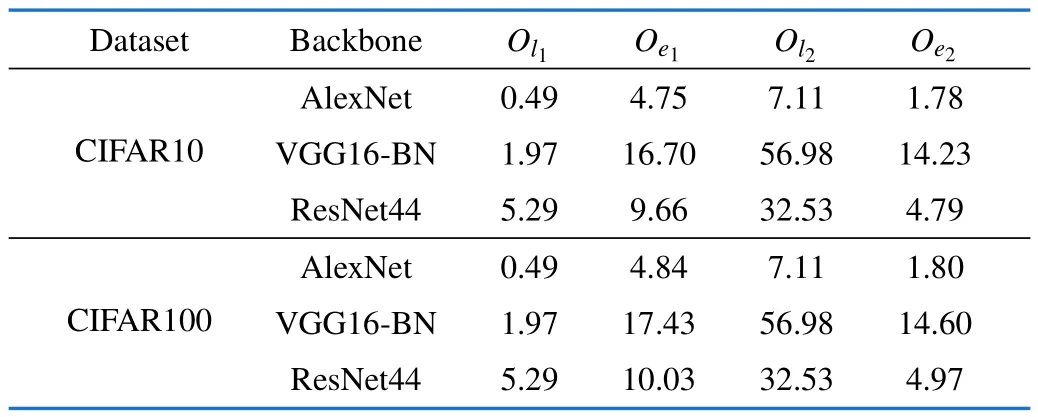

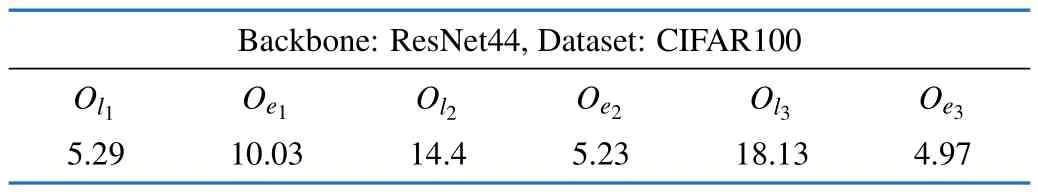

Two early exits are inserted into the on-device network, and the weights in the training objective (5) for the three exits are set as 0.2, 0.3, and 0.5, respectively. We adopt intermediate classifiers with relatively low computational complexity compared to the backbone networks[16]. Specifically, we assign more convolutional layers to the intermediate classifiers for a deeper backbone network, and the second early exit is lighter in computation than the first one. Since AlexNet is not deep, we insert two intermediate classifiers with 2 and 1 convolutional layer(s), respectively, each followed by a max-pooling layer and an FC layer, which are placed after the first and second convolutional layers in the backbone network, respectively. Besides, for VGG16-BN, the first intermediate classifier consists of 3 convolutional layers and 1 FC layer, and it is inserted after the first convolutional layer in the backbone. The second intermediate classifier consists of 2 convolutional layers and 1 FC layer, and it is inserted after the third convolutional layer in the backbone. In addition, as ResNet44 applies the shortcut connection structure, the early exits should not be inserted inside a residual block (RB). Hence, we insert the first intermediate classifier with 2 RBs, 1 max-pooling layer, and 1 FC layer after the first RB in the backbone, and the second early exit, which consists of 1 RB, 1 max-pooling layer, and 1 FC layer, after the eighth RB in the backbone. Tab.3 in Appendix A and Tab.5 in Appendix B respectively show the output feature size of each layer/RB in the early exits and the amount of computations required to process each part of the on-device network. We use stochastic gradient descent with a batch size of 128 and a weight decay of 0.000 5 to train the early-exit networks. Cosine annealing is applied with an initial learning rate of 0.1 and an end learning rate of 0.000 1 at the 200th epoch. The total number of training epochs is 220. The hyper-parameter setting for training the EP is similar to that of the early-exit networks except with a weight decay of 0.000 2.
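The learning rate schedule described above can be sketched with the standard cosine annealing formula. The sketch assumes that the learning rate is held at its end value for the remaining epochs after the 200th one, which is not stated explicitly; the function name is hypothetical.

```python
import math


def cosine_annealed_lr(epoch, lr_init=0.1, lr_end=0.0001, anneal_epochs=200):
    """Learning rate at a given epoch under cosine annealing.

    Decays from lr_init at epoch 0 to lr_end at anneal_epochs, then stays
    at lr_end (assumed behavior for epochs 200 to 220).
    """
    if epoch >= anneal_epochs:
        return lr_end
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / anneal_epochs))
    return lr_end + (lr_init - lr_end) * cos_term


# Learning rate for each of the 220 training epochs.
schedule = [cosine_annealed_lr(e) for e in range(220)]
```

Halfway through the annealing phase (epoch 100), this schedule gives a learning rate of about 0.05.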

Tab.2 Performance of EPs with different network architectures on the CIFAR100 dataset

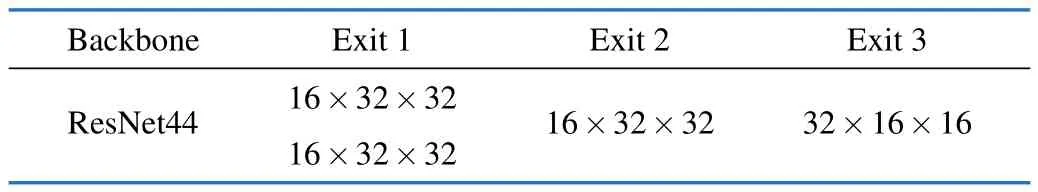

Tab.3 Output feature size(channel×height×width) of each layer/residual block in the early exits(for early-exit networks with two intermediate classifiers)

Tab.4 Output feature size(channel×height×width)of each residual block in the early exits(for the early-exit network with three intermediate classifiers in the ablation study)

Tab.5 Amount of computations(in MFLOPs)required for each part of the on-device network(for early-exit networks with two intermediate classifiers)

B.On-device Computation Savings

We evaluate the performance of the EP in terms of classification accuracy and average on-device computation for each inference data sample. For comparison, the original early-exit network, as well as a filter pruning[40] method which removes the filters according to their $l_2$-norm values, are adopted as baselines. Since the edge server is well-resourced, filter pruning only applies to the on-device network (except the last FC layer to avoid drastic accuracy degradation).
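The filter pruning baseline ranks the filters of a layer by their $l_2$-norm and removes those with the smallest values. A minimal sketch of the selection step is given below, assuming that each filter is flattened into a list of weights and that the number of pruned filters is the pruning ratio times the filter count, rounded down; the function names are hypothetical.

```python
import math


def filter_l2_norms(filters):
    """l2-norm of each filter; every filter is a flat list of weights."""
    return [math.sqrt(sum(w * w for w in f)) for f in filters]


def filters_to_keep(filters, prune_ratio):
    """Indices of the filters that survive pruning, in their original order."""
    norms = filter_l2_norms(filters)
    n_prune = int(len(filters) * prune_ratio)
    # Filters sorted by increasing norm; the first n_prune are removed.
    order = sorted(range(len(filters)), key=lambda i: norms[i])
    pruned = set(order[:n_prune])
    return [i for i in range(len(filters)) if i not in pruned]
```

After selection, the surviving filters form a thinner layer, and the network is typically fine-tuned to recover accuracy.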

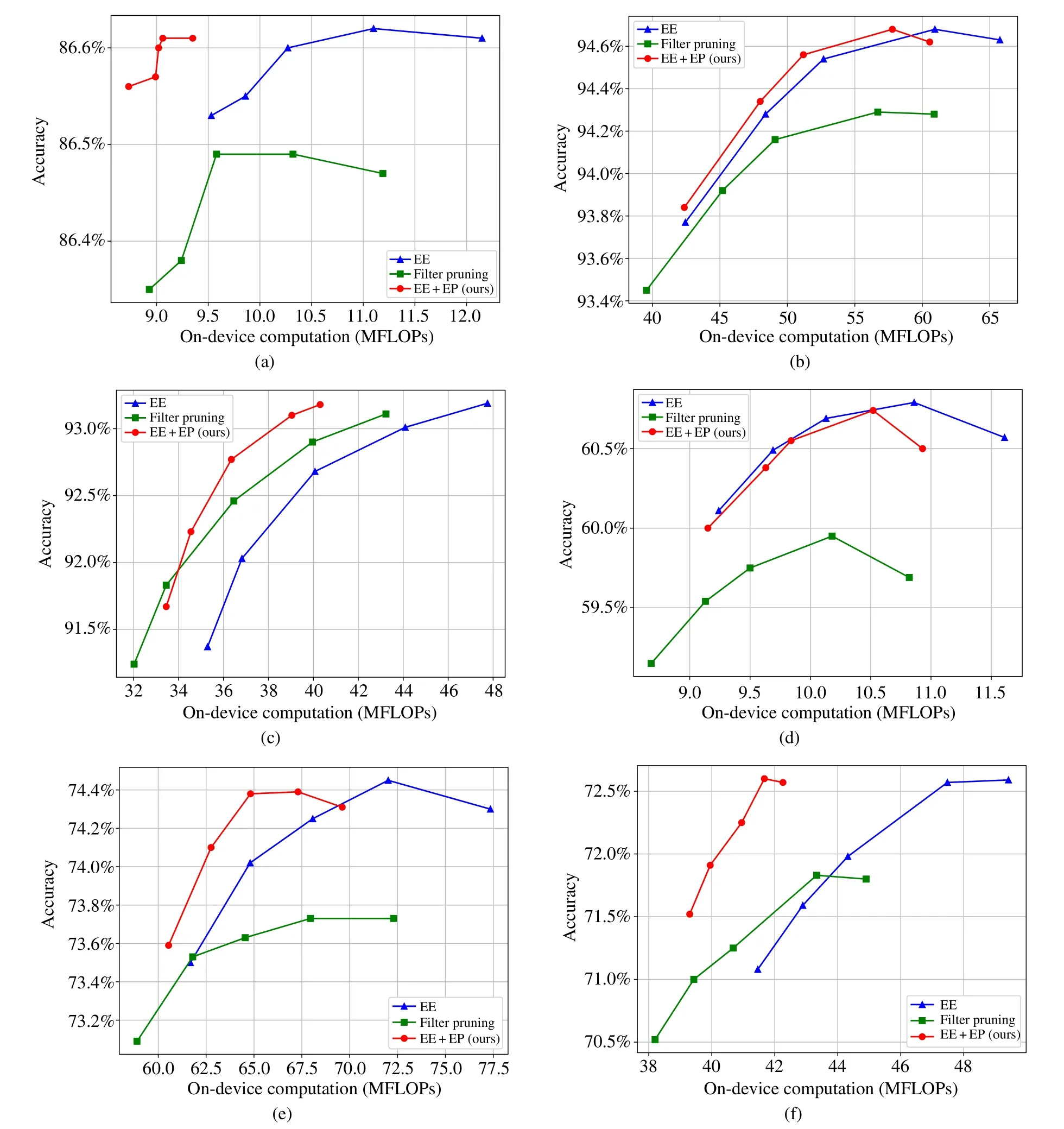

In the experiments,we adjust the confidence thresholds of the early exits to achieve a different tradeoff between accuracy and on-device computation overhead,and the results are shown in Fig.7.In general,compared with the original early-exit networks,the proposed EP can greatly reduce the on-device computation without much impairing the classification accuracy.Also,we observe that although the filter pruning method is able to save some on-device computations,it suffers from notable accuracy degradation.In other words,the proposed EP is more suitable for scenarios with stringent accuracy requirements.As the values of the confidence thresholds become lower,the inference processes of more samples are terminated by the first early exit,leading to smaller on-device computation and reduced inference accuracy.Thus,fewer samples are able to skip the first early exit so that the on-device computation saving brought by the EP becomes less significant.There is an interesting observation from the results of the early-exit networks with the AlexNet and VGG16-BN backbones that the accuracy drops even with high on-device computation overhead,i.e.,large values of the confidence thresholds,which can be explained by the overthinking of early-exit networks[41].Fig.8 further shows the performance of the filter pruning method with different pruning ratios.We find that the proposed early exit prediction mechanism still substantially outperforms the filter pruning method.

Fig.7 Classification accuracy versus on-device computation overhead. The two confidence thresholds are assumed to be identical and increase with the on-device computation. The filter pruning method removes 10% of the filters with the lowest l2-norm values. The prediction thresholds γ are chosen as the ones with the lowest on-device computation while fewer than 2% additional samples are terminated at the last exit, i.e., cannot be terminated by the two early exits: (a) AlexNet backbone on CIFAR10 with λ1 = λ2 ∈ {0.90, 0.92, 0.94, 0.97, 0.99}; (b) VGG16-BN backbone on CIFAR10 with λ1 = λ2 ∈ {0.90, 0.95, 0.97, 0.99, 0.995}; (c) ResNet44 backbone on CIFAR10 with λ1 = λ2 ∈ {0.85, 0.88, 0.93, 0.97, 0.99}; (d) AlexNet backbone on CIFAR100 with λ1 = λ2 ∈ {0.50, 0.55, 0.60, 0.70, 0.80}; (e) VGG16-BN backbone on CIFAR100 with λ1 = λ2 ∈ {0.75, 0.80, 0.85, 0.90, 0.95}; (f) ResNet44 backbone on CIFAR100 with λ1 = λ2 ∈ {0.70, 0.75, 0.80, 0.90, 0.95}

Fig.8 Classification accuracy versus on-device computation overhead for the early-exit network based on the ResNet44 backbone and the CIFAR100 dataset. The numbers in the figure indicate the pruning ratios of the filter pruning method. The two confidence thresholds of the early-exit network are both set as 0.95

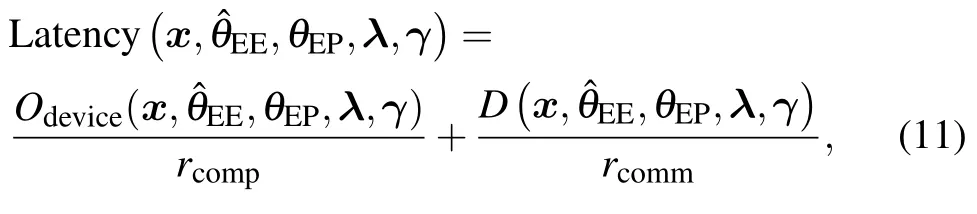

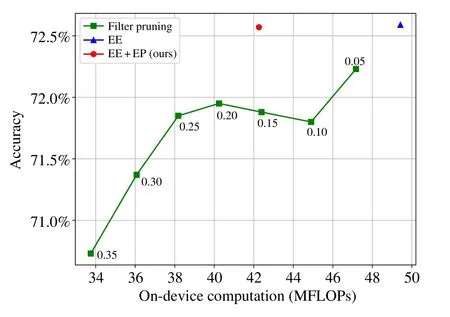

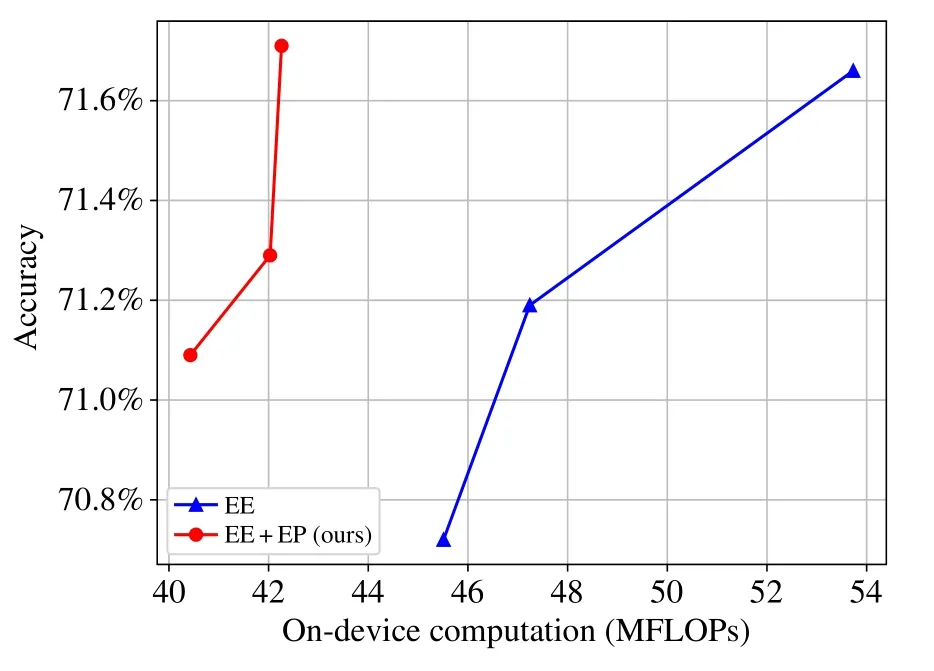

Ablation Studies: We first examine the performance of different EP architectures on the CIFAR100 dataset, as shown in Tab.2, including the proposed design in Fig.6 (“ours”), the proposed design in Fig.6 without the channel concatenation and the squeeze-and-excitation operations (“w/o Cat. and SE”), a network architecture consisting of only two FC layers (“FCs only”), and the LeNet[42]. From this set of results, we find that all the considered network architectures achieve similar classification accuracy, while the proposed network architecture for the EP always results in the lowest on-device computation overhead. We further test the EP for early-exit networks with three early exits. We choose ResNet44 as the backbone, which is deeper than AlexNet and VGG16-BN so that more early exits can be inserted. Tab.4 in Appendix A and Tab.6 in Appendix B respectively show the output feature size of each residual block in the early exits and the amount of computation required to process each part of the on-device network. The classification accuracy versus the on-device computation overhead is shown in Fig.9, from which we can still observe significant improvements achieved by the EP compared with the original early-exit networks.

Tab.6 Amount of computations(in MFLOPs)required for each part of the on-device network(for the early-exit network with three intermediate classifiers in the ablation study)

Fig.9 Classification accuracy versus on-device computation overhead on the CIFAR100 dataset. The early-exit network is constructed by inserting three early exits into the ResNet44 backbone, with the weights in (5) for the four exits set to 0.2, 0.2, 0.2, and 0.4. In this figure, the confidence thresholds are set as λ1 = λ2 = λ3 ∈ {0.75, 0.8, 0.95}

C.Latency and Accuracy under Different Communication Bandwidths

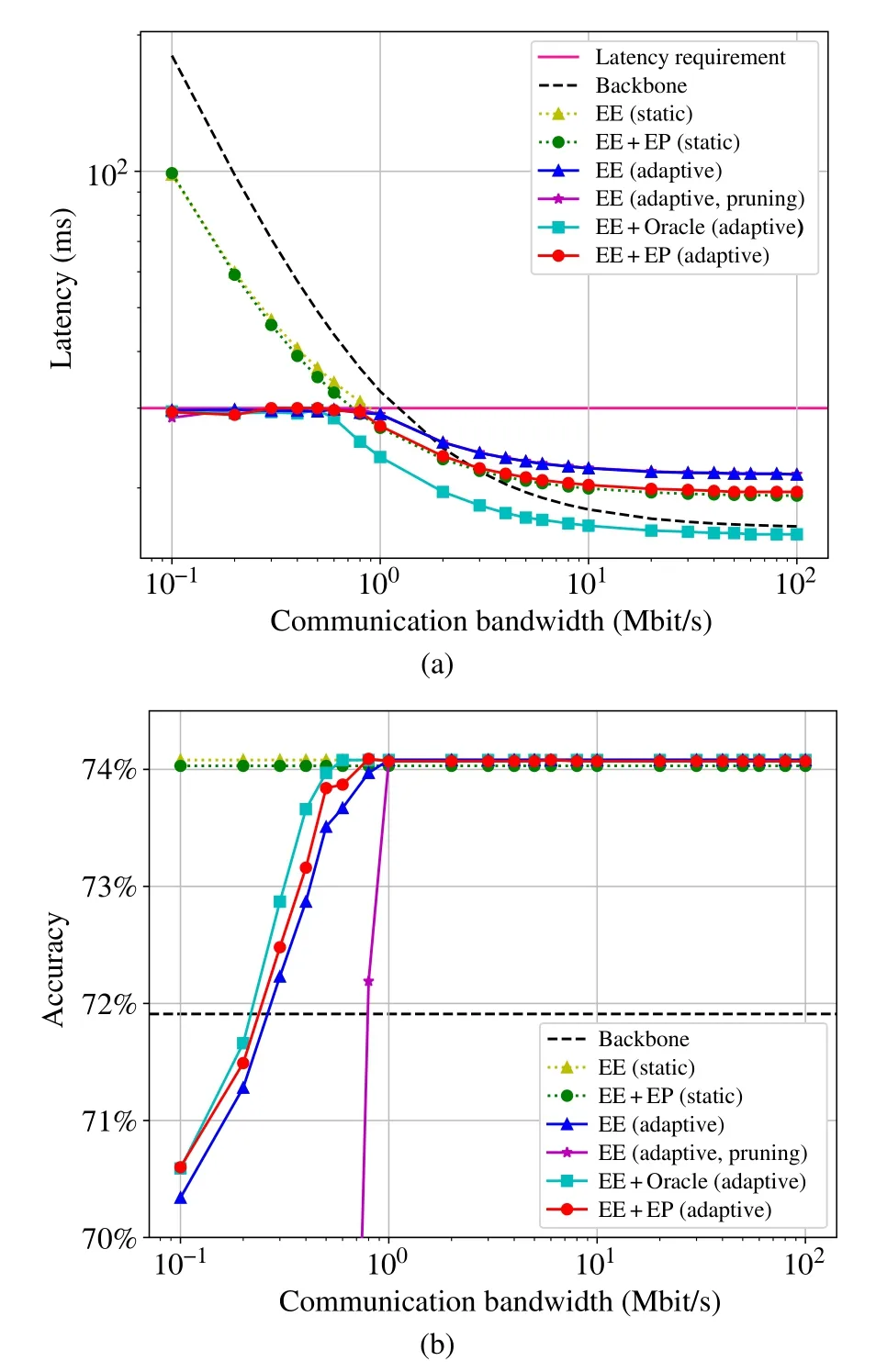

The experiments in this section are based on the VGG16-BN backbone and the CIFAR100 dataset. The architectures of the feature encoder and decoder are adopted from Ref.[19], with the Sigmoid function in the encoder replaced by a ReLU function. The encoder reduces the height and width of each channel by half and the number of channels to 1/4. Besides, the quantization operation reduces the bit-width from 32 to 8. Therefore, the feature compression ratio is 2×2×4×4 = 64, which results in less than 0.5% accuracy degradation. We choose the Raspberry Pi 3 as the edge device, which offers a computation speed of 3.62 GFLOPS. The latency requirement is set to 30 ms. We train three regression models, one for each of the bandwidth intervals 0.1~1 Mbit/s, 1~10 Mbit/s, and 10~100 Mbit/s. The sets of communication bandwidth values used to train the three regression models are {0.1, 0.3, 0.5, 0.7, 1}, {1, 3, 5, 7, 10}, and {10, 30, 50, 70, 100} Mbit/s, respectively. We compare the proposed method (“EE+EP (adaptive)”) in section IV with the following baselines (feature compression is applied to all the methods for fair comparison).

·Backbone: This method uses the VGG16-BN backbone for device-edge co-inference.

·EE (static): This method refers to the original early-exit network, where the confidence thresholds of the two early exits are set as 0.95 and 0.85 to achieve the best accuracy.

·EE+EP (static): This method applies the EP proposed in section III to the early-exit network in the EE (static) method, where the prediction thresholds {γi} are chosen with the same criterion as in Fig.7.

·EE (adaptive): This method adapts the confidence thresholds of an early-exit network according to the communication bandwidth to meet the latency requirement.

·EE (adaptive, pruning): On top of the EE (static) method, this method further applies filter pruning to the convolutional layer in the feature encoder to reduce the number of channels to be transmitted. The pruning ratio is chosen such that the latency requirement is not violated.

·EE+Oracle (adaptive): This is an idealized method which assumes an oracle that perfectly guides each sample to the exit that can obtain a confident inference result, i.e., cn ≥ λn. In other words, it can be regarded as a performance upper bound of the proposed EE+EP (adaptive) method.
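The compression ratio of the setup above, and the reason low bandwidth makes the 30 ms requirement hard to meet, can be illustrated with a short calculation. This is a sketch under stated assumptions: the intermediate feature shape (512 channels of 4×4 values) is hypothetical and not taken from the paper, and only transmission latency is modeled, with on-device and server computation ignored.

```python
def compression_ratio(spatial_factor=2, channel_factor=4, bits_in=32, bits_out=8):
    """Height and width are each reduced by `spatial_factor`, channels by
    `channel_factor`, and the bit-width from `bits_in` to `bits_out`."""
    return spatial_factor * spatial_factor * channel_factor * (bits_in // bits_out)

def transmission_latency_ms(num_elements, bandwidth_mbps, ratio, bits_in=32):
    """Time in ms to send the compressed feature over the given bandwidth."""
    compressed_bits = num_elements * bits_in / ratio
    return compressed_bits / (bandwidth_mbps * 1e6) * 1e3

ratio = compression_ratio()

# Hypothetical intermediate feature shape (an assumption, not from the paper).
num_elements = 512 * 4 * 4
latency_budget_ms = 30.0  # latency requirement used in this section

for bw in (0.1, 1.0, 10.0):
    t = transmission_latency_ms(num_elements, bw, ratio)
    status = "within" if t <= latency_budget_ms else "exceeds"
    print(f"{bw:>5} Mbit/s: {t:.3f} ms ({status} the 30 ms budget)")
```

Under these assumed numbers, transmission alone already exceeds the budget at 0.1 Mbit/s, which is why the adaptive methods must let more samples terminate on the device in the low-bandwidth regime.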

The latency and accuracy performance under different communication bandwidths is shown in Fig.10. It can be observed from the figure that, when the bandwidth is low, the latency requirement cannot be satisfied by the three static methods, although they are able to achieve the best classification accuracy. Compared with the early-exit network, the end-to-end inference latency of the VGG16-BN backbone is significantly higher when the communication bandwidth is low, because all the samples need to be processed by the server-based network. When the communication bandwidth is high, the VGG16-BN backbone achieves lower latency than the early-exit network, as the computation latency dominates in this regime. This result demonstrates the benefits of early-exit networks for device-edge co-inference systems with insufficient communication resources. Note that the VGG16-BN backbone has slight accuracy degradation compared with the early-exit network (similar observations can be found in Refs.[12,24]). In contrast, the four adaptive methods can always meet the inference latency requirement. However, the EE (adaptive, pruning) method suffers from serious accuracy degradation, as too many filters are removed from the encoded feature in order to meet the latency requirement. In the low-bandwidth regime (i.e., 0.1 to 1 Mbit/s), the proposed EE+EP (adaptive) method outperforms the EE (adaptive) baseline. This is because the EP reduces the on-device computation latency of the “easy” samples, so that more “hard” samples can be inferred by the last exit with higher accuracy. The idealized EE+Oracle (adaptive) method achieves both latency and accuracy improvements over our proposed method because it can precisely avoid unnecessary computation. When the communication bandwidth is higher than 1 Mbit/s, all the adaptive methods converge to the highest accuracy, and the early exit prediction mechanism achieves lower latency owing to its strength in saving on-device computation.

Fig.10 Latency and classification accuracy under different communication bandwidths with the VGG16-BN backbone on the CIFAR100 dataset:(a)Latency versus communication bandwidth;(b)Classification accuracy versus communication bandwidth

VI.CONCLUSIONS

In this paper, we considered a device-edge co-inference system empowered by early-exit networks and proposed a low-complexity early exit prediction mechanism, named EP, to improve the on-device computation efficiency. Assisted by learning-based feature compression, we extended the early-exit network for latency-aware edge inference under different bandwidth conditions. Experiment results demonstrated the benefits of our proposed methods in reducing the on-device computation overhead and end-to-end inference latency for resource-constrained edge AI.

However, as can be seen from the experiment results, the proposed method for latency-aware edge inference still has a considerable performance gap compared to the idealized method, implying that there is room for further improvement. Besides, there are many future directions beyond this study. For example, it would be interesting to combine model compression and layer-skipping techniques with the proposed early exit prediction mechanism, and to extend our investigation to multi-device cooperative edge inference.

APPENDIX

A)Output Feature Size of the Early Exit Layers/Residual Blocks

B) Amount of Computations Required to Process Each Part of the On-device Network
