Rethinking Data Center Networks: Machine Learning Enables Network Intelligence

2022-06-29

Bo Li, Ting Wang, Peng Yang, Mingsong Chen, Mounir Hamdi

Abstract—To support the needs of ever-growing cloud-based services, the number of servers and network devices in data centers is increasing exponentially, which in turn results in high complexities and difficulties in network optimization. Machine learning (ML) provides an effective way to deal with these challenges by enabling network intelligence. To this end, numerous creative ML-based approaches have been put forward in recent years. Nevertheless, the intelligent optimization of data center networks (DCN) still faces enormous challenges. To the best of our knowledge, there is a lack of systematic and original investigations with in-depth analysis on intelligent DCN. Therefore, in this paper, we investigate the application of ML to DCN optimization and provide a general overview and in-depth analysis of recent works, covering flow prediction, flow classification, and resource management. We also give unique insights into the technology evolution of the fusion of DCN and ML, together with some challenges and future research opportunities.

Keywords—data center network, intelligent optimization, machine learning, network intelligence

I. INTRODUCTION

As storage and computation progressively migrate to the cloud, the data center (DC), as the core infrastructure of cloud computing, provides vital technical and platform support for enterprise and cloud services. The geographically distributed servers of a data center are logically interconnected by the data center network (DCN), which is enhanced with intelligence in flow prediction, flow classification, and resource management, as shown in Fig. 1. Specifically, both flow prediction and flow classification can provide valuable statistical knowledge for network resource allocation, network congestion control, and other network optimizations, leading to more accurate and robust network services. Therefore, flow prediction and flow classification can be regarded as the cornerstones of network intelligence, providing fundamental services for network resource management and enabling smarter resource provisioning. However, with the rapid rise of the DC scale, flow prediction, flow classification, and resource management have become more and more complicated and challenging. Moreover, the burgeoning 5G has spawned numerous complex, real-time, diversified, and heterogeneous service scenarios [1, 2], and the upcoming 6G drives the further development of network intelligence [3-5]. The emergence of these new services poses new standards and higher requirements for data centers, such as high concurrency, ultra-low latency, and micro-burst tolerance. In terms of DCN automation, benefiting from software-defined networking (SDN), DCs have initially achieved automation in some areas, such as automated installation of network policies and automated network monitoring. However, the implementation of such automation typically depends on predefined policies. Whenever a situation falls outside the predefined policies, the system lacks the adaptive processing capability that autonomous learning would provide, and human intervention is required. In the face of these challenges and issues, traditional solutions [6-9] have become inefficient and inadequate. Driven by these factors, both academia and industry have conducted extensive research on improving the intelligence level of DCNs by leveraging machine learning (ML) techniques [10-13].

Although the research on intelligent DCN has made great progress, it still confronts many challenges. On the one hand, data collection and processing play an important role in the effectiveness of data-driven ML-based models. In particular, the traffic and computation overhead caused by data collection and the potential for data leakage are critical concerns. On the other hand, the research on intelligent DCN is still in its initial stage, and the intelligent solutions in some fields are not mature yet. For example, the huge scale of the network and the diversity of services impose great challenges for flow prediction in dealing with dynamic flows with irregular and random distributions in both time and space dimensions. Besides, the current ML-based traffic classification schemes also have much room for improvement in granularity, time efficiency, and robustness. Moreover, with the increasing complexity of network infrastructure, the explosive growth of the number of devices, and the growing demand for services, traditional resource management solutions can no longer effectively deal with these problems. Above all, the high networking complexity, highly dynamic environment, diverse traffic patterns, and diversified services all make it difficult to directly employ ML techniques in the data center.

In this survey, we comprehensively investigate the research progress of ML-based intelligent DCN. These existing intelligent DCN solutions will be analyzed and compared from different dimensions. Furthermore, in-depth insights into the current challenges and future opportunities of ML-assisted DCN will be provided subsequently. To the best of the authors’ knowledge, this is the first survey about the application of ML in DCNs. The main contributions of this paper are summarized as follows.

· We comprehensively review more than 100 of the most recent related works. The diversity of ML techniques is fully respected to ensure fair comparisons.

· We provide enlightening discussions on the usage of ML algorithms in DCNs, and analyze their effectiveness from various aspects.

· We identify a number of research challenges, directions, and opportunities corresponding to the open or partially solved problems in the current literature.

The rest of this paper is organized as follows. Sections II, III, and IV investigate the existing intelligent DCN solutions in the fields of flow prediction, flow classification, and resource management, respectively, together with in-depth discussion and analysis. Section V provides insights into DCN’s intelligence accompanied by challenges as well as opportunities. Finally, the paper concludes in section VI.

II. FLOW PREDICTION

Flow prediction plays a crucial role in DCN optimization, and serves as a priori knowledge in routing optimization, resource allocation, and congestion control. It can grasp the characteristics and trends of network flow in advance, providing necessary support for relevant service optimization and decision-making. However, the irregular and random distributions of flows in both time and space dimensions impose great challenges. For instance, the flow estimation methods based on the flow gravity model [14, 15] and network cascade imaging [16, 17] struggle to cope with the large number of redundant paths among the massive number of servers.

The current research work can be generally divided into classical statistical models and ML-based prediction models. The classical statistical models usually include autoregressive (AR) models, moving average (MA) models, autoregressive moving average (ARMA) models, and autoregressive integrated moving average (ARIMA) models. These models cannot yet cope with high-dimensional and complex nonlinear relationships, and their efficiency and performance in complex spaces are fairly limited. ML-based prediction models can be trained on historical flow data to find potential logical relationships in complex and massive data, explaining the irregular distribution of network flow in time and space. According to the flow’s spatial and temporal distribution characteristics, we classify ML-based prediction solutions into temporal-dependent modeling and spatial-temporal-dependent modeling. Next, we will discuss and compare the existing representative work of these two schemes from different perspectives, followed by our insights into flow prediction.
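To make the classical baseline concrete, the following is a minimal sketch of a first-order autoregressive model, x[t] = c + phi * x[t-1], fitted by ordinary least squares. The function names and the toy series are illustrative assumptions and are not taken from any surveyed work; real traffic traces are noisy and usually need higher-order or nonlinear models.

```python
def fit_ar1(series):
    """Fit x[t] = c + phi * x[t-1] by ordinary least squares (illustrative helper)."""
    if len(series) < 3:
        raise ValueError("need at least three samples")
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    var_prev = sum((p - mean_prev) ** 2 for p in prev)
    if var_prev == 0:
        raise ValueError("series is constant; phi is not identifiable")
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    phi = cov / var_prev
    c = mean_curr - phi * mean_prev
    return c, phi


def forecast_ar1(last_value, c, phi, steps):
    """Roll the fitted model forward for `steps` future samples."""
    forecasts = []
    value = last_value
    for _ in range(steps):
        value = c + phi * value
        forecasts.append(value)
    return forecasts


# Hypothetical, noise-free flow volumes generated by x[t] = 2 + 0.5 * x[t-1].
volumes = [0.0, 2.0, 3.0, 3.5, 3.75, 3.875]
c, phi = fit_ar1(volumes)
next_two = forecast_ar1(volumes[-1], c, phi, steps=2)
```

Because the model is a single linear recurrence, it captures only a smooth trend toward a fixed level, which illustrates why such models are limited on bursty, nonlinear DCN traffic.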

A. Temporal-Dependent Modeling

The temporal-dependent modeling focuses on the temporal dimension inside the DC. Flow forecasting is usually achieved by using one-dimensional time series data. Szostak et al. [18] used supervised learning and deep learning (DL) algorithms to predict future flow in dynamic optical networks. They tested six ML classifiers based on three different datasets. Hardegen et al. [19] collected about 100 000 flow records from a university DCN and used deep learning to perform a more fine-grained predictive analysis of the flow. Besides, researchers [20-22] have also carried out much innovative work on the basic theoretical research of artificial intelligence (AI). However, some of the experiments to verify the effectiveness of these intelligent schemes are not sufficient. For example, Hongsuk et al. [23] only conducted experimental comparisons of the effectiveness under different parameter settings, lacking cross-sectional comparisons with other methods.

B. Spatial-Temporal-Dependent Modeling

The spatial-temporal-dependent modeling focuses on both temporal and spatial dimensions across DCs. Among the papers we collected, 80% of such intelligent solutions were compared with the classical statistical models and other ML-based prediction models. Evidently, the spatial-temporal-dependent modeling greatly improves the feasibility and accuracy of solutions, but it also increases the complexity and the operational cost of network operations and maintenance (O&M). Such pros and cons may explain why less than half of the commercial DC flow prediction solutions adopt spatial-temporal-dependent modeling according to the statistical data we investigated. Li et al. [24] studied flow transmission schemes across DCs, combined the wavelet transform technique with a neural network, and used the interpolation filling method to alleviate the monitoring overhead caused by the uneven spatial distribution of DC traffic. Their experiments conducted in a Baidu DC showed that the scheme could reduce the prediction error by 5% to 30%. We also note that about 70% of the intelligent flow prediction solutions we collected use real-world data. For the spatial-temporal-dependent modeling solutions we collected, this figure is 80%. Pfülb et al. [25] used DL to predict the inter-DC traffic based on real-world data obtained from a university DC that had been desensitized and visualized.

C. Discussion and Insights

Tab. 1 compares the existing approaches. Our insights are listed below.

· Prior knowledge. ML algorithms such as support vector regression (SVR) and random forest regression (RFR), compared to classical statistical models, can handle high-dimensional data and capture its nonlinear relationships well. Nevertheless, their performance in exceptionally complex spatio-temporal scenarios is still limited, partially because they require additional expert knowledge support, where the model learns through features pre-designed by experts. However, these features usually cannot fully describe the data’s essential properties.

· Quality of source data. The performance of flow prediction heavily depends on the quality of source data, with respect to authenticity, validity, diversity, and instantaneity. Beyond flow prediction, the quality of source data also plays a crucial role in other optimization scenarios of intelligent DCN, which will be detailed in section V.A.

· Anti-interference ability. Network upgrades, transformations, and failures typically cause sudden traffic fluctuations, and these abnormal data will interfere with the accuracy of the model. To improve the accuracy of traffic prediction, it is suggested to provide an abnormal traffic identification mechanism that identifies the abnormal interference data and eliminates them before traffic predictions are executed.
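The anti-interference suggestion above can be sketched as a simple pre-filter. The example below uses a modified z-score based on the median and the median absolute deviation (MAD), a common rule of thumb for outlier screening. It is only one possible identification mechanism, chosen here for illustration; the function name, the threshold, and the sample values are assumptions rather than part of any surveyed scheme.

```python
from statistics import median


def remove_abnormal_samples(samples, threshold=3.5):
    """Drop samples whose modified z-score exceeds `threshold`.

    The modified z-score is 0.6745 * |x - median| / MAD. The 3.5 cutoff is a
    conventional default, not a value tuned for DCN traffic.
    """
    med = median(samples)
    mad = median(abs(s - med) for s in samples)
    if mad == 0:
        # More than half of the samples are identical; nothing can be ranked.
        return list(samples)
    kept = []
    for s in samples:
        score = 0.6745 * abs(s - med) / mad
        if score <= threshold:
            kept.append(s)
    return kept


# Hypothetical per-interval traffic volumes with one failure-induced spike.
traffic = [10, 11, 9, 10, 12, 95, 10, 11]
cleaned = remove_abnormal_samples(traffic)
```

A median-based score is used instead of the mean and standard deviation because a single large spike would otherwise inflate the very statistics used to detect it.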

III. FLOW CLASSIFICATION

Similar to flow prediction, flow classification is also widely used as a priori knowledge for many other optimization modules such as flow scheduling, load balancing, and energy management. Accurate classification of service flows is essential for quality of service (QoS), dynamic access control, and resource optimization. The daily operation and maintenance also require accurate classification of unknown or malicious flows. Moreover, a reasonable prioritized classification ordering can help network operators optimize service applications individually and meet the resource management requirements and service needs. Nevertheless, the highly dynamic and differentiated traffic and the complex traffic transmission mechanism greatly increase the difficulty of flow classification.

Traditional flow classification schemes are usually based on port, payload, and host-behavior information. In the early stages of the Internet, most protocols used well-known port numbers assigned by the Internet Assigned Numbers Authority (IANA). However, protocols and applications began to use random or dynamic port numbers to hide from network security tools. Experimental results show that port-based classification methods are not very effective; for example, Moore et al. [39] observed that the accuracy of classification techniques based on the IANA port list does not exceed 70%. To overcome such limitations, the payload-based flow classification method, also known as deep packet inspection (DPI), was proposed to classify flows by examining the packet payload and comparing it with the protocols’ known signatures [40, 41]. Common DPI tools include L7 filter [42] and OpenDPI [43]. However, although such DPI-based solutions achieve higher classification accuracy than port-based solutions, they incur high computation overhead and storage cost. Furthermore, dealing with the increasingly prominent network privacy and security issues also brings high complexity and difficulty to DPI-based techniques [44, 45]. Thus, some researchers put forward a new kind of flow classification technique based on host behaviors. This technique uses the hosts’ inherent behavioral characteristics to classify flows, overcoming the limitations caused by unregistered or misused port numbers and high loads of encrypted packets. Nevertheless, the location of the monitoring system largely determines the accuracy of this method [46], especially when the observed communication patterns may be affected by the asymmetry of routing.
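The weakness of port-based classification is easy to see in a minimal sketch. The table below holds a handful of IANA-registered well-known ports; the function name and the sample flows are hypothetical, and a production classifier would of course use the full registry.

```python
# A small excerpt of IANA well-known port assignments (illustrative subset).
WELL_KNOWN_PORTS = {
    22: "ssh",
    25: "smtp",
    53: "dns",
    80: "http",
    443: "https",
}


def classify_by_port(src_port, dst_port):
    """Label a flow by the first endpoint port found in the lookup table."""
    for port in (dst_port, src_port):
        label = WELL_KNOWN_PORTS.get(port)
        if label is not None:
            return label
    return "unknown"


# A flow to a registered port is labeled, but the same application moved to a
# dynamic or non-standard port can no longer be recognized.
standard_flow = classify_by_port(51514, 443)
dynamic_flow = classify_by_port(51514, 8443)
```

The lookup is cheap, but its accuracy is bounded by how many applications still respect their registered ports, which is the limitation that motivated payload-based and host-behavior-based schemes.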

Facing such dilemmas of traditional solutions, ML-based flow classification techniques can address the mentioned limitations effectively [47, 48]. Based on the statistical characteristics of data flows, they can complete complex classification tasks with a lower computational cost. Next, we will review and discuss different types of ML-based flow classification techniques, followed by our insights at the end.

A. Traditional ML-Based Classification

Supervised learning can achieve higher classification accuracy. Despite the tedious labeling work, many supervised learning algorithms have been applied in flow classification, including decision trees, random forests (RFs), K-nearest neighbor (KNN), and support vector machine (SVM). Trois et al. [49] generated different image textures for different applications, and they classified the flow matrix information using supervised learning algorithms, such as SVM and random forests. Zhao et al. [50] applied supervised learning algorithms to design a new classification model that achieved an accuracy of about 99% in a large supercomputing center.

Unsupervised learning-based flow classification techniques do not require labeled datasets, eliminating the difficulties encountered in supervised learning and providing higher robustness. In contrast to supervised learning, the clusters constructed by unsupervised learning need to be mapped to the corresponding applications. However, the large gap between the number of clusters and applications makes it more challenging to classify flows. As investigated in Ref. [51], many existing flow classification schemes adopted unsupervised learning algorithms [52-56]. Ref. [12] proposed a cost-sensitive classification method that can effectively reduce classification latency. Ref. [57] proposed a knowledge-defined networking (KDN) based approach for identifying heavy hitters in DCNs, where the efficient threshold for the heavy hitter detection was determined through clustering analysis. Unfortunately, the scheme was not compared with other methods, thus failing to prove its superiority.
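As an illustration of how a clustering analysis can yield a heavy-hitter threshold, the sketch below runs one-dimensional two-means clustering on flow sizes and takes the midpoint between the two centroids as the cutoff. This is an assumed, simplified stand-in and not the algorithm of Ref. [57]; the function name and the flow sizes are hypothetical.

```python
def two_means_threshold(sizes, iterations=100):
    """Cluster 1-D flow sizes into two groups; return the centroid midpoint."""
    if len(set(sizes)) < 2:
        raise ValueError("need at least two distinct flow sizes")
    # Start the two centroids at the extremes so both clusters stay non-empty.
    low, high = float(min(sizes)), float(max(sizes))
    for _ in range(iterations):
        boundary = (low + high) / 2
        small = [s for s in sizes if s <= boundary]
        large = [s for s in sizes if s > boundary]
        new_low = sum(small) / len(small)
        new_high = sum(large) / len(large)
        if new_low == low and new_high == high:
            break  # Assignments are stable.
        low, high = new_low, new_high
    return (low + high) / 2


# Hypothetical flow sizes (e.g., in MB): many mice flows and a few elephants.
flow_sizes = [1, 2, 3, 2, 1, 100, 120, 110]
threshold = two_means_threshold(flow_sizes)
heavy_hitters = [s for s in flow_sizes if s > threshold]
```

The appeal of a learned threshold is that it adapts to the observed traffic mix instead of relying on a fixed, hand-picked byte count.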

B. DL-Based Classification

The service data and traffic data generated in DCNs are typically massive, multidimensional, and interrelated. It is very challenging to dynamically explore the valuable relationships among these data in real time. To this end, deep learning, such as convolutional neural network (CNN), recurrent neural network (RNN), and long short-term memory (LSTM), is introduced to DCN as a promising way to find the potential relationships among these massive and interrelated data. However, compared with the two traditional ML-based classification techniques above, the DL-based schemes have no advantage in training time and classification speed. Thus, Wang et al. [58] focused on improving the speed of classification and implemented a high-speed online flow classifier via field programmable gate array (FPGA), where the authors claimed that it can guarantee an accuracy of more than 99% while reducing the training time to one-thousandth of that of the central processing unit (CPU) based approach. Liu et al. [59] implemented a more fine-grained flow classification method based on gated recurrent unit (GRU) and reduced flow monitoring costs. In addition, Zeng et al. [60] proposed a lightweight end-to-end framework for flow classification and intrusion detection by deeply integrating flow classification and network security.

C. RL-Based Classification

In reinforcement learning (RL), an agent iteratively interacts with the environment, aiming to find a globally optimal classification scheme according to the rewards and punishments fed back in a network scenario. Specifically, after the agent takes an action, the environment gives corresponding feedback and transitions to a new state. At the same time, the environment generates a signal, typically a numerical value; the discounted sum of these signals is called the return, which the agent seeks to maximize when choosing actions. We can classify feedback values as rewards or punishments depending on the contribution made by the action. Those that contribute positively to maximizing the goal are called rewards, while the opposite are punishments. More specifically, when an agent is rewarded for performing an action in a state, the probability that it performs that action in that state increases; when it is punished, that probability decreases. To handle the highly dynamic network conditions in DCNs, Tang et al. [61] proposed a new RL-based flow splitter that effectively reduced the average completion time of flows, especially for delay-sensitive mice flows. However, because RL tends to fall into locally optimal solutions and takes longer to train, this paradigm has not been widely used in flow classification.

D. Discussion and Insights

ML can overcome the limitations and constraints of traditional flow classification schemes. In view of this, numerous ML-based flow classification schemes have been proposed. Tab. 2 summarizes and compares these existing works from various perspectives. Through systematic investigations and in-depth analysis, we summarize a general flow classification workflow, as shown in Fig. 2, ranging from feature collection at different levels, data pre-processing, and model training to model inference that outputs the classification results. Here, we summarize several key concerns that need to be addressed, as listed below.

· Fine granularity. The complex and diverse DCN service scenarios, high requirements on flow control, and more precise network management are driving flow classification techniques in a more fine-grained direction. A fine-grained and accurate classification scheme can help allocate network resources more efficiently, ensuring a better user experience. However, most of the traditional and ML-based classification schemes are based solely on a rough general classification scale, for example, only on the network protocol or a single function of the application, which cannot provide better QoS. Even where fine-grained classification has been achieved in some simplified scenarios, the computational overhead, monitoring overhead, stability, and feasibility remain major concerns that need to be considered.

· Flexibility and robustness. To meet various service needs, flow classification schemes should consider the timeliness and effectiveness of classification, which could help services meet their service-level agreements (SLA). Using FPGA is a feasible way to improve the response speed of classification and avoid the influence of abnormal conditions on the classification efficiency. When encountering common network anomalies such as jitter, packet loss, and retransmission, the efficiency of a robust flow classification solution should not degrade. Moreover, the quality of extracted data features can also significantly affect the final result of classification, and redundant features will reduce the accuracy of the algorithm along with additional computational overhead [62].

IV. RESOURCE MANAGEMENT

As one of the most critical optimization problems in DC, resource management involves the allocation, scheduling, and optimization of computing, storage, network, and other resources, which directly affects the overall resource utilization and resource availability, and further affects the user experience and the revenue of service providers. However, the extreme dynamics of DCN, the increasing networking complexity of large-scale DCN, and the highly diversified services bring great challenges for traditional unintelligent solutions. ML provides an effective way to deal with these challenges, where it can help maximize the profit of service providers, provide a better quality of experience (QoE) for tenants, and effectively reduce energy costs.

There has been a wide variety of resource management solutions for virtualized cloud data centers, such as multilevel queues [77], simulated annealing [78], priority-based [79], and heuristic algorithms [80]. The advent of virtualization allows virtual machines (VM), virtual containers (VC), and virtual networks (VN) to be implemented on a shared physical server. However, the association between various physical resources and virtual resources is highly dynamic throughout the life cycle of services. Preliminary research findings demonstrated that traditional unintelligent resource management methods cannot mine the potential relationships between complex parameters quickly and dynamically. Besides, multi-objective optimization, such as considering QoS, energy cost, and performance optimization at the same time, also increases the difficulty of network optimization. Furthermore, in a large-scale data center, the complex configuration is also a challenging problem: once a configuration error occurs, it can cause incalculable damage to cloud services, especially latency-sensitive services. Machine learning can make up for the deficiency of traditional unintelligent methods by learning from historical data to make appropriate strategies dynamically and adaptively. Therefore, numerous ML-based solutions have been proposed. For example, Murali et al. [81] proposed a distributed NN-based approach to achieve efficient resource allocation.

In data center networks, the types of network resources are rich and diverse. At the network level, a resource can be a physical hardware resource (e.g., server, switch, port, link, CPU, and memory) or an abstract software resource (e.g., virtual network, virtual node, virtual link, and virtual switch). In addition, network resources can be task/job-oriented or QoS-oriented. From the perspective of the resource life cycle, resource management can also focus on resource prediction or resource utilization optimization. In view of the diversity of resource management methods and the difference of optimization objectives, we divide the existing ML-based resource management schemes into the following five categories: task-oriented, virtual entity-oriented, QoS-oriented, resource prediction-oriented, and resource utilization-oriented resource management. Next, we will discuss and analyze these five types of schemes respectively.

A. Task-Oriented Resource Management

In cloud data centers, there are various types of tasks with different performance requirements, such as computation-intensive tasks and latency-sensitive tasks, which requires resource management solutions to be customized for different kinds of tasks. Tesauro et al. [82] employed a reinforcement learning algorithm to optimize the allocation of computing resources by global arbitration, efficiently allocated server resources (such as bandwidth and memory) for each web application, and addressed the limitations of reinforcement learning with a queuing model policy. Marahatta et al. [83] classified tasks into failure-prone and non-failure-prone tasks based on the failure rate predicted by a DNN model and executed different allocation policies for different types of tasks. In addition, energy-efficient resource management can also help reduce the energy consumption of the data center. Yi et al. [84] scheduled the load of computationally intensive tasks based on deep reinforcement learning (DRL) to minimize the energy cost.

B. Virtual Entities-Oriented Resource Management

Virtualization allows tasks with different services and performance requirements to share a series of resources. Generally, virtualized entities include VMs, VCs, and VNs, and we define the solutions that allocate resources for virtualized entities as virtual entities-oriented resource management.

To ensure the network performance while minimizing power consumption, Caviglione et al. [85] applied a DRL algorithm, named Rainbow deep Q-network (DQN), to solve the multi-objective VM placement problem. Their model was based on the percentages of network capacity, CPU, and disk, with full consideration of energy cost, network security, and QoS. Liu et al. [86] applied the Q-learning algorithm to distributed management of resources, and their proposed hierarchical network architecture can provide resource allocation and power management of VMs. Experiments showed that when the number of physical server clusters is set to 30, for 95 000 jobs, the proposed hierarchical framework can reduce the network energy consumption and latency by 16.12% and 16.67% respectively, compared with the DRL-based resource allocation. It can be seen that in addition to the resource allocation for tasks, the resource allocation for virtual entities also greatly affects the operation efficiency and power consumption of the DC. Jobava et al. [87] managed VM resources through flow-aware consolidation. The learning automata algorithm was used to divide the virtual clusters to reduce the total communication cost, and then the simulated annealing algorithm was employed for the cluster placement. Both phases were traffic-aware.

C. QoS-Oriented Resource Management

Much resource management research improves the overall QoS of services by optimizing resource allocation, although QoS is not its primary objective. Two representative works that explicitly target QoS are as follows. Wang et al. [88] proposed an on-demand resource scheduling method based on DNN to ensure the QoS of delay-sensitive applications. Yadwadkar et al. [89] leveraged SVM to perform resource prediction and meet QoS requirements with a performance-aware resource allocation policy. To mitigate the uncertainty of prediction, they introduced the concept of a confidence measure.

D. Prediction-Oriented Resource Management

Resource prediction plays an essential role in resource management. Timely and accurate resource forecasting can help the data center achieve more effective resource scheduling, and further improve the overall performance of the data center network. However, although virtualization and other technologies greatly enrich the types of resources and improve service efficiency, they also increase the difficulty of resource prediction. Besides, Aguado et al. [90] indicated that the prediction accuracy of traditional unintelligent algorithms cannot be guaranteed on account of the diversity of services and bandwidth explosion. Moreover, to cope with unpredictable resource demand, traditional resource management mechanisms usually over-allocate resources to ensure their availability, which harms the overall resource utilization of data centers. How to deal with the differentiated requirements of various workloads and precisely predict resources remains a challenge. Yu et al. [91] proposed a DL-based flow prediction and resource allocation strategy in optical DCNs, and experimental results demonstrated that their approach achieved better performance than a single-layer NN-based algorithm. Iqbal et al. [13] proposed an adaptive observation window resizing method based on a 4-hidden-layer DNN for resource utilization estimation. Thonglek et al. [92] predicted the required resources for jobs using a two-layer LSTM network, which outperformed the traditional RNN model, with improvements of 10.71% and 47.36% in CPU and memory utilization, respectively.

E. Resource Utilization-Oriented Resource Management

Resource utilization is regarded as an intuitive and important metric to evaluate a resource management mechanism. This type of resource management scheme typically improves resource utilization through task scheduling, VM migration, and load balancing algorithms. It is worth noting that the dynamic change of resource demand in DCs requires the algorithm to automatically optimize resource utilization according to the changing network environment. However, the traditional unintelligent solutions struggle to cope with the high variability of the network environment. Therefore, a few researchers have begun to apply ML to solve these problems. Che et al. [93] performed task scheduling based on the actor-critic DRL algorithm to optimize resource utilization and task completion time. Telenyk et al. [94] used the Q-learning algorithm for global resource management, and realized resource optimization and energy saving through VM scheduling and VM aggregation. In addition to improving resource utilization through scheduling and consolidation, Yang et al. [95] focused their research on the optimization of storage resources, that is, how to efficiently store data. They used distributed multi-agent RL methods to achieve joint optimization of resources, which effectively improved network throughput and reduced stream transmission time.

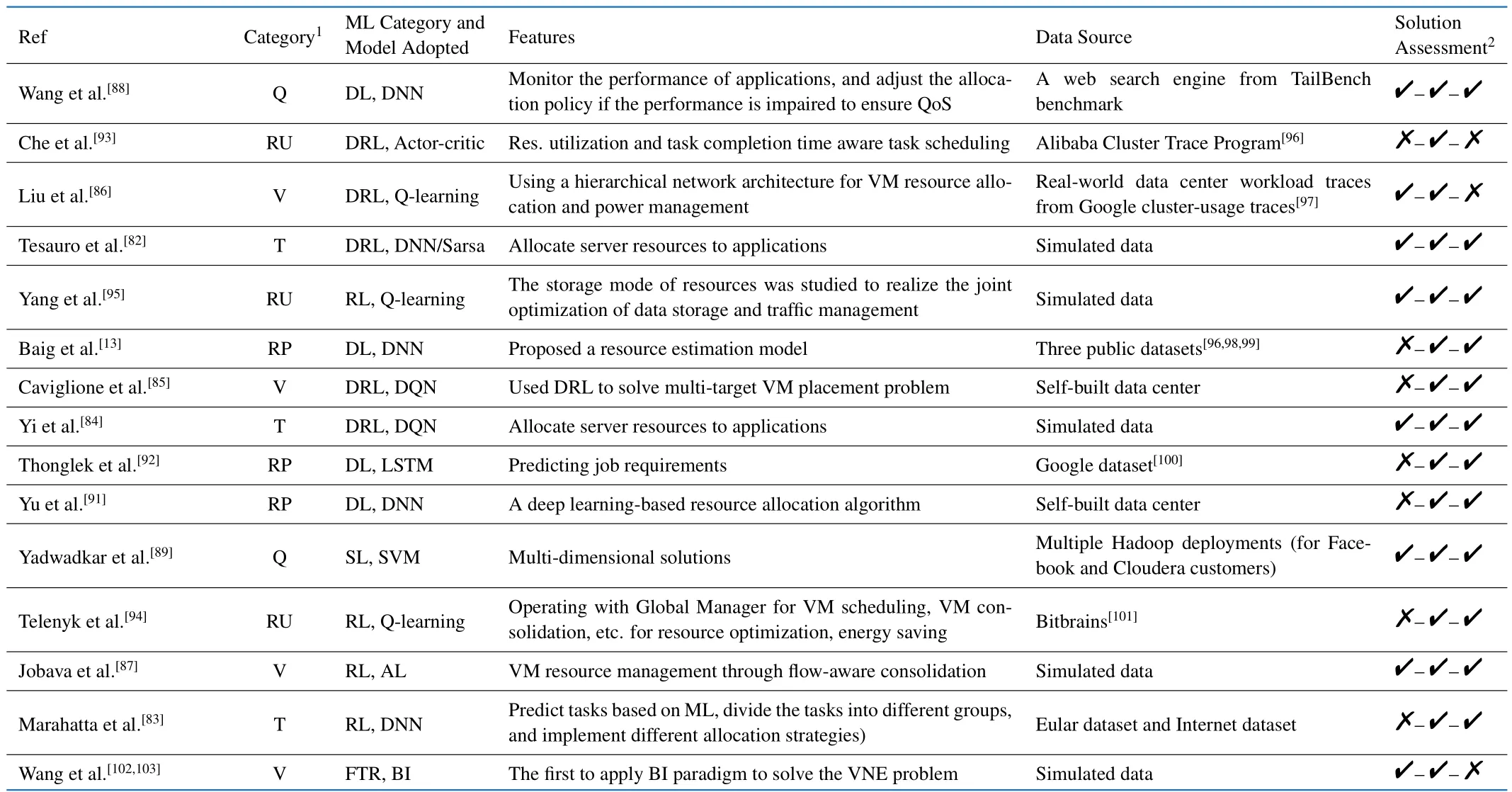

F. Discussion and Insights

Along with the expansion of service scenarios, the resource scheduling among various virtualized entities is getting more complicated. Increasingly, researchers adopt DL or DRL to achieve more intelligent resource management. We list the details of each intelligent solution in Tab. 3. Below, we summarize several key concerns that deserve further study.

Tab. 3 Research progress of data center network intelligence: resource management

· Stability and scalability of models. Taking reinforcement learning as an example, early decisions may have relatively poor consequences due to a lack of domain knowledge or good heuristic strategies [82]. When the agent performs tentative actions, it may fall into locally optimal solutions if not appropriately trained. Besides, reinforcement learning may lack good scalability in large DCNs.

· Adaptability to multi-objective and multi-task scenarios. Whether it is a traditional resource allocation scheme (such as priority-based VM allocation [79] or heuristic-based resource allocation [80]) or an intelligent resource allocation scheme, its performance is usually evaluated in a specific single scenario. A qualified solution, however, should fully consider the richness of scenarios and requirements, and be able to adapt to multi-scenario and multi-task network environments.

· Security of resource allocation.The flexibility of virtualized resources can make vulnerability or fault propagation faster,and fault recovery and fault source tracing more difficult.Padhy et al.[104]disclosed that vulnerabilities were found in VMware’s shared folder mechanism,which could allow the users of guest systems to read and write to any part of the host file system,including system folders and other securitysensitive files.

· Perspective of resource life-cycle.The allocation,utilization,and recycling of resources occur frequently.Current intelligent solutions focus more on the prediction of resource allocation and maximization of benefits in the process of resource allocation,but lack related studies on resource collection and recycling.

V.INSIGHTS AND CHALLENGES

Research on DCN intelligence is being extensively explored and has made certain achievements. However, most existing work relies on an implanted ML module to make the network intelligent, so that ML acts more like a tool attached to the network. The research is still at an early stage, and many open problems remain to be solved. We argue that the intelligence of the future DCN should instead be an intrinsic, natural attribute of the network rather than an add-on. Before concluding this paper, we further discuss the challenges and opportunities of DCN intelligence from four aspects: industry standard, model design, network transmission, and network visualization.

A.Industry Standard

The quality of source data covers authenticity, validity, diversity, and timeliness. Simulated data are less convincing, data generated for a specific scenario lack universal validity, data with only a few features make it hard to improve prediction accuracy, and outdated historical data lose timeliness and have little value. Some existing works provide no information about their data source, which makes it difficult to examine the quality of the data sets and the validity of the experiments. Researchers should therefore develop a data quality assessment standard as soon as possible; a quantifiable standard would make experimental results more convincing.

B.Model Design

1) Intelligent Resource Allocation Mechanism: Data center networks need to intelligently perceive scenarios and services, and to consider the full life-cycle of resource management, i.e., resource prediction, allocation, utilization, integration, and recovery, while satisfying security requirements. However, most of the existing solutions focus on resource prediction, allocation, and utilization optimization, and there is little research on resource fragment integration and resource recovery.

2) Inter-DC Collaborative Optimization Mechanism: Inter-DC network optimization is an important but more complex research topic, because the optimization usually requires close collaboration among multiple data centers. How to achieve efficient collaboration among the intelligent models of different data centers has therefore become a major challenge. Ideally, all separate models could be trained globally on the complete data of all DCs. In practice, however, local data normally cannot be transferred freely across data centers because of privacy concerns and bandwidth overhead. Hence, exploring efficient methods for collaboration among inter-DC intelligent models while ensuring data privacy and security is a good research opportunity.
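One family of techniques that fits this constraint is federated learning, in which each DC trains on its own data and only model parameters are exchanged. The sketch below illustrates federated averaging on a one-dimensional linear model. It is an assumption-laden illustration rather than a method proposed in this survey, and all function names (`local_update`, `federated_average`, `federated_round`) are hypothetical.

```python
def local_update(weights, data, lr=0.01):
    """One gradient-descent step of least squares on a DC's private (x, y) pairs."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return [w - lr * grad_w, b - lr * grad_b]


def federated_average(local_weights, sample_counts):
    """Average per-DC parameter vectors, weighted by local sample count."""
    total = sum(sample_counts)
    dim = len(local_weights[0])
    return [
        sum(w[i] * n for w, n in zip(local_weights, sample_counts)) / total
        for i in range(dim)
    ]


def federated_round(global_weights, datasets, lr=0.01):
    """Run one round: each DC updates locally, then only parameters are merged."""
    local = [local_update(global_weights, d, lr) for d in datasets]
    return federated_average(local, [len(d) for d in datasets])


# Usage: two DCs whose private data both follow y = 2x.
datasets = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
weights = [0.0, 0.0]
for _ in range(200):
    weights = federated_round(weights, datasets)
```

Raw samples never leave their data center in this scheme; only two numbers per DC cross the inter-DC link in each round. Whether parameter exchange alone satisfies a given privacy requirement is a separate question that this sketch does not address.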

3) Adaptive Feature Engineering: Feature engineering largely determines the final effectiveness of ML models. Usually, the feature engineering in an ML model is designed for a single problem in a specific scenario. However, a data center network has many layers, so the data collected at each layer vary greatly, and the diversity of services makes the corresponding feature selection difficult. How to make feature engineering adapt to network scenarios and service types in such a complex environment is a key challenge for DCN.

4) Intelligent Model Selection Mechanism: No single learning model works for all scenarios, and every model has its own limitations in different scenarios. The highly dynamic nature of the network environment, the diversity of service requirements, the heterogeneity of network data, and the inconsistency of optimization goals make it extremely difficult and time-consuming to select the most suitable machine learning model. This is one of the key pain points in applying artificial intelligence to data center networks.
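As a small illustration of why model selection depends on the scenario, the sketch below picks among three deliberately simple one-step-ahead predictors by their error on a validation window. The predictors, the traffic series, and all names are assumptions made for this example; a real DCN would compare far richer models in the same way.

```python
def last_value(history):
    """Predict that the next sample equals the most recent one."""
    return history[-1]


def moving_average(history, window=3):
    """Predict the mean of the last `window` samples."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def global_mean(history):
    """Predict the mean of all samples seen so far."""
    return sum(history) / len(history)


CANDIDATES = {
    "last_value": last_value,
    "moving_average": moving_average,
    "global_mean": global_mean,
}


def validation_mae(predict, series, start):
    """Mean absolute one-step-ahead error over series[start:] (start >= 1)."""
    errors = [abs(predict(series[:t]) - series[t]) for t in range(start, len(series))]
    return sum(errors) / len(errors)


def select_model(series, start):
    """Return the name of the lowest-error candidate and all scores."""
    scores = {name: validation_mae(f, series, start) for name, f in CANDIDATES.items()}
    return min(scores, key=scores.get), scores


# Usage: a steadily growing load and an oscillating load favour different models.
trending = [1, 2, 3, 4, 5, 6, 7, 8]
oscillating = [10, 12, 10, 12, 10, 12, 10, 12]
best_trending, _ = select_model(trending, start=4)
best_oscillating, _ = select_model(oscillating, start=4)
```

On the trending series the last-value predictor wins, while on the oscillating series the global mean wins, so even this toy setup has no universally best model.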

C.Network Transmission

As data center service scenarios become more complex, network scale grows, and the requirements for user experience and service quality rise, traditional communication protocols are no longer adequate to cope with these challenges. The data center network therefore requires more efficient and intelligent communication protocols to ensure fast convergence, high bandwidth, low latency, and no packet loss for network transmissions. However, the new communication protocols proposed in recent years also fail to meet data center requirements in heterogeneous scenarios [105] and have compatibility issues with legacy protocols [106]. Artificial intelligence can help network protocols achieve better responsiveness, predictability, and self-adjusting ability. Nevertheless, there is still little research on how to design a more friendly and efficient ML-assisted transmission protocol, which is a valuable opportunity for future research.

D.Network Visualization

Along with the rapid growth of DCNs, both the network size and the volume of network data have increased dramatically. As a result, the burden of network supervision is getting heavier. A proven way to accurately monitor and control the DCN based on this massive amount of data is network visualization. Network visualization is a comprehensive and concise graphical display of network data, and it can reduce the burden of traditional network monitoring. It helps O&M personnel accurately perceive the network by explicitly presenting its real-time status. Currently, the DCN still suffers from the following three kinds of invisibility, which lead to inefficient network O&M and optimization.

· Routing invisible: When routing is invisible, transmission changes cannot be reproduced and the process of change cannot be backtracked. This often leads to a difficult situation: a user reports a network fault, but by the time the O&M personnel start to locate it, the fault has disappeared and there is no historical information to query. As a result, the cause of the fault cannot be diagnosed.

· End-to-end service pipeline invisible: Neither the actual forwarding path corresponding to the service pipeline nor the performance of that path can be seen. As a result, after a network failure occurs, the failure can only be located hop by hop, which is time-consuming and laborious.

· QoS invisible: Service quality is not visible, so the user experience cannot be perceived. Traditional network management tools usually provide only the performance data of the network and cannot show the QoS of the services it carries. In other words, network performance and service quality are treated separately, without any correlation, which makes fault location inefficient.
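For the routing-invisibility item above, the missing ingredient is queryable history. The following sketch shows one possible shape for it: an append-only log of path changes per flow that can answer which path was in force at a past time. The class `RouteHistory`, its methods, and the flow and switch identifiers are hypothetical, and the sketch assumes that path changes are reported with monotonically ordered timestamps.

```python
import bisect


class RouteHistory:
    """Append-only log of path changes per flow, queryable by timestamp."""

    def __init__(self):
        self._times = {}  # flow id -> sorted list of change timestamps
        self._paths = {}  # flow id -> list of paths, aligned with _times

    def record(self, flow, timestamp, path):
        """Store a path change; timestamps per flow must be non-decreasing."""
        times = self._times.setdefault(flow, [])
        if times and timestamp < times[-1]:
            raise ValueError("timestamps must be recorded in order")
        times.append(timestamp)
        self._paths.setdefault(flow, []).append(tuple(path))

    def path_at(self, flow, timestamp):
        """Return the path in force at `timestamp`, or None if unknown."""
        times = self._times.get(flow, [])
        i = bisect.bisect_right(times, timestamp)
        return self._paths[flow][i - 1] if i else None


# Usage: a flow is rerouted at t=20; the earlier path can still be recovered.
history = RouteHistory()
history.record("flow-1", 10, ["s1", "s2", "s4"])
history.record("flow-1", 20, ["s1", "s3", "s4"])
earlier_path = history.path_at("flow-1", 15)
```

With such a log, a fault reported after the fact can be matched against the path that was actually in use when it occurred, instead of the path visible at diagnosis time.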

Overall, the research on applying ML to achieve an intelligent DCN is still at an early stage. There remain many opportunities to further explore the potential and value of applying ML technologies in various areas of data center networks. We conclude that network intelligence will become the future trend of data center network development. In the foreseeable future, ML-based intelligent networking will become a core research direction of cloud computing, driving the data center network from an SDN-enabled automatic network to an ML-driven intelligent network.

VI.CONCLUSION

In this survey paper, we provide a comprehensive review of representative research works, with in-depth analysis and discussion from various perspectives including flow prediction, flow classification, and resource management. We thoroughly explore the challenges that exist in current research and the opportunities for future research, together with our key insights. To sum up, research on the application of AI in DCNs is still in its infancy, but it has attracted the attention of more and more researchers and has achieved preliminary results in many fields. However, there are still many problems and deficiencies in the current research, which remain to be further studied.
