Influence of image data set noise on classification with a convolutional network

2019-04-04WeiTaoShuaiLiguoZhangYulu

Wei Tao Shuai Liguo Zhang Yulu

(School of Mechanical Engineering, Southeast University, Nanjing 211189, China)

Abstract:To evaluate the influence of data set noise, the network in network(NIN) model is introduced and the negative effects of different types and proportions of noise on deep convolutional models are studied. Different types and proportions of data noise are added to two reference data sets, Cifar-10 and Cifar-100. Then, this data containing noise is used to train deep convolutional models and classify the validation data set. The experimental results show that the noise in the data set has obvious adverse effects on deep convolutional network classification models. The adverse effects of random noise are small, but the cross-category noise among categories can significantly reduce the recognition ability of the model. Therefore, a solution is proposed to improve the quality of the data sets that are mixed into a single noise category. The model trained with a data set containing noise is used to evaluate the current training data and reclassify the categories of the anomalies to form a new data set. Repeating the above steps can greatly reduce the noise ratio, so the influence of cross-category noise can be effectively avoided.

Key words:image recognition; data set noise; deep convolutional network; filtering of cross-category noise

In recent years, machine learning, deep learning and other methods in image recognition have experienced vigorous development. A variety of deep convolutional network models have made significant contributions to the field of image recognition, increasing the accuracy of image classification to new levels and achieving breakthroughs in all application areas. Deep learning is a branch of machine learning. It is a hierarchical model structure similar to that of the human brain. It extracts the characteristics of the input data from the bottom layer to the highest layer to establish a mapping relationship from the bottom signal to high-level semantics[1]. Today, it has become an inevitable trend for the Internet, big data, and artificial intelligence[2].

The CNN is the first successful learning algorithm for training multilayer network structures. CNNs use a gradient-based improved back-propagation algorithm to train the weights in the network. They implement a multilayer filtered network structure for deep learning and a global training algorithm combined with filters and classifiers, which reduces the complexity of the network model and the number of weights[3]. The CNN has achieved very good results in the image processing field[4]. After the development of the Alex-Net deep convolutional network model[5]for the ILSVRC-2012 fire in 2012, researchers continued to propose deep convolutions, such as the ALL-NET[6], ResNet-50[7], Inception-V3[8], and VGG-19[9]models. These models have achieved good performances in tasks such as image recognition, target detection, and semantic segmentation. The basis of these well-performing models is the need for sufficient training data to provide supervised training; therefore, the quality of the data sets is a fundamental factor that limits the training results of the deep convolutional network model.

Since data sets are usually developed manually, the quality of data sets is subjectively influenced by people. An important basis for judging the quality of a data set is the presence of noise images in each category. Elaborately produced open-source benchmark data sets, whose images of various categories are often represented by exact and true category semantics, can often achieve a high classification accuracy by using classification models applied to these data sets. Inspired by this, this paper starts from the perspective of image classification, uses noise data to train the classification model, and then uses the classification accuracy of the noiseless test set to reflect the impact of data set noise on the degraded performance of the deep convolutional network classification.

1 Data Set Noise Analysis

When the data set is in the production stage and the artificial subjective classification error occurs, it may add the remaining similar image in the data set to a certain category, or it may mistakenly add the image of an unrelated category to a specific category. The first operation introduces cross-category noise, and the second introduces random noise. For cross-category noise, the artificial standard category often mislabels multiple images in the same data class as a specific category. At this time, the noise concentrates on a certain class. In contrast, random noise has a wide range of irrelevant category images. For example, if the original image is randomly cropped during the training phase, the background containing no target is used as the specified data label. This background content often does not belong to any category. In the same data set, the proportion of noise data is usually uncertain, depending on the artificial subjective recognition experience and the proportion of the size in the target image.

To facilitate the analysis of noise data, this paper selects the reference data set as the data source and then samples the source data set to obtain different proportions of noise data. The subsequent experiments set multiple proportions of the noise control group for classification training. Finally, the normal test data is used to detect the classification accuracy. The idea of this analysis is as follows. After the benchmark data set is carefully annotated manually, the noise data is completely removed. At this time, artificially introducing certain types of noise can effectively analyze the effects of different noise types.

In this case, when simulating the convergence conditions of the training process, it is necessary to add noise to the training set and verification set during the noise analysis. The test set is used to reflect the performance degradation. Only test data with accurate classification can accurately reflect the real situation of the degradation of recognition performance under noise data. Therefore,noise should not be introduced into the test data in the analysis phase.

2 Experimental Data and Model Selection

This paper uses Cifar-10 and Cifar-100, which are two benchmark data sets, for classification testing. Both data sets add a specific proportion of random noise. To better judge the impact of cross-category noise on the quality of the data sets, a comparison was made here between the impact of single-category and multicategory cross-category noise on model recognition performance. Cifar-10 has 10 different categories. Furthermore, we tested its 10 categories separately. The Cifar-100 data set has 20 coarse labels, each with 5 fine labels. In this paper, among the 20 coarse labels, one fine label for each coarse label was arbitrarily selected as the final test object.

2.1 Introducing random noise

To introduce random noise images in the training set and validation set, the experiment randomly selected some images from ImageNet and scaled them to 32×32 and then added them to the training data and verification data proportionally.

On the third31 morning after they had left their father s house they set about their wandering again, but only got deeper and deeper into the wood, and now they felt that if help did not come to them soon they must perish

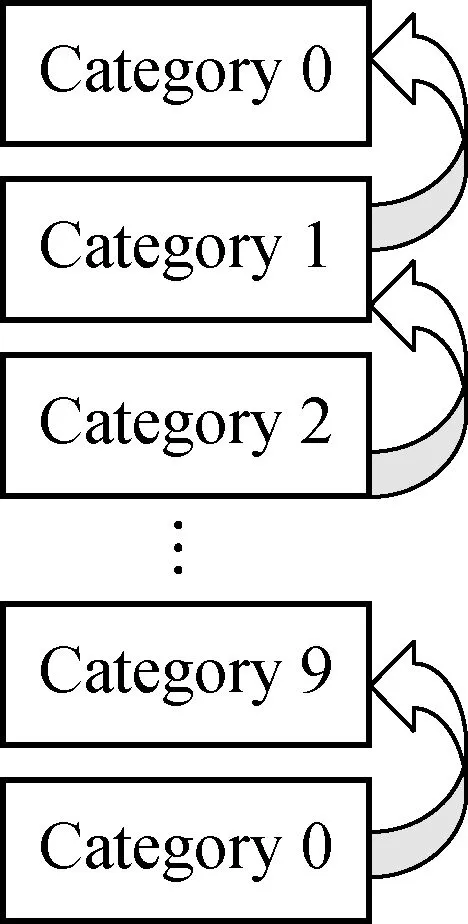

2.2 Introducing cross-category noise

As shown in Fig.1, to introduce a cross-category noise image into the training data, in Cifar-10, the experiment directly added other categories to a specific category at a certain proportion. For Cifar-100, 20 coarse labels were separated from 100 categories, and 5 fine labels were separated from each coarse label. Among the 20 coarse labels, one fine-label was arbitrarily selected as a test object, and the other four fine labels were introduced as noise into the category at the same ratio. Therefore, the effect of introducing cross-category noise can be obtained. Here, (0) to (4) represent fine labels in every coarse label.

(b)

Fig.1 Adding cross-category noise to Cifar-10 and Cifar-100.(a) Cifar-10;(b) Cifar-100>

2.3 Details of noise introduction

In this paper, four noise ratios are set to obtain reliable inferences from test data from multiple control groups. In both data sets, the proportion of noise images in the training set for each category is the same. In Cifar-10, the random noise and cross-category noise ratios are set to be 2.5%, 5%, 10%, and 20%, respectively. In Cifar-100, the ratio of random noise to total cross-category noise is the same as that of cifar-10. However, due to the introduction of four categories of noise, each noise ratio introduced should be the total cross-category noise ratio divided by four.

2.4 Model selection and its superiority

Classic convolutional neural networks consist of alternatively stacked convolutional layers and spatial pooling layers. The convolutional layers generate feature maps by linear convolutional filters followed by nonlinear activation functions (e.g., rectifier, sigmoid, and tanh). Using the linear rectifier as an example, the feature map can be calculated as

(1)

where (i,j) represents the pixel index in the feature map;xi,jis the input patch centered at location (i,j);kis used to index the channels of the feature map.

In fact, the high-level features of the CNN are actually a combination of low-level features through some kind of operation. According to this idea,the principle of NIN[10]is to perform more complex operations in each local receptive field and implement an improved algorithm for the convolutional layer: the MLP convolutional layer. Compared to the traditional convolutional layer process, the Mlpconv layer can be seen as a microlayer network in the local receptive field of each convolution. Fig.2 shows the difference between the linear convolution layer and the Mlpconv layer.

(b)

Fig.2 The difference between the linear convolution layer and the Mlpconv layer.(a) Linear convolution layer; (b) Mlpconv layer

Using the micronetwork of the multilayer MLP, more complex operations are performed on each local receptive field neuron, and the nonlinearity is improved. The calculation process is as follows:

(2)

wherenis the number of layers in the multilayer perceptron. A rectified linear unit is used as the activation function in the multilayer perceptron.

(b)

Fig.3 The difference between fully connected layers and global average pooling. (a) Fully connected layers; (b) Global average pooling>

Based on the above advantages, this paper uses NIN to classify categories. In fact, NIN, with a small number of parameters, has good performance with CIFAR-10 and CIFAR-100. The detailed settings are as follows. The model uses Relu activation to speed up model convergence. The loss term of the training optimization goal consists of the classification loss and L2 regularization term, where the weight of the L2 regularization term is 0.001. SGD is used to evaluate the loss function, and the learning rate is exponentially decayed. Otherwise, the dropout is set to be 0.5 to prevent overfitting. Finally, a softmax classifier is added to identify each category type. The number of iterations of the training process is 90.

3 Classification under Noise Conditions

In this experiment, Cifar-10 and Cifar-100 were trained using the noise settings above, and the NIN model was used to calculate the accuracy and average of each category on the test set.

3.1 Analysis of the average accuracy of each data set

Tab.1 shows the performance of the test set under the three training set training models by introducing various noise ratios. The leftmost AP value is the average accuracy rate predicted by the model trained using only normal graph data in the test set. The middle row of the AP values is the average accuracy under random noise. The rightmost AP value is the average accuracy under cross-category noise. Whether it is random noise or cross noise, the accuracy of model recognition decreases as the noise ratio increases. The former has less influence on the recognition ability of the model, and the latter has more significant effects.

In fact, if we take an extreme example, the effects of random noise and cross-category noise on the model become more apparent. Assuming that the model is perfect enough to achieve the correct classification of all categories, we can obtain the following conclusions:

IfRn=100% andKn=1, then the original category is completely replaced by a new category,P1=1,A=0.

Otherwise, ifRn=100% andKn=T, then the cross-category noise is approximated as random noise; therefore, the probability density function is

Tab.1 The average precision of classification of Cifar-10 and Cifar-100 in different noise situations

(3)

Thus, we can obtainPT≈1/T,A=1/T. Here,Rnis the rate of noise in the training data set;Knis the number of cross-category noise types;P1is the probability of adding cross-category noise;Tis the number of training set images;PTis the probability of adding any one ofTkinds of noise. The variableAis the recognition accuracy of the original category.

Obviously, the influence of cross-category noise is greater than that of random noise.

3.2 Methods to reduce the effects of cross-category noise

The greater the proportion of cross-category noise, the more significant the influence on the negative effects of the model. Therefore, reducing the proportion of cross-category noise effectively improves the recognition ability of the model. In Cifar-10, the training data is classified using a model that is initially trained to contain noise data. As shown in Tab.2, from the mixing matrix of the classification results, most of the cross-category noise categories can be effectively identified. For example, the 1 000 cross-category noise images whose labels are 0 in the training data have real labels of 1. A total of 909 of these images were judged to have labels of 1. Therefore, the screening of images that have been categorized as other categories in the training data can greatly reduce the cross-category noise ratio, and the recognition performance of the correspondingly retrained model is greatly improved.

Tab.2 The mixing matrix of the classification of cross-category noises in Cifar-10 before iterations

To obtain more accurate training data, we designed such an algorithm to separate interspersed cross-category noise as much as possible. By judging the data in the mixing matrix shown in Fig.9, if the recognition accuracy of the category can be improved, the images are reclassified. Then, the images are retrained, and the training data is classified to obtain a new mixing matrix. Here, we set the number of iterations to be 3. The specific flow chart is shown in Fig.4.

Fig.4 The algorithm flowchart for filtering of cross-category noise

After three iterations, we can obtain a new mixing matrix, as shown in Tab.3. We find that the prediction of each introduced cross-category noise leaves only the noise category introduced and the original category. This shows that iterative screening does have a positive effect on correcting a wrong label. At the same time, it also shows that cross-category noise does affect the model’s extraction of the original category features, resulting in some images being incorrectly categorized. Of course, the more iterations there are, the less the noise of the data. After three iterations, the correction rate is 91.57% according to the following formula:

(4)

whereCis the correction rate;cis the row label of the mixing matrix;Nis the value of the current coordinate.

Tab.3 The mixing matrix for the classification of cross-category noise in Cifar-10 after three iterations

In Cifar-10, each category is mixed with a single category of noise. However, each fine label of Cifar-100 mixes into the other four categories of noise on the same coarse label. Similarly, we can also use the above model to obtain the mixing matrix, as shown in Tab.4.

Tab.4 The mixing matrix for the classification of cross-category noise in Cifar-100

Notes: 0 (1) represents the fine label 0 in the coarse label 1. The rest is similar.17 in 12/17 represents the total amount of 1(0) predicted and corrected, but the correct number is only 12.The rest is similar.

As seen from Tab.4, this algorithm is actually very bad for data sets mixed into multiple categories of noise. Although it can correct the type of mixed noise to a certain extent, it mixes various types of noise into other categories, which causes the noise distribution to be more extensive. In other words, there is almost no completely correct category for the entire training data set. Therefore, this algorithm only has a significant effect on correcting data sets with a single category of noise. For a data set mixed into multiple types of noise, it becomes worse.

4 Conclusions

1) The recognition rate of each category in the data set has a different degree of reduction with increased noise ratio.

2) Compared with the introduction of random noise, the introduction of cross-category noise has a more significant influence on the recognition effect.

3) From the mixing matrix shown above, it can be seen that for a data set mixed into a single category, to reduce the influence of cross-level noise, the initial training model can be used to filter the cross-category noise in the training data so that the quality of the data set can be effectively improved. After filtering, the noise ratio of the data set can be significantly reduced. However, for a data set mixed into multiple categories of noise, the algorithm of this paper cannot improve its quality, which requires further research.

杂志排行

Journal of Southeast University(English Edition)的其它文章

- A low-cost personal navigation unit

- Flexural behavior of steel reinforced engineered cementitious composite beams

- Compact passing algorithm for signalized intersection management based on vehicular network

- Analysis of conflict factors between pedestrians and right-turning vehicles at signalized intersections

- Effect of contract choice on upstream carbon emission reduction considering carbon taxation

- Multi-relaxation-time lattice Boltzmann simulation of slide damping in micro-scale shear-driven rarefied gas flow