Intelligent recognition algorithm for group cocoons based on MSRCR and attention mechanism

2022-06-22

SUN Weihong, YANG Chengjie, SHAO Tiefeng, LIANG Man, ZHENG Jian

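Abstract: Manual cocoon sorting has a high misselection rate and low efficiency. To address this, this paper takes reelable cocoons, yellow-spotted cocoons and decayed cocoons as research objects and proposes an intelligent recognition algorithm for group cocoons based on multi-scale retinex with color restoration (MSRCR) and an attention mechanism. First, the original image is low-pass filtered and multiplied by a color restoration factor, which recovers the color and surface details of the cocoons at multiple scales and yields a multi-scale high-frequency detail image. Second, an attention mechanism is introduced by modifying the residual layers of the YOLOv3 backbone feature extraction network; it recalibrates the branch features of the convolved feature maps and increases the weight of effective features. Finally, a step that rescores each box according to its overlap with adjacent boxes is added to the non-maximum suppression algorithm, so that invalid prediction boxes are filtered out in the later stage of YOLOv3 and the types of cocoons in a group are recognized. Experimental results show that the mean average precision of the proposed algorithm reaches 85.52%, 4.85% higher than that of YOLOv3.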

Key words: cocoon; intelligent recognition; MSRCR algorithm; YOLOv3 algorithm; attention mechanism; NMS algorithm

CLC number: TS101.91; Document code: A; Article ID: 1001-7003(2022)06-0058-08

Citation page number: 061108

DOI: 10.3969/j.issn.1001-7003.2022.06.008

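Funding: Science and Technology Program of the State Administration for Market Regulation (S2021MK0217); Zhejiang Provincial Public Welfare Technology Application Research Project (LGG20E050014); Science and Technology Project of the Jiangxi Provincial Administration for Market Regulation (GSJK202003)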

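About the author: SUN Weihong (born 1969), male, professor, PhD, mainly engaged in research on inspection technology and automation devices, digital design and manufacturing, and manufacturing informatization.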

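In the cocoon and silk industry, classifying raw cocoons according to the process requirements of silk reeling is called cocoon sorting [1]. Visual assessment with the naked eye is currently the most widely used sorting method in the industry. Its efficiency depends on the operator's proficiency, it cannot exclude subjective judgment and mood, and it has difficulty assessing cocoon quality consistently [2]. To overcome the slow speed and large error of manual sorting, researchers in China and abroad have applied image processing to cocoon type recognition. Chen Hao et al. [3] used Matlab to binarize single-cocoon images, fill holes and compute areas, achieving automatic detection of the surface stain area; Song Yajie et al. [4] used digital image processing to classify cocoons by their morphology; Sun Weihong et al. [5] designed and built a classifier that combines a support vector machine with the color features of different cocoons in the HSV color space. These methods target a single cocoon or a small number of cocoons and are fairly highly automated, but their detection accuracy drops sharply when many cocoons are present. A detection algorithm that can accurately recognize the types of cocoons in a group is therefore urgently needed.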

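Research on detecting groups of objects has made some progress. Cao Shiyu et al. [6] improved the Fast R-CNN (Fast Region-based Convolutional Network) detector to accurately recognize buses and cars on urban roads; Peng Hongxing et al. [7] took four kinds of fruit as research objects and proposed an improved SSD (Single Shot MultiBox Detector) deep learning fruit detector, addressing the low recognition rate and weak generalization of fruit detection in natural environments; Loey et al. [8] used ResNet-50 to extract features in a deep transfer learning model and used YOLOv2 (You Only Look Once) to label and locate medical face masks in crowds; Zhao Dean et al. [9] adopted a YOLOv3-based apple recognition algorithm so that robots can detect apples in complex environments, locating apples accurately and demonstrating the feasibility of YOLOv3 for recognizing groups of targets.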

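To improve the accuracy and robustness of YOLOv3 in recognizing the types of cocoons in a group, this paper takes reelable, yellow-spotted and decayed cocoons as research objects and proposes an intelligent recognition algorithm for group cocoons based on multi-scale retinex with color restoration (MSRCR) and an attention mechanism (Convolutional Block Attention Module, CBAM). Before deep learning training, the original cocoon images captured in a dark box are preprocessed with MSRCR to obtain multi-scale high-frequency detail images; this adds detail to the cocoon images and addresses the low clarity of their surface details. The attention mechanism is introduced into the YOLOv3 backbone feature extraction network to recalibrate the branch features of the high-fidelity images, so that the network focuses on effective defect features and suppresses background interference. Finally, a step that rescores each box according to its overlap with adjacent boxes is added to the later prediction box screening strategy, which makes box screening more reasonable and lowers the missed detection rate for group cocoons.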

1 Methods

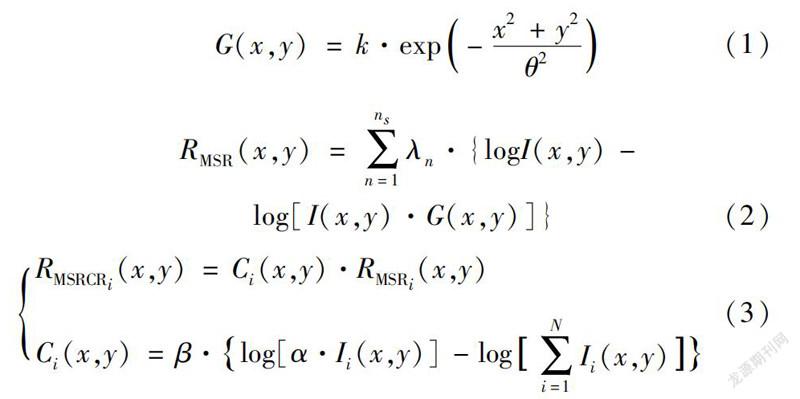

1.1 Color restoration of cocoon images

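Before deep learning, the collected images must be preprocessed to improve their visual quality and convert them into a form better suited to machine analysis [10]. All cocoon images are taken in a dark box. Because cocoons are ellipsoidal and unevenly lit by the light source, local details of the cocoon surface are unclear in the images. This paper preprocesses the images with the MSRCR algorithm to restore the cocoon color in dark images and reduce the influence of illumination on detection accuracy. The principle of MSRCR is given by Eqs. (1) and (2) [11-13]. Eq. (3) uses the color restoration factor C to adjust the output R_MSR(x,y) of Eq. (2), which highlights the feature information in darker regions of the cocoon surface and counters the color distortion caused by enhancing local contrast. The result is the multi-scale filtered high-frequency detail image R_MSRCR,i(x,y) of the i-th color channel, combined with the color restoration factor.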

$$G(x,y)=k\exp\left(-\frac{x^2+y^2}{\theta^2}\right) \tag{1}$$

$$R_{\mathrm{MSR}}(x,y)=\sum_{n=1}^{n_s}\lambda_n\left\{\log I(x,y)-\log\left[I(x,y)\ast G(x,y)\right]\right\} \tag{2}$$

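$$R_{\mathrm{MSRCR},i}(x,y)=C_i(x,y)\,R_{\mathrm{MSR},i}(x,y),\qquad C_i(x,y)=\beta\left\{\log\left[\alpha I_i(x,y)\right]-\log\left[\sum_{j=1}^{N}I_j(x,y)\right]\right\} \tag{3}$$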

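Eq. (1) is the Gaussian surround function, where k and θ are the normalization parameter and the Gaussian surround scale parameter, respectively. Eq. (2) applies Eq. (1) with several scale parameters to low-pass filter (convolve) the original image I(x,y) and then takes a weighted sum, giving the multi-scale filtered high-frequency detail image R(x,y) of the cocoon; λ_n is the weight of the n-th scale and n_s is the number of scales. In Eq. (3), i indexes the color channels, N is the number of color channels in the image, α is the nonlinear intensity control factor and β is an adjustable gain parameter. β determines the final color restoration effect and must be tuned to the enhancement required for the actual targets [12]. This paper uses a 2-megapixel camera, and the average illuminance in the dark box is 106 lx. Multi-scale high-frequency detail images of reelable, yellow-spotted and decayed cocoons with different gain effects were obtained by varying β and then detected with YOLOv3, using mean average precision (mAP) as the evaluation metric; the results are shown in Fig. 1. As Fig. 1 shows, β = 35 gives the highest mAP among the values tested in this experiment.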
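A minimal NumPy sketch of Eqs. (1) to (3) is given below to make the data flow concrete; it is an illustration under stated assumptions, not the authors' code. The paper fixes only β = 35. The surround scales (15, 80, 250), α = 125, the equal weights λ_n = 1/n_s, the +1 offset that avoids log(0) and the final min-max stretch to 0..255 are all assumptions (common choices in the retinex literature). Because the Gaussian of Eq. (1) is separable, the low-pass filter is applied as two 1-D convolutions; the helper names `gaussian_kernel_1d`, `gaussian_blur` and `msrcr` are hypothetical.

```python
import numpy as np

def gaussian_kernel_1d(theta: float) -> np.ndarray:
    """1-D factor of the surround function in Eq. (1); normalising it plays the role of k."""
    radius = max(1, int(3 * theta))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    g = np.exp(-(x ** 2) / theta ** 2)
    return g / g.sum()

def gaussian_blur(channel: np.ndarray, theta: float) -> np.ndarray:
    """Low-pass filter one channel with the separable Gaussian surround (edge padding)."""
    g = gaussian_kernel_1d(theta)
    r = len(g) // 2
    padded = np.pad(channel, r, mode="edge")
    conv = lambda v: np.convolve(v, g, mode="valid")
    rows = np.apply_along_axis(conv, 1, padded)   # filter along x
    return np.apply_along_axis(conv, 0, rows)     # filter along y

def msrcr(image, thetas=(15.0, 80.0, 250.0), alpha=125.0, beta=35.0, eps=1.0):
    """Hypothetical MSRCR sketch for an (H, W, 3) uint8 image; returns a uint8 image."""
    img = image.astype(np.float64) + eps            # offset avoids log(0) (assumed)
    lam = 1.0 / len(thetas)                          # equal weights lambda_n (assumed)
    msr = np.zeros_like(img)
    for theta in thetas:                             # Eq. (2): weighted sum over scales
        for c in range(img.shape[2]):
            surround = gaussian_blur(img[..., c], theta)
            msr[..., c] += lam * (np.log(img[..., c]) - np.log(surround))
    # Eq. (3): colour restoration factor C_i for every channel i
    color = beta * (np.log(alpha * img) - np.log(img.sum(axis=2, keepdims=True)))
    out = color * msr
    # Min-max stretch back to 0..255 (an assumed post-processing step)
    out = (out - out.min()) / (out.max() - out.min() + 1e-12) * 255.0
    return np.clip(np.round(out), 0, 255).astype(np.uint8)
```

Usage would be `enhanced = msrcr(raw_rgb)` on each dark-box image before it is written to the training set. The pure-NumPy blur is slow at large scales; in practice an optimized filter such as OpenCV's `cv2.GaussianBlur` would normally replace `gaussian_blur`.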

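Fig. 2 shows examples of original cocoon images and of the multi-scale high-frequency detail images obtained after MSRCR enhancement. The cocoon images in Fig. 2(c) and (d) have their color restored and show higher contrast and clarity than those in Fig. 2(a) and (b), while their high dynamic range is compressed. The MSRCR-preprocessed cocoon images are therefore used as the YOLOv3 training dataset.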

1.2 Improvements to YOLOv3

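YOLOv3 is one of the mainstream object detection algorithms and performs well in both speed and accuracy. To address the false and missed detections of YOLOv3 in group cocoon recognition, this paper uses Darknet53 [14-16] as the backbone feature extraction network. Input images are resized to 416×416 pixels; after features are extracted by the convolutional layers, residual layers and attention mechanism, the output layers produce feature maps at three scales: 13×13, 26×26 and 52×52. Concat concatenates feature maps of the same spatial size along the channel dimension, fusing upsampled deep features with shallower ones. Invalid prediction boxes are then removed by the Soft-NMS algorithm, so that the improved YOLOv3 recognizes the types of cocoons in a group. The algorithm flow is shown in Fig. 3.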
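The scale arithmetic behind the three outputs can be checked with a short sketch. It assumes the standard YOLOv3 head (strides 32, 16 and 8, three anchors per scale, each anchor predicting 4 box terms, 1 objectness score and one score per class) and, for the Concat example, the channel widths of the reference Darknet-53 YOLOv3 configuration (256 upsampled channels joined with a 512-channel route at 26×26); the paper does not list these widths, so they are illustrative only. Nearest-neighbour upsampling is done with `repeat`.

```python
import numpy as np

def grid_sizes(input_size: int = 416, strides=(32, 16, 8)) -> list:
    """Output grid size of each YOLOv3 detection scale (input must divide by every stride)."""
    for s in strides:
        if input_size % s:
            raise ValueError(f"input size {input_size} is not divisible by stride {s}")
    return [input_size // s for s in strides]

def head_channels(num_classes: int, anchors_per_scale: int = 3) -> int:
    """Channels of one detection output: per anchor, 4 box terms + 1 objectness + class scores."""
    return anchors_per_scale * (5 + num_classes)

def upsample_and_concat(deep: np.ndarray, shallow: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of a (C, H, W) map, then channel-wise Concat."""
    up = deep.repeat(2, axis=1).repeat(2, axis=2)
    if up.shape[1:] != shallow.shape[1:]:
        raise ValueError("spatial sizes must match before Concat")
    return np.concatenate([up, shallow], axis=0)
```

With the three cocoon classes, `grid_sizes()` returns `[13, 26, 52]` and `head_channels(3)` returns 24, so each scale would output a 24-channel map. Concatenating a (256, 13, 13) map, upsampled, with a (512, 26, 26) map gives (768, 26, 26): Concat only stacks channels, and the upsampling is a separate step.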

1.2.1 Backbone feature extraction network with attention mechanism

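When YOLOv3 is applied to group cocoon recognition, it performs poorly on small and medium-sized targets and is prone to false detections. This paper modifies the residual layers of the backbone feature extraction network: for the intermediate cocoon feature maps, weights are inferred along two independent dimensions, channel and space, and multiplied with the input feature map to separate effective defect features from the invalid background, which achieves adaptive feature refinement. The attention mechanism, shown in Fig. 4, consists of channel attention and spatial attention: F is the original feature map, M_C and M_S are the channel attention map and the spatial attention map, and F′ and F″ are the cocoon feature maps output after channel attention and after spatial attention, respectively [17]. After the attention mechanism extracts the features of a cocoon image, the effective features (shown in red) are separated from the background, the network's attention concentrates on the cocoon surface, and the weight of the effective features within the channels increases.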
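For reference, the module described above is usually written as follows in the original CBAM paper (Woo et al., ECCV 2018). Here ⊗ is element-wise multiplication with broadcasting, σ is the sigmoid function, MLP is a two-layer perceptron shared by both pooled descriptors, and f^{7×7} is a 7×7 convolution over the two pooled maps stacked along the channel axis. The shared MLP and the 7×7 kernel are defaults of the original CBAM design and are assumed here, since this paper does not state them.

```latex
\begin{aligned}
F'  &= M_C(F)\otimes F, \qquad F'' = M_S(F')\otimes F',\\
M_C(F) &= \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big),\\
M_S(F') &= \sigma\big(f^{7\times 7}([\mathrm{AvgPool}(F');\,\mathrm{MaxPool}(F')])\big).
\end{aligned}
```

M_C(F) has shape C×1×1 and scales whole channels, while M_S(F′) has shape 1×H×W and scales spatial positions, which is why the two are applied one after the other.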

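The attention mechanism consists of channel attention and spatial attention [18]. Channel attention aggregates the information in the feature map with max pooling and average pooling, passes both descriptors through a shared network layer, and merges the outputs by element-wise summation into a feature vector. This vector filters the channels produced by convolution, raising the weight of effective defect features in the feature map and highlighting the key regions of the cocoon image; its structure is shown in Fig. 5(a). Spatial attention focuses on where the target cocoons are located in the feature map and complements channel attention. It applies max pooling and average pooling along the channel axis, stacks the two resulting maps and feeds them into a convolutional layer, which produces a descriptor encoding the spatial positions of the cocoons; a Sigmoid function finally normalizes it into the attention map. Its structure is shown in Fig. 5(b).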
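The two attention branches can be sketched as a forward pass in NumPy. This is a hypothetical illustration of a CBAM-style module acting on a single (C, H, W) feature map, not the authors' PyTorch implementation. The weights `w1`, `w2` (shared MLP with reduction ratio r, 16 in the original CBAM paper) and the (2, k, k) `kernel` are assumed inputs, biases are omitted, and the exact position of the module inside the Darknet-53 residual block is not modelled.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f, w1, w2):
    """M_C for a (C, H, W) map: shared MLP (C -> C/r -> C, ReLU) on avg- and max-pooled descriptors."""
    shared_mlp = lambda v: w2 @ np.maximum(w1 @ v, 0.0)
    avg = f.mean(axis=(1, 2))                                   # (C,) average-pooled descriptor
    mx = f.max(axis=(1, 2))                                     # (C,) max-pooled descriptor
    return sigmoid(shared_mlp(avg) + shared_mlp(mx))[:, None, None]  # element-wise sum -> (C, 1, 1)

def spatial_attention(f, kernel):
    """M_S: pool along the channel axis, stack to (2, H, W), apply one k x k conv with zero padding."""
    pooled = np.stack([f.mean(axis=0), f.max(axis=0)])          # (2, H, W)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))
    h, w = f.shape[1:]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]                             # (1, H, W)

def cbam(f, w1, w2, kernel):
    """F' = M_C(F) * F, then F'' = M_S(F') * F' (broadcast element-wise products)."""
    f1 = channel_attention(f, w1, w2) * f
    return spatial_attention(f1, kernel) * f1
```

Since both attention maps lie in (0, 1), the module only rescales the input: channels and positions the learned weights consider uninformative (here, background) are attenuated rather than removed.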

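The high-frequency detail images obtained by MSRCR preprocessing were detected with both YOLOv3 and the improved YOLOv3, as shown in Figs. 6 to 8. A comparison of Fig. 7 and Fig. 8 shows that when the improved algorithm processes the cocoon high-frequency detail images, the hot regions of the feature maps are strengthened and the weights of effective defect features increase, indicating that the network extracts cocoon features more effectively.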

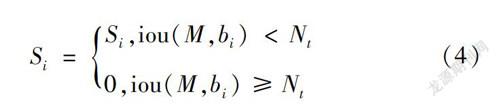

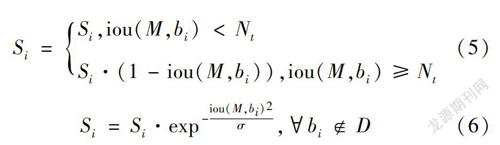

1.2.2 Soft non-maximum suppression

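In the later prediction box processing stage, YOLOv3 uses non-maximum suppression (NMS) to keep, within a given region, the highest-scoring prediction box belonging to the same target cocoon, which resolves the problem of overlapping prediction boxes in object detection [19]. The NMS rule is given in Eq. (4) [20].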

$$S_i=\begin{cases}S_i, & \mathrm{iou}(M,b_i)<N_t\\ 0, & \mathrm{iou}(M,b_i)\ge N_t\end{cases} \tag{4}$$

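where S_i is the score of each box given by the classifier, M is the current highest-scoring box, b_i is a box to be processed, and N_t is the overlap threshold, set to 0.5.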

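NMS sorts the prediction boxes of the target cocoons by score, keeps the highest-scoring box and deletes the remaining boxes whose overlap with it exceeds the threshold.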
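A minimal greedy NMS sketch follows, assuming boxes in (x1, y1, x2, y2) pixel coordinates and the paper's threshold N_t = 0.5; the helper names `iou` and `nms` are illustrative. Setting a score to 0 in Eq. (4) is implemented as dropping the box.

```python
import numpy as np

def iou(box, boxes):
    """IoU of one (x1, y1, x2, y2) box against an (n, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, nt=0.5):
    """Greedy NMS (Eq. (4)): keep the top box M, drop every b_i with iou(M, b_i) >= nt, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(np.asarray(scores, dtype=float))[::-1]  # indices, highest score first
    keep = []
    while order.size > 0:
        m = order[0]
        keep.append(int(m))
        rest = order[1:]
        order = rest[iou(boxes[m], boxes[rest]) < nt]          # survivors for the next round
    return keep
```

For two touching cocoons with boxes (0, 0, 10, 10) and (3, 0, 13, 10), scored 0.9 and 0.85, plus a distant cocoon at (30, 0, 40, 10) scored 0.6, the IoU of the first pair is 70/130 ≈ 0.54 ≥ 0.5, so `nms` returns `[0, 2]`: the second cocoon is lost even though it is a real object. This is the failure mode described in the next paragraph.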

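In practical group cocoon recognition, the highest-scoring box causes the other boxes within that cocoon's region to be deleted, and these deleted boxes sometimes include the box of an adjacent cocoon, so that cocoon's prediction box goes missing. This paper therefore introduces soft non-maximum suppression (Soft-NMS), which adds a linear weighting function and a Gaussian weighting function to NMS, that is, an additional computation of each box's overlap with adjacent boxes on top of the original algorithm. The principle of Soft-NMS is given in Eqs. (5) and (6) [21].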

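$$S_i=\begin{cases}S_i, & \mathrm{iou}(M,b_i)<N_t\\ S_i\left[1-\mathrm{iou}(M,b_i)\right], & \mathrm{iou}(M,b_i)\ge N_t\end{cases} \tag{5}$$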

$$S_i=S_i\exp\left(-\frac{\mathrm{iou}(M,b_i)^2}{\sigma}\right),\quad \forall\, b_i\notin D \tag{6}$$

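where M is the current highest-scoring box, b_i is a box to be processed, D is the set of boxes already processed, and σ is a small positive parameter that controls the width of the Gaussian penalty.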

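It follows that the higher the intersection over union (IoU) between b_i and M, the lower the score S_i of b_i. Instead of deleting the boxes that overlap the highest-scoring box by more than the threshold, the improved algorithm lowers their scores, which refines the prediction box screening strategy.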
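A sketch of Soft-NMS implementing both decay rules follows. Assumptions not stated in the paper: σ = 0.5 (the default in the original Soft-NMS work), a pruning threshold of 0.001 below which decayed boxes are discarded, and box coordinates in (x1, y1, x2, y2) form. The function returns (index, final score) pairs in selection order, so it can be compared directly with plain NMS.

```python
import numpy as np

def iou(box, boxes):
    """IoU of one (x1, y1, x2, y2) box against an (n, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def soft_nms(boxes, scores, nt=0.5, sigma=0.5, method="gaussian", score_thresh=1e-3):
    """Decay (rather than delete) the scores of boxes overlapping the current best box M."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float).copy()
    remaining = np.arange(len(scores))                    # boxes not yet moved into D
    selected = []
    while remaining.size > 0:
        m = remaining[np.argmax(scores[remaining])]       # current highest-scoring box M
        selected.append((int(m), float(scores[m])))
        remaining = remaining[remaining != m]
        if remaining.size == 0:
            break
        overlap = iou(boxes[m], boxes[remaining])
        if method == "linear":                            # Eq. (5)
            decay = np.where(overlap >= nt, 1.0 - overlap, 1.0)
        elif method == "gaussian":                        # Eq. (6)
            decay = np.exp(-(overlap ** 2) / sigma)
        else:
            raise ValueError("method must be 'linear' or 'gaussian'")
        scores[remaining] *= decay
        remaining = remaining[scores[remaining] > score_thresh]  # prune near-zero scores (assumed)
    return selected
```

On the two touching cocoons used for plain NMS (boxes (0, 0, 10, 10) and (3, 0, 13, 10) scored 0.9 and 0.85, plus a distant box scored 0.6), the neighbour is kept. Its score falls to about 0.476 with the Gaussian rule and to 0.85 × 60/130 ≈ 0.392 with the linear rule, and it can still pass a later confidence threshold.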

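Table 1 compares NMS and Soft-NMS on the test set. To target the problem of prediction boxes disappearing when cocoons lie too close together, the cocoon images in this experiment were taken with the cocoons placed close to one another. As Table 1 shows, for the same number of detected cocoons, Soft-NMS is always more accurate than NMS, indicating that processing prediction boxes with soft non-maximum suppression lowers the missed detection rate of YOLOv3.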

2 Experiments and analysis

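Hardware: an AMD Ryzen 5 2600X CPU (Advanced Micro Devices, Inc.) and an NVIDIA GTX 1660 Ti GPU (NVIDIA Corporation). Software: the Ubuntu 18.04 operating system, the PyTorch deep learning framework, and the Python programming language.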

2.1 Dataset preparation and model training

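Dataset preparation is the foundation of deep learning training. To verify the applicability of the proposed algorithm to recognizing the types of cocoons in a group, a number of standard images of reelable, yellow-spotted and decayed cocoons, taken at an inspection institution and in the dark box, were preprocessed with MSRCR and stored in the PASCAL VOC2007 dataset format to test the accuracy and robustness of the algorithm. The dataset contains 1,200 cocoon images, one third for each cocoon type, all resized to 416×416 pixels before training. Training uses stochastic gradient descent with an initial learning rate of 0.001 and a weight decay coefficient of 0.0001, for at most 6,000 iterations. Fig. 9 shows the training loss curve, with the iteration number on the horizontal axis and the loss value on the vertical axis; a lower loss indicates a better fit of the model to the training data.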
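To show what the stated optimizer settings do to the weights, the sketch below applies plain SGD with L2 weight decay (learning rate 0.001, weight decay 0.0001, 6,000 iterations, as in the paper) to a toy quadratic loss. Momentum, the learning-rate schedule and batch size are not given in the paper and are omitted; the toy loss and the helper names `sgd_step` and `train_toy` are hypothetical. Weight decay is folded into the gradient, which is how PyTorch's `torch.optim.SGD` applies its `weight_decay` argument.

```python
import numpy as np

def sgd_step(w, grad, lr=1e-3, weight_decay=1e-4):
    """One SGD step with L2 weight decay folded into the gradient (no momentum)."""
    return w - lr * (grad + weight_decay * w)

def train_toy(target, iterations=6000, lr=1e-3, weight_decay=1e-4):
    """Minimise the toy loss 0.5 * ||w - target||^2 starting from w = 0."""
    w = np.zeros_like(target, dtype=float)
    losses = []
    for _ in range(iterations):
        grad = w - target                                # gradient of the toy loss
        losses.append(0.5 * float(np.sum((w - target) ** 2)))
        w = sgd_step(w, grad, lr, weight_decay)
    return w, losses
```

Each step multiplies the distance to the fixed point target/(1 + weight_decay) by 1 − lr·(1 + weight_decay) ≈ 0.999, so after 6,000 steps roughly e⁻⁶ ≈ 0.25% of the initial gap remains. The decay term pulls the optimum slightly toward zero, which is the regularizing effect of the 0.0001 coefficient.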

2.2 Evaluation metrics

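Average precision (AP), precision (P), recall (R), mean average precision and single-image detection time are used to measure the performance of the group cocoon recognition algorithms. Precision and recall are defined in Eq. (7), and AP and mAP in Eq. (8).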

$$P=\frac{TP}{TP+FP},\qquad R=\frac{TP}{TP+FN} \tag{7}$$

$$AP=\sum_{k=1}^{N}\left[R(k+1)-R(k)\right]\cdot\max\left[P(R(k+1)),\,P(R(k))\right],\qquad mAP=\frac{1}{C}\sum_{c=1}^{C}AP_c \tag{8}$$

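where TP is the number of target cocoons recognized as the correct type, FP is the number of target cocoons recognized as a wrong type, FN is the number of missed cocoons, C is the number of cocoon types in the dataset, N is the number of thresholds used, k is the threshold index, and P(k) and R(k) are the precision and recall at that threshold [14].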
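The metric definitions can be written as a few plain Python functions. This sketch follows Eqs. (7) and (8) as printed and assumes the recall values are sorted in ascending order with their matching precision values. The helper names are illustrative. Note that the widely used PASCAL VOC interpolation instead takes the maximum precision at any recall greater than or equal to R(k+1), so numbers from this sketch need not match VOC evaluation code exactly.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple:
    """Eq. (7); returns 0.0 when a denominator is empty."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def average_precision(recalls, precisions):
    """Eq. (8) as printed: sum of (R(k+1) - R(k)) * max(P(R(k+1)), P(R(k)))."""
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")
    ap = 0.0
    for k in range(len(recalls) - 1):
        ap += (recalls[k + 1] - recalls[k]) * max(precisions[k + 1], precisions[k])
    return ap

def mean_average_precision(ap_per_class):
    """mAP: arithmetic mean of the per-class AP values (C classes)."""
    return sum(ap_per_class) / len(ap_per_class)
```

For example, 8 correct detections, 2 wrong-type detections and 2 missed cocoons give P = R = 0.8. The recall/precision points (0, 1.0), (0.25, 0.8), (0.5, 0.6), (0.75, 0.5) give AP = 0.25 × 1.0 + 0.25 × 0.8 + 0.25 × 0.6 = 0.6.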

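The YOLOv3-tiny and YOLOv3 algorithms, both widely used in object detection, were compared with the proposed group cocoon recognition algorithm on the dataset; the AP and mAP results are shown in Fig. 10. For every cocoon type, the AP of the proposed algorithm is higher than that of the other two algorithms. This shows that introducing the attention mechanism effectively improves the extraction of cocoon features, that Soft-NMS lowers the missed detection rate among the later prediction boxes, and that combining the two raises detection accuracy. Since AP increases for all three cocoon types in the experiment, the proposed algorithm is effective and shows good generalization.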

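To compare the three algorithms further, precision, recall and single-image detection time are also reported in Table 2. In terms of accuracy, the precision and recall of the proposed algorithm for each cocoon type are higher than those of YOLOv3-tiny and YOLOv3. Its mAP on the dataset reaches 85.52%, which is 19.27% and 4.85% higher than that of YOLOv3-tiny and YOLOv3, respectively, and the AP of reelable, yellow-spotted and decayed cocoons improves by 4.30%, 4.70% and 5.53%, respectively, over the original YOLOv3. In terms of time cost, the improved YOLOv3 takes 30.1 ms per cocoon image, slightly longer than YOLOv3 but fast enough for real-time use in practical applications.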
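For scale, the reported single-image time converts into throughput as follows, assuming images are processed one at a time with no batching:

```latex
\frac{1000\ \text{ms/s}}{30.1\ \text{ms/image}} \approx 33.2\ \text{images/s}
```

Whether this suffices depends on the feed rate of the sorting line, which the paper does not state.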

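Fig. 11 compares the proposed algorithm with YOLOv3. In Fig. 11(a), the cocoon image has not been preprocessed with MSRCR; the prediction boxes produced by YOLOv3 cover a large area and do not locate the key features precisely. After the improvements, as shown in Fig. 11(d), the prediction boxes are tighter, indicating better localization of the key features. In Fig. 11(b), YOLOv3 misses cocoons that lie close together, which reveals the weakness of NMS in handling highly overlapping boxes; Soft-NMS remedies this by lowering the scores of the other boxes inside the target box instead of deleting them, as shown in Fig. 11(e). In Fig. 11(c), YOLOv3 misidentifies a reelable cocoon as a yellow-spotted cocoon, whereas in Fig. 11(f) the improved YOLOv3 correctly identifies that cocoon as a reelable cocoon as well as correctly identifying the other cocoons.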

3 Conclusion

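To improve the accuracy of YOLOv3 in recognizing group cocoons, this paper proposes an intelligent recognition algorithm for group cocoons based on MSRCR and an attention mechanism. The algorithm first preprocesses the original cocoon images with MSRCR to obtain high-frequency detail images, reducing the influence of illumination on accuracy. Second, it introduces the attention mechanism by modifying the residual layers of the backbone feature extraction network, fusing the effective defect features of cocoons across channels during model training. Finally, it uses Soft-NMS to refine the screening strategy for the later prediction boxes, lowering the missed and false detection rates. Comparative experiments show that the improved YOLOv3 recognizes the types of cocoons in a group more accurately while meeting real-time requirements.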

References:

[1]WANG Chao, LIU Wenfeng, HU Zidong. Research on the application of parallel robots in cocoon sorting system[J]. Sci-Tech & Development of Enterprise, 2018(4): 87-90.

[2]JIN Jun, XING Qiuming. Discussion on non-destructive examination of cocoon quality[J]. China Fiber Inspection, 2012(9): 64-66.

[3]CHEN Hao, YANG Zheng, LIU Xia, et al. Study on auxiliary testing method for mulberry silkworm cocoon sorting based on Matlab[J]. Journal of Silk, 2016, 53(3): 32-36.

[4]SONG Yajie, XIE Shouyong, RAN Ruilong. Applied research on machine vision technology in non-destructive test of cocoon[J]. Modern Agricultural Equipments, 2006(9): 48-51.

[5]SUN Weihong, HUANG Zhipeng, LIANG Man, et al. Cocoon classification method based on color feature and support vector machine[J]. Acta Sericologica Sinica, 2020, 46(1): 86-95.

[6]CAO Shiyu, LIU Yuehu, LI Xinzhao. Vehicle detection method based on Fast R-CNN[J]. Journal of Image and Graphics, 2017, 22(5): 671-677.

[7]PENG Hongxing, HUANG Bo, SHAO Yuanyuan, et al. General improved SSD model for picking object recognition of multiple fruits in natural environment[J]. Transactions of the Chinese Society of Agricultural Engineering, 2018, 34(16): 155-162.

[8]LOEY M, MANOGARAN G, TAHA M H N, et al. Fighting against COVID-19: A novel deep learning model based on YOLO-v2 with ResNet-50 for medical face mask detection[J]. Sustainable Cities and Society, 2021, 65(2): 102600.

[9]ZHAO Dean, WU Rendi, LIU Xiaoyang, et al. Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background[J]. Transactions of the Chinese Society of Agricultural Engineering, 2019, 35(3): 164-173.

[10]XIONG Juntao, ZOU Xiangjun, WANG Hongjun, et al. Recognition of ripe litchi in different illumination conditions based on Retinex image enhancement[J]. Transactions of the Chinese Society of Agricultural Engineering, 2013, 29(12): 170-178.

[11]LAND E H. An alternative technique for the computation of the designator in the retinex theory of color vision[J]. Proceedings of the National Academy of Sciences of the United States of America, 1986, 83(10): 3078-3080.

[12]JOBSON D J, RAHMAN Z, WOODELL G A. A multiscale retinex for bridging the gap between color images and the human observation of scenes[J]. IEEE Transactions on Image Processing: A Publication of the IEEE Signal Processing Society, 1997, 6(7): 965-976.

[13]HUSSEIN R, HAMODI Y I, SABRI R A. Retinex theory for color image enhancement: A systematic review[J]. International Journal of Electrical and Computer Engineering, 2019, 9(6): 5560-5569.

[14]LI Zhou, HUANG Miaohua. Vehicle detections based on YOLO_v2 in real-time[J]. China Mechanical Engineering, 2018, 29(15): 1869-1874.

[15]REDMON J, DIVVALA S, GIRSHICK R, et al. You only look once: Unified, real-time object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. New York: IEEE, 2016: 779-788.

[16]CAI Fenghuang, ZHANG Yuexin, HUANG Jie. Bridge surface crack detection algorithm based on YOLOv3 and attention mechanism[J]. Pattern Recognition and Artificial Intelligence, 2020, 33(10): 926-933.

[17]FU Huixuan, SONG Guoqing, WANG Yuchao. Improved YOLOv4 marine target detection combined with CBAM[J]. Symmetry, 2021, 13(4): 623-637.

[18]JIANG Rongqi, PENG Yueping, XIE Wenxuan, et al. Improved YOLOv4 small target detection algorithm with embedded scSE module[J]. Journal of Graphics, 2021, 42(4): 546-555.

[19]ZHAO Wenqing, YAN Hai, SHAO Xuqiang. Object detection based on improved non-maximum suppression algorithm[J]. Journal of Image and Graphics, 2018, 23(11): 1676-1685.

[20]WU G, LI Y. Non-maximum suppression for object detection based on the chaotic whale optimization algorithm[J]. Journal of Visual Communication and Image Representation, 2021, 74(1): 102985.

[21]XU Lifeng, HUANG Haifan, DING Weilong, et al. Detection of small fruit target based on improved DenseNet[J]. Journal of Zhejiang University (Engineering Science), 2021, 55(2): 377-385.

Intelligence recognition algorithm of group cocoons based on MSRCR and CBAM

SUN Weihong1, YANG Chengjie1, SHAO Tiefeng1, LIANG Man1, ZHENG Jian2

(1a. College of Mechanical and Electrical Engineering; 1b. Cocoon and Silk Quality Inspection Technology Institute, China Jiliang University, Hangzhou 310018, China; 2. Jiangxi Market Supervision Management Quality and Safety Inspection Center, Nanchang 330096, China)

Abstract: Silk, a "national treasure" that embodies thousands of years of civilization, is one of the very few industries in which China dominates the international market. The silk industry plays economic, ecological and social roles and has made important contributions to farmers' prosperity, employment, ecological protection and export earnings. The quality of silk is closely related to how the cocoon types are controlled at the sorting stage. Sensory inspection is still the main mode of cocoon sorting in China: silk reeling enterprises require inspectors to classify raw cocoons according to national standards by traditional methods such as visual inspection and touch. Cocoon sorting therefore demands highly qualified inspectors, who need rich sorting experience, standardized operation and good eyesight as well as a deep understanding of the technical standards for cocoon sorting. These high technical requirements are a challenge for enterprise staff and increase the management and training costs of enterprises.

To address the high labor cost and low efficiency of the traditional sorting process, this paper implements intelligent identification of group cocoon types based on multi-scale retinex with color restoration (MSRCR) and the convolutional block attention module (CBAM). Because the surfaces of the cocoons photographed in the experiment are sensitive to lighting, some areas are poorly visible. To restore the surface color of the cocoons, multi-scale color restoration is applied to the collected images: the MSRCR algorithm low-pass filters the original cocoon image with Gaussian functions at multiple scales to highlight surface defect features, and color restoration factors highlight the information in darker areas, which avoids the color distortion caused by local contrast enhancement. Secondly, when YOLOv3 is applied to the identification and detection of group cocoons, it performs poorly on small and medium-sized targets and is prone to false detections. This paper therefore introduces CBAM by modifying the residual layers. CBAM consists of a channel attention module (CAM) and a spatial attention module (SAM). It derives weights along two independent dimensions, channel and space, from the cocoon feature maps in the middle layers of the network, multiplies the weights with the input feature map to separate effective defect features from invalid background features, and performs adaptive feature refinement. In the later prediction box processing stage, YOLOv3 uses the non-maximum suppression (NMS) algorithm to keep the highest-scoring prediction box belonging to the same cocoon in a given area, which is prone to missed detections.
This paper introduces soft non-maximum suppression (Soft-NMS) by adding a linear weighting function and a Gaussian weighting function to NMS; the additional overlap computation with adjacent boxes on top of the original algorithm effectively reduces missed detections of cocoons. On this basis, reelable cocoons, yellow-spotted cocoons and decayed cocoons were used as research objects to build a cocoon type dataset and carry out comparative experiments. Experimental results show that, in terms of accuracy metrics, the proposed algorithm outperforms YOLOv3 for each cocoon type, and its mean average precision is 4.85% higher than that of the original algorithm. The proposed algorithm needs only 30.1 ms to detect a single image, which meets the real-time requirements of the actual sorting process.

To improve the accuracy of YOLOv3 in detecting group cocoons, this paper proposes an intelligent recognition algorithm for group cocoons based on MSRCR and an attention mechanism. However, owing to the limitations of the available equipment, the sample images collected in this study do not capture the cocoon surfaces completely. How to capture defect images of the entire cocoon surface more completely remains worth further research.

Key words: cocoon; intelligent recognition; MSRCR algorithm; YOLOv3 algorithm; convolutional block attention module; NMS algorithm