Gait Recognition by Cross Wavelet Transform and Graph Model

2018-08-11

Sagar Arun More and Pramod Jagan Deore

Abstract—In this paper, a multi-view gait-based human recognition system using the fusion of two kinds of features is proposed. We use the cross wavelet transform to extract dynamic features and a bipartite graph model to extract static features, which are the coefficients of a quadrature mirror filter (QMF)-graph wavelet filter bank. Feature fusion is done after normalization. The min-max rule is used to normalize the features, and the mean-variance method is used to find weights for the normalized features. The Euclidean distance between each feature vector and the cluster centers obtained by k-means clustering is used as the similarity measure in a Bayesian framework. Experiments performed on the widely used CASIA B gait database show that the fusion of these two feature sets preserves discriminant information. We report a 99.90% average recognition rate.

I. INTRODUCTION

Recognizing humans from a distance by gait biometrics has attracted researchers in recent years. Gait has many advantages: it is non-invasive, less easily obscured and unobtrusive, it requires no subject cooperation, and it works from a distance and with low-quality video. Gait is a newer biometric modality than face, iris and fingerprint. Recognizing someone from a certain distance, where no fine details are available, is a very difficult task. In such cases, gait biometrics, in which a person is recognized by the manner of walking alone, is useful. Gait is a promising biometric trait where unconstrained person identification is required. It is a protocol-free biometric technique that does not require the willingness of the person, and hence it has found application in surveillance. In contrast, commonly used biometric recognition systems usually operate in constrained acquisition scenarios and under rigid protocols. Fingerprint, iris and face recognition may not be the right choice in an unconstrained environment where distant data capture is required. Gait, which comprises the motion trajectories of various body parts, can be captured properly from a relatively far distance. It does not need a systematic data capture process in which subjects must be informed, which makes the identification process protocol free. An extended application of gait recognition is suspect identification in sensitive areas where security is the highest priority. These characteristics make gait an attractive modality for human recognition from a distance.

In spite of these advantages, co-variate factors such as walking speed, carrying conditions, clothing, walking surface, fatigue, drunkenness, pregnancy, foot injury and psycho-somatic condition affect the normal walking style. The view angle also plays a vital role when testing such a system: a certain view angle may provide discriminant information about a walking individual while another may not. Hence, an investigation is needed to find a robust gait representation that can cope with these challenges in a multi-view scenario.

The main contribution of this paper is the achievement of a significant recognition rate under co-variate conditions such as carrying a bag and clothing variation. There is no need to segment the bag from the subject to remove the co-variate. The method does not need any complex model to extract static or dynamic features. The scheme is simple, as it does not need the color and texture information of the sequences, and innovative in the sense that the cross wavelet transform and graph model have not yet been proposed in a fusion approach.

The rest of the paper is organized as follows. Section II briefly reviews existing methods of gait recognition. In Section III, the proposed method is discussed. Section IV explores feature extraction and feature fusion, along with training and testing of the system, in detail. Experimental results are discussed in Section V, followed by the conclusion in Section VI.

II. LITERATURE OVERVIEW

Gait recognition and analysis have been studied heavily in the recent past; in this section we discuss this work briefly. The approaches in the literature can be broadly classified into two types, viz., model free [1]−[4] and model based [5]−[8]. In these approaches, various static and dynamic features of gait sequences are extracted by using shape analysis, image geometry transformations, wavelet analysis, and so on. The model-free approach extracts features directly from the image plane, whereas the model-based approach models the human gait and then extracts the model parameters as features. In [1], Procrustes shape analysis is used to represent the gait signature, which is obtained by extracting the mean shape of the unwrapped silhouette. In contrast, [2] is a 3D approach to gait recognition that constructs a 3D silhouette vector of the 2D scene by using a stereo vision method. In a recent work [4], complete canonical correlation analysis is used to compute the correlation between two gait energy image (GEI) features. In another recent paper [3], the authors extract different width vectors and combine them to construct the gait signature. This feature is then approximated by a radial basis function (RBF) network for recognition.

In an earlier model-based approach [5], the gait pattern was detected in the XYT spatio-temporal volume. The bounding contour of the walking person is found by a snake, and a 5-stick human model is then controlled by these contours. Various angle signals are extracted from this model for recognition. In [6], each silhouette is first labelled manually, and features such as the area, gravity center and orientation of each body part are then calculated. In [8], the gait cycle is first modelled as a chain of key poses, and then features such as the pose energy image and pose kinematics are extracted. View-invariant approaches to gait recognition have also been proposed, such as [7], in which the authors estimate marker-less joints followed by viewpoint verification.

Either static or dynamic features alone can perform well for recognition, but with some limitations: a method that deals only with static features cannot analyze dynamic features, and vice versa. Extracting both kinds of features simultaneously improves the recognition rate at the cost of increased computational complexity. Various approaches that extract static and dynamic features simultaneously have been proposed, either fusing model-free and model-based approaches [9]−[13] or fusing various features into a single augmented feature vector [14]−[17].

In [14], the gait energy image and motion energy image are combined to form the feature vector, whereas in [15], the static silhouette template (SST) and dynamic silhouette template (DST) are fused to construct the dynamic static silhouette template (DSST). The position of the gravity center of the human body may change because of the co-variate factors mentioned above. This problem is addressed in [16], where the authors divide the GEI-transformed image into three body parts: head, torso and leg. They then compute shifted energy image (SEI) features, which are the horizontal centers of the body parts. Next, the gait structural profile (GSP) is extracted to capture body geometry. For this, the silhouette is segmented into four body parts according to anatomical measurements: head, torso, left leg and right leg. The GSP, which is the difference between the gravity centers of these segmented body parts and that of the entire body, is then computed. These two features are used in combination for recognition. In [17], two distinct features, namely the frieze pattern and wavelet coefficients, are extracted. The frieze pattern preserves spatial information and the wavelet coefficients preserve low-frequency information. A factorial hidden Markov model (HMM) is used to combine these features, and a parallel HMM facilitates decision-level fusion of two individual classifiers for recognition. All these approaches indicate that the fusion of multiple gait features improves the performance of the recognition system.

Certain methods explore both the static and dynamic characteristics of the human body and fuse static and dynamic features to improve the performance of the gait recognition system. In [13], features such as the centroid, arm swing, stride length and mean height are extracted from the binary silhouette. The authors then fit an ellipse to each region and compute its aspect ratio and orientation. These features are combined, transformed by the discrete cosine transform (DCT) and applied to a generalized regression neural network for recognition. In [9], the mean shape is extracted by using Procrustes shape analysis as the static feature. The dynamic features are extracted by modelling the human body parts by truncated cones and the head by a sphere, and computing the joint angles of this model. A human skeleton model is adopted in [10] to extract dynamic features by computing various angles of key body points. The static feature is a wavelet descriptor, which is obtained by applying the wavelet transform to the boundary-centroid distance.

In [18], HMMs are used to extract static and dynamic gait features without using any human body model. The static features are extracted by a conventional HMM and the dynamic features by a hierarchical HMM. After labelling, the authors extract three features, namely component area, component center and component orientation. The first HMM represents general shape information, while the second HMM extracts detailed sub-dynamic information. In [19], the local binary pattern is used to describe the texture information of the optical flow as the static feature, and the dynamic feature is represented by an HMM with a Gaussian mixture model. In [11], the GEI is transformed by the dual-tree complex wavelet transform (DTCWT) with different scales and orientations. A two-stage Gaussian mixture model describes the patch distribution of each DTCWT-based gait image. Further, to model the correlation of multi-view gait features, a sparse local discriminant canonical correlation model is used. In a recent paper [12], the dynamic feature is extracted from a Lucas-Kanade-based optical flow image. The mean shape of the head and shoulders, extracted by using Procrustes shape analysis, is the static feature. The fusion is done at the score level.

It can be noted that not all of the aforementioned methods adopt a human body model, such as a skeleton, to extract dynamic features. Most often, authors prefer mathematical modelling, as it extracts different kinds of features efficiently and with lower computational complexity.

III. PROPOSED GAIT RECOGNITION METHOD

This paper aims to develop a method that fuses both approaches, viz., model free and model based, without using a human body model such as a skeleton. It extracts static and dynamic feature sets simultaneously. The dynamic feature set is obtained by computing the cross wavelet transform between the signals of dynamic body parts, namely the hands and legs, from each gait sequence. To extract the static feature set, a bipartite graph is used to model the gait silhouette, as a graph is a powerful tool for representing an image on the basis of pixel adjacency. We apply the quadrature mirror filter (QMF)-graph wavelet filter bank proposed in [20] to each gait sequence. Only the analysis filter bank is used for this task. The feature vector (FV) is formed by the fusion of these two feature sets.

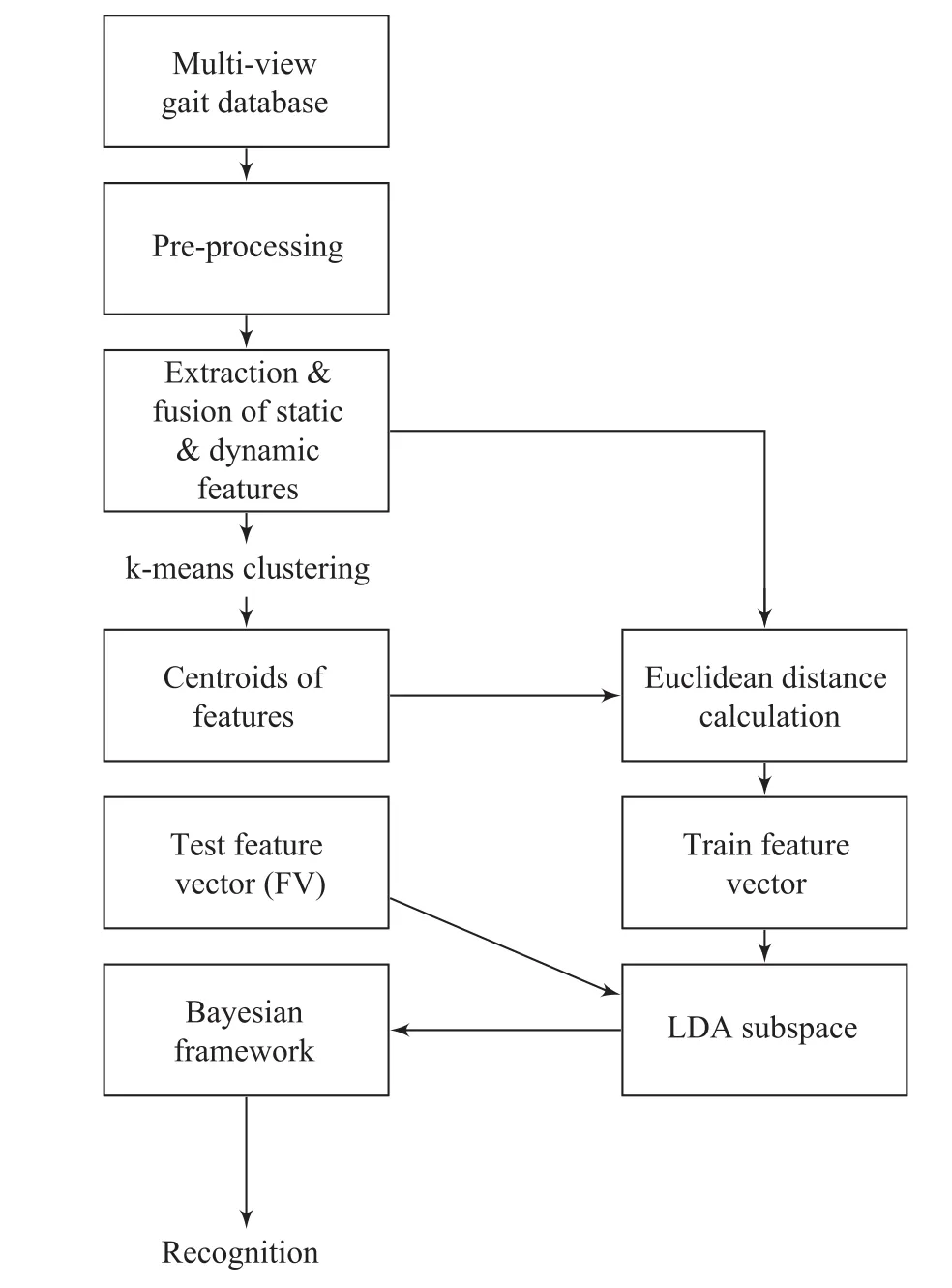

The static and dynamic feature sets are extracted from all the sequences, covering 11 view angles and 10 co-variate conditions. These features are combined at the feature level. The cluster centroids of these augmented feature vectors are then obtained by k-means clustering. The Euclidean distance is computed between each feature vector and the cluster centroids, and the resulting distance vectors are linearly classified in linear discriminant analysis (LDA) space. For identification, we use a Bayesian framework. These steps are depicted in Fig. 1.

Fig. 1. Proposed method.

IV. FEATURE FORMATION

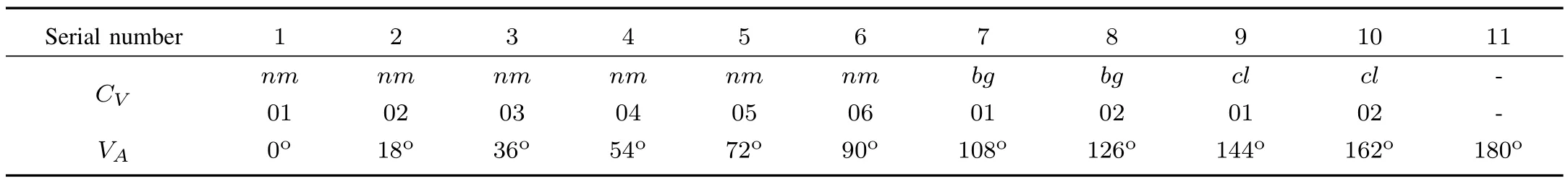

In this work, we use the CASIA B multi-view gait database [21], which consists of 124 persons. Each person is depicted in 10 sequences with various co-variates (CV): normal/slow walking (nm-01 to nm-06), with a bag (bg-01, bg-02) and with a coat (cl-01, cl-02). The sequences are captured at 11 different view angles (VA) (0°, ..., 180°). Table I shows the view angles and co-variates with the serial numbers that we use in the experiments. Thus, the database consists of 124 × 10 × 11 = 13640 gait sequences. The feature space consists of two different kinds of feature sets. We use the cross wavelet transform to extract the dynamic feature set and the QMF-graph wavelet filter bank to compress the sequence; the resulting coefficients are the static feature set of a complete gait sequence at an arbitrary view angle and co-variate condition.

A. Pre-processing

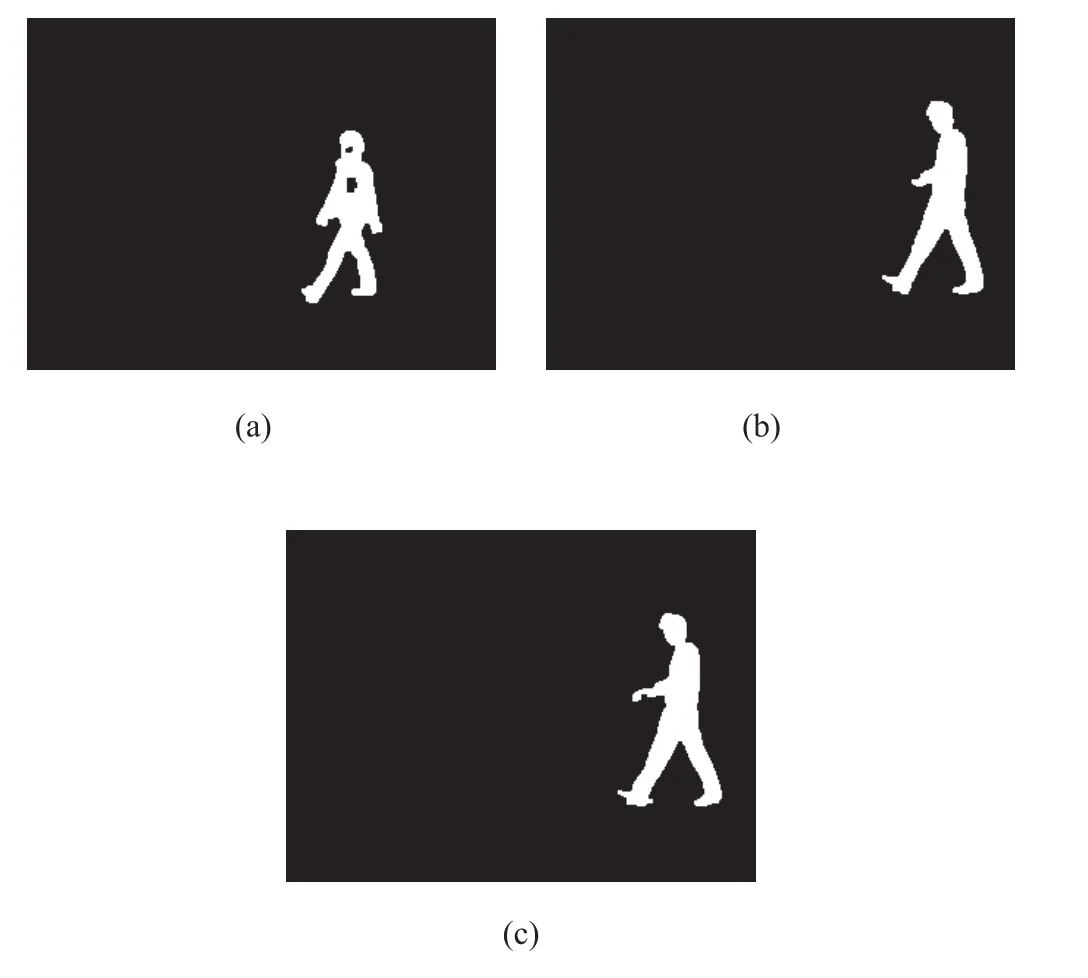

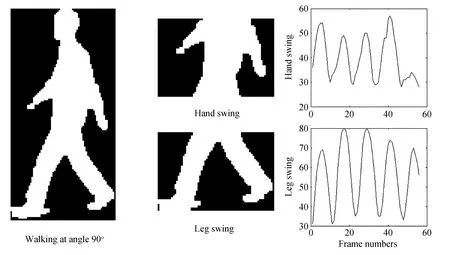

The silhouettes that are readily available in the CASIA B database have holes, as shown in Fig. 2(a), and breaks in successive frames, as shown in Figs. 2(b) and 2(c). In order to extract meaningful features, we apply morphological operations, namely dilation, erosion, opening and closing. Further, the silhouette must be divided to isolate the body parts of interest, the hands and legs. We divide the entire silhouette into three equal parts, viz., the portions containing the head and shoulders, the hands, and the legs. We process only the portions containing the hands and legs, first cropping each portion from the complete silhouette and then applying a bounding box to each portion separately to obtain its horizontal width. This width varies from frame to frame as the hands and legs move. The width of the bounding box is saved as a 1D width vector. In this work, we consider only the movement of the hands and legs for the computation of the dynamic feature using the cross wavelet transform.
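The width-vector extraction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: silhouettes are assumed to be binary frames given as lists of rows, the three equal parts are assumed to be taken over the vertical extent of the silhouette, and the morphological clean-up is omitted. The names `band_width` and `width_signals` are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # binary silhouette: 1 = foreground, 0 = background


def band_width(frame: Frame, top: int, bottom: int) -> int:
    """Width of the bounding box of the foreground pixels in rows [top, bottom)."""
    cols = [c for r in range(top, bottom)
            for c, v in enumerate(frame[r]) if v]
    return max(cols) - min(cols) + 1 if cols else 0


def width_signals(frames: List[Frame]) -> Tuple[List[int], List[int]]:
    """Return (hand_widths, leg_widths) with one value per frame.

    Assumption: the silhouette is split into three equal horizontal bands
    over its own vertical extent (head/shoulders, hands, legs).
    """
    hands, legs = [], []
    for frame in frames:
        rows = [r for r, row in enumerate(frame) if any(row)]
        if not rows:  # empty frame: no silhouette, so no width
            hands.append(0)
            legs.append(0)
            continue
        top, bottom = rows[0], rows[-1] + 1
        third = (bottom - top) / 3.0
        cut1 = top + round(third)
        cut2 = top + round(2 * third)
        hands.append(band_width(frame, cut1, cut2))
        legs.append(band_width(frame, cut2, bottom))
    return hands, legs
```

Each returned list plays the role of one 1D width signal for a sequence. In practice the frames would be cleaned with morphological operations before this step.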

Fig. 2. Inferior silhouette. (a) Hole in silhouette t. (b) Break in silhouette t−1. (c) Break in silhouette t.

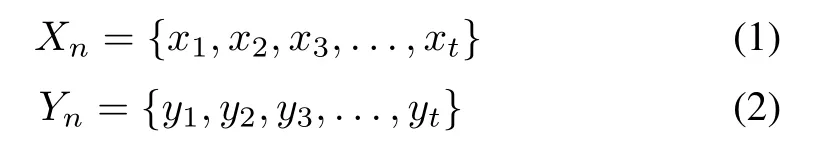

Let X_n and Y_n be the 1D signals generated by the dynamic movement of the hands and legs, respectively, for n sequences. Then, we can write

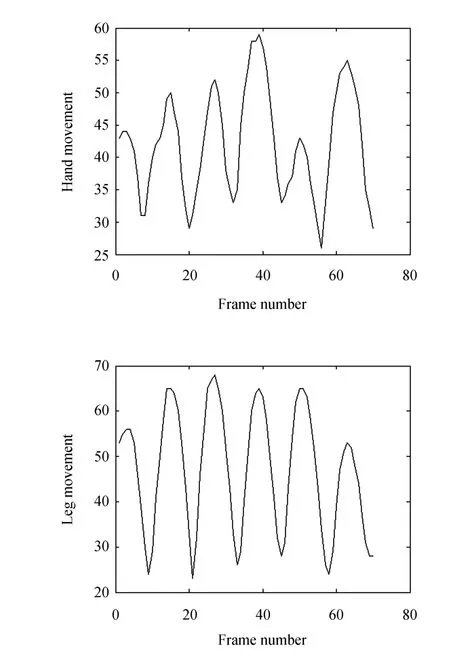

where x_t and y_t are the widths computed from the bounding boxes and t is the number of frames in a sequence. The width of the bounding box is saved as a 1D width vector, as shown in Fig. 3.

B. Dynamic Feature Extraction (FV_dynamic)

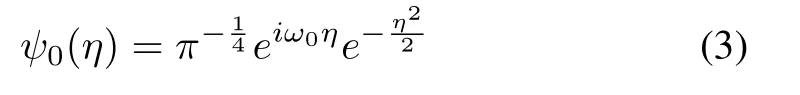

After pre-processing, the width vectors, which represent the dynamic movements of the hands and legs throughout the entire gait sequence, are extracted from each silhouette. The cross wavelet transform is then applied to these 1D signals. The Morlet wavelet (with ω_0 = 6) is used, as it suits signals of this nature with regard to time and frequency localization.

where ω_0 is the dimensionless frequency and η is the dimensionless time.
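The Morlet mother wavelet is commonly written in the following standard form, which uses the same symbols ω_0 and η. We assume this is the form intended here:

$$
\psi_0(\eta) = \pi^{-1/4}\, e^{i\omega_0 \eta}\, e^{-\eta^{2}/2}
$$

With ω_0 = 6, this wavelet gives a reasonable balance between time and frequency localization.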

TABLE I. CO-VARIATES AND VIEW ANGLES IN CASIA B DATABASE

Fig. 3. 1D signals extraction.

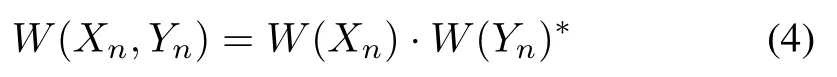

1) Cross Wavelet Transform: The cross wavelet transform is defined over two time series and reveals regions of high common power and the relative phase in the time-frequency domain. The cross wavelet transform of two time signals X_n and Y_n is expressed as [22]:

where W(X_n) is the continuous wavelet transform of X_n and ∗ denotes complex conjugation. The cross wavelet power can be defined as

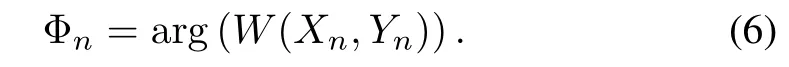

The local relative phase between X_n and Y_n can be expressed as the complex argument

The complete representation of the wavelet cross spectrum is

where Φ_n is the phase at time t_n.
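In the notation used above, the standard cross wavelet expressions can be summarized as follows. This is a sketch in the usual textbook form, assuming W(·) denotes the continuous wavelet transform at a given scale; the symbol W^{XY} is introduced here for the cross wavelet transform, and the typesetting in [22] may differ:

$$
W^{XY}_n = W(X_n)\, W^{*}(Y_n), \qquad
\text{power} = \left| W^{XY}_n \right|, \qquad
\Phi_n = \arg\!\left( W^{XY}_n \right), \qquad
W^{XY}_n = \left| W^{XY}_n \right| e^{i\Phi_n}
$$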

2) Wavelet Coherence: Significant coherence between two continuous-wavelet-transformed signals can be found even when their common power is low. This relationship is expressed as the wavelet coherence (WCOH). Wavelet coherence describes the relationship between two independent time series in terms of the regions of the common frequency band, at a certain time interval, across which the two signals vary simultaneously. Following [22], [23], the wavelet coherence between two signals X_n and Y_n can be written as:

where ς = S · s^(−1), S is the smoothing operator and s is the scale.
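With ς(·) = S(s^{−1} ·) as defined above, the wavelet coherence is commonly written in the following form. It is given here as the usual definition from the wavelet coherence literature, assuming W^{XY}_n denotes the cross wavelet transform of X_n and Y_n at scale s:

$$
R_n^{2}(s) = \frac{\left| \varsigma\!\left( W^{XY}_n(s) \right) \right|^{2}}
{\varsigma\!\left( \left| W(X_n)(s) \right|^{2} \right)\, \varsigma\!\left( \left| W(Y_n)(s) \right|^{2} \right)}
$$

By construction 0 ≤ R_n^2(s) ≤ 1, so the coherence behaves like a localized correlation coefficient in the time-frequency plane.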

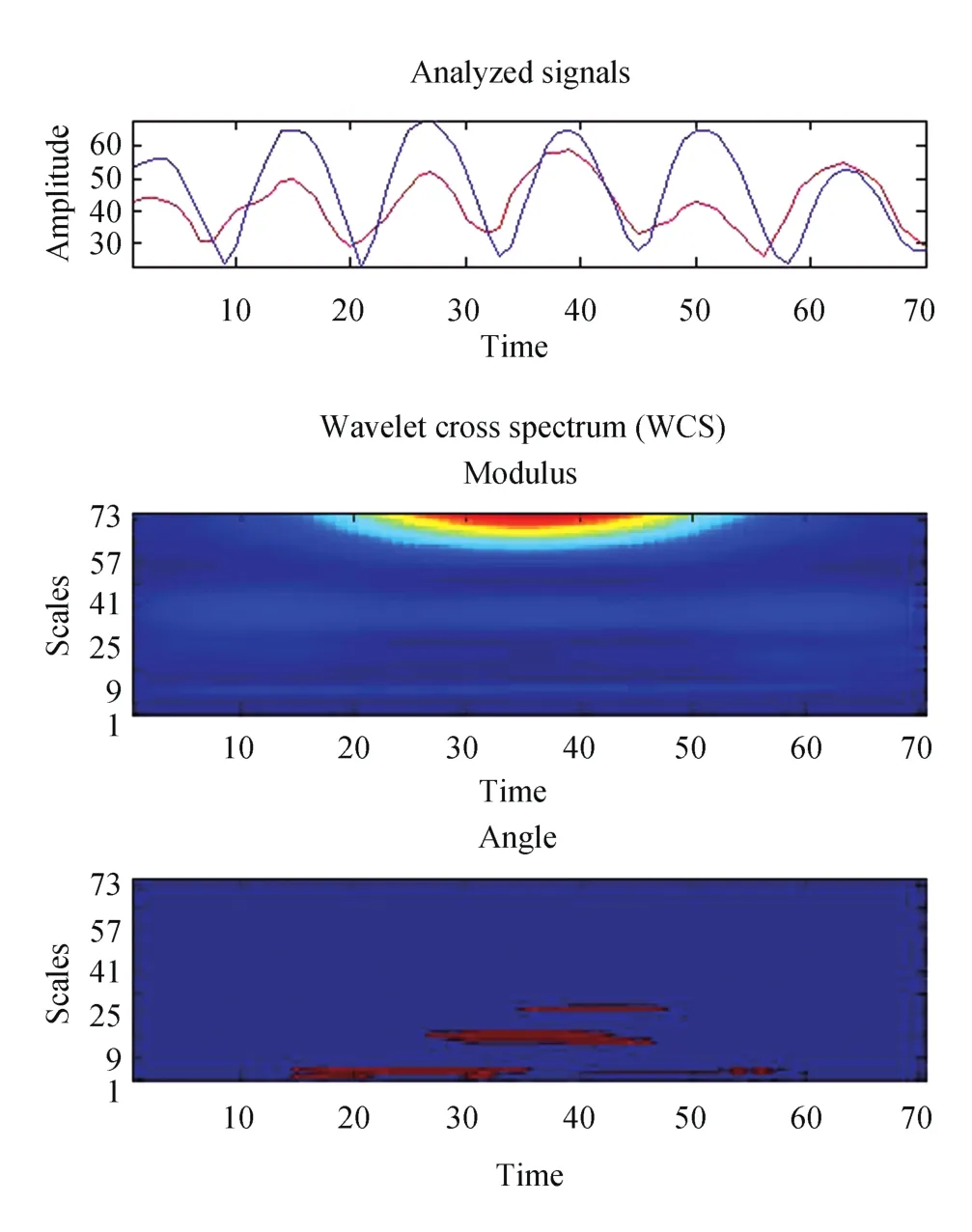

For demonstration purposes, we use the 1D signals extracted at a view angle of 90° with the bag-carrying co-variate. Figs. 4−6 show these signals together with the wavelet cross spectrum, wavelet coherence and phase relationship between them. The spectrum and coherence figures are color-coded spectrograms that show the variations in the spectral and coherence components. We choose scales s up to 75, which visual inspection showed to be the best choice. The smoothing S is done in both the time and scale directions to compute a meaningful coherence. The time smoothing uses a filter given by the absolute value of the Morlet wavelet function at each scale, normalized to have unit weight. The scale smoothing uses a boxcar filter whose width is 0.60 for the Morlet wavelet [24].

The dynamic feature set comprises the mean value of the cross wavelet spectrum along with the wavelet coherence and phase across the entire gait sequence. The length of the dynamic features varies with the number of frames in the particular sequence; hence, each feature is zero padded to give the feature set a fixed length.
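The cross wavelet part of the dynamic feature set can be illustrated with the following sketch. It is written under several assumptions: a direct, unoptimized continuous wavelet transform with unit sampling step, the standard Morlet wavelet with ω_0 = 6, and a mean taken over scales for each frame (the text does not state the averaging axis). The coherence term is omitted because it needs the smoothing operator. All function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence, Tuple

OMEGA0 = 6.0  # dimensionless frequency of the Morlet wavelet, as in the text


def morlet(eta: float) -> complex:
    """Standard Morlet mother wavelet at dimensionless time eta."""
    return (math.pi ** -0.25) * cmath.exp(1j * OMEGA0 * eta) * math.exp(-eta * eta / 2.0)


def cwt(signal: Sequence[float], scales: Sequence[float]) -> List[List[complex]]:
    """Direct continuous wavelet transform: one row of coefficients per scale."""
    n = len(signal)
    rows = []
    for s in scales:
        norm = 1.0 / math.sqrt(s)
        row = []
        for t in range(n):
            acc = 0j
            for k in range(n):
                acc += signal[k] * morlet((k - t) / s).conjugate()
            row.append(norm * acc)
        rows.append(row)
    return rows


def cross_wavelet(x, y, scales) -> List[List[complex]]:
    """Cross wavelet transform W(X) * conj(W(Y)) for every scale and frame."""
    wx, wy = cwt(x, scales), cwt(y, scales)
    return [[a * b.conjugate() for a, b in zip(rx, ry)] for rx, ry in zip(wx, wy)]


def dynamic_features(x, y, scales, length: int) -> Tuple[List[float], List[float]]:
    """Per-frame mean cross wavelet power and phase, zero padded to `length`."""
    wxy = cross_wavelet(x, y, scales)
    n = len(x)
    power = [sum(abs(row[t]) for row in wxy) / len(scales) for t in range(n)]
    # Circular mean of the phase: argument of the summed cross spectrum.
    phase = [cmath.phase(sum(row[t] for row in wxy)) for t in range(n)]
    pad = [0.0] * max(0, length - n)
    return (power + pad)[:length], (phase + pad)[:length]
```

Calling `dynamic_features(hand_widths, leg_widths, scales, length)` on two width signals of equal length yields two fixed-length vectors. A production implementation would use an FFT-based transform instead of the triple loop.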

C. Static Feature Extraction (FV_static)

Various static features, such as the mean height, centroid, mean shape and wavelet descriptor, have been presented earlier and are discussed in the literature overview. We do not extract such features. Instead, we first represent the gait silhouette by a bipartite graph and then use the QMF-graph filter bank to compress the entire gait sequence. The graph and the QMF-graph filter bank are briefly discussed next.

Fig. 4. 1D signals at view angle 90° with bag.

Fig. 5. Wavelet cross spectrum at view angle 90° with bag.

Fig. 6. Wavelet coherence at view angle 90° with bag.

1) Graph Model: A graph G = (V, E), in which V and E are the sets of vertices and edges, respectively, is a powerful tool for modelling image and video signals, as it offers flexibility in the relationships between adjacent pixels. 2D images can be represented as graphs using various pixel connectivities, such as rectangular, vertical, horizontal, diagonal and 8-connected neighbours. This flexibility leads to different down-sampling patterns for filters. Various concepts from signal processing, such as Fourier decomposition and filtering, can be extended to the graph domain, where the functions defined on the vertices are called graph signals. In this work, we use the bipartite graph to model the silhouette image. A bipartite graph is expressed as G = (L, H, E). Such graphs are also called two-colourable graphs, as their vertices can be coloured with two colours such that no two connected vertices have the same colour. The decomposition of a graph into bipartite sub-graphs produces an edge-disjoint set of sub-graphs. The vertices V are divided into two disjoint sets L and H, and every edge links a vertex in L to a vertex in H, as shown in Fig. 7. We model the silhouette by such undirected bipartite graphs without self loops, considering each pixel as an individual node to form an 8-connected image graph G. An adjacency matrix A is defined over the graph, where A(i, j) is the weight between nodes i and j. D = diag(d_i) denotes the diagonal degree matrix, where d_i is the degree of node i. The Laplacian matrix of the graph is L = D − A, and its normalized form is ℒ = I − D^(−1/2) A D^(−1/2).
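As a small illustration of these definitions, the sketch below builds the 8-connected image graph with one node per pixel and evaluates the normalized Laplacian I − D^(−1/2) A D^(−1/2). It assumes unit edge weights, A(i, j) = 1 for neighbouring pixels, which the text does not specify, and an image with at least two pixels so that every node has a neighbour. The function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]


def image_graph(image: List[List[int]]) -> Dict[Pixel, List[Pixel]]:
    """8-connected, undirected graph without self loops, one node per pixel."""
    rows, cols = len(image), len(image[0])
    graph: Dict[Pixel, List[Pixel]] = {}
    for r in range(rows):
        for c in range(cols):
            graph[(r, c)] = [
                (r + dr, c + dc)
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
                and 0 <= r + dr < rows
                and 0 <= c + dc < cols
            ]
    return graph


def normalized_laplacian(graph: Dict[Pixel, List[Pixel]]) -> List[List[float]]:
    """Dense normalized Laplacian, assuming unit edge weights."""
    nodes = sorted(graph)
    index = {v: i for i, v in enumerate(nodes)}
    degree = [len(graph[v]) for v in nodes]
    n = len(nodes)
    lap = [[0.0] * n for _ in range(n)]
    for v in nodes:
        i = index[v]
        lap[i][i] = 1.0
        for u in graph[v]:
            j = index[u]
            lap[i][j] = -1.0 / math.sqrt(degree[i] * degree[j])
    return lap
```

The pixel values themselves act as the graph signal defined on these nodes. Because the 8-connected graph contains triangles, it is not bipartite by itself, which is why a decomposition into bipartite sub-graphs is required before filtering.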

Here, we apply only the analysis filters of the perfect-reconstruction, two-channel, critically sampled QMF-graph wavelet filter bank, as shown in Fig. 8, to compress the graph-structured data. As suggested in [20], the vertices are coloured by using the BSC algorithm [25], and the graph is then decomposed into a set of bipartite graphs using Harary's algorithm [26]. Each sub-graph is down sampled by the down-sampling operators β_L and β_H. The nodes in L preserve the output of the low-pass channel, whereas the nodes in H preserve the output of the high-pass channel. Thus, H and L provide the bi-partition of the graph. The analysis filters H_0 and H_1 can be written as

where h_0(λ) and h_1(λ) are the spectral kernels, ℒ is the normalized Laplacian, λ is an eigenvalue, σ(G) is the spectrum of the graph, i.e., its set of eigenvalues, and P_λ is the projection matrix onto the eigenspace V(λ). The low-pass analysis kernel h_0(ℒ) is computed by using the Chebyshev approximation of the Meyer kernel. The other spectral kernels can be computed by using the QMF relations. The static feature set comprises the mean wavelet coefficients of the entire gait sequence. The static feature has length 256 after the second-level decomposition.
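From the symbols defined above, the analysis filters take the usual spectral form shown below. The first expression follows directly from those definitions. The second is the QMF relation as it is commonly stated for graph-QMF filter banks and is given here as an assumption about the construction in [20]:

$$
H_i = h_i(\mathcal{L}) = \sum_{\lambda \in \sigma(G)} h_i(\lambda)\, P_\lambda, \quad i = 0, 1,
\qquad
h_1(\lambda) = h_0(2 - \lambda)
$$

Since the eigenvalues of the normalized Laplacian lie in [0, 2], the mapping λ → 2 − λ mirrors the low-pass kernel about λ = 1 to give the high-pass kernel.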

Fig. 7. Bipartite graph.

Fig. 8. Graph wavelet filter bank (analysis).

D. Feature Fusion

As discussed in the previous subsections, the extracted static and dynamic features have different discriminating power. We concatenate these two features to construct a single augmented feature vector. Since the features are not directly comparable, we first normalize them using the min-max normalization method [27]. The length of the dynamic features varies with the number of frames in the particular sequence; hence, each feature is zero padded to give the dynamic feature set a fixed length. The static feature set has a fixed length, so zero padding is not required. Further, weights for the normalized feature vectors are computed using the mean-variance method.
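The fusion steps in this paragraph can be sketched as follows. The sketch assumes that normalization is applied before zero padding, so that the padded entries stay at zero, and it takes the two weights as plain parameters because the mean-variance weighting formula is not reproduced here. The names `zero_pad`, `min_max` and `fuse` are hypothetical.

```python
from typing import List, Sequence


def zero_pad(values: Sequence[float], length: int) -> List[float]:
    """Pad with zeros (or truncate) so that the result has exactly `length` entries."""
    return (list(values) + [0.0] * length)[:length]


def min_max(values: Sequence[float]) -> List[float]:
    """Min-max normalization to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant vector: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def fuse(dynamic: Sequence[float], static: Sequence[float], dyn_len: int,
         w_dynamic: float = 1.0, w_static: float = 1.0) -> List[float]:
    """Normalize, weight and concatenate the two feature sets.

    The weights are placeholders for the mean-variance weights (an assumption).
    """
    dyn = [w_dynamic * v for v in zero_pad(min_max(dynamic), dyn_len)]
    sta = [w_static * v for v in min_max(static)]
    return dyn + sta
```

For example, `fuse([2.0, 4.0, 6.0], [10.0, 20.0], dyn_len=5)` produces a vector of length 7 whose first five entries are the padded dynamic part.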

The normalized static and dynamic feature vectors are

The weights for the normalized feature vectors are

where w is the weight, m is the mean and σ is the variance of the feature vector.

Finally, these two features are concatenated as shown in (15) to form a single augmented vector for representation and further processing.

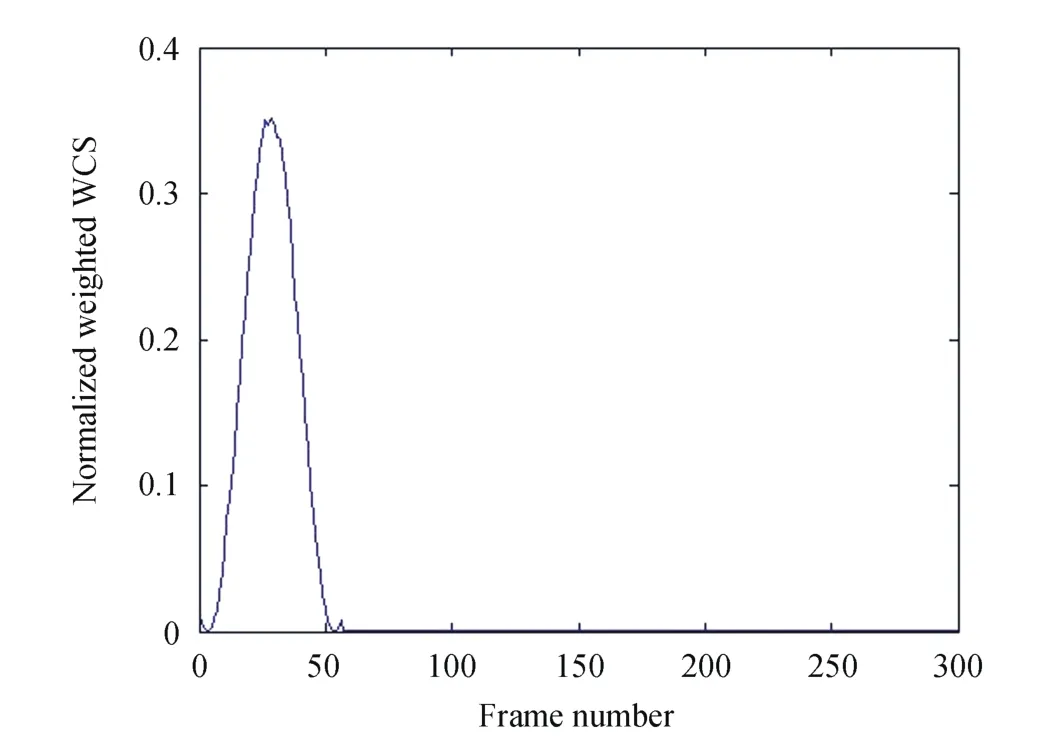

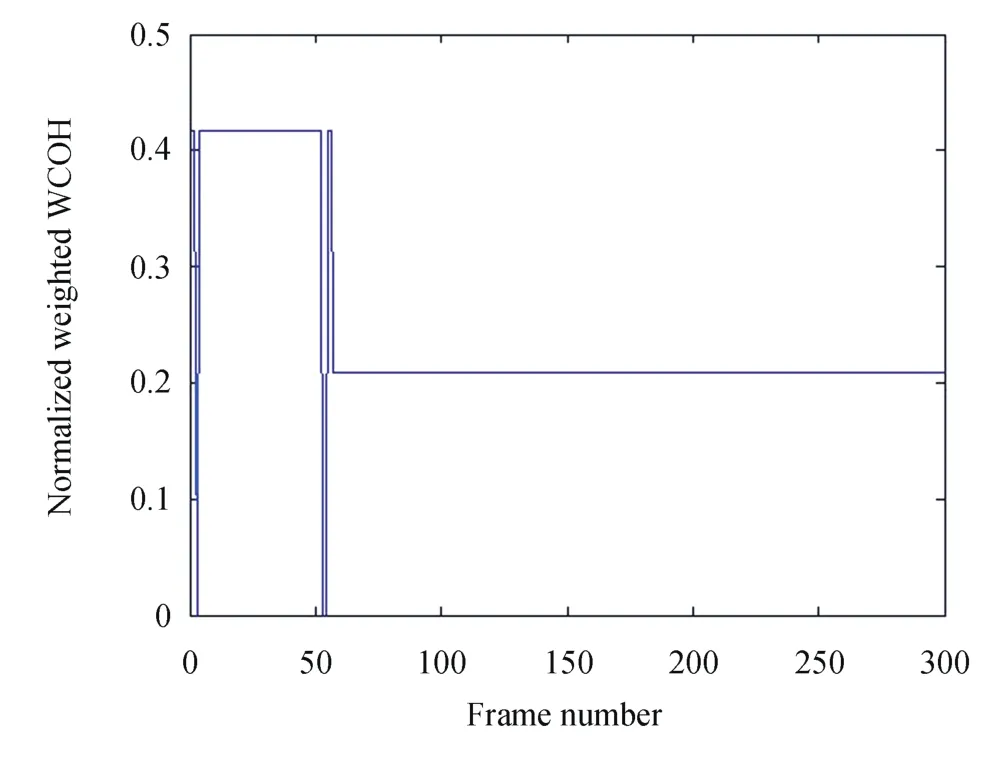

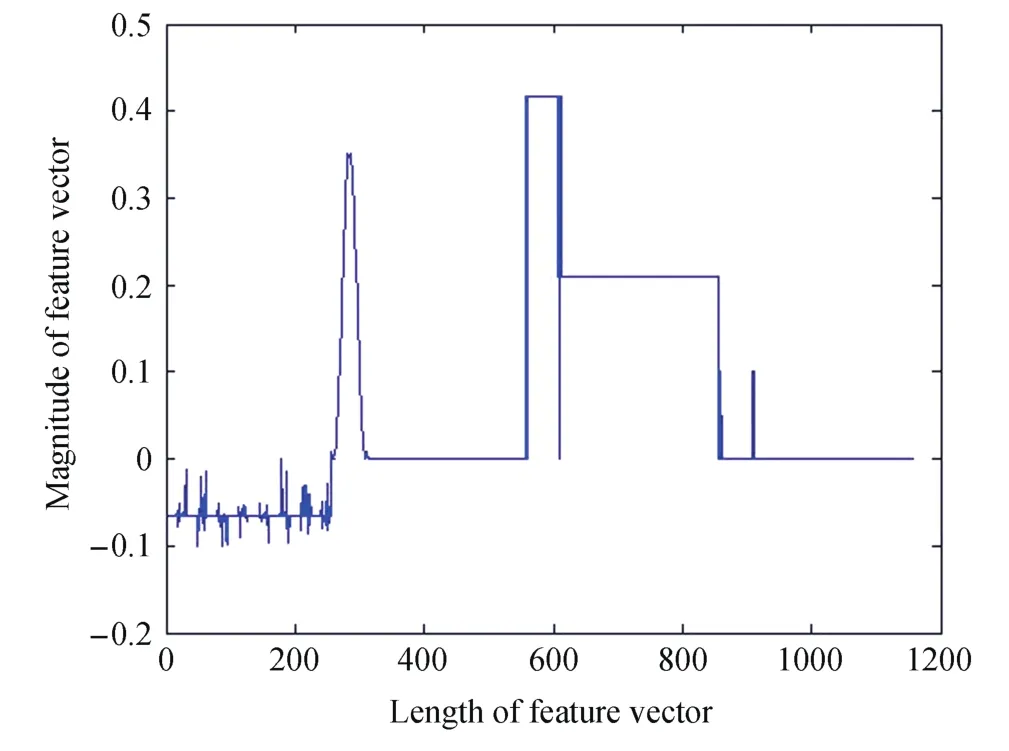

For demonstration of the proposed fusion approach, we computed the dynamic and static features of a person walking normally at a view angle of 90°. Figs. 9−11 show the zero-padded, normalized, weighted dynamic features: Fig. 9 shows the mean value of the wavelet cross spectrum (WCS), Fig. 10 the wavelet coherence (WCOH), and Fig. 11 the local relative phase (φ) between the dynamic body parts. The static feature, shown in Fig. 12, consists of the coefficients of the graph wavelet filter bank decomposed up to the second level. The augmented feature vector after fusion of the static and dynamic feature sets is shown in Fig. 13. It is the representation in feature space of a person walking normally at a view angle of 90°.

Fig. 9. Normalized WCS feature.

Fig. 10. Normalized WCOH feature.

Fig. 11. Normalized phase feature.

Fig. 12. Normalized filter bank coefficients.

Fig. 13. Augmented feature vector.

E. Training

For each gait sequence, we extract the feature vector FV(P_N, V_A, C_V), which is the fusion of the cross wavelet coherence and the QMF-graph wavelet filter bank coefficients, with P_N = 1, ..., 124, V_A = 1, ..., 11 and C_V = 1, ..., 10, where P_N is the subject (person) number, V_A is the view angle and C_V is the co-variate. The cluster centroids p_q are then obtained by k-means clustering, which assigns the training vectors FV(P_N, V_A, C_V) to Q clusters so as to minimize the within-cluster distance

where α_iq = 1 if FV(P_N, V_A, C_V) is assigned to cluster q and α_iq = 0 otherwise. The centroids p_q, q = 1, ..., Q, are the centers of the clusters. The optimal number of clusters is determined by a cross-validation procedure.
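With the indicator α_iq defined above, the k-means objective takes the usual form below. It is written under the assumption that i indexes the training feature vectors, abbreviated as FV_i, and that the squared Euclidean distance is used, as is standard for k-means:

$$
J = \sum_{i} \sum_{q=1}^{Q} \alpha_{iq}\, \left\lVert \mathrm{FV}_i - p_q \right\rVert_2^{2}
$$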

The vector FV(P_N, V_A, C_V) is the feature vector of the P_N-th person walking at view angle V_A under co-variate condition C_V. The feature vector of each training subject is then mapped, by its Euclidean distance, to the cluster centroids p_q as follows

Other distances can also be used, but the Euclidean distance is preferred for its simplicity. Each distance vector is then represented as D = [d_1, d_2, ..., d_Q]^T. For the final representation, the Euclidean distances are normalized to obtain the membership vector
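The mapping from a feature vector to its distance vector D can be sketched as below. The normalization used to obtain the membership vector is not recoverable from the text, so dividing by the sum of the distances is shown purely as an assumed placeholder. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def distance_vector(fv: Sequence[float],
                    centroids: Sequence[Sequence[float]]) -> List[float]:
    """Euclidean distance from one feature vector to each of the Q centroids."""
    return [math.dist(fv, p) for p in centroids]


def membership(distances: Sequence[float]) -> List[float]:
    """Assumed normalization: scale the distances so that they sum to one."""
    total = sum(distances)
    if total == 0:  # the vector coincides with every centroid
        return list(distances)
    return [d / total for d in distances]
```

The centroids would come from k-means clustering of the training vectors; any standard implementation can supply them.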

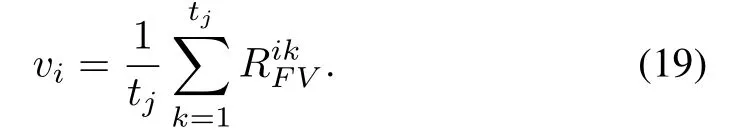

To make this membership vector duration invariant, as we use multi-period gait sequences, the mean of R_FV is taken. For i = 1, ..., C_V and all t_j, j = 1, ..., p_q, membership vectors

Further, linear discriminant analysis is applied to v_i to project it into a low-dimensional discriminant subspace, in which the persons can be linearly separated. In LDA, an optimal projection matrix W_opt is derived to minimize the Fisher criterion.

where S_w and S_b are the within-class and between-class scatter matrices of the C classes

where

µ_i is the mean vector of class ω_i and µ is the mean vector of the training set.
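One common way of writing these quantities, consistent with a criterion that is minimized, is given below. This is an assumed standard form, not a transcription of the original equations; N_i, the number of training vectors in class ω_i, is a symbol introduced here for illustration:

$$
W_{opt} = \arg\min_{W} \frac{\operatorname{trace}\!\left( W^{T} S_w W \right)}{\operatorname{trace}\!\left( W^{T} S_b W \right)}
$$

$$
S_w = \sum_{i=1}^{C} \sum_{v_k \in \omega_i} (v_k - \mu_i)(v_k - \mu_i)^{T},
\qquad
S_b = \sum_{i=1}^{C} N_i\, (\mu_i - \mu)(\mu_i - \mu)^{T}
$$

Minimizing this ratio is equivalent to maximizing the more familiar between-class to within-class ratio.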

F. Testing

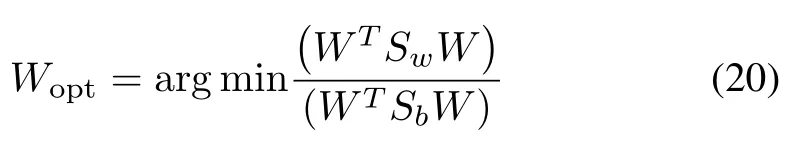

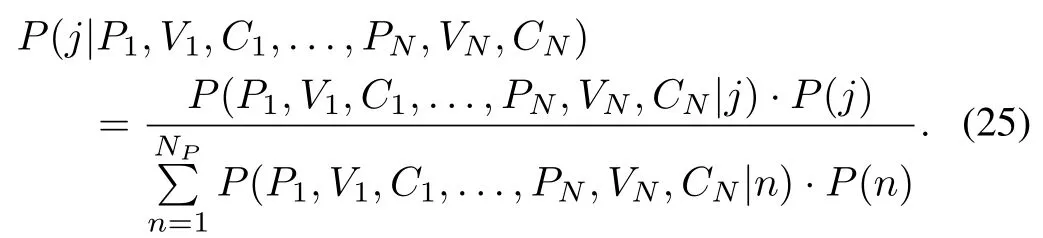

The test feature vector is obtained using the same steps as used to obtain the training feature vector. For identification, we use a probabilistic model, namely a Bayesian framework. Bayesian probabilistic decision theory is a fundamental approach in pattern classification. Assuming equiprobable classes and that all probabilities are known, let P(j) be the a priori probability of occurrence of the j-th person in the database of P_N classes. The class-conditional probabilities can be expressed as P(j | P_N, V_A, C_V), where P_N is the total number of subjects in the database, V_A are the view angles considered and C_V are the co-variate conditions used in training. The a priori probability can be estimated during training, and the a posteriori probability can be estimated by the following equation
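A posterior of this kind follows from Bayes' rule: the posterior of each person is proportional to the class likelihood times the prior. The sketch below shows the resulting decision for equiprobable classes. How the likelihoods are obtained from the projected feature vectors is not specified in the text, so they are treated as given inputs, and the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def posteriors(likelihoods: Sequence[float],
               priors: Optional[Sequence[float]] = None) -> List[float]:
    """Bayes' rule: posterior_j = likelihood_j * prior_j / evidence."""
    n = len(likelihoods)
    if priors is None:  # equiprobable classes, as assumed in the text
        priors = [1.0 / n] * n
    joint = [l * p for l, p in zip(likelihoods, priors)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


def identify(likelihoods: Sequence[float]) -> int:
    """Index of the person with the maximum a posteriori probability."""
    post = posteriors(likelihoods)
    return max(range(len(post)), key=post.__getitem__)
```

With equal priors the decision reduces to picking the class with the largest likelihood, which is why equiprobable training classes simplify identification.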

V. EXPERIMENTAL RESULTS AND COMPARISON

Experiments are performed in the MATLAB environment on the CASIA B multi-view gait database, considering all view angles and co-variate conditions. All the training classes are treated as equiprobable in the Bayesian framework used for identification. Training includes all view angles and co-variate factors, considering only hand and leg movement. The mean value of the wavelet cross spectrum (WCS), along with the wavelet coherence and phase (φ), is preserved to construct the dynamic feature set. Each sequence is compressed by the QMF-graph wavelet filter bank to obtain wavelet coefficients as the static feature. The feature fusion is done after min-max normalization. The Euclidean distances between each training vector and the cluster centroids are then preserved. For testing, we divide the database into three sets: set A consists of sequences nm-05 and nm-06, set B of cl-01 and cl-02, and set C of bg-01 and bg-02.

We compare our work with [16], [11] and [28], even though the comparison is not straightforward. The rank-1 results are shown in Table II. In [16], the authors first extract the side-view gait cycle and then extract two kinds of features, namely the shifted energy image and the gait structural profile. They performed experiments with the normal walking sequences nm-01 to nm-04 as the gallery set, nm-05 and nm-06 as set A, cl-01 and cl-02 as set B, and bg-01 and bg-02 as set C. We extract two features, considering all view angles and co-variate conditions as the gallery set. The probe sets are chosen as in [16]. Our method performs slightly better than [16] for set A and outperforms it for sets B and C. In [11], the authors consider seven view angles (from 36° to 144°) and all co-variate conditions. They performed experiments with probe sets similar to those described above. They use a single angle for testing on a multi-view gallery each time, e.g., the probe angle is 126° and the gallery angles are 36° to 144°. In our case, we train and test the system with multi-view sequences. Our method outperforms [11] for all probe sets.

TABLE II. WORK COMPARISON: RANK-1 RECOGNITION RATE (%)

In [28], the authors extract static and dynamic features. The histogram distribution of the optical flow vector is used as the dynamic feature and the Fourier descriptor as the static feature. This work is similar to ours in this sense, but it considers only three view angles, viz., 72°, 90° and 108°. It uses rank-based fusion, whereas ours is feature-based fusion. The experimental results show that our method performs better than [28] for probe sets A, B and C.

The proposed method outperforms the above methods because the features we extract are robust and were found to be invariant to co-variate conditions, especially carrying a bag and clothing variations.

VI. CONCLUSION

In this work, we use the cross wavelet transform and a bipartite graph model for gait-based human recognition in a multi-view scenario. The experimental results show that the fusion of different kinds of features represents the gait pattern of an individual well. Table II shows that our method also outperforms others under co-variate conditions. The average recognition rate, considering all view angles and co-variate conditions in the Bayesian framework, is 99.90%. It has been observed that the recognition rate decreases if the probe sequence is not included in the gallery during training. In future, we will investigate different gait features that can improve the performance of the system under various co-variate conditions.

ACKNOWLEDGMENT

Portions of the research in this paper use the CASIA gait database collected by the Institute of Automation, Chinese Academy of Sciences. The authors are grateful to Prof. Yogesh Ratnakar Vispute for proofreading the paper.
