Optimization and Design of Cloud-Edge-End Collaboration Computing for Autonomous Robot Control Using 5G and Beyond

2022-11-03

Hao Wang

Abstract: Robots have important applications in industrial production, transportation, environmental monitoring and other fields, and multi-robot collaboration has been a research hotspot in recent years. Multi-robot autonomous collaborative tasks are limited by communication, which leads to problems such as poorly balanced resource allocation, slow system response to dynamic changes in the environment, and limited collaborative operation capabilities. Combining 5G and beyond communication with edge computing can effectively reduce the transmission delay of task offloading and improve task processing efficiency. First, this paper designs a robot autonomous collaborative computing architecture based on 5G and beyond and mobile edge computing (MEC). Then, the robot cooperative computing optimization problem is studied according to the task characteristics of the robot swarm. Next, a reinforcement learning task offloading scheme based on Q-learning is proposed so that the overall energy consumption and delay of the robot cluster can be minimized. Finally, simulation experiments demonstrate that the method has significant performance advantages.

Keywords: robot collaboration; mobile edge computing (MEC); 5G and beyond network; task offloading; resource allocation

1 Introduction

Robots have important applications in many areas such as industrial production, transportation, and environmental monitoring [1–3]. In recent years, with the progress of artificial intelligence in robotics, cooperative heterogeneous multi-robot systems have become one of the research hotspots in academia [4]. The autonomous cooperative tasks of heterogeneous multi-robot systems in an unknown dynamic environment are limited by communication. This leads to problems such as poorly balanced resource allocation, slow system response to dynamic changes in the environment, and limited cooperative operation capabilities [5]. The high bandwidth and low latency of 5G and beyond can effectively break through the communication bottleneck of multi-machine autonomous cooperation [6, 7], thereby improving the collaborative operation ability of heterogeneous multi-robot systems [8]. However, in heterogeneous multi-machine cooperation, the communication requirements of machine-to-machine, machine-to-machine-group, and machine-to-server links are diverse. Examples include the need to transmit a large number of video and image data packets, the need to transmit both high-frequency and low-frequency data packets, and the low-latency, high-reliability transmission requirements of robot control. Meeting these business requirements simultaneously is also a challenge for advanced 5G and beyond communication technology.

Task-driven multi-robot cooperation faces problems such as wide-area distribution, complex information interaction logic, and the coexistence of loose coupling: multi-robot cooperative systems have many communication nodes, large volumes of transmitted data, different coupling relationships between nodes, and different business information priorities [9]. Making full use of the high bandwidth and low latency of 5G and beyond, minimizing the response time, and maximizing support for collaborative task execution are important issues that need to be solved. At the same time, the dynamic and open environment leads to complex and changeable transmission channels that are subject to random, uncertain interference. A reliable communication mechanism that takes real-time performance into account is therefore also a core problem to be solved.

Mobile edge computing (MEC) is an emerging architecture that enhances computing power by deploying high-performance servers at the network edge [10]. When the MEC server is deployed in the vicinity of the robot cluster, a robot can offload computing tasks to the MEC server through the 5G and beyond base station [11]. Since the MEC server has significantly stronger computing power than the robot itself, task processing is significantly accelerated. Therefore, the problem of task offloading and computing resource allocation, as a key point of MEC, has attracted great interest [12].

In a task-driven multi-robot collaboration system, task offloading and resource allocation are among the most important parts [13]. Reinforcement learning (RL), as an autonomous machine learning paradigm [14, 15], has been widely used in recent years in fields such as industrial control, unmanned driving, and natural language processing [16–18]. RL has the potential to solve a variety of problems because it can interact with the environment, obtain feedback, and learn and evolve autonomously. Since the task offloading and resource allocation process is a Markov decision process (MDP), we focus on RL-based approaches to address these two important issues.

Queralta et al. studied an edge-computing-based distributed robotic system, which showed the potential of using MEC in robot collaborative control systems [19]. Meanwhile, collaborative control has been proven to be a promising method for multi-robot cooperation [20]. However, to the best of our knowledge, no article has yet investigated cloud and mobile edge computing-based methods for autonomous collaborative control of robots.

To provide real-time and reliable big data processing architectures and communication mechanisms that support multi-robot collaboration in 5G and beyond, this paper first designs a centralized and distributed architecture for multi-robot collaboration. This architecture makes full use of cloud, edge, and end collaboration to provide computing resources for multiple robots. The system aims to comprehensively optimize delay, energy consumption, and revenue. Subsequently, we address the problem of task offloading and computing resource allocation using RL-based methods. Because Q-learning is simple and easy to train, we propose a Q-learning-based method to solve this core problem. The main contributions of this paper can be summarized as follows.

1) A centralized-distributed system architecture for 5G and beyond multi-dimensional and multi-source data based on edge computing is proposed. Some tasks coordinated by heterogeneous robots need a real-time response, and a large amount of data needs to be processed in time. MEC offers a good trade-off between computing power and real-time performance. For the multi-robot case, a centralized-distributed edge computing architecture is constructed, and the currently popular cloud-edge collaboration is adopted to further expand the system functions. The architecture provides a theoretical basis for multi-robot task offloading and cooperative operation.

2) A multi-robot task offloading strategy based on Q-learning is proposed. To address the problem of MEC-based task offloading and computing resource allocation in robotic collaborative systems, a cross-domain offloading strategy is studied with the aim of comprehensively optimizing delay, energy consumption and revenue. A computational task model for a cooperative robot system is proposed. Considering the data transfer cost and migration cost, we establish the state, action and value function of the robot task offloading policy and use Q-learning to train the agent.

3) Compared with the other two baseline models, our proposed method based on Q-learning shows the advantages of the overall performance of the system in the simulation experiments. This means that using this method will significantly improve computing latency, system power consumption, etc.

The rest of this paper is organized as follows. In section 2, we present the system model, including the system architecture, network model, task model and computation model, and formulate the task offloading and resource allocation problem. In section 3, we discuss ways to solve the problem, first defining states, actions, and rewards for reinforcement learning and then introducing the Q-learning algorithm. In section 4, we conduct simulation experiments. Finally, conclusions and future work are given in section 5.

2 System Model and Problem Formulation

In this section, we first design a centralized-distributed system architecture for 5G and beyond multi-dimensional and multi-source data. Then, for the most critical issue, task offloading and resource allocation, we analyze and model the system from three perspectives: network, task and computation. This model is the basis for solving the problem in the subsequent sections.

2.1 System Architecture

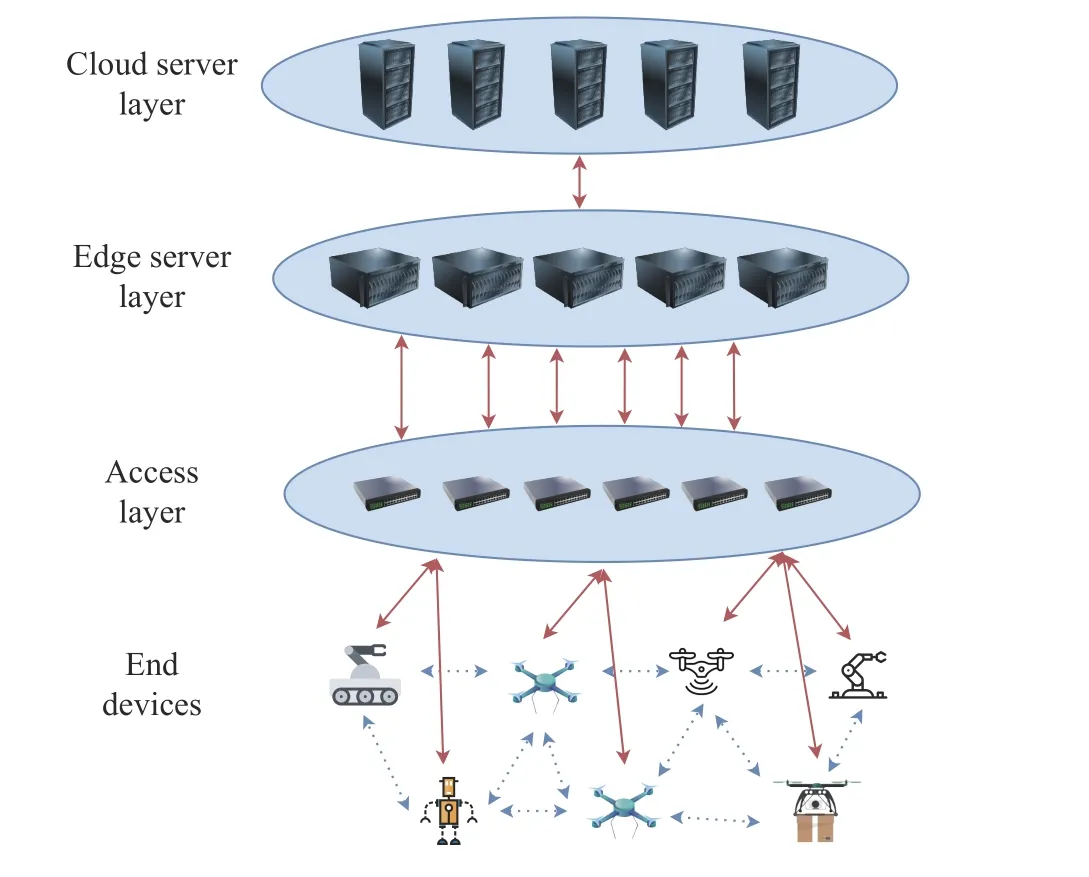

Some tasks coordinated by heterogeneous robots need a real-time response, and much data needs to be processed in time. Edge cloud computing can well solve the compromise between computing power and real-time performance. Based on the 5G and beyond communication system, we design the logical architecture of centralized-distributed edge cloud computing for robot collaboration. As shown in Fig. 1, the system consists of a cloud service layer, edge layer and device layer from top to bottom.

Fig. 1 Centralized-distributed system architecture for 5G and beyond multi-dimensional and multi-source data

The cloud service layer is responsible for managing all robots, assigning tasks, and providing an operating interface for operators. It communicates with the edge layer through wired communication and sends tasks and instructions to edge nodes. The edge layer consists of several edge nodes, each of which manages a specific edge area. An edge node is only responsible for managing the map information and robot collaborative control information in its area and for responding to computing requests from robots in that area. The device layer mainly refers to the robots and the processors and sensors they carry. The device layer is not only the execution part of the collaborative robot system but also the information collection part.

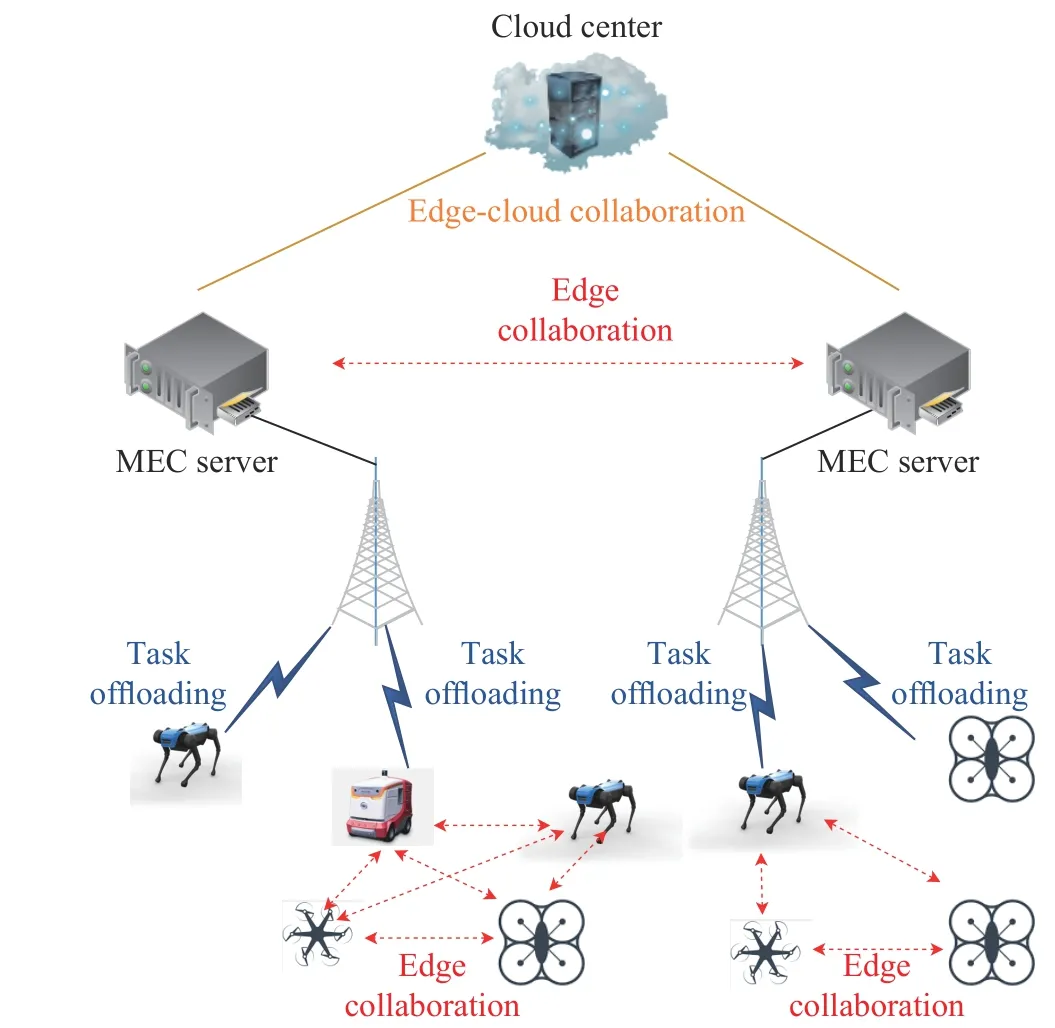

As we can see in Fig. 2, robots can work together through a cloud-edge-end collaborative architecture. Robots can obtain information about surrounding robots through brief information sharing, and each robot can use this information to decide its own behavior. At the same time, for computationally intensive tasks, robots can offload tasks to edge servers through 5G and beyond communication base stations. They can also choose to upload difficult tasks to the more powerful cloud computing center. MEC improves the cooperation efficiency of the overall system by reasonably allocating computing resources to the tasks of each robot.

Fig. 2 Cloud-edge-end collaboration structure of the centralized-distributed system

In this architecture, effectively offloading tasks and allocating resources is the key when robots face workloads such as video and image analysis with large data packets and highly latency-sensitive computing requirements. In the following subsections, we divide the robot task offloading and resource allocation problem into three parts: the network model, the task model and the computation model.

2.2 Network Model

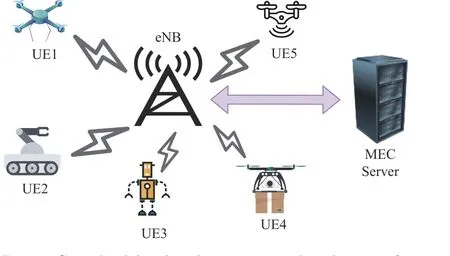

In the collaborative robot system, the computing power of the processor that comes with the robot is limited. Fig. 3 shows the network model. We treat each robot as a robot device (RD). Consider a 5G station as an example. There is one 5G station and $N$ RDs in each unit. The MEC server is deployed near the 5G station and connected to it. The set of RDs is expressed as $\mathcal{N} = \{1, 2, ..., N\}$. Each RD has a computationally intensive task that needs to be processed, and it can either offload the task to the MEC server through the 5G and beyond network or process the task locally. The MEC server has a certain amount of computational resource, which is significantly more than an RD’s onboard computational resource. However, the computational resource on the MEC server may not be enough for a large number of RDs to offload tasks.

Fig. 3 Centralized-distributed system network architecture for multi-robots

Define $W$ as the network bandwidth of the 5G station. As we can see in Fig. 3, there is only one 5G station in a zone of robot clusters, and the gap interference can be ignored. If multiple RDs choose to offload tasks at the same time, the wireless bandwidth is evenly allocated to the offloading RDs for uploading data.

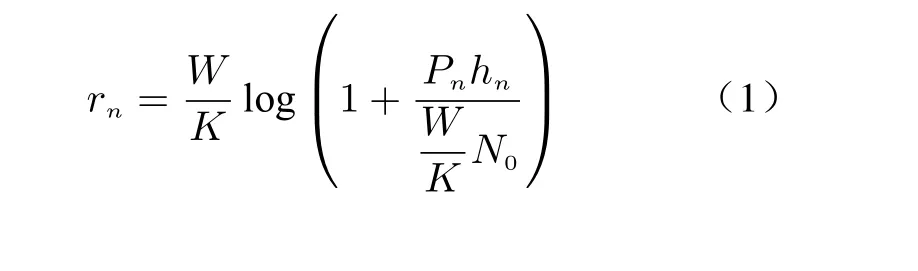

Based on the theoretical knowledge, for RD $n$, the achievable upload data rate is

where $K$ is the number of offloaded RDs, $P_n$ is the energy constraint during the data uploading of RD $n$, $h_n$ is the channel gain of RD $n$ in the 5G and beyond channel, and $N_0$ is the variance of the complex Gaussian white noise in the channel.
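The rate expression itself did not survive in this copy of the text. As a minimal sketch, assuming a Shannon-type rate in which each of the $K$ offloading RDs receives the bandwidth share $W/K$ and the noise power scales with that share, i.e. $r_n = \frac{W}{K}\log_2\left(1 + \frac{P_n h_n}{(W/K) N_0}\right)$, the quantities defined above could be combined as follows. The function name `upload_rate`, the treatment of $P_n$ as a transmit power, and the exact form of the noise term are assumptions, not the paper's confirmed formula.

```python
import math


def upload_rate(W, K, P_n, h_n, N0):
    """Assumed Shannon-type upload rate of one offloading RD.

    W   : total bandwidth of the 5G station
    K   : number of RDs that offload at the same time (K >= 1)
    P_n : power used by RD n while uploading (assumption: transmit power)
    h_n : channel gain of RD n
    N0  : noise variance (assumption: treated as noise power per unit bandwidth)
    """
    if K < 1:
        raise ValueError("K must be at least 1")
    share = W / K                      # bandwidth is split evenly, as stated in the text
    snr = P_n * h_n / (share * N0)     # assumed noise term: (W / K) * N0
    return share * math.log2(1.0 + snr)


# With these toy numbers the SNR is exactly 1, so the rate equals the bandwidth share.
print(upload_rate(W=2.0, K=2, P_n=1.0, h_n=1.0, N0=1.0))
```

Under this assumed form, each additional offloading RD shrinks the per-RD bandwidth share, which is why the number of offloaded RDs couples the individual offloading decisions.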

2.3 Task Model

Consider that RD $n$ has a computationally intensive task $R_n = (D_n, B_n, \tau_n)$. The RD can choose whether to process the task on the MEC server or locally on its own computing unit. $B_n$ represents the amount of data needed to compute the task, $D_n$ represents the CPU/GPU cycles required to process the task, and $\tau_n$ represents the maximum waiting time. If the processing time is longer than $\tau_n$, it is considered unacceptable. A task cannot be split into multiple smaller tasks, which means it is either processed locally or offloaded to the MEC server. Thus, $A = [\alpha_1, \alpha_2, ..., \alpha_N]$ represents the offloading decisions of the RDs, where $\alpha_n = 1$ means $R_n$ is offloaded to the MEC server and $\alpha_n = 0$ otherwise.
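The task tuple and the binary decision vector described above can be represented directly in code. The following is a small illustrative sketch; the names `Task`, `is_valid_decision` and `offloaded_count` are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Task:
    """Task R_n = (D_n, B_n, tau_n) of one robot device (hypothetical container)."""
    D: float    # CPU/GPU cycles required to process the task
    B: float    # amount of input data
    tau: float  # maximum tolerable waiting time


def is_valid_decision(A: List[int], tasks: List[Task]) -> bool:
    """A is valid if it has one binary entry per task (tasks are indivisible)."""
    return len(A) == len(tasks) and all(a in (0, 1) for a in A)


def offloaded_count(A: List[int]) -> int:
    """Number K of RDs that offload their task to the MEC server."""
    return sum(A)


tasks = [Task(D=1e9, B=1e6, tau=0.5) for _ in range(3)]
A = [1, 0, 1]  # RD 1 and RD 3 offload, RD 2 computes locally
print(is_valid_decision(A, tasks), offloaded_count(A))
```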

2.4 Computation Model

2.4.1 Local Computing Model

and define $E_n^l$ as the energy consumption of processing the task $R_n$, which can be expressed as

2.4.2 MEC Computing Model

When RD $n$ chooses to execute task $R_n$ at the MEC server, the process can be divided into three steps. First, RD $n$ uploads the required data to the 5G station, which transmits it to the MEC server. Second, the MEC server allocates computing resources to the task $R_n$ and processes the data to obtain the final results. Third, the MEC server sends the results back to RD $n$ through the 5G station.

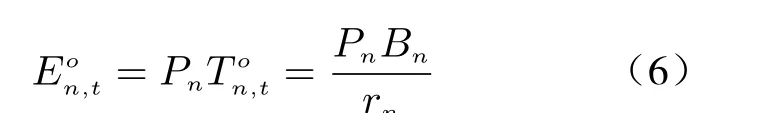

where $r_n$ means the upload rate from RD $n$ to the 5G station. The energy consumption of this step is

2.5 Problem Formulation

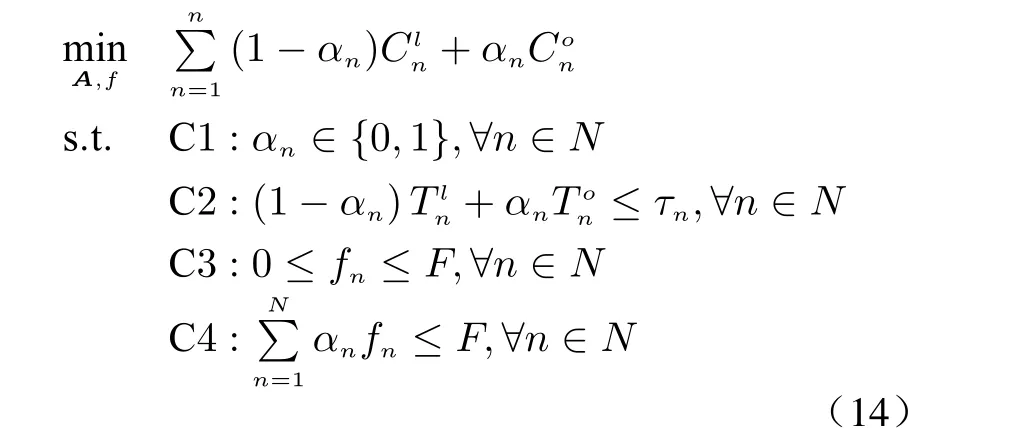

In this section, the computing decision and computational resource allocation problems are formulated as an optimization problem. The main optimization goal of this paper is to obtain the best performance of the whole system with the lowest total energy consumption and total delay. Therefore, under the constraints of the robot’s maximum tolerable delay and total computing resources, the problem can be expressed as

In (14), $A = [\alpha_1, \alpha_2, ..., \alpha_N]$ is the offloading decision vector, and $F$ is the overall computational resource of the MEC server.

C1 represents that each RD chooses whether to process task $R_n$ locally or to offload it to the MEC server.

C2 represents that, regardless of the task processing method, the time delay should not be greater than the RD’s maximum tolerable time.

C3 indicates that the computational resource allocated to task $R_n$ should not exceed $F_{all}$ of the MEC server.

C4 indicates that the overall computational resources allocated to tasks should not exceed $F_{all}$ of the MEC server.

The above optimization problem can be solved by finding the joint optimal value of the decision vector $A$ and the computational resource allocation $f$. However, since $A$ is a binary vector, the feasible region of the problem is not convex. Furthermore, as the number of robots increases, the size of the optimization problem grows rapidly, which can lead to an exponential increase in the difficulty of solving it. So instead of using traditional optimization methods, we propose reinforcement learning methods to find the optimal $A$ and $f$.
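To make the growth of the search space concrete, the sketch below enumerates every binary decision vector for a tiny instance. It is an illustration only: the cost functions are hypothetical stand-ins, not the paper's objective, and the resource allocation $f$ and the constraints C2 to C4 are ignored. The point is that the loop visits $2^N$ candidates, which quickly becomes impractical as $N$ grows.

```python
from itertools import product


def brute_force_offloading(local_cost, mec_cost_fn):
    """Exhaustively search all binary decision vectors A.

    local_cost[n]     : hypothetical cost if RD n processes its task locally
    mec_cost_fn(n, K) : hypothetical cost if RD n offloads while K RDs offload in total
    Returns (best_A, best_cost, number_of_candidates_evaluated).
    """
    N = len(local_cost)
    best_A, best_cost, evaluated = None, float("inf"), 0
    for A in product((0, 1), repeat=N):       # 2**N candidates
        K = sum(A)
        cost = sum(mec_cost_fn(n, K) if a else local_cost[n]
                   for n, a in enumerate(A))
        evaluated += 1
        if cost < best_cost:
            best_A, best_cost = A, cost
    return best_A, best_cost, evaluated


# Toy instance: local cost 4.0 per RD; offloading cost grows with congestion.
best_A, best_cost, evaluated = brute_force_offloading(
    [4.0, 4.0, 4.0], lambda n, K: 1.5 * K)
print(best_A, best_cost, evaluated)
```

In this toy instance, offloading everything is worse than offloading selectively because the assumed MEC cost rises with the number of offloading RDs.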

3 Reinforcement-Based Solution

In this section, we first define the RL state, action and reward. Then we introduce an efficient RL method: the Q-learning method.

3.1 State, Action, and Reward of RL

The mathematical basis of reinforcement learning is the Markov decision process (MDP) [24]. An MDP has three important components: state, action, and reward.

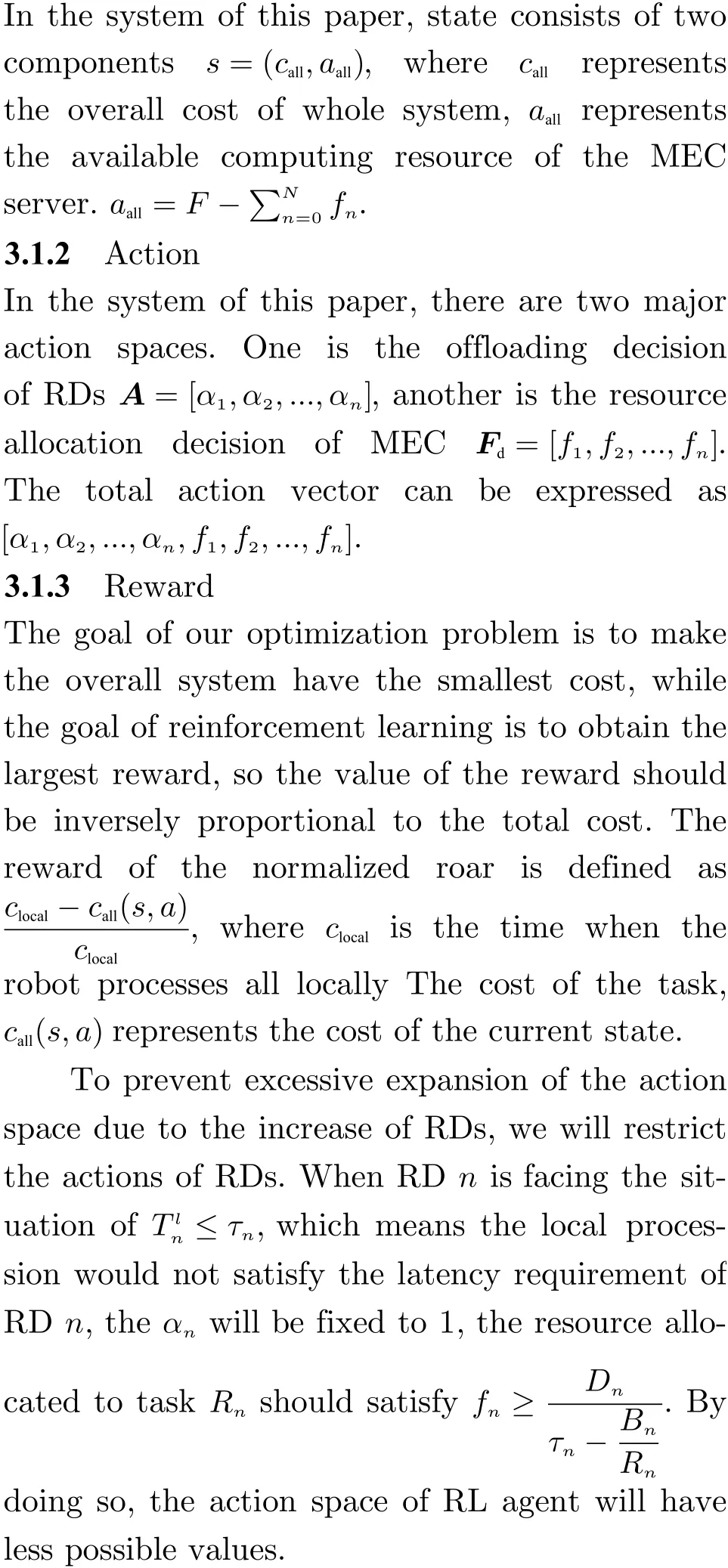

3.1.1 State

3.2 Q-learning Method

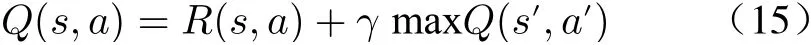

Q-learning is a model-free RL algorithm in which the agent makes decisions based on the learned Q-values [25]. Each state-action pair has a value $Q(s,a)$. At each step, the agent computes $Q(s,a)$ and stores it in the Q table; $Q(s,a)$ can be given as

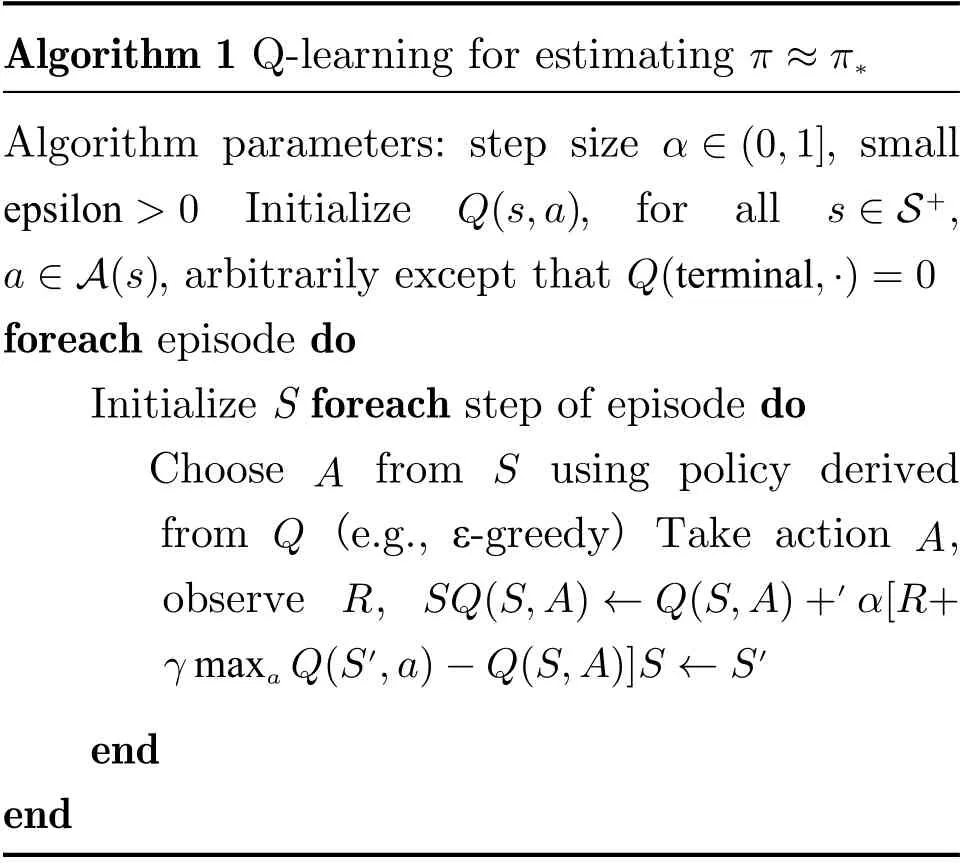

where $s, a$ are the current state and action, and $s', a'$ are the next state and next action. $\gamma$ is the discount factor, satisfying $0 \le \gamma \le 1$. By adjusting $\gamma$, the agent can pay more attention to current benefits ($\gamma$ approaches 0) or long-term benefits ($\gamma$ approaches 1). After each step, the value of $Q(s,a)$ is updated, and finally the agent chooses the optimal $A$ and $f$. Algorithm 1 shows the process of the Q-learning algorithm.

Algorithm 1 Q-learning for estimating π ≈ π*

Algorithm parameters: step size α ∈ (0, 1], small ε > 0
Initialize Q(s, a), for all s ∈ S+, a ∈ A(s), arbitrarily except that Q(terminal, ·) = 0
foreach episode do
    Initialize S
    foreach step of episode do
        Choose A from S using policy derived from Q (e.g., ε-greedy)
        Take action A, observe R, S′
        Q(S, A) ← Q(S, A) + α[R + γ max_a Q(S′, a) − Q(S, A)]
        S ← S′
    end
end
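The tabular update in Algorithm 1 can be sketched in a few lines of Python. The environment below is a deliberately simplified toy and not the paper's simulation setup: the state is assumed to be (index of the RD being decided, number of RDs already offloaded), the action is the binary offloading choice, and the reward is the negative of a hypothetical cost in which each additional offloaded task is more expensive than the previous one. All constants and function names are assumptions made for illustration.

```python
import random
from collections import defaultdict

N_RDS = 3            # hypothetical number of robot devices
LOCAL_COST = 4.0     # hypothetical cost of processing one task locally
MEC_UNIT_COST = 1.5  # hypothetical cost factor; offloading gets pricier with congestion


def step(state, action):
    """Toy environment. state = (index of RD being decided, RDs already offloaded)."""
    i, k = state
    if action == 1:                          # offload to the MEC server
        k += 1
        reward = -MEC_UNIT_COST * k
    else:                                    # process locally
        reward = -LOCAL_COST
    next_state = (i + 1, k)
    return next_state, reward, next_state[0] == N_RDS


def q_learning(episodes=3000, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    Q = defaultdict(float)                   # Q[(state, action)], 0 for unseen pairs
    for _ in range(episodes):
        s, done = (0, 0), False
        while not done:
            if rng.random() < epsilon:       # epsilon-greedy exploration
                a = rng.choice((0, 1))
            else:
                a = max((0, 1), key=lambda x: Q[(s, x)])
            s2, r, done = step(s, a)
            # Terminal states have value 0, so the target is just r when done.
            target = r if done else r + gamma * max(Q[(s2, 0)], Q[(s2, 1)])
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s = s2
    return Q


def greedy_rollout(Q):
    """Follow the learned greedy policy once and return (decisions, total cost)."""
    s, done, decisions, cost = (0, 0), False, [], 0.0
    while not done:
        a = max((0, 1), key=lambda x: Q[(s, x)])
        s, r, done = step(s, a)
        decisions.append(a)
        cost -= r
    return decisions, cost


decisions, cost = greedy_rollout(q_learning())
print(decisions, cost)
```

In this toy setting the learned policy offloads only part of the tasks, which mirrors the selective offloading behavior that the Q-learning scheme is intended to find.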

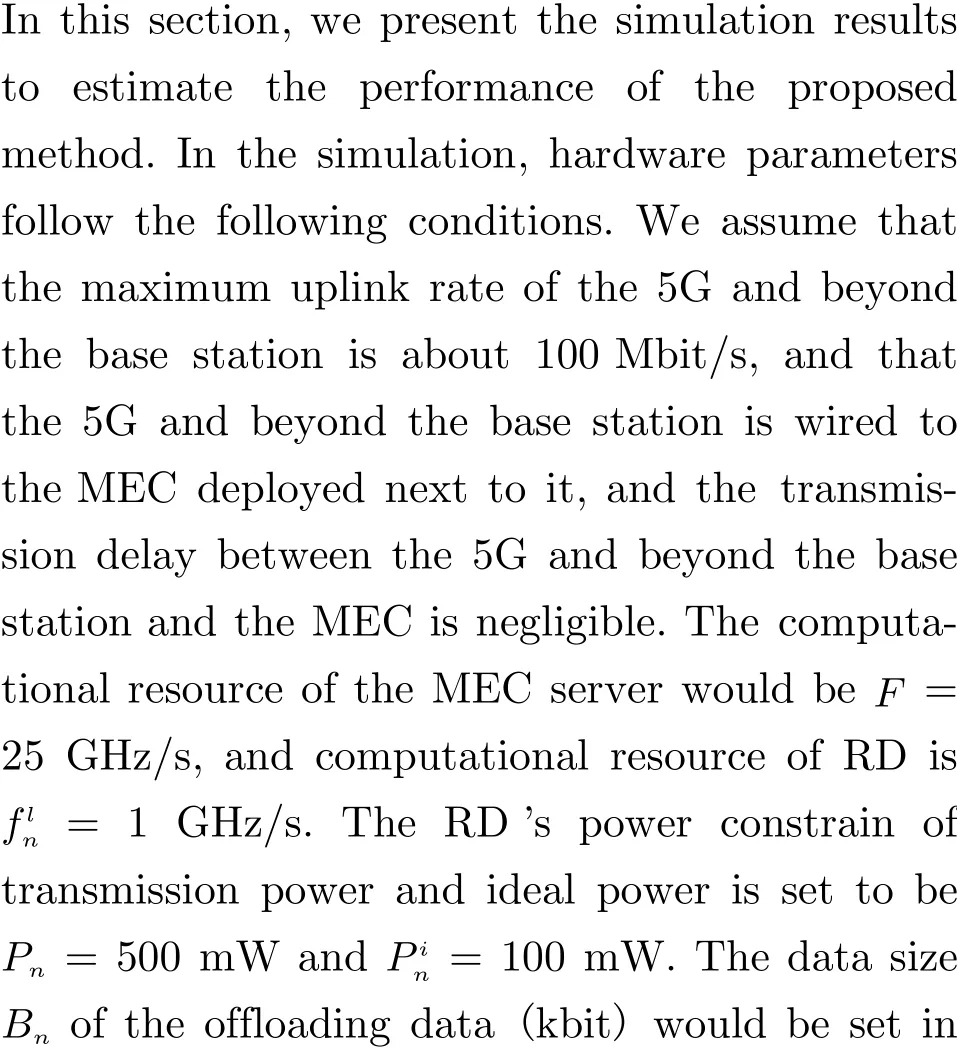

4 Simulation Results

For comparison, we set up two baseline models, “Fully local” and “Fully MEC”. “Fully local” means that all computational tasks are processed locally at the RDs, and “Fully MEC” means that all computational tasks are offloaded to the MEC server, with all computational resources at the MEC server fully allocated to the RDs.

First, the overall cost of the system with an increasing number of RDs is tested in Fig. 4. In general, the overall cost of the entire system increases with the number of RDs. The “Fully local” method is not an ideal solution because of the weak computing performance of the RDs themselves, and its overall cost is always high. The Q-learning method has lower total system latency in all experimental cases, and its overall cost increases the least as the number of robots grows. “Fully MEC” has the same overall cost as the Q-learning method when there are only 3 robots, but as the number of robots increases, its overall cost increases significantly. This indicates that the resources of the MEC server cannot support a large number of robots that all choose MEC to process their tasks. With a large number of robots and limited MEC resources, using Q-learning to selectively offload tasks yields better overall system performance.

Fig. 4 Overall cost with different number of robots

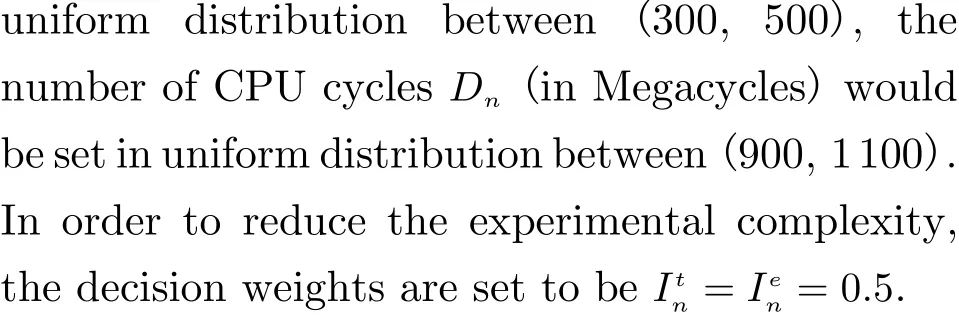

In Fig. 5, different computational resource settings of the MEC server are used to test the overall cost of the system. The number of robots is set to 5. As can be seen from the figure, the Q-learning method always has the lowest overall cost among the compared methods. The “Fully local” method does not use the MEC server, so its overall cost stays constant. The “Fully MEC” approach is significantly affected by the MEC computing resources and has the worst performance when the MEC server computing resource $F < 3$ GHz/s. The “Fully MEC” and Q-learning curves decrease as the computing power of the MEC server increases, because the execution time becomes shorter if each RD is allocated more computing resources. The results show that when the MEC server has massive resources, the overall cost is mainly affected by other factors, such as the energy consumption of uploading and the network bandwidth.

Fig. 5 Overall cost with different MEC computational resource

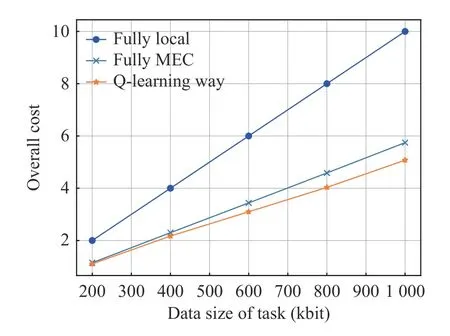

In Fig. 6, the variation of the total cost with the data size $B_n$ of the task is tested, with the number of robots set to 5. The total cost of all methods increases with the amount of data of the offloaded tasks, because the larger the amount of data, the more time and energy are consumed for offloading. The Q-learning method achieves the best results because its cost grows more slowly than that of the other methods. As the amount of data increases, the “Fully local” curve grows much faster than the other methods, indicating that the larger the amount of data for the task, the greater the latency and energy gains obtained by offloading to the MEC server. Furthermore, we can see that an increase in the size of the data leads to a significant increase in the total cost of the MEC system because $D_n$ and $B_n$ are positively correlated, which results in an exponential increase in our objective function.

Fig. 6 Overall cost with different task data size

5 Conclusion and Future Work

In this paper, we propose a framework for robot autonomous collaboration based on 5G and beyond and edge computing. Under this framework, we formulate the two problems of offloading decision and resource allocation and solve them jointly using RL. In the simulation experiments, we compared our approach with baseline models and found that the Q-learning-based method performs better in various situations. This paper provides useful experience for autonomous robotic collaboration. In the future, we will further study the problem of task offloading and resource allocation under the collaborative computing mode of cloud, edge and terminal.
