CDP-GAN: Near-Infrared and Visible Image Fusion Via Color Distribution Preserved GAN

IEEE/CAA Journal of Automatica Sinica, 2022-09-08

Jun Chen, Kangle Wu, Yang Yu, and Linbo Luo

Dear Editor,

This letter is concerned with fusing near-infrared (NIR) and visible (VS) images, which differ greatly in appearance, via a color distribution preserved generative adversarial network (CDP-GAN). Different from the global discriminator in prior GANs, the conflict between preserving NIR details and preserving VS color is resolved by introducing an attention guidance mechanism into the discriminator. Moreover, a perceptual loss with adaptive weights improves the quality of high-frequency features and helps to eliminate the noise that appears in the VS image. Finally, experiments are given to validate the proposed method.

VS images exhibit poor details or visual effects in non-ideal lighting conditions such as low light, haze or noise, because they depend on object reflection and scene illumination [1]. The straightforward solution is to enlarge the photosensitive area of the sensor. However, this raises the hardware requirements, and the resulting improvement in imaging quality is very limited. Another solution is to capture an NIR gray image with a high signal-to-noise ratio by adding NIR supplementary light, and then fuse it with the color VS image. NIR and VS image fusion aims to generate a clean image with considerable detail information, which has important application value in low-illumination fields such as night monitoring.

Different fusion schemes have been developed to combine the complementary information in VS and NIR images, such as multi-scale decomposition-based, regularization-based and mathematical statistics-based fusion methods. However, most existing methods rely on manually designed transform models. To improve the fusion effect, the design process has become increasingly complex, which makes it harder to guarantee ease of implementation and computational efficiency. In contrast, image fusion methods based on deep learning mostly adopt end-to-end models, which directly generate fused images from the inputs without complicated activity level measurements and fusion rules [2]. Moreover, the deep network is trained on a large number of source images, so more informative features with specific characteristics can be extracted.

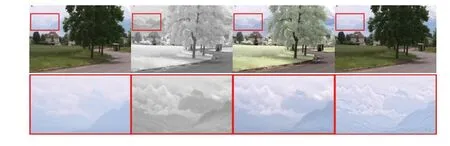

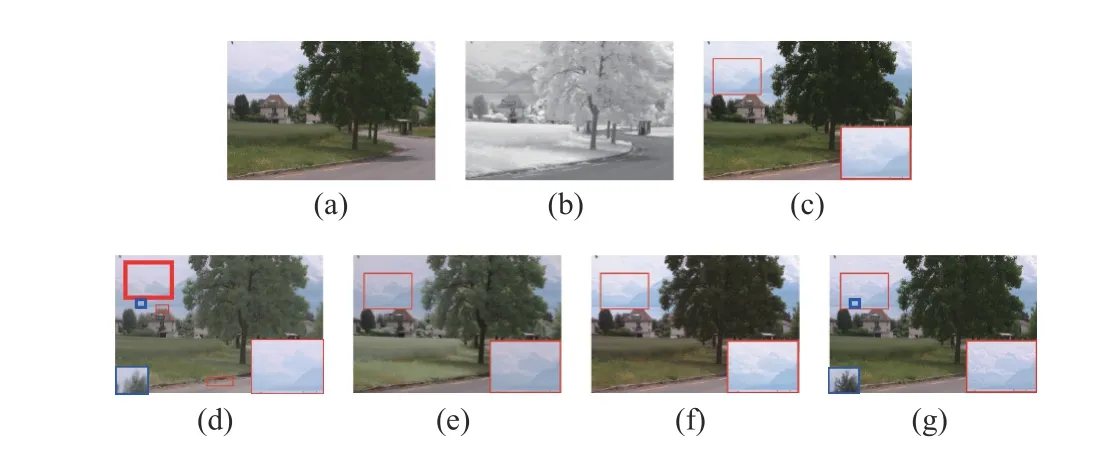

In general, deep learning-based methods have proved effective for image fusion, whereas deep learning-based NIR and VS image fusion has seldom been studied, as far as we know. To a certain extent, infrared (IR) and VS image fusion is similar to NIR and VS image fusion, since both aim to transfer the useful information in the IR/NIR image to the VS image. However, the former aims to transfer the locally salient regions of the IR image, so little attention is paid to preserving the color appearance of the VS image. In contrast, NIR and VS image fusion methods are designed to transfer global details [3]. Therefore, the color appearance of the VS image will inevitably be disturbed when IR and VS image fusion methods are directly applied to NIR and VS images. To verify this analysis, we present a representative experiment in Fig. 1. The third image is obtained by a recent deep learning-based unified image fusion method (termed IFCNN). Compared with the VS image, it fails to retain the color appearance, and serious color distortion occurs in its fusion result. Most existing NIR and VS image fusion methods fail to balance these two tasks, so failures similar to that of IFCNN occur in their results. Fortunately, owing to its capability to fit multiple distribution characteristics [4], the generative adversarial network (GAN) can achieve both goals and preserve the distributions of detail and color information simultaneously.

Fig. 1. Schematic illustration of image fusion. From left to right: VS image, NIR image, fusion result of a recent deep learning-based unified image fusion method [5], and fusion result of our proposed CDP-GAN.

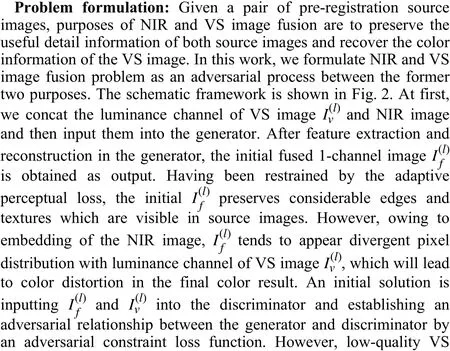

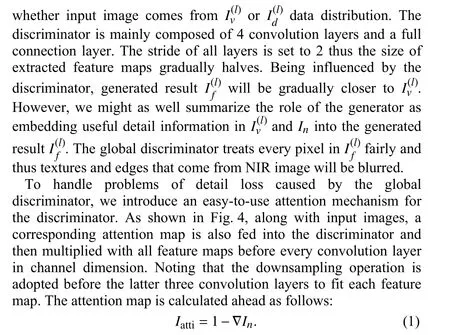

Based on the above analysis, we propose a GAN-based NIR and VS image fusion architecture with color distribution preservation, termed CDP-GAN. We formulate NIR and VS image fusion as an adversarial game between a generator and a discriminator. The generator aims to generate a fused image that contains considerable details, whereas the discriminator constrains the fused image to have pixel intensities similar to those of the noise-free VS image, so that the final fused image does not suffer from color distortion. To prevent details from being blurred by the global discriminator, an easily implemented attention mechanism is combined with the discriminator, which forces the discriminator to focus on the VS region while ignoring the NIR texture region. Therefore, CDP-GAN can produce a noise-free result that not only contains rich textures but also retains high-fidelity color information compared with the VS image.
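To make the role of the attention guidance concrete, the sketch below shows one way a multiplication-style attention map could gate what the discriminator sees. The letter does not say in this passage how the attention map is computed; the gradient-based map here (`hypothetical_attention_map`, close to 1 in smooth regions and close to 0 where the NIR image is strongly textured) is purely an assumption for illustration, and all function names are hypothetical. Both the fused image and the denoised VS reference are weighted by the same map before reaching the discriminator, so the adversarial comparison concentrates on color in smooth regions and largely ignores NIR texture regions.

```python
import numpy as np


def gradient_magnitude(gray: np.ndarray) -> np.ndarray:
    """Finite-difference gradient magnitude of a 2-D (H, W) array."""
    gy, gx = np.gradient(gray.astype(np.float64))
    return np.hypot(gx, gy)


def hypothetical_attention_map(nir: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Assumed attention map in [0, 1]: ~1 in smooth (VS-dominated) regions,
    ~0 where the NIR image carries strong texture. Not the paper's definition."""
    g = gradient_magnitude(nir)
    return 1.0 - g / (g.max() + eps)


def attention_weighted_input(image_rgb: np.ndarray, attention: np.ndarray) -> np.ndarray:
    """Multiplication-attention: scale every channel of an (H, W, 3) image by the (H, W) map."""
    return image_rgb * attention[..., None]


# Usage with random stand-ins for the NIR input, the generator output and the denoised VS image.
rng = np.random.default_rng(0)
nir = rng.random((64, 64))
fused = rng.random((64, 64, 3))
vs_denoised = rng.random((64, 64, 3))

attn = hypothetical_attention_map(nir)
d_fake_in = attention_weighted_input(fused, attn)        # would be scored as "fake"
d_real_in = attention_weighted_input(vs_denoised, attn)  # would be scored as "real"
```

Weighting both discriminator inputs by the same map keeps the comparison fair: the discriminator never sees textured regions at full strength in either the real or the fake sample.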

Fig. 2. Framework of the proposed CDP-GAN for NIR and VS image fusion.

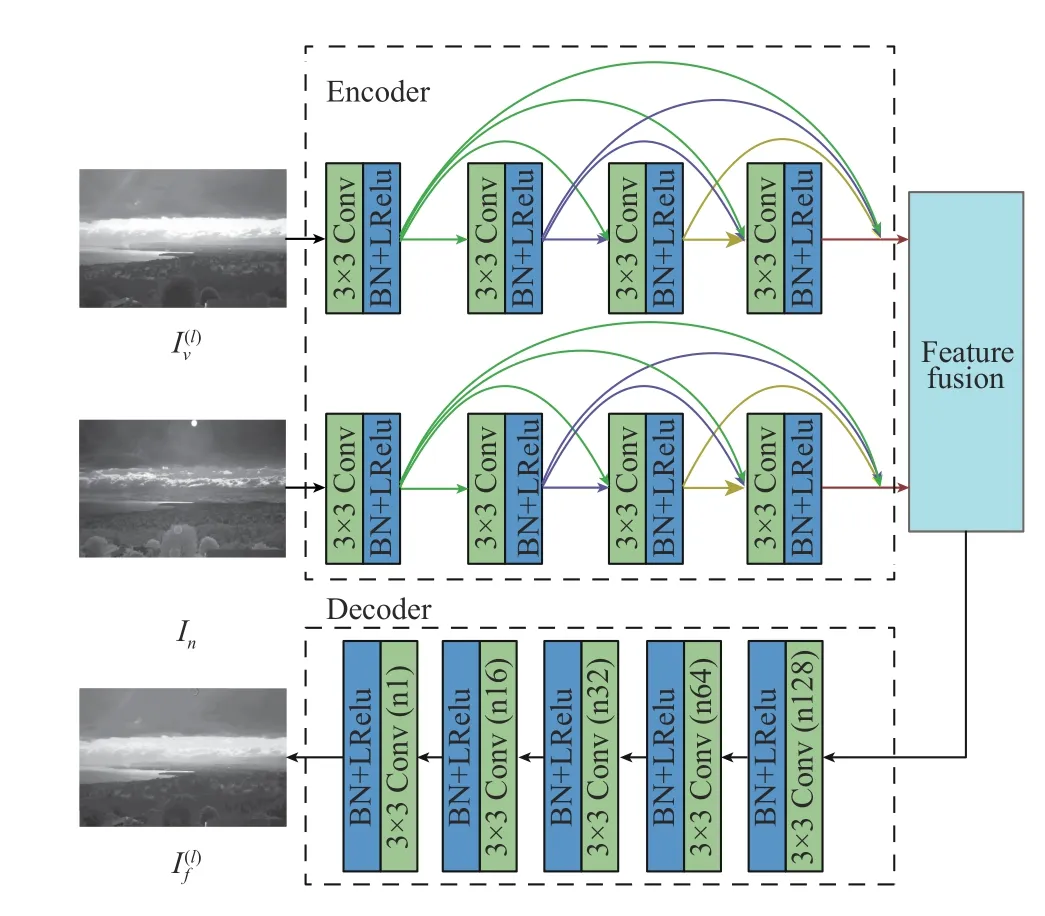

Network architecture: As shown in Fig. 3, the generator of CDP-GAN contains two encoder networks, a feature fusion module and a decoder network. To reduce computational complexity during training, the two encoder networks have the same architecture, consisting of 4 convolution layers that all use 3×3 filters, and they share weights. To alleviate vanishing gradients, compensate for feature loss and reuse previously computed features, the encoder adopts dense connections, establishing short direct connections between each layer and all subsequent layers in a feedforward fashion [7]. Batch normalization follows each layer to speed up training and avoid gradient explosion [8]. The stride of each convolution layer is empirically set to 1. To avoid discarding detail information in the source images, we remove downsampling from the convolutions. Moreover, all convolution layers except the last one use the leaky ReLU activation function, which avoids the dying-neuron problem of the traditional ReLU and speeds up convergence.
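The shape bookkeeping implied by this encoder design can be checked with a few lines of arithmetic. The sketch assumes a single-channel input, a growth rate of 16 feature maps per layer and a leaky ReLU slope of 0.2; none of these numbers is given in this passage, so all three are illustrative. It shows that a 3×3 convolution with stride 1 and padding 1 keeps the spatial size (so no detail is lost to downsampling), and how dense connections grow the number of input channels seen by each of the 4 layers.

```python
def conv_output_size(size: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Spatial size after a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def dense_input_channels(in_channels: int, growth: int, num_layers: int) -> list[int]:
    """Channels fed to each layer of a dense block: layer k sees the block input
    concatenated with the outputs of the k layers before it."""
    return [in_channels + k * growth for k in range(num_layers)]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """Leaky ReLU: keeps a small gradient for negative inputs, so units do not 'die'."""
    return x if x >= 0 else slope * x


# Stride 1 with padding 1 preserves a 256-pixel side; stride 2 would halve it.
same = conv_output_size(256)              # 256
halved = conv_output_size(256, stride=2)  # 128

# Hypothetical: 1 input channel, 16 new feature maps per layer, 4 layers.
channels = dense_input_channels(in_channels=1, growth=16, num_layers=4)  # [1, 17, 33, 49]

# Assumption: the block output concatenates the input and all four layer outputs.
encoder_output_channels = channels[-1] + 16  # 65
```

Because the two encoders share weights, the same channel schedule applies to the NIR branch and the VS branch.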

Fig. 3. The overall architecture of generator.

Fig. 4. The overall architecture of discriminator.

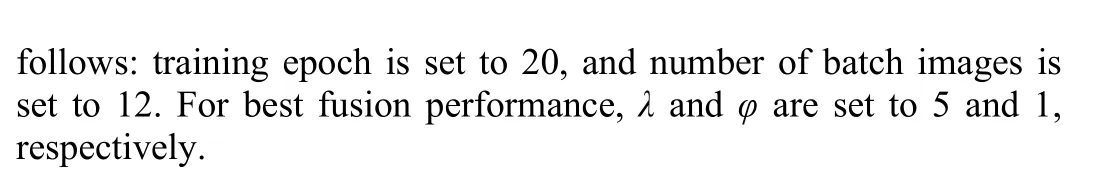

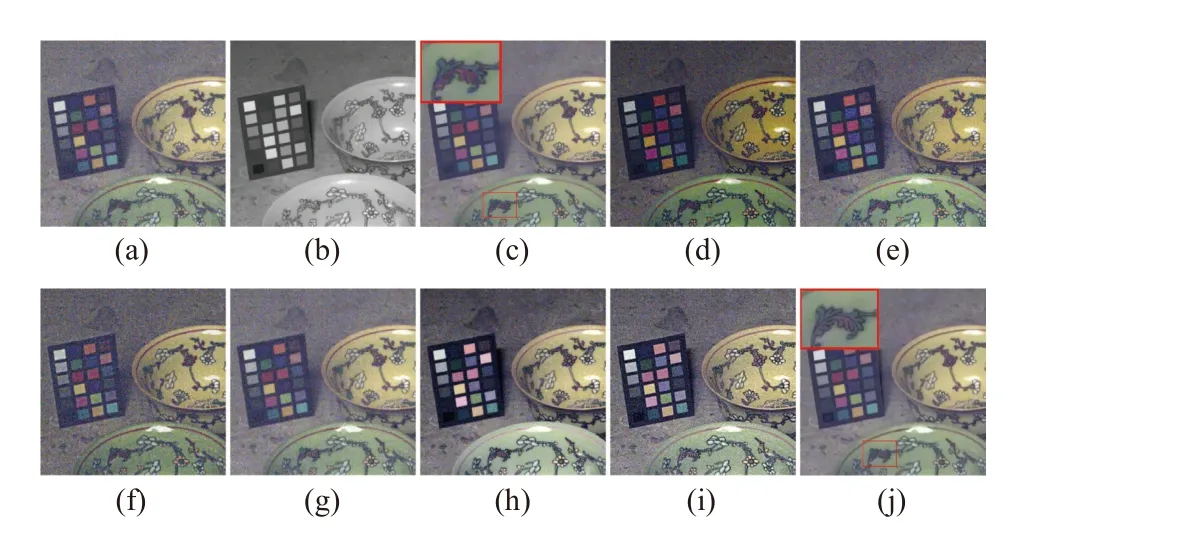

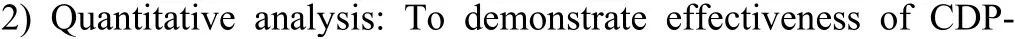

1) Qualitative analysis: To demonstrate the superiority of CDP-GAN subjectively, we provide two sets of representative qualitative results in Figs. 5 and 6. The results of the compared methods can be classified into two categories. The first category, such as VSM and CNI, resembles the VS image and thus has natural color. However, the textures in their results are not rich enough. More specifically, the mountains in Figs. 5(c) and 5(f) are unclear compared with the other results. In short, VSM and CNI have little ability to retain the details in the NIR image. In contrast, the second category, such as GF, CVN, WLP, U2Fusion and SDNet, contains much detail information about the mountains in Figs. 5(d), 5(e) and 5(g)-5(i), but their color appearance is unnatural and color distortion occurs. CDP-GAN not only preserves details in non-ideal scenes but also exhibits hardly any color distortion.

Fig. 5. Qualitative comparison of different fusion algorithms on image Img1. (a) VS image; (b) NIR image; the fused images are obtained by (c) VSM; (d) CVN; (e) WLP; (f) CNI; (g) GF; (h) U2Fusion; (i) SDNet; (j) our algorithm.

Fig. 6. Qualitative comparison of different fusion algorithms on image Img2. (a) VS image; (b) NIR image; the fused images are obtained by (c) VSM; (d) CVN; (e) WLP; (f) CNI; (g) GF; (h) U2Fusion; (i) SDNet; (j) our algorithm.

Limited by the capture device, the VS image in Fig. 6 contains much noise. In CDP-GAN, the adaptive perceptual loss measures feature similarity rather than pixel-wise similarity. Features obtained by VGG-16 are robust to noise while preserving structure [15], so the generator can produce a noise-free result. Moreover, to avoid introducing noise, an initially denoised VS image is used in the discriminator. Initial denoising removes details in the VS color image along with the noise. However, the adaptive perceptual loss can transfer the details of the captured NIR gray image to the fused result. Therefore, the final fused image can be both noise-free and detail-preserving. As shown in Fig. 6, only VSM and CDP-GAN produce noise-free images. However, as highlighted by the red rectangles in Fig. 6, the vein is clearer in our result; thus CDP-GAN not only eliminates noise in VS images but also retains considerable details that benefit the human visual system.
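The difference between a pixel-wise loss and a feature-based loss with adaptive weights can be sketched as follows. Two substitutions are assumptions, not the paper's method: finite-difference gradients stand in for VGG-16 feature maps (so the block runs without a pretrained network), and the adaptive weights are a softmax over each source's mean feature energy with an arbitrary temperature. All images are single-channel luminance arrays for simplicity. The loss pulls the fused image's features toward both the NIR and the VS image, with more weight on the source that carries more texture; unlike the pixel-wise loss, the feature term ignores a uniform brightness offset, which illustrates how a feature-space loss leaves the fused intensities free to follow another constraint (here, the discriminator's VS reference).

```python
import numpy as np


def stand_in_features(gray: np.ndarray) -> np.ndarray:
    """Stand-in for VGG-16 feature maps: x- and y-gradients stacked as 2 channels."""
    gy, gx = np.gradient(gray.astype(np.float64))
    return np.stack([gx, gy])


def adaptive_weights(nir: np.ndarray, vs: np.ndarray, temperature: float = 0.1) -> np.ndarray:
    """Hypothetical adaptive weights: softmax over each source's mean feature energy."""
    energies = np.array([np.mean(stand_in_features(x) ** 2) for x in (nir, vs)])
    logits = energies / temperature
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()


def adaptive_perceptual_loss(fused: np.ndarray, nir: np.ndarray, vs: np.ndarray) -> float:
    """Weighted feature-space distance from the fused image to both sources."""
    w_nir, w_vs = adaptive_weights(nir, vs)
    phi_f = stand_in_features(fused)
    loss_nir = np.mean((phi_f - stand_in_features(nir)) ** 2)
    loss_vs = np.mean((phi_f - stand_in_features(vs)) ** 2)
    return float(w_nir * loss_nir + w_vs * loss_vs)


def pixel_loss(a: np.ndarray, b: np.ndarray) -> float:
    """Plain pixel-wise mean squared error, for comparison."""
    return float(np.mean((a - b) ** 2))


rng = np.random.default_rng(0)
nir = rng.random((32, 32))   # textured NIR luminance
vs = np.full((32, 32), 0.5)  # flat, already denoised VS luminance
brighter = nir + 0.3         # same structure as NIR, shifted intensity

weights = adaptive_weights(nir, vs)  # the textured NIR image gets the larger weight
```

Replacing `stand_in_features` with activations from a pretrained VGG-16 would bring the sketch closer to the loss described in the letter; the weighting logic would stay the same.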

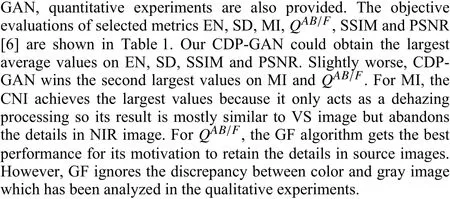

Table 1. Quantitative Comparison of Different Fusion Algorithms

3) Validation experiment: To verify the effect of each improvement, ablation experiments are shown in Fig. 7. When a pixel-wise loss is adopted in the generator, the result contains fewer details than ours. As framed in Fig. 7(c), the mountains in the distance are not clear enough, which shows that details have not been transmitted to the fused image. In contrast, Fig. 7(g) shows that the mountains obtained with the proposed loss are more complete and a higher degree of detail preservation is achieved. Without the attention mechanism, although the result has similar color information, blurred details and halo artifacts occur, as shown in Fig. 7(d). Moreover, comparing Figs. 7(e) and 7(g), it can be concluded that the discriminator with the multiplication-attention mechanism achieves better detail and color retention. As for the feature fusion strategy, the result obtained with addition achieves the goal of preserving detail and color information. However, concatenation is more suitable for feature fusion, as the generator with this strategy recovers clearer textures than with the addition strategy, as shown in the highlighted region.
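The two feature-fusion strategies compared in the ablation can be contrasted on tensor shapes alone. This is a minimal sketch assuming channel-first (C, H, W) encoder outputs with a hypothetical 64 channels; it is not the authors' fusion module. Concatenation keeps the NIR and VS features in separate channels, so the decoder can still learn per-source weights, whereas addition merges them into the same channels and is symmetric in its inputs, so the decoder can no longer tell which source a feature came from. This is one plausible reading of why concatenation recovers clearer textures, not an explanation given in the letter.

```python
import numpy as np


def fuse_add(f_nir: np.ndarray, f_vs: np.ndarray) -> np.ndarray:
    """Addition strategy: element-wise sum, channel count unchanged (C, H, W)."""
    return f_nir + f_vs


def fuse_concat(f_nir: np.ndarray, f_vs: np.ndarray) -> np.ndarray:
    """Concatenation strategy: stack along the channel axis, giving (2C, H, W)."""
    return np.concatenate([f_nir, f_vs], axis=0)


rng = np.random.default_rng(0)
f_nir = rng.standard_normal((64, 32, 32))  # hypothetical NIR encoder output
f_vs = rng.standard_normal((64, 32, 32))   # hypothetical VS encoder output

added = fuse_add(f_nir, f_vs)       # shape (64, 32, 32)
stacked = fuse_concat(f_nir, f_vs)  # shape (128, 32, 32)

# Addition is symmetric, so the source identity of each feature is lost...
assert np.array_equal(fuse_add(f_vs, f_nir), added)
# ...while concatenation keeps each source recoverable by slicing.
assert np.array_equal(stacked[:64], f_nir) and np.array_equal(stacked[64:], f_vs)
```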

Fig. 7. Ablation experiment. (a) VS image; (b) NIR image; the fused images are obtained (c) with pixel-wise loss; (d) without attention mechanism in discriminator; (e) with concatenation-attention mechanism in discriminator; (f) with addition strategy in feature fusion; (g) with multiplication-attention mechanism in discriminator and concatenation strategy in feature fusion.

Conclusion: In this letter, we propose a new framework for NIR and VS image fusion, termed CDP-GAN. It simultaneously preserves the color distribution of the VS image and the detail information in both source images. Specifically, an adaptive perceptual loss is introduced to increase the degree of detail preservation. Moreover, we combine the global discriminator with the proposed attention mechanism, which effectively eliminates color distortion. Experiments verify that CDP-GAN not only retains the useful information in the source images but also acts as a denoising method with the aid of the NIR image. Compared with other methods on publicly available datasets, CDP-GAN is superior in both qualitative and quantitative aspects.

Acknowledgments: This work was supported by the National Natural Science Foundation of China (62073304, 41977242, 61973283).
