SSL-WAEIE: Self-Supervised Learning With Weighted Auto-Encoding and Information Exchange for Infrared and Visible Image Fusion

2022-09-08

Gucheng Zhang, Rencan Nie, and Jinde Cao

Dear editor,

Infrared and visible image fusion (IVIF) technologies aim to extract complementary information from source images and generate a single fused image [1], and they are widely applied in high-level vision tasks such as segmentation and object detection [2].

Traditional fusion methods mainly include spatial domain-based methods and multi-scale decomposition-based (MSD) methods. The former, such as guided filter-based (GF) [3] and Bayesian [4] methods, generally produce fused images by weighting the local pixels or saliency of the source images. MSD methods, including TE-MST [5], Hybrid-MSD [6], and MDLatLRR [7], first decompose the source images into multi-scale features, then employ fusion rules to integrate these features at each level, and finally reconstruct the fused result. However, how to measure the importance, or fusion contribution, of the source images pixel by pixel remains an open problem in these methods. Consequently, they require carefully designed weighting strategies or fusion rules.
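
For illustration only, the Python sketch below shows what such hand-crafted rules look like in their simplest form: a two-level decomposition (a box-filtered base layer plus a residual detail layer), an averaging rule for the base level, and a choose-max-absolute rule for the detail level. It is not the implementation of GF, TE-MST, Hybrid-MSD, MDLatLRR, or any other cited method; the box filter, the radius `r`, the function names, and both rules are assumptions chosen to keep the example small.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of pixel values


def box_blur(img: Image, r: int = 1) -> Image:
    """Mean filter over a (2r+1) x (2r+1) window, clipped at the image borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            vals = [img[y][x]
                    for y in range(max(0, i - r), min(h, i + r + 1))
                    for x in range(max(0, j - r), min(w, j + r + 1))]
            out[i][j] = sum(vals) / len(vals)
    return out


def two_scale_fuse(a: Image, b: Image, r: int = 1) -> Image:
    """Toy two-level fusion of two registered, same-size images (hypothetical rules)."""
    base_a, base_b = box_blur(a, r), box_blur(b, r)
    fused = []
    for i in range(len(a)):
        row = []
        for j in range(len(a[0])):
            det_a = a[i][j] - base_a[i][j]  # detail level = image minus base level
            det_b = b[i][j] - base_b[i][j]
            base = 0.5 * (base_a[i][j] + base_b[i][j])  # averaging rule (base level)
            det = det_a if abs(det_a) >= abs(det_b) else det_b  # choose-max rule (detail level)
            row.append(base + det)  # reconstruction: sum of the fused levels
        fused.append(row)
    return fused


# Usage: a bright vertical "target" in the infrared image, a smooth ramp in the visible one.
ir = [[0.0, 200.0, 0.0], [0.0, 200.0, 0.0], [0.0, 200.0, 0.0]]
vis = [[50.0, 60.0, 70.0], [50.0, 60.0, 70.0], [50.0, 60.0, 70.0]]
fused = two_scale_fuse(ir, vis)
```

Every choice here (filter, radius, per-level rule) is fixed by hand, which is the design burden the paragraph above describes.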

In recent years, deep learning has emerged as a powerful tool for image fusion [8], [9]. Unlike traditional methods, it can adaptively extract multi-level features and automatically reconstruct the expected result under the guidance of a suitable loss. Supervised learning-based methods, such as FuseGAN [10], are mainly devoted to multi-focus image fusion because ground truth is available for each pair of training images. For the IVIF task, the most popular approaches are unsupervised learning-based methods, including SeAFusion [2], FusionGAN [11], DenseFuse [12], RFN-Nest [13], DIDFuse [14], and DualFuse [15], in which the network is generally designed as an encoder-decoder. Similar techniques are also used for multi-exposure image fusion [16]. However, it is uncertain whether the features extracted by the encoder are all optimal. To address this issue, self-supervised learning-based algorithms, such as TransFuse [17] and SFA-Fuse [18], have been developed, in which the encoder performs an auxiliary task and thus extracts features with a prior. Nevertheless, in these methods the encoder features are still used directly to reconstruct the fused result, guided only by the loss design. That is, their importance, or fusion contribution, is not well measured.

For contribution estimation, Nie et al. [19] proposed a novel idea based on information exchange: a person with less knowledge tends to learn more information from another person with more knowledge, and vice versa, implying that the former will contribute less when the two cooperatively perform a task. Based on this principle, [19] constructed a pulse coupled neural network (PCNN)-based information exchange module and applied it to contribution estimation for multi-modal medical image fusion, where the fusion contribution can be easily estimated via an inverse proportional rule based on the exchanged information. Unfortunately, this module cannot be optimized within derivative-based image fusion methods because of the hard threshold in the PCNN, nor can it be trained on a large-scale dataset because of the complicated structure of its neurons.
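
The inverse proportional rule can be made concrete with a small worked example. Suppose, as an assumption, that the information source A receives from source B at a pixel is summarized by a nonnegative scalar $E_A$ (and $E_B$ for the reverse direction). Taking each contribution inversely proportional to the information received and normalizing gives

$$w_A = \frac{1/E_A}{1/E_A + 1/E_B} = \frac{E_B}{E_A + E_B}, \qquad w_B = 1 - w_A,$$

so the source that learns more from its partner receives the smaller weight. The Python sketch below applies this per pixel; how [19] actually measures the exchanged information is not reproduced here, and the names `inverse_proportional_weights`, `weighted_fuse`, and the stabilizer `eps` are hypothetical.

```python
from typing import List, Tuple


def inverse_proportional_weights(recv_a: List[float], recv_b: List[float],
                                 eps: float = 1e-8) -> Tuple[List[float], List[float]]:
    """Per-pixel contributions with w_A proportional to 1/E_A and w_A + w_B = 1.

    recv_a[k] is the (hypothetical) amount of information source A received
    from source B at pixel k; recv_b[k] is the reverse direction.
    """
    w_a, w_b = [], []
    for e_a, e_b in zip(recv_a, recv_b):
        inv_a = 1.0 / (e_a + eps)  # less received -> larger inverse -> larger contribution
        inv_b = 1.0 / (e_b + eps)
        total = inv_a + inv_b
        w_a.append(inv_a / total)
        w_b.append(inv_b / total)
    return w_a, w_b


def weighted_fuse(pix_a: List[float], pix_b: List[float],
                  w_a: List[float], w_b: List[float]) -> List[float]:
    """Pixel-wise weighted combination of two flattened source images."""
    return [wa * a + wb * b for a, b, wa, wb in zip(pix_a, pix_b, w_a, w_b)]


# Pixel 0: A received 3x more than B, so A contributes about 0.25 and B about 0.75.
# Pixel 1: equal exchange, so both sources contribute 0.5.
w_a, w_b = inverse_proportional_weights([3.0, 1.0], [1.0, 1.0])
fused = weighted_fuse([10.0, 4.0], [2.0, 8.0], w_a, w_b)
```

The small `eps` only guards against division by zero when a source receives no information; it has no counterpart in the rule as stated in the text.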

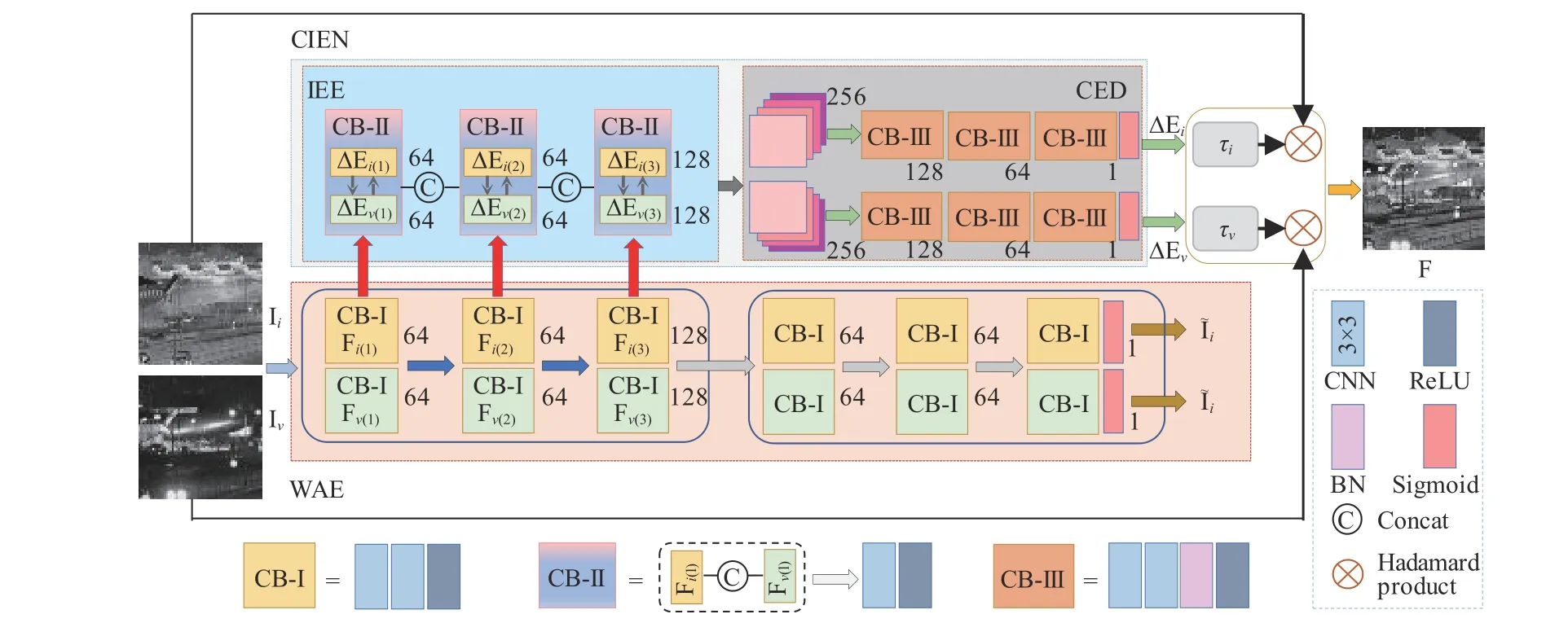

To tackle the above challenges, in this letter we propose a self-supervised learning-based fusion framework, named SSL-WAEIE, for the IVIF task. There are two key ideas in our method. First, we design a weighted auto-encoder (WAE) to extract, from the source images, the multi-level features to be fused. Second, inspired by the basic principle of information exchange in [19], we construct a convolutional information exchange network (CIEN) to easily estimate the fusion contributions of the source images. The main contributions of our method are summarized as follows.

1) A novel self-supervised learning-based fusion framework: To the best of our knowledge, this is the first attempt to perform image fusion via convolutional neural network (CNN)-based information exchange in a self-supervised manner.

2) A new network architecture: Our SSL-WAEIE consists of a WAE and a CIEN, where the WAE, designed for an auxiliary task, extracts multi-level features from the source images, whereas the CIEN estimates the fusion contributions mainly via a CNN-based information exchange module (IEM-CNN).

3) Hybrid losses: We propose two hybrid losses to effectively train the WAE and SSL-WAEIE, respectively.

Fig. 1. Our fusion framework contains a WAE and a CIEN, where the CIEN is composed of an IEE and a CED. Note that each subnetwork is constructed from different CBs, of which there are three types, termed CB-I to CB-III. In particular, a CB-II corresponds to an IEM-CNN.

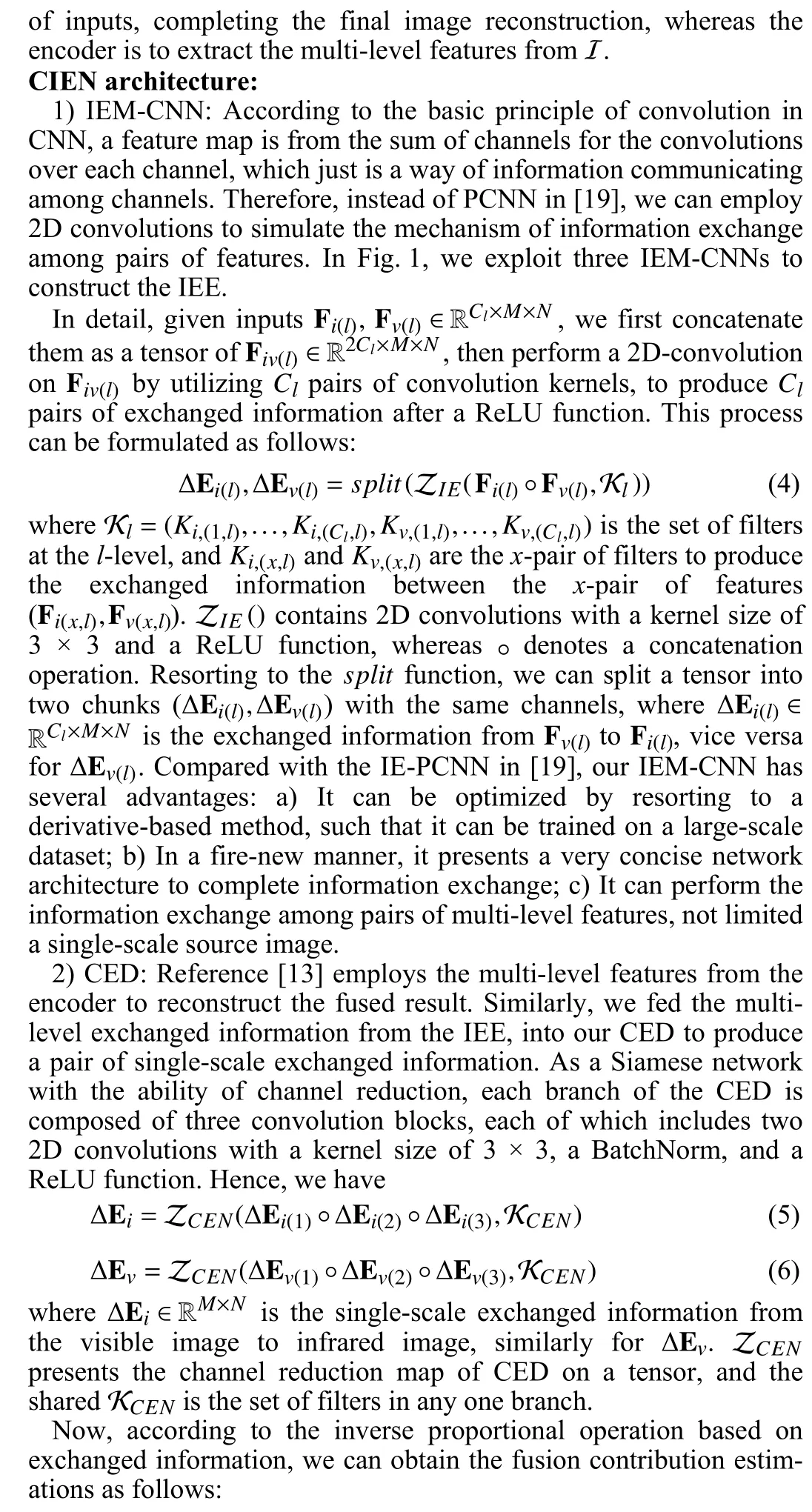

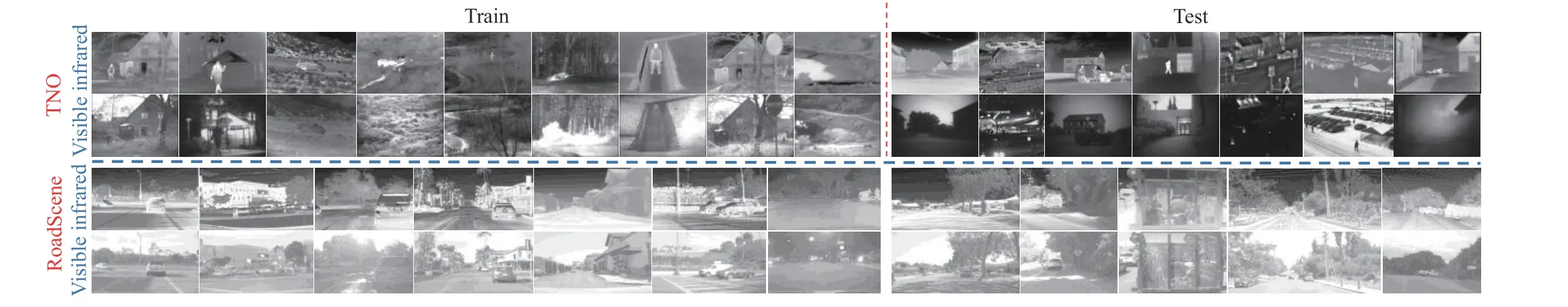

Fig. 2. Several samples of the training and test sets from two public datasets. For both TNO and RoadScene, the first row of each dataset shows the infrared images, whereas the second row shows the visible images. Additionally, training samples are shown on the left, whereas test samples are shown on the right.

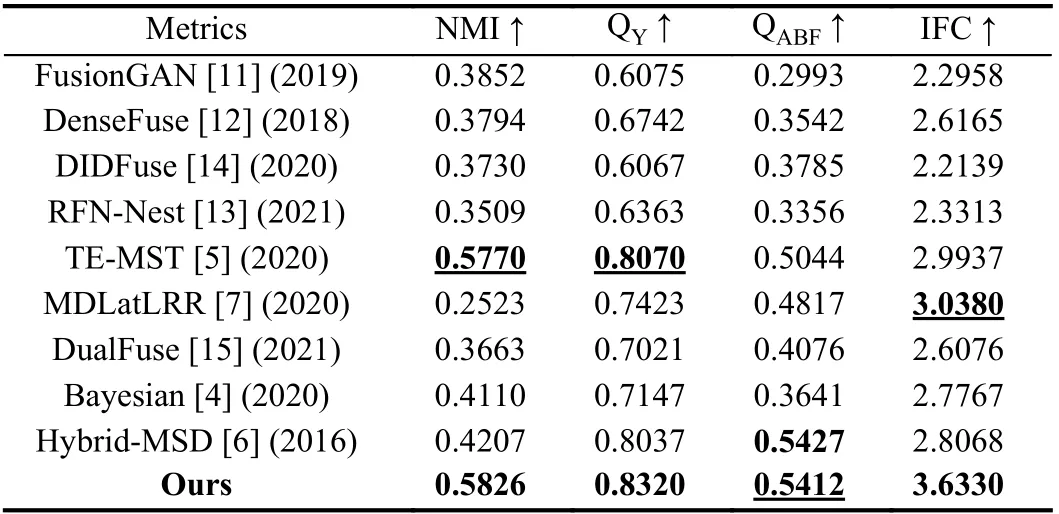

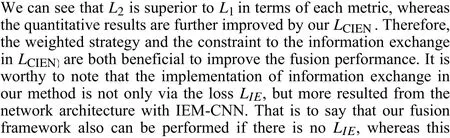

Setups: We compare the fusion results of our SSL-WAEIE with nine state-of-the-art methods: four traditional methods, i.e., MDLatLRR [7], TE-MST [5], Bayesian [4], and Hybrid-MSD [6], and five deep learning-based algorithms, i.e., FusionGAN [11], DenseFuse [12], RFN-Nest [13], DIDFuse [14], and DualFuse [15]. Moreover, four typical metrics, namely normalized mutual information (NMI) [22], Yang's metric (QY) [23], the gradient-based metric (QABF) [24], and the information fidelity criterion (IFC) [25], are employed to quantitatively evaluate the results.
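
Of the four metrics, NMI is the easiest to state compactly. A widely used form in the fusion literature normalizes the mutual information between each source and the fused image by their entropies,

$$\mathrm{NMI} = 2\left[\frac{MI(A,F)}{H(A)+H(F)} + \frac{MI(B,F)}{H(B)+H(F)}\right],$$

where $A$ and $B$ are the infrared and visible images and $F$ is the fused image. Whether [22] uses exactly this normalization is an assumption, as are the choices in the Python sketch below: gray levels are used directly as histogram bins, logarithms are base 2, and images are passed as flat sequences of integer gray levels.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(xs: Sequence[Hashable]) -> float:
    """Shannon entropy (bits) of the empirical distribution of xs."""
    n = len(xs)
    return -sum((c / n) * math.log2(c / n) for c in Counter(xs).values())


def mutual_information(xs: Sequence[int], ys: Sequence[int]) -> float:
    """MI(X;Y) = H(X) + H(Y) - H(X,Y), from the joint gray-level histogram."""
    return entropy(xs) + entropy(ys) - entropy(list(zip(xs, ys)))


def nmi_fusion(a: Sequence[int], b: Sequence[int], f: Sequence[int]) -> float:
    """NMI of fused image f w.r.t. sources a and b (flattened, same length).

    Undefined (division by zero) if a source and f are both constant images.
    """
    h_f = entropy(f)
    return 2.0 * (mutual_information(a, f) / (entropy(a) + h_f)
                  + mutual_information(b, f) / (entropy(b) + h_f))


# Each ratio is at most 0.5, so NMI is at most 2; copying identical sources reaches it.
score = nmi_fusion([0, 1, 2, 3], [0, 1, 2, 3], [0, 1, 2, 3])
```

Because $MI(A,F) \le \min(H(A), H(F))$, each normalized term is bounded by 0.5, which is why the factor of 2 maps the score into $[0, 2]$ under this definition.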

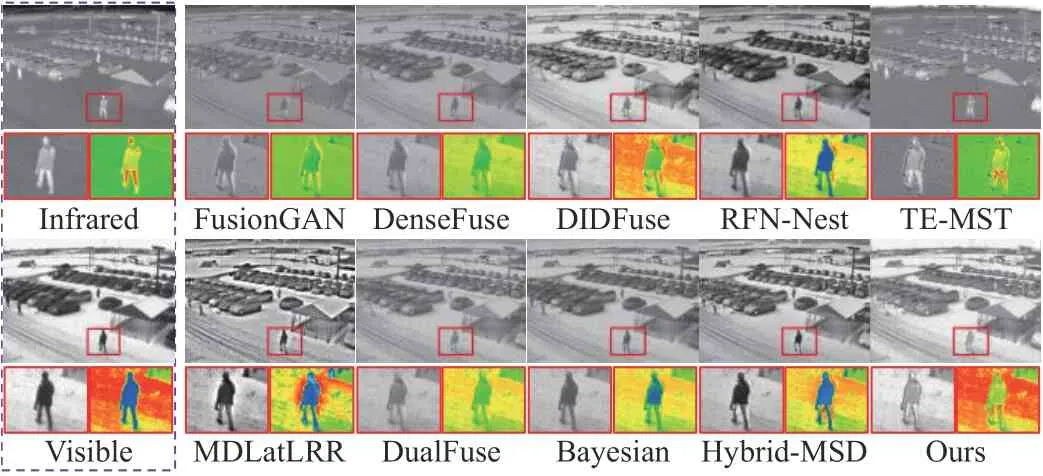

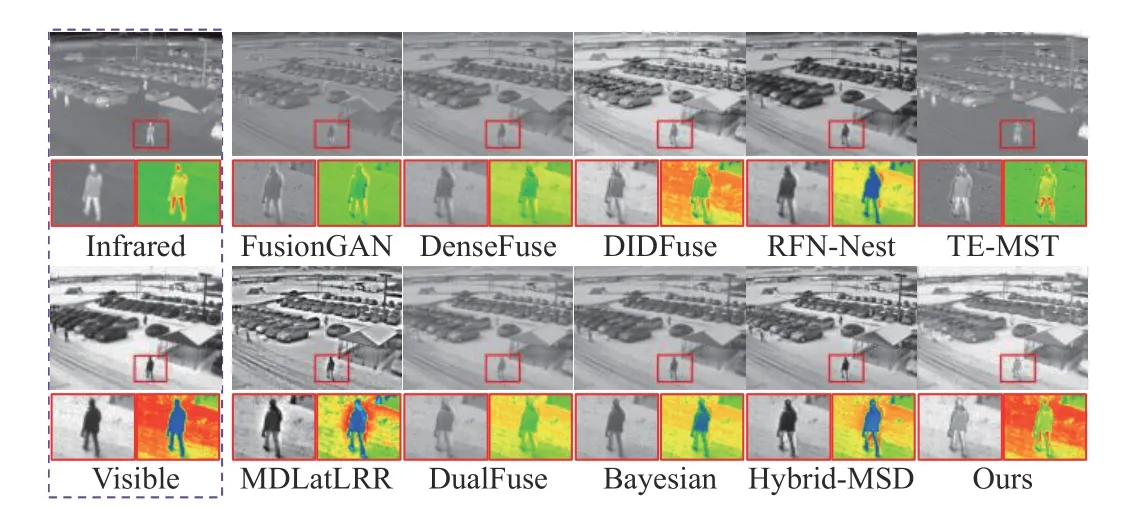

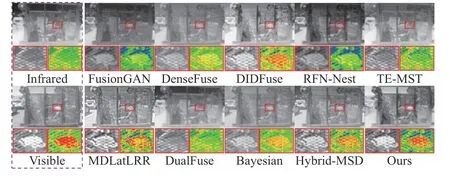

Results: As shown in Fig. 3, the results of FusionGAN, DenseFuse, DualFuse, and TE-MST suffer from blurred edges of the infrared objects. Although RFN-Nest and Bayesian preserve an acceptable background from the visible image, their results show low contrast due to luminance degradation. Additionally, MDLatLRR produces obvious artifacts, especially in Fig. 4. DIDFuse and Hybrid-MSD achieve good results, but the details and luminance of the targets in the red rectangles are still deficient. Compared with these methods, our SSL-WAEIE not only significantly improves luminance but also retains the most texture details.

Table 1 shows that, on the TNO dataset, our method achieves the best quantitative results in terms of NMI, QY, and IFC compared with the nine competitors, while ranking second in QABF. The same conclusion can be drawn from Table 2 for the RoadScene dataset.

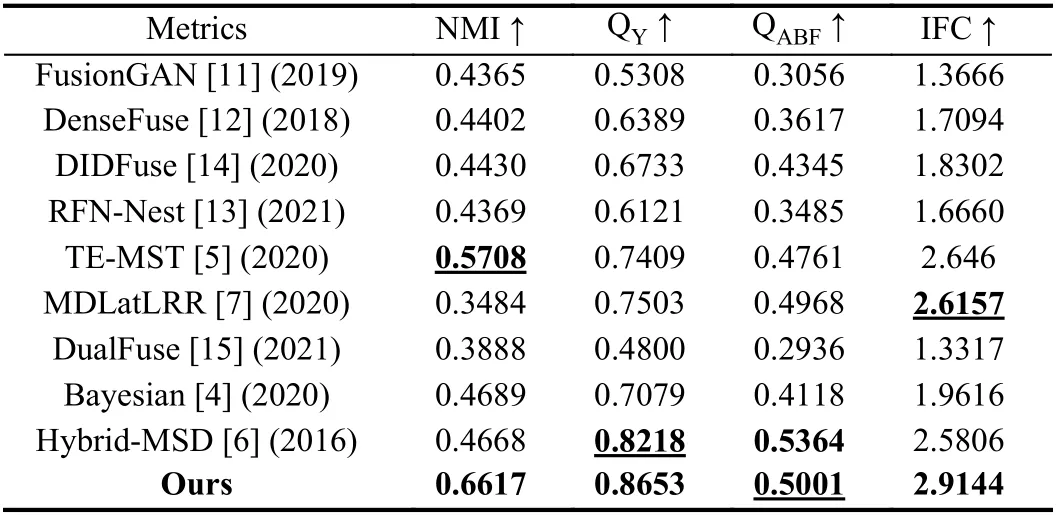

Parameter analysis: The regularization parameter μ in our loss plays an important role in training our network. Hence, we vary it from 9 to 11 to investigate its influence on the fusion performance in terms of each metric. Table 3 shows that, as μ increases, all metrics increase until μ = 10; beyond that, they decrease on the whole and oscillate. Hence, we set μ = 10 for our SSL-WAEIE.

Ablation study:

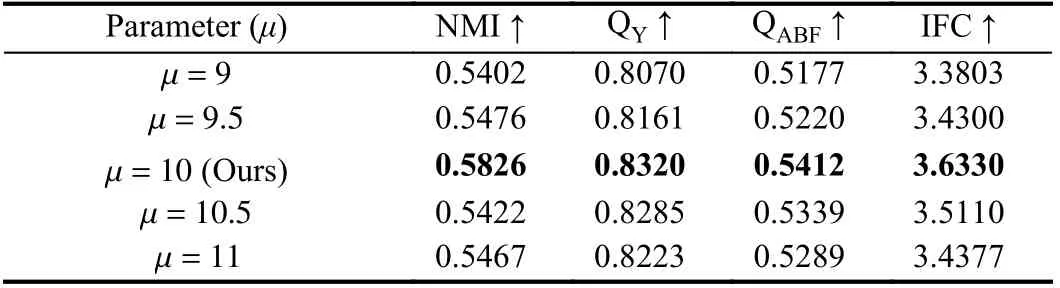

1) Ablation of the network: Discarding the WAE and the IEE, respectively, our SSL-WAEIE degenerates into two versions: No WAE and No IEE. In the first version, the decoder of the WAE is removed, so the WAE is not pre-trained via our auxiliary task. In the second, the multi-level features from the encoder of the WAE are directly concatenated and fed into the CED to generate the fused result. Table 4 shows that No IEE gives the worst results on every metric, whereas SSL-WAEIE performs best. Therefore, both the WAE and the IEE contribute significantly to our method, with the IEE being more important. Moreover, we let the IEE perform information exchange only on the last-level features from the WAE, so that it becomes a single-level IEE (SL-IEE) containing only one IEM-CNN. SL-IEE is superior to No IEE on every metric but inferior to the full IEE. Hence, multi-level information exchange in our IEE is more beneficial than single-level exchange for producing a good result, which also implies that our WAE extracts meaningful multi-level features rather than trivial solutions.

… regularization can further improve the fusion performance resulting from the mechanism of information exchange.

(Figure panels, in order: Infrared, FusionGAN, DenseFuse, DIDFuse, RFN-Nest, TE-MST, Visible, MDLatLRR, DualFuse, Hybrid-MSD, Bayesian, Ours)

Fig. 3. An example of visual comparison among different methods on the TNO dataset, where our method shows a clear advantage over the other algorithms.

Fig. 4. An example of visual comparison among different methods on the RoadScene dataset. Compared with the other algorithms, our proposed method gives the best visual result.

Table 1. Average Quantitative Results on TNO for Different Methods

Table 2. Average Quantitative Results on RoadScene for Each Method

Table 3. Quantitative Results in Terms of Each Metric for Different Regularization Parameters in $L_{\mathrm{CIEN}}$, on TNO

Table 4. Quantitative Results for the Ablation Study on TNO

Conclusions: In this letter, we propose a novel self-supervised learning-based fusion network for infrared and visible images. A WAE is designed to perform an auxiliary task, namely weighted reconstruction of the source images, so that the multi-level features from its encoder can be used for exchanged-information-based fusion contribution estimation in the CIEN. In particular, following the principle of information exchange, we construct an information exchange module with a CNN, which allows the CIEN to easily estimate the fusion contributions of the source images. Moreover, we use the weighting strategy and a constraint on the information exchange to design a hybrid loss that effectively trains our SSL-WAEIE. Extensive experiments on two public datasets verify the superiority of our method over other state-of-the-art competitors.

Acknowledgments: This work was supported by the National Natural Science Foundation of China (61966037, 61833005, 61463052), China Postdoctoral Science Foundation (2017M621586), Program of Yunnan Key Laboratory of Intelligent Systems and Computing (202205AG070003), and Postgraduate Science Foundation of Yunnan University (2021Y263).

Published in IEEE/CAA Journal of Automatica Sinica.