Anomaly Detection Based on Log Templates

2018-10-20

王智远 任崇广 陈榕 秦莉

Abstract: Log analysis is an important part of cloud computing platform management and aims to guarantee the efficiency and availability of cloud platforms. Cloud platform logs, however, are both complex and massive. This paper proposes a log anomaly detection method. First, log templates are formed by text clustering based on edit distance. Then, feature vectors are constructed from the templates, and weak classifiers are trained to produce score feature vectors. Finally, the score feature vectors are combined with Random Forest to build a strong classifier on top of the weak classifiers. Experimental results show that the mutual information between the real templates and the mined log templates is 0.91, indicating that the two are close, and that the strong classifier built with Random Forest achieves the best classification accuracy on the data sets, up to 0.94.

Introduction

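Cloud computing and big data technologies provide a solution for distributed computing. In a large distributed system, an anomaly or a request timeout can cause heavy losses, and the scale and complexity of such systems pose a severe challenge to maintenance staff [1]. A running distributed system produces a large volume of logs that record its state and execution trace, and maintenance staff can use these logs to track the running state of the system and find problems [2]. Because the log volume is so large, however, inspecting logs manually is time-consuming and laborious, so extracting useful information from massive logs has become a key topic in system maintenance.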

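Log-based anomaly detection mainly consists of the following steps: log collection, log parsing, feature extraction, and anomaly detection [3]. Reference [4] starts from the source code, generates the log-related AST (abstract syntax tree) to parse out log templates, and then applies PCA to the templates to find outliers for anomaly detection. The advantage of this model is that it takes message-type features into account, so fault detection is accurate. Reference [5] found experimentally that communication between processes becomes more frequent and active when the system is abnormal, and on that basis proposed an anomaly detection model based on sudden surges or abrupt changes in log message frequency; the model is easy to implement and apply. Reference [6] extracts log templates using text and temporal similarity and builds a CFG (control flow graph) from them to detect anomalies in each workflow; the model supports parallelization and suits large data sets. Reference [7] predicts faults using time series and probabilistic statistical methods: it first converts log text into structured data with rule-based log processing, extracts variable values to build features, predicts the numerical variables in the logs with different time-series algorithms, and then detects anomalies with a rule-based probabilistic model. Its experiments show that the ARMA (autoregressive moving average) model [8] performs well when the data volume is large. Reference [9] detects anomalies with a probabilistic model: it serializes logs using log templates, uses a Bayesian model to find the logs within a time window that have the highest probability of being associated with error logs, and assembles them into fault log sequences; during online detection, a log sequence that matches a fault sequence is judged anomalous. Reference [10] applies a classification model to fault prediction, building feature vectors from log templates and using an SVM (support vector machine) model [11] for training and prediction. Most log analysis work in China focuses on Web server logs, while analysis of cloud environment logs remains rare; this paper therefore proposes an analysis method for cloud environment logs.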

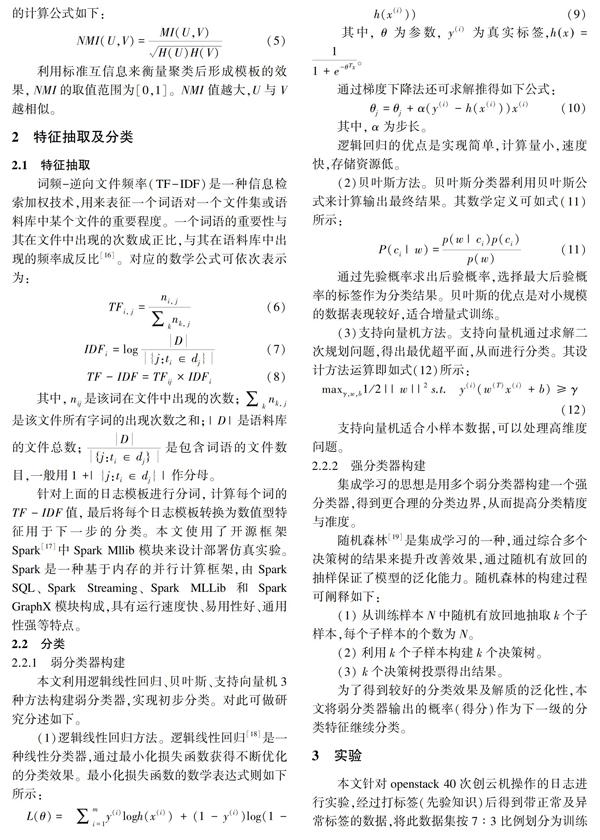

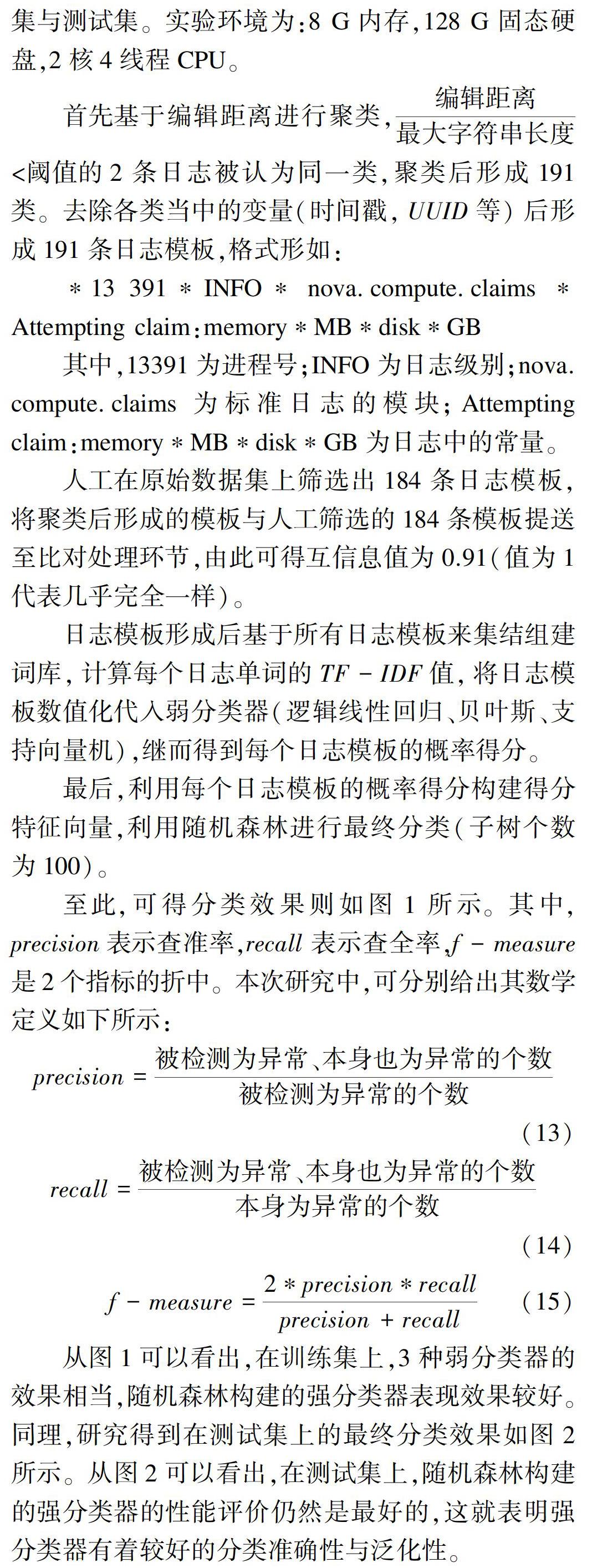

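This paper first performs basic cleaning of the logs, then clusters the logs by Levenshtein distance (edit distance) and forms log templates. TF-IDF (term frequency-inverse document frequency) is applied to the log templates to generate feature vectors; Bayes, logistic regression, support vector machine, decision tree, and other classifiers are used to build score feature vectors; and the score feature vectors are then combined with random forest to build a strong classifier. In the evaluation, mutual information measures the association between the real templates and the templates formed by clustering, accuracy and recall measure classifier performance, and the classification results of the various classifiers are presented at the end.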
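As an illustration of the TF-IDF step, the following is a minimal sketch only. It assumes that each log template is treated as one document and that whitespace-separated tokens are the terms; it uses the plain weighting tf × log(N / df), which is one common variant and may differ from the one used in the experiments. The function name `tfidf_vectors` and the sample templates are hypothetical.

```python
import math
from collections import Counter


def tfidf_vectors(documents):
    """Return (vocabulary, vectors) with one TF-IDF vector per document.

    Assumption: tf = term count / document length, idf = log(N / df).
    """
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({tok for doc in tokenized for tok in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    doc_freq = Counter(tok for doc in tokenized for tok in set(doc))
    idf = {tok: math.log(n_docs / doc_freq[tok]) for tok in vocabulary}

    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        total = len(doc) or 1  # guard against empty documents
        vectors.append([counts[tok] / total * idf[tok] for tok in vocabulary])
    return vocabulary, vectors


# Hypothetical templates, used only to exercise the function.
templates = [
    "connection accepted from host",
    "connection closed by host",
    "disk usage above threshold",
]
vocab, vecs = tfidf_vectors(templates)
```

Terms that occur in every template receive weight 0 under this variant, so smoothed forms of the idf term are often preferred in practice.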

1 Log Template Mining

1.1 Edit Distance

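Edit distance is a method proposed by Levenshtein for measuring the similarity of strings [12]. It is the minimum number of operations needed to transform a source string S into a target string D, where the allowed operations are the insertion, deletion, and substitution of a single character.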
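The definition above can be sketched with the standard dynamic-programming formulation, keeping only two rows of the distance table. This is an illustrative implementation, not code from the paper; the function name `levenshtein` is an assumption.

```python
def levenshtein(source: str, target: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn `source` into `target`."""
    # The distance is symmetric, so swap to keep the DP row short.
    if len(source) < len(target):
        source, target = target, source

    # previous[j] = distance between the processed prefix of source and target[:j]
    previous = list(range(len(target) + 1))
    for i, s_char in enumerate(source, start=1):
        current = [i]  # distance from source[:i] to the empty string
        for j, t_char in enumerate(target, start=1):
            cost = 0 if s_char == t_char else 1
            current.append(min(
                previous[j] + 1,         # delete s_char
                current[j - 1] + 1,      # insert t_char
                previous[j - 1] + cost,  # substitute, or keep a match
            ))
        previous = current
    return previous[-1]


print(levenshtein("kitten", "sitting"))  # 3
```

The running time is proportional to the product of the two string lengths, which matters when every log line is compared against many others.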

1.2 Template Mining

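Logs contain both constants and variables, which makes it difficult to analyze unstructured logs directly. Template mining seeks a set of structured log entries that represents the original unstructured logs. Current template mining techniques fall into two categories: clustering-based template mining and heuristic mining [14-15]. This paper uses a template mining method based on edit distance. First, the logs are preprocessed by replacing variables (IP, UUID, etc.) with empty strings; then Algorithm 1 is used to cluster the logs by edit distance; finally, the log templates are formed. The code for Algorithm 1 is given below.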
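Algorithm 1 itself is not reproduced in this text, so the following is not the authors' code. It is only a minimal sketch of the steps described above, under stated assumptions: the regular expressions for IP and UUID, the greedy single-pass clustering strategy, the normalization of the distance by the longer string, and the threshold value 0.3 are all hypothetical choices made for illustration.

```python
import re

# Hypothetical patterns for the variable fields named in the text (IP, UUID).
UUID_RE = re.compile(
    r"\b[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}"
    r"-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}\b"
)
IP_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")


def preprocess(line):
    """Replace variables with empty strings, then collapse whitespace."""
    line = UUID_RE.sub("", line)
    line = IP_RE.sub("", line)
    return " ".join(line.split())


def edit_distance(a, b):
    """Character-level Levenshtein distance (two-row dynamic programming)."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(previous[j] + 1,
                               current[j - 1] + 1,
                               previous[j - 1] + (ca != cb)))
        previous = current
    return previous[-1]


def cluster_logs(lines, threshold=0.3):
    """Greedy clustering: join the first cluster whose representative is
    within `threshold` normalized edit distance, else start a new cluster.
    Returns a list of (template, member_lines) pairs."""
    clusters = []
    for raw in lines:
        message = preprocess(raw)
        for template, members in clusters:
            longest = max(len(message), len(template), 1)
            if edit_distance(message, template) / longest <= threshold:
                members.append(raw)
                break
        else:
            # No existing cluster is close enough: this message seeds a new one.
            clusters.append((message, [raw]))
    return clusters


# Hypothetical log lines, used only to exercise the sketch.
sample = [
    "Accepted connection from 10.0.0.1",
    "Accepted connection from 192.168.1.20",
    "Deleting instance 123e4567-e89b-12d3-a456-426614174000 failed",
]
clusters = cluster_logs(sample)
```

In this sketch the first message of each cluster serves as its template. The manually chosen `threshold` plays the role of the hand-set threshold that the conclusion identifies as a limitation.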

4 Conclusion

The main results of this work are as follows.

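(1) This paper clusters logs by edit distance to form log templates, builds TF-IDF feature vectors on the templates, and builds a strong classifier on top of weak classifiers. Experiments show that, on both the training set and the test set, the strong classifier built from weak classifiers brings a considerable improvement in both recall and precision.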

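(2) When extracting log templates, the threshold was set manually, which is inflexible; the classifiers likewise rely on manually set thresholds. How to set these thresholds automatically is therefore a direction for future research.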

References

[1] FU Qiang, LOU Jianguang, WANG Yi, et al. Execution anomaly detection in distributed systems through unstructured log analysis[C]// 2009 Ninth IEEE International Conference on Data Mining. Miami, Florida:IEEE, 2009:149-158.

[2] TANG Liang, LI Tao, PERNG C S. LogSig: Generating system events from raw textual logs[C]// Proceedings of the 20th ACM International Conference on Information and Knowledge Management. Glasgow, Scotland, UK:ACM, 2011:785-794.

[3] HE Shilin, ZHU Jieming, HE Pinjia, et al. Experience report: System log analysis for anomaly detection[C]// 2016 IEEE 27th International Symposium on Software Reliability Engineering (ISSRE). Ottawa, ON, Canada:IEEE, 2016:207-218.

[4] XU Wei, HUANG Ling, FOX A, et al. Detecting large-scale system problems by mining console logs[C]// Proceedings of the ACM SIGOPS 22nd symposium on Operating Systems Principles. Big Sky, Montana, USA:ACM, 2009:117-132.

[5] LIM C, SINGH N, YAJNIK S. A log mining approach to failure analysis of enterprise telephony systems[C]// IEEE International Conference on Dependable Systems and Networks with FTCS and DCC. Anchorage, AK, USA:IEEE, 2008:398-403.

[6] NANDI A, MANDAL A, ATREJA S, et al. Anomaly detection using program control flow graph mining from execution logs[C]// Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Francisco, California, USA:ACM, 2016:215-224.

[7] SAHOO R K, OLINER A J, RISH I, et al. Critical event prediction for proactive management in large-scale computer clusters[C]// Proceedings of the ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Washington, DC, USA:ACM, 2003:426-435.

[8] TINGSANCHALI T, GAUTAM M R. Application of tank, NAM, ARMA and neural network models to flood forecasting[J]. Hydrological Processes, 2015, 14(14):2473-2487.

[9] WATANABE Y, MATSUMOTO Y. Online failure prediction in cloud data centers[J]. Fujitsu Scientific & Technical Journal, 2014, 50(1):66-71.

[10] FRONZA I, SILLITTI A, SUCCI G, et al. Failure prediction based on log files using random indexing and Support Vector Machines[J]. Journal of Systems and Software, 2013, 86(1):2-11.

[11] KEERTHI S S, SHEVADE S K, BHATTACHARYYA C, et al. Improvements to Platt's SMO algorithm for SVM classifier design[J]. Neural Computation, 2001, 13(3):637-649.

[12] LEVENSHTEIN V I. Binary codes capable of correcting deletions, insertions and reversals[J]. Soviet Physics Doklady, 1966, 10(1):707-710.

[13] OKUDA T, TANAKA E, KASAI T. A method for the correction of garbled words based on the Levenshtein metric[J]. IEEE Transactions on Computers, 1976, C-25(2):172-178.

[14] MAKANJU A A O, ZINCIR-HEYWOOD A N, MILIOS E E. Clustering event logs using iterative partitioning[C]// Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Paris, France:ACM, 2009:1255-1264.

[15] VAARANDI R. A data clustering algorithm for mining patterns from event logs[C]// 3rd IEEE Workshop on IP Operations & Management. Kansas City, MO, USA:IEEE, 2003:119-126.

[16] AIZAWA A. An information-theoretic perspective of tf-idf measures[J]. Information Processing & Management, 2003, 39(1):45-65.

[17] ZAHARIA M, CHOWDHURY M, FRANKLIN M J, et al. Spark: Cluster computing with working sets[C]// HotCloud'10 Proceedings of the 2nd USENIX conference on Hot topics in cloud computing. Boston, MA:ACM, 2010:10.

[18] HARRELL F E. Regression modeling strategies: with applications to linear models, logistic regression, and survival analysis[M]. New York:Springer-Verlag, 2001.

[19] LIAW A, WIENER M. Classification and regression by Random Forest[J]. R News, 2002, 2(3):18-22.