Cyber-Physical-Social System Between a Humanoid Robot and a Virtual Human Through a Shared Platform for Adaptive Agent Ecology

2018-01-26 Mizanoor Rahman

S. M. Mizanoor Rahman

I. INTRODUCTION

Present applications of humanoid robots (HRs) include various activities such as therapy for abnormal social development and autism [1], education and training [2], security and rescue operations [3], social service and business [4], entertainment [5], industrial operations [6], and so forth. However, applications of HRs, especially in accomplishing social tasks in cooperation with or for the welfare of humans, are limited by their anthropomorphism, intelligence, autonomy, and social, behavioral, perceptual and communicational skills [7]. HRs may possess capabilities of perceiving humans’ affective states, expressions, intentions and actions, interpreting perceptions based on contextual information, communicating like humans, and acting based on prevailing situations [8], [9].

On the other hand, virtual humans (VHs) are software-generated, human-like animated artificial characters [10], and present applications of VHs include various tasks such as serving as a virtual patient, tutor, student, trainee, advertiser, and so forth [10]–[12]. There are increasing contributions of VHs toward anatomy education, psychotherapy, and biological and biomedical research [13], [14]. However, it appears that VHs cannot yet perform beyond their virtual environments. It is believed that the scope of the contributions of VHs could be augmented if they could be used to perform real-world tasks for humans or to cooperate with humans to perform real-world tasks. The VHs may be enriched with various real-world social functions and attributes for interactions with their human counterparts. Such social functions and attributes may include exhibiting human-like intelligence, motion, action, emotion, gesture and expression, communicating and interacting with humans, memorizing facts and retrieving them with dynamic contexts, and demonstrating reasoning and decision-making abilities based on their perceptions [15]. However, such real-world intelligent features of VHs are still not observed.

Based on the knowledge of state-of-the-art HRs and VHs, it is believed that a HR and a VH have many objectives and performance requirements in common. HRs and VHs may separately cooperate with humans and also with each other to perform real-world tasks. Networked and bilaterally communicating HRs and VHs cooperating in a coordinated and goal-oriented way may perform better than an individual HR or VH. Dynamic collaboration between HRs and VHs seems to be superior to augmented reality for robots, where a robot may follow its virtual counterpart but dynamic bidirectional collaboration between them is limited [16]. A comprehensive framework is necessary for real-world collaboration between a HR and a VH. A well-defined evaluation scheme is necessary to evaluate the collaboration performance [17], [18]. However, investigations on collaborations between HRs and VHs have not received much attention yet except for a few preliminary initiatives, e.g., [19]–[21]. A common platform may be helpful to implement real-world collaboration between a HR and a VH, e.g., it may reduce the volume of software development and ease the animation. However, a suitable framework for collaboration between a HR and a VH, an initiative to develop a common collaboration platform, and proper evaluation schemes for the collaboration have still received little emphasis [19]–[21].

Agent ecology rests on the idea that complex tasks may not be performed properly by a single agent, however capable. Instead, the tasks may be performed through the cooperation of two or more networked, bilaterally communicating and mutually dependent agents in a coordinated and goal-oriented way [21]. Building smart spaces in this way may reduce application complexity and costs, and enhance the individual values and contributions of the agents, enabling new services that may not be performed by any agent individually. It is believed that a cyber-physical system (CPS) between a HR and a VH through a common platform may help form the proposed ecology between the agents for benefiting humans [22]. Humans also need to be kept in the loop as the system is proposed for the benefit of humans. However, an initiative to develop such an adaptive ecology between a HR and a VH through a human-in-the-loop cyber-physical-social system (CPSS) has not been taken yet [23].

Trust of one agent in another agent is mandatory for collaborative tasks [24]. Human trust in collaborating robots has been studied [25], but human trust in collaborating VHs as well as trust between two artificial agents of heterogeneous realities (e.g., HR’s trust in VH and VH’s trust in HR) have not been studied. The trust in each other may be used to plan their role and autonomy in their collaboration. Appropriate computational models of trust are necessary to measure real-time trust between a HR and a VH. However, such trust modeling and measurement methods have not been proposed yet. On the other hand, in mixed-initiative cases, turns in collaboration are negotiated between participating agents rather than solely determined by a single agent [26]. Mixed initiatives between a HR and a VH may make their collaboration more participatory, intuitive and natural, which may enhance their individual contributions to the collaboration. The bilateral trust status between a HR and a VH may trigger their turn-taking in the mixed-initiative collaboration. However, the possibility of such bilateral trust-triggered mixed initiatives in the collaboration between a HR and a VH has not been investigated yet.

Motivated by the aforementioned background and limitations, the preliminary initiatives proposed in [19]–[21] are integrated and extended in this article. The broad objective is to propose a trust-triggered mixed-initiative adaptive social ecology between a HR and a VH through a CPSS-based common platform in order to collaboratively perform a real-world common social task (e.g., searching for a hidden object). A framework is developed to implement the collaboration. Bilateral trust models between a HR and a VH are derived, and real-time trust measurement methods are proposed. Trust-triggered mixed initiative is incorporated in the collaboration. A comprehensive scheme is developed to evaluate the collaborating agents and their collaboration. The results are proposed for use in developing an adaptive social ecology comprising intelligent agents of heterogeneous realities to assist humans in real-world tasks, e.g., assisted living.

II. THEORETICAL CONCEPTS: CPSS AND AGENT ECOLOGY

A. CPS With Applications in Robotics and CPSS

A cyber-physical system, as in Fig. 1(a), refers to integrating a cyber system (3C: computing, communication, and control components that are discrete) and physical facilities (natural and human-made physical systems governed by the laws of physics and operated in continuous time) at all scales and levels to realize the engineered systems. A CPS may usually exceed an ordinary system in terms of functionality, controllability, efficiency, safety, autonomy, usability, stability, performance, transparency, security, scalability, robustness, adaptability, reliability, intelligence, energy efficiency, operability and coordination [22], [23]. There are feedback loops (e.g., tactile, force, vision) in the CPS where the physical processes may affect the 3C processes and vice versa [22], [23]. The goals of research in CPS are to understand the fundamental science and to develop rapid and reliable design and integration techniques of CPS applicable to a wide variety of application domains [22], [23].

Fig. 1. Fundamental concepts of (a) the CPS, and (b) the CPSS.

Robotic systems are an important class of CPS. The ability of robots to interact intelligently with the world depends on embedded 3C processes and on perception of the world around them. In the near future, robots will need to be telerobots under the CPS. The robots will get intelligence over the air and functionalities through networking. The sensors, actuators, processors, databases, control, etc. will work together without the need to be collocated [22], [23]. In such cases, robust controls will be needed to deliver the performance of robotic systems under CPS in the face of real-world problems such as network dynamics, jitter, delay, congestion, packet loss, instabilities, etc. Power requirements in mobility, manipulation, computation and communication for robotic systems need to be addressed. However, opportunities in CPS research that can advance robotics research have not been investigated properly.

The robotic systems under the CPS will have significant interactions with humans. Hence, the notion of trust between humans and robots will need to be established [24]. As the CPS serves humans, incorporation of humans in the CPS is necessary, which gives rise to the CPH (cyber-physical human) system involving human factors and interactions between humans and the cyber and/or physical systems. The CPH is then further broadened by integrating more human/social factors, which forms the CPSS (Fig. 1(b)) [23]. However, a CPSS involving robots, especially one integrating a HR and a VH for real-world tasks for humans, has never been investigated.

B. Adaptive Agent Ecology

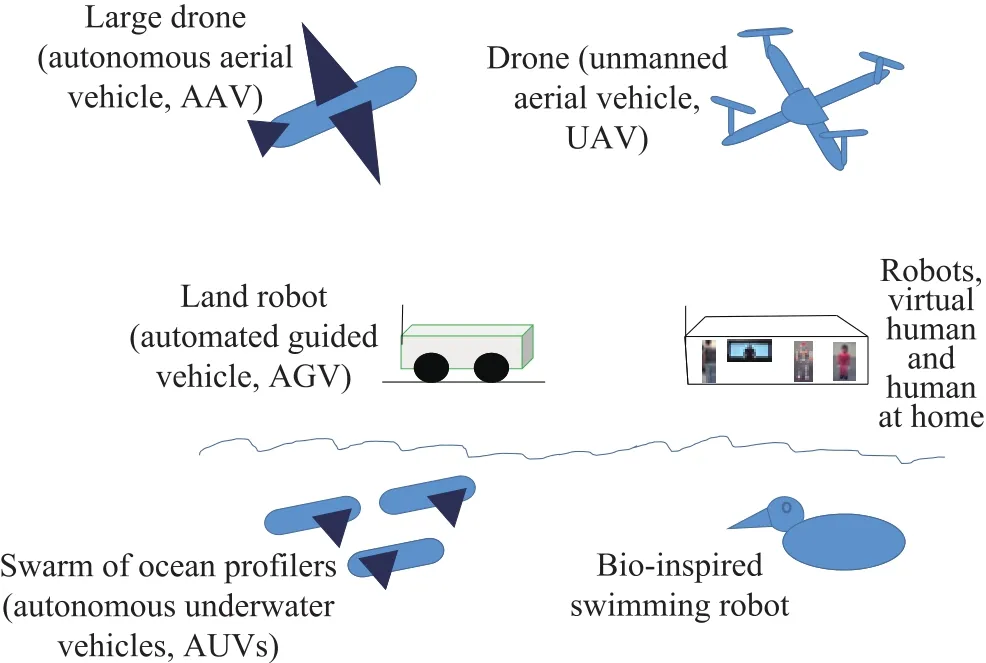

Ecology is a branch of biology that deals with relations and interdependencies of organisms to one another and to their physical surroundings. Inspired by biology, the central concept behind the artificial agent ecology is that complex social tasks may be performed through the cooperation of heterogeneous, networked, and bilaterally communicating and interacting agents pervasively embedded in everyday environments. Such an artificial agent ecology performing in a coordinated, goal-oriented, autonomous and adaptive way may be useful in unstructured social environments, e.g., households [21], [27]. As Fig. 2 illustrates, agents such as AAV, AGV, AUVs, UAV and swimming robot, being connected through appropriate networks, may communicate with each other directly, via humans, or with other human-like artificial agents such as HRs and VHs for exchanging information (e.g., travel status, geographical information, weather) and functional instructions, and for providing/receiving support (security, emergency) in the course of their own activities. Building smart spaces (cities, homes) in this way may reduce application complexity and costs of the agents as well as enhance individual values and contributions, enabling services that may not be performed by an individual agent alone [21]. The agent ecology needs learning and adapting abilities, and needs to gain social acceptance and produce social impacts [21]. The ecology demands integrated software platforms, intelligent controllers and information processing algorithms (perception, attention), robust communications, actuators, sensors, etc. However, effective initiatives toward such an ecology have rarely been taken so far. In particular, an agent ecology implemented through a CPSS, which may integrate the benefits of both the ecology and the CPSS, seems promising but has not received much attention yet.

Fig. 2. An artificial adaptive social ecology among many networked and bilaterally communicating intelligent agents in a smart city (or space).

III. COLLABORATION FRAMEWORK AND EXPERIMENTAL SETUP

A. Framework for Collaboration in the Agent Ecology

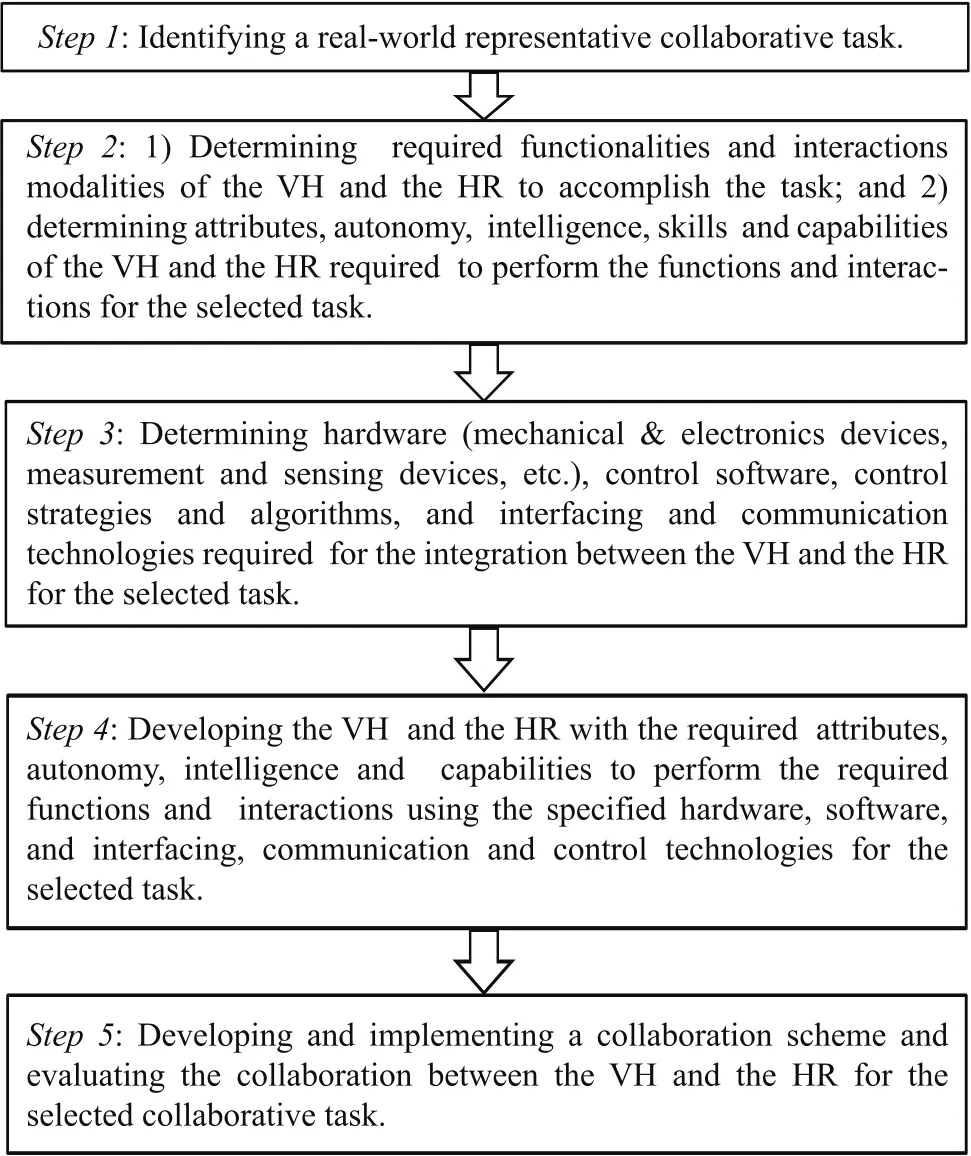

The proposed ecology illustrated in Fig. 2 is vast and ambitious, and it needs sophisticated technologies and facilities. As a representative (partial) initiative toward such a robust ecology, only the ecology in the form of collaboration between a HR and a VH mediated by humans through a CPSS platform for a real-world task is investigated here. A detailed framework is developed to perform the collaboration, as in Fig. 3 [19]–[21].

Fig. 3. A 5-step framework (guidelines) to implement the collaboration between a humanoid robot and a virtual human for a real-world task.

B. Experimental Setup

According to Step 1 of the framework (Fig. 3), a representative collaborative task in a home environment was identified, as shown in Fig. 4(a) [19]–[21]. For the task, 10 rectangular black paper boxes are kept in a room (room 2): 5 boxes are put on the left side, and the remaining boxes are put on the right side. The experimenter (human 1) travels to room 2 and hides a small object inside any of the 10 boxes. A HR stands at “P1” and a VH appears on a screen at “P3”. A human (human 2) stands behind the HR at “P2”. The location of the hidden object is kept confidential from human 2. It is assumed that human 2 wants to get the hidden object and thus asks the artificial agents (VH, HR) to find it for him/her. The agents begin collaborating to search for the object for human 2. Here, human 2 is the beneficiary (service receiver) of the collaboration between the service providers (VH, HR). One of the artificial agents is the “master/leader”, who knows the location of the hidden object. The other agent does not know the location, and thus acts as the “follower” of the master agent’s instructions to find the object [28], [29]. Here, “collaboration” means the joint action of the master and the follower agents toward a common goal (finding the hidden object) for human 2. The computers with all required software for controlling the HR and the VH through the common platform are put in room 1. There is a sound system near P3 in room 2 to transmit the voice of the VH. Kinect cameras are put in room 2. The actual experimental setup for room 2 is shown in Fig. 4(b) [19]–[21].

Remark 1: Human 2 may find the hidden object himself/herself instead of receiving support from the VH and the HR. However, let us consider cases where 1) human 2 has no knowledge of the location of the object, and 2) human 2 is disabled or busy and thus wants the object to be searched out by the agents through their collaboration (assisted living).

Remark 2: One agent (VH or HR) may find the object alone without collaborating with the other. However, let us consider the cases where 1) one agent has no knowledge about the correct location of the hidden object; 2) the agent that has knowledge about the object is not physically present at the site, appears through telepresence, and helps a local agent to find the object; 3) one agent is less intelligent but more physically skillful at finding the object, and vice versa; and 4) as both agents are artificial with limited skills, intelligence and capabilities, the collaboration between them may benefit each other with complementary attributes, intelligence and skills, which may ease the searching task.

Fig. 4. (a) Layout of the rooms in a home where the real-world collaboration between a HR and a VH takes place to find the hidden object. (b) Interior view of room 2 that shows the actual collaboration environment.

IV. DEVELOPING THE INTELLIGENT AGENTS AND THE COMMON PLATFORM FOR INTEGRATING THE AGENTS UNDER CPSS

A. Requirements for Integrating a HR and a VH

Kapadia et al. identified a few requirements and explained the key limitations in control, representation, locomotion, perception and autonomy of the state-of-the-art VHs [30]. These requirements and limitations need to be considered when generating a VH for successful interaction with a HR. A few additional requirements for generating interactive VHs and HRs were introduced in [31]. Effective integration of a HR with a VH for the proposed task also needs to satisfy a set of other requirements as given in Step 2 of the collaboration framework (Fig. 3). Thus, the HR and the VH need to be enriched with: 1) a set of functionalities and skills, e.g., locomotion, actions, gesture, voice, gaze, facial expression, manipulation, attention, bilateral communication; 2) a set of attributes, e.g., embodiment, anthropomorphism, stability; 3) intelligence, e.g., perception, recognition and decision-making; and 4) interaction abilities and modes, e.g., auditory (speech), visual, demonstrative, body language [8], [9], [32], [33]. The agents need to see and recognize each other, objects and the environment, speak and listen to the counterpart for verbal instructions about the search path for the hidden object, generate and recognize gestures to demonstrate/recognize the search path, etc. They may need to have mobility to search for the object and point it out when it is found. The agents need to be enriched with proper technologies, controls and decision-making methods and algorithms, interfaces, sensors and a communication platform to fulfill these requirements. They should be as human-like in appearance and performance as possible to enhance their social acceptance and impacts [7].

B. Developing the Intelligent Agents

According to Steps 3 and 4 of the framework (Fig. 3), the required hardware components, software packages, and control and communication technologies are determined, and the agents are developed using these facilities for the collaboration.

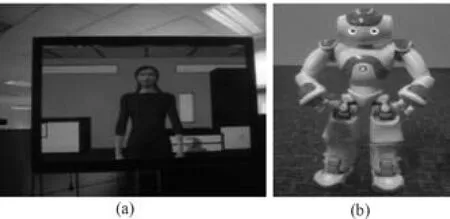

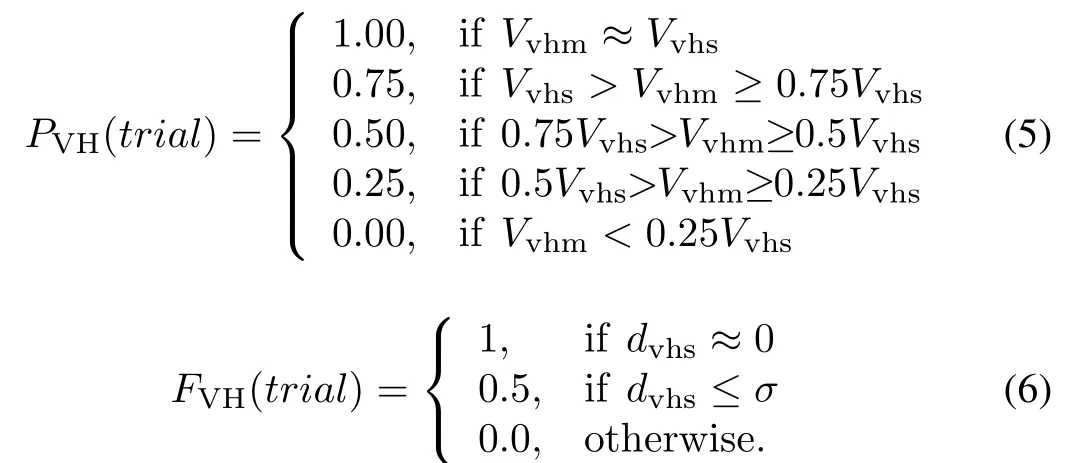

1) The VH: An intelligent VH is developed as shown in Fig. 5(a) [19]–[21]. The SmartBody system (http://smartbody.ict.usc.edu/) is used for animation and control of the VH. The model is developed based on the joint and skeleton requirements of SmartBody, and exported to Autodesk Maya 3D software (http://www.autodesk.com/). The VH specifications follow a biomimetic approach, e.g., hand movement and locomotion speeds, body dimensions, gesture trajectories, joint angles, etc. are determined with inspiration from those of humans [7]. For the graphical rendering, OGRE (http://www.ogre3d.org/) is used. Application programming interfaces (APIs) for the functions, e.g., actions, gesture, expressions, locomotion, recognition, etc. are developed and archived in the control server for the VH. For example, the functions include gestures (e.g., turn head, look at a position), text to speech, gaze, manipulation, locomotion (walk to a position), facial expressions (emotions), actions, etc. The VH is displayed on a screen as shown in Fig. 5(a).

Fig. 5. (a) Intelligent virtual human. (b) Intelligent humanoid robot.

Fig. 6. The common platform for the proposed collaboration integrating the HR and the VH (CIP-HA).

2) The HR: A NAO (http://www.aldebaran-robotics.com/en/) robot as in Fig. 5(b) is used in the collaboration [19]–[21]. APIs for functions (e.g., stand up, sit down, walk, wave hand, shake hand, grab and release an object, speech (text to speech), look at a position, point at something) and attributes are developed that make it intelligent and skillful for the collaboration. The control software and the APIs for the functions are archived in a robot control server. The HR is able to perceive the environment through sensors (e.g., vision cameras), make decisions based on adaptive rules and stored information, and react by talking, moving or showing emotions and gestures.

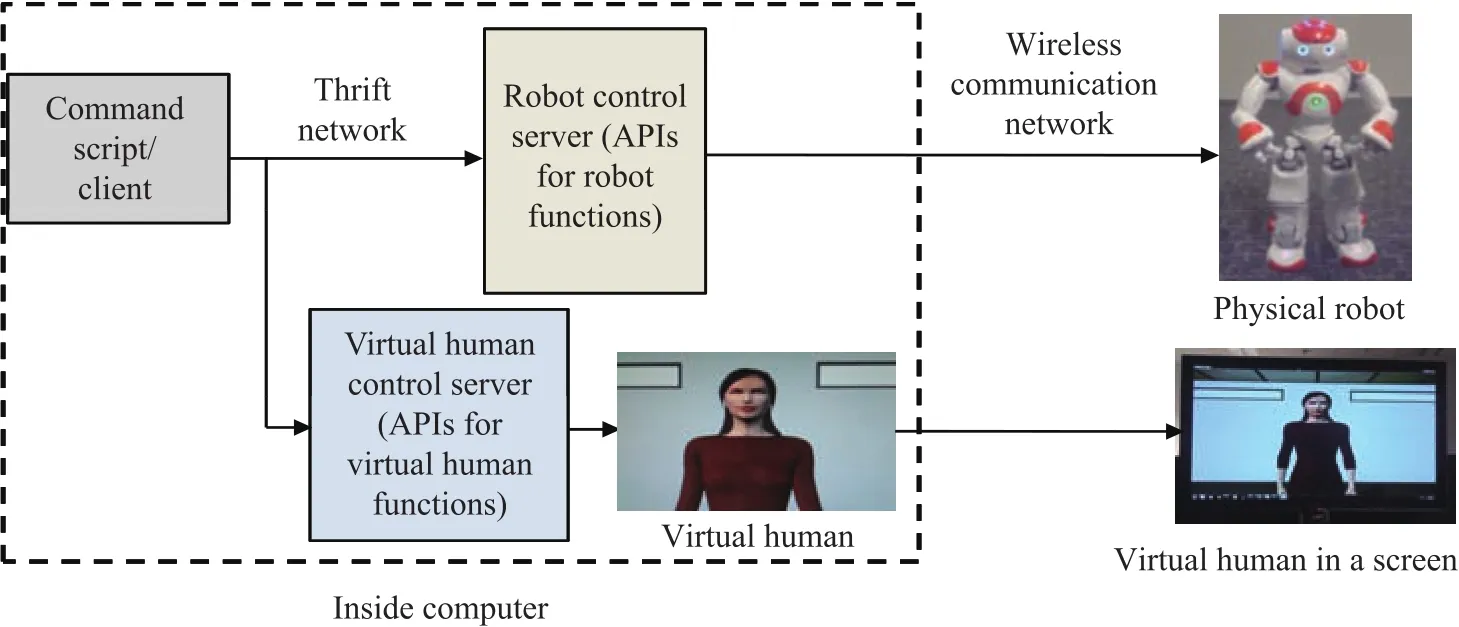

C. Developing the Common Communication Platform

A common communication platform to integrate the HR and the VH is developed and installed in a computer system as in Fig. 6 [19]–[21]. Animation of each function for the VH or the HR is commanded from a common client networked with the control server through a Thrift interface [34]. An RPC (remote procedure call) library handles the communication between the command script and the control server [35]. The modular and flexible RPC relies on a server/client relation allowing inter-process communication, which motivated its use. Thrift is preferred over the Robot Operating System (ROS) because ROS runs on Linux/CORBA and it is complex [36]. In contrast, Thrift is reliable, supports multiple platforms and languages, and performs well. The VH control server is connected to a display window within the computer or to an external screen. The HR control server is connected to the physical HR over a wireless network. The platform is named the “common interaction platform for heterogeneous agents (CIP-HA)”, and it can be used to operate agents of heterogeneous realities by specifying the agent during the function call.

Each agent has an individual control server containing function APIs that may be called in the client script. The APIs for the functions of the VH and the HR are generated in such a way that the functions are as similar as possible, which results in similar behaviors in the agents within the mechanical/physical limits for each particular function. For example, “point at something” is a function, and if it is called for the VH, the VH will show a posture pointing at something. Similarly, if this function is called for the HR, the robot will show a similar posture of pointing at something. The difference is that the VH will perform inside the screen, but the HR will perform physically. The collaboration is treated as an agent ecology (with limited agents, but more agents can be added to increase the capacity) as the agents depend on each other for the collaboration [21].
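The idea of calling one function name on either agent, with the agent specified at call time, can be sketched in Python as follows. This is a minimal illustration and not the CIP-HA implementation: the class names (`Agent`, `VirtualHuman`, `HumanoidRobot`, `CommonClient`), the function names and the agent keys are hypothetical, and the Thrift/RPC transport between the client and the control servers is replaced here by direct method calls.

```python
from abc import ABC, abstractmethod


class Agent(ABC):
    """Function set shared by both agents (hypothetical names)."""

    def __init__(self, name):
        self.name = name
        self.log = []  # actions performed, kept for inspection

    @abstractmethod
    def point_at(self, target):
        """Show a pointing posture toward the target."""

    @abstractmethod
    def say(self, text):
        """Speak the text (text to speech)."""


class VirtualHuman(Agent):
    def point_at(self, target):
        self.log.append(f"{self.name} points at {target} from inside the screen")

    def say(self, text):
        self.log.append(f"{self.name} says via the sound system: {text}")


class HumanoidRobot(Agent):
    def point_at(self, target):
        self.log.append(f"{self.name} physically points at {target}")

    def say(self, text):
        self.log.append(f"{self.name} says via its speaker: {text}")


class CommonClient:
    """Stand-in for the common client: the agent is named at call time."""

    def __init__(self):
        self._agents = {}

    def register(self, key, agent):
        self._agents[key] = agent

    def call(self, key, function, *args):
        agent = self._agents[key]  # raises KeyError if the agent is unknown
        return getattr(agent, function)(*args)


client = CommonClient()
client.register("VH", VirtualHuman("VH"))
client.register("HR", HumanoidRobot("HR"))
for key in ("VH", "HR"):
    client.call(key, "point_at", "box 3")  # same call, different embodiment
```

Keeping the function names identical across both agent classes is what lets a single client script drive either agent; only the embodiment behind the call differs.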

D. The CPSS Between the VH and the HR

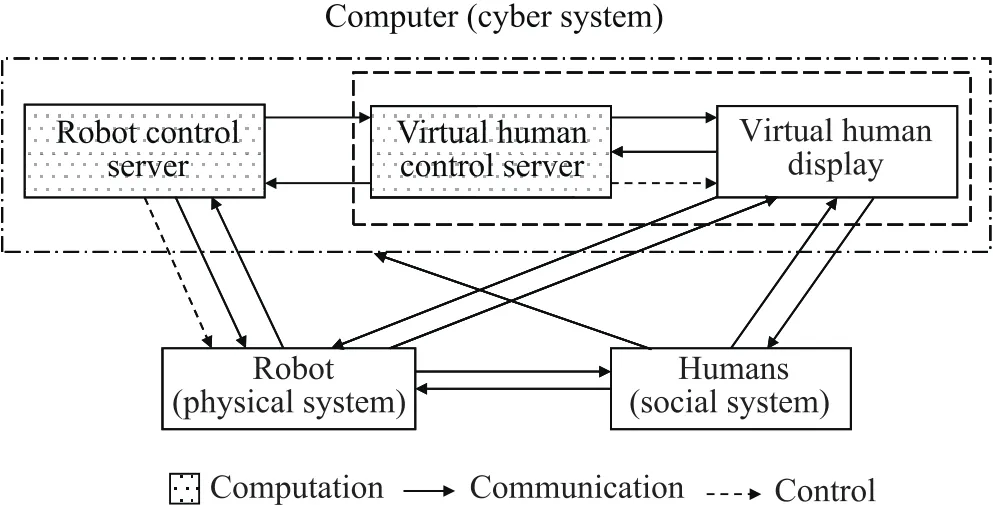

Based on the concepts in Section II, the integration between the VH and the HR through the common platform (Fig. 6) may be formulated as a CPSS as depicted in Fig. 7 [23]. In Fig. 7, the pattern-filled blocks indicate computation, and the black and dashed arrows indicate communication and control among cyber, physical and social systems, respectively. Here, human 1 (experimenter) and human 2 (service beneficiary) along with their cognitive abilities and mental world/space form the societal system [37]. Alternatively, the VH and the HR may be treated as the physical systems, and the model-based trust computation may be treated as the cyber system [22].

Fig. 7. CPSS between the VH and the HR through the common communication platform.

V. BILATERAL TRUST-TRIGGERED MIXED-INITIATIVE COLLABORATION SCHEME

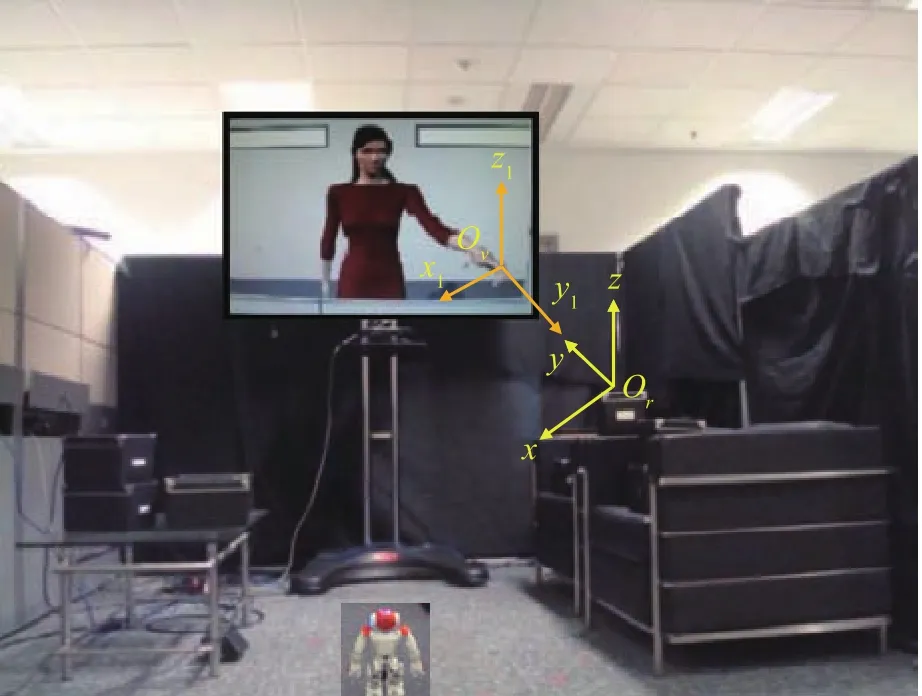

As per Step 5 of the framework in Fig. 3, a collaboration scheme between the HR and the VH is proposed in this section. As Fig. 8 illustrates [19]–[21], for the real-world position of each box (e.g., $O_r = [x, y, z]^T$), the corresponding position of the VH’s fingertip in the virtual world (e.g., $O_v = [x_1, y_1, z_1]^T$) appropriate for pointing at the real-world box is determined through proper calibration and trial and error.

A. The Collaboration Procedures

The experimenter (human 1) hides an object inside any of the 10 boxes and inputs the position information of the object/box (e.g., $O_r = [x, y, z]^T$) to the computer system. To find the hidden object through the collaboration between the artificial agents, one agent (either the HR or the VH) should act as the master and the other agent as the follower. Let us first consider the case where the VH is the master and the HR is the follower. Then, the opposite case is considered.

1) The VH is the Master and the HR is the Follower: As Fig. 8 shows, during the collaboration, the VH appears on the screen and shows some gestures (e.g., stands straight, looks at the HR standing in front of her based on head tracking, shows gaze/attention at the HR) and emotional expressions (e.g., smiles at the HR). The VH also uses some verbal expressions (speech), e.g., the VH says, “Hi HR! I will help you find the hidden object, follow me”. Being the master, the VH inherits the position information of the target object/box in the real world, e.g., $O_r = [x, y, z]^T$, from the computer system. Based on this information, the VH turns her face toward the correct location of the box containing the hidden object, moves (walks) toward the target box, and uses the pre-specified position and posture of her fingertip (e.g., $O_v = [x_1, y_1, z_1]^T$) in the virtual environment appropriate for pointing at the real-world target box, as shown in Fig. 8. Then, the VH, based on pre-stored information, uses additional verbal instructions to clarify the position of the hidden object to the HR. For example, the VH says, “Hi HR, the object is inside that box. The box containing the object is lying on a sofa closer to the screen. It is on top of another box”.

Fig. 8. Arrangement of the 10 boxes, positions of the VH and the HR in the room, and an example of mapping between the pointing (fingertip) position of the VH in the virtual environment and the corresponding position of a target box in the physical environment.

Then, the follower agent (HR) tries to identify the correct location of the box based on the instructions it receives from the master agent (VH). The VH’s fingertip position may be recognized by a Kinect camera or by the vision system embedded in the HR head, which may then help determine the corresponding position of the real-world box (target position for the HR). However, this recognition procedure is not sufficiently reliable or robust. Hence, the VH’s fingertip position in the virtual environment is shared with the HR through the computer system, which helps the HR determine the corresponding position of the target box in the real-world space based on the preplanned mapping (Fig. 8). Once the target position is determined, the HR uses some verbal expressions, e.g., it says, “Hi VH! Thank you for instructing the location of the object, now I may try to find it”. Then, the HR shows some gestures: it turns its face toward the target position, walks close to the target, stops walking, looks at the targeted position (box), points at the box and says, “I have found the box where the object may exist, thank you VH for your help”. In fact, the HR cannot open the box due to the limitation of its skills at the current stage. Hence, the experimenter (human 1) opens the pointed box on behalf of the HR and checks whether the object exists inside the box.
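The preplanned mapping of Fig. 8 can be pictured as a calibrated lookup table that is read in both directions: the master VH looks up the fingertip position for a known box, and the follower HR recovers the real-world target from the shared fingertip position. The Python sketch below is illustrative only. The table entries, the units, the matching tolerance and the function names are hypothetical, and a nearest-neighbour match is assumed here as one simple way to tolerate small numerical differences.

```python
import math

# Hypothetical calibration table (metres): for each box, the real-world
# target O_r and the VH fingertip position O_v that points at it.
CALIBRATION = {
    "box_1": {"O_r": (1.20, 0.40, 0.30), "O_v": (0.35, 0.10, 0.80)},
    "box_2": {"O_r": (1.20, 0.40, 0.60), "O_v": (0.35, 0.25, 0.80)},
    "box_3": {"O_r": (2.10, 0.40, 0.30), "O_v": (0.60, 0.10, 0.75)},
}


def fingertip_for_box(box_id):
    """Master VH side: fingertip position used to point at a given box."""
    return CALIBRATION[box_id]["O_v"]


def target_for_fingertip(o_v, tolerance=0.05):
    """Follower HR side: map a shared fingertip position to a box.

    Returns (box_id, O_r) for the nearest calibrated fingertip position,
    or None if nothing lies within the tolerance.
    """
    best_id, best_dist = None, float("inf")
    for box_id, entry in CALIBRATION.items():
        dist = math.dist(o_v, entry["O_v"])
        if dist < best_dist:
            best_id, best_dist = box_id, dist
    if best_dist > tolerance:
        return None
    return best_id, CALIBRATION[best_id]["O_r"]


shared = fingertip_for_box("box_2")    # the VH points at box 2
result = target_for_fingertip(shared)  # the HR recovers the target
```

The same table supports the opposite role assignment: a measured HR fingertip position could be matched against the `O_r` entries to obtain the VH fingertip target.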

2) The HR is the Master and the VH is the Follower: A similar procedure applies if the HR is the master and the VH is the follower. In this case, the HR and the VH stand face to face as in Fig. 8. At the beginning, the HR uses gestures and verbal expressions similar to those the VH uses in its role as the master agent. Being the master, the HR inherits the position information of the target object/box in the real world, e.g., $O_r = [x, y, z]^T$. Then, the HR moves close to the target position and points at the target box. An inertial measurement unit (IMU) attached to the fingertip of the HR hand can measure the position of the fingertip, which can be passed to the VH through the computer system. Then, the corresponding fingertip position of the VH can be determined based on the preplanned mapping (Fig. 8), which is the target position for the VH. Then, the VH follows the instructions of the HR in the same way as the HR (as a follower) follows the instructions of the VH. The VH moves toward the target position (within the screen), points from the virtual world at the target box in the physical world as illustrated in Fig. 8, and then says, “Hi HR! I have found the box, the box is that one where the object may exist”. The VH cannot open the box as it cannot come out of the screen. Hence, the experimenter (human 1) opens the pointed box on behalf of the VH and checks whether the object exists inside the box (in other words, human 1 checks whether the VH points at the correct box based on the HR’s instructions). In the future, allied technologies may become available as peripheral devices of the VH, which may open the box at the discretion of the VH without help from human 1.

Once the object is found through the collaboration between the agents, the service beneficiary (human 2) may obtain the object. Here, the gestural, emotional and verbal expressions of the HR and the VH are used only to mimic human-human interactions and make the collaboration more natural [7].

B. Strategy of Determining Master and Follower Agent

Whether the VH or the HR acts as the master agent depends on the bilateral trust between them. The collaboration scheme, including switching of the master (leader)-follower role based on bilateral trust, is shown in Fig. 9 [19]–[21], [29], where $T_{\mathrm{VH2HR}}(\mathrm{trial})$ is the VH’s trust in the HR in a trial (a run), and $T_{\mathrm{HR2VH}}(\mathrm{trial})$ is the HR’s trust in the VH in that trial. The master takes the initiative to find the hidden object, and hence the initiatives also switch between the agents depending on bilateral trust. The collaboration is thus considered a mixed-initiative collaboration triggered by bilateral trust [26]. In a real implementation, switching between the agents’ roles as master or follower, as well as their turns of taking initiative, may be controlled by a finite state machine (FSM) model as shown in Fig. 10 [38]. When a new trial (run) starts, the roles of the agents as master and follower (as well as the role of taking initiative for collaboration) are decided based on the bilateral trust values of the agents in the immediately preceding trial (prior trial).

Fig. 9. Bilateral trust-triggered real-world mixed-initiative collaboration between a HR and a VH in a trial based on the trust values in the prior trial.

Fig. 10. Bilateral trust-triggered FSM model for switching the role of the agents as master or follower as well as for switching their turns of taking initiatives in the collaboration for a trial. The state can change only at the beginning of a trial based on the trust values of the agents in the prior trial.
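A two-state machine of the kind described for Fig. 10 can be sketched in Python as below. The exact switching condition of Fig. 9 is not reproduced in the text, so the rule used here is an assumption made for illustration: the agent that received the higher trust from its partner in the prior trial becomes the master, and a tie keeps the current roles. The names `Master`, `RoleFSM` and `start_trial` are hypothetical.

```python
from enum import Enum


class Master(Enum):
    VH = "VH"
    HR = "HR"


class RoleFSM:
    """Two-state machine; the state may change only when a trial starts."""

    def __init__(self, initial=Master.VH):
        self.master = initial

    @property
    def follower(self):
        return Master.HR if self.master is Master.VH else Master.VH

    def start_trial(self, t_vh2hr_prev, t_hr2vh_prev):
        """Decide the roles for the new trial from the prior-trial trust.

        Assumed rule (not taken from the source figures): the agent that
        is trusted more by its partner leads; equal trust keeps the roles.
        """
        if t_hr2vh_prev > t_vh2hr_prev:
            self.master = Master.VH  # the HR trusts the VH more
        elif t_vh2hr_prev > t_hr2vh_prev:
            self.master = Master.HR  # the VH trusts the HR more
        return self.master


fsm = RoleFSM(initial=Master.VH)
roles = [
    fsm.start_trial(0.9, 0.6),  # VH's trust in HR is higher
    fsm.start_trial(0.7, 0.7),  # tie: roles unchanged
    fsm.start_trial(0.5, 0.8),  # HR's trust in VH is higher
]
```

Because `start_trial` is the only method that changes the state, the roles stay fixed for the duration of a trial, which matches the constraint stated in the caption of Fig. 10.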

C. Modeling and Real-Time Measurement of HR-VH Trust

Modeling and measurement of trust are enabling steps to implement the collaboration, and they are described below.

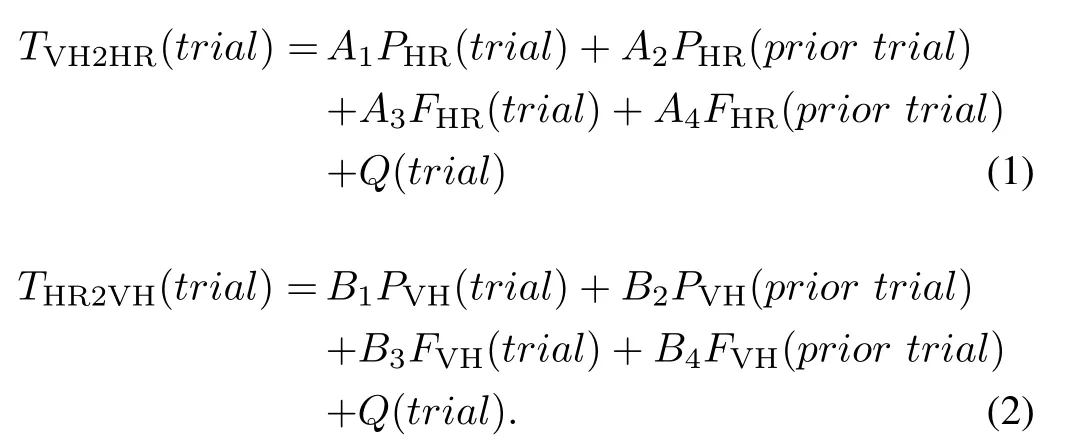

1) Trust Modeling: Trust of one agent in another agent may depend on many factors. In Lee and Moray’s study, a time-series model based only on performance and faults of automation (artificial agent) was used to compute a human’s (biological agent) trust in automation [39]. Trust is actually a perceptual issue, and a human has an actual feeling of trust in an artificial agent or in another human. However, it is impossible to generate similar feelings of trust of an artificial agent in another artificial agent. Nevertheless, the idea in Lee and Moray’s study may be extended to derive the computational model of an artificial agent’s trust (e.g., VH’s trust) in another artificial agent (e.g., HR) as in (1) and (2) [19]–[21]. In (1) and (2), $P_{\mathrm{HR}}(\mathrm{trial})$ is the reward score for the performance status of the HR, $F_{\mathrm{HR}}(\mathrm{trial})$ is the reward score for the fault status of the HR (reward for making less or no fault) in a trial, $P_{\mathrm{VH}}(\mathrm{trial})$ is the reward score for the performance status of the VH, $F_{\mathrm{VH}}(\mathrm{trial})$ is the reward score for the fault status of the VH, and $Q(\mathrm{trial})$ is the noise and perturbation in a trial. $A_i$ and $B_i$ are real-valued constants ($i = 1, 2, 3, 4$) that depend on tasks and agents.
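Equations (1) and (2) are not reproduced in this text. Purely as an illustration, a first-order time-series form in the spirit of Lee and Moray’s model and consistent with the symbols defined above could look as follows. The lag structure (current and immediately prior trial) and the assignment of the constants $A_i$ to the VH’s trust and $B_i$ to the HR’s trust are assumptions, not the confirmed equations of the article.

$$
\begin{aligned}
T_{\mathrm{VH2HR}}(\mathrm{trial}) &= A_1 P_{\mathrm{HR}}(\mathrm{trial}) + A_2 P_{\mathrm{HR}}(\mathrm{trial}-1) + A_3 F_{\mathrm{HR}}(\mathrm{trial}) + A_4 F_{\mathrm{HR}}(\mathrm{trial}-1) + Q(\mathrm{trial}) \\
T_{\mathrm{HR2VH}}(\mathrm{trial}) &= B_1 P_{\mathrm{VH}}(\mathrm{trial}) + B_2 P_{\mathrm{VH}}(\mathrm{trial}-1) + B_3 F_{\mathrm{VH}}(\mathrm{trial}) + B_4 F_{\mathrm{VH}}(\mathrm{trial}-1) + Q(\mathrm{trial})
\end{aligned}
$$

Under this assumed form, each agent’s trust in its partner rises with the partner’s performance and fault rewards in the current and prior trials, weighted by task-dependent constants.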

2) Trust Measurement: For measuring $T_{\mathrm{VH2HR}}(\mathrm{trial})$ and $T_{\mathrm{HR2VH}}(\mathrm{trial})$ in real time, it is necessary to have real-time measurements of the performance and fault of the HR and the VH [19]–[21]. The movement speed of the HR from its initial position to the target position is considered as the criterion of HR performance. The deviation of the actual position pointed at by the HR fingertip from the target position is considered as the criterion of HR fault. Ideally, the HR speed and the target position of the fingertip are fixed (set by programming), and thus there should be no deviation. However, external disturbances (e.g., friction between the floor surface and the HR feet during walking, air resistance) and changes in HR stiffness due to heat generated by the actuating motors may cause deviations in the HR path trajectory, which in turn may cause a deviation between the actual and the target pointing positions. An IMU attached to the HR hand fingertip can be used to measure its actual fingertip position when it points at the target box. Let $V_{rm}$ be the measured (actual) absolute walking speed of the HR, $V_{rs}$ the set (desired) absolute speed for the HR, and $d_{rs}$ the absolute shortest distance between the target position (position of the targeted box) and the actual position of the HR fingertip when it points at the box. The targeted position for a box is the center of the top view of the box if it is not stacked with another box. If the box is stacked with other boxes, the targeted position is the center of the front side (facing the HR) of the box. $\delta$ is a small threshold determined based on the particular application. $P_{\mathrm{HR}}(\mathrm{trial})$ and $F_{\mathrm{HR}}(\mathrm{trial})$ may be objectively measured in real time following (3) and (4).

Similarly, the movement speed of the VH and the deviation of its actually pointed position from the targeted pointing position are considered as its performance and fault criteria, respectively. As for the HR, the speed and targeted pointing position of the VH are ideally fixed, but communication delay between the client and the control servers, software errors and system instability may reduce the speed of the VH. All these, along with other uncertainties such as calibration (mapping) error and unnoticed displacement of the screen or the box, may cause deviation of the VH’s pointed position from the targeted position. If $V_{vhm}$ is the actual absolute speed of the VH (obtained through the computer system for the VH), $V_{vhs}$ is the absolute speed set for the VH, and $d_{vhs}$ is the absolute shortest distance between the targeted (set) position of the fingertip in the virtual environment appropriate for pointing at the corresponding real-world box (e.g., $O_v$ in Fig. 8) and the actual position of the VH’s fingertip in the virtual environment (obtained through the computer system), then $P_{\mathrm{VH}}(\mathrm{trial})$ and $F_{\mathrm{VH}}(\mathrm{trial})$ may be measured in real time following (5) and (6), where $\sigma$ is a small threshold determined based on the application.
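The exact forms of (3)-(6) are not shown in this text, so the Python sketch below only illustrates one way such reward scores could be computed from the quantities defined above. Both functional forms are assumptions: performance is taken as the ratio of measured to set speed capped at 1, and the fault reward is taken as 1 when the pointing deviation is within the threshold and as decaying toward 0 beyond it. The function names and the numeric values are hypothetical.

```python
def performance_reward(v_measured, v_set):
    """Assumed performance score in [0, 1]: measured speed over set speed."""
    if v_set <= 0:
        raise ValueError("set speed must be positive")
    return max(0.0, min(abs(v_measured) / v_set, 1.0))


def fault_reward(deviation, threshold):
    """Assumed fault score in (0, 1].

    Returns 1.0 (no fault) when the deviation is within the threshold;
    otherwise the score shrinks as the deviation grows.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    deviation = abs(deviation)
    if deviation <= threshold:
        return 1.0
    return threshold / deviation


# Hypothetical readings for one trial (metres per second, metres).
p_hr = performance_reward(v_measured=0.08, v_set=0.10)  # HR walked slower than set
f_hr = fault_reward(deviation=0.04, threshold=0.02)     # HR deviation above delta
p_vh = performance_reward(v_measured=0.50, v_set=0.50)  # VH moved at the set speed
f_vh = fault_reward(deviation=0.001, threshold=0.005)   # VH deviation within sigma
```

The same two functions serve both agents; only the inputs differ, i.e., $V_{rm}$, $V_{rs}$, $d_{rs}$ and $\delta$ for the HR, and $V_{vhm}$, $V_{vhs}$, $d_{vhs}$ and $\sigma$ for the VH.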

Note 1: δ and σ need to be adjusted because the HR is more likely than the VH to be affected by external disturbances. One approach is to set δ ≫ σ, which may help compare the faults of the two agents on a similar footing.

Note 2: PVH and PHR are between 0 (least performance) and 1 (maximum performance), and FVH and FHR are between 0 (there is some fault) and 1 (there is no fault). In effect, PVH and PHR represent the reward for showing good performance, and FVH and FHR represent the reward for making no or fewer faults.
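Equations (3)−(6) are not reproduced in this section, so the following Python sketch only illustrates one way to obtain bounded scores that agree with Note 2. The functional forms (a speed ratio clipped to [0, 1], and a fault reward equal to 1 inside the threshold that decays as threshold/deviation outside it) and the function names are assumptions made for illustration, not the paper's equations.

```python
def performance_score(measured_speed: float, set_speed: float) -> float:
    """Assumed form: ratio of measured to set speed, clipped to [0, 1]."""
    if set_speed <= 0:
        raise ValueError("set_speed must be positive")
    return max(0.0, min(1.0, abs(measured_speed) / set_speed))


def fault_score(deviation: float, threshold: float) -> float:
    """Assumed form: 1 (no fault) within the threshold, decaying toward 0 beyond it."""
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    deviation = abs(deviation)
    return 1.0 if deviation <= threshold else threshold / deviation


# Hypothetical readings for one HR trial (speeds in m/s, distances in m).
p_hr = performance_score(measured_speed=0.09, set_speed=0.10)  # plays the role of Vrm, Vrs
f_hr = fault_score(deviation=0.04, threshold=0.02)             # plays the role of drs, delta
print(p_hr, f_hr)
```

The same two functions could be applied to the VH by passing Vvhm, Vvhs, dvhs and σ, with δ and σ chosen as discussed in Note 1.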

Note 3:The proposed trust models may be verified using model verification tools[40],but the models are validated here based on experimental evaluation results,as follows.

VI.THE EXPERIMENTAL EVALUATION

A.The Evaluation Scheme

Collaboration between the HR and the VH is evaluated using four categories of evaluation criteria: 1) attributes of the master agent; 2) quality of interactions between the agents; 3) task performance; and 4) likeability of the service as perceived by the service beneficiary. The attributes of the master agent are evaluated based on the following criteria: 1) level of anthropomorphism; 2) level of embodiment; 3) quality of verbal and facial expressions, gestures and actions; and 4) level of stability of the agent. The quality of interactions between the agents is evaluated based on the following criteria: 1) cooperation level; 2) clarity of the instructions of the master agent and the level of utilization of the instructions by the follower agent; 3) level of engagement between the agents; 4) naturalness (similarity with human-human interactions) of the interactions; 5) prospect/potential of long-term companionship between the agents; 6) perceived cognitive workload of the follower agent; 7) situation awareness of the follower agent; and 8) team fluency. The attributes and the quality of interactions (except workload and team fluency) are subjectively evaluated by human 2 using a Likert scale [6], as shown in Fig. 11. The workload is assessed by human 2 using NASA TLX [6], [25]. The follower agent, being an artificial agent, cannot evaluate its own cognitive workload and situation awareness in the collaboration. Hence, human 2 evaluates these as if he/she were the follower agent.

Fig.11. Likert scale to assess the attributes of the master agent,quality of the interactions between the agents,and the CPSS performance.

Team fluency is the coordinated meshing of joint efforts and synchronization between the VH and the HR during the collaboration[41].Four criteria are used to measure the team fluency objectively as follows:

1)HR and VH’s idle time:it is the time that the HR and the VH wait for information processing,sensory input,computing and decision-making,etc.

2)Non-concurrent activity time:it is the amount of time during a trial that is not concurrent between the two agents when it should be concurrent.

3)Functional delay: it is the time between end of one agent’s action and start of another agent’s action.

Each criterion is expressed as a % of the total trial duration.It is measured by the experimenter based on the time data taken using stopwatches.
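The normalization described above can be sketched in Python as follows. The function name, argument names and sample readings are hypothetical, and the idle times of the HR and the VH are passed as a single combined value for simplicity.

```python
def fluency_percentages(trial_duration_s, idle_s, non_concurrent_s, functional_delay_s):
    """Express each stopwatch-based fluency measure as a percentage of trial duration."""
    if trial_duration_s <= 0:
        raise ValueError("trial_duration_s must be positive")
    measures = {
        "idle_time": idle_s,
        "non_concurrent_activity": non_concurrent_s,
        "functional_delay": functional_delay_s,
    }
    return {name: 100.0 * seconds / trial_duration_s
            for name, seconds in measures.items()}


# Hypothetical stopwatch readings (seconds) for a single trial.
result = fluency_percentages(trial_duration_s=120.0, idle_s=6.0,
                             non_concurrent_s=3.0, functional_delay_s=2.4)
print(result)
```

Lower percentages for all three measures correspond to higher team fluency.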

The task performance is evaluated objectively using two criteria: 1) efficiency; and 2) success rate in finding the hidden object. The efficiency and success rate are measured following (7) and (8), respectively. In (7), Tt is the targeted time to complete the collaborative task for a trial and Ta is the actual time for the task. In (8), ntf is the total number of collaboration trials in which the follower agent fails to find (point out) the object based on the instructions received from the master agent, and nt is the total number of trials.
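Equations (7) and (8) are not reproduced in this section. The Python sketch below shows one plausible reading of the definitions of Tt, Ta, ntf and nt: efficiency as the ratio of targeted to actual time (capped at 100%), and success rate as the share of trials that did not fail. Both forms and all names are assumptions for illustration.

```python
def efficiency_percent(target_time_s: float, actual_time_s: float) -> float:
    """Assumed form of (7): targeted time Tt over actual time Ta, capped at 100%."""
    if actual_time_s <= 0:
        raise ValueError("actual_time_s must be positive")
    return min(100.0, 100.0 * target_time_s / actual_time_s)


def success_rate_percent(failed_trials: int, total_trials: int) -> float:
    """Assumed form of (8): percentage of trials in which the object was found."""
    if total_trials <= 0:
        raise ValueError("total_trials must be positive")
    return 100.0 * (1 - failed_trials / total_trials)


# Hypothetical values: a 60 s target met in 64 s, and no failed trials out of 30.
eff = efficiency_percent(target_time_s=60.0, actual_time_s=64.0)
rate = success_rate_percent(failed_trials=0, total_trials=30)
print(eff, rate)
```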

The service beneficiary's (human 2's) likeability of the services received through the collaboration between the HR and the VH is expressed in terms of the service beneficiary's: 1) satisfaction with the service; 2) own trust in the collaboration; and 3) dependability on the service. These are assessed by human 2 using the Likert scale (Fig. 11).

In addition to the above evaluations,the human 2 also evaluates the overall performance of the collaborative CPSS based on the following criteria using the Likert scale(Fig.11):1)quality/suitability of the entire system design with CPSS components;2)transparency;3)security;4)scalability;5)robustness;6)trust;7)power/energy efficiency;8)system rapidness;9)integration techniques;10)autonomy;11)usability;and 12)safety.

B.Experimental Details

1) Recruitment of Subjects: Thirty-one (31) human subjects (engineering students; 26 males, 5 females; mean age 25.67 years with variance 3.58 years) were recruited to participate in the experiment. One subject served as the experimenter (human 1). The remaining 30 subjects served as the service beneficiary (human 2). All the subjects reported being physically and mentally healthy.

2)Experimental Objectives:The objectives of the experiment were to evaluate the effectiveness of the collaboration scheme(Section V)between the HR and the VH using the common platform for the real-world collaborative task in the ecology through the CPSS.

3)Hypothesis:It was hypothesized that the VH and the HR operated through the common platform based on similar functions(APIs)would show similar satisfactory behaviors,and more human-like attributes would enhance the quality of the collaboration.

4) Experimental Procedures: The default protocol was that the VH served as the master agent and the HR served as the follower agent to find the hidden object through collaboration following the collaboration scheme. However, depending on the trust values, the roles of the master and the follower agents might switch. The collaboration procedures are illustrated in Fig. 9. At first, practice trials were performed. The information on agent performance and faults obtained in the practice trials was used to compute the constants (Ai and Bi) of the trust models in (1) and (2) following the autoregressive moving average model (ARMAV) [42], as given in Table I. The thresholds needed to compute the trust were also decided (Table I). Then, the actual experimental sessions started.

TABLE I CONSTANTS AND THRESHOLDS FOR TRUST COMPUTATION

In the actual experiment, the experimenter (human 1) hid an object in any one of the 10 boxes (Fig. 4), for example, in box 7. The VH appeared at P3 facing the point P1 where the HR stood. The service beneficiary (one of the 30 subjects, human 2) stood at P2 facing P3. The service beneficiary sent a command to start the collaboration by pressing the “Enter” button of a laptop. Then, the master agent instructed (once only) the follower agent how to find the hidden object through their collaboration. The collaboration procedures included verbal, gestural and emotional expressions and functions as described in Section V. At the end of a collaboration trial, the service beneficiary (human 2) subjectively evaluated the attributes of the master agent, the quality of the interactions between the agents, the likeability of the service, and the CPSS as a whole following the evaluation scheme. To measure the team fluency and the task performance, the experimenter recorded various time-related data for the trial and also recorded whether or not the collaboration succeeded in finding the hidden object. The service beneficiary (human 2) was then replaced by another subject and the trial was repeated. In this way, the experiment was conducted for all 30 subjects separately. Thus, in each trial, one of the 30 subjects acted as the service beneficiary (human 2).

Remark 3: The literature shows that a CPSS should involve integration and interaction with humans [37], [43]. Human mental space and cognition are integral parts of a CPSS that affect the command, computation, communication and control relationships between the physical and the cyber systems [44]. Human intent is reflected in the operation of a CPSS [45]. In a CPSS, a human component may observe the CPS, collect data, investigate the data and report on the physical and the cyber environments of the CPS [46]. The human may also monitor the entire CPS [47] and evaluate the services he/she receives from the CPS [48]. Finally, the human may serve as the consumer/beneficiary of the services provided by the CPS [49] and act as an active user of the CPS [50], [51]. In the proposed experiment, two types of human (social) components are involved: 1) the experimenter (human 1); and 2) the service beneficiary (human 2). Human 1 hides the object and inputs the object's position coordinates to the computer system, which affects the computation and control of the CPS (e.g., the target position of the VH or the HR is determined from the object's coordinate information) [44]. Human 1 also opens the pointed box on behalf of the HR and the VH. Human 2 issues a command by pressing the “Enter” button of the laptop to start the collaboration between the HR and the VH under the CPS. This command reflects human intent [45] and constitutes a communication with the CPS that affects its control (starting the system) and computation (computation of the target position for the VH or the HR) [44]. All these also indicate human integration and interaction with the CPS [37], [43]. In addition, human 2 receives services from the CPS between the VH and the HR [48], uses the services [50], [51], observes [46], monitors [47] and evaluates the CPS and the agents' performance [48], and reports the assessment [46]. Furthermore, as in Fig. 7, human 1 and human 2 always maintain visual communication with the HR and the VH in the CPS. It is posited that the above involvements of humans with the CPS as societal components establish the CPS as a CPSS [23].

Further contributions of the human components to the communication, computation and control of the CPS may further justify and clarify the proposed CPS as a CPSS. For example, the service beneficiary's assessment through the gaming interface of a hand-held smartphone may serve as a real-time communication to the CPS, which may affect the computation and control of the CPS (e.g., the VH-HR collaboration may slow down, stop or accelerate based on the human's feedback).

Remark 4: As Fig. 7 shows, humans always maintain visual communication with the VH. Human 1 inputs the correct position coordinates of the hidden object to the computer system, which affects the computation (target position for the VH to point at the targeted box) in the cyber system and may thereby affect the VH's performance. Human 1 also opens the pointed box on behalf of the VH. Human 2 issues a command by pressing the “Enter” button of the laptop to start the collaboration of the VH with the HR. Human 2 receives services from the collaboration of the VH with the HR, observes and monitors the collaboration, uses the services, and evaluates the services, including the attributes and interaction quality of the VH. All these demonstrate meaningful collaboration between humans and the VH.

Remark 5: The interdependence among the VH, the HR and the humans in the collaboration to achieve a particular goal (searching for a hidden object) acted as an agent ecology [21], [27]. The ecology was adaptive as it was affected by the performance and faults of the VH and the HR, and by the humans' inputs and commands to the system in dynamic contexts.

VII.EXPERIMENTAL RESULTS

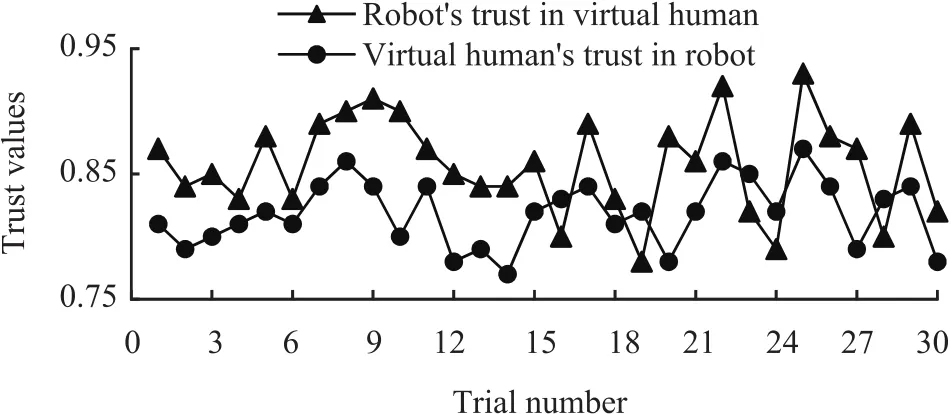

Fig. 12 shows the values of the HR's trust in the VH and the VH's trust in the HR for the trials. The results show that the trust of the agents in each other was high (77% and above), which indicates their willingness (here, rationality or practicality) to collaborate with each other on the common task. The high trust also proves the ability of the artificial agents to produce high performance and avoid faults during the task [24], [39]. The results thus justify the effectiveness of generating similar and satisfactory skills and capabilities in the artificial agents of heterogeneous realities for performing a real-world common task through a common platform under the CPSS. The results show that the VH served as the master agent and the HR served as the follower agent in 77% of the trials, as the HR's trust in the VH was greater than the VH's trust in the HR in those trials. However, in the remaining 23% of the trials, the roles of the agents as master and follower (and hence their turns in taking the initiative) were switched based on the bilateral trust values according to the collaboration scheme in Fig. 9 [29]. The results thus prove the effectiveness of mixed initiatives in the collaboration [26].

Fig.12.Values of HR’s trust in VH and VH’s trust in HR for the 30 trials.
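The trust-driven role assignment described above can be sketched as a small two-state machine in Python. The rule that the VH leads when the HR's trust in the VH exceeds the VH's trust in the HR, and that the VH is the default master, follows the text; the tie-breaking rule (keep the current roles), the class and function names, and the sample trust values are assumptions for illustration and do not reproduce the scheme in Fig. 9.

```python
from enum import Enum


class Master(Enum):
    VH = "VH-HR"  # VH is master, HR is follower
    HR = "HR-VH"  # HR is master, VH is follower


def next_master(current: Master, trust_hr_in_vh: float, trust_vh_in_hr: float) -> Master:
    """Pick the master for the next trial from the bilateral trust of the prior trial."""
    if trust_hr_in_vh > trust_vh_in_hr:
        return Master.VH
    if trust_vh_in_hr > trust_hr_in_vh:
        return Master.HR
    return current  # assumption: equal trust leaves the roles unchanged


def run_trials(trust_pairs, initial: Master = Master.VH):
    """Return the master for trial 1 (default) plus the master chosen after each trial."""
    roles = [initial]
    for trust_hr_in_vh, trust_vh_in_hr in trust_pairs:
        roles.append(next_master(roles[-1], trust_hr_in_vh, trust_vh_in_hr))
    return roles


# Hypothetical (HR-in-VH, VH-in-HR) trust values measured in three consecutive trials.
roles = run_trials([(0.90, 0.80), (0.78, 0.85), (0.80, 0.80)])
print([r.value for r in roles])
```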

Fig. 13 shows that the subjects rated the attributes of the HR as a master agent higher than those of the VH as a master agent. Note that in Fig. 13 and in the subsequent figures, VH-HR means a collaboration protocol where the VH is the master agent and the HR is the follower agent. Similarly, HR-VH means a collaboration protocol where the HR is the master agent and the VH is the follower agent. Analysis of variance (ANOVA) showed that variations in attribute scores between subjects for each criterion were statistically nonsignificant (e.g., for level of anthropomorphism, F(29,29) = 1.44, p > 0.05), which indicates the generality of the results. However, variations in attribute scores between the VH and the HR as the master agents were statistically significant (F(1,29) = 23.19, p < 0.05), which indicates that the subjects perceived the attributes of the two artificial master agents differently. A possible reason is that, although both agents were artificial, the HR had three dimensions in the physical environment whereas the VH had only two dimensions within the screen. However, the results for stability were slightly different. The stability of the HR was lower than that of the VH, probably because the HR was affected by disturbances such as floor roughness, motor temperature and air resistance. Nevertheless, the attributes of both artificial agents were satisfactory according to the scale in Fig. 11, which justifies the effectiveness of generating human-like satisfactory attributes in artificial agents of heterogeneous realities for a real-world task [7].

Fig.13.Evaluation results of the attributes of the master agents for different collaboration protocols.
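The degrees of freedom reported above (29 and 29 for subjects, 1 and 29 for master agents) are what a two-way ANOVA without replication would give for 30 subjects and 2 master agents; the section does not state the exact ANOVA design, so this is an assumption. The following Python sketch computes the two F statistics for such a layout. The function name and the toy scores are hypothetical, and obtaining p-values would additionally require an F-distribution routine that is not included here.

```python
def two_way_anova_f(scores):
    """scores[i][j] is the score given by subject i for master agent j.

    Returns (F_subjects, F_agents, (df_subjects, df_agents, df_error)).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between master agents
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    df_rows, df_cols = n - 1, k - 1
    df_error = df_rows * df_cols
    ms_error = ss_error / df_error
    f_rows = (ss_rows / df_rows) / ms_error
    f_cols = (ss_cols / df_cols) / ms_error
    return f_rows, f_cols, (df_rows, df_cols, df_error)


# Toy Likert scores from three hypothetical subjects for (VH, HR) as master agent.
toy_scores = [[3, 4], [4, 5], [3, 5]]
f_subjects, f_agents, dfs = two_way_anova_f(toy_scores)
print(f_subjects, f_agents, dfs)
```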

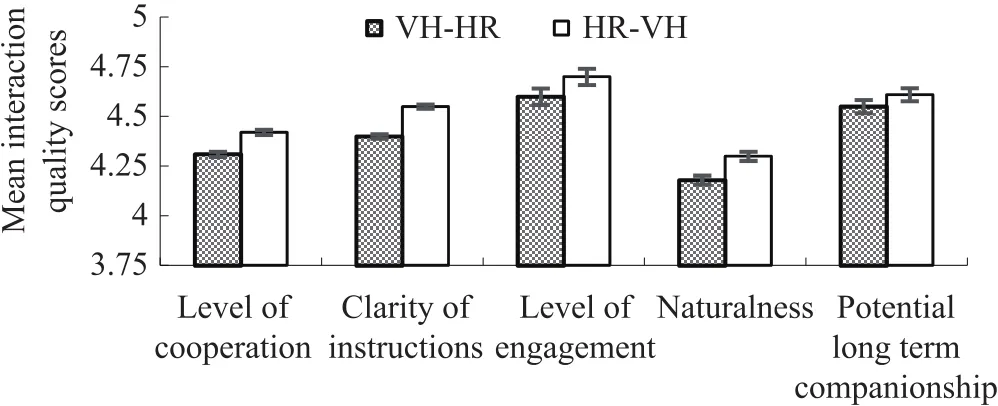

Fig. 14 shows the quality of the interactions perceived by the subjects for the VH and the HR as the master agents. The results show that the agent's interaction quality appears commensurate with the agent's attributes in Fig. 13, which supports the adopted hypothesis that more human-like attributes in artificial agents enhance the quality of their interactions with other agents as perceived by humans [7]. The quality of interactions between the artificial agents was satisfactory according to the scale in Fig. 11, which proves that similar and satisfactory interaction abilities were generated in the artificial agents of heterogeneous realities for the real-world common task through the common platform. Fig. 14 shows that the interaction quality of the HR as the master agent was better than that of the VH. ANOVA shows that variations in interaction quality scores between subjects for each criterion were statistically nonsignificant (e.g., for level of cooperation, F(29,29) = 1.47, p > 0.05), indicating the generality of the results. However, variations in scores between the VH and the HR as the master agents were statistically significant (F(1,29) = 14.61, p < 0.05), which indicates that the subjects perceived the interaction quality of the two artificial master agents differently.

Fig.14. Interaction quality between the agents perceived by humans for different collaboration protocols.

Figs. 13 and 14 show that the attributes and interaction quality of the HR as the master agent were better than those of the VH. However, Fig. 12 shows that the HR's trust in the VH was higher than the VH's trust in the HR, which means that the performance and fault avoidance abilities of the VH were higher than those of the HR. This is why, in most cases, the VH served as the master agent and took the collaboration initiative (Fig. 12), and the HR served as the follower agent. A possible reason is that the HR was slower than the VH, which reduced the performance of the HR. In addition, the HR was easily affected by disturbances that reduced its fault avoidance ability. Lower performance (speed) and more faults (caused by disturbances) of the HR might result in comparatively lower trust of the VH in the HR [24], [39].

Fig. 15 shows that the human subjects' workload for temporal demand, performance, effort and frustration increased when the VH served as the master agent. ANOVA shows that the increase was statistically significant (e.g., for frustration, F(1,29) = 136.78, p < 0.05). A possible reason is that the slower motion of the robot and its vulnerability to external disturbances generated more cognitive workload for the service beneficiaries (subjects) in terms of temporal demand, performance, effort and frustration. Workload for mental and physical demand was lower when the HR served as the master agent than when the VH served as the master agent. However, this difference was not statistically significant (for example, for the physical demand, F(29,29) = 1.52, p > 0.05). This happened because humans observed the HR and understood its actions physically. In general, for both the VH and the HR as the master agents, the overall cognitive workload of the service beneficiary was not high (35.08% when the VH was the master agent, 42.66% when the HR was the master agent), which proved that the agents did not impose excessive workload on the humans who received services from them [6], [18], [25]. ANOVA shows that variations in workload scores between subjects for each workload dimension were statistically nonsignificant (e.g., for effort, F(29,29) = 1.88, p > 0.05), which indicates the generality of the results.
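The section does not state how the overall workload percentage was aggregated from the six NASA TLX dimensions. One common option is the unweighted ("raw") TLX, i.e., the mean of the six subscale ratings on a 0−100 scale; the Python sketch below assumes that variant. The function name and the sample ratings are hypothetical and are not data from the experiment.

```python
TLX_DIMENSIONS = (
    "mental_demand",
    "physical_demand",
    "temporal_demand",
    "performance",
    "effort",
    "frustration",
)


def raw_tlx(ratings: dict) -> float:
    """Unweighted NASA TLX score: mean of the six subscale ratings (each 0-100)."""
    missing = [d for d in TLX_DIMENSIONS if d not in ratings]
    if missing:
        raise ValueError(f"missing TLX dimensions: {missing}")
    for name in TLX_DIMENSIONS:
        if not 0 <= ratings[name] <= 100:
            raise ValueError(f"{name} must be between 0 and 100")
    return sum(ratings[d] for d in TLX_DIMENSIONS) / len(TLX_DIMENSIONS)


# Hypothetical ratings from one subject for one collaboration protocol.
sample = {
    "mental_demand": 30,
    "physical_demand": 20,
    "temporal_demand": 40,
    "performance": 35,
    "effort": 45,
    "frustration": 40,
}
overall = raw_tlx(sample)
print(overall)
```

If the weighted TLX procedure was used instead, each rating would be multiplied by a weight obtained from pairwise comparisons of the dimensions before averaging.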

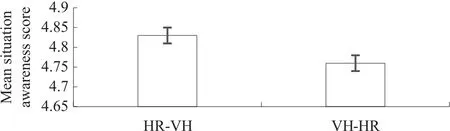

Fig. 16 shows that the HR as the master agent produced better situation awareness in the beneficiary humans due to its physical existence, better embodiment and more human-like features [7]. However, in both cases, the situation awareness was satisfactory as per the scale shown in Fig. 11, which proves the effectiveness of the collaboration between the VH and the HR through the common platform for the benefit of humans. ANOVA shows that variations in awareness scores between subjects were statistically nonsignificant (F(29,29) = 1.09, p > 0.05), which indicates the generality of the results.

Fig.16. Mean situation awareness of service beneficiaries(subjects,human 2)between the cases when the VH and the HR acted as the master agents.

Table II shows the evaluation results with standard deviations for the team fluency criteria under the different interaction protocols [41]. The results show that the trend in team fluency across the protocols matches that in the interaction quality (Fig. 14). Less idle time, non-concurrent activity and delay indicate more fluency. The fluency was higher when the HR acted as the master agent, which is attributed to the physical existence and better embodiment of the HR compared with the VH. The results show that the team fluency was high for both the VH-HR and HR-VH protocols, which proves the effectiveness of the collaboration between the artificial agents of heterogeneous realities.

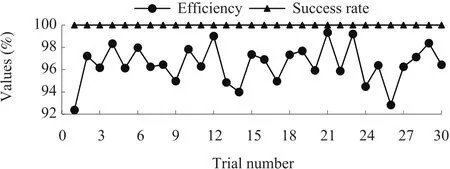

Fig. 17 shows that the success rate in finding the hidden object through the collaboration between the artificial agents (HR, VH) was 100%. Efficiency in the collaborative search task was also high (>92%). High team fluency (Table II) might positively influence the efficiency of the collaboration [41]. All the above results indicate the effectiveness of the real-world collaboration between the HR and the VH for the common real-world task under the CPSS. Moreover, the HR and the VH were operated through the common platform, and the results thus prove the effectiveness of the common platform between the artificial agents as well.

TABLE II TEAM FLUENCY EVALUATION RESULTS FOR DIFFERENT INTERACTION PROTOCOLS(STANDARD DEVIATIONS)

Fig.17. Efficiency and success rate in the collaborative search task between the HR and the VH for various trials.In most trials,the VH served as the master agent(VH-HR)and the HR served as the follower agent(HR-VH).

Fig. 18 shows the likeability reported by the service beneficiaries (human 2, subjects) for the services they received from the collaboration between the HR and the VH. The results show that the service beneficiaries were satisfied with the services, and thus their own trust in the collaboration between the artificial agents was also high [24]. The high satisfaction and trust of the service beneficiaries in the collaboration indicate their interest in receiving the services. All these, including the high dependability of the service beneficiaries on the services (Fig. 18), justify the potential of the collaboration between the HR and the VH for the benefit and welfare of humans for various purposes, e.g., assisted living and social companionship for old and lonely people. The results show that the likeability was higher when the VH served as the master agent than when the HR served as the master agent.

Fig.18. Evaluation of service beneficiary’s likeability of the services provided through the collaboration between the HR and the VH.

The results in general show that, being a physical agent, the HR had better attributes and interaction quality than the VH, but the HR's lower stability and vulnerability to disturbances compared to its virtual counterpart (the VH) might reduce its performance, accuracy, trustworthiness and likeability, which might reduce its role in taking initiatives in the mixed-initiative collaboration. Despite slight differences in attributes, interaction quality, stability, performance and accuracy between the HR and the VH as master agents, the real-world collaboration between the agents through the common platform under the CPSS was very successful.

The above results present the attributes and performance of the individual agents under the CPSS. However, it is also necessary to evaluate the effectiveness of the CPSS as a whole from the system of systems (SoS) point of view [21], [23]. Fig. 19 shows the evaluation results of the performance of the CPSS for interactions between the agents based on the criteria mentioned in Section VI-A. Here, system design indicates the design of the HR and its networks with the VH. The results show that the CPSS performance becomes satisfactory if the system design with the components of the CPSS is good and compatible with the overall objective of the CPSS. Transparency means the clarity of the inherent working mechanisms and principles of the CPSS to its human evaluators. Security is the capability of the CPSS to remain unaffected in the midst of disturbances and interferences. Scalability is the possibility of extending the CPSS to more complex tasks using similar principles. Robustness is the ability of the CPSS to cope with various changing situations. Trust is the reliability of the performance of the CPSS as perceived by its human users. Power/energy efficiency concerns the power and energy required to drive the whole CPSS. Rapidness is how fast the CPSS works and reaches its goal. Integration is the overall coordination of the components of the CPSS to configure and operate the entire CPSS. Autonomy is the ability of the agents under the CPSS to make their own decisions in various situations. Usability is how useful the overall CPSS is in assisting humans in various activities, and safety is the safety of the humans who may come into contact with the CPSS to receive assistance. The results show satisfactory performance of the entire CPSS for the above criteria according to the scale given in Fig. 11. Note that the evaluation of the CPSS is subjective. However, subjective evaluation is useful as appropriate objective evaluation of a CPSS is a challenge [37]. Moreover, the subjective assessment was conducted using a formal method (Likert scale [6]), which supports the reliability of the results.

Fig.19. Mean(n=30)evaluation scores(with standard deviations)of the performance of the whole CPSS for interactions between the VH and the HR.

VIII.CONCLUSIONS AND FUTURE WORKS

Two artificial agents of heterogeneous realities (a physical HR and a VH) were developed with a set of similar skills, intelligence and capabilities. The agents were integrated through a common platform under the CPSS to perform a real-world common task. Bilateral trust models for the agents were derived, and a mixed-initiative collaboration scheme between the agents was developed to switch their roles and initiatives in the collaboration. The switch in their roles and initiatives was implemented using an FSM model triggered by the bilateral trust between them in the prior trial. The major novelties and contributions are as follows: 1) the intelligence, autonomy and capabilities of the VH and the HR are enhanced; 2) the VH is empowered to perform beyond the virtual environment; 3) a common platform is demonstrated to operate both agents of heterogeneous realities; 4) a bilateral trust model and real-time trust measurement are proposed to establish trust in the artificial agents of heterogeneous realities; 5) mixed-initiative interactions are staged between the two artificial agents; 6) a CPSS and an adaptive agent ecology (partly) between artificial agents of heterogeneous realities mediated by humans are illustrated; and 7) a comprehensive evaluation scheme is proposed to evaluate the interactions between the agents under the CPSS for the ecology. The evaluation results justify the effectiveness of the novel approaches. The results may be useful for developing social ecologies and CPSSs using smart agents of heterogeneous realities for assisted living, social companionship, smart homes, spaces and cities, etc.

The main limitations of the presented works are that activities of the VH and HR were pre-arranged and thus were limited and less natural.For example,objects were hidden only in a few pre-arranged locations.Again,measurement of trust between the agents may be less robust due to limitations in sensor applications.Thus,the presented approaches and results can be treated as initial efforts toward a very big emerging technology.In the future,agent intelligence will be enhanced to make the collaboration feasible for objects hidden in any location in the space.More embedded,wearable and ambient sensing technologies will be used to make the real-time measurements more repeatable,reproducible and robust.Novel controls will be used to control the collaboration within the desired specifications.Human’s involvement in the CPS in its control,computation and communication will be enhanced,which may further justify the concept of CPSS for the proposed collaborative task.The collaboration under the CPSS will be expanded to implement the entire ecology outlined in Fig.2.

ACKNOWLEDGEMENT

The author acknowledges all the supports that he received from his past colleagues,superiors and research staffs at Nanyang Technological University for the research and development presented herein.

[1]M.J.Salvador,S.Silver,and M.H.Mahoor,“An emotion recognition comparative study of autistic and typically-developing children using the zeno robot,”in Proc.2015 IEEE Int.Conf.Robotics and Automation(ICRA),Seattle,WA,USA,2015,pp.6128−6133.

[2]A.Alkhalifah,B.Alsalman,D.Alnuhait,O.Meldah,S.Aloud,H.S.Al-Khalifa,and H.M.Al-Otaibi,“Using NAO humanoid robot in kindergarten:a proposed system,”in Proc.2015 IEEE 15th Int.Conf.Advanced Learning Technologies,Hualien,Taiwan,China,2015,pp.166−167.

[3]A.Wagoner,A.Jagadish,E.T.Matson,L.EunSeop,Y.Nah,K.K.Tae,D.H.Lee,and J.E.Joeng,“Humanoid robots rescuing humans and extinguishing fires for cooperative fire security system using HARMS,”inProc.2015 6th Int.Conf.Automation,Robotics and Applications,Queenstown,New Zealand,2015,pp.411−415.

[4]Y.D.Pan,H.Okada,T.Uchiyama,and K.Suzuki,“Direct and indirect social robot interactions in a hotel public space,”inProc.2013 IEEE Int.Conf.Robotics and Biomimetics,Shenzhen,China,2013,pp.1881−1886.

[5]S.Yoshida,T.Shirokura,Y.Sugiura,D.Sakamoto,T.Ono,M.Inami,and T.Igarashi,“RoboJockey:designing an entertainment experience with robots,”IEEE Comput.Graphics Appl.,vol.36,no.1,pp.62−69,Jan.−Feb.2016.

[6]S.M.M.Rahman and Y.Wang,“Dynamic affection-based motion control of a humanoid robot to collaborate with human in flexible assembly in manufacturing,”inProc.ASME 2015 Dynamic Systems and Controls Conf.,Columbus,Ohio,USA,2015.

[7]S.M.M.Rahman,“Generating human-like social motion in a human-looking humanoid robot:the biomimetic approach,”in Proc.2013 IEEE Int.Conf.Robotics and Biomimetics,Shenzhen,China,2013,pp.1377−1383.

[8]G.Castellano and C.Peters,“Socially perceptive robots:challenges and concerns,”Interact.Stud.,vol.11,no.2,pp.201−207,2010.

[9]I.Leite,C.Martinho,and A.Paiva,“Social robots for long-term interaction:a survey,”Int.J.Soc.Robotics,vol.5,no.2,pp.291−308,Apr.2013.

[10]M.Hays,J.Campbell,M.Trimmer,J.Poore,A.Webb,C.Stark,and T.King,“Can role-play with virtual humans teach interpersonal skills?”inProc.Interservice/Industry Training,Simulation and Education Conf.(I/ITSEC),Orlando,FL,USA,2012.

[11]C.S.Lányi,Z.Geiszt,P.Károlyi,Á.Tilinger,and V.Magyar,“Virtual reality in special needs early education,”Int.J.Virtual Reality,vol.5,no.4,pp.55−68,2006.

[12]J.C.Campbell,M.J.Hays,M.Core,M.Birch,M.Bosack,and R.E.Clark,“Interpersonal and leadership skills:using virtual humans to teach new officers,”inProc.Interservice/Industry Training,Simulation,and Education Conf.,2011.

[13]N.Saleh, “The value of virtual patients in medical education,”Ann.Behav.Sci.Med.Educ.,vol.16,no.2,pp.29−31,Sep.2010.

[14]P.V.Lawford,A.V.Narracott,K.McCormack,J.Bisbal,C.Martin,B.Brook,M.Zachariou,P.Kohl,K.Fletcher,and V.Diaz-Zuccarini,“Virtual physiological human:training challenges,”Phil.Trans.R.Soc.A,vol.368,no.1921,pp.2841−2851,May 2010.

[15]W.Swartout,J.Gratch,R.W.Hill,E.Hovy,S.Marsella,J.Rickel,and D.Traum,“Toward virtual humans,”AI Mag.,vol.27,no.2,pp.96−108,Jul.2006.

[16]A.Reina,M.Salvaro,G.Francesca,L.Garattoni,C.Pinciroli,M.Dorigo,and M.Birattari,“Augmented reality for robots:virtual sensing technology applied to a swarm of e-pucks,”inProc.2015 NASA/ESA Conf.Adaptive Hardware and Systems(AHS),Montreal,QC,Canada,2015,pp.1−6.

[17]S.M.M.Rahman and R.Ikeura,“Weight-prediction-based predictive optimal position and force controls of a power assist robotic system for object manipulation,”IEEE Trans.Industr.Electron.,vol.63,no.9,pp.5964−5975,Sep.2016.

[18]S.M.M.Rahman and R.Ikeura,“Cognition-based control and optimization algorithms for optimizing human-robot interactions in power assisted object manipulation,”Journal of Information Science and Engineering,vol.32,no.5,pp.1325−1344,Sep.2016.

[19]S.M.M.Rahman,“Evaluating and benchmarking the interactions between a humanoid robot and a virtual human for a real-world social task,”inProc.2013 Int.Conf.Advances in Information Technology:Advances in Information Technology,Bangkok,Thailand,2013,pp.184−197.

[20]S.M.M.Rahman,“Collaboration between a physical robot and a virtual human through a unified platform for personal assistance to humans,”Personal Assistants:Emerging Computational Technologies,A.Costa,V.Julian,and P.Novais,Eds.Springer,2017,pp.149−177.

[21]S.M.M.Rahman,“People-centric adaptive social ecology between intelligent autonomous humanoid robot and virtual human for social cooperation,”inProc.2013 Int.Joint Conf.Ambient Intelligence:Evolving Ambient Intelligence,Dublin,Ireland,2013,pp.120–135.

[22]X.P.Guan,B.Yang,C.L.Chen,W.B.Dai,and Y.Y.Wang,“A comprehensive overview of cyber-physical systems:from perspective of feedback system,”IEEE/CAA J.Automat.Sin.,vol.3,no.1,pp.1−14,Jan.2016.

[23]G.Xiong,F.H.Zhu,X.W.Liu,X.S.Dong,W.L.Huang,S.H.Chen,and K.Zhao,“Cyber-physical-social system in intelligent transportation,”IEEE/CAA J.Automat.Sin.,vol.2,no.3,pp.320−333,Jul.2015.

[24]S.M.M.Rahman,Y.Wang,I.D.Walker,L.Mears,R.Pak,and S.Remy,“Trust-based compliant robot-human handovers of payloads in collaborative assembly in flexible manufacturing,”in Proc.2016 IEEE Int.Conf.Automation Science and Engineering,Fort Worth,TX,USA,2016,pp.355−360.

[25] S. M. M. Rahman, B. Sadr, and Y. Wang, “Trust-based optimal subtask allocation and model predictive control for human-robot collaborative assembly in manufacturing,” in Proc. ASME 2015 Dynamic Systems and Controls Conf., Columbus, Ohio, USA, 2015.

[26] R. Chipalkatty, G. Droge, and M. B. Egerstedt, “Less is more: mixed-initiative model-predictive control with human inputs,” IEEE Trans. Robot., vol. 29, no. 3, pp. 695–703, Jun. 2013.

[27] G. Amato, D. Bacciu, M. Broxvall, S. Chessa, S. Coleman, M. Di Rocco, M. Dragone, C. Gallicchio, C. Gennaro, H. Lozano, T. M. McGinnity, A. Micheli, A. K. Ray, A. Renteria, A. Saffiotti, D. Swords, C. Vairo, and P. Vance, “Robotic ubiquitous cognitive ecology for smart homes,” J. Intell. Robot. Syst., vol. 80, no. 1, pp. 57–81, Dec. 2015.

[28] Y. Z. Song and W. Zhao, “Leader follower multi-agents network with discrete-time rendezvous via swarm social system strategies,” in Proc. 31st Chinese Control Conf., Hefei, China, 2012, pp. 6081–6086.

[29] Y. N. Li, K. P. Tee, W. L. Chan, R. Yan, Y. W. Chua, and D. Limbu, “Continuous role adaptation for human-robot shared control,” IEEE Trans. Robot., vol. 31, no. 3, pp. 672–681, Jun. 2015.

[30] M. Kapadia, A. Shoulson, C. Boatright, P. Huang, F. Durupinar, and N. I. Badler, “What’s next? the new era of autonomous virtual humans,” Motion in Games, M. Kallmann and K. Bekris, Eds. Berlin, Heidelberg: Springer, 2012, pp. 170–181.

[31] J. Gratch, J. Rickel, E. Andre, J. Cassell, E. Petajan, and N. Badler, “Creating interactive virtual humans: some assembly required,” IEEE Intell. Syst., vol. 17, no. 4, pp. 54–63, Jul.–Aug. 2002.

[32] Z. Kasap and N. Magnenat-Thalmann, “Intelligent virtual humans with autonomy and personality: state-of-the-art,” New Advances in Virtual Humans, N. Magnenat-Thalmann, L. C. Jain, and N. Ichalkaranje, Eds. Berlin, Heidelberg: Springer, 2008, pp. 43–84.

[33] Y. L. Kang, B. Subagdja, A. H. Tan, Y. S. Ong, and C. Y. Miao, “Virtual characters in agent-augmented co-space,” in Proc. 11th Int. Conf. Autonomous Agents and Multiagent Systems, Valencia, Spain, 2012, pp. 1465–1466.

[34] B. R. Chang, H. F. Tsai, C. L. Guo, and C. Y. Chen, “Remote cloud data center backup using HBase and Cassandra with user-friendly GUI,” in Proc. 2015 IEEE Int. Conf. Consumer Electronics, Taipei, Taiwan, China, 2015, pp. 420–421.

[35] N. Kumar, A. Kumar, and S. Giri, “Design and implementation of three phase commit protocol (3PC) directory structure through Remote Procedure Call (RPC) application,” in Proc. 2014 Int. Conf. Information Communication and Embedded Systems, Chennai, India, 2014, pp. 1–5.

[36] I. Rodriguez, A. Astigarraga, E. Jauregi, T. Ruiz, and E. Lazkano, “Humanizing NAO robot teleoperation using ROS,” in Proc. 14th IEEE-RAS Int. Conf. Humanoid Robots, Madrid, Spain, 2014, pp. 179–186.

[37] S. G. Wang, A. Zhou, M. Z. Yang, L. Sun, C. H. Hsu, and F. C. Yang, “Service composition in cyber-physical-social systems,” IEEE Trans. Emerg. Topics Comput., 2017, doi: 10.1109/TETC.2017.2675479. (to be published)

[38] Q. M. Meng and A. L. Chen, “Artificial emotional interaction model based on EFSM,” in Proc. 2011 Int. Conf. Electronic and Mechanical Engineering and Information Technology, Harbin, Heilongjiang, China, 2011, pp. 1852–1855.

[39] J. D. Lee and N. Moray, “Trust, self-confidence, and operators’ adaptation to automation,” Int. J. Human-Comput. Stud., vol. 40, no. 1, pp. 153–184, Jan. 1994.

[40] C. L. Heitmeyer and E. I. Leonard, “Obtaining trust in autonomous systems: tools for formal model synthesis and validation,” in Proc. 2015 IEEE/ACM 3rd FME Workshop on Formal Methods in Software Engineering, Florence, Italy, 2015, pp. 54–60.

[41] G. Hoffman, “Evaluating fluency in human-robot collaboration,” in Proc. RSS Workshop on Human-Robot Collaboration, 2013.

[42] L. Wu, J. Y. Yan, and Y. J. Fan, “Data mining algorithms and statistical analysis for sales data forecast,” in Proc. 2012 5th Int. Conf. Computational Sciences and Optimization, Harbin, China, 2012, pp. 577–581.

[43] W. Guo, Y. Zhang, and L. Li, “The integration of CPS, CPSS, and ITS: A focus on data,” Tsinghua Sci. Technol., vol. 20, no. 4, pp. 327–335, Aug. 2015.

[44] Z. Liu, D. S. Yang, D. Wen, W. M. Zhang, and W. J. Mao, “Cyber-physical-social systems for command and control,” IEEE Intell. Syst., vol. 26, no. 4, pp. 92–96, Jul.–Aug. 2011.

[45] B. Guo, Z. W. Yu, and X. S. Zhou, “A data-centric framework for cyber-physical-social systems,” IT Profess., vol. 17, no. 6, pp. 4–7, Nov.–Dec. 2015.

[46] C. Huang, J. Marshall, D. Wang, and M. X. Dong, “Towards reliable social sensing in cyber-physical-social systems,” in Proc. 2016 IEEE Int. Parallel and Distributed Proc. Symp. Workshops, Chicago, IL, USA, 2016, pp. 1796–1802.

[47] Z. C. M. Candra, H. L. Truong, and S. Dustdar, “On monitoring cyber-physical-social systems,” in Proc. 2016 IEEE World Congress on Services (SERVICES), San Francisco, CA, USA, 2016, pp. 56–63.

[48] J. W. Huang, M. D. Seck, and A. Gheorghe, “Towards trustworthy smart cyber-physical-social systems in the era of Internet of Things,” in Proc. 11th System of Systems Engineering Conf., Kongsberg, Norway, 2016, pp. 1–6.

[49] C. C. Lin, D. J. Deng, and S. Y. Jhong, “A triangular nodetrix visualization interface for overlapping social community structures of cyber-physical-social systems in smart factories,” IEEE Trans. Emerg. Topics Comput., 2017, doi: 10.1109/TETC.2017.2671846. (to be published)

[50] Z. Su, Q. F. Qi, Q. C. Xu, S. Guo, and X. W. Wang, “Incentive scheme for cyber physical social systems based on user behaviors,” IEEE Trans. Emerg. Topics Comput., 2017, doi: 10.1109/TETC.2017.2671843. (to be published)

[51] X. Zheng, Z. P. Cai, J. G. Yu, C. K. Wang, and Y. S. Li, “Follow but no track: privacy preserved profile publishing in cyber-physical social systems,” IEEE Int. Things J., 2017, doi: 10.1109/JIOT.2017.2679483. (to be published)
