Learning multi-kernel multi-view canonical correlations for image recognition

2016-07-19


Research Article


Yun-Hao Yuan 1,2, Yun Li 1, Jianjun Liu 2, Chao-Feng Li 2, Xiao-Bo Shen 3,4, Guoqing Zhang 3, and Quan-Sen Sun 3

© The Author(s) 2016. This article is published with open access at Springerlink.com

Abstract: In this paper, we propose a multi-kernel multi-view canonical correlations (M2CCs) framework for subspace learning. In the proposed framework, the input data of each original view are mapped into multiple higher dimensional feature spaces by multiple nonlinear mappings determined by different kernels. This enables M2CC to discover multiple kinds of useful information of each original view in the feature spaces. Within the framework, we further provide a specific multi-view feature learning method based on the direct summation kernel strategy, together with its regularized version. Experimental results in visual recognition tasks demonstrate the effectiveness and robustness of the proposed method.

Keywords: image recognition; canonical correlation; multiple kernel learning; multi-view data; feature learning

1 Department of Computer Science, College of Information Engineering, Yangzhou University, Yangzhou 225127, China. E-mail: Y.-H. Yuan, yyhzbh@163.com; Y. Li, liyun@yzu.edu.cn.

2 Department of Computer Science, Jiangnan University, Wuxi 214122, China. E-mail: J. Liu, liuofficial@163.com; C.-F. Li, wxlichaofeng@126.com.

3 School of Computer Science, Nanjing University of Science and Technology, Nanjing 210094, China. E-mail: X.-B. Shen, njust.shenxiaobo@gmail.com; G. Zhang, xiayang14551@163.com; Q.-S. Sun, sunquansen@njust.edu.cn.

4 School of Information Technology and Electrical Engineering, the University of Queensland, Brisbane QLD 4072, Australia.

Manuscript received: 2015-12-01; accepted: 2016-02-08

1 Introduction

Multi-view canonical correlation analysis (MCCA) [1, 2] is a powerful technique for finding the linear correlations among multiple (more than two) high dimensional random vectors. It has been applied to various real-world problems such as blind source separation [3], functional magnetic resonance imaging (fMRI) analysis [4, 5], remote sensing image analysis [6], and target recognition [7].

In recent years, generalizations of MCCA have attracted increasing attention and some impressive results have been obtained. Among all the extensions, an attractive direction is the nonlinear one. Bach and Jordan [8] proposed a kernel MCCA (KMCCA) method which minimizes the minimal eigenvalue of the correlation matrix of the projected univariate random variables. Later, Yu et al. [9] presented a weighted KMCCA (see footnote 1) to extract low-dimensional projections from heterogeneous datasets for data visualization and classification tasks. Recently, Rupnik and Shawe-Taylor [12] developed another KMCCA method directly based on the sum of correlations criterion [2], which can be regarded as a natural extension of kernel CCA (KCCA) [8, 13] and has been demonstrated to be effective in cross-lingual information retrieval.

Footnote 1: Although the authors refer to their method as weighted multiple kernel CCA, it is necessary to point out that the real meaning of "multiple kernel" there is the use of m kernel functions for all the m views, i.e., only one kernel for each view, rather than conventional multiple kernel learning as in the popular literature [10, 11].

However, in practice KMCCA faces two main issues. The first is how to select the types and parameters of the kernels for good performance. Although the choice of kernel types and parameters can usually be made by cross validation methods [14], these methods have expensive computational costs when handling a large number of kernel types and parameters. Second, KMCCA is essentially a single-kernel-based learning method, i.e., it uses only one kernel function for each view. As pointed out in Ref. [15], a single kernel can only characterize some but not all geometrical structures of the original data. Thus, KMCCA does not sufficiently exploit the geometrical information hidden in each view, and consequently it is not always applicable to data with complex multi-view structures.

Over the past few years, researchers have shown the necessity of considering multiple kernels rather than a single fixed kernel in practical applications; see, for example, Refs. [10, 11, 16, 17]. Multiple kernel learning (MKL), proposed by Lanckriet et al. [10] in the case of support vector machines (SVM), refers to the process of learning the optimal combination of multiple pre-specified kernel matrices. Using the idea of MKL, Kim et al. [18] proposed to learn an optimal kernel over a given convex set of kernels for discriminant analysis, while Yan et al. [19] presented a non-sparse multiple kernel Fisher discriminant analysis, which imposes a general $l_p$-norm regularization on the kernel weights. Lin et al. [20] generalized the framework of MKL for a set of manifold-based dimensionality reduction algorithms. These investigations have shown that learning performance can be significantly enhanced if multiple kernel functions or kernel matrices are considered.

This paper is an extended version of our previous work [21]. In contrast to that work, here we present a general multi-kernel multi-view canonical correlations (M2CCs) framework for joint image representation and recognition, and show the connections to other kernel learning-based canonical correlation methods. In the proposed framework, the input data of each view are mapped into multiple higher dimensional feature spaces by implicit nonlinear mappings determined by different kernels. This enables M2CC to uncover multiple kinds of characteristics of each original view in the feature spaces. Moreover, the M2CC framework can be employed as a general platform for developing new multi-view feature learning algorithms. Based on the M2CC framework, we present an example algorithm for multi-view learning, and further suggest its regularized version, which can avoid the singularity problem, prevent overfitting, and provide flexibility in real-world applications. In addition, more experiments are carried out to evaluate the effectiveness of the proposed method.

2 Kernel MCCA

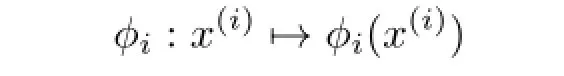

KMCCA [12,22]can not only be considered as a nonlinear variant of MCCA,but also a multiview extension of KCCA.Specifically,given m viewsfrom the same n images,where)represents a data matrix of the ith view containing pi-dimensional sample vectors in its columns,assume there is a nonlinear mapping for each view X(i),i.e.,

which implicitly projects the original data into a higher dimensional feature space Fi.Let

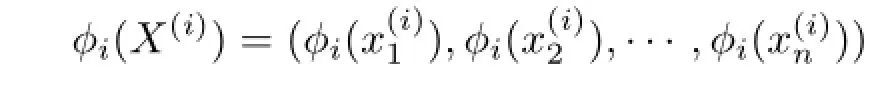

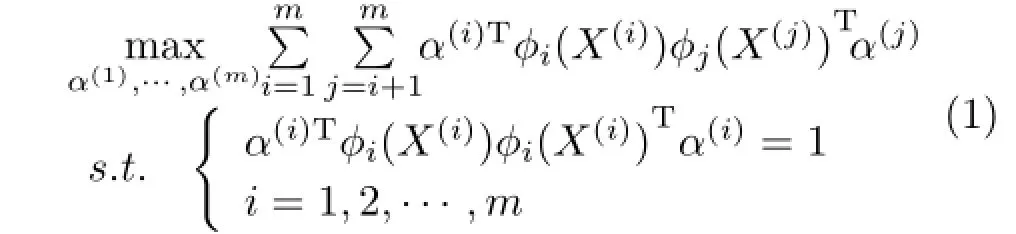

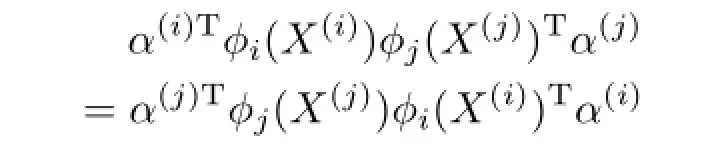

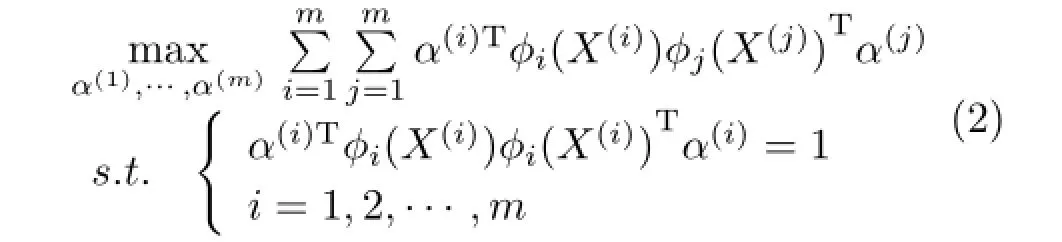

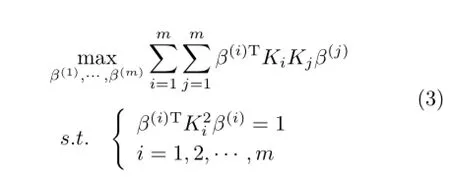

denotethetransformeddataoforiginal X(i).KMCCA aims to compute one set of projection vectors{α(i)∈Fi}mi=1by the following optimization problem: Note that we assume that every φi(X(i))in Eq.(1)has been centered,i.e.,)=0,i= 1,2,···,m.The details about the data centering process can be found in Ref.[23].
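As a minimal sketch of the centering step (it is not taken from the paper), centering in feature space can be carried out directly on a Gram matrix via $K_c = HKH$ with $H = I - \frac{1}{n}\mathbf{1}\mathbf{1}^T$, following the idea described in Ref. [23]. The function name `center_gram` and the toy data are illustrative assumptions; a linear kernel is used only because its centered Gram matrix can be checked against explicitly centered data.

```python
import numpy as np

def center_gram(K):
    """Center a Gram matrix in feature space: K_c = H K H, H = I - (1/n) 1 1^T."""
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    return H @ K @ H

# Toy check with a linear kernel (columns are samples, as in the text).
rng = np.random.default_rng(0)
X = rng.standard_normal((5, 8))          # p = 5 features, n = 8 samples
K = X.T @ X                              # uncentered linear Gram matrix
Kc = center_gram(K)

# For the linear kernel, centering the Gram matrix equals centering the data.
Xc = X - X.mean(axis=1, keepdims=True)
print(np.allclose(Kc, Xc.T @ Xc))
```

The same `center_gram` call applies to any kernel Gram matrix, since it never needs the explicit feature map.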

Taking advantage of the following two equations:

and

we can equivalently transform the optimization problem in Eq. (1) into the following:

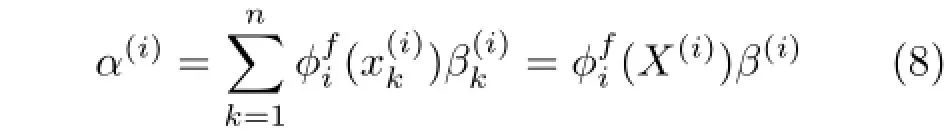

Let $\alpha^{(i)} = \phi_i(X^{(i)})\beta^{(i)}$ with $\beta^{(i)} \in \mathbb{R}^{n}$. By means of the kernel trick [8, 23], the problem in Eq. (2) can be reformulated as

where $K_i = \phi_i(X^{(i)})^T \phi_i(X^{(i)})$ is the kernel Gram matrix determined by a certain kernel function.

Using the Lagrange multiplier technique, we can solve the problem in Eq. (3) by the following multivariate eigenvalue problem (MEP) [24]:

3 Multi-kernel multi-view canonical correlations framework

In this section, we use the idea of MKL to build a multi-kernel multi-view canonical correlations (M2CCs) framework, where each set of original data is mapped into multiple high dimensional feature spaces.

3.1 Motivation

As discussed in Section 1, on the one hand, choosing appropriate kernel types and parameters for KMCCA to reach optimal performance in practical classification applications is very time-consuming. Also, KMCCA only employs one kernel function for each of the multiple views. Thus, in essence it is a single-kernel-based subspace learning method. This makes it more difficult for KMCCA to discover multiple kinds of geometrical structure information of each original view in the higher dimensional Hilbert space. On the other hand, many studies [15, 18-20] show that MKL can significantly improve the learning performance for classification tasks and has the capability of uncovering a variety of different geometrical structures of the original data. Moreover, MKL can also help kernel-based algorithms relax the selection of kernel types and parameters. Motivated by the advantages of MKL, we consider multiple kernel functions for each original view and propose a multi-kernel multi-view canonical correlations framework for multi-view feature learning, which can provide a unified formulation for a set of kernel canonical correlation methods. To the best of our knowledge, such an MKL framework of MCCA is novel.

3.2 Formulation

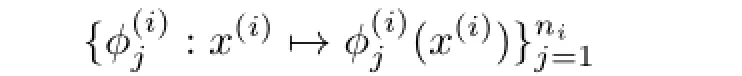

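Suppose m-view features from the same n images are given as $\{X^{(i)} \in \mathbb{R}^{p_i \times n}\}_{i=1}^{m}$, where $X^{(i)}$ denotes the data matrix of the ith view and $p_i$ denotes the dimensionality of the samples. For each view $X^{(i)}$, assume there are $n_i > 1$ nonlinear mappings: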

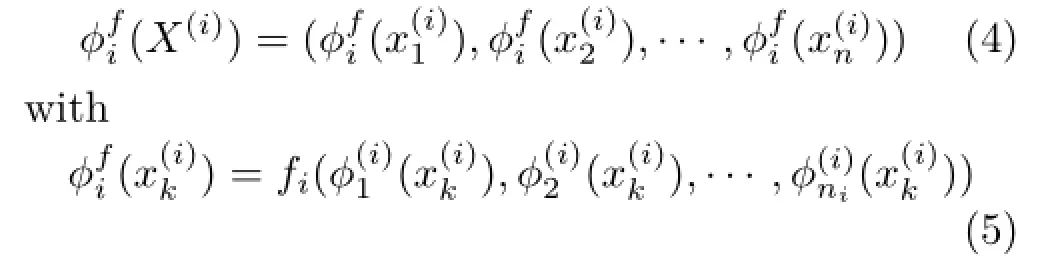

which implicitly map the original data $X^{(i)}$ into $n_i$ different higher dimensional feature spaces, respectively. Note that the number of nonlinear mappings, $n_i$, may be different for different views. Let us denote

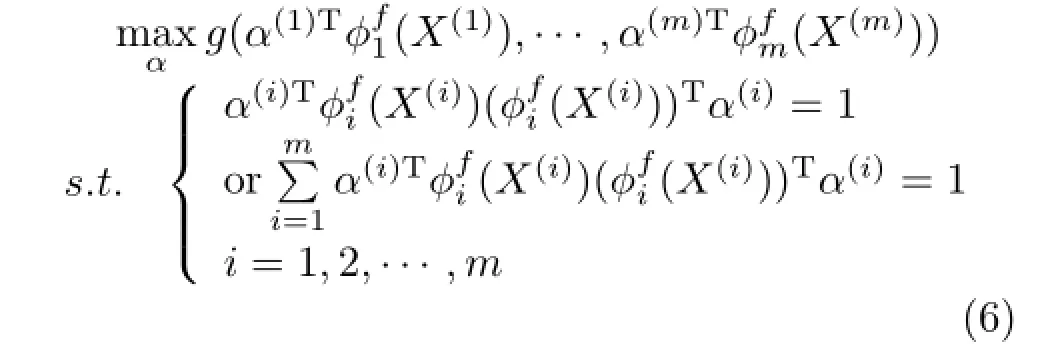

where $f_i(\cdot)$ is an ensemble function of nonlinear mappings, $i = 1, 2, \dots, m$ and $k = 1, 2, \dots, n$. Let $\alpha^{(i)}$ be the projection axis of $\phi_{f_i}(X^{(i)})$ in the feature space; then the M2CC framework can be defined as

where $g(\cdot)$ denotes a multi-view correlation criterion function among the projections $\alpha^{(i)T}\phi_{f_i}(X^{(i)})$, $i = 1, 2, \dots, m$. Note that we assume each $\phi_{f_i}(X^{(i)})$ has been centered.

As can be seen from Eq. (6), many classical kernel canonical correlation methods can be subsumed into the M2CC framework by defining different multi-view correlation criteria and ensemble mappings $f_i(\cdot)$, if we impose that the number of nonlinear mappings in each view is equal to one, i.e., $n_1 = n_2 = \dots = n_m = 1$. For example:

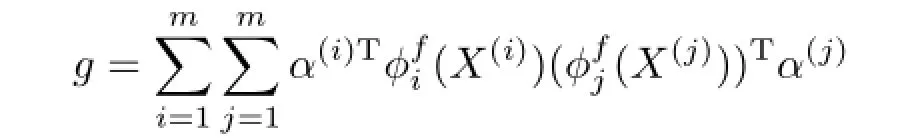

• Reduce to KMCCA. When the multi-view correlation criterion function g is defined as the sum of correlations between every pair of views, M2CC becomes KMCCA.

• Reduce to KCCA. When m = 2 and the multi-view correlation criterion g is defined as the correlation between the two views (i = 1, 2), M2CC becomes KCCA.

As a result, one can design new multi-view data learning algorithms by defining different multi-view correlation criterion functions and ensemble mappings $f_i(\cdot)$.

4 Example algorithm: direct sum based M2CC

In this section, we give a specific multi-view learning algorithm, where all nonlinear mappings for each view share the same weight. We also present its regularized version, which can prevent overfitting and avoid the singularity of the matrix.
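To make the equal-weight idea concrete, the following sketch checks a standard fact: if the feature maps of several kernels are stacked into one long vector with equal weights, the resulting Gram matrix is the plain sum of the individual Gram matrices. That the paper's ensemble mapping has exactly this stacked form is an assumption here, and the explicit feature maps (`phi_linear`, `phi_quadratic`) are illustrative choices that happen to be available in closed form.

```python
import numpy as np

def phi_linear(X):
    """Explicit feature map of the linear kernel (identity); columns are samples."""
    return X

def phi_quadratic(X):
    """Explicit feature map of the homogeneous quadratic kernel (x^T y)^2:
    all pairwise products x_a * x_b, stacked into a p*p vector per sample."""
    p, n = X.shape
    return np.einsum('an,bn->abn', X, X).reshape(p * p, n)

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 6))                  # one view: p = 3, n = 6

K_lin = X.T @ X                                  # linear Gram matrix
K_quad = (X.T @ X) ** 2                          # quadratic Gram matrix

# Stack both feature maps with equal weight and form the Gram matrix.
Phi = np.vstack([phi_linear(X), phi_quadratic(X)])
K_stacked = Phi.T @ Phi

print(np.allclose(K_stacked, K_lin + K_quad))
```

In practice the stacked map is never formed; only the summed Gram matrix is needed, which is what makes the direct summation strategy cheap.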

4.1 Model

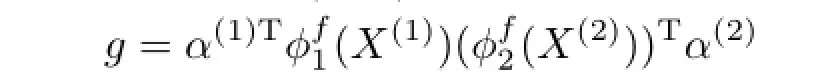

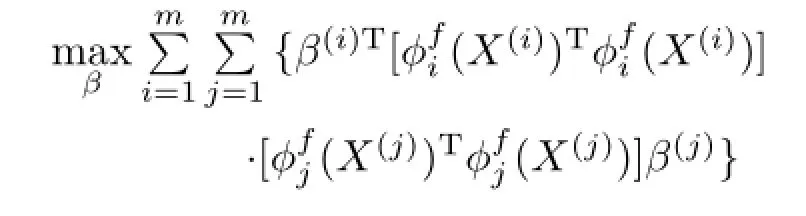

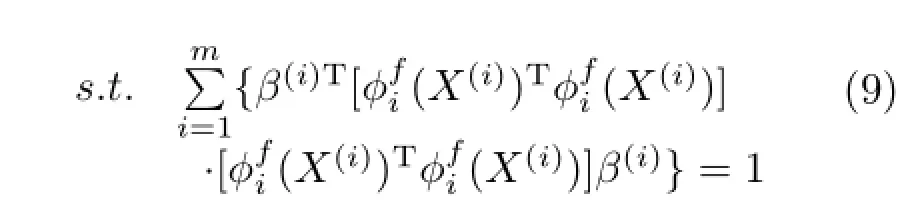

Following the idea of the sum of correlations [12, 22], our direct summation based M2CC model can be defined as

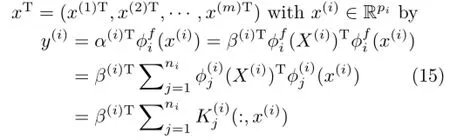

Using the dual representation theorem, we have

where $\beta^T = (\beta^{(1)T}, \beta^{(2)T}, \dots, \beta^{(m)T}) \in \mathbb{R}^{mn}$.

As we can see, different ensemble mappings $f_i(\cdot)$ in Eq. (9) will result in different models. Thus, in this paper we define these ensemble mappings as

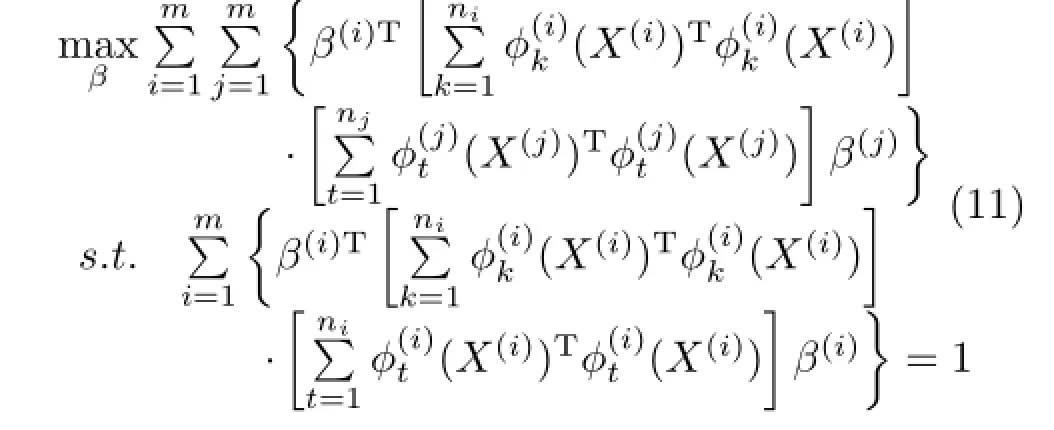

$i = 1, 2, \dots, m$. According to Eq. (10), the optimization problem in Eq. (9) can be further converted as

4.2 Algorithmic derivation

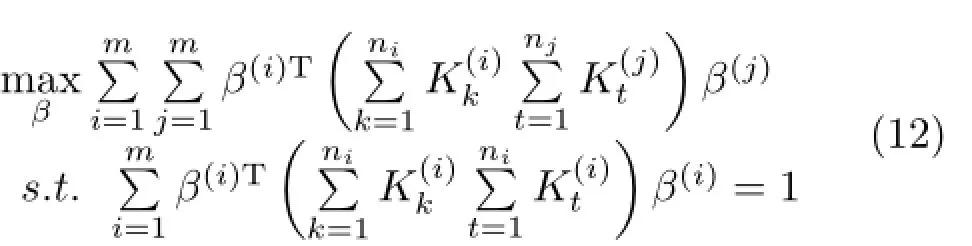

To solve the optimization problem in Eq. (11), we use the kernel trick [21], where $K_k^{(i)}$ denotes the kernel matrix corresponding to the kth nonlinear mapping in the ith view, and $k = 1, 2, \dots, n_i$. Now, the problem in Eq. (11) can be formulated equivalently as

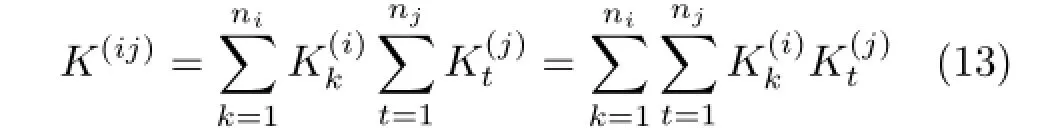

Let us denote

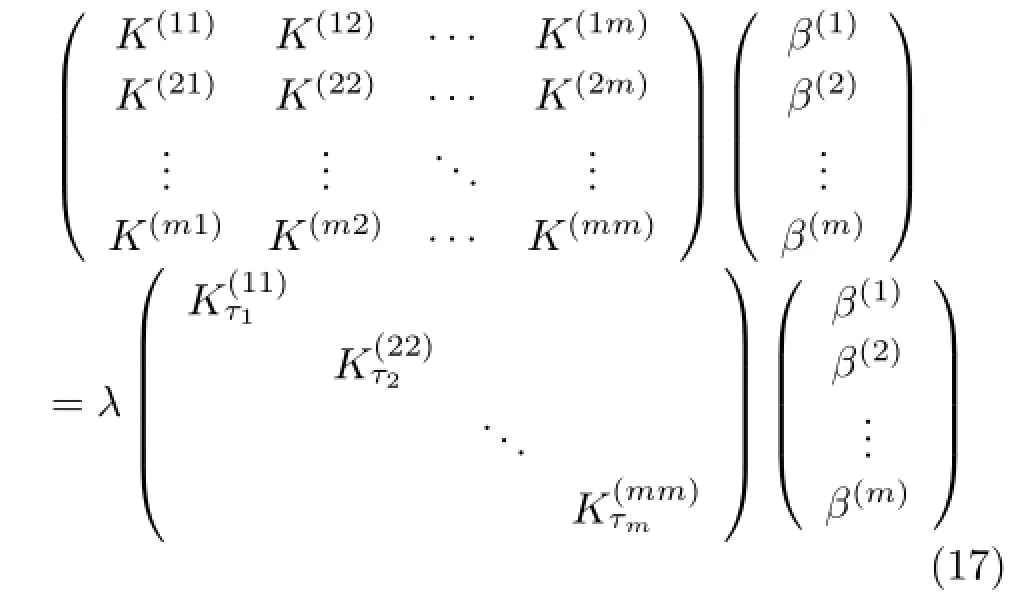

By the Lagrange multiplier technique, we can solve the problem in Eq. (12) by the following generalized eigenvalue problem:

It is clear that the objective function in Eq. (12) can be maximized directly by calculating the eigenvectors of the eigen-equation (14). Thus, we choose a set of eigenvectors corresponding to the first d largest eigenvalues as the dual solution vectors of our method. Once the dual solution vectors are obtained, we can perform multi-view feature extraction for a given multi-view observation, using for the jth kernel function in the ith view an n-dimensional column vector of kernel values, $i = 1, 2, \dots, m$.
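The feature extraction step can be sketched as follows, under the assumption that the projection of a new sample in view i is obtained by applying the dual vectors to the sum of its n-dimensional kernel columns (one column per kernel). The helper name `extract_view_feature` is hypothetical, and how the per-view projections are finally combined into one feature vector is not specified here. The check compares the dual computation with the explicit one for two kernels whose feature maps are known in closed form.

```python
import numpy as np

def extract_view_feature(beta_i, kernel_columns):
    """Project one new sample of view i.

    beta_i: (n, d) dual vectors for view i (hypothetical layout).
    kernel_columns: list of (n,) arrays; entry j holds the values of the
    jth kernel between the n training samples and the new sample.
    """
    k_sum = np.sum(kernel_columns, axis=0)       # direct sum of kernel columns
    return beta_i.T @ k_sum                      # d-dimensional projection

rng = np.random.default_rng(0)
p, n, d = 3, 6, 2
X = rng.standard_normal((p, n))                  # training data of one view
x_new = rng.standard_normal(p)                   # new observation
beta = rng.standard_normal((n, d))               # stand-in dual vectors

k_lin = X.T @ x_new                              # linear kernel column
k_quad = (X.T @ x_new) ** 2                      # homogeneous quadratic kernel column
z = extract_view_feature(beta, [k_lin, k_quad])

# Same projection computed explicitly in the stacked feature space.
Phi = np.vstack([X, np.einsum('an,bn->abn', X, X).reshape(p * p, n)])
phi_new = np.concatenate([x_new, np.outer(x_new, x_new).ravel()])
print(np.allclose(z, (Phi @ beta).T @ phi_new))
```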

4.3 Regularization

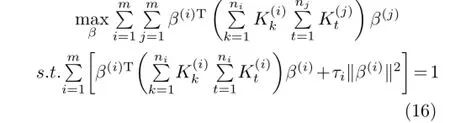

In real-world applications, it is possible that the matrix $\mathrm{diag}(K^{(11)}, K^{(22)}, \dots, K^{(mm)})$ in Eq. (14) is singular. In such a case, the classical algorithm cannot be used directly to solve the generalized eigenvalue problem. Thus, to avoid the singularity and prevent overfitting, we need to build a regularized version, which is the following:

where the additional parameters are the regularization parameters and $\|\cdot\|$ denotes the 2-norm of vectors.

Following the same approach as in Section 4.2, we have

If the singularity/overfitting problem occurs, or some applications need to control the flexibility of the proposed method, we can utilize Eq. (17) instead of Eq. (14) to calculate the dual vectors.
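The following is a rough sketch of how such a regularized generalized eigenvalue problem might be solved numerically. It is one plausible reading, not the paper's exact Eq. (17): with $G_i$ the summed Gram matrix of view i, it assumes the left-hand matrix has blocks $G_i G_j$, the right-hand matrix is block diagonal with blocks $G_i^2 + \tau_i G_i$ (the penalty $\tau_i\|\alpha^{(i)}\|^2$ written in dual form), and it adds a small `jitter` on the diagonal purely for numerical stability. Function names, `taus`, `jitter`, and the toy data are all assumptions, and Gram centering is omitted for brevity.

```python
import numpy as np

def build_blocks(kernel_lists, taus, jitter=1e-8):
    """Return A (blocks G_i G_j) and block-diagonal B (G_i^2 + tau_i G_i + jitter I).

    kernel_lists[i] is a list of (n, n) Gram matrices of view i; G_i is their sum.
    """
    G = [sum(ks) for ks in kernel_lists]
    m, n = len(G), G[0].shape[0]
    A = np.block([[G[i] @ G[j] for j in range(m)] for i in range(m)])
    B = np.zeros((m * n, m * n))
    for i in range(m):
        sl = slice(i * n, (i + 1) * n)
        B[sl, sl] = G[i] @ G[i] + taus[i] * G[i] + jitter * np.eye(n)
    return A, B

def top_generalized_eigvecs(A, B, d):
    """Top-d solutions of A b = lam B b (B positive definite) via Cholesky reduction."""
    L = np.linalg.cholesky(B)                          # B = L L^T
    M = np.linalg.solve(L, np.linalg.solve(L, A).T).T  # L^{-1} A L^{-T}
    M = (M + M.T) / 2.0                                # remove rounding asymmetry
    w, V = np.linalg.eigh(M)
    order = np.argsort(w)[::-1][:d]
    return w[order], np.linalg.solve(L.T, V[:, order]) # map back: b = L^{-T} v

def rbf_gram(X):
    """RBF Gram matrix with sigma set to the mean pairwise distance (columns = samples)."""
    sq = np.sum(X ** 2, axis=0)
    D2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    sigma = np.sqrt(D2[np.triu_indices(X.shape[1], k=1)]).mean()
    return np.exp(-D2 / (2.0 * sigma ** 2))

rng = np.random.default_rng(0)
m, n, d = 3, 8, 2
views = [rng.standard_normal((4, n)) for _ in range(m)]
kernel_lists = [[X.T @ X, rbf_gram(X)] for X in views]  # linear + RBF per view

A, B = build_blocks(kernel_lists, taus=[1.0] * m)
eigvals, betas = top_generalized_eigvecs(A, B, d)
print(eigvals.shape, betas.shape)
```

Rows `i*n:(i+1)*n` of `betas` hold the dual vectors of view i. The Cholesky reduction is used only to stay within NumPy; a dedicated generalized symmetric eigensolver would serve equally well.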

5 Experiments

In this section, we perform two face recognition experiments to test the performance of our proposed method using the famous AT&T (http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html) and Yale databases. Moreover, we compare the proposed method with kernel PCA (KPCA) and KMCCA to demonstrate its effectiveness. In all the experiments, nearest neighbor (NN) classifiers with Euclidean distance and cosine distance metrics are used for the recognition tasks.
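A nearest neighbor classifier with the two distance metrics can be sketched as below. The function name `nn_classify`, the column-per-sample layout, and the toy vectors are illustrative assumptions; the toy example is chosen so that the two metrics disagree, which happens when training vectors have very different norms.

```python
import numpy as np

def nn_classify(train, train_labels, test, metric="euclidean"):
    """1-NN classification; columns of `train` and `test` are feature vectors."""
    if metric == "euclidean":
        # Squared Euclidean distances (same ordering as true distances).
        dist = (np.sum(train ** 2, axis=0)[:, None]
                + np.sum(test ** 2, axis=0)[None, :]
                - 2.0 * train.T @ test)
    elif metric == "cosine":
        tn = train / np.maximum(np.linalg.norm(train, axis=0, keepdims=True), 1e-12)
        sn = test / np.maximum(np.linalg.norm(test, axis=0, keepdims=True), 1e-12)
        dist = 1.0 - tn.T @ sn                    # cosine distance
    else:
        raise ValueError("metric must be 'euclidean' or 'cosine'")
    return np.asarray(train_labels)[np.argmin(dist, axis=0)]

# Two training vectors with very different norms, one test vector.
train = np.array([[10.0, 0.0],
                  [0.0, 1.0]])                    # columns: (10, 0) and (0, 1)
labels = np.array(["a", "b"])
test = np.array([[1.0],
                 [0.5]])                          # column: (1, 0.5)

pred_euclid = nn_classify(train, labels, test, "euclidean")
pred_cosine = nn_classify(train, labels, test, "cosine")
print(pred_euclid, pred_cosine)
```

Here the Euclidean rule picks the short vector that is close in position, while the cosine rule picks the long vector that is close in direction.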

5.1 Candidate kernels

In our experiments, we adopt three views in total from the same face images and we use three kinds of kernel functions for the ith view in our proposed method, as follows:

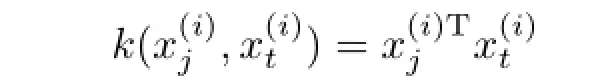

• Linear kernel

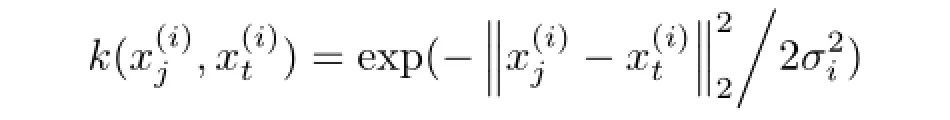

• Radial basis function (RBF) kernel, where $\sigma_i$ is set to the average value of all the $l_2$-norm distances between samples, as used in Ref. [15];

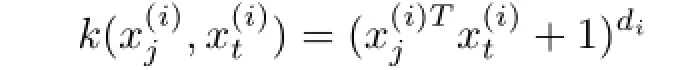

• Polynomial kernel

where $d_i$ is set to $i + 1$, $i = 1, 2, 3$.
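The three candidate kernels might be computed as in the sketch below. The exact kernel formulas are not recoverable from the text above, so the common forms are assumed: $x^T y$ for the linear kernel, $\exp(-\|x - y\|^2 / (2\sigma^2))$ for the RBF kernel, and $(x^T y + 1)^d$ for the polynomial kernel. All function names are illustrative.

```python
import numpy as np

def pairwise_sq_dists(X, Y):
    """Squared Euclidean distances between columns of X and columns of Y."""
    sx = np.sum(X ** 2, axis=0)[:, None]
    sy = np.sum(Y ** 2, axis=0)[None, :]
    return np.maximum(sx + sy - 2.0 * X.T @ Y, 0.0)

def linear_kernel(X, Y):
    return X.T @ Y

def rbf_kernel(X, Y, sigma):
    # Assumed form: exp(-||x - y||^2 / (2 sigma^2)).
    return np.exp(-pairwise_sq_dists(X, Y) / (2.0 * sigma ** 2))

def poly_kernel(X, Y, degree):
    # Assumed form: (x^T y + 1)^degree.
    return (X.T @ Y + 1.0) ** degree

def mean_pairwise_distance(X):
    """Average l2 distance over all distinct pairs of columns of X."""
    D = np.sqrt(pairwise_sq_dists(X, X))
    return D[np.triu_indices(X.shape[1], k=1)].mean()

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 7))                  # view i: p = 5, n = 7
i = 1                                            # view index, so degree d_i = i + 1
sigma = mean_pairwise_distance(X)

K_lin = linear_kernel(X, X)
K_rbf = rbf_kernel(X, X, sigma)
K_poly = poly_kernel(X, X, degree=i + 1)
print(K_lin.shape, K_rbf.shape, K_poly.shape)
```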

In KMCCA, we use the above three kinds of kernels with the same parameters, i.e., a linear kernel for the first view, an RBF kernel for the second, and a polynomial kernel for the last.

In addition, for a fair comparison with KMCCA and our proposed method, we perform KPCA by first stacking the three views together into a single view and then using one of the above-described kernels.

5.2 Compared methods

To demonstrate how the recognition performance can be improved by our method, we compare the following nine methods:

• KPCA-Lin, which uses a linear kernel.

• KPCA-RBF, which uses an RBF kernel.

• KPCA_PolA, which uses a polynomial kernel with order A, where A takes 2, 3, and 4, respectively.

• KMCCA_PolA, where one of the three views uses the polynomial kernel with order A, and A takes 2, 3, and 4, respectively.

• Our method, which is the new one proposed in this paper.

5.3 Experiment on the AT&T database

The AT&T database contains 400 face images from 40 persons. There are 10 grayscale images per person with a resolution of 92×112. For some persons, the images were taken at different times. The lighting, facial expressions, and facial details also vary. The images were taken with a tolerance for tilting and rotation of the face of up to 20 degrees, and have some variation in scale of up to about 10%. Ten images of one person are shown in Fig. 1.

In this experiment, we employ the same preprocessing technique as used in Refs. [25-27] to obtain three-view data. That is, we first perform Coiflets, Daubechies, and Symlets orthonormal wavelet transforms to obtain three sets of low-frequency sub-images (i.e., three views) from the original face images, respectively. Then, the K-L transform is employed to reduce the dimensionality of each view to 150. The final three views, each with 150 dimensions, are used in our experiment.
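The dimensionality reduction step can be illustrated with a small sketch of the K-L transform, implemented here as PCA through a singular value decomposition of the centered data. The wavelet stage is left out, the function name `kl_transform` is hypothetical, and the toy sizes stand in for the real sub-image dimensions and the target dimension of 150.

```python
import numpy as np

def kl_transform(X, k):
    """K-L transform (PCA) of column-sample data X (p x n) onto k components.

    Returns the projection basis W (p x k), the sample mean (p x 1),
    and the reduced data Z (k x n).
    """
    mean = X.mean(axis=1, keepdims=True)
    Xc = X - mean
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)  # left singular vectors = principal axes
    W = U[:, :k]
    return W, mean, W.T @ Xc

rng = np.random.default_rng(0)
X = rng.standard_normal((10, 20))                # toy view: p = 10, n = 20
W, mean, Z = kl_transform(X, k=3)

# A new sample is reduced with the training mean and basis.
x_new = rng.standard_normal((10, 1))
z_new = W.T @ (x_new - mean)
print(Z.shape, z_new.shape)
```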

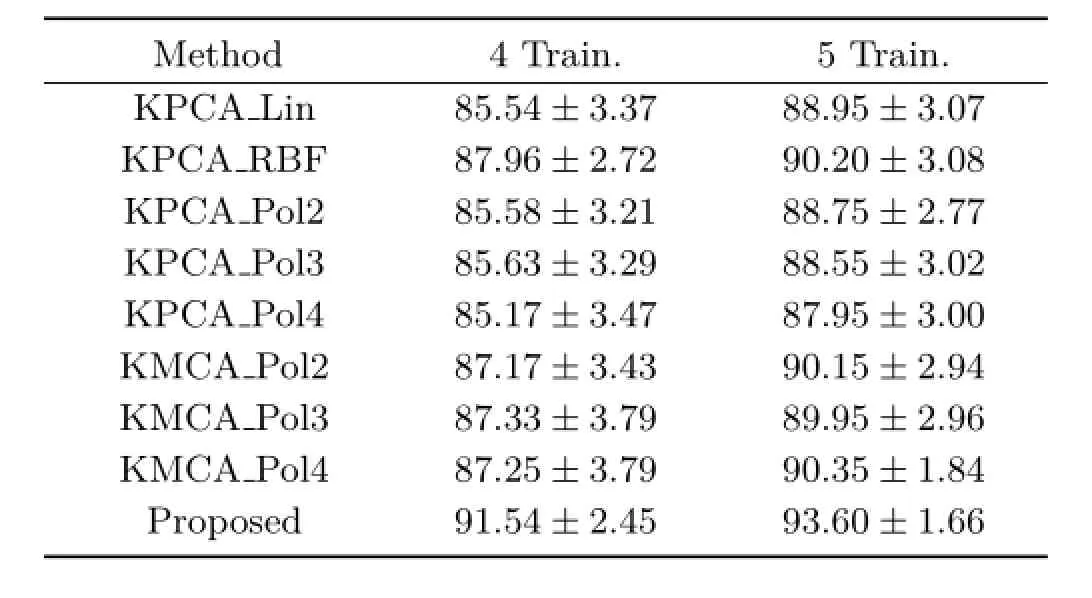

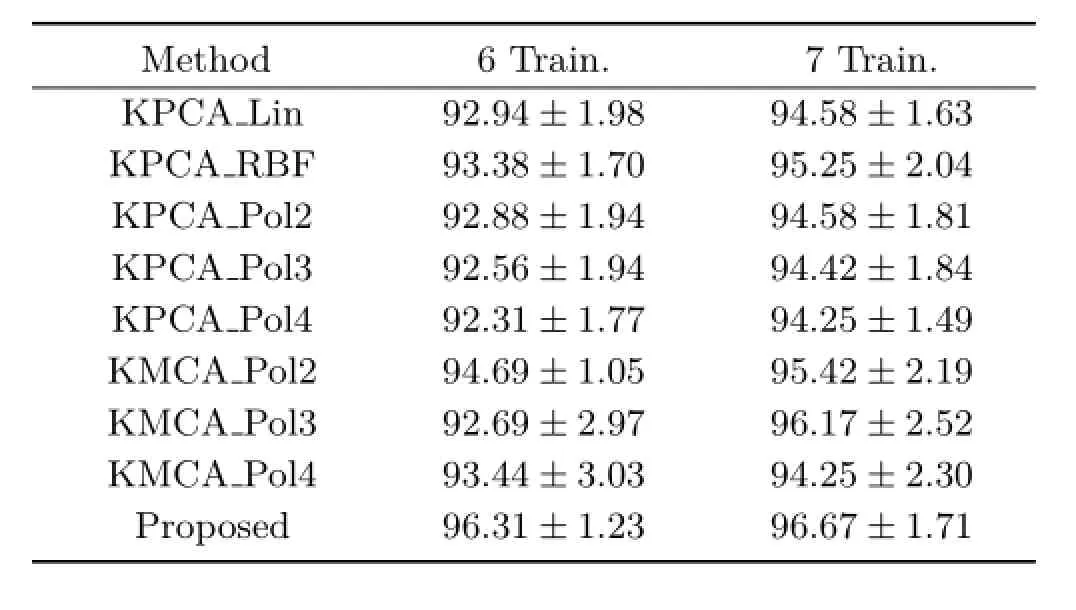

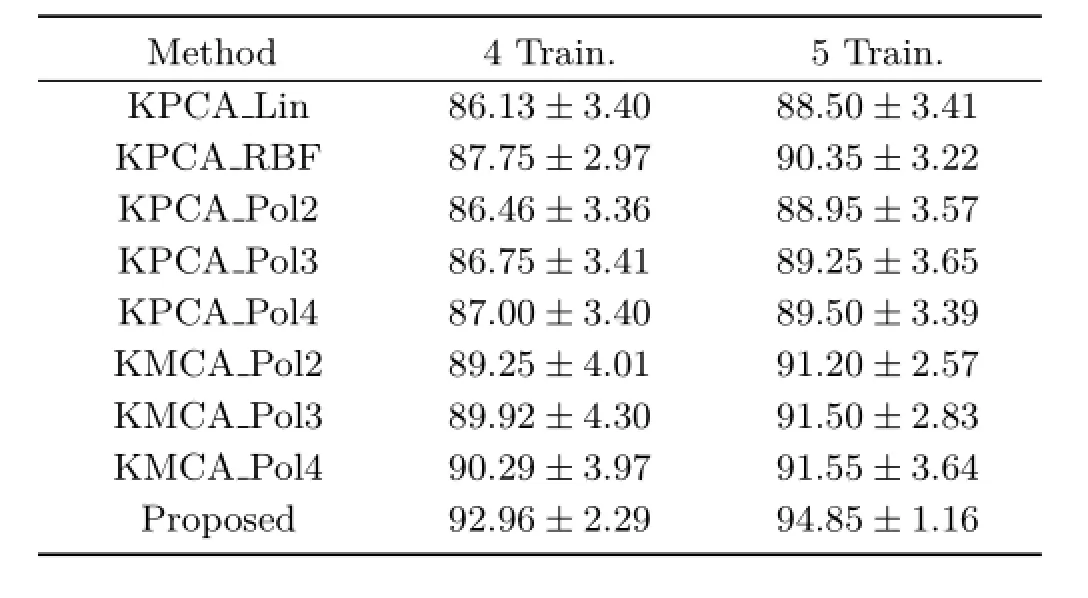

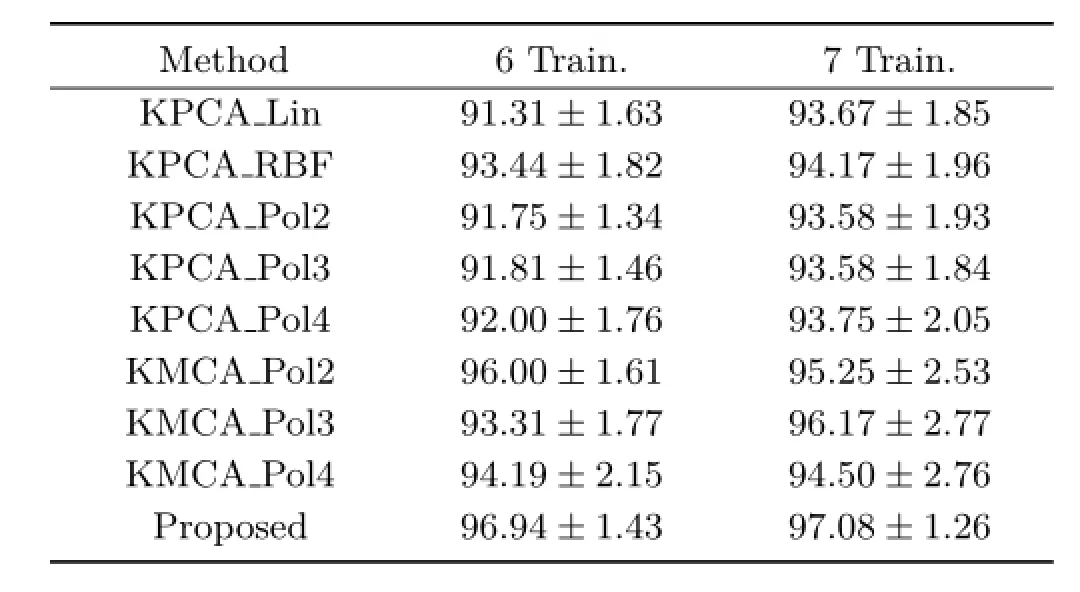

In this experiment, N images (N = 4, 5, 6, and 7) per person are randomly chosen for training, while the remaining 10−N images are used for testing. For each N, we perform 10 independent recognition tests to evaluate the performance of KPCA, KMCCA, and our method. Tables 1-4 show the average recognition rates of each method under NN classifiers with Euclidean distance and cosine distance, together with the corresponding standard deviations.
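The random split protocol can be sketched as follows. The helper name `split_per_person` and the seed are assumptions; the label layout simply mirrors the 40 persons with 10 images each described above, and no recognition rates are computed here.

```python
import numpy as np

def split_per_person(labels, n_train, rng):
    """Randomly pick n_train samples per person for training; the rest are for testing."""
    labels = np.asarray(labels)
    train_idx, test_idx = [], []
    for person in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == person))
        train_idx.extend(idx[:n_train])
        test_idx.extend(idx[n_train:])
    return np.array(train_idx), np.array(test_idx)

labels = np.repeat(np.arange(40), 10)            # 40 persons x 10 images
rng = np.random.default_rng(0)

# Ten independent random splits for N = 4 training images per person.
splits = [split_per_person(labels, 4, rng) for _ in range(10)]
train_idx, test_idx = splits[0]
print(len(train_idx), len(test_idx))
```

Each of the ten splits would then be fed to the feature extractor and the NN classifier, and the mean and standard deviation of the ten recognition rates reported.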

Fig.1 Ten face images of a person in the AT&T database.

From Tables 1-4, we can see that our proposed method outperforms KMCCA and the baseline algorithm KPCA, no matter how many training samples per person are used. In particular, the fewer the training samples, the larger the improvement of our method over the other methods. On the whole, KMCCA achieves better recognition results than KPCA. Moreover, KPCA with the RBF kernel performs better than with the linear and polynomial kernels.

Table 1 Average recognition rates (%) with 4 and 5 training samples under Euclidean distance on the AT&T database and standard deviations

Table 2 Average recognition rates (%) with 6 and 7 training samples under Euclidean distance on the AT&T database and standard deviations

Table 3 Average recognition rates (%) with 4 and 5 training samples under cosine distance on the AT&T database and standard deviations

Table 4 Average recognition rates (%) with 6 and 7 training samples under cosine distance on the AT&T database and standard deviations

5.4 Experiment on the Yale database

The Yale database [28] contains 165 grayscale images of 15 persons. Each person has 11 images with different facial expressions and lighting conditions, i.e., center-light, with glasses, happy, left-light, without glasses, normal, right-light, sad, sleepy, surprised, and wink. Each image is cropped and resized to 100×80 pixels. Figure 2 shows eleven images of one person.

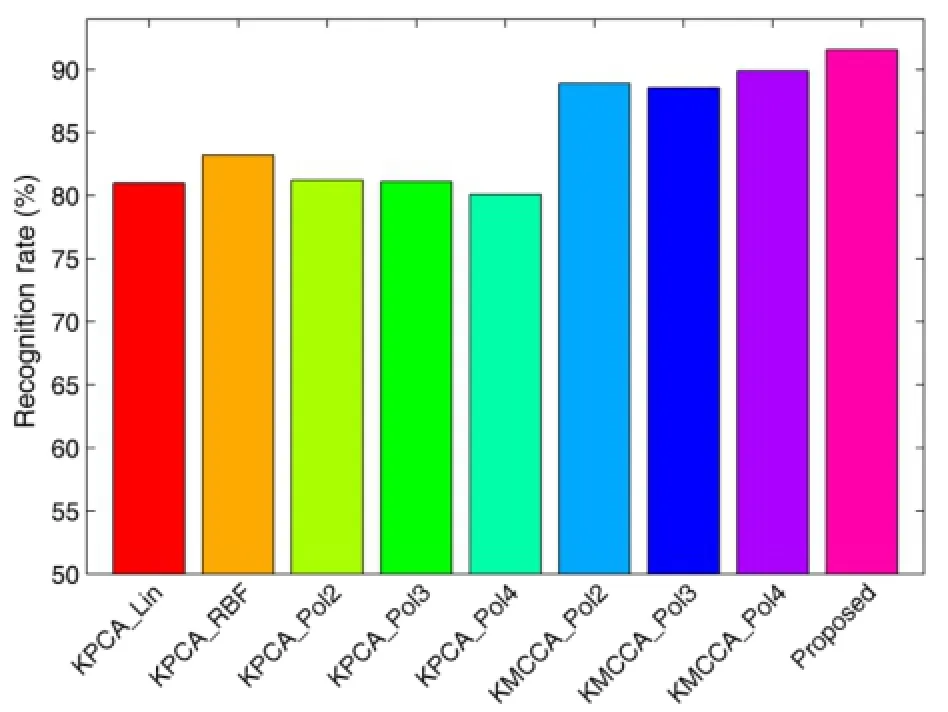

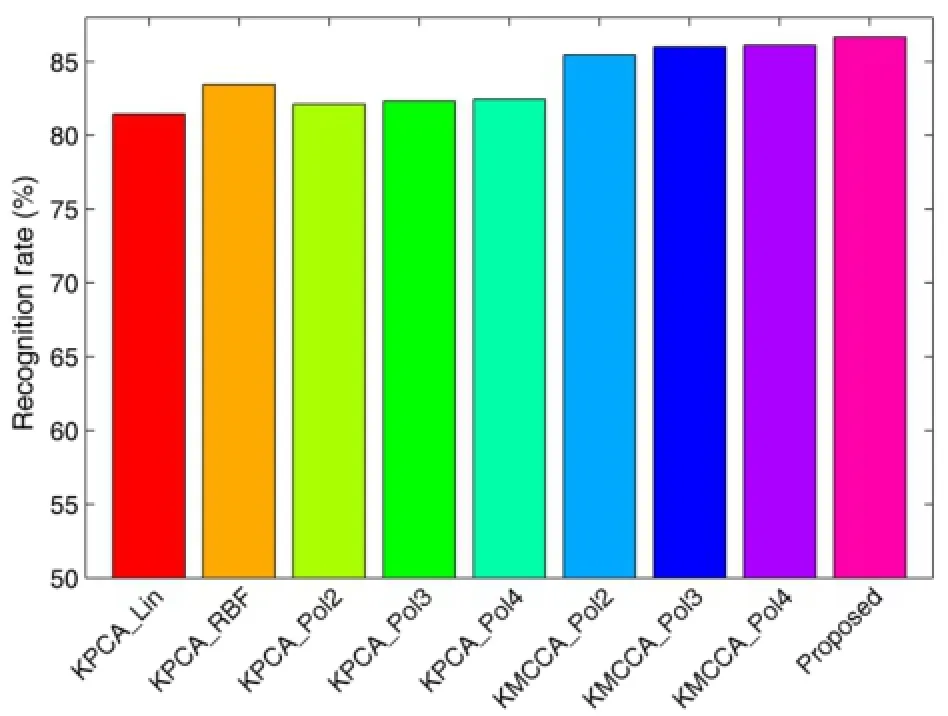

In this experiment, the Coiflets, Daubechies, and Symlets wavelet transforms are again performed on the original face images to form three-view data. The dimension of each view is then reduced to 75 by the K-L transform. For each person, five images are randomly selected for training, and the remaining six images are used for testing. Thus, the total numbers of training and testing samples are 75 and 90, respectively. Ten-run tests are performed to examine the recognition performance of each method. Figures 3 and 4 show the average recognition results of each method under the Euclidean and cosine NN classifiers. As can be seen from Figs. 3 and 4, our proposed method is superior to KPCA and KMCCA. KPCA performs the worst, and the RBF kernel is again more effective than the other kernels in KPCA. These conclusions are overall consistent with those drawn in Section 5.3.

Fig.2 Eleven images of a person in the Yale face database.

Fig.3 Average recognition rates with Euclidean distance on the Yale database.

Fig.4 Average recognition rates with cosine distance on the Yale database.

6 Conclusions

In this paper, we have proposed an M2CC framework for multi-view image recognition. The central idea of M2CC is to map each of multiple views to multiple higher dimensional feature spaces by multiple nonlinear mappings determined by different kernels. This enables M2CC to discover multiple kinds of useful information of each original view in the feature spaces. In addition, the M2CC framework can be used as a general platform for developing new algorithms related to MKL as well as MCCA. Within this framework, we have proposed a new specific multi-view feature learning algorithm in which all the nonlinear mappings for each view are treated equally. Two face recognition experiments demonstrate the effectiveness of our method.

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grant Nos. 61402203, 61273251, and 61170120, the Fundamental Research Funds for the Central Universities under Grant No. JUSRP11458, and the Program for New Century Excellent Talents in University under Grant No. NCET-12-0881.

References

[1]Horst,P.Relations among m sets of measures. Psychometrika Vol.26,No.2,129-149,1961.

[2]Kettenring,J.R.Canonical analysis of several sets of variables.Biometrika Vol.58,No.3,433-451,1971.

[3]Li,Y.O.;Adali,T.;Wang,W.;Calhoun,V.D. Joint blind source separation by multiset canonical correlation analysis.IEEE Transactions on Signal Processing Vol.57,No.10,3918-3929,2009.

[4]Correa,N.M.;Eichele,T.;Adalı,T.;Li,Y.-O.;Calhoun,V.D.Multi-set canonical correlation analysis for the fusion of concurrent single trial ERP and functional MRI.NeuroImage Vol.50,No.4,1438-1445,2010.

[5]Li,Y.-O.;Eichele,T.;Calhoun,V.D.;Adali,T.Group study of simulated driving fMRI data by multiset canonical correlation analysis.Journal of Signal Processing Systems Vol.68,No.1,31-48,2012.

[6]Nielsen,A.A.Multiset canonical correlations analysis and multispectral,truly multitemporal remote sensing data.IEEE Transactions on Image Processing Vol.11,No.3,293-305,2002.

[7]Thompson,B.;Cartmill,J.;Azimi-Sadjadi,M.R.;Schock,S.G.A multichannel canonical correlation analysis feature extraction with application to buried underwater target classification.In:Proceedings of International Joint Conference on Neural Networks,4413-4420,2006.

[8]Bach,F.R.;Jordan,M.I.Kernel independent component analysis.The Journal of Machine Learning Research Vol.3,1-48,2003.

[9]Yu,S.;De Moor,B.;Moreau,Y.Learning with heterogenous data sets by weighted multiple kernel canonical correlation analysis.In:Proceedings of IEEE Workshop on Machine Learning for Signal Processing,81-86,2007.

[10]Lanckriet,G.R.G.;Cristianini,N.;Bartlett,P.;Ghaoui,L.E.;Jordan,M.I.Learning the kernel matrix with semidefinite programming.The Journal of Machine Learning Research Vol.5,27-72,2004.

[11]Sonnenburg,S.;Rätsch,G.;Schäfer,C.;Schölkopf,B.Large scale multiple kernel learning.The Journal of Machine Learning Research Vol.7,1531-1565,2006.

[12]Rupnik,J.;Shawe-Taylor,J.Multi-view canonical correlation analysis.In:Proceedings of Conference on Data Mining and Data Warehouses,2010.Available at http://ailab.ijs.si/dunja/SiKDD2010/Papers/Rupnik Final.pdf.

[13]Hardoon,D.R.;Szedmak,S.R.;Shawe-Taylor,J.R.Canonical correlation analysis:An overview with application to learning methods.Neural Computation Vol.16,No.12,2639-2664,2004.

[14]Chapelle,O.;Vapnik,V.;Bousquet,O.;Mukherjee,S.Choosing multiple parameters for support vector machines.Machine Learning Vol.46,Nos.1-3,131-159,2002.

[15]Wang,Z.;Chen,S.;Sun,T.MultiK-MHKS:A novel multiple kernel learning algorithm.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.30,No.2,348-353,2008.

[16]Rakotomamonjy,A.;Bach,F.;Canu,S.;Grandvalet,Y.More efficiency in multiple kernel learning.In: Proceedings of the 24th International Conference on Machine Learning,775-782,2007.

[17]Xu,X.;Tsang,I.W.;Xu,D.Soft margin multiple kernel learning.IEEE Transactions on Neural Networks and Learning Systems Vol.24,No.5,749-761,2013.

[18]Kim,S.-J.;Magnani,A.;Boyd,S.Optimal kernel selection in kernel fisher discriminant analysis.In: Proceedings of the 23rd International Conference on Machine Learning,465-472,2006.

[19]Yan,F.;Kittler,J.;Mikolajczyk,K.;Tahir,A.Non-sparse multiple kernel fisher discriminant analysis.The Journal of Machine Learning Research Vol.13,No.1,607-642,2012.

[20]Lin,Y.Y.;Liu,T.L.;Fuh,C.S.Multiple kernel learning for dimensionality reduction.IEEE Transactions on Pattern Analysis and Machine Intelligence Vol.33,No.6,1147-1160,2011.

[21]Yuan,Y.-H.;Shen,X.-B.;Xiao,Z.-Y.;Yang,J.-L.;Ge,H.-W.;Sun,Q.-S.Multiview correlation feature learning with multiple kernels.In:Lecture Notes in Computer Science,Vol.9243.He,X.;Gao,X.;Zhang,Y.et al.Eds.Springer International Publishing,518-528,2015.

[22]Kan,M.;Shan,S.;Zhang,H.;Lao,S.;Chen,X.Multi-view discriminant analysis.In:Lecture Notes in Computer Science,Vol.7572.Fitzgibbon,A.;Lazebnik,S.;Perona,P.;Sato,Y.;Schmid,C.Eds.Springer Berlin Heidelberg,808-821,2012.

[23]Schölkopf,B.;Smola,A.;Müller,K.-R.Nonlinear component analysis as a kernel eigenvalue problem.Neural Computation Vol.10,No.5,1299-1319,1998.

[24]Chu,M.T.;Watterson,J.L.On a multivariate eigenvalue problem,part I:Algebraic theory and a power method.SIAM Journal on Scientific Computing Vol.14,No.5,1089-1106,1993.

[25]Yuan,Y.-H.;Sun,Q.-S.Fractional-order embedding multiset canonical correlations with applications to multi-feature fusion and recognition.Neurocomputing Vol.122,229-238,2013.

[26]Yuan,Y.-H.;Sun,Q.-S.Graph regularized multiset canonical correlations with applications to joint feature extraction.Pattern Recognition Vol.47,No.12,3907-3919,2014.

[27]Yuan,Y.-H.;Sun,Q.-S.Multiset canonical correlations using globality preserving projections with applications to feature extraction and recognition.IEEE Transactions on Neural Networks and Learning Systems Vol.25,No.6,1131-1146,2014.

[28]Dai,D.Q.;Yuen,P.C.Face recognition by regularized discriminant analysis.IEEE Transactions on Systems,Man,and Cybernetics,Part B(Cybernetics)Vol.37,No.4,1080-1085,2007.

Yun-Hao Yuan received his M.Sc. degree in computer science and technology from Yangzhou University (YZU), China, in 2009, and Ph.D. degree in pattern recognition and intelligence system from Nanjing University of Science and Technology (NUST), China, in 2013. He received two National Scholarships from the Ministry of Education, China, an Outstanding Ph.D. Thesis Award, and two Top-class Scholarships from NUST. He was with the Department of Computer Science and Technology, Jiangnan University, China, from 2013 to 2015, as an associate professor. He is currently an assistant professor with the Department of Computer Science and Technology, College of Information Engineering, YZU. He is the author or co-author of more than 35 scientific papers. He serves as a reviewer for several international journals such as IEEE TNNLS and IEEE TSMC: Systems. He is a member of ACM, the International Society of Information Fusion (ISIF), and the China Computer Federation (CCF). His research interests include pattern recognition, machine learning, image processing, and information fusion.

Yun Li received his M.Eng. degree in computer science and technology from Hefei University of Technology, China, in 1991, and Ph.D. degree in control theory and control engineering from Shanghai University, China, in 2005. He is a professor with the School of Information Engineering, Yangzhou University, China. He is the author of more than 70 scientific papers. He is currently a member of ACM and the China Computer Federation (CCF). His research interests include pattern recognition, information fusion, data mining, and cloud computing.

Jianjun Liu received his B.Sc. degree in applied mathematics and Ph.D. degree in computer science from Nanjing University of Science and Technology, China, in 2009 and 2014, respectively. He is currently a lecturer with the School of Internet of Things Engineering, Jiangnan University, China. His research interests are in the areas of spectral unmixing, hyperspectral image classification, image processing, sparse representation, and compressive sensing.

Chao-Feng Li received his Ph.D. degree in remote sensing image processing from the Chinese University of Mining and Technology, Xuzhou, China, in 2001. He has published more than 70 technical articles. Currently, he is a professor with Jiangnan University. His research interests include image processing, computer vision, and image and video quality assessment.

Xiao-Bo Shen received his B.E. degree in computer science and technology from Nanjing University of Science and Technology, China, in 2011, where he is currently working toward his Ph.D. degree with the School of Computer Science and Technology. His research interests include pattern recognition, image processing, computer vision, and information fusion.

Guoqing Zhang received his B.Sc. and master degrees from the School of Information Engineering in Yangzhou University in 2009 and 2012, respectively. He is currently a Ph.D. candidate with the School of Computer Science and Engineering, Nanjing University of Science and Technology, China. His research interests include pattern recognition, machine learning, image processing, and computer vision.

Quan-Sen Sun received his Ph.D. degree in pattern recognition and intelligence system from Nanjing University of Science and Technology (NUST), China, in 2006. He is a professor with the Department of Computer Science in NUST. He visited the Department of Computer Science and Engineering, the Chinese University of Hong Kong, in 2004 and 2005. He has published more than 100 scientific papers. His current interests include pattern recognition, image processing, remote sensing information system, and medicine image analysis.

Open Access: The articles published in this journal are distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Other papers from this open access journal are available free of charge from http://www.springer.com/journal/41095. To submit a manuscript, please go to https://www.editorialmanager.com/cvmj.
