View-invariant Gait Authentication Based on Silhouette Contours Analysis and View Estimation

2015-08-09

Songmin Jia, Lijia Wang, and Xiuzhi Li

Abstract: In this paper, we propose a novel view-invariant gait authentication method based on silhouette contours analysis and view estimation. The approach extracts the Lucas-Kanade based gait flow image and the head and shoulder mean shape (LKGFI-HSMS) of a human by using the Lucas-Kanade method and Procrustes shape analysis (PSA). LKGFI-HSMS preserves both the dynamic and the static features of a gait sequence. The view between a person and a camera is identified and used to select the target's gait feature, which overcomes view variations. The similarity scores of LKGFI and HSMS are calculated, and the product rule combines the two scores to further improve the discrimination power of the extracted features. Experimental results demonstrate that the proposed approach is robust to view variations and has a high authentication rate.

Index Terms: Silhouette contours analysis, view estimation, Lucas-Kanade based gait flow image, head and shoulder mean shape, gait recognition.

I. INTRODUCTION

Gait analysis is a newly emerged biometric that uses the manner of walking to recognize an individual [1]. During the past decade, gait analysis has been extensively investigated in the computer vision community. The approaches to gait analysis can be divided into two main categories: model-based methods and appearance-based methods [2]. Model-based gait analysis methods [3-4] focus on studying the movement of various body parts and obtaining measurable parameters. These methods are less affected by the viewpoint [5]. However, their complex searching and mapping processes significantly increase the size of the feature space and the computational cost [6].

Appearance-based gait analysis methods do not need prior modeling, but operate on silhouette sequences to capture gait characteristics [6]. Statistical methods [7-9] are usually applied to describe the silhouettes because of their low computational cost. Among them, the gait energy image (GEI) [10] is commonly used. It reflects the major shape of the gait silhouettes and their changes over a gait cycle. However, it loses some intrinsic dynamic characteristics of the gait pattern. Zhang et al. [2] proposed the active energy image (AEI), constructed by calculating the active regions of two adjacent silhouette images, for gait representation. AEI is projected to a low-dimensional feature subspace via two-dimensional locality preserving projections (2DLPP) to improve the discrimination power. Afterwards, Roy et al. [11] took advantage of the key pose states of a gait cycle to extract a novel feature called the pose energy image (PEI). However, PEI carries a high computational burden. To overcome this shortcoming, pose kinematics was applied to select a set of most probable classes before using PEI. Pratik et al. [12] presented a pose depth volume (PDV) method that uses a partial volume reconstruction of the frontal surface of each silhouette. Zeng et al. [13] proposed a radial basis function (RBF) network based method to extract gait dynamics features. For recognition, a constant RBF network is obtained from training samples, and the test gait is then recognized according to the smallest error principle. Recently, Lam et al. [14] constructed a gait flow image (GFI) by calculating an optical flow field between consecutive silhouettes in a gait period to extract motion features. However, the Horn-Schunck approach is used to obtain the optical flow field, which is computationally expensive. To reduce the computational cost, the Lucas-Kanade optical flow based gait flow image (LKGFI) [15], which also considers view, was proposed for human identification. The Lucas-Kanade approach, which has a low computing cost, is adopted to calculate the optical flow field. However, the LKGFI captures only the motion characteristics of a gait and ignores the structural characteristics, which are also important in representing a gait.

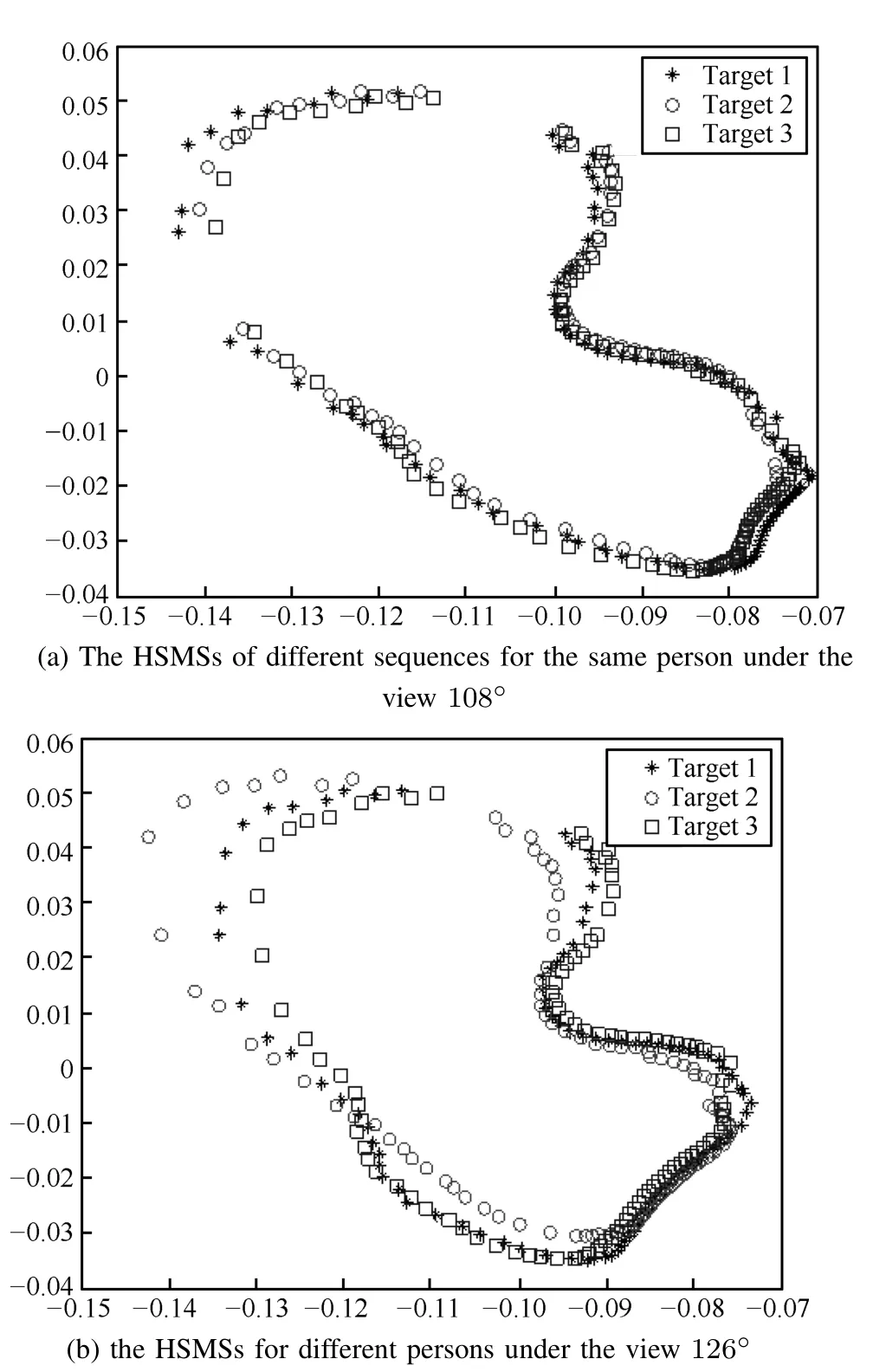

Procrustes shape analysis (PSA) is a popular method in directional statistics for obtaining a shape vector that is invariant to scale, translation and rotation [6]. Wang et al. [7] used the mean shape of silhouette contours analyzed with PSA to capture the structural characteristics of a gait, especially the shape cues of body biometrics. Choudhury et al. [6] presented a recognition method combining spatio-temporal motion characteristics with statistical and physical parameters (STM-SPP) by analyzing the shape of silhouette contours using PSA and elliptic Fourier descriptors (EFDs). This feature is usually robust to carrying conditions. Inspired by STM-SPP, we use PSA to extract the head and shoulder mean shape (HSMS), which rarely changes while a person is walking, as the static feature of a gait.

Most of the existing recognition methods achieve good performance in the single-view case [16-17]. These methods assume that all sequences are captured under the same view, which significantly limits their potential applications in the real world. To overcome this drawback, Bodor et al. [18] proposed to use image-based rendering to generate optimal input sequences for view-independent motion recognition. The view is constructed from a combination of non-orthogonal views taken from several cameras. Liu et al. [19] conducted joint subspace learning (JSL) to obtain the prototypes of different views. A gait is then represented by the coefficients of a linear combination of these prototypes in the corresponding views. Kusakunniran et al. [20] developed a view transformation model (VTM) by adopting the singular value decomposition (SVD) technique to project gait features from one viewing angle to another. However, it is hard to obtain an optimized VTM, and the method depends on the amount of training data, which leads to huge memory consumption. Considering gait sequences collected from different views of the same person as a feature set, Liu et al. [21] devised a subspace representation (MSR) method to measure the variances among samples. A marginal canonical correlation analysis (MCCA) method is then proposed to exploit the discriminative information from the subspaces for recognition.

In this study, we propose a novel method for gait recognition based on analyzing silhouette contours and estimating view. The basic idea of the silhouette contours analysis is to extract LKGFI-HSMS from a gait sequence to represent its dynamic and static characteristics. LKGFI-HSMS is constructed by calculating an optical flow field with the Lucas-Kanade approach and analyzing the head and shoulder shape with PSA. In our previous study [22], a view-invariant gait recognition method based on LKGFI and view was proposed. To further improve the recognition rate, HSMS is introduced to represent the static information. HSMS characterizes a person's head and shoulder contours by analyzing the configuration matrix with PSA. Moreover, to overcome the view problem in gait recognition, the view is identified and used as a criterion to select the target's gait features in a feature database. Finally, our method is evaluated on gait databases, and the results illustrate that it improves both computation cost and recognition rate. The contributions of this paper are as follows:

1) HSMS is proposed for the first time as a static feature of human motion. The HSMS is the head and shoulder mean shape extracted from a gait sequence by using the Procrustes shape analysis method. Since the head and shoulder silhouette is usually unoccluded, the HSMS is stable when applied to gait authentication.

2) The HSMS and LKGFI are combined to analyze gait. The HSMS captures the most salient static feature of a gait sequence, while the LKGFI extracts more dynamic information about the human motion. Taking advantage of both features, the LKGFI-HSMS has a powerful discriminative ability.

3) To resolve the view problem, the walking direction is estimated and used as a selection criterion for gait authentication. The walking direction is computed from the positions and heights at the beginning and ending points of the walk in a gait cycle. The view is identified according to the obtained walking direction. For gait authentication, the view is used to select the target's gait features in a feature database. This approach allows our method to perform well when the view changes.

II. OVERVIEW OF THE APPROACH

As mentioned above, most previous work adopted low-level information such as single static features (GEI [10], STM-SPP [6]) or single dynamic features (AEI [2], GFI [14]). Commonly, the dynamic features contained in human walking motion are sufficient for gait analysis. However, they become unstable when the limbs occlude each other. To obtain optimal performance, statistical analysis should be applied to the temporal patterns of an individual. Therefore, we consider higher-level information that includes both the static and the dynamic features for gait recognition. Moreover, most of the available gait analysis algorithms achieve good performance in the frontal view, but their performance is seriously affected when the view changes. Based on these considerations, we present a novel method for gait recognition based on analyzing silhouette contours and estimating view.

The proposed approach is shown in Fig. 1. For each input sequence, preprocessing is first conducted to extract the gait silhouettes and the gait cycle. Secondly, the gait's dynamic feature LKGFI and static feature HSMS are extracted from the gait silhouettes. Simultaneously, the walking direction is recognized and the view between the person and the camera is determined. Finally, the view is used to find the target's LKGFI (HSMS) in the corresponding database established in advance, and the similarity scores are calculated by using the Euclidean distance metric. Furthermore, the product rule is applied to combine the classification results of LKGFI and HSMS.

III. GAIT RECOGNITION USING LKGFI-HSMS AND VIEW

Here, two classifiers are built for gait recognition: one is based on the dynamic feature LKGFI and view, the other on the static feature HSMS and view. The scores obtained from the two classifiers represent the similarity of two gaits. However, the performance of a single classifier is unsatisfactory. To further improve the algorithm's efficiency and accuracy, the two classifiers are combined by using a product rule.

A. Silhouette Extraction

Silhouette extraction involves segmenting the regions corresponding to a walking person in a cluttered scene [6]. The gait sequence is first processed by using background subtraction, binarization, morphological processing and connected-component analysis. Then, the silhouette image in each frame is extracted and its bounding box is computed. Furthermore, the silhouettes are normalized to a fixed size (120 × 200) and aligned centrally with respect to their horizontal centers to eliminate the size difference caused by the distance between the person and the camera. In addition, the gait cycle is estimated in an individual's walking sequence according to the height-width ratios of the silhouettes [14]. After the gait period is determined, the silhouette images are further divided into several cycles and are then ready for generating LKGFI and HSMS.
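The normalization step can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes that 120 × 200 means 120 pixels wide by 200 pixels high, uses nearest-neighbour resampling, and centers the bounding box rather than a computed horizontal centroid. The function name `normalize_silhouette` is hypothetical.

```python
import numpy as np

def normalize_silhouette(mask, out_w=120, out_h=200):
    """Crop a binary silhouette to its bounding box, scale it to the target
    height, and center it horizontally in an out_h x out_w frame.
    Assumption: 120 x 200 is read as width x height."""
    mask = np.asarray(mask) > 0
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        # Empty frame: nothing to normalize.
        return np.zeros((out_h, out_w), dtype=np.uint8)
    crop = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    h, w = crop.shape
    # Uniform scale so the silhouette fills the target height; the width is
    # clipped to the frame if the scaled silhouette would be too wide.
    scale = out_h / h
    new_w = max(1, min(out_w, int(round(w * scale))))
    # Nearest-neighbour resampling with plain index arithmetic.
    rows = np.minimum((np.arange(out_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) * w / new_w).astype(int), w - 1)
    resized = crop[rows][:, cols]
    out = np.zeros((out_h, out_w), dtype=np.uint8)
    x0 = (out_w - new_w) // 2  # left margin that centers the silhouette
    out[:, x0:x0 + new_w] = resized
    return out
```

Scaling by height removes the dependence on the distance between the person and the camera, which is the stated purpose of the normalization.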

B. Human Recognition Based on LKGFI and View

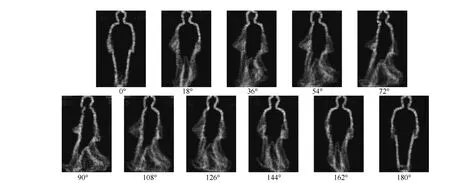

Lam et al. [14] proposed GFI for human recognition. An optical flow field, or image velocity field, is calculated from a gait sequence with the Horn-Schunck method to generate GFI. The optical flow field represents the motion of the moving human in the scene. Therefore, GFI contains the human's motion information in a gait period. Unfortunately, the Horn-Schunck method computes a dense optical flow, so every pixel in the image must be processed, which imposes a high computational burden. In contrast, the Lucas-Kanade approach is a popular sparse optical flow method in which a group of corners of the image is extracted before the optical flow is calculated. Therefore, the Lucas-Kanade approach reduces the computing cost and can be applied to generate LKGFI [22]. The LKGFIs of a person under different views are shown in Fig. 2.
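To make the sparse flow step concrete, the sketch below performs a single Lucas-Kanade least-squares step at a given list of points. It is an illustration under stated assumptions: the points are supplied by the caller (corner detection is omitted), no pyramid or iteration is used, and the way flow vectors are accumulated into an LKGFI is not shown because the text does not specify it. The name `lucas_kanade_at` is hypothetical.

```python
import numpy as np

def lucas_kanade_at(prev, curr, points, half_win=7):
    """Estimate the flow (dx, dy) at each (x, y) point with one
    Lucas-Kanade least-squares step over a square window.
    Assumption: points are integer pixel coordinates chosen by the caller."""
    prev = np.asarray(prev, dtype=float)
    curr = np.asarray(curr, dtype=float)
    # np.gradient returns derivatives along rows (y) first, then columns (x).
    iy, ix = np.gradient(prev)
    it = curr - prev  # temporal difference between the two frames
    flows = []
    for x, y in points:
        ys = slice(max(y - half_win, 0), y + half_win + 1)
        xs = slice(max(x - half_win, 0), x + half_win + 1)
        # Brightness constancy: Ix*u + Iy*v = -It for every pixel in the window.
        a = np.stack([ix[ys, xs].ravel(), iy[ys, xs].ravel()], axis=1)
        b = -it[ys, xs].ravel()
        # Least-squares solution; lstsq also copes with windows that have
        # little texture, where the normal equations are near singular.
        v, *_ = np.linalg.lstsq(a, b, rcond=None)
        flows.append(v)
    return np.array(flows)
```

Because the system is solved only at the selected points, the cost grows with the number of points rather than with the number of pixels, which is the source of the saving over a dense method.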

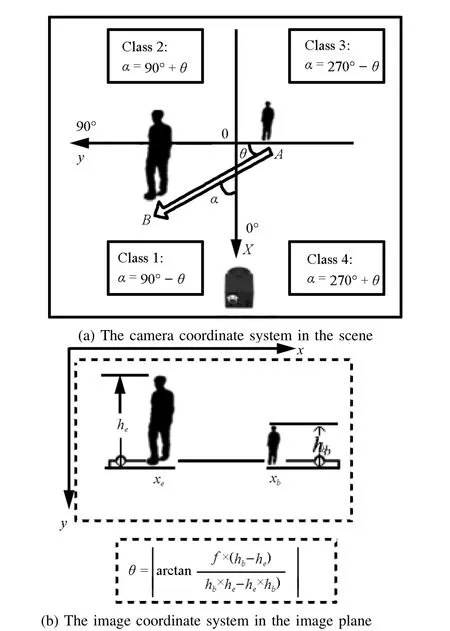

Most of the existing gait recognition methods suffer from view variations. To resolve this problem, we proposed a gait recognition approach based on LKGFI and view in [15, 22]. A person's walking direction is computed from the positions and heights at the beginning and ending points of the walk in a gait cycle, as shown in Fig. 3. We define the walking direction as 0° when a person walks towards the camera along its optical axis and as 90° when the person walks parallel to the camera. The walking direction is divided into four main categories based on the four quadrants of the camera's coordinate system. Then, the view is identified according to the relationship between walking directions and views.

For human authentication, a database of the target's gait features (LKGFI) under different views is established in advance. When a passerby appears, his/her LKGFI is extracted and the walking direction is computed. Afterwards, the view, identified according to the walking direction, is used to select the target's LKGFI in the feature database. Finally, gait authentication is solved by measuring the similarity between gait sequences. We use the Euclidean distance (1) to measure the similarity: the smaller the distance between two gaits, the more similar they are.

In (1), N = 120 × 200 is the number of pixels in the LKGFI image, and LKGFI_G^n and LKGFI_P^n are the values of the n-th point in the target's image LKGFI_G and the person's image LKGFI_P, respectively.
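A plain Euclidean distance between two feature arrays can be sketched as below. Whether the authors additionally normalize by N is not stated in this text, so the unnormalized form is an assumption, and `euclidean_score` is a hypothetical name. The same helper accepts complex-valued features, so it would also cover contour points stored as complex numbers.

```python
import numpy as np

def euclidean_score(feature_g, feature_p):
    """Euclidean distance between a target feature and a probe feature.
    Assumption: no normalization by the number of elements is applied."""
    g = np.asarray(feature_g, dtype=complex).ravel()
    p = np.asarray(feature_p, dtype=complex).ravel()
    if g.shape != p.shape:
        raise ValueError("features must have the same number of elements")
    # abs() makes the sum valid for both real and complex entries.
    return float(np.sqrt(np.sum(np.abs(g - p) ** 2)))
```

For two 120 × 200 images the arrays are flattened to N = 24000 values before the distance is taken; a smaller return value means a closer match.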

For this method, the LKGFI database of the target under different views is established in advance. In the recognition phase, the view is estimated and used as the criterion for choosing the target's gait feature, which makes the proposed method robust to view variations.

Fig. 1. The illustration of human recognition using LKGFI-HSMS and view.

Fig. 2. The illustration of LKGFIs of a person under different views.

Fig. 3. The illustration of determining walking direction. α is the walking direction. θ is the angle between the walking direction and the y axis. x_b and x_e are the starting point and the ending point in the image plane, respectively. h_b and h_e are the silhouettes' heights at x_b and x_e.

C. Human Recognition Based on HSMS and View

As a prominent feature of the upper body, the head and shoulder silhouette can be identified easily and is usually unoccluded. It is an ideal cue for identifying a human being, whether the person is seen from the frontal view or the rear view. In addition, PSA provides an efficient approach to obtaining a mean shape, especially for 2-D shapes [6]. The obtained shape feature is invariant to scale, translation and rotation. Moreover, the Procrustes mean shape has considerable discriminative power for identifying individuals [7]. Thus, this work uses PSA to extract HSMS from a gait sequence for human recognition.

Once a person's silhouettes have been obtained, the head and shoulder models can be extracted according to morphology (i.e., 0.35H measured from the top of the bounding box [10], where H is the box's height). Then, each head and shoulder contour is approximated by 100 points (x_i, y_i), i = 1, 2, ..., 100, using interpolation based on point correspondence analysis [23]. Furthermore, the contour is defined in the complex plane as Z = [z_1, z_2, ..., z_i, ..., z_k], z_i = x_i + j y_i, k = 100.
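The two operations in this paragraph can be sketched as follows. The crop follows the 0.35H rule from the text. The resampling, however, swaps in plain arc-length interpolation in place of the point correspondence analysis of [23], and it assumes the contour points are already traced in order; both function names are hypothetical.

```python
import numpy as np

def head_shoulder_region(silhouette, ratio=0.35):
    """Keep the top `ratio` of the silhouette's bounding box (0.35H rule)."""
    mask = np.asarray(silhouette) > 0
    ys, xs = np.nonzero(mask)
    top, bottom = ys.min(), ys.max() + 1
    cut = top + int(round(ratio * (bottom - top)))
    return mask[top:cut, xs.min():xs.max() + 1]

def resample_contour(points, k=100):
    """Resample an ordered closed contour to k points equally spaced in arc
    length, returned as complex numbers z = x + j*y.
    Assumption: arc-length interpolation stands in for the method of [23]."""
    pts = np.asarray(points, dtype=float)
    closed = np.vstack([pts, pts[:1]])            # repeat first point to close the loop
    seg = np.hypot(*np.diff(closed, axis=0).T)    # length of each segment
    s = np.concatenate([[0.0], np.cumsum(seg)])   # cumulative arc length
    targets = np.linspace(0.0, s[-1], k, endpoint=False)
    x = np.interp(targets, s, closed[:, 0])
    y = np.interp(targets, s, closed[:, 1])
    return x + 1j * y
```

Storing each point as a complex number keeps the later centering and eigen-analysis to simple vector operations.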

To get the configuration matrix, it is necessary to center the shape by defining a complex vector U = [u_1, u_2, ..., u_i, ..., u_k]^T, u_i = z_i - z̄, where z̄ is the centroid (x̄, ȳ) of the contour expressed as a complex number. Given a set of N head and shoulder shapes in a gait cycle, N complex vectors U_i (i = 1, 2, ..., N) are obtained and the configuration matrix S is computed from them, where the superscript "∗" in its definition denotes the complex conjugate transpose.

As a result, the Procrustes mean shape Û, the eigenvector of S corresponding to the largest eigenvalue, represents HSMS for gait recognition. Û is a complex vector of length k. It characterizes the head and shoulder shape of a gait sequence. The illustration of the HSMS is shown in Fig. 4.
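A minimal sketch of the mean-shape computation is given below. It assumes the configuration matrix takes the form commonly used in the PSA literature, S = Σ_i U_i U_i^∗ / (U_i^∗ U_i); the exact definition used by the authors is not reproduced in this text, and `procrustes_mean_shape` is a hypothetical name.

```python
import numpy as np

def procrustes_mean_shape(shapes):
    """shapes: (N, k) complex array, one contour per row.
    Returns the unit-norm dominant eigenvector of the configuration matrix.
    Assumption: S = sum_i u_i u_i^* / (u_i^* u_i), a common PSA form."""
    z = np.asarray(shapes, dtype=complex)
    u = z - z.mean(axis=1, keepdims=True)   # center each shape on its centroid
    k = u.shape[1]
    s = np.zeros((k, k), dtype=complex)
    for ui in u:
        # Outer product u u^* divided by the squared norm u^* u.
        s += np.outer(ui, ui.conj()) / np.vdot(ui, ui).real
    # S is Hermitian, so eigh applies; eigenvalues come back in ascending order.
    _, vecs = np.linalg.eigh(s)
    return vecs[:, -1]
```

Dividing each outer product by the squared norm removes scale, centering removes translation, and the eigenvector is defined only up to a complex factor, which absorbs rotation.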

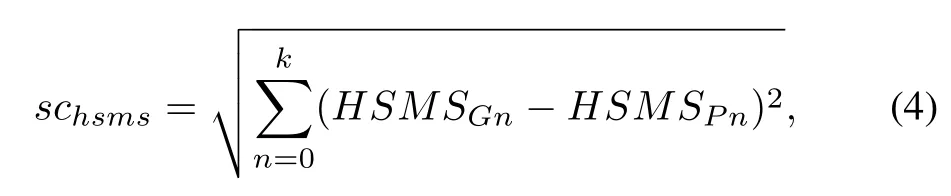

The gait recognition method using HSMS and view is similar to the approach based on LKGFI and view described above. The similarity score between the target's HSMS and the person's HSMS is calculated with the Euclidean distance in (4), where k = 100 is the number of points in the HSMS, and HSMS_G^n and HSMS_P^n are the n-th points of the target's HSMS_G and the person's HSMS_P, respectively.

D. Combining Rule

We have calculated the similarity scores for LKGFI and HSMS separately. However, the recognition rate obtained by using a single classifier is unsatisfactory. To improve the system's efficiency and accuracy, the two classifiers are combined. Among the variety of fusion approaches available for combining independent classifiers, we adopt the product rule.

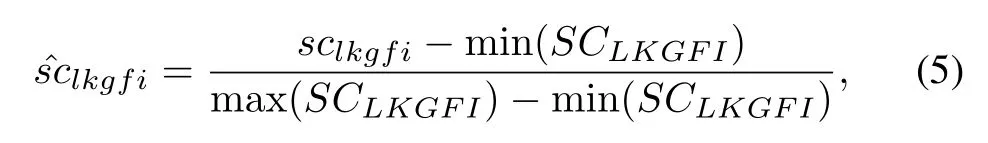

These similarity scores cannot be combined directly because they have quite different ranges and distributions. Therefore, to make the scores comparable for fusion, they must be transformed to the same scale. The linear mapping method is applied here to each score vector. In (5) and (6), SC_LKGFI and SC_HSMS are the vectors of the similarity scores before mapping, sc_lkgfi and sc_hsms are elements of SC_LKGFI and SC_HSMS, and ŝc_lkgfi and ŝc_hsms are the results of the linear mapping.

Then, the product rule (7) combines ŝc_lkgfi and ŝc_hsms by multiplying the two mapped scores to obtain the final decision, which enhances the verification performance.

The human authentication based on LKGFI-HSMS is implemented as follows:

Fig. 4. The illustration of HSMS.

Step 1. A database of the target's gait features (LKGFI and HSMS) under different views is established in advance.

Step 2. When a person approaches, his/her gait features (LKGFI_P and HSMS_P) are extracted.

Step 3. The walking direction of the passerby is computed and the view is obtained.

Step 4. The target's gait features (LKGFI_G and HSMS_G) under that view are selected from the established database.

Step 5. The similarity scores for LKGFI and HSMS are obtained according to (1) and (4), respectively.

Step 6. The similarity scores from Step 5 are mapped according to (5) and (6).

Step 7. The mapped similarity scores are combined according to the product rule (7) to obtain the final decision.

Step 8. The passerby is identified according to the relationship between the final decision and a threshold.
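Steps 6 to 8 can be sketched as follows. Two points are assumptions rather than statements from the paper: the linear mapping is taken to be min-max scaling to [0, 1], and a probe is accepted when its fused score is at or below the threshold, since both inputs are distances. The function names are hypothetical.

```python
import numpy as np

def min_max_map(scores):
    """Linearly map a score vector to [0, 1].
    Assumption: the paper's linear mapping is min-max scaling."""
    s = np.asarray(scores, dtype=float)
    span = s.max() - s.min()
    if span == 0:
        # All scores identical: map everything to 0 to avoid dividing by zero.
        return np.zeros_like(s)
    return (s - s.min()) / span

def fuse_and_decide(sc_lkgfi, sc_hsms, threshold):
    """Product-rule fusion of two mapped distance vectors, then thresholding.
    Assumption: smaller fused score means a better match, so accept if <= threshold."""
    fused = min_max_map(sc_lkgfi) * min_max_map(sc_hsms)
    return fused, fused <= threshold
```

With `sc_lkgfi = [10, 20, 30]` and `sc_hsms = [1, 3, 2]`, the mapped vectors are `[0, 0.5, 1]` and `[0, 1, 0.5]`, so the fused scores are `[0, 0.5, 0.5]` and only the first probe passes a threshold of 0.25.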

IV. EXPERIMENTAL RESULTS

A. Experiment on CASIA B Database

This section presents the results of person identification experiments conducted on the CASIA B database. This database contains sequences of 124 persons from 11 views, namely 0°, 18°, 36°, 54°, 72°, 90°, 108°, 126°, 144°, 162° and 180°. Six normal gait sequences are captured for each person under the different views. The database is divided into 2 subgroups: the first subgroup, consisting of one person, is used for establishing the LKGFI (HSMS) database; the remaining persons are used for evaluating the verification rate of the proposed recognition algorithm. For view identification, the direction range of 0° to 180° is divided into 11 regions, one for each view (0°, 18°, 36°, 54°, ..., 180°). The first region, 0° to 9°, is for the view 0°. The last region, 171° to 180°, is for the view 180°. The range in between is divided equally and each region spans 18°: 9° to 27° for the view 18°, 27° to 45° for 36°, 45° to 63° for 54°, etc. All experiments are implemented using OpenCV 2.1 in the Microsoft Visual Studio 2008 Express Edition environment.
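The region boundaries listed above amount to rounding the walking direction to the nearest multiple of 18°. The sketch below encodes that rule; assigning a direction that falls exactly on a boundary (for example 9°) to the upper view is an assumption, since the text does not say which side owns the boundary, and `direction_to_view` is a hypothetical name.

```python
def direction_to_view(angle_deg, step=18, max_view=180):
    """Map a walking direction in [0, max_view] degrees to the nearest view.
    Assumption: a direction exactly on a region boundary goes to the upper view."""
    if not 0 <= angle_deg <= max_view:
        raise ValueError("walking direction must lie in [0, max_view]")
    # Shifting by half a step turns floor division into nearest-view rounding.
    return min(max_view, int((angle_deg + step / 2) // step) * step)
```

For instance, `direction_to_view(8.9)` gives 0, `direction_to_view(30)` gives 36, and `direction_to_view(175)` gives 180.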

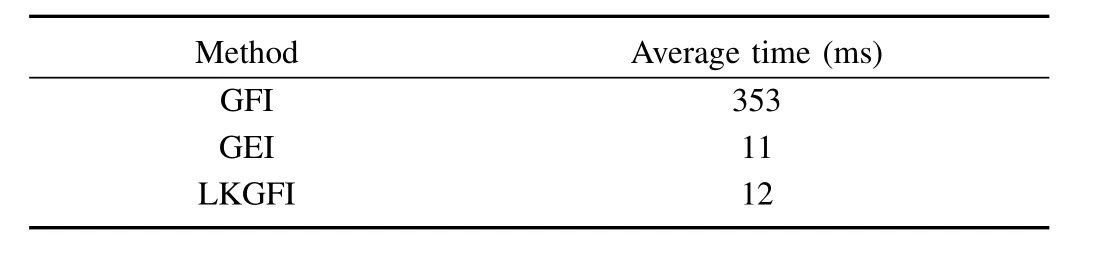

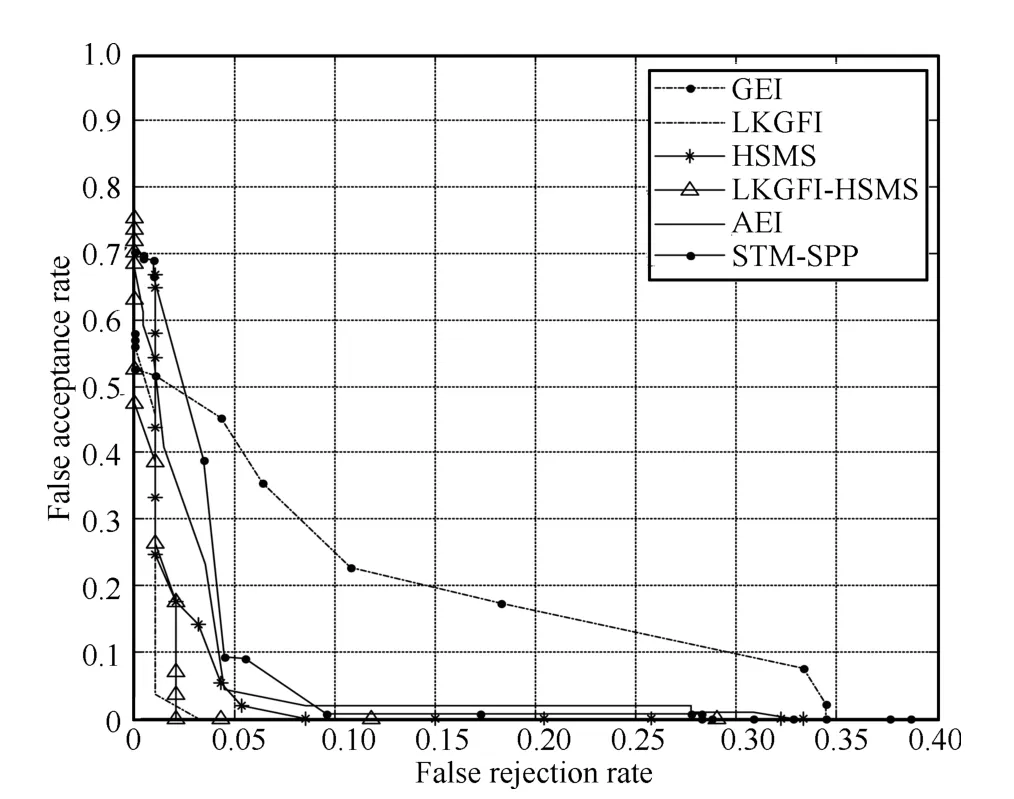

The verification performance of LKGFI-HSMS is compared with the following gait representation approaches: GEI, GFI, LKGFI, HSMS, AEI [2], and STM-SPP [6]. The target is person 001 in the CASIA B database. The testing gait sequences include 124 persons walking under 11 views. LKGFI-HSMS preserves more dynamic and static information of a person's silhouette contours by using the Lucas-Kanade method and PSA, and achieves good discrimination power at a low computing cost. The testing person can walk under different views. The view is extracted using the proposed approach to select the target's gait feature before recognition, which deals with view variations. To evaluate the efficiency of the GFI and LKGFI, all of the gait sequences of every person are tested once. The resulting average time consumption is shown in Table I. To construct the GFI [14], the Horn-Schunck method, which suffers from a high computational load, is adopted to get the dense optical flow. In contrast, the Lucas-Kanade approach is used to construct the LKGFI to reduce the computational cost. The results show that the LKGFI can satisfy the real-time requirement. Moreover, both the LKGFI and GEI reach a low operation time (12 ms for LKGFI and 11 ms for GEI). However, the LKGFI achieves better performance than GEI, as discussed below. To further evaluate the proposed method, we compare the average false rejection rates (FRR) at false acceptance rates (FAR) of 4% and 10% for different gait representations [4]. The LKGFI captures only the motion characteristics of a gait sequence in a period, while the HSMS is only a shape cue. The LKGFI-HSMS captures both the motion and the structural characteristics of a gait and therefore has the most discrimination power. The results are shown in Table II. The FRRs achieved by LKGFI-HSMS are the lowest at each FAR. Fig. 5 shows the receiver operating characteristic (ROC) curves. The x-axis is the false rejection rate, while the y-axis is the false acceptance rate. The smaller the area below the ROC curve, the better the performance. The experimental results show that the area below the ROC curve achieved by LKGFI-HSMS is the smallest. It is evident from Table II and Fig. 5 that the verification performance of LKGFI-HSMS is better than that of the other gait representations and that our proposed approach is robust to view variations.
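The FRR-at-fixed-FAR figure of merit can be computed from two sets of distance scores as sketched below. The sketch assumes that scores are distances (accept when the score is at or below the threshold) and that only observed score values are tried as thresholds; the paper does not describe its thresholding procedure, and `frr_at_far` is a hypothetical name.

```python
import numpy as np

def frr_at_far(genuine, impostor, target_far):
    """Lowest false rejection rate among thresholds whose false acceptance
    rate does not exceed target_far.
    Assumption: scores are distances, so accept when score <= threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    best = None
    # Every observed score is a candidate threshold.
    for t in np.unique(np.concatenate([genuine, impostor])):
        far = np.mean(impostor <= t)     # impostors wrongly accepted
        if far <= target_far:
            frr = np.mean(genuine > t)   # genuine samples wrongly rejected
            best = frr if best is None else min(best, frr)
    # If no candidate meets the FAR target, everything must be rejected.
    return 1.0 if best is None else float(best)
```

Sweeping `target_far` over a grid and recording the returned FRR gives the points of a curve with FRR on one axis and FAR on the other, as in the figures.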

TABLE I AVERAGE TIME CONSUMPTION OF CONSTRUCTING GFI, GEI AND LKGFI

TABLE II AVERAGE FALSE REJECTION RATES (%) AT THE FALSE ACCEPTANCE RATES OF 4% AND 10% FOR GEI, LKGFI, HSMS, AEI, STM-SPP, AND LKGFI-HSMS ON CASIA B DATABASE

Fig. 5. Receiver operating characteristic (ROC) (%) by different gait representations: GEI, LKGFI, HSMS, AEI, STM-SPP, and LKGFI-HSMS on CASIA B database.

B. Experiment on NLPR Database

This section presents the experimental results obtained on the NLPR database. This database contains 2880 sequences for 20 persons from 3 views (0°, 45°, 90°). Four normal sequences are captured under each view. For identification, the direction range 0° to 90° is divided into 3 regions, one per view: 0° to 36° for 0°, 37° to 63° for 45°, and 64° to 90° for 90°. The sequences of the person "fyc" are chosen as the target, while those of the remaining persons are used for evaluating the verification rate of LKGFI-HSMS, GEI, GFI, LKGFI, HSMS, AEI, and STM-SPP. We test these methods in two respects: the first is the average FRR at FAR of 4% and 10% for the different gait representations, the second is the ROC curves. The results are shown in Table III and Fig. 6, respectively. They further illustrate that the verification performance of LKGFI-HSMS is better than that of the other gait representations.

V. CONCLUSION

In this paper, we have proposed a gait representation, LKGFI-HSMS, which analyzes the motion and shape of the silhouette contours of a person in a video sequence. Firstly, LKGFI was generated by calculating an optical flow field with the Lucas-Kanade method. This method preserves more motion information of the silhouette contours and significantly reduces the processing time. Secondly, HSMS, which characterizes the head and shoulder shape of the person in a gait cycle, was analyzed by using PSA. It has good discrimination power and a lower computing cost because the shape analysis is part-based instead of covering the whole contour. Thirdly, by considering view, the method can deal with view variations. Furthermore, the verification performance was enhanced by combining the above two classifiers. Experimental results have shown the effectiveness of the proposed approach compared with several related silhouette-based gait recognition methods.

TABLE III AVERAGE FALSE REJECTION RATES (%) AT THE FALSE ACCEPTANCE RATES OF 4% AND 10% FOR GEI, LKGFI, HSMS, AEI, STM-SPP, AND LKGFI-HSMS ON NLPR DATABASE

Fig. 6. Receiver operating characteristic (ROC) (%) by different gait representations: GEI, LKGFI, HSMS, AEI, STM-SPP, and LKGFI-HSMS on NLPR database.

REFERENCES

[1] Yang X C, Zhou Y, Zhang T H, Shu G, Yang J. Gait recognition based on dynamic region analysis. Signal Processing, 2008, 88(9): 2350-2356

[2] Zhang E, Zhao Y W, Xiong W. Active energy image plus 2DLPP for gait recognition. Signal Processing, 2010, 90(7): 2295-2302

[3] Boulgouris N V, Chi Z X. Human gait recognition based on matching of body components. Pattern Recognition, 2007, 40(6): 1763-1770

[4] Zhang R, Vogler C, Metaxas D. Human gait recognition at sagittal plane. Image and Vision Computing, 2007, 25(3): 321-330

[5] Liu Y Q, Wang X. Human gait recognition for multiple views. Procedia Engineering, 2011, 15: 1832-1836

[6] Choudhury S D, Tjahjadi T. Silhouette-based gait recognition using Procrustes shape analysis and elliptic Fourier descriptors. Pattern Recognition, 2012, 45(9): 3414-3426

[7] Wang L, Tan T N, Hu W M, Ning H Z. Automatic gait recognition based on statistical shape analysis. IEEE Transactions on Image Processing, 2003, 12(9): 1120-1131

[8] Shutler J D, Nixon M S. Zernike velocity moments for sequence-based description of moving features. Image and Vision Computing, 2006, 24(4): 343-356

[9] Shutler J D, Nixon M S, Harris C J. Statistical gait description via temporal moments. In: Proceedings of the 4th IEEE Southwest Symposium on Image Analysis and Interpretation. Austin, TX: IEEE, 2004. 291-295

[10] Han J, Bhanu B. Individual recognition using gait energy image. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2006, 28(2): 316-322

[11] Roy A, Sural S, Mukherjee J. Gait recognition using pose kinematics and pose energy image. Signal Processing, 2012, 92(3): 780-792

[12] Pratik C, Aditi R, Shamik S, Jayanta M. Pose depth volume extraction from RGB-D streams for frontal gait recognition. Journal of Visual Communication and Image Representation, 2014, 25(1): 53-63

[13] Zeng W, Wang C, Yang F F. Silhouette-based gait recognition via deterministic learning. Pattern Recognition, 2014, 47(11): 3568-3584

[14] Lam T H W, Cheung K H, Liu J N K. Gait flow image: a silhouette-based gait representation for human identification. Pattern Recognition, 2011, 44(4): 973-987

[15] Jia S M, Wang L J, Wang S, Li X Z. Personal identification combining modified gait flow image and view. Optical and Precision Engineering, 2012, 20(11): 2500-2507

[16] Wang L, Ning H, Tan T, Hu W. Fusion of static and dynamic body biometrics for gait recognition. IEEE Transactions on Circuits and Systems for Video Technology, 2004, 14(2): 149-158

[17] Barnich O, Droogenbroeck M V. Frontal-view gait recognition by intra- and inter-frame rectangle size distribution. Pattern Recognition Letters, 2009, 30(10): 893-901

[18] Bodor R, Drenner A, Fehr D, Masoud O, Papanikolopoulos N. View-independent human motion classification using image-based reconstruction. Image and Vision Computing, 2009, 27(8): 1197-1206

[19] Liu N, Lu J W, Tan Y P. Joint subspace learning for view-invariant gait recognition. IEEE Signal Processing Letters, 2011, 18(7): 431-434

[20] Kusakunniran W, Wu Q, Li H D, Zhang J. Multiple views gait recognition using view transformation model based on optimized gait energy image. In: Proceedings of the 12th IEEE International Conference on Computer Vision Workshops. Kyoto: IEEE, 2009. 1058-1064

[21] Liu N N, Lu J W, Yang G, Tan Y P. Robust gait recognition via discriminative set matching. Journal of Visual Communication and Image Representation, 2013, 24(4): 439-447

[22] Wang L J, Jia S M, Li X Z, Wang S. Human gait recognition based on gait flow image considering walking direction. In: Proceedings of the 2012 IEEE International Conference on Mechatronics and Automation. Chengdu, China: IEEE, 2012. 1990-1995

[23] Mowbray S D, Nixon M S. Automatic gait recognition via Fourier descriptors of deformable objects. In: Proceedings of the 4th International Conference on Audio- and Video-Based Biometric Person Authentication. Guildford: Springer-Verlag, 2003. 556-573

Songmin Jia is a professor at the College of Electronic Information & Control Engineering, Beijing University of Technology. Her research interests include robotics, machine learning, visual computation, and image processing. Corresponding author of this paper.

Lijia Wang graduated from Zhengzhou University, China in 2005. She received her M.Sc. degree from Zhengzhou University, China, in 2008. She is currently a Ph.D. candidate at the College of Electronic Information & Control Engineering, Beijing University of Technology. Her research interests include visual computation and image processing.

Xiuzhi Li received the Ph.D. degree from Beihang University, China in 2008. He is currently a lecturer at the College of Electronic Information & Control Engineering, Beijing University of Technology. His research interests include image processing and visual computation.

Manuscript received March 28, 2014; accepted November 27, 2014. This work was supported by the National Natural Science Foundation of China (61105033, 61175087). Recommended by Associate Editor Xin Xu.

Citation: Songmin Jia, Lijia Wang, Xiuzhi Li. View-invariant gait authentication based on silhouette contours analysis and view estimation. IEEE/CAA Journal of Automatica Sinica, 2015, 2(2): 226-232

Songmin Jia is with the College of Electronic Information & Control Engineering, Beijing University of Technology, Beijing 100124, China (e-mail: jsm@bjut.edu.cn).

Lijia Wang is with the College of Electronic Information & Control Engineering, Beijing University of Technology, Beijing 100124, China, and also with the Department of Information Engineering and Automation, Hebei College of Industry and Technology, Shijiazhuang 050091, China (e-mail: wanglijia1981@hotmail.com).

Xiuzhi Li is with the College of Electronic Information & Control Engineering, Beijing University of Technology, Beijing 100124, China (e-mail: xiuzhi.lee@163.com).
