Stable Estimation of Horizontal Velocity for Planetary Lander with Motion Constraints

2015-08-09WeiShaoShulinSuiLinMengandYaobinYue

Wei Shao,Shulin Sui,Lin Meng,and Yaobin Yue

Stable Estimation of Horizontal Velocity for Planetary Lander with Motion Constraints

Wei Shao,Shulin Sui,Lin Meng,and Yaobin Yue

—The planetary lander usually selects image feature points and tracks them from frame to frame in order to determine its own position and velocity during landing.Aiming to keep features tracking in consecutive frames,this paper proposes an approach of calculating the fi eld of view(FOV)overlapping area in a 2D plane.Then the rotational and translational motion constraints of the lander can be found.If the FOVs intersects each other,the horizontal velocity of the lander is quickly estimated based on the least square method after the ill-conditioned matrices are eliminated previously.The Monte Carlo simulation results show that the proposed approach is not only able to recover the ego-motion of planetary lander,but also improves the stabilization performance.The relationship of the estimation error,running time and number of points is shown in the simulation results as well.

Index Terms—Planetary landing,feature tracking,overlapping area,horizontal velocity estimation,ill-conditioned matrices, fi eld of view(FOV).

I.INTRODUCTION

THE key factor for the future planetary landing missions is the onboard autonomy,particularly in the relevant missions towards planets at high distances from Earth.As an example,the travel time of a radio frequency signal requires 4 to 22 minutes to cover the distance between the Earth and Mars.When landing on Mars,for example,non-zero steady state winds could effect horizontal velocity at impact,which might burst the airbags or the lander[1-2].So the horizontal velocity could be reduced by fi ring the retro-rockets into the wind using transverse impulse rockets system(TIRS)prior to landing.However,the error in position due to a bias in the accelerometer will have quadratic growth with time,and the errors in misalignment of the accelerometer axes will propagate into position errors as well.In order to provide accurate estimates of the lander position and velocity,a tightly coupled estimation process is employed to use featuresobservable from a camera,such as the descriptions of some early works[3-4].Descent image motion estimation system (DIMES)[5],developed by NASA,can be considered as the fi rst historical attempt to exploit computer vision to control a spacecraft during the entry,decent,landing(EDL)phase. DIMES software tracked all of the features correctly,but discarded the second feature of the second image pair,because the Harris operator[6]was not scale invariant and sensitive to noise.Recently,several studies have shown various visual techniques to estimate position and velocity parameters[7-10]. However,many recent advanced results from the fi eld of computer vision were achieved,thanks to the availability of high computational power,but were often not able to meet the hard real-time performance[11-13].

By tracking multiple features and applying checks on feature correlation across two images,a novel approach of direct horizontal velocity estimation is employed.This method is real-time and low-computational.It can be used to land on the planet without relying on a map or some known features of the ground.Compared with the algorithm of DIMES,the proposed method is more accurate and has lower complexity than the above-mentioned algorithms in the literature.

Note that there is another signi fi cant problem before the horizontal velocity is estimated.That is,the feature points have to be selected inside the overlap among at least two successive images.Because of the complexity of the planetary environment(such as Mars weather),the trajectories may have stronger winds and consequently greater horizontal velocity and attitude excursions.Then the cases of very small overlap or nonoverlap area between the adjacent fi eld of views(FOVs) may usually occur when matching feature points in different images.So the velocity cannot be estimated due to lack of correspondence between two images.Therefore,with the limited FOVs of the navigation camera,and the low frame rates,it is necessary to get the relationship between overlapping area and the camera motion.The computations of the fi eld of view lines(FOV lines)are essentially the edges of the footprint, which help us establish correspondence between trajectories.

The paper is organized as follows.In Section II,the camera model and the overlapping area formulas as well as the motion constraints of planetary lander with overlapping FOVs are illustrated.Section III provides a discussion of the horizontal velocity estimation based on the least square method.Finally some conclusions are drawn in Section IV.

II.OVERLAPPING AREA REPRESENTATION

Finding the intersected polygons and computing the overlapped areas is the key issue to feature tracking.In order to prevent gaps from occurring due to crab,tilt and fl ying height variations,the amount of overlapping is normally greater than 50%.So,before computing the horizontal velocity of lander,the relationship between the overlapping area and the movement of lander must be found.

A.The Camera Model

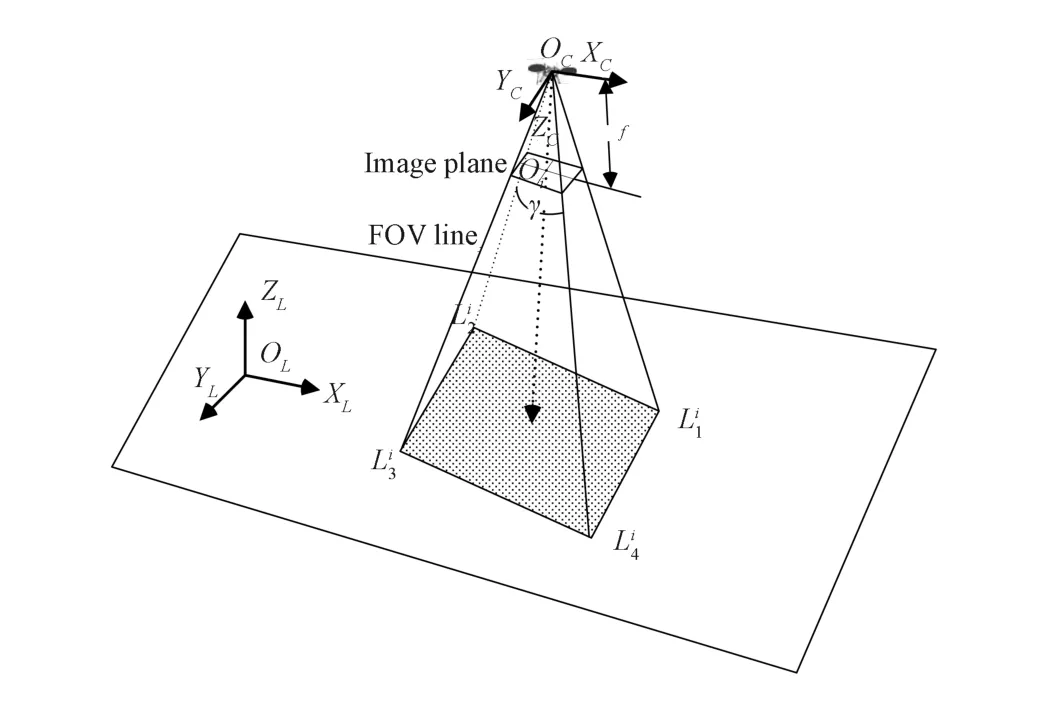

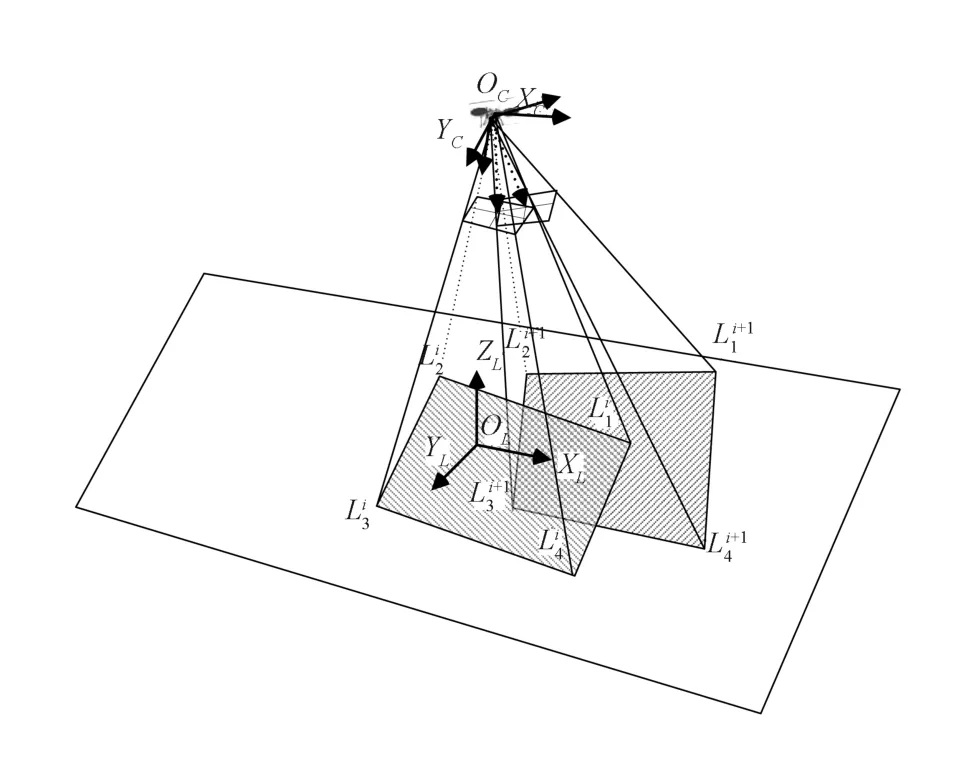

The camera rig mounted on the planetary lander produces a 2D image by using a projection transformation of the landing site scene.In this paper,a pin-hole model is used to describe the geometry of camera imaging principles,as shown in Fig.1.The camera coordinate systemOc-XcYcZcfi xed relative to the spacecraft body frame is established as follows.The center of cameraOcis considered as the origin of the camera coordinates.TheZcaxis is coinciding with the optical axis and points toward the ground.TheXcaxis points in the direction of the scan.TheYcaxis completes the righthanded orthogonal coordinate system and is parallel to the long axis of the detector arrays.γis the camera′s angle of view measured diagonally.The origin of image coordinatesOiis the intersection point of principle axis and image plane. The image plane is parallel to axesXcandYc,and is located at distanceffrom the originOc.

Fig.1.Camera model and its coordinate system.

where(cx,cy)are the coordinates of image centerOi,fxandfyare the local lengths along thexaxis andyaxis,respectively.

Radial lens distortion is the dominant distortion in the descent imagery.The observed focal plane coordinates(u′,v′)are then assumed to be related to the ideal coordinates(u,v)by a purely radial transformation

Finally,the distortion Δr=r′-ris modeled by a polynomial of the form

In order to consistently tracking feature points,the relations between adjacent cameras should be fi rst estimated of fl ine by fi nding the limits of FOV of a camera as visible in the other cameras.The FOV of theith camera is a rectangular pyramid in the space.The intersections of FOV lines with the ground plane are denoted as(Fig.1). As far as the camera pairi,i+1 are concerned,the only locations of interest in the two images for handoff areand(j∈{1,2,3,4}).

B.Pure 3D Translation

With the assumption that the landing region is approximately fl at compared to the height of the camera,so the algorithm does not need to account for depth of features. Generally,the lander fl ies over the area to be photographed and takes a series of images,each of which overlaps the preceding image and the following image,so that an unbroken coverage of the area is obtained.In this case,all that needs to be done is to compute whether the FOV associated lines in the two images cross each other or not.

Some assumptions are used in order to simplify computational processes so that they are easier to be represented. Assume that the initial position of the lander in the landing site cartographic coordinate system isCCC=[CxCyCz]T, itsZcaxis is perpendicular to the ground,its axesXcandYcare parallel to the axesXLandYL,respectively,and the shear deformations will be neglected.After taking a movement ΔCCC=(ΔCz>0)without any attitude change,its position becomesCCC′=CCC+ΔCCC=(Fig.2).

Fig.2.3D translation scenario of camera pair.

Usingprojectionformula,thefourcoordinatesoflimited by FOVs on the landing site plane can be easily derived,which are

The ground plane coordinates of(j∈{1,2,3,4})can be obtained similarly.The following discussion will analyze overlapping area between two rectangles in 5 different cases.

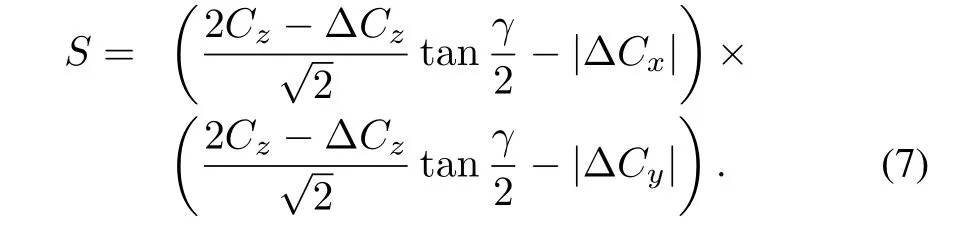

1)If the translations inxdirection andydirection satisfy condition(6),i.e.,

the overlapping rectangle corresponding to the two FOVs are shown in Fig.3.

Fig.3.Overlapping region in Case 1.

The area of the overlapping rectangle is given by

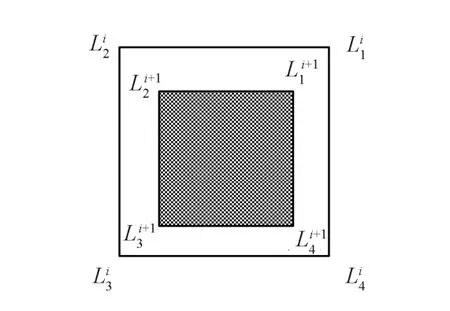

2)If the translations inxdirection andydirection satisfy condition(8),i.e.,

the overlapping rectangle corresponding to the two FOVs can be shown in Fig.4.

Fig.4.Overlapping region in Case 2.

The area of the overlapping rectangle is given by

3)If the translations inxdirection andydirection satisfy condition(10),i.e.,

the overlapping rectangle corresponding to the two FOVs can be shown in Fig.5.

Fig.5.Overlapping region in Case 3.

The area of the overlapping rectangle is given by

4)If the translations inxdirection andydirection satisfy the condition(12),i.e.,

the overlapping area corresponding to the two FOVs can be shown in Fig.6.

Fig.6.Overlapping region in Case 4.

The area of the overlapping rectangle is given by

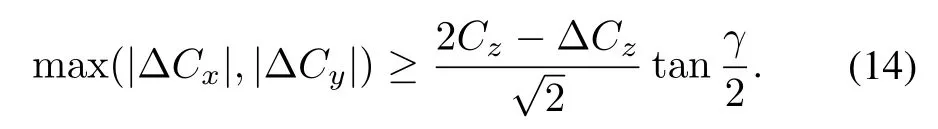

5)Obviously,there is no overlapping region between the two images if the translations satisfy condition(14),i.e.,

Based on the above discussion,the areas of the overlapping rectangle are affected by the altitudeCzand its translation ΔCzand the horizontal translations ΔCx,ΔCy.

C.Rotation Around the xx,,yy,,zz Axes

A case arises when the lander possesses rotational movement around thex,y,zaxes with the rotation anglesψ,θ,φ.Assuming thezaxis of the lander is vertical to the ground plane and points to the origin of the landing site cartographic coordinate system in the fi rst frame,perspectives and projections of the four FOV corners in the second image with the rotation are shown in Fig.7 without considering the horizontal or vertical translation.Such a sequence of rotations (rotate fi rst about thexaxis,then theyaxis,and fi nally thezaxis)can be represented as the multiplication of three matrices, one for each axis of the coordinate system,depending on the order of rotation around the axes.

Fig.7.Rotation scenario of camera pair.

Using rigid body dynamics,the coordinates of the FOV cornerin camera coordinate systemOc-XcYcZcare

Further,the coordinates of the intersection point of the FOV lineand the ground plane are

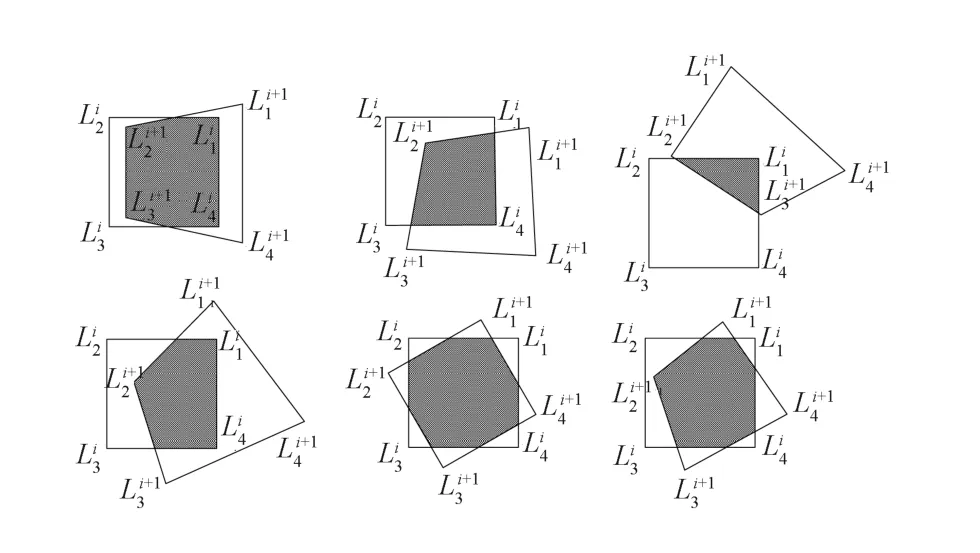

Similarly,the other coordinates limited by FOVs can be obtained.In such a scenario,it is essential to compute the overlapping area between adjacent images corresponding to their perspective quadrilaterals.Obviously,both quadrilaterals are convex,as well as the intersection polygon.The overlapping regions can be divided into many cases(some of which are shown in Fig.8).In order to compute the overlapping area,the position of the vertexes and the quadrilateral edge intersections should be considered,and the algorithm goes as follows.

Fig.8.Examples of intersection polygon of two FOVs caused by rotation.

1)For each vertex of the fi rst quadrilateral,check whether it is contained inside the second one.If it is,store coordinates of the point.

2)For each vertex of the second quadrilateral,check whether it is contained inside the fi rst one.If it is,store coordinates of the point.

3)For each edge of one of the quadrilaterals(no matter which one),check intersections with edges of the other.Store coordinates of intersection points.

4)Order the triangles to form a convex polygon.

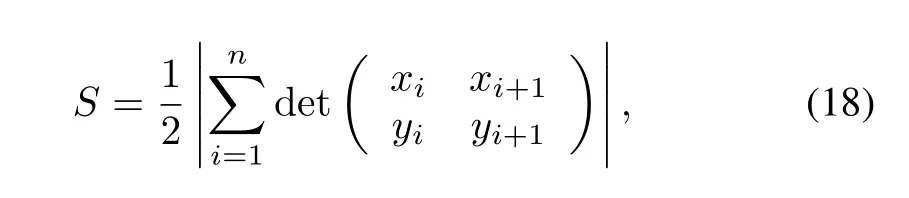

5)Compute the area using the shoelace formula[9]as a cyclic sum of determinants:

whereSis the area of the overlapping region,nis the number of sides of the polygon,and(xi,yi),i=1,2,···,nare the vertexes of the polygon ordered in step 4.Note thatxn+1=x1,andyn+1=y1.

The algorithm can also be used for the computation of pure 3D translation and both rotation and translation.In a similar way,the overlap area among more than two successive frames can be computed.

D.Motion Constraint Simulation

In this section,simulation of the Mars exploration rover (MER)descending and landing is taken[1].The series of simulation results are carried out to re fl ect partially the major aim of establishing the relationship between the motion and the overlapping area.As shown in Fig.9 three images are taken during descent at roughly 2000m,1700m and 1400m above the Mars surface.The 45-degree FOV navigation camera with focal length 14.67mm is selected,because it has the best balance between pixel resolution and image overlap given the descent dynamics.It starts by tracking two features between the fi rst image and the second,and two features between the second image and the third.The images are taken 13.75s apart, given a nominal descent speed of 75m/s.

Using the overlapping area computation algorithm in Section II,the vertexes and the quadrilateral edge intersections can be found out.The different overlap regions from frame to frame can be computed and represented in different colors as shown in Fig.10.

Fig.9.Mars landing and the FOVs of lander.

Fig.11(a)shows a schematic relationship betweenS/S0and the translation alongx,yaxes from 2000 meters to 1700 meters,whereSis the overlapping area,andS0is the projected visual area in the fi rst image.The maximum ratio of area is 85%,because the projected visual area becomes smaller during descent.In order to maintain overlapping area ratio greater than 70%,it needs that the horizontal velocity is at most 104m/s when the lander descends from 2000m to 1700m,and 85m/s is the maximum horizontal velocity when descending from 1700m to 1400m.

Fig.10.Overlap regions of different images.

Indeed,in the descending and landing process,the horizontal velocity of the lander is no more than decameters per second.According to(7),(9),(11),and(13),the overlapping area is larger if the attitude of lander is higher.If the lander takes images at high altitude,for example,at the time of the heat shield separation(about 5~9km),the constraint of the horizontal velocity is about hundreds of m/s.

Fig.11(b)shows the relationship betweenS/S0and the translation along thex,zaxes(velocity in directionyis assumed to be zero).Simulation results are presented to show that if the horizontal velocity inxdirection expected during descent is 30 m/s,the vertical velocity should be less than 333m/s when the lander descends from 2000m to 1700m.

Without considering translation,formula(18)makes for a relatively easy calculation of the overlapping area.The relationship betweenS/S0and rotation around thex,yaxes is shown in Fig.12(a),in whichψ,θare the rotation of radians about thexandyaxes,respectively;γis the FOV angle. Obviously,there is no overlapping region if the rotation radians around the two axes exceed half ofγ.To keep overlapping area ratio more than 70%,the rotation angle should be less than about 5.9°.

Fig.11.Relationship betweenS/S0and translation.

Fig.12.Relationship betweenS/S0and rotation.

The relationship betweenS/S0and rotation around thex,zaxes is shown in Fig.12(b),whereφis the rotation of radians about thezaxis.As can be seen from the graph,if the landerjust rotates around thezaxis,the minimum overlapping area ratio is 75%,and rotating around thezaxis has smaller effects on the overlapping area than rotating around thexaxis.

III.HORIZONTAL VELOCITY ESTIMATION USING FEATURE MATCHING

A.Feature Points Matching and Horizontal Velocity Estimation

Horizontal velocity is estimated by feature points matching between two consecutive images of the sequence acquired during the descent phase.Recently,feature points matching algorithm named af fi ne scale-invariant feature transform(ASIFT) has been proposed[14].It simulates all possible image views and thus is fully af fi ne invariant.ASIFT can handle much higher transition tilts than scale-invariant feature transform (SIFT)[15]or speeded up robust feature(SURF)[16],and it has characteristics of translation,scale,rotation and af fi ne invariant.Examples of matched ASIFT features(384 points matching)are shown in Fig.13,where MER-B landing images are taken at altitudes of approximately where and 1690m.

Fig.13.Feature points matching by ASIFT.

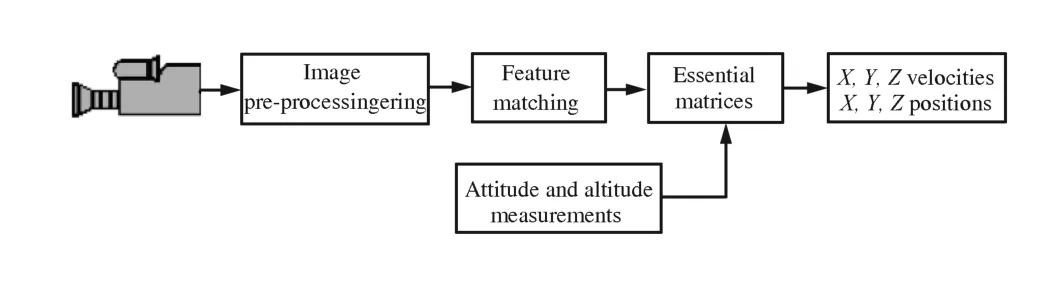

Since several hundreds of feature points can be tracked in the overlapping area of the image sequence,such correspondences are used to estimate the relative position between two views,which is the essential matrixE.To this purpose,we adopt an approach which exploits a prior knowledge on the attitude and altitude measurements(Fig.14).

Fig.14.Comparison of feature matching by different algorithms.

Correspondence methods for extracting 3D structure from two views of a given scene are based on the epipolar geometry.{(mmmi,}are the points in the scene projected to the fi rst and the second views.The relationship betweenmmmiandis described by the formulawheremmmi=[uivif]Tandinclude the image coordinates of the matching points and the focus.Eis the essential matrix, expressing cross product as matrix multiplication,

Calculating the essential matrix requires the knowledge of the intrinsic camera plus a number of corresponding pairs of points from the two views,which have already been obtained in the earlier stage.In addition,ground relative attitudeRcomes from inertial measurement unit(IMU),and vertical altitudetzcomes from radar altimeter.So the epipolar geometry can be reconstructed by estimating the essential matrix from point correspondences.Each correspondence leads to a homogeneous equation of the simple form,i.e.,

Two unknown parameterstx,tycan be estimated using at least two corresponding point pairs.But there are normally more than dozens of matching points,so the method of least squares is used for calculating the parameters of the best fi t. Thustxandtyare easy to be computed and given by

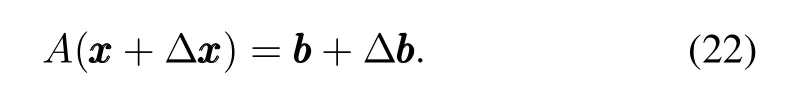

After estimating the relative position,the horizontal velocity can be easily gained by the image interval time and the rotation matrix.In addition,errors in the attitude and altitude estimates, and radial distortion would leak into the horizontal velocity estimate.This coupling of sensor errors have to be accounted for when generating a total velocity estimation error budget for simulation.Some of the representative parameters are shown in Table I.

B.Gross Error Elimination

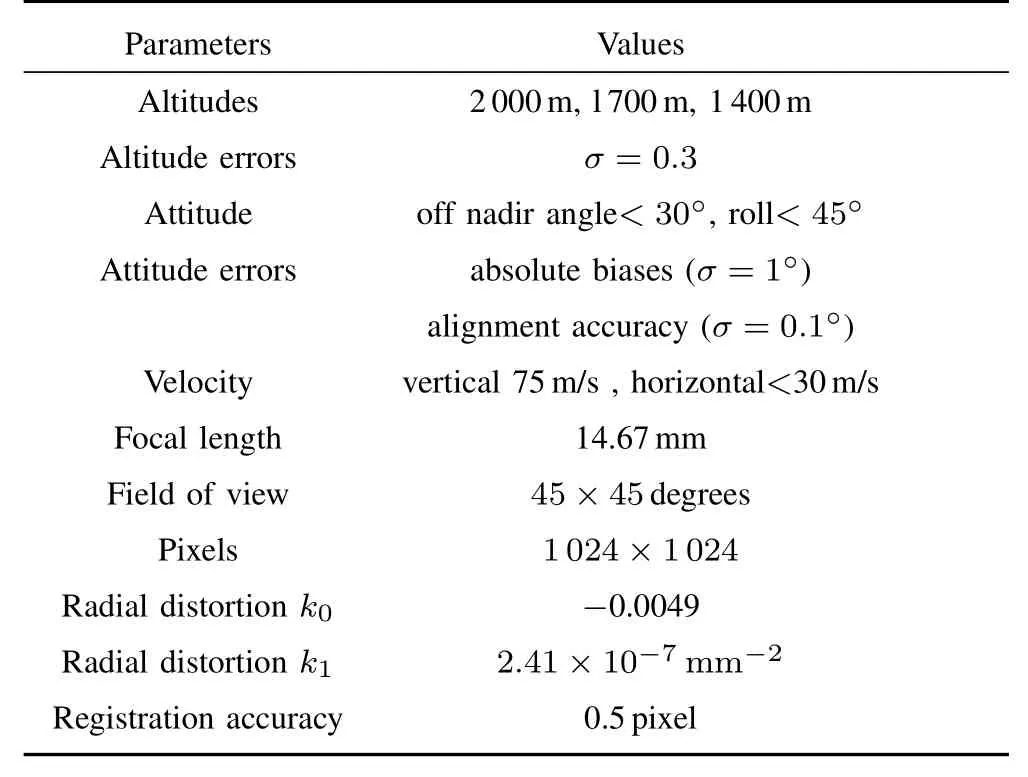

Obviously,the estimation of horizontal velocity is critically important,with any miscalculation potentially leading to the mission′s failure.But in the computation oftxandty,the least squares may not be the ideal solution when gross errors appear (up to hundreds of m/s,see Fig.15),especially using less than 5 equations.The gross errors are not caused by the egregious mismatches,but due to the ill-conditioned matrices with large condition numberκ(A)=kAkkA-1k(Fig.16).The condition number times the relative change in the data bound the relative change in the solution.The proof is as follows.

Proof.Letxdenote the solution vector of the linear systemA xxx=bbb.If we changebbbtobbb+Δbbb,the new solution isxxx+Δxxxwith

TABLE I REPRESENTATIVE PARAMETERS IN SIMULATION

So matrixC=ATAis badly conditioned or ill-conditioned if condition numberκ(A)=is large,the relative error inxxxcan be much larger than that inbbborA.□

Therefore it is extremely important in the work of numerical modeling to ensure that the constructed matrix has a low condition number.Existing methods for this problem are mostly based upon the singular value decomposition(SVD) methods,iterative methods[17],or some other methods[18-19]. These methods have been proven to be a cure for such illconditioned linear algebraic systems,although they generally converge very slowly or do not have very obvious effects on some ill-conditioned matrices.

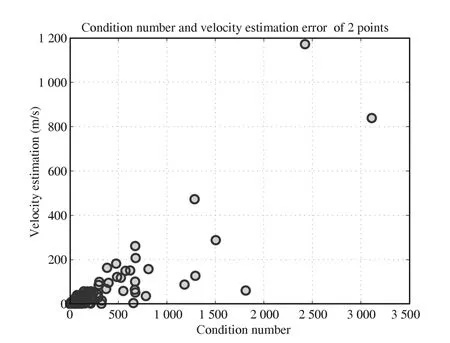

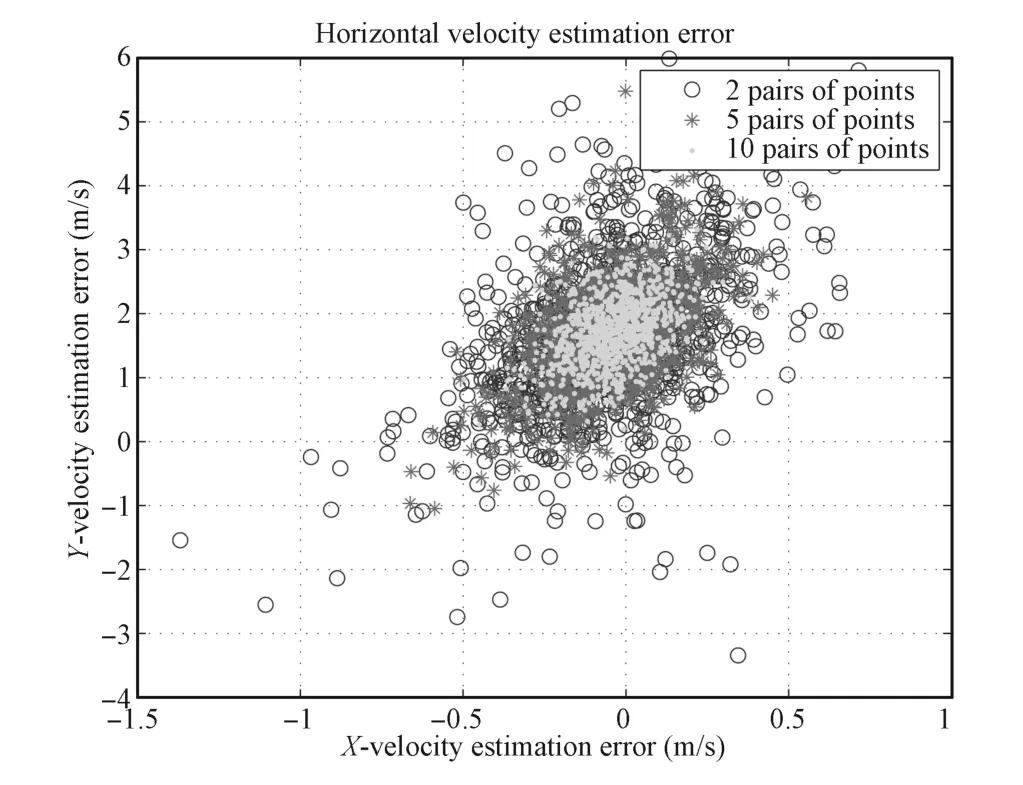

The matrices of ill-conditioned systems are characterized by large condition numbers,and usually dozens of matching points are available.Then the points that associate with large condition number matrices(using matrixAinstead of matrixCfor the sake of simplicity)can be fi rstly eliminated before solving fi nal governing equations.By this way,complex calculations mentioned above can be avoided.Furthermore, the solution becomes more stable.Thousands of Monte Carlo runs have been conducted using this algorithm to produce horizontal velocity estimation,and no gross errors appear. Results are presented in Fig.17.

Fig.18 shows an obvious improvement in accuracy,after eliminating the large condition number matrices(κ(A)>10). The velocity estimating accuracy increases with the number of the available feature points,both in matrices with or without elimination of large condition numbers.Addressing the least square theory,the accuracy of estimating parameters for linear system embedded in noise is inversely proportional towherenis the number of equations andtis the number of parameters.Using 6 pairs of points,the error of velocity estimation can reach as low as 2.5m/s,and little increase in accuracy is achieved with more than 10 feature points.There is also an increase of systematic errors(about 2m/s in this simulation)tending to be consistent in the radial lens distortion variation and the direction of lander movement.When metric and quality results are required,automatically correcting the radial lens distortion is necessary before computing the velocity.

Fig.15.Velocity estimation with gross error.

Fig.16.Velocity estimation error and the condition numbers.

The estimation accuracy increases at the expense of long computation time.In principle,for the least squares withnequations andtunknown parameters,it takes O(t2n)to multiplyATbyA,O(tn)to multiplyATbyL,O(t3)to compute the Cholesky or LU factorization ofATAand then to compute the productC-1ATL.Asymptotically,O(t2n) dominates O(tn),so the O(tn)can be ignored.Sincen≥t, which means that O(t2n)asymptotically dominates O(t3),the total time complexity is O(t2n).Particularly,computing theCholesky or LU decomposition ofATAtakes signi fi cantly longer than multiplyingATbyA.The relationship between running time and the number of points is shown in Fig.19.

Fig.17.Velocity estimation error after elimination of large condition numbers.

Fig.18.Relationship between velocity estimation error and point number.

Fig.19.Relationship between running time and point number.

Whenn=2,the running time is signi fi cantly reduced, because it does not need to compute the inverse matrix with decomposition.

IV.CONCLUSION

In this paper,a new concept for visual navigation is presented based on the analysis of motion constraints and stable estimation of horizontal velocity is determined as well.Based on the projection of FOV lines,a method is presented to calculate the overlapping area between the consecutive frames. The percentage of overlapping speci fi es the allowable range of movement including translation and rotation for constraints. The proposed approach in this paper uses more than two feature points to estimate the horizontal velocity,which is more accurate than the method of DIMES,where only two points are used.Further,the proposed approach in this paper is able to eliminate the large condition number matrices to improve its stability.

REFERENCES

[1]Johnson A,Willson R,Goguen J,Alexander J,Meller D.Field testing of the mars exploration rovers decent image motion estimation system. In:Proceedings of the 2005 International Conference Robotics and Automation.Barcelona,Spain:IEEE,2005.4463-4469

[2]Tweddle B E.Computer Vision Based Navigation for Spacecraft Proximity Operations[Master dissertation],Massachusetts Institute of Technology,USA,2010.

[3]van Pham Bach V P,Lacroix Simon L,Devy Michel D.Vision-based absolute navigation for descent and landing.Journal of Field Robotics, 2012,29(4):627-647

[4]Cheng Y,Goguen J,Johnson A,Legetr C,Matthies L,Martin M S, Willson Ret al.The Mars exploration rovers descent image motion estimation system.In:Proceedings of the 2004 IEEE Intelligent Tutoring Systems,Los Alamitos,USA:IEEE,2004.13-21

[5]Johnson A,Willson R,Cheng Y,Goguen J,Leger C,Sanmartin M, Matthies Let al.Design through operation of an image-based velocity estimation system for Mars landing.International Journal of Computer Vision,2007,74(3):319-341

[6]Harris C,Stevens M.A combined corner and edge detector.In:Proceedings of the 4th Alvey Vision Conference.England:University of Manchester,1988.147-151

[7]Flandin G,Polle B,Frapard B,Vidal P,Philippe C,Voirin T.Vision based navigation for planetary exploration.In:Proceedings of the 32nd Annual AAS Rocky Mountain Guidance and Control Conference. Breckenridge,Colorado:2009.277-296

[8]Lanza Piergiorgio L,Noceti Nicoletta N,Maddaleno Corrado M,Toma Antonio T,Zini Luca Z,Odone Francesca O.A vision-based navigation facility for planetary entry descent landing.In:Proceedings of the 12th International Conference on Computer Vision.Florence,Italy:Springer, 2012.546-555

[9]Rigatos Gerasimos G R.Nonlinear Kalman fi lters and particle fi lters for integrated navigation of unmanned aerial vehicles.Robotics and Autonomous Systems,2012,60(7):978-995

[10]Li M Y,Mourikis Anastasion I M.High-precision,consistent EKF-based visual-inertial odometery.International Journal of Robotics Research, 2013,32(6):690-711

[11]Paul A J,Lorraine E P.A historical compilation of software metrics with applicability to NASA′s Orion spacecraft fl ight software sizing.Innovations in Systems and Software Engineering,2011,7(3):161-170

[12]Dubois M O,Parkes S,Dunstam M.Testing and validation of planetary vision-based navigation systems with PANGU.In:Proceedings of the 21st International Symposium on Space Flight Dynamics.Toulouse, France:ISSFD,2009

[13]Newcombe R A,Davison A J.Live dense reconstruction with a single moving camera.In:Proceedings of the 2010 International Conference on Computer Vision and Pattern Recognition.San Francisco,USA:IEEE, 2010.1498-1505

[14]Morel J M,Yu G S.ASIFT:a new framework for fully af fi ne invariant image comparison.SIAM Journal on Imaging Sciences,2009,2(2): 438-469

[15]Lowe D G.Distinctive image features from scale-invariant keypoints.International Journal of Computer Vision,2004,60(42):91-110

[16]Bay H,Ess A,Tuytelaars T,van Gool L V.SURF:speeded up robust features.Computer Vision and Image Understanding,2008,110(3): 346-359

[17]Peris R,Marquina A,Candela V.The convergence of the perturbed Newton method and its application for ill-conditioned problems.Applied Mathematics and Computation,2011,218(7):2988-3001

[18]Salahi M.On regularization of ill-conditioned linear systems.Journal of Applied Mathematics,2008,5(17):43-49

[19]Brezinski C,Novati P,Redivo Z M.A rational Arnoldi approach for ill-conditioned linear systems.Journal of Computational and Applied Mathematics,2012,236(8):2063-2077

Wei Shao Associate professor at Qingdao University of Science&Technology.He received his Ph.D. degree from Harbin Institute of Technology in 2009. His research interests include deepspace autonomous navigation and computer vision.Corresponding author of this paper.

Shulin Sui President of the School of Mathematics &Physics,Qingdao University of Science&Technology.He received his Ph.D.degree from Beijing Jiaotong University.His research interests include optimality theory and autonomous navigation.

received her master degree from Qingdao University of Science&Technology in 2014.Her main research interest is autonomous navigation.

Manuscript received October 10,2013;accepted May 27,2014.This work was supported by National Basic Research Program of China(973 Program) (2012CB720000),National Natural Science Foundation of China(61104187) and Promotive Research Fund for Excellent Young and Middle-aged Scientists of Shandong Province(BS2012NY003).Recommended by Associate Editor Bin Xian.

:Wei Shao,Shulin Sui,Lin Meng,Yaobin Yue.Stable estimation of horizontal velocity for planetary lander with motion constraints.IEEE/CAA Journal of Automatica Sinica,2015,2(2):198-206

Wei Shao is with the College of Automation&Electronic Engineering, Qingdao University of Science&Technology,Qingdao 266042,China(email:greatshao@126.com).

Shulin Sui is with the School of Mathematics&Physics,Qingdao University of Science&Technology,Qingdao 266061,China(e-mail:@hotmail.com).

Lin Meng is with the College of Automation&Electronic Engineering, Qingdao University of Science&Technology,Qingdao 266042,China(email:menglin0216@126.com).

Yaobin Yue is with the School of Mathematics&Physics,Qingdao University of Science&Technology,Qingdao 266061,China(e-mail: yybs2002@163.com).

Yaobin Yue Lecturer at the College of Automation&Electronic Engineering,Qingdao University of Science&Technology.He received his master degree from Shandong University of Science and Technology in 2006.His research interests include control theory study and engineering application.

杂志排行

IEEE/CAA Journal of Automatica Sinica的其它文章

- Single Image Fog Removal Based on Local Extrema

- An Overview of Research in Distributed Attitude Coordination Control

- Operation Ef fi ciency Optimisation Modelling and Application of Model Predictive Control

- Cloud Control Systems

- Production Line Capacity Planning Concerning Uncertain Demands for a Class of Manufacturing Systems with Multiple Products

- Consensus Seeking for Discrete-time Multi-agent Systems with Communication Delay