Radial-curve-based facial expression recognition

2015-04-22


YUE Lei(岳雷)1, SHEN Ting-zhi(沈庭芝)1, ZHANG Chao(张超)1, ZHAO Shan-yuan(赵三元)2, DU Bu-zhi(杜部致)1

(1.School of Information and Electronics, Beijing Institute of Technology, Beijing 100081, China;2.School of Computer Science and Technology, Beijing Institute of Technology, Beijing 100081, China)

A fully automatic facial-expression recognition (FER) system for 3D expression mesh models is proposed. The system requires no human interaction from the feature extraction stage through the facial expression classification stage. The features extracted from a 3D expression mesh model are a set of radial facial curves that represent the spatial deformation of the geometric features of the human face. Each facial curve is a surface line on the 3D face mesh model that begins at the nose tip and ends at the boundary of the previously trimmed 3D face point cloud. The Euclidean distance between the facial curves extracted from the neutral face and from each expressive face of the same person is then used as the feature. With a support vector machine (SVM) as the classifier, the experimental results on the well-known BU-3DFE dataset indicate that the proposed system can classify the six prototypical facial expressions better than state-of-the-art algorithms.

facial expression; radial curve; Euclidean distance; support vector machine (SVM)

Facial expression plays a key role in non-verbal face-to-face communication. As Mehrabian[1] noted, facial expressions have a paramount impact on human interaction: about 55 percent of the effectiveness of a conversation relies on facial expressions, 38 percent is conveyed by voice intonation, and 7 percent by the spoken words. Therefore, automatic facial expression recognition is essential to unleash the potential of many applications, such as intelligent human-computer interaction (HCI), social analysis of human behavior, and human emotion analysis.

Pioneering research on human expression can be traced back to 1872, when Darwin, on the basis of his evolutionary theory, first pointed out that facial expressions are universal across nations and ethnic groups. This early study is the basis for the definition of the six basic expressions (anger, disgust, fear, happiness, sadness, and surprise).

Early research on facial-expression recognition (FER) focused mostly on 2D images because no 3D facial expression dataset was publicly available. For 2D FER, readers can refer to the recent survey papers[2,11] for a comprehensive understanding. In 2006, Yin et al. introduced the 3D mesh dataset BU-3DFE[3]. Since then, research attention has shifted toward 3D FER.

Wang et al.[4] used principal curvatures to assign so-called primitive geometric features, or topographic labels, to the facial surface. The basic labels are peak, ridge, saddle, hill, flat, ravine, and pit. Hill-labeled pixels can be further specified as convex hill, concave hill, saddle hill, or slope hill. Saddle hills can be further distinguished as concave saddle hill or convex saddle hill, and saddles as ridge saddle or ravine saddle, which gives a total of 12 types of topographic labels. Among these 12 labels, six are more important than the others: convex hill, concave hill, convex saddle hill, concave saddle hill, ridge, and ravine. The face image is segmented into eight sub-regions, and the counts of the six topographic labels in each of the eight sub-regions are used as the feature. Soyel et al.[5] relied on distance vectors retrieved from the 3D distribution of facial feature points to classify universal facial expressions. Tang et al.[6] proposed an automatic feature selection method based on maximizing the average relative entropy of marginalized class-conditional feature distributions, and applied it to a complete pool of candidate features composed of normalized Euclidean distances between manually located facial feature points in the 3D point cloud.

1 Fully automatic radial curve feature extraction

Several studies have analyzed 3D facial expressions and obtained promising classification rates; for example, Tang et al.[6] reported an average recognition rate of 95.1%. However, most of them extract features from fiducial points that were manually labeled on the 3D facial mesh model, which leads to a semi-automatic procedure that cannot be used to analyze human emotion in real time. We therefore propose a fully automatic feature extraction procedure based on facial radial curves to address this problem.

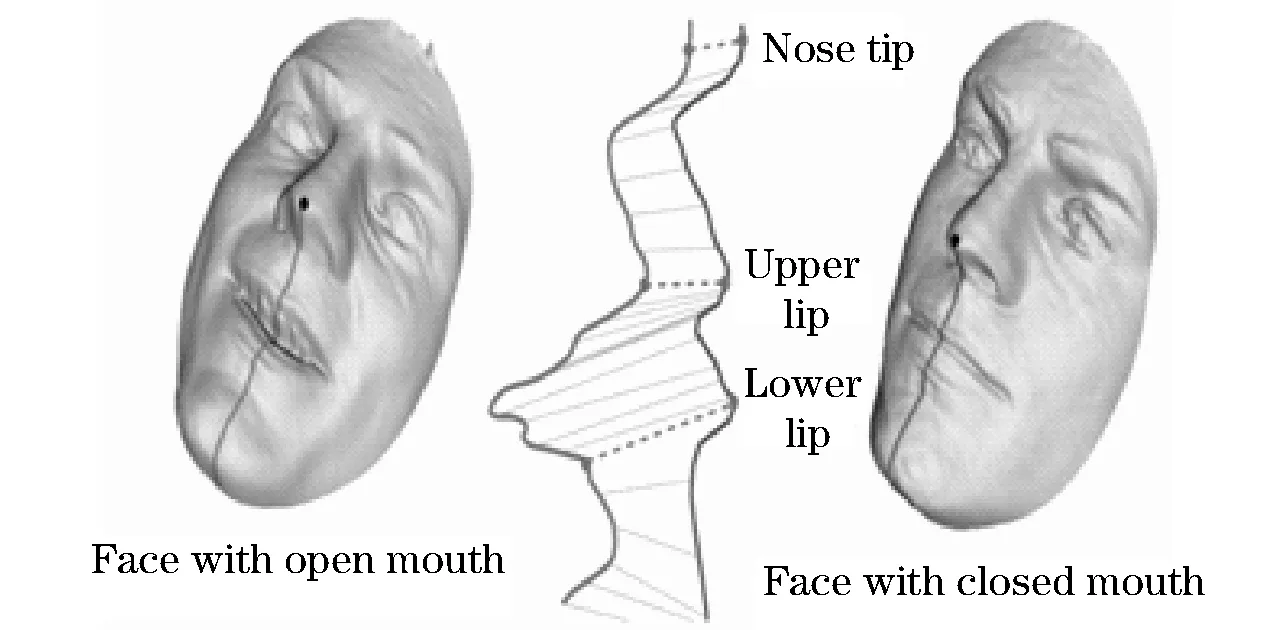

Inspired by the difference between the cutting curves of the same person's face under different expressions (Fig.1), we introduced a new facial feature that measures the distance between radial cutting curves on the surface of the 3D facial mesh model. First, we automatically located the nose tip on a facial point cloud from the BU-3DFE dataset. The coordinate system of the point clouds in that dataset is fixed: the positive X-axis points horizontally to the right, the positive Y-axis points straight up, and the positive Z-axis is perpendicular to the plane defined by the X-axis and the Y-axis. From facial anatomy, the point with the maximum Z value on each 3D facial mesh model is therefore taken to be the tip of the nose.
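The nose-tip rule described above can be sketched in a few lines of Python. This is a minimal illustration, assuming the point cloud is available as a list of (x, y, z) tuples in the dataset's coordinate system; the function name `locate_nose_tip` and the toy points are hypothetical and not taken from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def locate_nose_tip(points: List[Point]) -> Point:
    """Return the point with the largest Z coordinate.

    Assumes a trimmed, roughly frontal face scan, so that the
    maximum-Z point is the nose tip (the rule stated in the text).
    """
    if not points:
        raise ValueError("empty point cloud")
    return max(points, key=lambda p: p[2])


# Toy point cloud (made-up coordinates, for illustration only).
cloud = [(0.0, 0.0, 10.0), (5.0, -2.0, 3.5), (-4.0, 6.0, 1.0)]
nose_tip = locate_nose_tip(cloud)
```

The rule relies on the scan being trimmed and frontal; a rotated head or stray points such as hair would need an extra alignment or filtering step, which is outside this sketch.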

Fig.1 Difference between facial curves

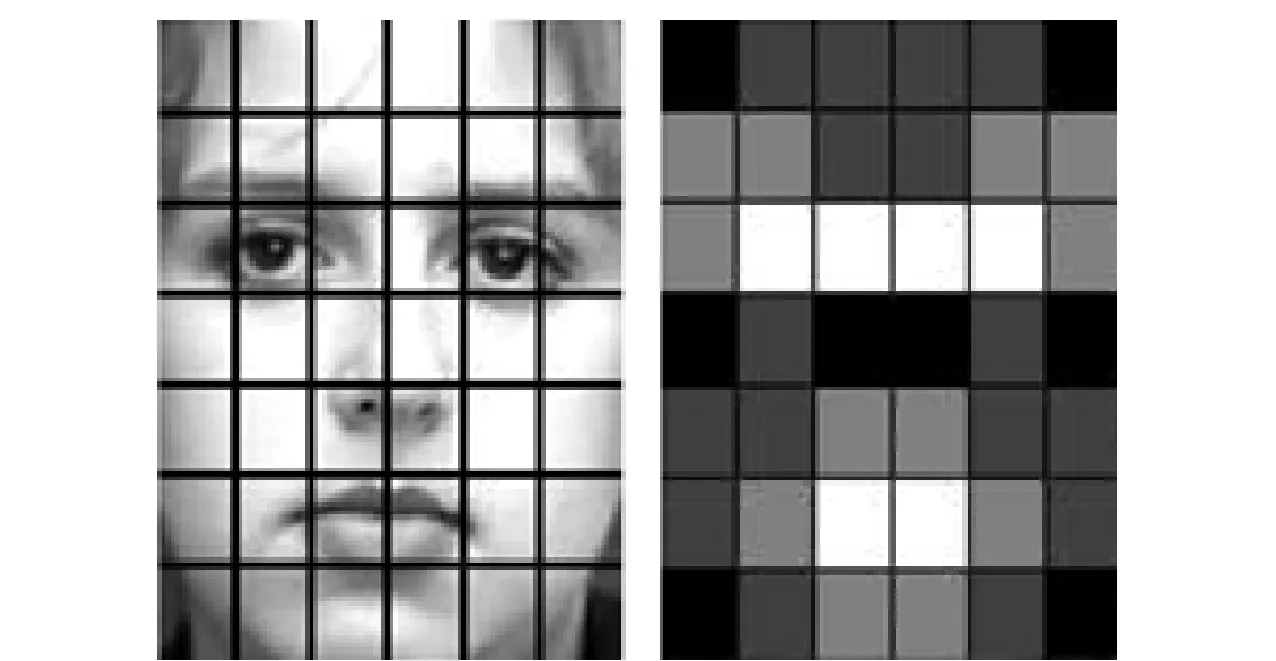

After the nose tip was located on the point cloud, we drew a set of planes through this point, each perpendicular to the X-Y plane, and extracted the intersection lines of these planes with the surface of the 3D mesh model as radial curves. The angles between adjacent facial curves were not uniform, because different parts of the face contribute to an expression to different degrees. Generally speaking, the eyes and mouth have stronger discriminative power than other parts of the face, such as the cheeks. Fig.2 shows experimental results on the importance of different parts of a single face; the brighter the color, the more important the part[7]. As shown in Fig.2, the eye and mouth regions have higher weights. We therefore drew dense facial curves in these regions, for example 5° between adjacent curves, and sparse curves elsewhere on the face, for example 10° between adjacent curves. We then concatenated the evenly spaced samples of each radial facial curve, running from the nose tip to the edge of the mesh model, to represent a single face.

Fig.2 Different parts of a face represent different importance
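The curve layout and extraction can be sketched as follows. The sketch makes several assumptions that the paper does not specify: the angular sectors treated as eye and mouth regions (`DENSE_SECTORS`) are hypothetical, the intersection with each cutting plane is approximated by keeping cloud points within a tolerance `tol` of the plane, each sample is the Z value of the surface, and even sampling is done over radial distance in the X-Y plane rather than over arc length.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical sectors (degrees, counter-clockwise from the +X axis) that
# stand in for the eye and mouth regions; the paper gives no exact ranges.
DENSE_SECTORS = [(30.0, 150.0), (240.0, 300.0)]


def curve_angles(dense_step: float = 5.0, sparse_step: float = 10.0,
                 sectors: Sequence[Tuple[float, float]] = DENSE_SECTORS) -> List[float]:
    """Angles of the cutting planes: dense inside the sectors, sparse elsewhere."""
    angles, a = [], 0.0
    while a < 360.0:
        angles.append(a)
        dense = any(lo <= a < hi for lo, hi in sectors)
        a += dense_step if dense else sparse_step
    return angles


def resample_evenly(profile: List[Tuple[float, float]], n_samples: int) -> List[float]:
    """Linearly interpolate a sorted (radius, z) profile at n evenly spaced radii."""
    if n_samples < 2:
        raise ValueError("n_samples must be at least 2")
    r0, r1 = profile[0][0], profile[-1][0]
    out, j = [], 0
    for k in range(n_samples):
        r = r0 + (r1 - r0) * k / (n_samples - 1)
        # Advance to the segment [profile[j], profile[j + 1]] that contains r.
        while j < len(profile) - 2 and profile[j + 1][0] < r:
            j += 1
        (ra, za), (rb, zb) = profile[j], profile[j + 1]
        t = 0.0 if rb == ra else (r - ra) / (rb - ra)
        out.append(za + t * (zb - za))
    return out


def extract_radial_curve(points: Sequence[Point], nose_tip: Point,
                         angle_deg: float, n_samples: int, tol: float = 1.0) -> List[float]:
    """Approximate one radial curve starting at the nose tip in direction angle_deg."""
    ux, uy = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    profile = []
    for x, y, z in points:
        dx, dy = x - nose_tip[0], y - nose_tip[1]
        along = dx * ux + dy * uy      # distance from the nose tip along the ray
        across = -dx * uy + dy * ux    # signed distance from the cutting plane
        if along >= 0.0 and abs(across) <= tol:
            profile.append((along, z))
    if len(profile) < 2:
        raise ValueError("too few points near the cutting plane")
    profile.sort()
    return resample_evenly(profile, n_samples)


# Toy cloud: a straight slope along +X that falls from z = 10 at the nose tip.
demo_cloud = [(float(x), 0.0, 10.0 - x) for x in range(11)]
demo_curve = extract_radial_curve(demo_cloud, (0.0, 0.0, 10.0), 0.0, n_samples=3)
```

With the default sectors, `curve_angles()` yields 54 cutting directions. On a real mesh, intersecting each plane with the triangle faces would give a cleaner curve than the tolerance band used here.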

When facial curves had been extracted from the apex frame of each expression and from the corresponding neutral face, we evenly sampled each facial curve into an $n$-dimensional vector $E_n$ and calculated the Euclidean distance

$$d = \lVert E_n - N_n \rVert = \sqrt{\sum_{i=1}^{n} \left(E_{n,i} - N_{n,i}\right)^2} \tag{1}$$

between the two corresponding curve vectors $(E_n, N_n)$ on the apex expression face and the neutral face, where $N_n$ is the corresponding curve vector on the neutral face and $E_{n,i}$, $N_{n,i}$ denote the $i$-th samples of the two vectors. For example, of the two lines in the middle of Fig.2, the left line is the curve for apex happiness and the right line is the curve for the neutral face.

We then concatenated the Euclidean distances computed for all pairs of corresponding facial curves into a histogram feature D, which was fed to the classifier.
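The per-curve distance and the concatenation into a feature vector can be sketched as below. The sketch assumes each face is already represented as a list of sampled curves, with the same curve order and the same number of samples for the expressive and neutral faces; the function names and toy values are hypothetical.

```python
import math
from typing import List, Sequence


def curve_distance(expr_curve: Sequence[float], neutral_curve: Sequence[float]) -> float:
    """Euclidean distance between two sampled curves of equal length."""
    if len(expr_curve) != len(neutral_curve):
        raise ValueError("curves must have the same number of samples")
    return math.sqrt(sum((e - n) ** 2 for e, n in zip(expr_curve, neutral_curve)))


def face_feature(expr_curves: Sequence[Sequence[float]],
                 neutral_curves: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate the per-curve distances into one feature vector."""
    if len(expr_curves) != len(neutral_curves):
        raise ValueError("faces must have the same number of curves")
    return [curve_distance(e, n) for e, n in zip(expr_curves, neutral_curves)]


# Two toy curves per face (made-up samples, for illustration only).
expr = [[3.0, 4.0], [1.0, 1.0]]
neutral = [[0.0, 0.0], [1.0, 1.0]]
feature = face_feature(expr, neutral)
```

The feature has one entry per radial curve, so its length equals the number of cutting directions.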

2 Automatic classification

A FER system is usually composed of three components: face detection, expression feature extraction, and classification. The 3D mesh models provided in the BU-3DFE dataset are already trimmed, so once the 3D radial-curve-based features had been extracted automatically, the last component of the fully automatic 3D FER task was automatic classification.

We employed the widely used support vector machine (SVM)[9] as the classifier. An SVM is a supervised learning model, with associated learning algorithms, that analyzes data and recognizes patterns; it is widely used for classification and regression analysis. We ran our experiments with ten rounds of cross validation. In each round, we randomly picked 80 subjects as the training set and used the other 20 subjects as the testing set. We did not balance the training and testing sets with respect to the ratio of male to female subjects because, according to the Facial Action Coding System (FACS) of Ekman and Friesen[10], there is no indication that the two genders produce the same facial expression with different action unit (AU) combinations; the same expression should be delivered in the same way. When feeding the features to the SVM, we used grid search to find automatically the parameters that maximize the classification rate, and we tried different kernel functions to obtain the best performance.
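The partition scheme, ten random 80/20 splits by subject, can be sketched as follows. Splitting by subject keeps all models of one person on the same side of the split. The function name, the fixed seed, and the use of integer subject identifiers are assumptions made for this illustration.

```python
import random
from typing import List, Sequence, Tuple


def subject_splits(subject_ids: Sequence[int], n_rounds: int = 10,
                   n_train: int = 80, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Return n_rounds random (train, test) partitions of the subjects."""
    rng = random.Random(seed)  # fixed seed only to make the sketch reproducible
    splits = []
    for _ in range(n_rounds):
        shuffled = list(subject_ids)
        rng.shuffle(shuffled)
        splits.append((sorted(shuffled[:n_train]), sorted(shuffled[n_train:])))
    return splits


# 100 subjects, as in the dataset; identifiers 0..99 are placeholders.
splits = subject_splits(range(100))
```

Each round would then train the classifier on the models of the 80 training subjects and report the recognition rate on the remaining 20.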

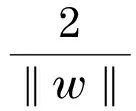

2.1 Support vector machine

The main scheme of SVM is as follows. The training data $D$ is a set of $m$ points of the form

$$D = \{(x_i, y_i) \mid x_i \in \mathbb{R}^p,\ y_i \in \{-1, 1\}\}_{i=1}^{m} \tag{2}$$

where $y_i$ is either 1 or -1, indicating the class to which the point $x_i$ belongs, and each $x_i$ is a $p$-dimensional real vector. The goal of SVM is to find the maximum-margin hyperplane that separates the points with $y_i = 1$ from those with $y_i = -1$. The two marginal hyperplanes can be represented as

$$w \cdot x + b = 1 \tag{3}$$

$$w \cdot x + b = -1 \tag{4}$$

where $y_i[(w \cdot x_i) + b] - 1 \ge 0$, $i = 1, 2, \ldots, m$, is the constraint. The equations above assume that the training data $D$ is linearly separable. If this assumption does not hold, a kernel function is used to map the low-dimensional sample points into a higher-dimensional space, where the data may become linearly separable. The most commonly used kernel functions are linear, polynomial, RBF, and sigmoid. There is no established evidence that one kernel function is better than the others for a specific task. Therefore, when performing classification, we tried different kernel functions and kept the best results.
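For reference, the four kernel families named above can be written out as plain functions. The forms follow the usual parameterization with `gamma`, `coef0`, and `degree`; the default parameter values are illustrative assumptions, not the values selected by grid search in the experiments.

```python
import math
from typing import Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def linear_kernel(u: Sequence[float], v: Sequence[float]) -> float:
    return dot(u, v)


def polynomial_kernel(u: Sequence[float], v: Sequence[float],
                      gamma: float = 1.0, coef0: float = 0.0, degree: int = 3) -> float:
    return (gamma * dot(u, v) + coef0) ** degree


def rbf_kernel(u: Sequence[float], v: Sequence[float], gamma: float = 1.0) -> float:
    squared_distance = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-gamma * squared_distance)


def sigmoid_kernel(u: Sequence[float], v: Sequence[float],
                   gamma: float = 1.0, coef0: float = 0.0) -> float:
    return math.tanh(gamma * dot(u, v) + coef0)
```

The RBF kernel depends only on the distance between the two points, so it equals 1 for identical inputs and decays toward 0 as they move apart; `gamma` controls how fast it decays.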

3 Experiments

We conducted our experiments on the 3D expression mesh models from the widely used BU-3DFE dataset[3]. The automatically extracted facial radial curve features were fed to the SVM under ten rounds of cross validation, with optimized parameters selected automatically by grid search.

3.1 BU-3DFE dataset

The BU-3DFE dataset was developed at Binghamton University for the purpose of evaluating 3D FER algorithms. The database comes in two versions, one with static data and the other with dynamic data. The static database includes still color images, while the dynamic database contains video sequences of subjects with expressions. Both versions include the neutral expression in addition to the six prototypic expressions.

A total of 100 subjects participated in the face scans, including undergraduates, graduates, and faculty from various departments of Binghamton University (e.g. Psychology, Arts, and Engineering) and State University of New York (e.g. Computer Science, Electrical Engineering, and Mechanical Engineering). The majority of participants were undergraduates from the Psychology Department. The resulting database consists of about 60% female and 40% male subjects with a variety of ethnic/racial ancestries, including White, Black, East-Asian, Middle-east Asian, Hispanic Latino, and others. Each subject performed seven expressions (six prototypic expressions and neutral) in front of the 3D face scanner. With the exception of the neutral expression, each of the six prototypic expressions (i.e. happiness, disgust, fear, anger, surprise, and sadness) includes four levels of intensity. The six prototypic expressions and the neutral face are shown in Fig.3. Therefore, there are 25 3D expression models for each subject (6 expressions × 4 intensity levels + 1 neutral model). As a result, the dataset contains 2 500 3D facial expression models in total, and each model has its corresponding 2D texture image.

Fig.3 Samples of seven expressions (from left to right): anger, disgust, fear, happiness, neutral, sadness, and surprise

3.2 Experiment results

We carried out our experiments using LIBSVM[8], a widely used SVM library developed by Chang and Lin, with ten rounds of cross validation under the training and testing partition scheme described above. The best classification results were obtained with the RBF kernel function. The confusion matrix (Tab.1) shows classification rates that are promising by the standards of the FER community. The highest recognition rate, 94.2%, was obtained on surprise, mainly because the mouth and eyes deform more for this expression than for the others, so the curves on a surprised face differ greatly from those on a neutral face. The lowest recognition rate, 78.5%, was obtained on fear. According to the confusion matrix, anger is most often misclassified as fear, and fear tends to be misclassified as disgust or happiness.

Tab.1 Confusion matrix %
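A row-normalized confusion matrix in percent, of the kind reported in Tab.1, can be computed from true and predicted labels as in the following sketch. The label order and the toy labels are assumptions made for illustration; they are not the paper's results.

```python
from typing import List, Sequence

EXPRESSIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]


def confusion_matrix_percent(y_true: Sequence[str], y_pred: Sequence[str],
                             labels: Sequence[str] = EXPRESSIONS) -> List[List[float]]:
    """Rows are true classes, columns are predicted classes, entries are row percentages."""
    index = {label: i for i, label in enumerate(labels)}
    counts = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        counts[index[t]][index[p]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # A class with no test samples gets an all-zero row.
        matrix.append([100.0 * c / total if total else 0.0 for c in row])
    return matrix


# Made-up predictions: one of four fear samples is mistaken for disgust.
y_true = ["fear", "fear", "fear", "fear", "surprise", "surprise"]
y_pred = ["fear", "fear", "fear", "disgust", "surprise", "surprise"]
cm = confusion_matrix_percent(y_true, y_pred)
```

The diagonal entry of each row is the recognition rate of that expression, and the off-diagonal entries show which expressions it is confused with.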

Compared with the state-of-the-art FER algorithm proposed by Tang et al.[6], our method performs better on some expressions, such as disgust, but slightly worse on the others. Nevertheless, our proposal has a major advantage over their scheme. They used a subset of the 83 manually located fiducial points on the 3D facial point cloud to extract 24 Euclidean distances as features, which leads to a semi-automatic procedure; a practical real-time system for human expression and emotion analysis cannot tolerate that much human interaction.

Our proposal still has room for improvement. For example, we simply sampled the curves evenly, which is not precise. We will continue this research to obtain better classification results.

4 Conclusion

In this paper, we introduced a new feature based on facial curves generated from a 3D face mesh model to handle the FER task, and achieved a promising recognition rate. When generating the feature histograms, we simply sampled the curves evenly, which is not precise: as Fig.2 shows, the weight of each sample differs along each curve from the nose tip to the facial edge. We will take this into consideration to further improve the recognition rate.

[1] Mehrabian A. Communication without words[J]. Psychology Today, 1968, 2(4):53-56.

[2] Pantic M. Machine analysis of facial behavior: naturalistic & dynamic behavior[J]. Philosophical Transactions of the Royal Society B Biological Sciences, 2009, 364(1535):3505-3513.

[3] Yin L, Wei X, Sun Y, et al. A 3D facial expression database for facial behavior research[C]∥International Conference on Automatic Face and Gesture Recognition, Southampton, UK, 2006.

[4] Wang J, Yin L, Wei X. 3D facial expression recognition based on primitive surface feature distribution[C]∥IEEE Conference on Computer Vision and Pattern Recognition, New York, USA, 2006.

[5] Soyel H, Demirel H. Facial expression recognition using 3D facial feature distances[C]∥International Conference on Image Analysis and Recognition, Montreal, Canada, 2007.

[6] Tang H, Huang T. 3D facial expression recognition based on automatically selected features[C]∥IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, Alaska, USA, 2008.

[7] Shan C, Gong S, McOwan P. Robust facial expression recognition using local binary patterns[C]∥IEEE International Conference on Image Processing, Genova, Italy, 2005.

[8] Chang C, Lin C. LIBSVM: a library for support vector machines[J]. ACM Transactions on Intelligent Systems and Technology, 2011, 2(27):1-27.

[9] Cortes C, Vapnik V. Support-vector networks[J]. Machine Learning, 1995, 20:273-297.

[10] Ekman P, Friesen W. Facial action coding system: a technique for the measurement of facial movement[M]. Palo Alto: Consulting Psychologists Press, 1978.

[11] Zeng Z, Pantic M, Roisman I, et al. A survey of affect recognition methods: audio, visual, and spontaneous expressions[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2009, 31(1):39-58.

(Edited by Cai Jianying)

10.15918/j.jbit1004-0579.201524.0412

TP 37 Document code: A Article ID: 1004-0579(2015)04-0508-05

Received 2014-02-28

Supported by the National Natural Science Foundation of China(60772066)

E-mail: napoylei@163.com
