Kernel principal component analysis network for image classification

2015-03-01

Wu Dan (1, 4), Wu Jiasong (1, 2, 3, 4), Zeng Rui (1, 4), Jiang Longyu (1, 4), Lotfi Senhadji (2, 3, 4), Shu Huazhong (1, 4)

(1) Key Laboratory of Computer Network and Information Integration of Ministry of Education, Southeast University, Nanjing 210096, China

(2) Institut National de la Santé et de la Recherche Médicale U 1099, Rennes 35000, France

(3) Laboratoire Traitement du Signal et de l’Image, Université de Rennes 1, Rennes 35000, France

(4) Centre de Recherche en Information Biomédicale Sino-Français, Nanjing 210096, China

Abstract: In order to classify nonlinear features with a linear classifier and improve the classification accuracy, a deep learning network named kernel principal component analysis network (KPCANet) is proposed. First, the data are mapped into a higher-dimensional space with kernel principal component analysis to make them linearly separable. Then a two-layer KPCANet is built to obtain the principal components of the image. Finally, the principal components are classified with a linear classifier. Experimental results show that the proposed KPCANet is effective in face recognition, object recognition and handwritten digit recognition. It also generally outperforms the principal component analysis network (PCANet). In addition, KPCANet is invariant to illumination and stable to occlusion and slight deformation.

Key words: deep learning; kernel principal component analysis net (KPCANet); principal component analysis net (PCANet); face recognition; object recognition; handwritten digit recognition

Received 2015-05-04.

Biographies: Wu Dan (1990-), female, graduate; Shu Huazhong (corresponding author), male, doctor, professor, shu.list@seu.edu.cn.

Foundation items: The National Natural Science Foundation of China (No.61201344, 61271312, 61401085, 11301074), the Research Fund for the Doctoral Program of Higher Education (No.20120092120036), the Program for Special Talents in Six Fields of Jiangsu Province (No.DZXX-031), Industry-University-Research Cooperation Project of Jiangsu Province (No.BY2014127-11), “333” Project (No.BRA2015288), High-End Foreign Experts Recruitment Program (No.GDT20153200043), Open Fund of Jiangsu Engineering Center of Network Monitoring (No.KJR1404).

Citation: Wu Dan, Wu Jiasong, Zeng Rui, et al. Kernel principal component analysis network for image classification[J]. Journal of Southeast University (English Edition), 2015, 31(4): 469-473. [doi:10.3969/j.issn.1003-7985.2015.04.007]

A major difficulty of image classification is the considerable intra-class variability arising from different illuminations, rigid deformations, non-rigid deformations and occlusions, which are useless for classification and should be eliminated. Deep learning structures like deep convolutional networks have the ability to learn invariant features [1]. Bruna et al. [2] built a scattering network (ScatNet) which is invariant to both rigid and non-rigid deformations. Chan et al. [3] constructed a principal component analysis network (PCANet), which cascades principal component analysis (PCA), binary hashing, and block-wise histograms. PCANet achieves state-of-the-art accuracy on many classification datasets, such as the extended Yale B dataset, the AR dataset, and the FERET dataset. Kernel PCA (KPCA) [4-5] is a nonlinear generalization of PCA in the sense that it performs PCA in feature spaces of arbitrarily large dimension. KPCA can generally provide a better recognition rate than ordinary PCA for the following two reasons: 1) KPCA uses an arbitrary number of nonlinear components, while ordinary PCA uses only a limited number of linear principal components; 2) KPCA is more flexible than ordinary PCA, since KPCA can choose different kernel functions (for example, the Gaussian kernel, the polynomial kernel, etc.) for different recognition tasks, while ordinary PCA uses only the linear kernel function.

In this paper, we propose a new deep learning network named kernel principal component analysis network (KPCANet), which cascades two KPCA stages and one pooling stage. When the kernel function is linear, the proposed KPCANet reduces to the PCANet [3]. Experimental results show that the proposed KPCANet is invariant to illumination and stable to slight non-rigid deformation, and that it generally outperforms PCANet in both face recognition and object recognition tasks.
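The remark that a linear kernel recovers ordinary PCA can be checked numerically. The following is a minimal sketch of generic kernel PCA on random data, not the filter-learning procedure of KPCANet itself; the function names `kernel_pca`, `linear_kernel` and `pca` are illustrative and do not come from the paper.

```python
import numpy as np

def kernel_pca(X, kernel, n_components):
    """Project the rows of X onto the leading kernel principal components."""
    K = kernel(X, X)                        # N x N Gram matrix
    N = K.shape[0]
    J = np.eye(N) - np.ones((N, N)) / N     # centering matrix
    Kc = J @ K @ J                          # center the data in feature space
    eigvals, eigvecs = np.linalg.eigh(Kc)   # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:n_components]
    lam, alpha = eigvals[idx], eigvecs[:, idx]
    alpha = alpha / np.sqrt(lam)            # makes each feature-space axis unit norm
    return Kc @ alpha                       # N x n_components projections

def linear_kernel(A, B):
    return A @ B.T

def pca(X, n_components):
    """Ordinary PCA projections computed from the SVD of the centered data."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
Z_kpca = kernel_pca(X, linear_kernel, 2)
Z_pca = pca(X, 2)
# With a linear kernel the projections agree up to the sign of each component.
same = np.allclose(np.abs(Z_kpca), np.abs(Z_pca), atol=1e-8)
```

Replacing `linear_kernel` with a nonlinear kernel changes only the Gram matrix; the centering and eigendecomposition steps stay the same.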

1 KPCANet

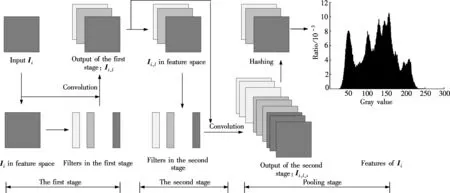

Fig.1 shows the whole structure of the proposed KPCANet, which consists of two KPCA stages and one pooling stage. Suppose that the patch size is $k_1 \times k_2$ at all stages, and all the input images are of size $m \times n$.

1.1 The first stage of KPCANet

We input $N$ images $I_i$ ($i=1,2,\ldots,N$) that belong to $c$ classes, and take a patch $p_{i,j} \in \mathbb{R}^{k_1 \times k_2}$ centered at the $j$-th pixel.

Fig.1 The detailed block diagram of the proposed KPCANet

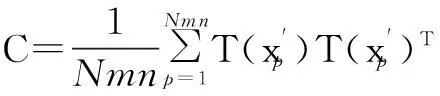

$$T:\ \mathbb{R}^{k_1 k_2 \times k_1 k_2} \to F, \quad X \mapsto X^{F} \tag{1}$$

(2)
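The patch extraction at the start of this stage can be sketched as follows. This is a minimal illustration that assumes one patch centered on every pixel, odd patch sizes, and zero padding at the image border, as in PCANet [3]; the border handling and the function name `extract_patches` are assumptions, not details taken from the text above.

```python
import numpy as np

def extract_patches(image, k1, k2):
    """Return a (k1*k2, m*n) matrix whose j-th column is the patch around pixel j.

    Assumes odd k1 and k2 so that every patch has a well-defined center pixel.
    """
    m, n = image.shape
    pad1, pad2 = k1 // 2, k2 // 2
    # Zero padding is an assumption; it lets border pixels have full-size patches.
    padded = np.pad(image, ((pad1, pad1), (pad2, pad2)), mode="constant")
    cols = []
    for r in range(m):
        for c in range(n):
            cols.append(padded[r:r + k1, c:c + k2].reshape(-1))
    return np.stack(cols, axis=1)

image = np.arange(25, dtype=float).reshape(5, 5)
patches = extract_patches(image, 3, 3)   # shape (9, 25)
```

Stacking the patch matrices of all $N$ images column-wise gives the data on which the first-stage filters would be learned.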

1.2 The second stage of KPCANet

1.3 The pooling stage of KPCANet

EveryL2input images are binarized and converted to an image with

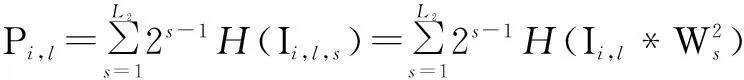

i=1,2,…,N;l=1,2,…,L1

(3)

whereHis the Heaviside step (like) function[3].

Each of the $L_1$ images $P_{i,l}$ ($l=1,2,\ldots,L_1$) is then partitioned into $B$ blocks. We compute the histogram of the decimal values in each block, and concatenate all the $B$ histograms into one vector denoted as $\mathrm{Bhist}(P_{i,l})$. Finally, the KPCANet features of $I_i$ are given by

$$f_i=[\mathrm{Bhist}(P_{i,1}),\mathrm{Bhist}(P_{i,2}),\ldots,\mathrm{Bhist}(P_{i,L_1})]^{T}\in\mathbb{R}^{(2^{L_2})L_1B} \tag{4}$$
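The pooling stage can be sketched in a few lines. This is a hedged illustration: the bit weights $1, 2, 4, \ldots$ follow the binary hashing of PCANet [3] and are assumed here, and the names `binary_hash` and `block_histograms` are hypothetical.

```python
import numpy as np

def binary_hash(maps):
    """Combine L2 real-valued maps (shape L2 x h x w) into one integer-valued image."""
    L2 = maps.shape[0]
    bits = (maps > 0).astype(np.int64)            # Heaviside-like step: 1 if positive, else 0
    weights = 2 ** np.arange(L2, dtype=np.int64)  # assumed bit weights 1, 2, 4, ...
    return np.tensordot(weights, bits, axes=1)    # values in [0, 2**L2 - 1]

def block_histograms(P, block, stride, L2):
    """Concatenate the histograms (2**L2 bins each) of all blocks of the hashed image P."""
    bh, bw = block
    hists = []
    for r in range(0, P.shape[0] - bh + 1, stride[0]):
        for c in range(0, P.shape[1] - bw + 1, stride[1]):
            values = P[r:r + bh, c:c + bw].reshape(-1)
            hists.append(np.bincount(values, minlength=2 ** L2))
    return np.concatenate(hists)

# Three 1 x 2 maps: the two pixels hash to 1 + 4 = 5 and 2 + 4 = 6.
maps = np.array([[[1.0, -1.0]], [[-1.0, 1.0]], [[1.0, 1.0]]])
P = binary_hash(maps)
hist = block_histograms(P, block=(1, 2), stride=(1, 2), L2=3)
```

Concatenating `block_histograms` over the $L_1$ hashed images of one input yields a vector of length $(2^{L_2})L_1B$, matching Eq.(4).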

Since deep architectures are composed of multiple levels of nonlinear operations, such as in complicated propositional formulae re-using many sub-formulae [6], the first two stages of KPCANet are set to be the same in this paper, so that the whole structure of the first stage can be re-used in the second stage.

2 Experimental Results

We evaluate the performance of the proposed KPCANet on various databases, including MNIST, USPS, the Yale face dataset, the COIL-100 object dataset, and the AR dataset. We also compare KPCANets that cascade one to three KPCA stages. All the features learned by KPCANet are classified with an SVM classifier.

2.1 Comparison of KPCANet in different recognition tasks

In this section, we use various kernel functions to evaluate the performance of the proposed KPCANet in recog-

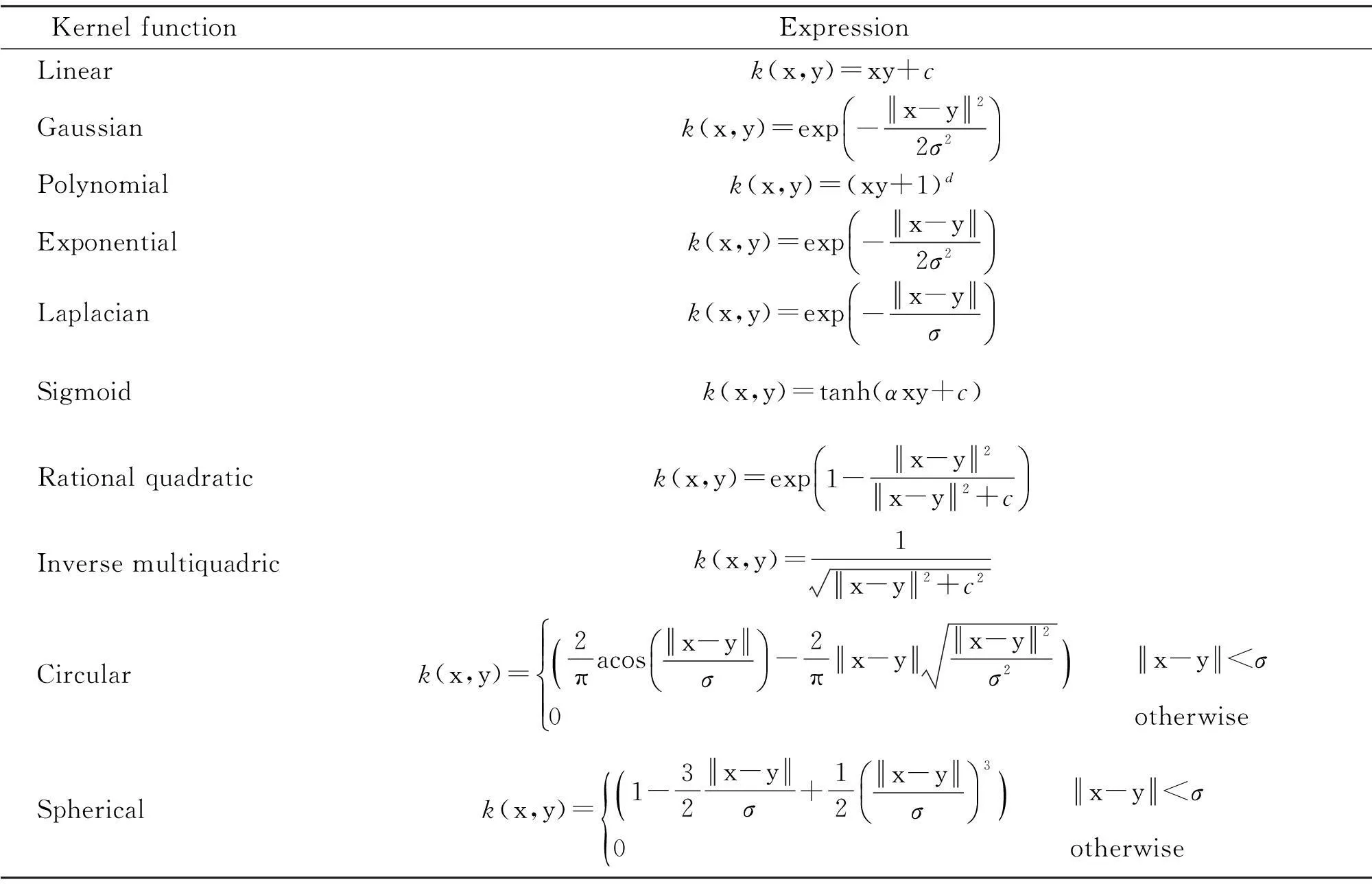

nition tasks including handwritten digit recognition, face recognition and object recognition. Kernel functions that are used in this paper are presented in Tab.1.

MNIST [7] and USPS [8] are used to evaluate the performance of KPCANet on handwritten images. MNIST contains 60 000 training images and 10 000 test images, all of size 28×28 pixels. USPS contains 9 298 images of size 16×16 pixels in total, 5 000 of which are chosen randomly to train KPCANet, and the rest are used for testing.

The Yale face database [9] is used to evaluate the performance of the proposed KPCANet on face images. It contains 165 grayscale images of 15 individuals in GIF format, with 11 images per individual showing different facial expressions or configurations: center-light, wearing glasses, happy, left-light, wearing no glasses, normal, right-light, sad, sleepy, surprised, and winking. All images of this database are cropped to 64×64 pixels; 90 of them are chosen randomly to train the proposed KPCANet, and the rest are used for testing.

COIL-100 (Columbia Object Image Library) [10] is a database of color images of 100 objects. The images of the objects are taken at pose intervals of 5°, which corresponds to 72 poses per object. All images are converted to grayscale and cropped to 32×32 pixels. Half of the images of each object are chosen randomly to train KPCANet, and the others are used for testing.

Tab.1 Various kernel functions used in this paper

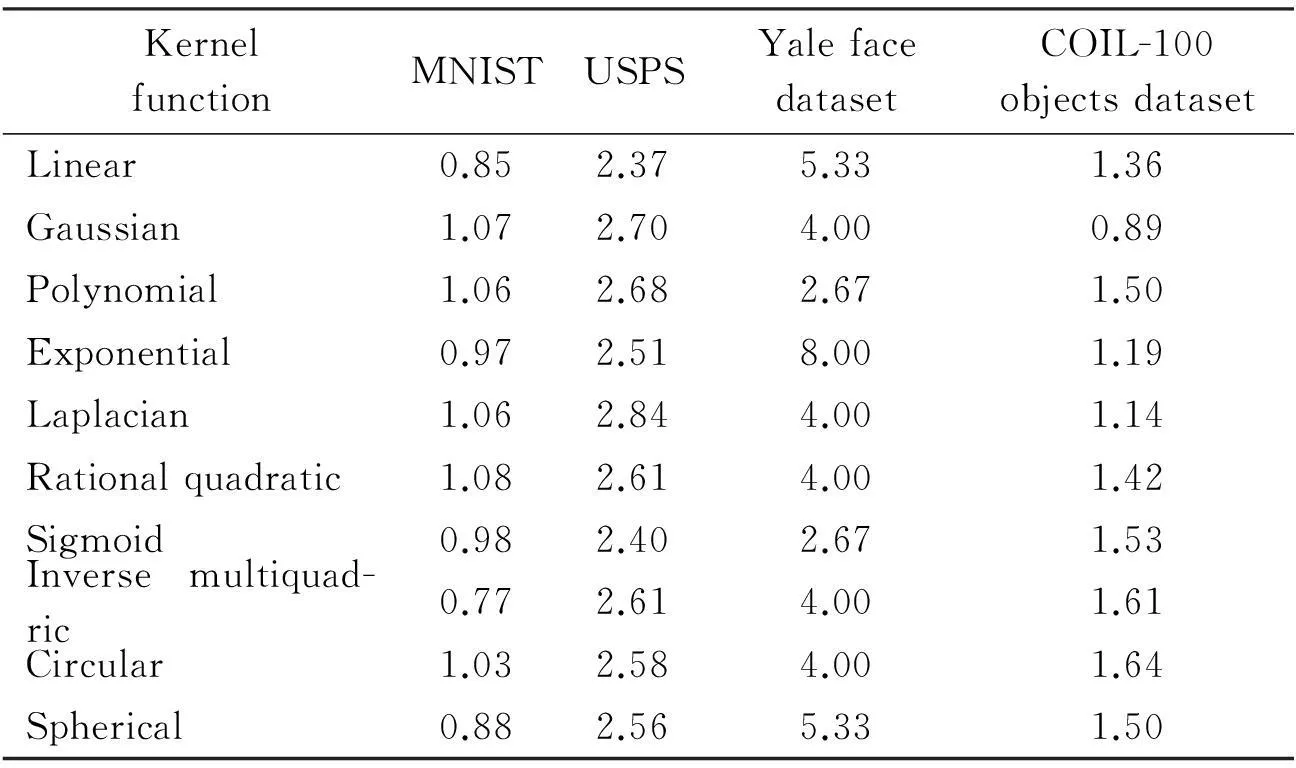
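Tab.1 is only available as an image here, so the exact parametrization used in the paper cannot be quoted. As a rough guide, the sketch below gives common textbook forms of several kernels named in this paper; the parameter names and default values (`sigma`, `degree`, `coef0`, `gamma`) and the Euclidean-distance form of the Laplacian kernel are assumptions and may differ from Tab.1.

```python
import numpy as np

def _sq_dists(A, B):
    """Squared Euclidean distances between the rows of A and the rows of B."""
    d2 = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.maximum(d2, 0.0)  # clip tiny negatives caused by round-off

def linear(A, B):
    return A @ B.T

def polynomial(A, B, degree=2, coef0=1.0):
    return (A @ B.T + coef0) ** degree

def gaussian(A, B, sigma=1.0):
    return np.exp(-_sq_dists(A, B) / (2.0 * sigma ** 2))

def laplacian(A, B, sigma=1.0):
    return np.exp(-np.sqrt(_sq_dists(A, B)) / sigma)

def sigmoid(A, B, gamma=0.01, coef0=0.0):
    return np.tanh(gamma * (A @ B.T) + coef0)

A = np.array([[0.0, 0.0], [3.0, 4.0]])
K_gauss = gaussian(A, A)   # 2 x 2 Gram matrix with ones on the diagonal
```

Each function returns the Gram matrix between the rows of `A` and the rows of `B`, which is the only quantity a kernel PCA stage needs from the kernel.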

The performance of the different kernel functions on MNIST, USPS, the Yale face dataset and the COIL-100 dataset is presented in Tab.2. Both the patch size and the block size are set to 8×8 pixels, and the number of filters is set to 8 at all stages. The block overlap ratio is 0.5.
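To give a sense of scale, the feature length $(2^{L_2})L_1B$ of Eq.(4) can be worked out for these settings. The sketch below assumes that the stage outputs keep the input size $m \times n$ and that blocks are placed on a regular grid without crossing the image border; neither detail is stated in the text, and the helper names are hypothetical.

```python
def num_blocks(size, block, overlap):
    """Number of block positions along one axis for a given overlap ratio."""
    stride = int(round(block * (1 - overlap)))
    return (size - block) // stride + 1

def feature_length(m, n, block, overlap, L1, L2):
    """Length of the feature vector (2**L2) * L1 * B for an m x n hashed image."""
    B = num_blocks(m, block[0], overlap) * num_blocks(n, block[1], overlap)
    return (2 ** L2) * L1 * B

# MNIST-sized input: 28 x 28 image, 8 x 8 blocks, overlap 0.5, 8 filters per stage.
# Stride is 4, so there are 6 x 6 = 36 blocks and 256 * 8 * 36 = 73728 features.
length = feature_length(28, 28, (8, 8), 0.5, 8, 8)
```

Under these assumptions each image is described by a sparse histogram vector with tens of thousands of entries, which is the input to the SVM classifier.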

Tab.2 Comparison of error rates (%) of KPCANet with various kernel functions on different datasets

It can be seen from Tab.2 that PCANet generally performs better than KPCANet in handwritten digit recognition, while KPCANet outperforms PCANet in face recognition and object recognition.

2.2 Face recognition on the AR face dataset

The properties of KPCANet are tested on the AR dataset [11]. The AR dataset contains about 4 000 color images of size 165×120 pixels from 126 individuals. A subset containing 100 individuals (50 males and 50 females) is chosen, and the color images are converted to grayscale. For each individual, the two images with frontal illumination and neutral expression are used as training samples, and the other 24 images, with variations ranging from illumination to disguise, are used for testing.

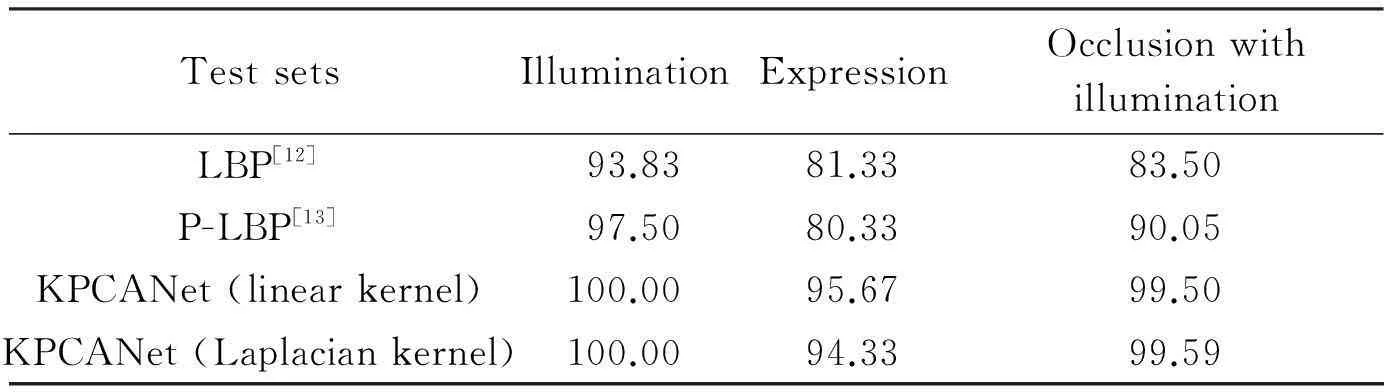

The patch size and the block size are set to 7×7 pixels and 8×8 pixels, respectively. The block overlap ratio is 0.5. We compare the proposed KPCANet with LBP [12] and P-LBP [13] in Tab.3. KPCANet with the linear kernel and KPCANet with the Laplacian kernel are used in this experiment. From Tab.3, one can see that when the images undergo only a change of illumination, the testing accuracy reaches 100% with both the linear kernel KPCANet and the Laplacian kernel KPCANet, which demonstrates that KPCANet is invariant to illumination. In addition, KPCANet outperforms LBP [12] and P-LBP [13] on different expressions and disguises under various illumination conditions, showing that KPCANet is robust to small deformation and occlusion.

Tab.3 Comparison of accuracy rates (%) of the methods on the AR face database

2.3 KPCANet with various stages on the AR face dataset

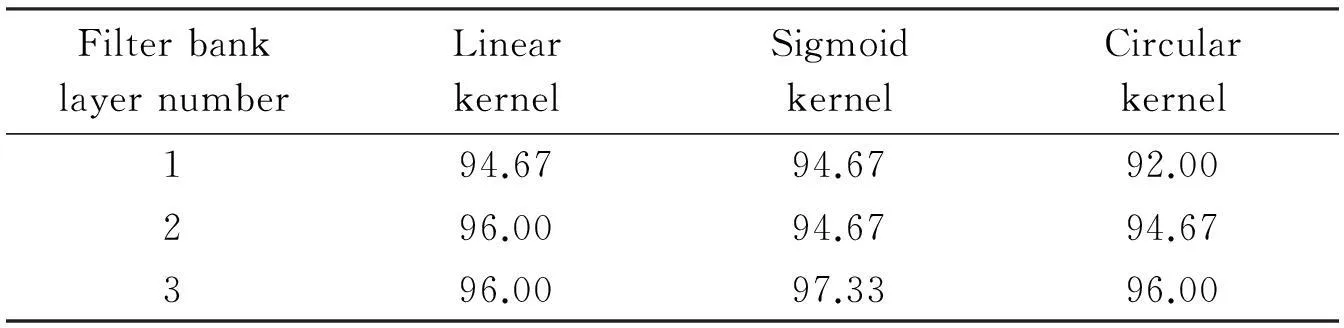

KPCANets that cascade different numbers of KPCA filter bank layers followed by a pooling layer are evaluated on the AR face dataset used in Section 2.2, with all images cropped to 32×32 pixels. Only the linear kernel, the sigmoid kernel and the circular kernel are used here in order to keep the results simple. The patch size and the block size are set to 7×7 pixels and 8×6 pixels, respectively. The block overlap ratio is 0.5. The results are shown in Tab.4.

Tab.4 Comparison of accuracy rates (%) of KPCANet with different numbers of stages on the AR face dataset

From Tab.4, we can see that the accuracy rate increases as the number of KPCA filter bank layers in the KPCANet increases; however, the training time grows exponentially at the same time.

3 Conclusion

In this paper, we propose the KPCANet, which is an extension of PCANet, for image classification. The proposed KPCANet cascades kernel PCA, binary hashing and block-wise histograms. Experiments show that KPCANet is generally stable across different kernel functions, and that it is invariant to illumination and stable to slight deformation and occlusion. Moreover, KPCANet is suitable for the recognition of handwritten images, face images and object images.

References

[1] LeCun Y, Kavukcuoglu K, Farabet C. Convolutional networks and applications in vision[C]//Proceedings of 2010 IEEE International Symposium on Circuits and Systems. Paris, France, 2010: 253-256.

[2] Bruna J, Mallat S. Invariant scattering convolution networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2013, 35(8): 1872-1886.

[3] Chan T H, Jia K, Gao S, et al. PCANet: a simple deep learning baseline for image classification?[J]. arXiv preprint arXiv:1404.3606, 2014.

[4] Schölkopf B, Smola A, Müller K R. Kernel principal component analysis[C]//International Conference on Artificial Neural Networks. Lausanne, Switzerland, 1997: 583-588.

[5] Schölkopf B, Smola A, Müller K R. Nonlinear component analysis as a kernel eigenvalue problem[J]. Neural Computation, 1998, 10(5): 1299-1319.

[6] Bengio Y. Learning deep architectures for AI[J]. Foundations and Trends® in Machine Learning, 2009, 2(1): 1-127.

[7] LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11): 2278-2324.

[8] Hull J J. A database for handwritten text recognition research[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1994, 16(5): 550-554.

[9] Georghiades A S, Belhumeur P N, Kriegman D J. From few to many: illumination cone models for face recognition under variable lighting and pose[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2001, 23(6): 643-660.

[10] Nene S A, Nayar S K, Murase H. Columbia object image library (COIL-20), CUCS-005-96[R]. New York: Department of Computer Science, Columbia University, 1996.

[11] Martinez A M, Benavente R. The AR face database, CVC technical report #24[R]. CVC, 1998.

[12] Ahonen T, Hadid A, Pietikäinen M. Face description with local binary patterns: application to face recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2006, 28(12): 2037-2041.

[13] Tan X, Triggs B. Enhanced local texture feature sets for face recognition under difficult lighting conditions[J]. IEEE Transactions on Image Processing, 2010, 19(6): 1635-1650.
