Improved YOLOv4 for real-time detection algorithm of low-slow-small unmanned aerial vehicles

2024-02-18

WU Xuan, ZHANG Haiyang, ZHAO Changming, LI Zhipeng, WANG Yuanze

(School of Optics and Photonics, Beijing Institute of Technology, Beijing 100081, China)

Abstract: To address the low detection accuracy and poor real-time performance of low-slow-small unmanned aerial vehicle (UAV) detection when deployed on embedded platforms, a small-UAV target detection algorithm based on an improved YOLOv4 is proposed. The network's detection performance on small targets is improved by adding a shallow feature-map output, re-clustering the anchor boxes and applying small-target data augmentation, and the model's running time is greatly reduced through sparse training and model pruning. On a GTX 1080Ti, the lightweight network reaches a mean average precision (mAP) of 85.8% at 75 frame/s. Deployed on the Xavier edge computing platform, the lightweight model detects UAV targets at 60 frame/s. Experimental results show that, compared with YOLOv4 and YOLOv4-tiny, the algorithm achieves a good balance between running speed and detection accuracy and can effectively solve the problem of UAV target detection on embedded platforms.

Key words: low-slow-small unmanned aerial vehicles; target detection; YOLOv4; pruning; embedded

Introduction

With the rapid development of aviation technology and upgrades in communication technology, unmanned aerial vehicles (UAVs) have been widely used in fire fighting[1], agricultural monitoring[2] and other fields. UAVs fly at low altitudes, follow unpredictable trajectories and are highly maneuverable[3], so they can threaten public security and privacy when used by criminals. Countermeasures against UAVs are therefore necessary, and UAV target detection is the key prerequisite for interfering with and striking them.

Common UAV target detection methods include classical moving-target detection based on the optical flow method and the frame difference method[4]. Since AlexNet was proposed, deep learning has gradually been applied to object detection[5]. Although two-stage algorithms such as RCNN (region with convolutional neural network features)[6], Fast R-CNN[7], Faster R-CNN[8] and SPPNet (spatial pyramid pooling network)[9] are significantly more accurate than traditional algorithms, they cannot meet the real-time requirements of engineering applications. Among one-stage algorithms, SSD (single shot multibox detector)[10] combines multi-scale feature maps with an anchor mechanism to improve detection accuracy as much as possible while maintaining speed. For small target detection, WANG Ruoxiao et al. reduced the number of channels in VGG16 to achieve real-time UAV detection on an embedded platform[11]. LIN T Y et al. proposed RetinaNet, which uses focal loss to overcome the class imbalance caused by the extreme disparity between the numbers of foreground and background samples[12]. RAZA M A et al. proposed BV-RNet, which effectively detects small-scale targets by extracting dense features and optimizing predefined anchors[13]. SUN Han et al. proposed TIB-Net, a lightweight detection network for UAVs[14]. To compensate for the lack of texture and shape features of UAVs in infrared images, DING Lianghui et al. enhanced the high-resolution network layer and used an adaptive pipeline filter (APF) based on temporal correlation and motion information to correct the results[15]. FANG H et al. formulated infrared small UAV target detection as a nonlinear mapping from the infrared image space to a residual image and achieved better detection performance against complex backgrounds[16]. The YOLO (you only look once) algorithm performs target classification and candidate box prediction in a single end-to-end convolutional network[17], giving both high detection accuracy and fast detection speed. HU Y et al. used feature maps at 4 scales to predict bounding boxes in YOLOv3, obtaining more texture and contour information and increasing mAP by about 4.16%[18]. LI Zhipeng et al. used a super-resolution algorithm to reconstruct high-resolution UAV images and then used YOLOv3 to detect low-slow-small UAVs effectively[19].

Small UAV targets carry little semantic information in the image, which lowers detection accuracy, while the limited memory and computing power of embedded platforms cannot meet the real-time requirements of UAV detection tasks; as a result, high-precision real-time detection algorithms for small UAVs are lacking. To address these problems, this paper improves YOLOv4 through model improvement and pruning, raising mAP (mean average precision) by 6.2% and FPS (frames per second) by 22 frame/s over the original YOLOv4, and achieves 85.6% mAP at nearly 60 frame/s with half-precision deployment on an embedded platform. Experiments verify the effectiveness of the method for high-precision real-time detection of low-slow-small UAV targets.

1 Algorithm design for low-slow-small UAV target detection

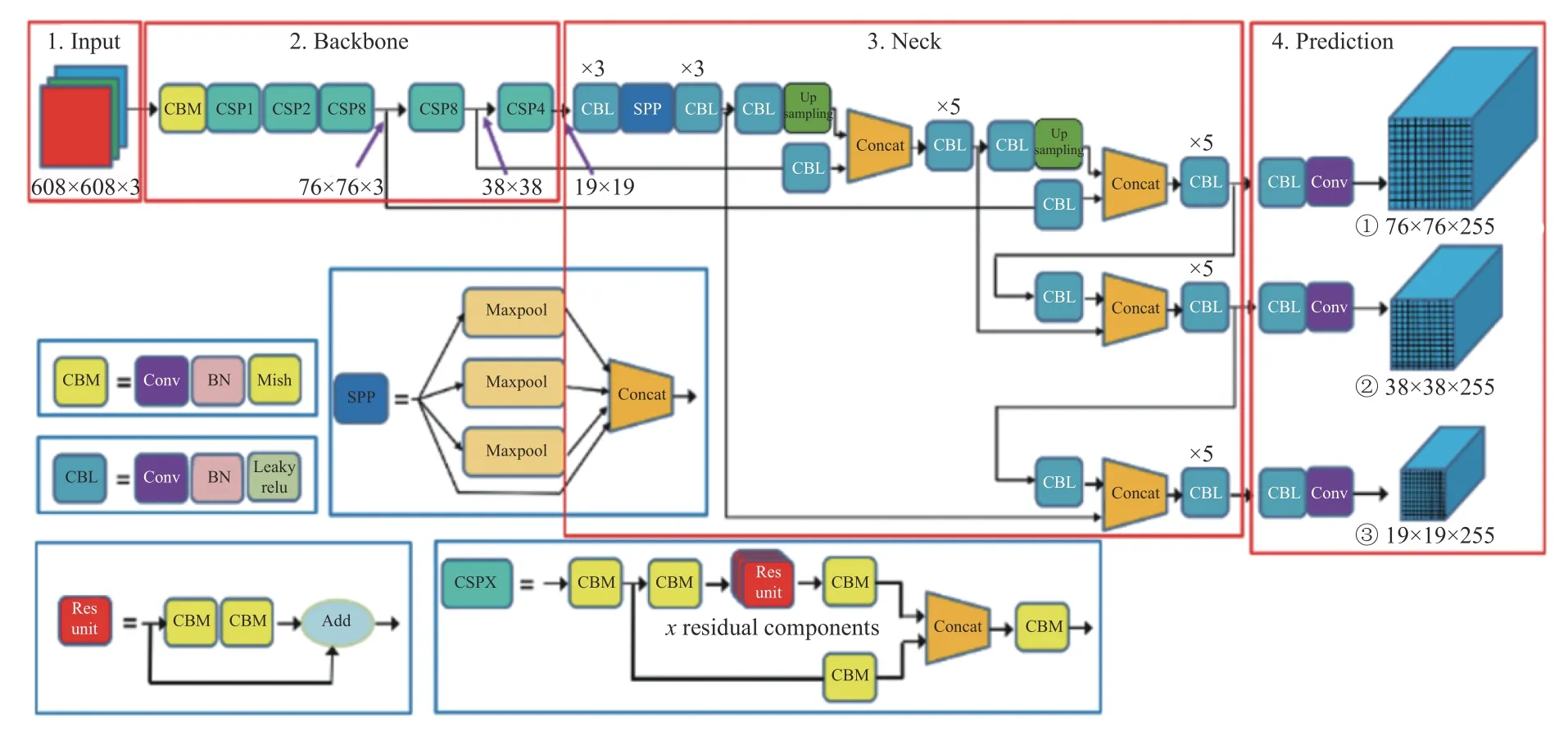

YOLOv4 was proposed in 2020. Compared with YOLOv3, it optimizes the backbone network, multi-scale fusion, activation function, loss function and other aspects[20]; its structure is shown in Figure 1. The backbone borrows the cross-stage partial connection idea of CSPNet[21] and builds CSPDarkNet53 on top of DarkNet53 (see the residual part in Fig.1), which strengthens feature extraction and speeds up training. The neck uses an SPP structure (see the SPP diagram in Fig.1) to enlarge the receptive field, and then uses PANet to fuse feature maps of different scales. This repeated feature extraction effectively strengthens the network's ability to extract features of objects of different sizes. The bounding-box regression loss uses CIoU (complete intersection over union), which jointly accounts for the overlap area, the center-point distance and the aspect ratio of the ground-truth and predicted boxes. The Mish activation function is used to avoid gradient saturation.

Fig.1 Network structure diagram of YOLOv4
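For reference, the CIoU regression loss mentioned in the description of YOLOv4 above is commonly written as $1-\mathrm{IoU}+\rho^2/c^2+\alpha v$, where $\rho$ is the distance between the box centers, $c$ is the diagonal of the smallest box enclosing both, and $v$ measures the aspect-ratio mismatch. The following is a minimal scalar Python sketch of that definition for boxes in (x1, y1, x2, y2) form; the function name `ciou_loss` and the box format are assumptions for illustration, not YOLOv4's own implementation, which operates on batched tensors.

```python
import math


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss for two axis-aligned boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    # Overlap area and IoU
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)
    # Squared distance between box centers (rho^2)
    rho2 = ((px1 + px2) / 2 - (tx1 + tx2) / 2) ** 2 + ((py1 + py2) / 2 - (ty1 + ty2) / 2) ** 2
    # Squared diagonal of the smallest enclosing box (c^2)
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)
    return 1 - iou + rho2 / c2 + alpha * v


print(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)))
```

For two disjoint unit squares two units apart, IoU is 0, the center term is 4/10 and the aspect term vanishes, so the loss is about 1.4; unlike a plain IoU loss, it still provides a gradient that pulls the boxes together.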

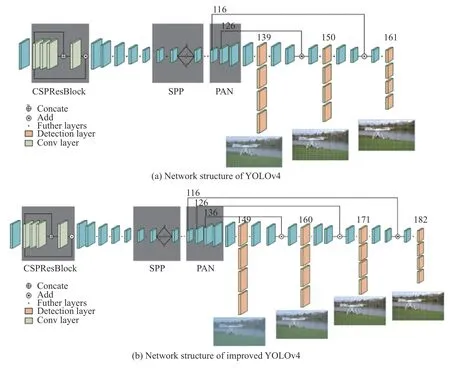

Fig.2 Comparison of YOLOv4 network structure before and after improvement

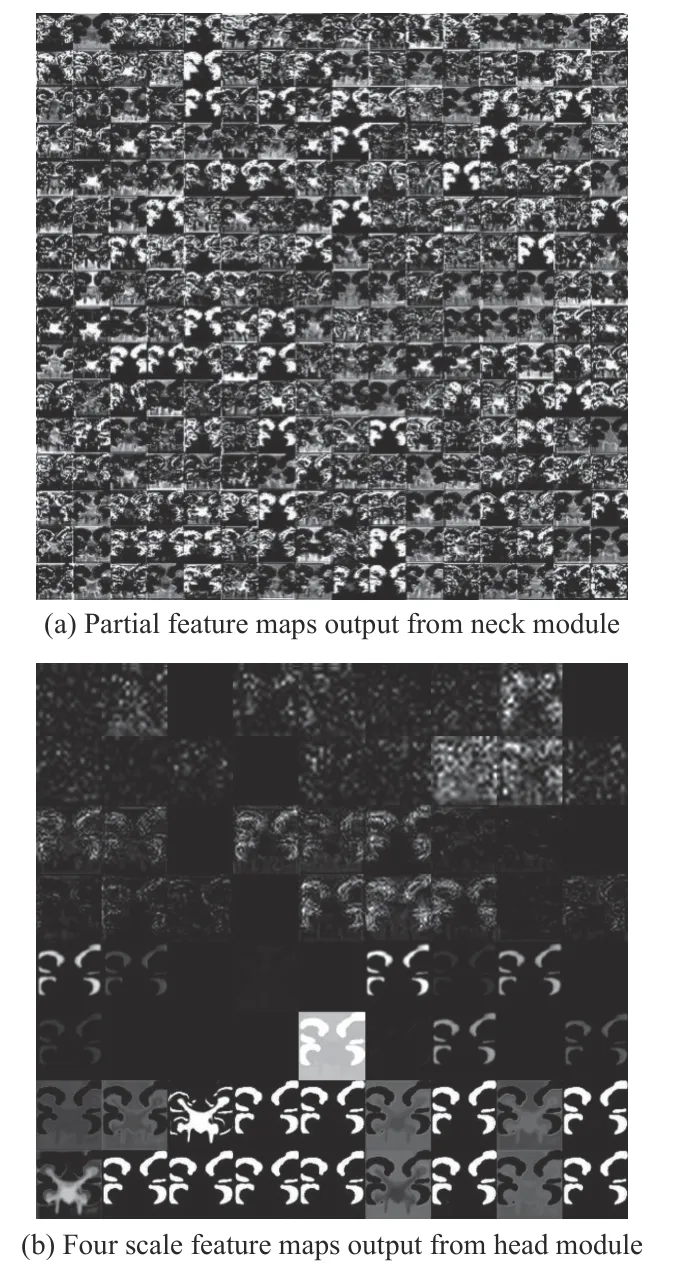

Fig.3 Feature maps of improved YOLOv4

Since YOLOv4 performs well in general object detection and already includes some optimizations for small target detection, this paper improves YOLOv4 according to the characteristics of low-slow-small UAV targets.

1.1 Improvements to YOLOv4

YOLOv4 still has several problems in detecting low-slow-small UAV targets: the feature maps it extracts contain few small-target features; UAV features are easily lost in the deep feature extraction network; and the anchors adopted by YOLOv4 generalize poorly to small targets[22]. This paper therefore improves YOLOv4 in three aspects: network structure, small-target enhancement and candidate box adjustment.

1.1.1 Network structure improvement

As shown in Figure 2, this work modifies the feature fusion part of YOLOv4: an up-sampled feature map is concatenated (spliced) with a shallow feature map that retains more UAV detail, and an output branch at a scale of 104×104 pixel is added. Figure 3 shows the feature maps output by the neck and head of the improved YOLOv4. The newly added scale captures more UAV detail, which helps improve UAV detection accuracy. The improved network makes full use of both low-level and high-level information and detects small-scale objects through the new detection layer.
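To see what the extra 104×104 branch costs, the grid sizes and the number of anchor predictions can be worked out directly. The sketch below assumes output strides of 32, 16 and 8 for the original YOLOv4 heads at a 416×416 input, stride 4 (416/104) for the new branch, 3 anchors per scale as in Section 1.1.2, and a single UAV class; the helper names are hypothetical.

```python
def grid_sizes(input_size, strides):
    """Side length of each output grid for a square input."""
    return [input_size // s for s in strides]


def num_predictions(input_size, strides, anchors_per_scale=3):
    """Total anchor predictions summed over all output scales."""
    return sum(anchors_per_scale * g * g for g in grid_sizes(input_size, strides))


def head_channels(num_classes, anchors_per_scale=3):
    """Output channels of one head: per anchor, 4 box terms + 1 objectness + class scores."""
    return anchors_per_scale * (5 + num_classes)


original = [32, 16, 8]
improved = [32, 16, 8, 4]  # assumed stride 4 for the added 104x104 branch
print(grid_sizes(416, improved))        # [13, 26, 52, 104]
print(num_predictions(416, original))   # 10647
print(num_predictions(416, improved))   # 43095
print(head_channels(num_classes=1))     # 18
```

Under these assumptions the new branch alone contributes 32 448 of the 43 095 predictions, and each of its cells covers a 4×4-pixel patch of the resized image, which is what allows it to localize very small UAVs.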

1.1.2 Adjustment of anchor boxes

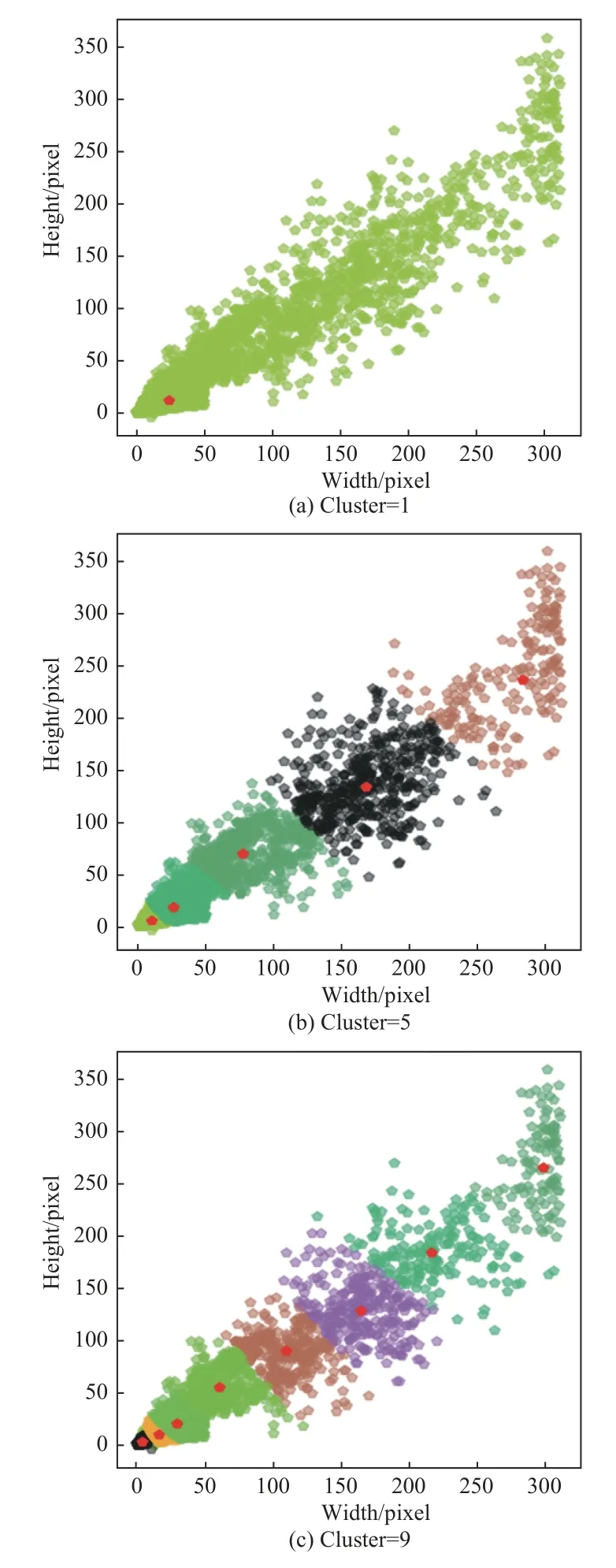

YOLOv4 obtains its preset anchor boxes by k-means clustering, where k is the number of clusters; the larger k is, the better the preset anchor boxes fit the data, which helps the model converge during training[23]. YOLOv4 assigns 3 anchor boxes to each scale, for 9 anchor boxes in total. Because k-means selects its k initial cluster centers at random, poor initialization can greatly affect the results.

The improved YOLOv4 uses k-means++ to cluster the UAV samples. k-means++ selects the first cluster center at random and computes the distance from every sample to the nearest center already chosen; a sample farther away is more likely to become the next center, and this repeats until k cluster centers have been obtained. Euclidean distance is used to measure the distance between a sample and a cluster center, and the objective function of clustering is expressed as follows:

$$E=\sum_{i=1}^{k}\sum_{x\in k_i}\operatorname{dist}(c_i,x)^2 \tag{1}$$

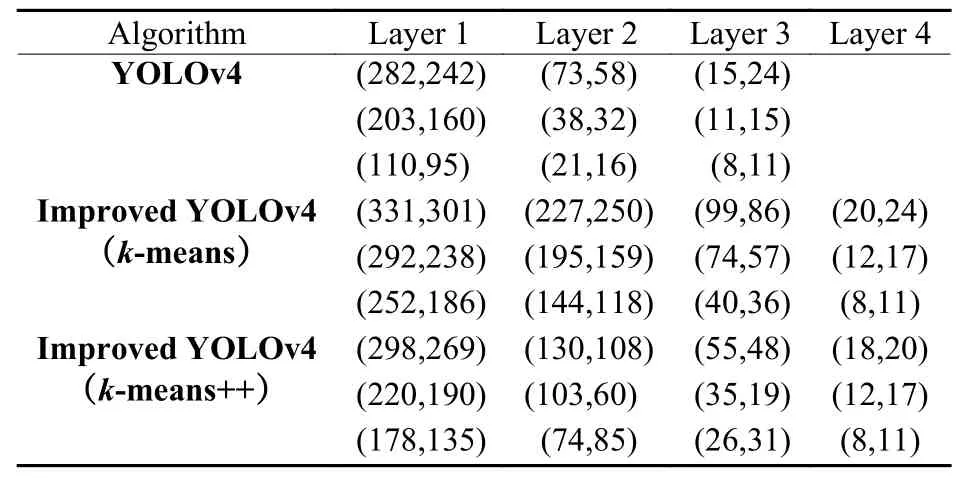

where $k$ is the number of clusters, $k_i$ is the $i$th cluster, and $\operatorname{dist}(c_i,x)^2$ is the squared distance from sample $x$ to the $i$th cluster center $c_i$. For the improved YOLOv4, images are resized to 416×416 pixel and 3 anchor boxes are assigned to the feature map of each scale, giving 12 anchor boxes in total. The anchor clustering process is shown in Fig.4 and the clustering results are shown in Table 1. With k-means++, the clustered anchor boxes focus more on small targets and match the ground-truth labels more closely.

Fig.4 Process of obtaining anchor boxes by k-means++ clustering

Fig.5 UAV data augmentation

Table 1 Clustering results of different clustering methods in training set
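The anchor clustering described in Section 1.1.2 can be sketched in plain Python as below: k-means++ seeding (each new center is drawn with probability proportional to its squared Euclidean distance to the nearest existing center), followed by standard k-means iterations that reduce the clustering objective. The inputs are assumed to be ground-truth (width, height) pairs already scaled to the 416×416 input; the function names and the fixed random seed are illustrative assumptions, not the authors' code.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two (w, h) pairs."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans_pp_init(boxes, k, rng):
    """k-means++ seeding: far-away samples are more likely to become centers."""
    centers = [rng.choice(boxes)]
    while len(centers) < k:
        d2 = [min(sq_dist(b, c) for c in centers) for b in boxes]
        total = sum(d2)
        if total == 0:  # every sample already coincides with a center
            centers.append(rng.choice(boxes))
            continue
        r = rng.random() * total
        acc = 0.0
        for b, d in zip(boxes, d2):
            acc += d
            if acc >= r:
                centers.append(b)
                break
        else:  # guard against floating-point round-off
            centers.append(boxes[-1])
    return centers


def sse(boxes, centers):
    """Clustering objective: sum of squared distances to the nearest center."""
    return sum(min(sq_dist(b, c) for c in centers) for b in boxes)


def kmeans_pp(boxes, k, iters=100, seed=0):
    """Cluster (w, h) pairs into k anchors, returned sorted by area."""
    rng = random.Random(seed)
    centers = kmeans_pp_init(boxes, k, rng)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            nearest = min(range(k), key=lambda j: sq_dist(b, centers[j]))
            clusters[nearest].append(b)
        # Move each center to the mean of its cluster; keep empty clusters in place
        new_centers = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new_centers == centers:
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])
```

For the improved network one would call `kmeans_pp(wh_pairs, k=12)` and hand the anchors out three per scale, the smallest going to the 104×104 branch. YOLOv2 introduced a 1 - IoU distance for anchor clustering; the Euclidean distance used here follows the text.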

1.1.3 Data augmentation for small UAV targets

The mosaic data augmentation used in YOLOv4 randomly rescales targets, which can severely degrade the information carried by small drone targets. This paper instead copies multiple UAVs into one image to increase the number of UAVs per image (as shown in Figure 5), so that the model pays more attention to small UAVs and small UAVs contribute more to the loss function[24].
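The copy-based augmentation can be illustrated with a minimal NumPy sketch: UAV patches are copied from their labeled boxes and pasted at random positions that do not overlap any existing box, and the new boxes are appended to the labels. The paper does not describe blending, rescaling or placement rules, so the plain non-overlapping paste, the integer (x1, y1, x2, y2) box format and the function name `copy_paste_uav` are all assumptions.

```python
import numpy as np


def overlaps(a, b):
    """True if two (x1, y1, x2, y2) boxes intersect."""
    return not (a[2] <= b[0] or b[2] <= a[0] or a[3] <= b[1] or b[3] <= a[1])


def copy_paste_uav(image, boxes, n_copies=3, max_tries=50, seed=None):
    """Paste copies of labeled UAV patches at free locations; return new image and boxes."""
    rng = np.random.default_rng(seed)
    out = image.copy()
    h_img, w_img = image.shape[:2]
    new_boxes = [tuple(b) for b in boxes]
    for _ in range(n_copies):
        x1, y1, x2, y2 = boxes[rng.integers(len(boxes))]  # pick a source UAV
        patch = image[y1:y2, x1:x2]
        ph, pw = y2 - y1, x2 - x1
        for _ in range(max_tries):  # rejection-sample a non-overlapping position
            nx = int(rng.integers(0, w_img - pw + 1))
            ny = int(rng.integers(0, h_img - ph + 1))
            cand = (nx, ny, nx + pw, ny + ph)
            if not any(overlaps(cand, b) for b in new_boxes):
                out[ny:ny + ph, nx:nx + pw] = patch
                new_boxes.append(cand)
                break
    return out, new_boxes
```

Pasting at the original scale, rather than rescaling as mosaic does, keeps each copied UAV's pixels intact while multiplying the number of small positive samples per image.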

1.2 Model pruning of improved YOLOv4 algorithm

Network pruning reduces network parameters and computational complexity by removing a large number of unimportant channels, thereby improving inference speed. Its general process consists of sparse training, network pruning and model fine-tuning[25].

1.2.1 Sparse training

The scale factor γ of each batch normalization (BN) layer is used as the index of channel importance, and L1 regularization is applied to γ during training. The loss function is expressed as:

$$L(\gamma)=l(\gamma)_{\mathrm{YOLOv4}}+p\lVert\gamma\rVert_1 \tag{2}$$

where $L(\gamma)$ is the total loss function, $l(\gamma)_{\mathrm{YOLOv4}}$ is the loss function of YOLOv4, $\lVert\gamma\rVert_1$ is the penalty term, and $p$ is the weight factor of the L1 norm.
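A small NumPy sketch of the penalized loss and of the subgradient it adds to each BN scale factor is shown below; in PyTorch-based network-slimming code this term is commonly applied by adding p·sign(γ) to the BN weight gradients after the backward pass. The function names and the standalone plain-SGD update are illustrative assumptions (the paper trains with Adam); the step only shows the direction in which the penalty pushes γ.

```python
import numpy as np


def sparse_loss(yolo_loss, gammas, p):
    """Total loss L = l_YOLOv4 + p * ||gamma||_1."""
    return yolo_loss + p * np.abs(gammas).sum()


def l1_subgradient(gammas, p):
    """Subgradient of p * ||gamma||_1 (sign(0) is taken as 0)."""
    return p * np.sign(gammas)


def sgd_step(gammas, task_grad, p, lr):
    """One plain SGD step on gamma with the L1 term added to the task gradient."""
    return gammas - lr * (task_grad + l1_subgradient(gammas, p))


gammas = np.array([0.5, -0.5, 0.0])
print(sparse_loss(1.0, gammas, p=0.001))
print(sgd_step(gammas, np.zeros(3), p=0.001, lr=1.0))
```

With zero task gradient, every nonzero γ moves toward 0 by p·lr regardless of its magnitude, which is why channels that the detection loss does not actively support collapse toward 0 and become pruning candidates.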

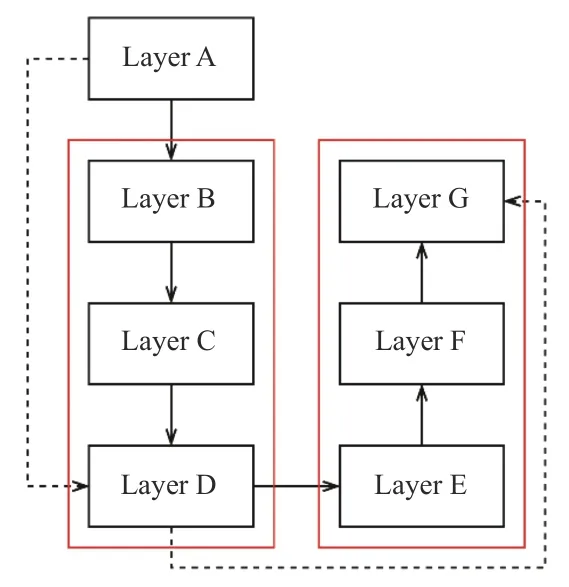

1.2.2 Network pruning

Channel pruning is carried out according to the γ values after sparse training: a channel with a small γ contributes little to the network's inference result. The γ values are sorted and a pruning rate is set to remove the unimportant channels. Channel pruning of the shortcut structure follows the practice of SlimYOLOv3[26], as shown in Fig.6. Because the outputs of layers joined by shortcut connections are added element-wise, these layers must keep the same channels, so the union of their retained channels is kept: if layer A retains channels 1 and 2, layer C retains channels 2 and 3, and layer F retains channels 2 and 4, then layers A, C, D, F and G all retain channels 1, 2, 3 and 4.
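The channel-selection rule and the shortcut union can be sketched as follows. The sketch assumes a single global threshold taken from the sorted |γ| of all prunable BN layers at the given pruning rate, keeps at least one channel per layer, and then ORs the masks of layers tied together by shortcuts; the layer names, the dictionary layout and the keep-one rule are illustrative assumptions rather than the SlimYOLOv3 code.

```python
import numpy as np


def global_threshold(gammas_by_layer, prune_rate):
    """|gamma| value below which channels are pruned, shared by all layers."""
    all_g = np.sort(np.concatenate([np.abs(g) for g in gammas_by_layer.values()]))
    return all_g[min(int(len(all_g) * prune_rate), len(all_g) - 1)]


def channel_masks(gammas_by_layer, prune_rate):
    """Boolean keep-mask per layer; at least one channel is always kept."""
    t = global_threshold(gammas_by_layer, prune_rate)
    masks = {}
    for name, g in gammas_by_layer.items():
        g = np.abs(np.asarray(g, dtype=float))
        keep = g >= t
        if not keep.any():
            keep[np.argmax(g)] = True
        masks[name] = keep
    return masks


def merge_shortcut_masks(masks, group):
    """Layers joined by shortcuts keep the union of their retained channels."""
    merged = np.logical_or.reduce([masks[n] for n in group])
    return {**masks, **{n: merged.copy() for n in group}}


# Example from the text (channels numbered 1-4 map to indices 0-3).
masks = {
    "A": np.array([True, True, False, False]),
    "C": np.array([False, True, True, False]),
    "F": np.array([False, True, False, True]),
}
merged = merge_shortcut_masks(masks, ["A", "C", "F"])
print(np.flatnonzero(merged["A"]) + 1)  # [1 2 3 4]
```

In this sketch the shortcut layers D and G carry no γ of their own and simply inherit the merged mask, which reproduces the "channels 1, 2, 3 and 4" result of the example.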

Layer pruning is based on the γ values of the convolution module before each shortcut layer. When a shortcut layer is pruned, the two convolution modules before it are pruned together with it. As shown in the red box of Fig.6, when layer D is cut, layers B and C are also cut.

Fig.6 Structure diagram of shortcut layer
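Layer pruning can be sketched in the same spirit: each shortcut is scored by the mean |γ| of the convolution module just before it, the lowest-scoring shortcuts are selected, and each selected shortcut is removed together with its two preceding convolution modules. Scoring by mean |γ|, the layer names (E and F feeding G are hypothetical) and the dictionary layout are assumptions for illustration. Under this three-layers-per-shortcut rule, a layer pruning number of 16 corresponds to 16 × 3 = 48 removed layers, which matches the 48 pruned layers reported in Section 2.4.

```python
import numpy as np


def rank_shortcuts(last_conv_gammas):
    """Order shortcut layers from least to most important by mean |gamma|."""
    scores = {name: float(np.mean(np.abs(g))) for name, g in last_conv_gammas.items()}
    return sorted(scores, key=scores.get)


def layers_to_remove(last_conv_gammas, convs_before, n_prune):
    """Layers deleted when the n_prune weakest shortcuts are cut."""
    removed = []
    for shortcut in rank_shortcuts(last_conv_gammas)[:n_prune]:
        removed.extend(convs_before[shortcut])  # the two conv modules feeding the shortcut
        removed.append(shortcut)
    return removed


# Fig.6-style example: cutting shortcut D also removes conv layers B and C.
gammas = {"D": [0.01, 0.02], "G": [0.5, 0.4]}
convs_before = {"D": ["B", "C"], "G": ["E", "F"]}
print(layers_to_remove(gammas, convs_before, n_prune=1))  # ['B', 'C', 'D']
```

Removing whole residual blocks in this way shortens the network depth, which reduces latency more directly than channel pruning alone.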

2 Experiments

2.1 Experimental setting

A large number of UAV images (1 920×1 080 pixel) collected by a camera were combined with the UAV Dataset (Drone Dataset, Dronedata-2021) to form an experimental dataset of 20 000 UAV images, of which 80% were used as the training set and the remaining 20% as the testing set.

The model improvement and pruning comparison experiments were carried out on Windows 10 with an i7-7700 processor and an NVIDIA GeForce GTX 1080Ti. The network was implemented in PyTorch 1.6 (GPU). Input images were resized to 416×416 pixel, the batch size was set to 8, the initial learning rate was set to 0.002324, and the Adam optimizer was used. To reduce training time, the network was first trained on the COCO dataset and then fine-tuned on the UAV training set. Finally, embedded computing performance was verified on a Jetson AGX Xavier (16 GB).

2.2 Evaluation index

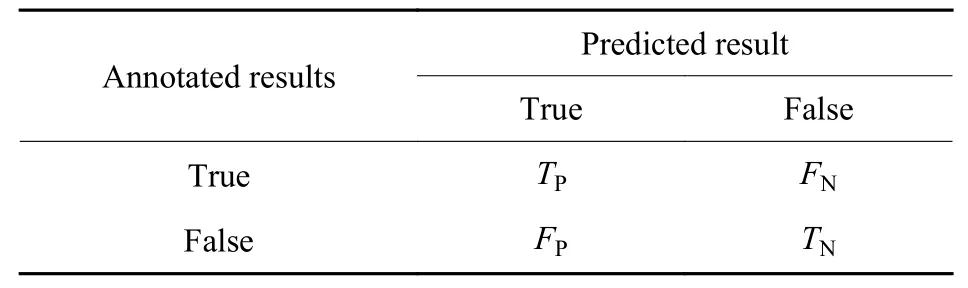

Object detection is commonly evaluated with mAP and FPS, where FPS reflects model inference speed and mAP is calculated from the confusion matrix (see Table 2).

Table 2 Confusion matrix

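The average precision (AP) is the area under the precision-recall (PR) curve plotted from precision (P) and recall (R). Precision and recall are calculated by Formula (3) and Formula (4), and AP is calculated by Formula (5):

$$P=\frac{TP}{TP+FP} \tag{3}$$

$$R=\frac{TP}{TP+FN} \tag{4}$$

$$AP=\int_{0}^{1}P(R)\,\mathrm{d}R \tag{5}$$

mAP is the mean of the AP over all categories, calculated by Formula (6):

$$mAP=\frac{1}{N}\sum_{i=1}^{N}AP_i \tag{6}$$

where $N$ is the number of categories and $AP_i$ is the AP of the $i$th category.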
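Formulas (3) to (6) can be evaluated from a ranked list of detections as in the sketch below. It assumes that the detections of one class have already been matched to the ground truth (each one a true or false positive at IoU 0.5, the threshold used in Section 2.3) and approximates the integral in Formula (5) with all-point interpolation of the PR curve, as in the PASCAL VOC protocol; the paper does not state which interpolation it uses, and the function names are illustrative.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class from matched detections."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: -scores[i])  # highest score first
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))  # Formula (3)
        recalls.append(tp / num_gt)        # Formula (4)
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Make precision monotonically non-increasing (upper envelope of the PR curve)
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas under the envelope (Formula (5))
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def mean_ap(aps):
    """Formula (6): mean of per-class AP."""
    return sum(aps) / len(aps)


print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```

In this toy case the second detection is a false positive, so precision dips to 0.5 before recovering to 2/3 at full recall; the interpolation lifts that dip, and the AP is 0.5 + 0.5 × 2/3 = 5/6.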

2.3 Performance comparison before and after algorithm improvement

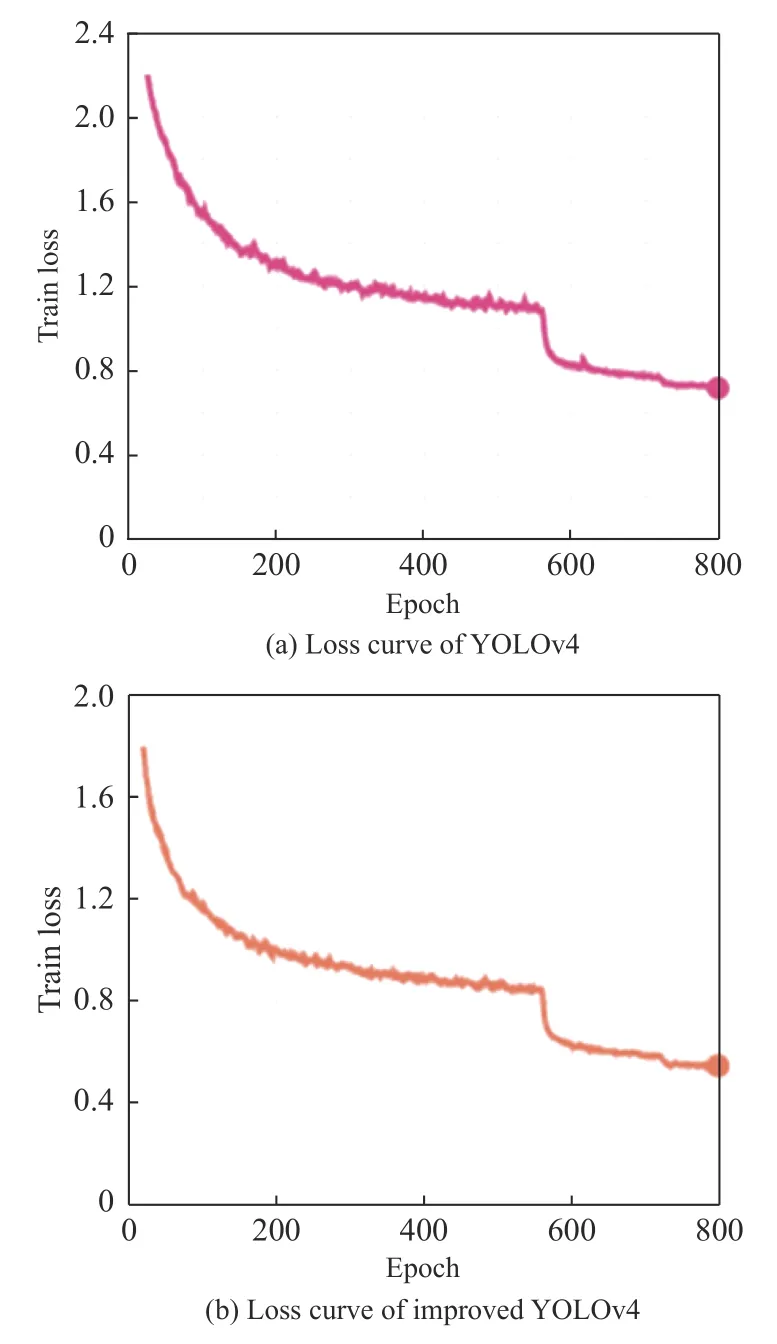

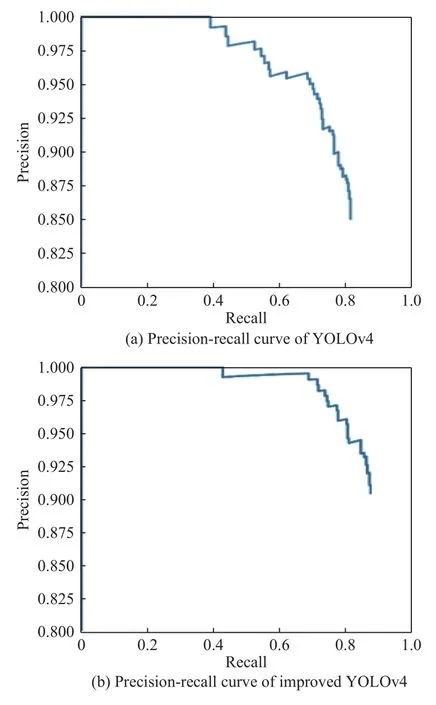

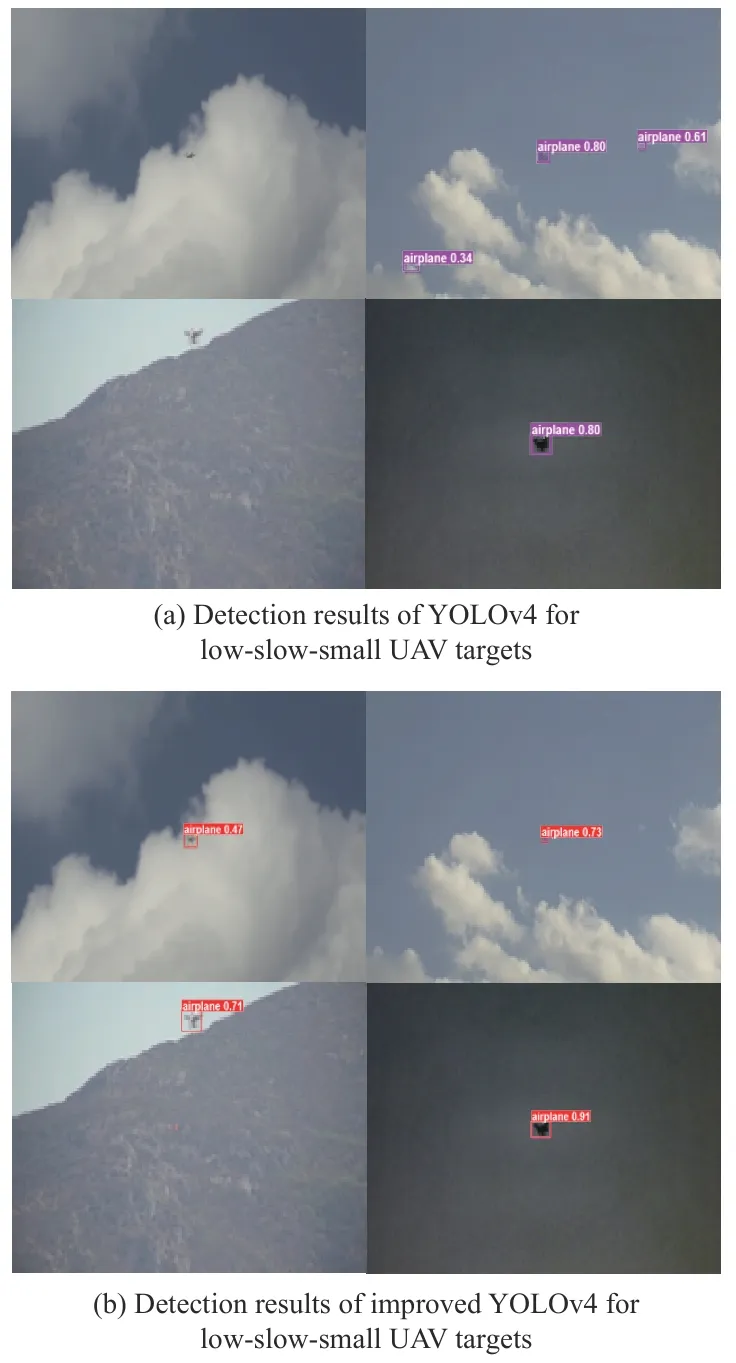

The algorithm was tested before and after improvement with the IoU (intersection over union) threshold set to 0.5. Fig.7 shows the loss curves: the improved YOLOv4 converges better on the UAV dataset, and its loss falls below 0.6 after training. Fig.8 shows the PR curves plotted from recall and precision; a curve closer to the top right corner indicates better detection performance. The PR curve of the improved YOLOv4 completely envelops that of the original YOLOv4, demonstrating its stronger detection ability. Fig.9 shows detection results for low-slow-small UAVs. Compared with the original YOLOv4, the improved YOLOv4 adds a small-UAV prediction branch and adjusts the candidate boxes, which reduces missed and false detections and improves the accuracy of the predicted bounding box size and position.

Fig.7 Comparison of loss curves during training of YOLOv4 and improved YOLOv4

Fig.8 Comparison of precision-recall curves of YOLOv4 and improved YOLOv4

Fig.9 Comparison of detection results of YOLOv4 and improved YOLOv4 on UAV dataset

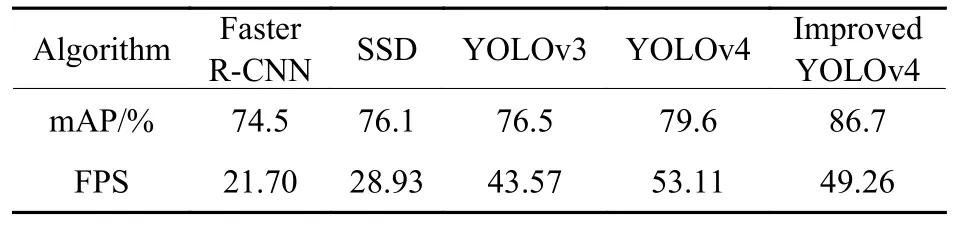

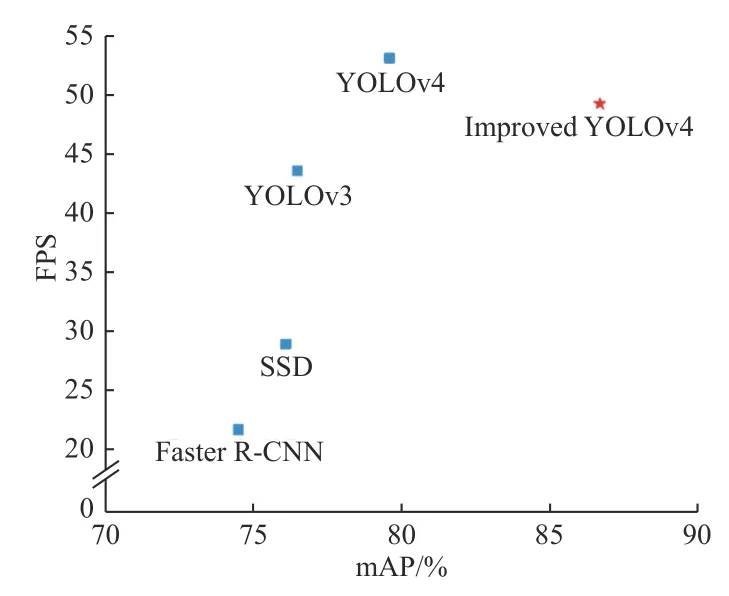

The comparison results of mAP and FPS of different algorithms are shown in Table 3 and Fig.10.

Table 3 Detection effects of different algorithms

Fig.10 Detection effects of different algorithms

The mAP of the improved YOLOv4 is 7.1% higher than that of the original YOLOv4, while its speed on an NVIDIA GeForce GTX 1080Ti is 49 frame/s, slightly lower than that of the original YOLOv4. The experiment shows that the improved YOLOv4 outperforms YOLOv3, SSD and the other compared algorithms in mAP and FPS, achieves a good balance between FPS and mAP, and has a stronger detection ability for small UAV targets.

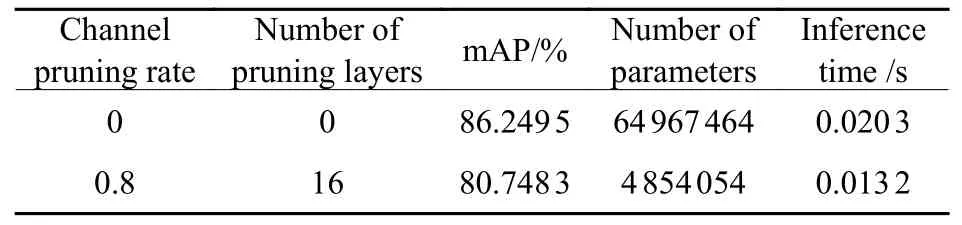

2.4 Comparison of model performance before and after pruning

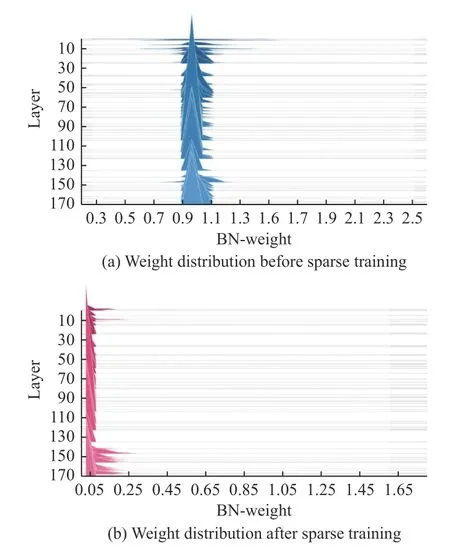

Sparse training used local sparsity rate decay, with the sparsity rate set to 0.001 and training run for 300 epochs. During the last 50% of training, 85% of the network channels continue to be sparsified normally, while the remaining 15% are sparsified with a sparsity rate of 1% to prevent a sharp drop in model accuracy.
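The local sparsity rate decay can be expressed as a per-channel penalty schedule. The text does not say which 15% of channels receive the reduced rate or whether "1%" is an absolute rate; the sketch below assumes that the 15% of channels with the largest |γ| are protected and penalized at 1% of the base rate (0.001 × 0.01) during the second half of training. The function name and its parameters are hypothetical.

```python
import numpy as np


def channel_sparsity_rates(gammas, epoch, total_epochs, s=0.001, protect_frac=0.15, decay=0.01):
    """Per-channel L1 rate for one epoch under an assumed local sparsity rate decay."""
    abs_g = np.abs(np.asarray(gammas, dtype=float))
    rates = np.full(abs_g.shape, s)
    if epoch >= 0.5 * total_epochs:  # last 50% of training
        threshold = np.quantile(abs_g, 1.0 - protect_frac)
        rates[abs_g > threshold] = s * decay  # protected (largest-|gamma|) channels
    return rates


gammas = np.arange(1, 101) / 100  # 100 hypothetical channel scale factors
print(np.sum(channel_sparsity_rates(gammas, epoch=100, total_epochs=300) < 0.001))
print(np.sum(channel_sparsity_rates(gammas, epoch=200, total_epochs=300) < 0.001))
```

The per-channel rates would replace the single p in the L1 subgradient p·sign(γ); early in training every channel is pushed toward 0, while later the most important channels are largely left alone so accuracy does not collapse.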

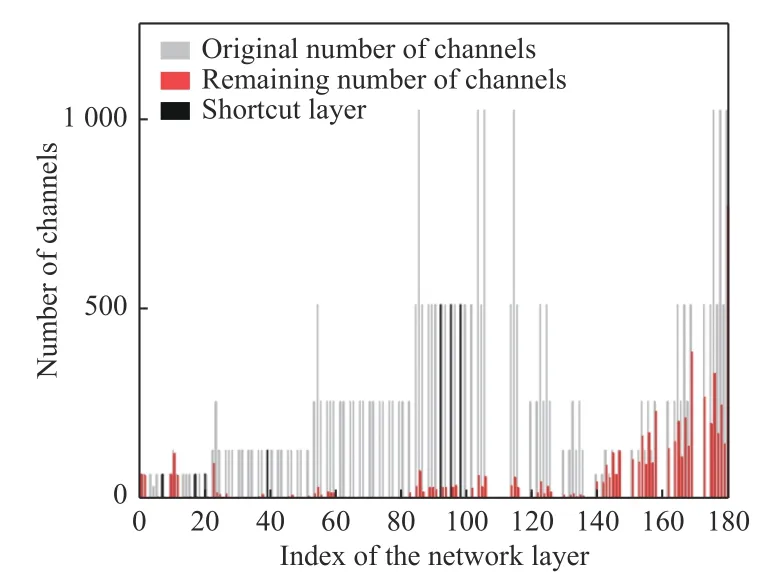

The weights of the BN layers before and after sparse training are shown in Fig.11. As training proceeds, the weight distribution gradually approaches 0, and channels with weights close to 0 are pruned first. With the pruning rate set to 0.8 and the layer pruning number set to 16, a total of 27 137 channels and 48 layers are pruned. The pruning result is shown in Fig.12. The weights in the middle part of the network are closer to 0, so most of its channels are pruned, and the removed layers are those with the fewest channels remaining after channel pruning. Table 4 shows the network performance before and after pruning. After pruning, the number of model parameters is reduced to 7.5% of the original, the model size falls from 248 MB to 18.5 MB, and the detection speed rises from 49 frame/s to nearly 75 frame/s (1080Ti). Finally, 100 epochs of fine-tuning recover the mAP to 85.8%.

Table 4 Comparison of network pruning results with different parameters

Fig.11 Weight distribution of BN layers in sparse training

Fig.12 Comparison of network layers and channels in improved YOLOv4 before and after pruning
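The pruning figures above can be cross-checked with simple arithmetic: at a fixed storage precision, file size scales roughly with parameter count, so the size ratio should be close to the reported 7.5% parameter ratio. The snippet only reuses numbers from the text, taking 75 frame/s as the post-pruning speed.

```python
size_before_mb, size_after_mb = 248.0, 18.5
fps_before, fps_after = 49.0, 75.0

size_ratio = size_after_mb / size_before_mb  # about 0.0746, i.e. roughly 7.5%
speedup = fps_after / fps_before             # about 1.53x on the 1080Ti
frame_time_ms = (1000 / fps_before, 1000 / fps_after)  # per-frame time before and after

print(f"size ratio {size_ratio:.1%}, speedup {speedup:.2f}x, "
      f"frame time {frame_time_ms[0]:.1f} ms -> {frame_time_ms[1]:.1f} ms")
```

The size ratio agrees with the parameter ratio, while the speedup is much smaller than the 13× reduction in parameters, since the remaining layers, memory access and post-processing still take time on the GPU.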

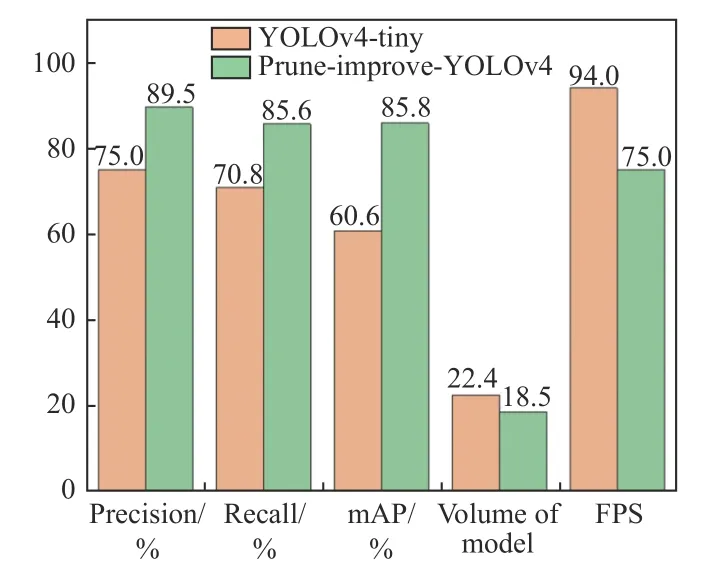

Fig.13 Performance comparison of prune-improve-YOLOv4 and YOLOv4-tiny

Fig.14 Detection result of prune-improve-YOLOv4 deployed on Xavier platform

Figure 13 compares the pruned model with YOLOv4-tiny. Although the lightweight model based on the improved YOLOv4 is slightly slower than YOLOv4-tiny, it clearly outperforms YOLOv4-tiny in precision, recall and mAP. Experiments show that the network is suitable for low-slow-small UAV detection.

2.5 Deployment experiment on embedded platform

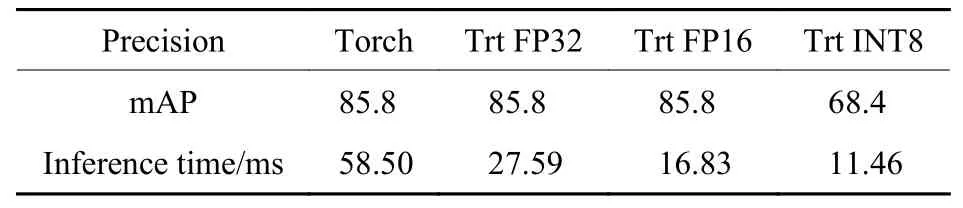

The model data are stored as 32-bit single-precision floating point (FP32), which occupies a large amount of memory and increases inference time. Inference with lower-precision data (FP16, INT8) reduces the storage space and bandwidth required for computation and improves inference speed. In this experiment, prune-improve-YOLOv4 is accelerated and quantized with TensorRT on the Xavier platform. The Xavier runs at 30 W and the test input size is 3×416×416; the mAP and inference time at different precisions are shown in Table 5.

Table 5 Inference performance of prune-improve-YOLOv4 deployed with different precision on Xavier

Half-precision (FP16) inference causes almost no drop in mAP, whereas INT8 inference causes a large drop. This paper therefore adopts FP16 deployment, achieving 85.8% mAP and a detection speed of nearly 60 frame/s on Xavier; the detection results are shown in Figure 14. Experiments show that prune-improve-YOLOv4 meets the real-time and high-precision requirements of detecting low-slow-small UAV targets on an embedded platform.
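As a rough illustration of why lower precision helps, weight storage scales with the bytes per value: 4 for FP32, 2 for FP16 and 1 for INT8. The sketch applies this to the 18.5 MB pruned model and converts the reported 60 frame/s into a per-frame time budget; it ignores activations, TensorRT engine overhead and layer fusion, so it is a back-of-the-envelope estimate rather than a measured result, and the helper name is hypothetical.

```python
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "INT8": 1}


def weight_size_mb(fp32_size_mb, precision):
    """Approximate weight storage after casting an FP32 model to another precision."""
    return fp32_size_mb * BYTES_PER_VALUE[precision] / BYTES_PER_VALUE["FP32"]


pruned_fp32_mb = 18.5
for precision in BYTES_PER_VALUE:
    print(precision, weight_size_mb(pruned_fp32_mb, precision), "MB")

frame_budget_ms = 1000 / 60  # time available per frame at 60 frame/s
print(f"per-frame budget at 60 frame/s: {frame_budget_ms:.1f} ms")
```

FP16 halves the weight traffic with almost no accuracy cost in Table 5, whereas INT8 halves it again but, according to the text, loses too much mAP, which is why half precision is the chosen trade-off.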

3 Conclusion

To address the scarce semantic information and low visibility of small UAV targets, this paper first improves the original YOLOv4 network in three aspects: network structure, small-target enhancement and anchor box allocation. The improved YOLOv4 detects most UAVs with more accurate bounding box positions, and recall, precision and mAP are greatly improved. Then, with a channel pruning rate of 0.8 and a layer pruning number of 16, the network parameters and inference time are greatly reduced. Finally, 85.8% mAP and real-time detection at nearly 60 FPS are achieved on Xavier. Experimental results show that the proposed algorithm achieves higher accuracy and speed than YOLOv4 in small target detection and can be used on embedded devices for real-time detection of low-slow-small UAVs.