Autonomous Vehicles: The Remaining Challenges

2021-05-12

Huei Peng

Department of Mechanical Engineering, University of Michigan, Michigan 48105, USA

[Abstract] Autonomous vehicles are essential for mobility in big cities, just as elevators make high-rise buildings livable. While significant progress has been achieved over the last 15 years, several challenges remain: cost, robust performance, and trust. To address these challenges, this paper discusses research at Mcity.

Keywords: autonomous vehicles; testing and evaluation

1 Introduction

2020 will be remembered as a very special year. The COVID-19 virus ran rampant in most of the world for almost the entire year. As of March 2021, 115 million people had caught the virus, and more than 2.5 million people had lost their lives worldwide. This was also supposed to be the year when we would see many Level-4 automated vehicles on the roads. Robo-taxis were supposed to be making money for Tesla owners while they worked. Level-4 vehicles were supposed to be available for purchase from many car companies. It is now clear that none of these promised breakthroughs was realized in 2020. What happened?

It turns out that autonomous vehicles (AVs), or Level 4-5 automated vehicles, were not immune to hype, just like MEMS, nanotechnology, AI, and many other emerging technologies whose full benefits people were eager to enjoy. Few of these emerging technologies became reality within the originally predicted time frame. We were not wrong about the long-term potential, but we were wrong, again, in underestimating the challenges. Challenges in both technical and non-technical aspects, ones we knew about and ones we did not, turned out to be harder to overcome. What will happen next, and where do we go from here?

I have been associated with AV research throughout my career; my Ph.D. research at UC Berkeley was on “highway automation”. At the University of Michigan, I currently direct Mcity, the world's first purpose-built test facility for AVs. The goal of this paper is to summarize what I have seen, point out the remaining challenges, and describe what we are doing at Mcity to address them.

2 The Promise

Without question, human drivers are amazingly capable of handling a wide variety of uncertain traffic and environmental conditions. However, their performance can deteriorate quickly and significantly when they are tired, drunk, enraged, or distracted. Worldwide, about 1.3 million people die each year in motor-vehicle crashes. In addition, the world population is aging steadily. As a result, finding willing and qualified professional drivers, such as bus drivers in rural Japan or long-haul truck drivers in the US, is increasingly difficult. Automated technologies can be used to create guardian, co-pilot, or chauffeur systems, so that ground mobility becomes safer, more accessible, and more efficient in an aging world. The potential is tremendous, which is part of the reason for the over-promises and hype. It is important to recognize that this “hype cycle” is typical and has happened to the vast majority of emerging technologies. It is equally important to understand the remaining challenges, so that we can focus our attention and resources on solving them.

3 The Remaining Challenges

The concept of Technology Readiness Levels (TRLs) was developed by NASA to measure the maturity of technologies. After years of research and investment, AVs from many leading companies are already at TRL 7-9. Some are already piloted in small fleets to move people or goods (TRL 9), although many still require a safety driver or a remote supervisor. Reaching a high TRL is a great achievement. However, that alone does not guarantee a viable and successful business. In general, the main challenges AVs face fall into three categories: cost, robust performance, and trust.

3.1 Cost

Some AV hardware components used to be very expensive. Lidars cost tens of thousands of dollars just a couple of years ago, but very recently some very good ones have become available for hundreds of dollars. RTK (real-time kinematic) GPS units that provide centimeter-level accuracy are in a very similar situation. GPUs, or specially designed chips such as the EyeQ5 from Mobileye, are moving along a similar trajectory and will cost “only” hundreds of dollars in the near future. Overall, hardware cost has dropped by 1-2 orders of magnitude and is on a good trajectory.

The other two major cost elements, software systems and development vehicle fleets, have however been moving in the opposite direction. Because of the limited supply of talent, there was fierce competition among companies, which poached engineers from each other and from academic institutions, driving salaries higher and the available talent supply lower. In that sense, the worldwide economic slowdown caused by COVID-19 may be a blessing in disguise. Continuing to increase the talent supply is critical for the long-term viability of the AV industry.

Larger AV teams maintain dozens, if not hundreds, of development vehicles. Many companies have tested only in places with a friendly climate, such as California, Arizona, and Singapore. Collecting data and accumulating experience on public roads is not only costly, it is also an inefficient way to learn about challenging driving situations. Unfortunately, public-road testing is still used far more often than it should be. In my opinion, simulation and controlled track testing should account for more than 99.9% of overall development. Later in this paper I will elaborate on the importance of “accelerated evaluation” technologies, a practice that is possible only through simulation and track testing at facilities like Mcity, J-town, or A NICE CITY. By reducing real-world testing and embracing “accelerated evaluation” techniques, the cost of AV development can be reduced drastically. Since hardware cost is now under control, this is the most important avenue for further cost reduction.

3.2 Robust Performance

AVs rely on software that handles a variety of functions such as perception, object tracking, behavior prediction, path planning, and control. Currently, the weakest link is perception, i.e., understanding what the sensors are telling us: where the AV is relative to the lane lines/drivable areas, whether there are other road users (cars, pedestrians, cyclists, etc.), and what their positions and velocities are. Lane/road detection and object detection are therefore the two main perception tasks. While perception systems based on deep neural networks (DNNs) have progressed so much that they now consistently outperform traditional machine-vision approaches by a wide margin, they are not yet good enough. Several websites track “leaderboards” of the DNNs that achieve the best detection results. For road/lane detection, for example, the open-source datasets KITTI[1], TuSimple[2], and CULane[3] can be used. The accuracy/precision of the best lane detection DNNs today is only around 95%, and their F1 score (which takes both false positives and false negatives into account) is around 75%. Most road/lane detection datasets contain relatively benign images, i.e., the lane lines are reasonably well maintained, there is not much shadow or occlusion, and there is no snow. For object-detection DNNs (see Refs. [4-5]), the reported precision is similar but lower. On the smaller and simpler KITTI dataset[4], precision is around 96% for easy cases (close distance, little occlusion) and 90% for harder cases (far away, more occlusion). A larger and more challenging dataset became available more recently[5], and the best DNNs achieve about 80% on its easy cases and 75% on its hard cases. In short, the error rates (false positives and false negatives) are still too high.
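Because the F1 score is the harmonic mean of precision and recall, a high precision can coexist with a much lower F1. The sketch below reads the 95% lane-detection figure as precision (an interpretation, since the text says “accuracy/precision”) and back-calculates the recall that an F1 score of 75% would imply. The inputs are the rounded values quoted above, not new measurements.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Precision = TP/(TP+FP); recall = TP/(TP+FN)."""
    return tp / (tp + fp), tp / (tp + fn)


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


def recall_from_f1(precision: float, f1: float) -> float:
    """Solve F1 = 2PR/(P+R) for R, given P and F1 (valid when 2P > F1)."""
    return f1 * precision / (2.0 * precision - f1)


p, f1 = 0.95, 0.75  # rounded values quoted in the text
r = recall_from_f1(p, f1)
print(f"implied recall: {r:.2f}")               # 0.62
print(f"round-trip F1:  {f1_score(p, r):.2f}")  # 0.75
```

Under this reading, a detector that is right 95% of the time when it reports a lane line would still miss roughly 38% of the lane lines actually present, which is why the F1 score is the more telling figure.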

Perception algorithms can use multiple camera frames or multiple sensors to improve the final detection performance, so single-frame accuracy does not need to be perfect. Nevertheless, single-frame machine-vision precision must improve significantly, reducing both false-positive and false-negative rates by at least a factor of 5-10, and even more for images taken under adverse but realistic weather and environmental conditions, before autonomous driving approaches the performance of attentive drivers. The progress over the last few years is very impressive, yet more needs to be done. Sensor fusion holds significant potential, but because it is a highly competitive area, unfortunately few results have been reported in the open literature.
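Why fusion relaxes, but does not remove, the single-frame requirement can be seen with a simple k-of-n vote across frames. The sketch assumes that each frame misses an object independently with the same probability, which is optimistic: consecutive frames share lighting, occlusion, and weather, so real errors are correlated. The 10% single-frame miss rate is hypothetical, and only missed detections (false negatives) are modeled.

```python
from math import comb


def k_of_n_miss_rate(p_miss: float, n: int, k: int) -> float:
    """Probability that fewer than k of n frames detect the object.

    Assumes independent, identically distributed per-frame misses.
    """
    p_hit = 1.0 - p_miss
    # Sum over outcomes with 0, 1, ..., k-1 successful detections.
    return sum(comb(n, i) * p_hit**i * p_miss ** (n - i) for i in range(k))


single = 0.10                                # hypothetical per-frame miss rate
fused = k_of_n_miss_rate(single, n=3, k=2)   # 2-of-3 vote
print(f"single frame: {single:.3f}, 2-of-3 vote: {fused:.3f}")  # 0.100, 0.028
```

If the three frames were perfectly correlated, the vote would fail exactly when a single frame fails and the miss rate would stay at 10%. Real systems lie somewhere in between, which is one reason single-frame performance still has to improve.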

3.3 Trust

As with many other new technologies, trust is not given; it must be earned through demonstrated good behavior over time. The AV is still a novel system with little track record, and the situation is not too different from the early days of aviation. Heavier-than-air flight was demonstrated in the early years of the 20th century, the Air Commerce Act, which regulated commercial aviation, was passed in 1926, and Charles Lindbergh made his transatlantic flight in 1927. Did people flock to buy tickets when commercial flights became available?

It turns out that commercial aviation did not grow rapidly until after World War II, a good 20 years after Lindbergh's transatlantic flight and the passing of the government regulation. The explosive growth in commercial aviation happened for three reasons: (i) airplanes were much improved during World War II; (ii) trust was built over many hundreds of thousands of wartime flights; and (iii) tens of thousands of experienced engineers and technicians were trained during the war, enabling safe and affordable commercial aviation.

Clearly, we can learn a lot from the history of commercial aviation, where the “trust” issue was arguably much more severe. Several recent works have studied the issue of trust in AVs. Surveys/polls from PAVE[6], AAA[7], and J.D. Power[8] showed that about two-thirds of respondents do not have high confidence in AVs. Some argue that privacy, security, reliable performance[9], clear bi-directional communication, and multiple modes of interaction[10] are the keys to building trust; the last of these seems to be inspired by earlier findings from other fields[11]. These factors should be handled differently. Privacy, security, and bi-directional communication should be built into AV design and development, which is the responsibility of AV manufacturers. Reliable performance should be evaluated both by AV manufacturers and by government/independent evaluators; the latter is needed for trust-building.

4 The Mcity ABC Test Concept

We developed the Mcity ABC test procedure to evaluate the performance of AVs. The ABC test concept can be used in simulations or on closed test tracks as a cheaper and faster alternative to public-road testing. The test can be conducted by AV companies, but it is very important that a government agency or trusted third party conduct it, to provide an impartial and independent assessment of AV performance, which is critical to improving public trust. The main goal is to check for robust performance within the clearly defined operational design domain (ODD). Before describing the ABC test procedure, we first describe recent efforts at Mcity to use simulation and augmented reality technologies for AV performance assessment.

4.1 Simulation and Augmented Reality

It is commonly accepted that simulation can be a cheaper, faster, and safer alternative to actual vehicle testing. The Achilles' heel of today's AV simulation software is sensor modeling, including under adverse weather and lighting conditions. Modeling sensor outputs is important because the perception subsystem is the weakest link of today's AVs. In general, we have excellent camera models, but lidar and radar models need more work.

We have worked with eight software companies, each of which built a digital twin of the Mcity test facility. We also instrumented a vehicle, collected data under selected driving scenarios (see Fig. 1), and made the data available to all Mcity industrial members[12]. The goal is to enable rigorous virtual sensor model development, so that these AV simulation software packages, once validated, can become trustworthy alternatives to real vehicle testing.

Fig.1 Example common driving scenarios for AV sensor model validation

In addition to working with commercial AV simulation software providers, we have also developed digital twins of the Mcity test facility using the open-source software CARLA[13] and SUMO[14] (see Fig. 2). CARLA has a comprehensive set of features for AV simulation. However, it does not currently come with reasonable behaviors for “other road users”. SUMO, on the other hand, has several built-in driver models to choose from. When we need to simulate interactions with other vehicles, we create and simulate these vehicles in SUMO and send their motion variables to CARLA, which has much nicer graphics. In CARLA, we can easily simulate camera and lidar measurements, but rendering image pixels and lidar points requires a lot of communication and computation, and thus 5G/edge computing capabilities, which are currently under development. Today, we usually assume “perfect sensors”, i.e., the positions and velocities of other vehicles are known precisely. We can also simulate “V2X sensors”, where the kinematic information from other vehicles contains small stochastic errors.
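The difference between the “perfect sensor” and “V2X sensor” assumptions can be sketched as two functions that map the ground-truth state of another vehicle, as the traffic simulator would supply it, to what the simulated AV receives. The state fields, the zero-mean Gaussian noise model, and the noise levels are illustrative assumptions rather than Mcity's implementation, and the SUMO-to-CARLA data exchange itself is not shown.

```python
import random
from dataclasses import dataclass


@dataclass
class VehicleState:
    """Kinematic state of another road user (hypothetical field set)."""
    x: float  # position [m]
    y: float  # position [m]
    v: float  # speed [m/s]


def perfect_sensor(truth: VehicleState) -> VehicleState:
    """'Perfect sensor': the AV receives the true state unchanged."""
    return VehicleState(truth.x, truth.y, truth.v)


def v2x_sensor(truth: VehicleState, rng: random.Random,
               pos_sigma: float = 0.5, vel_sigma: float = 0.2) -> VehicleState:
    """'V2X sensor': true state plus small zero-mean Gaussian errors.

    The standard deviations are hypothetical placeholders.
    """
    return VehicleState(
        truth.x + rng.gauss(0.0, pos_sigma),
        truth.y + rng.gauss(0.0, pos_sigma),
        truth.v + rng.gauss(0.0, vel_sigma),
    )


rng = random.Random(0)  # fixed seed for reproducible test runs
truth = VehicleState(x=25.0, y=3.5, v=12.0)
print(perfect_sensor(truth))
print(v2x_sensor(truth, rng))
```

Keeping both models behind the same signature lets a test run switch between idealized and noisy inputs without changing the planning code under evaluation.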

Fig.2 CARLA(left)and SUMO(right)models of the Mcity test facility

We have also developed an augmented reality testing process at Mcity, in which many cars are simulated in SUMO and their information is passed to the real test AV through either DSRC or 4G/Wi-Fi (5G is under development). This allows the AV to interact with other vehicles, which is very important for testing some AV features (such as path planning and decision making) and performance measures (such as ride comfort and fuel economy). The graphical user interface for the augmented reality tests is shown in Fig. 3. The plot on the left shows a bird's-eye view, and the plot on the right shows SUMO, which simulates all virtual vehicles. An in-depth discussion of the concept and implementation of augmented reality techniques at Mcity can be found in Ref. [15].

Simulations and augmented reality are used heavily in our AV design and development process. The ABC test process described in the next section is used extensively in our AV evaluation process.

Fig.3 GUI on the Mcity test AV to conduct augmented reality tests

4.2 Mcity ABC Test Procedure

The Mcity ABC Test is a three-pronged approach for testing AVs, either in simulation or on a closed test track, ideally before public-road testing and deployment. The three components are accelerated evaluation, behavior competence, and corner cases. Accelerated evaluation focuses testing on risky driving situations rather than uneventful ones, making it more efficient; behavior competence demonstrates the ability to be safe in an array of scenarios; and corner cases push toward the boundaries (corners) of the AV's ODD.

ODD is an important concept to embrace. Recall that commercial passenger aviation built on successful agricultural and military applications before people had enough confidence to fly in an airplane. Similarly, low-speed and geo-fenced AV deployments with small ODDs help build confidence and trust. Shooting for the bragging rights of a Level-5 AV neither helps build trust nor constitutes a well-defined engineering problem. All rational AV developers should embrace the concept of ODD and aim to do a thorough job within its bounds. The future is Level-4 AVs with gradually enlarged ODDs, rather than Level-5 AVs. A Level-5 AV literally means whenever, whatever, and wherever: an inspiring goal, but an ill-defined engineering problem.

4.2.1 Behavior Competence Test

Of the three components of the Mcity ABC test, the behavior competence test is the core, and the key concept of the behavior competence test is the “scenario”. We believe that all “acceptance tests”, such as those for regulatory/approval purposes, should consist of singular scenarios. For example, we test pedestrians at intersections, unprotected left turns, and the door-opening of a parked car separately, rather than in one test that combines all three. This is because the number of combinations grows exponentially, which all practical regulation/approval tests must avoid. Mcity researchers compiled a list of 50 singular scenarios[16] (see Fig. 4 for some examples). The subset of singular scenarios to include, and the bounds of the test case parameters, should be defined based on the ODD of the AV. Once the subset of scenarios is selected, the selection of test case parameters is the key to the Mcity ABC test concept, which considers four factors: (1) the test case parameters should reflect naturalistic road-user behaviors; (2) they should be selected stochastically rather than deterministically; (3) they should be selected in an “accelerated evaluation” fashion; and (4) they should cover “the corners” of the ODD.
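The exponential growth that motivates singular scenarios is easy to quantify: with 50 scenarios, singular testing grows linearly, while the number of possible combined tests explodes. The second half of the sketch shows one simple way an ODD could select the applicable subset; the scenario names, road-type tags, and ODD definition are hypothetical and are not taken from the actual Mcity list in Ref. [16].

```python
from math import comb

N_SCENARIOS = 50  # size of the Mcity singular-scenario list

# Number of distinct tests if scenarios are combined instead of run singly.
counts = {
    "singular": N_SCENARIOS,
    "pairs": comb(N_SCENARIOS, 2),
    "triples": comb(N_SCENARIOS, 3),
    "any subset": 2**N_SCENARIOS - 1,  # every non-empty combination
}
for label, n in counts.items():
    print(f"{label:>10}: {n:,}")

# Hypothetical catalogue: scenario name -> road types on which it can occur.
catalogue = {
    "pedestrian_at_intersection": {"urban"},
    "unprotected_left_turn": {"urban", "suburban"},
    "parked_car_door_opening": {"urban"},
    "highway_cut_in": {"highway"},
}
odd_road_types = {"urban"}  # hypothetical ODD of a low-speed urban shuttle

# Keep a scenario if any of its road types lies inside the ODD.
selected = [name for name, roads in catalogue.items() if roads & odd_road_types]
print(selected)
```

The printed counts are 50, 1,225, 19,600, and 1,125,899,906,842,623, and the urban ODD keeps the three urban scenarios while dropping the highway cut-in.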

4.2.2 Selecting Test Case Parameters

Fig.4 Examples from the 50 Mcity behavior competence test scenarios

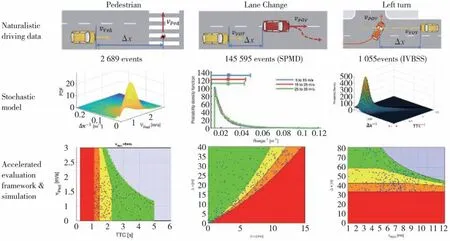

To ensure that the test case parameters are selected based on actual road-user behaviors, the first step is to collect naturalistic driving data and build a stochastic model. This is easy to say and hard to do. At the University of Michigan, even with millions of miles of data collected, we still do not have enough data for all scenarios. This is because for some scenarios (e.g., cut-in) the interaction can be captured by on-vehicle sensors, while for other scenarios (e.g., roundabouts) the data are better collected by roadside sensors. Most of the data we have collected come from instrumented vehicles, a weakness we share with the Pegasus project in the EU and the SAKURA project in Japan, whose data also come mostly from instrumented vehicles. Figure 5 shows three example scenarios for which we have enough data to build useful stochastic models[17]. SPMD[18] is the Safety Pilot Model Deployment database, and IVBSS[19] is the Integrated Vehicle-Based Safety Systems database.

The constructed statistical model represents what human drivers and pedestrians do when they encounter another vehicle. Depending on the conditions at the beginning of an event, the space of all events is first divided into “impossible” (red), “possible”, and “trivial” (blue) regions, and the “possible” region is further divided into three sub-regions: orange (highly challenging, or hard), yellow (moderately challenging, or medium), and green (low challenge, or easy). Take the pedestrian crossing scenario as an example. “Impossible” represents cases in which the pedestrian suddenly dashes in front of the vehicle and there is simply no time for the vehicle to brake or swerve to avoid a crash. “Trivial” captures cases in which the pedestrian walks in front of the vehicle at such a great distance that the vehicle does not need to take any action: the AV can keep driving at its current speed, and no crash or near-miss (defined by a minimum separation distance or time margin) will occur. All cases between “impossible” and “trivial” are “possible” and need to be further divided. The technique for separating the “possible” space into different regions is control reachability analysis, combined with the assumed delay and maximum braking capability of the AV. The details can be found in Ref. [17].
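The partition into impossible, hard, medium, easy, and trivial cases can be illustrated with a much simpler model than the control reachability analysis of Ref. [17]. The sketch treats the pedestrian-crossing scenario in one dimension and considers braking only (no swerving): the AV reacts after a fixed delay, then brakes at a constant rate, and each case is labeled by the fraction of the maximum deceleration it requires. The delay, maximum deceleration, lane width, time margin, and the 0.4/0.7 difficulty cut-offs are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class CrossingCase:
    d: float  # AV distance to the crossing point when the pedestrian enters the lane [m]
    v: float  # AV speed [m/s]
    u: float  # pedestrian walking speed [m/s]


def classify(case: CrossingCase, tau: float = 0.5, a_max: float = 8.0,
             lane_width: float = 3.5, margin: float = 1.0) -> str:
    """Label a pedestrian-crossing test case (simplified, braking only).

    tau: AV reaction delay [s]; a_max: maximum deceleration [m/s^2];
    margin: extra clearance time [s]. All defaults are hypothetical.
    """
    # Trivial: at its current speed the AV arrives only after the pedestrian
    # has cleared the lane (plus a margin), so no action is needed.
    if case.d / case.v > lane_width / case.u + margin:
        return "trivial"
    # Distance still available for braking once the reaction delay has elapsed.
    braking_distance = case.d - case.v * tau
    if braking_distance <= 0.0:
        return "impossible"
    # Constant deceleration needed to stop exactly at the crossing point.
    a_req = case.v**2 / (2.0 * braking_distance)
    if a_req > a_max:
        return "impossible"
    ratio = a_req / a_max
    if ratio > 0.7:
        return "hard"
    if ratio > 0.4:
        return "medium"
    return "easy"


for d in (20.0, 25.0, 30.0, 45.0, 60.0):
    print(d, classify(CrossingCase(d=d, v=15.0, u=1.4)))
```

At 15 m/s, these five starting distances fall, in order, into the impossible, hard, medium, easy, and trivial regions, mirroring the color bands described above.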

Fig.5 Data and stochastic models for several scenarios

In the lowest row of Fig. 5, the dots (each representing a stochastically sampled test case) are evenly distributed across the easy, medium, and hard regions, and some approach the red zone (the impossible region). Together with Fig. 6, which shows cut-in cases, this makes clear how “accelerated evaluation” is achieved. If the statistical model is sampled naturalistically, i.e., according to observed data collected on public roads, all 300 samples fall within the “easy” region. This is statistically close to what one would experience on public roads. However, it is not efficient for a regulation/approval test: all tests are easy and boring. To achieve “accelerated evaluation”, we can, for example, sample 1/3 from the “easy” region, 1/3 from the “medium” region, and 1/3 from the “hard” region. The same 300 samples then expose the AV to much more difficult test cases within tens of total miles driven, instead of the millions of miles needed to encounter those corner cases (close to the boundary between the orange and red regions). The theoretical basis of the accelerated evaluation concept is rigorously presented in Ref. [20] and subsequent papers; what we have presented here is a simplified explanation.
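The sampling idea can be sketched in a few lines. The naturalistic model is a hypothetical one-dimensional “challenge level” s in [0, 1] with density 20(1 - s)^19, so nearly every naturalistic sample is easy; the region boundaries and the rule that the AV fails whenever s > 0.9 are also hypothetical. Drawing one third of the samples uniformly from each region and weighting each sample by the ratio of the naturalistic density to the sampling density (importance sampling) still yields an estimate of the naturalistic failure rate (exactly 10^-20 in this toy model), whereas 300 naturalistic samples almost surely contain no failure at all. This only illustrates the principle; Ref. [20] develops the rigorous method.

```python
import random

N = 300
REGIONS = {"easy": (0.0, 0.4), "medium": (0.4, 0.7), "hard": (0.7, 1.0)}


def p_nat(s: float) -> float:
    """Hypothetical naturalistic density of challenge level s: Beta(1, 20)."""
    return 20.0 * (1.0 - s) ** 19


def sample_nat(rng: random.Random) -> float:
    """Inverse-transform sample from the CDF F(s) = 1 - (1 - s)^20."""
    return 1.0 - (1.0 - rng.random()) ** (1.0 / 20.0)


def region_of(s: float) -> str:
    return "easy" if s < 0.4 else ("medium" if s < 0.7 else "hard")


def av_fails(s: float) -> bool:
    """Hypothetical AV that fails only on the most extreme cases."""
    return s > 0.9


rng = random.Random(42)

# 1) Naturalistic sampling: what public-road driving would produce.
nat = [sample_nat(rng) for _ in range(N)]
naive_rate = sum(av_fails(s) for s in nat) / N

# 2) Accelerated evaluation: N/3 samples drawn uniformly inside each region.
accel, weights = [], []
for lo, hi in REGIONS.values():
    q = (1.0 / 3.0) / (hi - lo)  # sampling density inside this region
    for _ in range(N // 3):
        s = rng.uniform(lo, hi)
        accel.append(s)
        weights.append(p_nat(s) / q)  # importance weight p/q

is_rate = sum(w for s, w in zip(accel, weights) if av_fails(s)) / N

print("naturalistic:", {r: sum(region_of(s) == r for s in nat) for r in REGIONS})
print("accelerated: ", {r: sum(region_of(s) == r for s in accel) for r in REGIONS})
print("naive failure-rate estimate:", naive_rate)
print("importance-sampling estimate:", is_rate, "(true value 1e-20)")
```

The weights are what keep the accelerated test honest: the AV sees mostly difficult cases, yet the estimate still refers to naturalistic driving rather than to the artificially hard test mix.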

Fig.6 Vehicle cut-in cases. Sampled naturalistically (top) vs. sampled evenly in the three (possible) colored regions (bottom). Each dot represents a random sample

Now let's turn to “corner cases” or “edge cases”, a topic only lightly discussed in the literature. In Ref. [21], the term corner case is defined as “two or more parameter values are each within the capabilities of the (AV) system, but together constitute a rare condition that challenges its capabilities”, and edge case is defined as “scenario in which the extreme values or even the very presence of one or more parameters results in a condition that challenges the capabilities of the system.” This is a new DIN-SAE standard, and the definitions seem to be inspired solely by the words “edge” and “corner”. If the DIN-SAE definitions are followed, what we refer to in this paper should be called “edge cases”. Reference [21] is new and not yet widely accepted, and it certainly did not exist when we invented the concept of the Mcity ABC test.

There have been two main approaches to collecting corner cases (or edge cases, if the DIN-SAE terminology is used). The first approach, which we call the “many-many miles” (MMM) approach, involves collecting as many miles of driving data on public roads as possible, typically hundreds of thousands or millions of miles, and identifying the most bizarre/rare cases as “corner cases”: for example, boxes falling from a pickup truck in front, a wild pig running across the road, heavy fog, near-misses, crashes, etc. However, this way of collecting “corner cases” relies on luck and randomness. Many in the AV field have heard talks (semi-jokingly) advocating “a lady sitting in a wheelchair chasing a goose” as a corner case that should be tested. It is hard to determine how many players/factors must be accurately reproduced when “replaying” such a situation, or how likely that particular case is to occur in the real world again. For example, suppose a particular near-miss was found after millions of miles, and we want to reconstruct it. Should we control the ambient temperature, humidity, road friction coefficient, and the precise motions of just one vehicle, or of several vehicles in the vicinity? In many cases, the MMM approach relies on cameras only, so not all of the information mentioned above was captured, and the value of “replaying” the condition is questionable. Finally, as Fig. 6 shows from our experience, most of the cases collected in the real world are not really that challenging, so the MMM approach is not a cost-effective way to collect corner cases.
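The “luck and randomness” of the MMM approach can be quantified with a simple, assumed model in which a particular rare situation occurs as a Poisson process with a constant rate per mile. The rate used below (once per 10 million miles) is hypothetical.

```python
import math


def miles_to_observe(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Miles needed so that P(at least one occurrence) >= confidence.

    Assumes occurrences form a Poisson process with a constant per-mile rate,
    so P(at least one occurrence in m miles) = 1 - exp(-rate * m).
    """
    return -math.log(1.0 - confidence) / rate_per_mile


rate = 1e-7  # hypothetical: one occurrence per 10 million miles
print(f"{miles_to_observe(rate):,.0f} miles for a 95% chance of one sighting")
```

This comes to roughly 30 million miles, and the reward is a single instance recorded under one set of conditions, not a reproducible test case.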

The second approach, which we call the evil raging driver (ERD) approach, is a more methodical thinking exercise that explores the known weaknesses of the AV. For example: (i) while the AV drives under a thick tree canopy, a stopped vehicle suddenly appears because the truck it had been following just cut out; (ii) the primary other vehicle is a black car on snow-covered roads on a dark, snowy night, approaching the AV at the highest possible speed at an intersection and suddenly emerging from behind a building... The number of combinations clearly grows quickly, depending on how many independent factors we explore. A major problem is that one can easily design an “impossible” situation in which a crash is unavoidable. An example is a vehicle traveling in the opposite direction that suddenly crosses the double-yellow line and enters the AV's lane. While this type of crash does happen in the real world, testing this situation on a test track is meaningless. No AV can be designed to avoid all test cases created through the ERD process, because many are impossible to avoid. Testing impossible-to-avoid cases is neither helpful for gaining insight into the safety behavior of the AV nor practical. The purpose of “checking the corner cases” should be to find very challenging BUT possible cases systematically, not to find impossible cases (the impossible region is shown in red in Figs. 5 and 6). Finally, since ERD test cases target the weaknesses of a particular AV, the test cases for AVs that use cameras only will likely differ from those for AVs that use lidars. Therefore, the ERD concept is not viable for government-endorsed regulatory/approval tests, which must be transparent and fair.

The Mcity ABC test approach, as shown in Fig. 6, covers many “edge cases”: the points at the border of the red and orange regions. The approach is better than both the MMM and ERD concepts because the tests are done efficiently and all tested cases lie in the “possible” region.

In summary, the Mcity ABC test concept selects the behavior competence test scenarios based on the ODD of the AV, selects the test case parameters to cover corner cases, and conducts the tests in an accelerated fashion. The test consists of a finite number of scenarios and a finite number of test cases for each scenario. Finally, the tests are stochastic but fair.

5 Conclusions

The three major remaining challenges for autonomous vehicles are cost, robust performance, and trust. The Mcity ABC test process was developed to address all three. In the next few years, companies will likely request approval for Level-4 AVs, for deployment or for sale in some parts of the world. A government-sanctioned test procedure is urgently needed for both public safety and trust. We hope this concept, developed over two years by researchers from both academia and industry at Mcity, can help governments and standards-setting organizations define their approval tests.