Mixed reality based respiratory liver tumor puncture navigation

2019-02-27

Ruotong Li, Weixin Si, Xiangyun Liao, Qiong Wang, Reinhard Klein, and Pheng-Ann Heng

Abstract This paper presents a novel mixed reality based navigation system for accurate respiratory liver tumor punctures in radiofrequency ablation (RFA). Our system contains an optical see-through head-mounted display (OST-HMD), the Microsoft HoloLens, for overlaying the virtual information on the patient, and an optical tracking system, the NDI Polaris, for calibrating the surgical utilities in the surgical scene. Compared with the traditional CT-based navigation method, our system aligns the virtual guidance information with the real patient and updates the view of the virtual guidance in real time via a position tracking system. In addition, to alleviate the difficulty of needle placement induced by respiratory motion, we reconstruct the patient-specific respiratory liver motion through a statistical motion model to help doctors puncture liver tumors precisely. The proposed system has been experimentally validated on in vivo pigs with accurate real-time registration, with mean FRE and TRE of approximately 5 mm, and has the potential to be applied in clinical RFA guidance.

Keywords mixed reality; human computer interaction; statistical motion model

1 Introduction

Radiofrequency ablation (RFA) therapy is a widely used minimally invasive treatment for liver tumors. Doctors insert a radiofrequency electrode into the target tissue of the patient, and the resulting temperature increase (greater than 60°C) produces degeneration and coagulation necrosis in the local tissue [1]. RFA has become a widely and clinically accepted treatment option for destruction of focal liver tumors with several advantages, such as low trauma, safety, effectiveness, and quick postoperative recovery [2, 3].

Traditional RFA surgery is navigated by computed tomography (CT), ultrasound, or magnetic resonance (MR) images, which guide surgeons in inserting the needle into the target tumor. The tumor can then be completely coagulated with a safety margin and without injury to critical structures during energy delivery. Thus, the navigation imaging information plays a key role in safe and precise RFA planning and treatment of the target tumor [4]. In general, much surgical experience and skill are required to perform safe and accurate needle insertion with 2D navigation images, because the current 2D image-based navigation modality provides only limited information, without the 3D structural and spatial information of the tumor and surrounding tissues. Besides, displaying the 2D guidance images on a screen further increases the operation difficulty. As this setup lacks direct coordination of hands and vision, the precision depends heavily on the surgeon’s experience [5, 6].

Mixed reality (MR) can provide an on-patient see-through navigation modality and enhance the surgeon’s perception of the depth and spatial relationships of surrounding structures through the mixed reality-based fusion of 3D virtual objects with real objects [7]. In this regard, surgeons can directly observe the target region with the on-patient see-through 3D virtual tumor registered and overlaid on the real patient. This enables surgeons to remain cognizant of and engaged in the true surgical environment, and benefits their operation efficiency and precision. As an optical see-through head-mounted display (OST-HMD), the Microsoft HoloLens has been widely used in recent years in various fields, including medical education and surgical navigation. The HoloLens can project 3D personalized virtual data at a specified position on the patient, which supports the on-patient see-through modality for medical guidance. Compared with the traditional 2D image-based navigation modality, surgeons are able to comprehensively and intuitively recognize the characteristics of the liver and tumor, which helps them plan the needle path for liver punctures more efficiently and accurately.

Another challenge for safe and precise RFA liver therapy is respiratory liver motion, which is inevitable in clinical practice and has a great impact on liver tumor punctures during the RFA procedure. Doctors may encounter difficulties in locating the target tumor area, as the pre-operative image differs considerably from the anatomy during RFA therapy due to the organ movement induced by respiration. In clinical practice, it is also worth noting that there exist techniques to physically or physiologically ease the respiratory liver motion issue in a straightforward way, such as respiratory gating [8], anesthesia with jet ventilation [9], and active breathing control (breath holding) [10]. However, these methods impose either extra cost or psychological burden on the patient, and are not practical for every patient. For example, it is hard and unrealistic for patients to hold their breath for long in the breath-holding method [10]. Therefore, there is an urgent need for a new method to tackle this issue, allowing patients to breathe freely during the whole RFA procedure for liver tumors. In this regard, analyzing the patient-specific respiratory organ motion based on 4D liver CT images and compensating for the respiratory motion with a mathematical model would be a promising way to achieve time-effective, cost-effective, and accurate delivery for each patient.

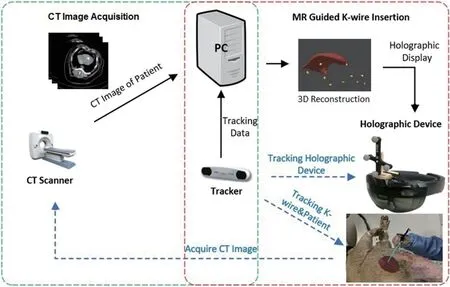

To enable the patient to breathe freely during the RFA procedure and achieve safe and accurate RFA operation, we developed a mixed reality-based navigation platform via the Microsoft HoloLens for RFA liver therapy with respiratory motion compensation, which provides a real-time on-patient see-through display, showing where the surgeon should insert the needle during the whole RFA procedure. By reconstructing the patient-specific organ motion using 4D-CT images, we adopt a statistical motion model to describe the respiratory motion and its variability in 4D for compensating the respiratory motion of liver tumors. In our platform, we propose a new calibration procedure to properly align the coordinate system of rendering with that of the tracker, and then automatically register the rendered 3D graphics of the liver and tumor with the tracked landmarks, liver, and tumor in the live pig. This assists surgeons in accurately approaching the target tumors, making the operation simpler, more efficient, and more accurate. An overview of our system is shown in Fig. 1.

2 Related work

Many researchers have explored image-based navigated ablation of tumors and evaluated the accuracy of 2D image navigation modalities. These modalities have evolved considerably over the past 20 years and are increasingly used to effectively treat small primary cancers of the liver and kidney, and image-navigated ablation is recommended by most guidelines as the best therapeutic choice for patients with early stage hepatocellular carcinoma [11]. To achieve accurate pre-procedural planning, intra-procedural targeting, and post-procedural assessment of the therapeutic success, the availability of precise and reliable imaging techniques is an essential premise [12, 13]. Among all 2D image navigation modalities, ultrasound (US) is the most widely used imaging technique for navigating percutaneous ablations, owing to its real-time visualization of needle insertion and monitoring of the procedure [14]. However, ultrasound images are not clear enough to distinguish the target area. Other 2D image navigation modalities, including computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET), can provide clearer navigation images [15], but cannot provide real-time navigation information for the intra-operative process in RFA therapy. Besides, Amalou and Wood [16] adopted another modality, electromagnetic tracking referenced to pre-operative CT imaging, which utilizes miniature sensors integrated with RFA equipment to navigate tools in real time. They successfully applied it during a lung tumor ablation with an accuracy of 3.9 mm.

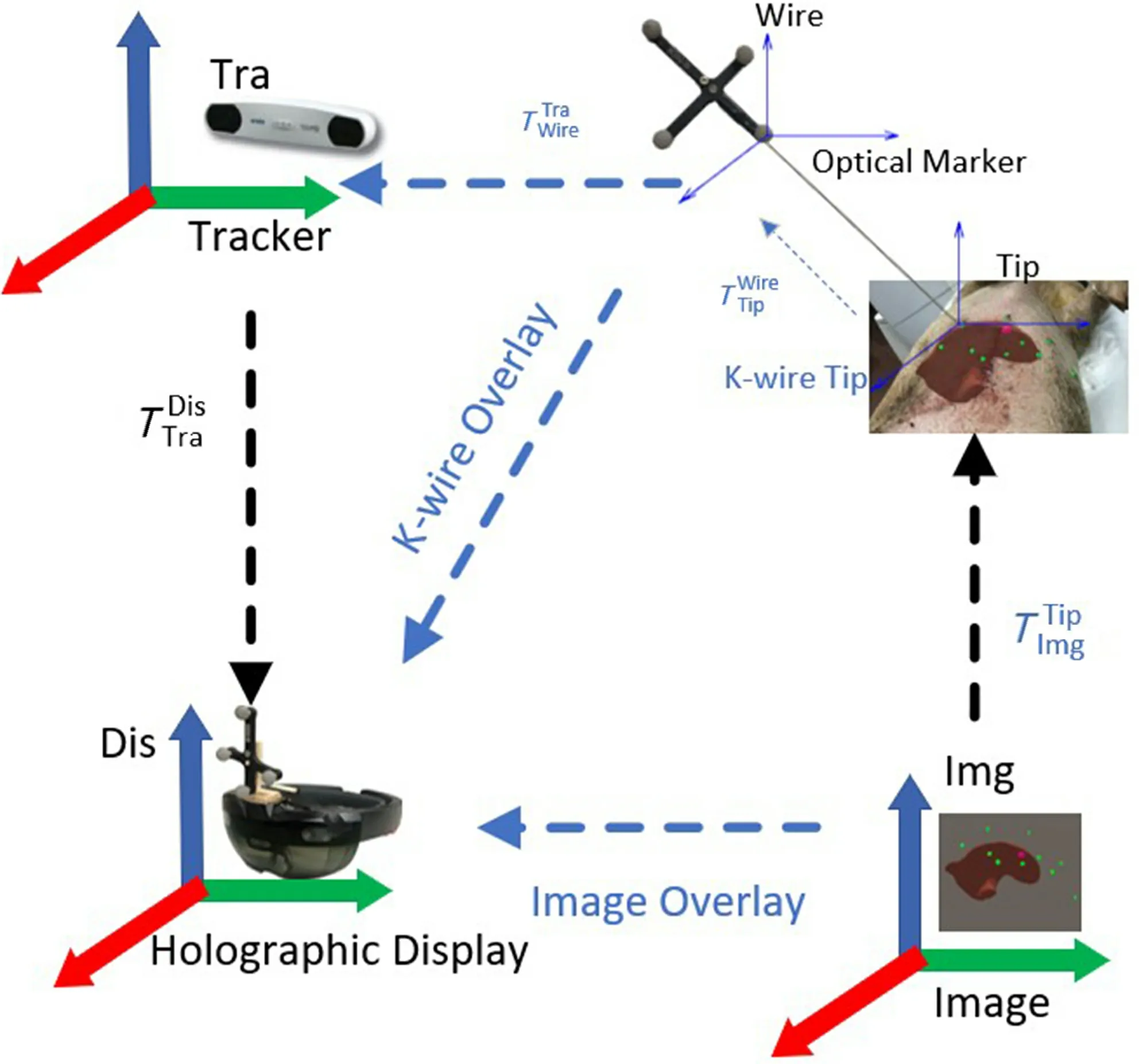

Fig. 1 Mixed reality-based needle insertion navigation.

To overcome the disadvantages of 2D image based navigation, mixed reality approaches have been proposed in recent years to guide the physician during the intervention. To display virtual models of anatomical targets as an overlay on the patient’s skin, Sauer et al. [17] used a head-mounted display (HMD) worn by the physician, providing mixed reality-based navigation for the surgery. In other cases, Khan et al. [18] projected the 3D virtual objects with a semi-transparent mirror, so that surgeons can manipulate the needle behind the mirror, which shows the overlaid 3D virtual objects floating inside or on top of the patient’s body, providing relative spatial information and mixed reality navigation for the surgeons. Ren et al. [19] realized semi-transparent overlays for overlapping ablation zones and surrounding essential structures, and they projected the current needle trajectory and the planned trajectory onto the different anatomical views, thus guiding the surgeons’ operation in a mixed reality environment. Chan and Heng [20] introduced a new visualization method, which adopts a volumetric beam to provide depth information of the target region for the surgeons, and they also offered information about the orientation and tilting by combining a set of halos.

To ease the negative impact of respiratory motion on the accuracy of liver punctures, many approaches have been proposed to tackle this issue in radiation therapy [21, 22]. Though numerous approaches for motion-adapted 4D treatment planning and 4D radiation have been reported, the clinical implementation of 4D guidance of tumor motion is currently still in its infancy. Moreover, most existing methods lack qualitative and quantitative analysis of respiratory motion-related effects. By introducing 4D-CT images of the respiratory liver and tumor, the variations in depth and frequency of breathing can be numerically reconstructed with a statistical model. Besides, simulation-based flexible and realistic models of human respiratory motion have the potential to deliver valuable insights into respiration-related effects in radiation therapy. Such a model offers the possibility to investigate motion-related effects for variable anatomies, for different motion patterns, and for changes in depth and frequency of breathing, and can finally contribute to efficient, safe, and accurate RFA therapy.

3 Methodology

3.1 Method overview

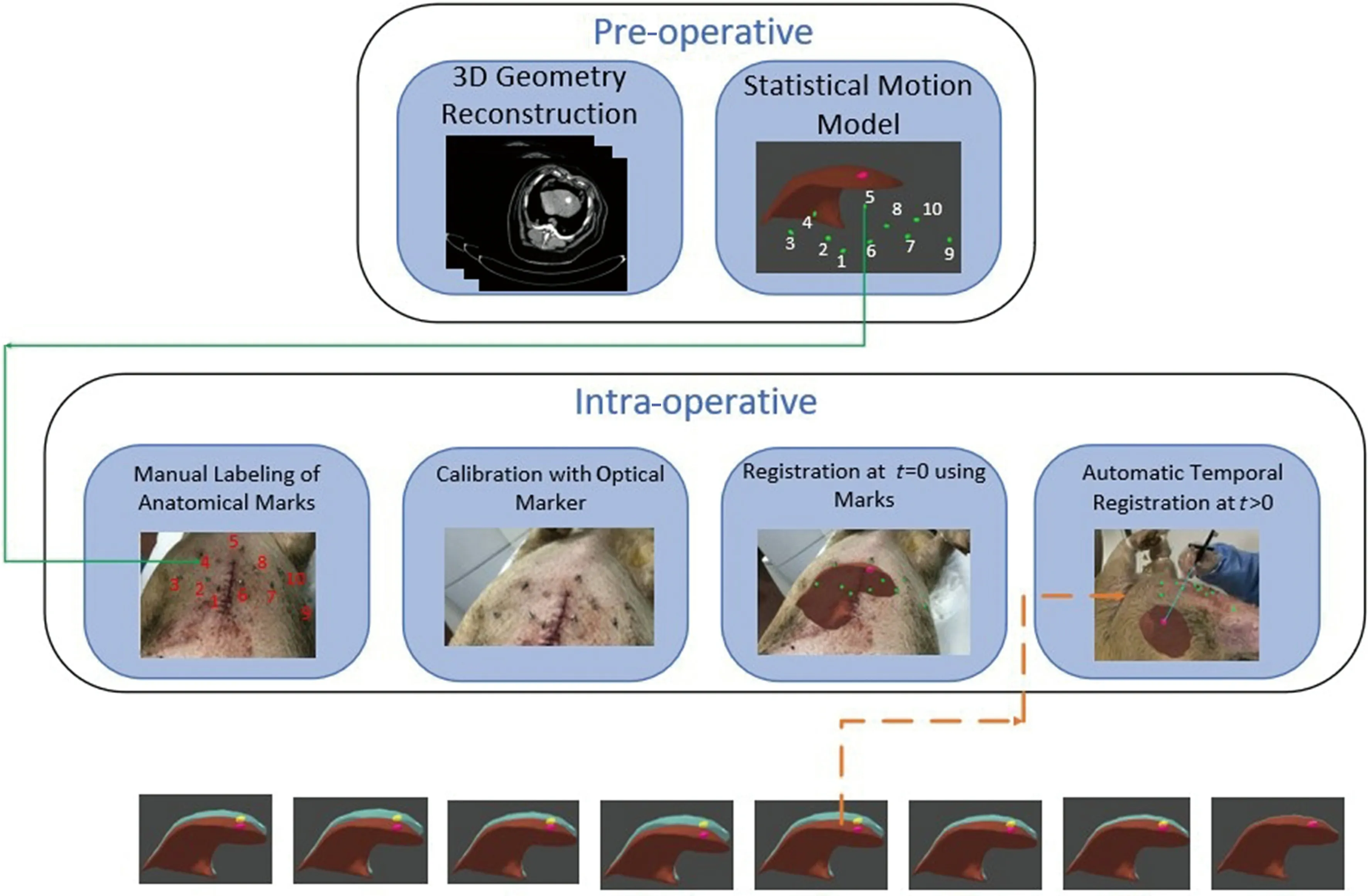

To achieve high precision RFA, we propose mixed reality-based navigation for respiratory liver tumor puncture to provide surgeons with virtual guidance from a personalized 3D anatomical model. A high degree of matching between the real patient and the virtual geometry model is a prerequisite for this goal. The matching requirements have two aspects. First, the virtual anatomical model must have the same shape as, and deform in the same way as, the anatomy of the real patient. Second, the virtual geometry should be displayed in alignment with the real patient during the operation. To fulfill these requirements, we design the system with the workflow illustrated in Fig. 2. In a preparation step, the 3D virtual liver structures are reconstructed from CT images and a statistical motion model is estimated for respiratory motion compensation with the assistance of landmarks. Then, during the surgery, the liver structure is updated and registered to the real patient in a timely manner with motion estimated from the positions of the landmarks.

3.2 Medical data acquisition and preprocessing

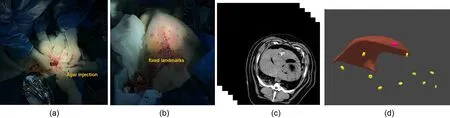

We employ a live pig to perform the needle insertion operation. The accuracy of needle insertion is greatly affected by the anatomic structures of the abdomen, which is the working area of the liver RFA procedure. Here, we adopt the Materialise Mimics software to manually segment the CT images of the abdomen, extracting different types of tissues and reconstructing the 3D geometric model of the patient-specific liver and tumor. In addition, 10 metal landmarks are placed on the skin near the ribs before CT scanning for further anatomical motion modeling.

3.3 Virtual-real spatial information visualization registration

Fig. 2 Statistical model based respiratory motion compensation.

During needle-based interventions, surgeons cannot observe the internal structure of the liver anatomy directly. Thus, landmark structures have to be remembered from the pre-interventional planning image to guess the correct insertion direction from limited information. This procedure depends heavily on personal experience and may lead to a significant miscalculation in needle placement and thus failed tumor ablation. To solve this problem, we propose a manual registration method for accurately overlaying the 3D virtual structures of the liver on the patient, as shown in Fig. 1. The NDI tracking system is adopted to acquire the transform of the virtual liver structure in the rendering space. Each object’s transformation matrix in the rendering can be decomposed into two components, namely the transformation matrix from the object’s local coordinate system to the local NDI coordinate system and the transformation matrix from the local NDI coordinate system to the view coordinate system of the display device. Finally, by registering virtual objects to real objects, we can render the virtual structure using the HoloLens to provide needle insertion guidance for surgeons.

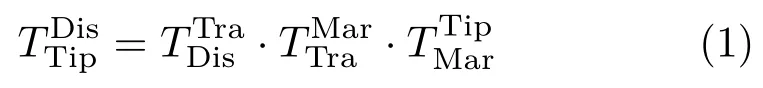

To acquire the transformation matrix from the NDI system to the HoloLens system, we fix a k-wire of the NDI system on the HoloLens and statically bind the HoloLens system to a fixed virtual coordinate system of the k-wire. We can then obtain the transformation matrix from the k-wire markers to the HoloLens. Meanwhile, we can acquire the transformation matrix from the k-wire to the space of the NDI tracker. These two matrices together form the transformation matrix from the NDI coordinate system to the view coordinate system in rendering space.

After calibrating the coordinates between the Microsoft HoloLens and the NDI tracking system, we can easily acquire the position of the landmarks via the tip of the k-wire. To accurately overlay the 3D virtual liver structure on the real object, we need to ensure a precise transformation between the HoloLens, the markers, and the NDI tracking system.

As shown in Fig. 3, the position of the markers (optical tracking spheres) can be acquired with the NDI tracking system. As the position of the k-wire’s tip is known relative to the k-wire, by moving the tip of the k-wire to the landmarks on the patient, we can acquire the landmarks’ positions via the optical markers of the k-wire. The transformation matrix between the Microsoft HoloLens and the optical trackers then follows from these measurements together with the calibration described above.

The 3D virtual liver structures reconstructed from the CT images can then be overlaid on the real object by applying the resulting transformation matrix.
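The chaining of coordinate systems described in this section can be illustrated with homogeneous 4×4 matrices. The following is a minimal sketch, not the authors' implementation: the function names `make_transform`, `ndi_to_hololens`, and `apply_transform` are hypothetical, and the convention that each matrix maps points from the first-named frame into the second-named frame is an assumption.

```python
import numpy as np

def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T

def ndi_to_hololens(T_kwire_to_hololens, T_kwire_to_ndi):
    """Compose the NDI -> HoloLens transform from two k-wire transforms.

    Assumed convention: p_holo = T_kwire_to_hololens @ p_kwire and
    p_ndi = T_kwire_to_ndi @ p_kwire, hence
    p_holo = T_kwire_to_hololens @ inv(T_kwire_to_ndi) @ p_ndi.
    """
    return T_kwire_to_hololens @ np.linalg.inv(T_kwire_to_ndi)

def apply_transform(T, points):
    """Apply a 4x4 transform to an (N, 3) array of points."""
    points = np.asarray(points, dtype=float)
    homogeneous = np.hstack([points, np.ones((len(points), 1))])
    return (T @ homogeneous.T).T[:, :3]
```

With such a composed matrix, any position measured by the tracker (for example, a landmark touched with the k-wire tip) could be mapped into the display frame with `apply_transform`, which is the step needed before rendering the virtual liver over the patient.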

Fig. 3 Registration of 3D virtual structure and real object.

3.4 Statistical model based respiratory motion compensation

To overcome the respiratory liver motion during needle insertion, we reconstruct the patient-specific organ motion via a statistical motion model that describes the breathing motion and its variability in 4D images, in order to compensate for the respiratory motion of liver tumors.

3.4.1 4D-CT based statistical modeling

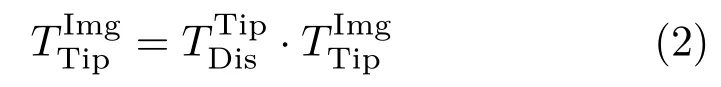

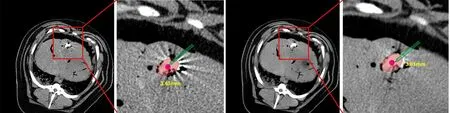

The first step in statistical model based respiratory motion compensation is to acquire the ground truth of the respiratory motion via 4D-CT imaging, which records the deformation or displacement of the internal organ under free-breathing respiratory motion. Figure 4 illustrates some typical slices of the 4D-CT images during respiratory motion and the reconstructed patient-specific 3D anatomy of the liver and tumor at the corresponding time.

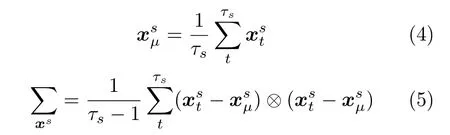

In essence, we need to build the statistical model by analyzing the dynamic shape deformations of the target liver during the short term of the respiratory motion. In this work, we adopt the 4D-CT based statistical motion model in Ref. [23] to estimate the respiratory motion of the liver. The model assumes that the shape of the 3D reconstructed liver anatomy in the exhalation state obeys a Gaussian distribution and deforms with respect to previously observed shapes.

Fig. 4 Patient-specific respiratory motion reconstruction. The red region shows the shape of the liver at the full inhalation stage, while the light blue region shows the shape of the liver at different respiration stages.

Besides modeling the shape change at the exhalation state, we also need to consider the shape changes over time. In the 4D-CT image acquisition, we reconstruct the 3D liver anatomy several times, and assume the shape changes to be a mixture of Gaussian distributions.

The mixture $p(x)$ is characterized by its first two moments, namely its mean and covariance.
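For reference, the first two moments (mean and covariance) of a generic Gaussian mixture have a standard closed form. The notation below is an assumption for illustration and may differ from that of Ref. [23]: $K$ components with weights $\pi_k$, means $\mu_k$, and covariances $\Sigma_k$.

```latex
\begin{align}
p(x) &= \sum_{k=1}^{K} \pi_k \, \mathcal{N}(x \mid \mu_k, \Sigma_k),
  \qquad \sum_{k=1}^{K} \pi_k = 1, \\
\mu &= \mathbb{E}[x] = \sum_{k=1}^{K} \pi_k \, \mu_k, \\
\Sigma &= \operatorname{Cov}[x]
  = \sum_{k=1}^{K} \pi_k \left( \Sigma_k + (\mu_k - \mu)(\mu_k - \mu)^{\mathsf{T}} \right).
\end{align}
```

The covariance splits into a within-component part ($\Sigma_k$) and a between-component part (the spread of the component means around $\mu$). In the setting of this section, the between-component part would correspond to shape variation across the reconstructed time steps.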

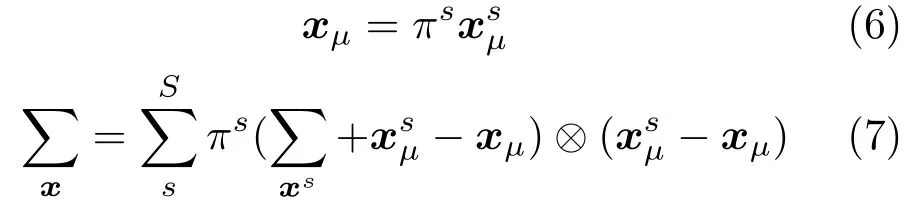

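Finally, the shape changes are parameterized by a linear combination of basis vectors $\varphi_i$, where $\varphi_i$ are $N$ orthogonal basis vectors. By combining the above shape and motion modeling, the liver shape at a specific time can be represented as the combination of a shape model term and a motion model term.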

3.4.2 Liver correspondence establishment

Before applying statistical model-based liver shape analysis in the intra-operative phase, we need to establish the correspondence between all shapes reconstructed from the pre-operative 4D-CT images during the respiratory motion by defining a common topology, and then apply the intra-operative data to compute the correct shapes for all time steps via non-rigid registration to navigate the surgery.

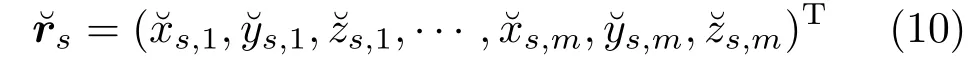

In the first step, we aim to establish the liver mechanical correspondence for each shape during the respiratory motion. Here we select the shape at full expiration as the reference shape. Then, we align all the surface points of the reconstructed 3D shape using rigid registration at each time step during respiratory motion. The translation matrix $T_s$ and rotation matrix $R_s$ for each shape $s$ are computed by this rigid registration, with $\mu_0$ denoting the mean of all aligned points.
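A common way to compute such a rigid alignment between corresponding point sets is the least-squares (Kabsch) solution via singular value decomposition. The sketch below is a generic instance of that technique, offered under the assumption that point correspondences are already known; it is not claimed to be the exact formula used in the paper, and the function name `rigid_align` is hypothetical.

```python
import numpy as np

def rigid_align(source, target):
    """Least-squares rigid alignment of corresponding (N, 3) point sets.

    Returns a rotation R (3x3) and translation t (3,) that minimize
    sum_i || R @ source[i] + t - target[i] ||^2  (Kabsch algorithm).
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    mu_s = source.mean(axis=0)           # centroid of the source points
    mu_t = target.mean(axis=0)           # centroid of the target points
    H = (source - mu_s).T @ (target - mu_t)   # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection: force det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_t - R @ mu_s
    return R, t
```

Applying `source @ R.T + t` then brings a shape into the frame of the reference shape, after which the remaining differences are purely non-rigid and can be handled by the subsequent non-rigid registration.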

After aligning the shapes via rigid registration, we need to perform non-rigid registration to establish the correspondence among individual shapes during the respiratory motion. To reduce the bias of mean shape to the reference shape, we adopt an iterative group-wise registration [23] of the shape to establish the correspondence.

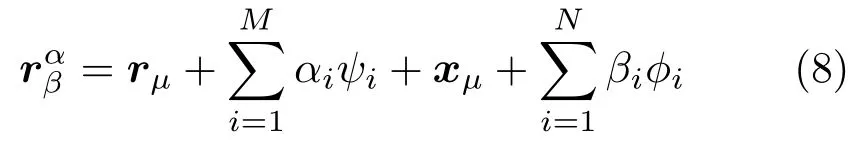

The above liver correspondence is established for the shape $s$ and time $t$; it is a temporal correspondence and must follow the motion sequence for each shape during the respiratory motion. For all individual time steps, we can register the reference shape using the deformation field obtained from non-rigid registration. Thus, the position of each surface point of each reconstructed shape can be obtained over time. The final liver correspondence can then be represented by a registered shape vector.

3.4.3 Statistical motion modeling

After establishing the liver correspondence for all time steps, we can replace the shape model term with a fixed reference vector.

By applying the offset between each sample shape and the reference shape, the motion model can be computed from these offsets and their arithmetic mean.

The motion of the liver shape can then be represented as a data matrix by gathering the mean-free data of all liver shapes during the respiratory motion.
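The construction of a mean-free data matrix and an orthogonal basis from it can be sketched with principal component analysis. This is an illustrative sketch under stated assumptions, not the model of Ref. [23]: shapes are assumed to be flattened into vectors that are already in correspondence, and the names `build_motion_model` and `synthesize` are hypothetical.

```python
import numpy as np

def build_motion_model(shapes, reference):
    """Build a linear motion model from corresponding shape vectors.

    shapes:    (T, D) array, one flattened shape vector per time step.
    reference: (D,) reference shape vector (e.g., full expiration).
    Returns the mean offset (D,), an orthonormal basis (K, D) with one
    basis vector per row, and the variance captured by each basis vector.
    """
    shapes = np.asarray(shapes, dtype=float)
    offsets = shapes - reference            # offset of each sample from the reference
    mean_offset = offsets.mean(axis=0)      # arithmetic mean of the offsets
    X = offsets - mean_offset               # mean-free data matrix
    _, S, Vt = np.linalg.svd(X, full_matrices=False)
    variances = S ** 2 / max(len(shapes) - 1, 1)
    return mean_offset, Vt, variances

def synthesize(reference, mean_offset, basis, coeffs):
    """Reconstruct a shape from model coefficients (uses the first len(coeffs) modes)."""
    coeffs = np.asarray(coeffs, dtype=float)
    return reference + mean_offset + coeffs @ basis[: len(coeffs)]
```

Projecting a mean-free offset onto the rows of `basis` gives its coefficients, and `synthesize` inverts that projection, so every training shape can be reproduced from a short coefficient vector.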

To compensate for the respiratory motion, we need to acquire each landmark’s position at different times in the 4D-CT images and use these positions to model intermediate positions during the movement of the liver. As shown in Fig. 2, we have labeled each landmark and correlated it to the corresponding landmark on the 3D virtual model. By acquiring the markers’ positions with the NDI tracking system, we can trigger synchronization and then predict the current time and shape of the liver via the estimated statistical model with respiratory motion compensation. By displaying the real-time position of the target tumor in the mixed reality environment, our system enables “see-through” navigation for surgeons to accomplish the needle insertion.
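One plausible way to predict the full shape from tracked landmark positions is to fit the coefficients of a linear motion model to the observed landmark coordinates by regularized least squares. The sketch below rests on several assumptions that the text does not confirm: the landmark coordinates are entries of the same shape vector as the liver surface, the model is linear with an orthonormal basis, and the names `estimate_coefficients`, `predict_shape`, and the `ridge` parameter are hypothetical.

```python
import numpy as np

def estimate_coefficients(observed, landmark_idx, reference, mean_offset, basis, ridge=1e-6):
    """Fit model coefficients to observed landmark coordinates.

    observed:     (L,) tracked landmark coordinates (flattened).
    landmark_idx: (L,) indices of those coordinates in the shape vector.
    basis:        (K, D) basis vectors, one per row.
    Solves min_c || A c - b ||^2 + ridge * ||c||^2 in closed form.
    """
    A = basis[:, landmark_idx].T                       # (L, K) basis restricted to landmarks
    b = observed - reference[landmark_idx] - mean_offset[landmark_idx]
    K = A.shape[1]
    return np.linalg.solve(A.T @ A + ridge * np.eye(K), A.T @ b)

def predict_shape(coeffs, reference, mean_offset, basis):
    """Evaluate the full shape vector (landmarks, liver, tumor) for given coefficients."""
    return reference + mean_offset + coeffs @ basis
```

The small ridge term keeps the system solvable when only a few landmarks are visible to the tracker; the predicted shape vector then also contains the current tumor position that would be displayed to the surgeon.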

4 Results

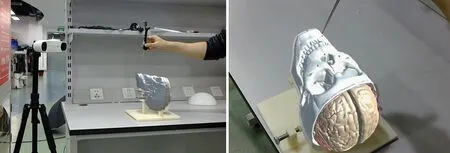

In the experiment, we conduct an animal experiment comparing mixed reality-navigated needle insertion with traditional pre-operative CT imaging-navigated freehand needle insertion for liver RFA. The center of the tumor is set as the target position for needle insertion. The animal experiment setting is shown in Fig. 5. All experiments are conducted on a Microsoft HoloLens, an NDI Polaris, and a notebook equipped with an Intel(R) i7-4702MQ CPU, 8 GB RAM, and an NVIDIA GeForce GTX750M.

4.1 Registration accuracy validation

Fig. 5 Animal experiment setting and 3D reconstruction results. (a) Tumor implantation using agar. (b) Metal landmark placement. (c) CT imaging. (d) 3D reconstruction of liver, tumor, and 10 metal landmarks.

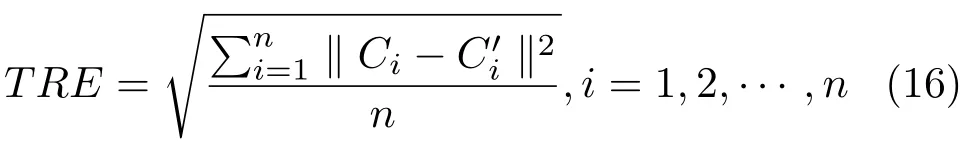

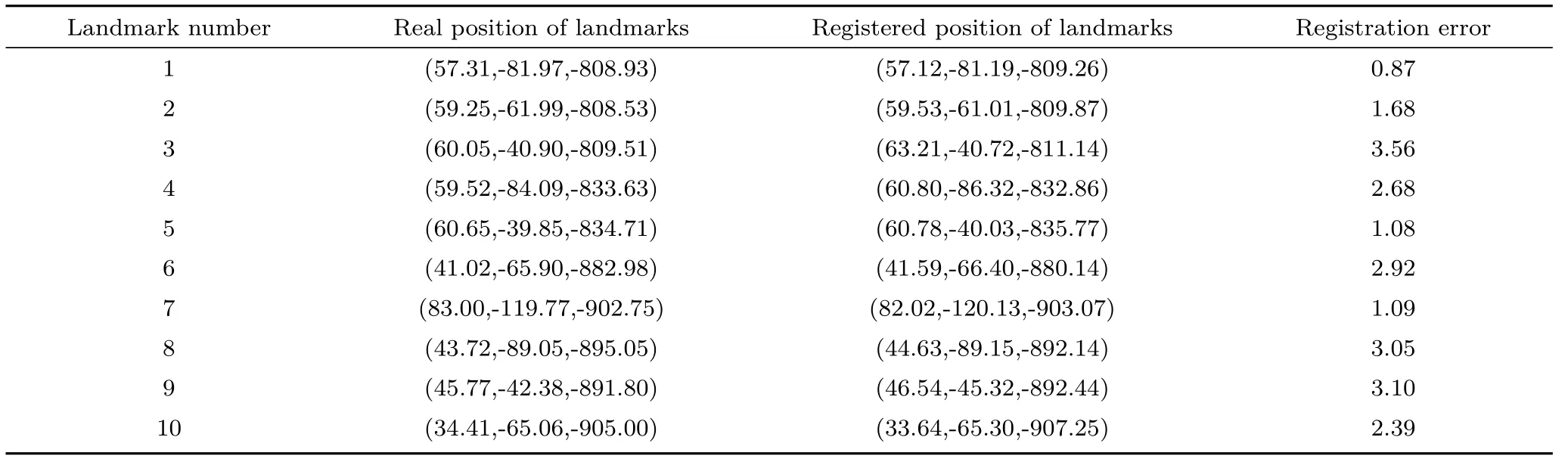

In this section, we design a 3D-printed template and skull to validate the registration accuracy and verify the accuracy of our mixed reality-based navigation. In preparation, we build a 3D-printed skull with 10 landmarks placed at standard distances with high precision, and correspondingly label the 10 landmarks on the virtual skull model. In the experiments, we first put the 3D-printed skull in the tracking area of the 3D tracking and positioning system. Based on the real and virtual scene registration by the HoloLens, the positions of the landmarks on the 3D-printed skull and the virtual skull can be obtained. Here we check whether the virtual skull model is aligned with the 3D-printed skull, and calculate the relative position of the landmarks both by the tracking system and by the virtual markers on the virtual skull. The distance between these two positions can be used to validate the accuracy of our system. Supposing the positions of the markers on the 3D-printed skull and template are $C_1, C_2, \cdots, C_n$, the registration error is computed from the distances between these positions and the positions of the same markers calculated with our method.

The accuracy validation experiment is shown in Fig. 6; the real positions and the registered positions of all feature points are shown in Table 1. The average target registration error (TRE) is 2.24 mm.
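A registration error of this kind is commonly summarized as the mean Euclidean distance between corresponding reference and measured points. The sketch below shows that computation; treating the reported error as a plain mean of per-landmark distances is an assumption, the function name `registration_errors` is hypothetical, and the coordinates in the usage example are made up for illustration rather than taken from Table 1.

```python
import numpy as np

def registration_errors(reference_points, measured_points):
    """Per-landmark Euclidean distances and their mean.

    reference_points: (N, 3) ground-truth landmark positions, in mm.
    measured_points:  (N, 3) positions of the same landmarks after registration, in mm.
    """
    reference = np.asarray(reference_points, dtype=float)
    measured = np.asarray(measured_points, dtype=float)
    distances = np.linalg.norm(measured - reference, axis=1)
    return distances, distances.mean()

# Illustrative (made-up) coordinates: each measured point is offset by a 3-4-0 mm vector.
reference_demo = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
measured_demo = reference_demo + np.array([3.0, 4.0, 0.0])
per_point, mean_error = registration_errors(reference_demo, measured_demo)
```

Reporting the per-landmark distances alongside the mean makes it easier to see whether the error is uniform or dominated by a few landmarks.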

4.2 Needle insertion comparison

In this section, we conduct an animal experiment of needle insertion. Based on real needle insertion for liver RFA procedure, we measure and compare the needle insertion accuracy using traditional CT-guided freehand operation and our mixed reality-navigated operation.

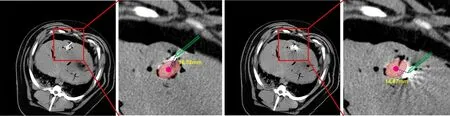

Figure 7 shows two traditional freehand needle insertions navigated by CT images. The surgeons need to observe and measure the position of the liver tumor in the pre-operative CT image, and then perform freehand needle insertion on the animal. The results in Fig. 7 show needle insertion accuracies of 16.32 and 14.87 mm, respectively. The CT image navigation can only provide 2D images without visual cues of the internal structure of the liver and tumors.

Fig. 6 Automatic registration accuracy validation; red points are landmarks.

Table 1 Performance statistics of automatic registration (Unit: mm)

Fig. 7 Results of traditional CT-navigated needle insertion.

Figure 8 illustrates the mixed reality-based navigation for needle insertion for liver RFA. By reconstructing the 3D model of the animal abdomen and registering it to the real animal, we can clearly observe the internal structure of the animal abdomen, including the target tumors, which greatly facilitates the needle insertion operation and reduces its difficulty. Also, surgeons can insert the needle via the “see-through” display, which lets them directly coordinate their vision and operation, thus raising the needle insertion precision. Since the tip of the needle is invisible once it is inserted into the liver, we display the tip of the k-wire in the holographic environment to clearly show the tip’s position during needle insertion and provide accurate guidance for the surgeons. Figure 9 shows the accuracy results of two mixed reality-guided needle insertions, which are 3.43 and 3.61 mm. With our mixed reality guidance, the surgeon can precisely insert the needle into the liver tumor.

Fig. 8 Mixed reality-navigated needle insertion.

Besides, for freehand CT-guided insertion, the surgeon takes 25 min to finish the pre-operative CT scanning, tumor measurement, and needle insertion. Our mixed reality method achieves fast registration, and the surgeon takes only 5 min to finish the registration and needle insertion. This result demonstrates the effectiveness of our mixed reality-guided needle insertion.

5 Conclusions

Fig. 9 Results of our mixed reality-based needle insertion navigation.

In this paper, we propose a novel mixed reality-based surgical navigation modality to improve on the traditional image-based navigation modality for respiratory liver tumor punctures. The proposed mixed reality-based navigation system enables us to visualize a 3D pre-operative anatomical model on the intra-operative patient, thus providing direct visual navigation information and depth perception for the surgeons. Besides, with the aid of statistical motion model based respiratory motion compensation, surgeons can accurately insert the needle into the tumor, reducing the error induced by respiratory liver motion. We perform an in vivo animal test to compare mixed reality-based navigation with traditional CT imaging based navigation for needle insertion. The experimental results show the advantages of mixed reality guided needle insertion for liver RFA surgery, which can assist surgeons with simpler, more efficient, and more precise operation.

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Nos. U1813204 and 61802385), in part by HK RGC TRS project T42-409/18-R, in part by HK RGC project CUHK14225616, in part by CUHK T Stone Robotics Institute, CUHK, and in part by the Science and Technology Plan Project of Guangzhou (No. 201704020141). The authors would like to thank Yanfang Zhang and Jianxi Guo (Shenzhen People’s Hospital) for providing the medical support, and Rui Zheng for the useful discussions. Special thanks to the reviewers and editors of Computational Visual Media.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made.

The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Other papers from this open access journal are available free of charge from http://www.springer.com/journal/41095. To submit a manuscript, please go to https://www.editorialmanager.com/cvmj.
