Prediction of Aircraft Engine Health Condition Parameters Based on Ensemble ELM

2013-09-16

Da Lei, Shi-Sheng Zhong

(School of Mechatronics Engineering, Harbin Institute of Technology, Harbin 150001, China)

1 Introduction

Predicting the parameters that indicate health condition makes it possible to monitor and understand the performance trend of an aircraft engine, which in turn supports maintenance decision making and enables condition-based maintenance (CBM). Forecasting the trend of aircraft engine health condition parameters is therefore critical.

Because of their sound theoretical properties, time series analysis models have been used to predict aircraft engine health parameters [1-2]. Nevertheless, the most commonly used model, ARMA, is essentially linear and can hardly model the nonlinear, time-varying behavior of health condition parameters precisely. The artificial neural network (ANN), with its good approximation capability [3], is an effective nonlinear mapping tool and is widely used to predict aircraft engine health parameters [4-5]. However, an ANN requires synchronous and instantaneous inputs. To solve this problem, the process neural network (PNN), which takes continuous functions as inputs and accumulates them over time through integration, was presented in Ref. [6]. PNN relaxes the input restriction of ANN and transforms the time series prediction problem into a functional approximation problem, which enhances its generalization capability. PNN can therefore achieve higher precision in predicting aircraft engine health parameters [7-8]. However, like ANN, PNN needs a tedious training process because its weights have to be tuned iteratively. To address this problem, Huang [9] proposed the extreme learning machine (ELM), whose output weights can be calculated directly. Moreover, ELM inherently has good generalization capability. With these advantages, ELM is better suited to predicting aircraft engine health parameters.

Nevertheless, because the health parameters of an aircraft engine vary in a complex way, a complex tuning procedure is always required to find the optimal parameters when a single model is used to predict such time series, which makes practical application more difficult. To address this problem, an aircraft engine health parameter prediction approach based on ensemble ELM (EELM) is proposed in this paper. The proposed approach uses AdaBoost.RT [10] as the basic framework and employs ELM as the weak learner to establish the ensemble learning model. The ensemble model is then used to predict a real aircraft engine health condition parameter time series, followed by comparison experiments.

2 Extreme Learning Machine

ELM is essentially a single-hidden-layer feedforward neural network. The difference is that ELM transforms the weight tuning problem into the problem of solving a linear system. The weights connecting the inputs and the hidden neurons can be assigned randomly, and the output weights are then calculated directly by computing the Moore-Penrose inverse of a matrix.

For the time series prediction problem, consider N training samples $\{(x_j,t_j)\}_{j=1}^{N}$, where $x_j=[x_{j1},x_{j2},\ldots,x_{jn}]^{T}\in R^{n}$ is the j-th input vector with dimension n and $t_j\in R$ is the corresponding target. The solution is an estimator learned from these N training samples that generates the expected outputs for given inputs. Suppose that an ELM model with L hidden neurons is used as the estimator; its output can then be expressed as:

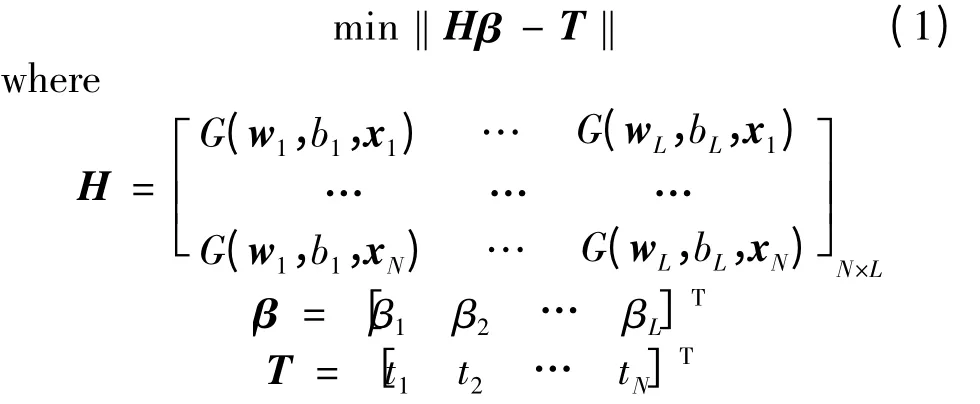

where g(·) is the activation function of the hidden neurons; $w_i=[w_{i1},w_{i2},\ldots,w_{in}]^{T}$ is the weight vector connecting the i-th hidden neuron and the inputs; $b_i$ is the bias of the i-th hidden neuron; and $\beta_i$ is the weight connecting the i-th hidden neuron and the output. The training target of ELM is to minimize the error between the outputs and the targets, that is, $\min_{\beta}\|H\beta-T\|$, which corresponds to the matrix form:

$$H\beta=T \qquad (1)$$

where $H$ is the $N\times L$ hidden-layer output matrix with entries $H_{ji}=g(w_i\cdot x_j+b_i)$, $\beta=[\beta_1,\ldots,\beta_L]^{T}$ and $T=[t_1,\ldots,t_N]^{T}$.

Thus, the training process can be seen as solving the linear system in Eq.(1). Moreover, the hidden-layer parameters $w_i$ and $b_i$ can be assigned randomly, so $H$ is fixed once they are drawn and the only unknown parameter of ELM is the output weight vector $\beta$. The minimal norm least-squares solution of this linear system is

$$\hat{\beta}=H^{\dagger}T$$

where $H^{\dagger}$ is the Moore-Penrose inverse of matrix $H$. The minimal norm solution ensures that ELM attains the smallest output weights while reaching the minimal training error, which gives it good generalization ability.
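The training procedure described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the sigmoid activation, the uniform range of the random hidden-layer parameters, the function names `train_elm` and `predict_elm`, and the synthetic data are all assumptions made for illustration.

```python
import numpy as np

def train_elm(X, t, n_hidden, rng):
    """Fit a single-hidden-layer ELM and return (W, b, beta)."""
    n_features = X.shape[1]
    # Hidden-layer parameters are drawn at random and never tuned.
    W = rng.uniform(-1.0, 1.0, size=(n_features, n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    # Hidden-layer output matrix H (N x L), assuming a sigmoid activation g.
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    # Minimal norm least-squares solution: beta = pinv(H) @ T.
    beta = np.linalg.pinv(H) @ t
    return W, b, beta

def predict_elm(model, X):
    """Evaluate a trained ELM on new inputs."""
    W, b, beta = model
    H = 1.0 / (1.0 + np.exp(-(X @ W + b)))
    return H @ beta

# Synthetic data, for illustration only.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 6))
t = np.sin(X.sum(axis=1))
model = train_elm(X, t, n_hidden=20, rng=rng)
train_mse = np.mean((predict_elm(model, X) - t) ** 2)
```

Only one pseudoinverse is computed, which is why no iterative weight tuning is needed.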

3 Ensemble ELM Prediction Model

3.1 Modified AdaBoost.RT Algorithm

AdaBoost.RT is an ensemble learning algorithm for regression problems based on AdaBoost [11]. By introducing a threshold $\varphi$, AdaBoost.RT transforms the regression problem into a classification problem. The training samples are divided into two classes by comparing their training errors with $\varphi$. Samples whose training errors are larger than $\varphi$ receive larger weights, so the learning machine focuses on these samples in the subsequent training process. Because it is intuitive and simple, AdaBoost.RT is widely used [12-14], and it is adopted as the basic framework of the ensemble ELM model in this paper.

In practical applications of AdaBoost.RT, the threshold $\varphi$ must be given first. However, the prediction performance is sensitive to $\varphi$: a value that is either too large or too small leads to poor results, so choosing a suitable $\varphi$ is always difficult. Therefore, a method that adjusts $\varphi$ according to the training errors is put forward in this paper. In this method, $\varphi$ is first given an initial value and is then adjusted according to

where $\varepsilon_t$ and $\varepsilon_{t-1}$ are the training errors of two adjacent iterations. If the training error is increasing, $\varphi$ is increased so that more samples count as correctly predicted and the following learning machine focuses on the hardest samples. If the training error is decreasing, $\varphi$ is decreased so that a sufficient number of training samples is still emphasized.

The basic procedure of the modified AdaBoost.RT is as follows:

Step 1 Determine the inputs. Choose N training samples $\{(x_j,t_j)\}_{j=1}^{N}$, where $t_j\in R$ is the output corresponding to the input $x_j$; give a set of weak learners; set the maximal number of iterations T and the initial threshold $\varphi$.

Step 2 Initialization. Set the iteration counter t=1, initialize the sample weights as $D_t(i)=1/N$, $i=1,2,\ldots,N$, and let the initial training error $\varepsilon_t=0$.

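Step 3 Iteration. Generate a training set by resampling from the initial sample set according to the distribution $D_t$, then train a weak learner $f_t$ and calculate the training error

$$\varepsilon_t=\sum_{i:\,|(f_t(x_i)-t_i)/t_i|>\varphi}D_t(i)$$

Let $\beta_t=\varepsilon_t^{n}$, where n is the power coefficient of AdaBoost.RT [10], then update $D_t$ according to

$$D_{t+1}(i)=\frac{D_t(i)}{Z_t}\times\begin{cases}\beta_t, & |(f_t(x_i)-t_i)/t_i|\le\varphi\\ 1, & \text{otherwise}\end{cases}$$

where $Z_t$ is the normalization factor that ensures $D_{t+1}$ is a distribution. Then adjust $\varphi$ according to Eq.(2). Let t=t+1; if t>T, go to Step 4, otherwise repeat Step 3.

Step 4 Output. Output the final ensemble, which in AdaBoost.RT [10] is the weighted average of the weak learners:

$$f_{fin}(x)=\frac{\sum_{t}\log(1/\beta_t)\,f_t(x)}{\sum_{t}\log(1/\beta_t)}$$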
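The four steps above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' code. The weight update and the final weighted average follow the standard AdaBoost.RT of Ref. [10] with power coefficient n. The threshold update used here, which scales $\varphi$ by $1+(\varepsilon_t-\varepsilon_{t-1})$, is a hypothetical rule chosen only to reproduce the described direction of adjustment; it is not the paper's threshold update equation. The weak learner is passed in through the hypothetical callables `fit` and `predict`, and a plain linear least-squares learner stands in for ELM to keep the example short.

```python
import numpy as np

def adaboost_rt(X, t, fit, predict, T=10, phi=0.2, n=2, seed=0):
    """AdaBoost.RT-style boosting with an adaptive threshold (sketch)."""
    rng = np.random.default_rng(seed)
    N = len(t)
    D = np.full(N, 1.0 / N)              # Step 2: uniform sample weights
    models, log_inv_beta = [], []
    prev_eps = None
    for _ in range(T):                   # Step 3: boosting iterations
        idx = rng.choice(N, size=N, replace=True, p=D)
        model = fit(X[idx], t[idx])
        # Absolute relative error on the full training set (targets nonzero).
        are = np.abs((predict(model, X) - t) / t)
        wrong = are > phi
        # Clip so that beta stays strictly inside (0, 1).
        eps = float(np.clip(D[wrong].sum(), 1e-10, 1.0 - 1e-10))
        beta = eps ** n
        # Down-weight well-predicted samples, then renormalize (Z_t).
        D = D * np.where(wrong, 1.0, beta)
        D = D / D.sum()
        models.append(model)
        log_inv_beta.append(np.log(1.0 / beta))
        # ASSUMED rule: raise phi when the error grew, lower it when it fell.
        if prev_eps is not None:
            phi = phi * (1.0 + (eps - prev_eps))
        prev_eps = eps
    weights = np.array(log_inv_beta) / np.sum(log_inv_beta)

    def ensemble(X_new):                 # Step 4: weighted average output
        preds = np.stack([predict(m, X_new) for m in models])
        return weights @ preds
    return ensemble

# Stand-in weak learner: linear least squares with an intercept.
def fit_linear(X, t):
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, t, rcond=None)
    return coef

def predict_linear(coef, X):
    return np.column_stack([X, np.ones(len(X))]) @ coef

# Synthetic data with strictly positive targets, for illustration only.
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(120, 3))
t = 5.0 + X @ np.array([1.0, -2.0, 0.5]) + 0.05 * rng.standard_normal(120)
ensemble = adaboost_rt(X, t, fit_linear, predict_linear, T=5, phi=0.01)
pred = ensemble(X)
```

Because the error criterion is relative, the targets must be nonzero, which is one reason the threshold choice interacts with the scale of the data.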

3.2 Ensemble ELM Model

The modified AdaBoost.RT algorithm in Section 3.1 is used as the basic framework to establish an ensemble learning model with ELM as the weak learner. For an ensemble learning algorithm, the diversity of the individual weak learners is critical to the final results [15]. Thus, a set of ELMs with different structures is used as weak learners. Each ELM in the set has a different number of hidden-layer neurons, which increases the diversity. In addition, the input weights are assigned randomly, which also contributes to the diversity of the weak learners. The proposed ensemble ELM model is trained according to the procedure in Section 3.1.

Tian [12] proposed an ensemble ELM model using AdaBoost.RT as the basic framework to predict the temperature of molten steel in a ladle furnace. However, the model proposed in this paper differs from Tian's model in two respects:

1) Tian's model employs a set of ELMs with the same number of hidden neurons, and just one weak learner is trained in each iteration. In this paper, a set of ELMs with different numbers of hidden neurons is used as weak learners. Thus, a set of weak learners is trained in each iteration, and the one with the best performance is accepted as the current weak learner.

2) To adjust the threshold $\varphi$ adaptively, Tian's model introduces a new control parameter. In this paper, $\varphi$ is adjusted adaptively according to Eq.(2), so no additional parameter is necessary.
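The first difference, training several candidate ELMs in each iteration and keeping the best one, can be sketched as below. The text does not state how "best performance" is measured, so this sketch assumes the weighted error rate $\varepsilon_t$ is the selection criterion and that all candidates share one resampled training set. The function names `fit_elm` and `best_weak_learner`, the sigmoid activation and the synthetic data are illustrative assumptions.

```python
import numpy as np

def hidden(X, W, b):
    """Sigmoid hidden-layer output matrix (activation is an assumption)."""
    return 1.0 / (1.0 + np.exp(-(X @ W + b)))

def fit_elm(X, t, n_hidden, rng):
    """Random hidden layer plus pseudoinverse output weights."""
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    beta = np.linalg.pinv(hidden(X, W, b)) @ t
    return W, b, beta

def best_weak_learner(X, t, D, phi, hidden_sizes, rng):
    """Train one ELM per candidate size; keep the lowest weighted error rate."""
    # One resampled training set, drawn according to the distribution D.
    idx = rng.choice(len(t), size=len(t), replace=True, p=D)
    best = None
    for n_hidden in hidden_sizes:
        W, b, beta = fit_elm(X[idx], t[idx], n_hidden, rng)
        # Absolute relative error on the full training set.
        are = np.abs((hidden(X, W, b) @ beta - t) / t)
        eps = float(D[are > phi].sum())
        if best is None or eps < best[0]:
            best = (eps, n_hidden, (W, b, beta))
    return best

# Synthetic data with strictly positive targets, for illustration only.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(150, 6))
t = 3.0 + np.sin(X.sum(axis=1))
D = np.full(len(t), 1.0 / len(t))
sizes = range(4, 31)   # 27 candidate structures, as in Section 4
eps, n_hidden, model = best_weak_learner(X, t, D, 0.2, sizes, rng)
```

With this design only a loose range of hidden-layer sizes has to be specified, since the selection step picks a suitable structure in every iteration.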

4 Applications

Fuel flow (FF) is one of the most important health condition parameters indicating the performance condition of an aircraft engine, and engine performance deterioration can be tracked by monitoring the trend of FF. Therefore, FF is used as an example to demonstrate the application of the proposed ensemble ELM based approach to the prediction of engine health condition parameters. Aircraft engine health condition parameters are influenced by many factors, such as altitude, atmospheric temperature and Mach number. Deviation values are therefore usually calculated to eliminate these influences, and FF deviation values are used in this paper.

The FF data used in this paper are actual monitoring data from a domestic airline and are denoted as $\{GWFM_i\}$. First, a set of training samples needs to be generated. In this paper, the previous m points are used to predict the following (m+1)-th point. With m=6, a sample set of length 199 is generated, denoted as $\{(IN_i,d_i)\}_{i=1}^{199}$, where $IN_i=\{GWFM_i,\ldots,GWFM_{i+5}\}$ is the i-th input and $d_i$ is the corresponding output. The first 149 samples are used to train the model and the remaining 50 samples are used as testing samples.
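The sample construction described above amounts to a sliding window over the series. The following sketch shows one way to build it. The function name `make_samples` is hypothetical, and the placeholder series of 205 points is an assumption (199 samples plus a window of 6) rather than the actual FF deviation data.

```python
import numpy as np

def make_samples(series, m=6):
    """Use each run of m consecutive points to predict the next point."""
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - m
    inputs = np.stack([series[i:i + m] for i in range(n_samples)])
    targets = series[m:]
    return inputs, targets

# Placeholder series standing in for the FF deviation data.
series = np.arange(205, dtype=float)
IN, d = make_samples(series, m=6)

# First 149 samples for training, remaining 50 for testing.
IN_train, d_train = IN[:149], d[:149]
IN_test, d_test = IN[149:], d[149:]
```

The split is chronological, so the test samples always lie after the training samples in time.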

A set of ELMs with different structures is then generated: the number of hidden neurons increases from 4 to 30, and each ELM has six input units and one output unit. The resulting weak learner set has 27 members, and these weak learners are initialized by assigning the input weights randomly. The work in Ref. [16] shows that the performance of AdaBoost.RT is acceptable and stable if $0<\varphi<0.4$. Thus, $\varphi$ is assigned an initial value of 0.2 in this paper. After initialization, the ensemble ELM model is trained and tested according to the procedure described in Section 3.1.

The mean absolute percentage error (MAPE) and the mean square error (MSE) are used as performance indices in this paper. They are calculated as

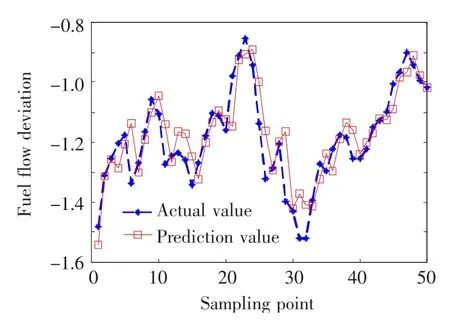

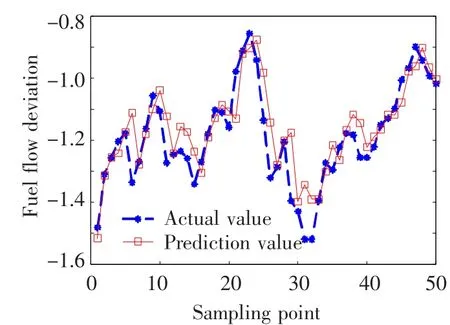

where N is the prediction horizon, $y_i$ is the actual value and $\hat{y}_i$ is the predicted value. The ensemble ELM model was found to perform best when the maximal number of iterations is set to 10. To account for the randomness in the training and testing process, the prediction test is run 20 times with the maximal number of iterations fixed at 10, and the average of the 20 runs is used as the final result. Fig.1 shows the final results.
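The two performance indices can be computed as in the sketch below. It assumes the standard definitions, with MAPE expressed in percent; the exact form used in the paper is given in the equations above and may differ in scaling. The function names and the two-point example are illustrative.

```python
import numpy as np

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (assumed scaling)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 100.0 * np.mean(np.abs((y_true - y_pred) / y_true))

def mse(y_true, y_pred):
    """Mean square error."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return np.mean((y_true - y_pred) ** 2)

# Tiny worked example.
y_true = [2.0, 4.0]
y_pred = [1.0, 5.0]
example_mape = mape(y_true, y_pred)   # 100 * (0.5 + 0.25) / 2 = 37.5
example_mse = mse(y_true, y_pred)     # (1 + 1) / 2 = 1.0
```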

Fig.1 Prediction results of EELM

For comparison, a single ELM model and a single PNN model are used to predict the same time series. For ELM, the samples generated for the ensemble ELM can be used directly. The single ELM model has six input units and one output unit, and the number of hidden neurons is determined by grid search. For PNN, the discrete FF samples first need to be fitted into continuous functions. By using six consecutive sample points to fit a continuous function as the input, a sample set of size 199 is generated, denoted as $\{(IF_i,d_i)\}_{i=1}^{199}$, where $IF_i$ is the i-th continuous function input generated from the samples $\{GWFM_i,\ldots,GWFM_{i+5}\}$ and $d_i$ is its target value. PNN has one input unit and one output unit, and its number of hidden neurons is also determined by grid search.

In this paper, ELM and PNN perform best with 7 and 11 hidden neurons respectively. With these optimal structures, both ELM and PNN are run 20 times and the average results are used as the final results. Figs.2 and 3 show the prediction results of the single ELM and the single PNN respectively.

Fig.2 Prediction results of single ELM

Fig.3 Prediction results of single PNN

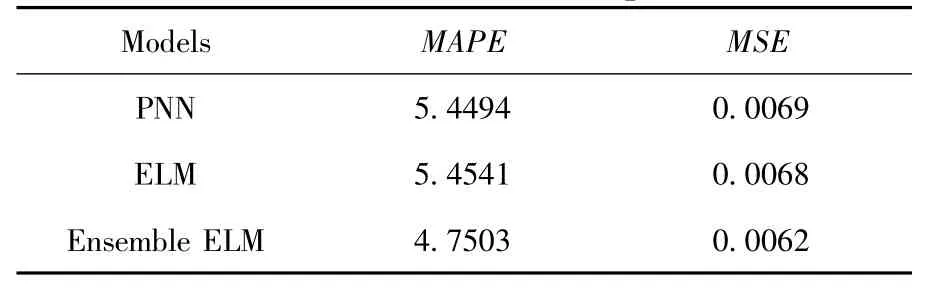

The performance of the three models is compared in Table 1. The performance of PNN is similar to that of ELM, but the performance of the ensemble ELM is much better than that of both. This comparison demonstrates the effectiveness of the proposed ensemble ELM based approach for predicting the FF time series.

Table 1 Performance comparison

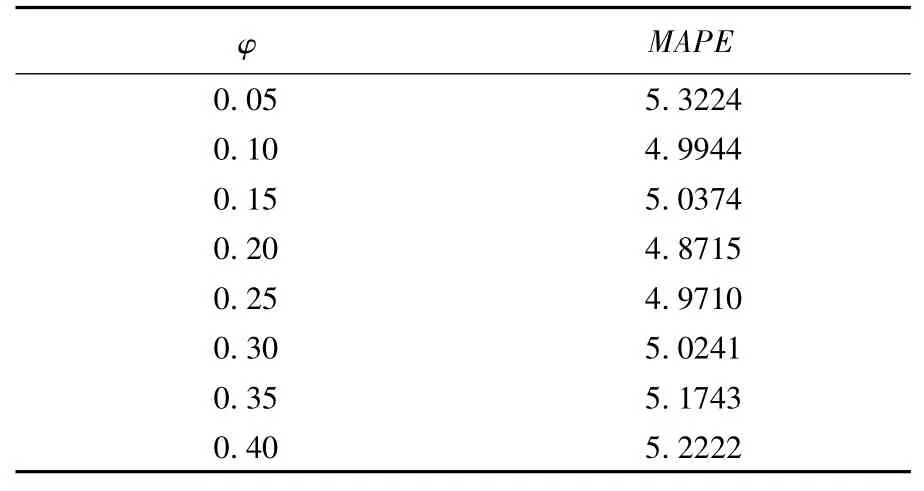

To validate the threshold adjusting method proposed in this paper, a set of tests was run with fixed thresholds, and the results are shown in Table 2. The results show that the performance of the model is sensitive to $\varphi$, and even the best result obtained with a fixed $\varphi$ is still worse than the result obtained with the proposed method. It can be concluded that the proposed threshold adjusting method is effective.

Table 2 Prediction results with fixed threshold

Tian's model is also used to predict the same FF time series. For simplicity, the number of hidden neurons of the ELM used in Tian's model is set directly to 11, according to the results of the earlier tests in this paper. The test is run 20 times and the average results are used as the final results, which are shown in Fig.4.

Fig.4 Prediction results of Tian’s model

The MAPE and MSE of Tian's model are 4.7510 and 0.0067 respectively. Except for the MSE, which is slightly larger, the accuracy of Tian's model is similar to that of the model proposed in this paper. However, with Tian's model a tedious process may still be needed to choose a proper ELM structure when no prior information is available, whereas the model proposed in this paper only needs a loose range of structures, since only the weak learner with the best performance is accepted in each iteration. Besides, Tian's model introduces a new parameter to adjust the threshold, which is unnecessary in the model proposed in this paper. Thus, the model proposed in this paper is less complex in practical application.

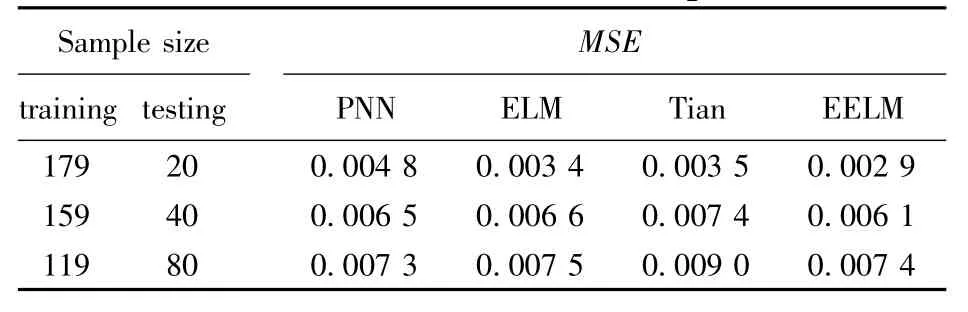

To test the performance of the proposed method with different ratios of training samples to testing samples, three more tests were carried out, and the results are shown in Tables 3 and 4, where Tian represents Tian's model described in Ref. [12].

Table 3 MAPE with different sample ratio

Table 4 MSE with different sample ratio

A comprehensive comparison of the results of the three tests shows that the EELM proposed in this paper has the best performance, which means that it is well suited to the problem of aircraft engine health condition parameter prediction. In addition, although EELM is only used to predict the fuel flow time series in this paper, it can be used to forecast other health parameter series, such as exhaust gas temperature, in a similar way.

5 Conclusions

1) An aircraft engine health condition parameter prediction approach based on ensemble ELM is proposed in this paper. The AdaBoost.RT algorithm is first modified to adjust its threshold adaptively during the training process. It is then used as the basic framework to establish the ensemble ELM model, with a set of ELMs of different structures as weak learners. The proposed approach is applied to a practical fuel flow time series, and the results show that it has good performance.

2) The comparison experiments show that the proposed method has higher precision than the single ELM and the single PNN, and is no less accurate than the similar ensemble model using ELM as weak learner.

3) Compared with the other approaches considered in this paper, the parameter tuning process of the proposed method is simpler and thus more convenient for practical application.

References

[1] Li X B, Cui X L, Lang R L. Forecasting method for aeroengine performance parameters. Journal of Beijing University of Aeronautics and Astronautics, 2008, 34(3): 253-256. (in Chinese)

[2] Hu J H, Xie S S. AR model-based prediction of metal content in lubricating oil. Gas Turbine Experiment and Research, 2003, 16(1): 32-36. (in Chinese)

[3] Hornik K, Stinchcombe M, White H. Multilayer feedforward networks are universal approximators. Neural Networks, 1989, 2(5): 359-366.

[4] Brotherton T, Jahns G, Jacobs J, et al. Prognosis of faults in gas turbine engines. 2000 IEEE Aerospace Conference Proceedings. Piscataway: IEEE. 2000. 163-171.

[5] Jaw L C. Recent advancements in aircraft engine health management (EHM) technologies and recommendations for the next step. ASME Conference Proceedings. Reno, Nevada: ASME. 2005. 683-695.

[6] He X G, Liang J Z. Some theoretical issues on procedure neural networks. Engineering Science, 2000, 2(12): 40-44. (in Chinese)

[7] Ding G, Zhong S S. Aircraft engine lubricating oil monitoring by process neural network. Neural Network World, 2006, 16(1): 15-24.

[8] Zhong S S, Li Y, Ding G, et al. Continuous wavelet process neural network and its application. Neural Network World, 2007, 17(5): 483-496.

[9] Huang G B, Zhu Q Y, Siew C K. Extreme learning machine: theory and applications. Neurocomputing, 2006, 70(1/2/3): 489-501.

[10] Solomatine D P, Shrestha D L. AdaBoost.RT: A boosting algorithm for regression problems. 2004 IEEE International Joint Conference on Neural Networks. Piscataway: IEEE. 2004. 1163-1168.

[11] Freund Y, Schapire R. A decision-theoretic generalization of on-line learning and an application to boosting. Proceedings of the Second European Conference on Computational Learning Theory. London: Springer-Verlag. 1995. 23-37.

[12] Tian H X, Mao Z Z. An ensemble ELM based on modified AdaBoost.RT algorithm for predicting the temperature of molten steel in ladle furnace. IEEE Transactions on Automation Science and Engineering, 2010, 7(1): 73-80.

[13] Yang Y H, Lin Y C, Su Y F, et al. Music emotion classification: a regression approach. 2007 IEEE International Conference on Multimedia and Expo. Piscataway: IEEE. 2007. 208-211.

[14] Schclar A, Tsikinovsky A, Rokach L, et al. Ensemble methods for improving the performance of neighborhood-based collaborative filtering. Proceedings of the Third ACM Conference on Recommender Systems. New York: ACM. 2009. 261-264.

[15] Polikar R. Ensemble based systems in decision making. Circuits and Systems Magazine, 2006, 6(3): 21-45.

[16] Shrestha D L, Solomatine D P. Experiments with AdaBoost.RT, an improved boosting scheme for regression. Neural Computation, 2006, 18(7): 1678-1710.

Source: Journal of Harbin Institute of Technology (New Series)