Advances in neuromorphic computing: Expanding horizons for AI development through novel artificial neurons and in-sensor computing

2024-03-25

Yubo Yang (杨玉波), Jizhe Zhao (赵吉哲), Yinjie Liu (刘胤洁), Xiayang Hua (华夏扬), Tianrui Wang (王天睿), Jiyuan Zheng (郑纪元), Zhibiao Hao (郝智彪), Bing Xiong (熊兵), Changzheng Sun (孙长征), Yanjun Han (韩彦军), Jian Wang (王健), Hongtao Li (李洪涛), Lai Wang (汪莱)‡, and Yi Luo (罗毅)§

1 Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

2 Beijing National Research Center for Information Science and Technology, Tsinghua University, Beijing 100084, China

Keywords: neuromorphic computing, spiking neural network (SNN), in-sensor computing, artificial intelligence

1. Introduction

Artificial intelligence (AI) is the general term for the science and technology that uses computers to simulate intelligent human behavior, training computers to perform tasks such as learning, judgment, and decision-making. The development of AI has brought huge economic benefits and has improved every aspect of life. It has also greatly promoted social development and ushered it into a new era.[1,2] The artificial neural network (ANN) is the cornerstone of AI systems and has played a significant role in this development. According to Wolfgang Maass (1996), ANN models can be divided into three generations depending on their computational units.[3] The computational unit of the first neural network model is the McCulloch-Pitts neuron, often called a perceptron or threshold gate. For Boolean input signals (i.e., 0 and 1), the perceptron sums the signals, compares the sum with a threshold, and outputs a Boolean value of 0 or 1 (see Fig.1(a)). This model is universal for computations with digital input and output: every Boolean function can be computed by some multilayer perceptron with a single hidden layer.[3]
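The following minimal Python sketch illustrates the threshold-gate principle with a McCulloch-Pitts-style unit. The ±1 weights and the thresholds are hand-picked assumptions, not values taken from the text. XOR cannot be computed by any single threshold gate. Here it is built from one hidden layer of two gates, in line with the single-hidden-layer universality result.

```python
def threshold_gate(inputs, weights, threshold):
    """McCulloch-Pitts unit: output 1 if the weighted sum of Boolean inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def and_gate(a, b):
    return threshold_gate((a, b), (1, 1), 2)

def or_gate(a, b):
    return threshold_gate((a, b), (1, 1), 1)

def nand_gate(a, b):
    return threshold_gate((a, b), (-1, -1), -1)   # fires unless both inputs are 1

def xor_gate(a, b):
    # XOR is not linearly separable, so no single gate computes it;
    # a hidden layer of two gates (OR, NAND) followed by an AND output gate does.
    return and_gate(or_gate(a, b), nand_gate(a, b))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```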

The second generation of neural networks builds on the first in two ways (see Fig.1(b)).[4] First, it introduces differentiable, nonlinear activation functions (e.g., ReLU or sigmoid). Second, it replaces the linear superposition of Boolean inputs with a weighted sum of inputs. As a result, these networks can compute continuous values and fit data better. Their weights can also be adjusted by gradient descent through backpropagation (the BP algorithm), which further improves the accuracy of complex models.[5] Many ANN architectures and algorithms have since been proposed on the basis of the second-generation model. A distinct historical landmark is the 2012 success of AlexNet in the ILSVRC image classification challenge.[6,7] AlexNet is a deep neural network (DNN) composed of eight sequential layers trained end to end, with a total of 60 million trainable parameters.[4] Since then, deep learning has boomed in AI domains such as speech recognition,[8] object detection,[9-13] and image recognition.[7,14,15]
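The sketch below shows a single second-generation neuron: a weighted sum passed through a differentiable sigmoid, with the weights adjusted by gradient descent. This is the one-layer special case of backpropagation. The OR training set, the cross-entropy loss (chosen because its gradient simplifies to `y - t`), and the learning rate are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy training set (illustrative assumption): the Boolean OR function.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
t = np.array([0, 1, 1, 1], dtype=float)

w = np.zeros(2)   # weights of the weighted sum
b = 0.0           # bias
lr = 1.0          # learning rate (assumed)

for epoch in range(2000):
    y = sigmoid(X @ w + b)            # weighted sum followed by a differentiable activation
    delta = y - t                     # dLoss/dz for a sigmoid output with cross-entropy loss
    w -= lr * X.T @ delta / len(X)    # gradient-descent step on the weights
    b -= lr * delta.mean()            # ... and on the bias

predictions = (sigmoid(X @ w + b) >= 0.5).astype(int)
print(predictions)
```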

Nonetheless, the many hidden layers and huge number of trainable parameters pose serious challenges for DNNs. On the one hand, DNNs rely heavily on large amounts of data. Without enough training data, it is difficult to train a suitable AI model. If the input samples are perturbed or attacked, the network's output becomes unpredictable.[14] Robustness obtained through perturbation training alone cannot guarantee the network's security.[16] On the other hand, the parameters of a DNN have no specific physical or biological meaning. A DNN is a black box that relies entirely on stacking data, so it is not interpretable, and many people are skeptical of its trustworthiness. Most importantly, every unit of a DNN performs multiply-accumulate (MAC) operations almost all the time, which consumes a great deal of power. Researchers are looking for new algorithms that achieve high classification accuracy at low computational power. Even so, the high power consumption inherent in the DNN architecture itself cannot be avoided.

Besides algorithmic and device-level improvements, another way to improve accuracy and reduce power consumption is to draw inspiration from brain science. The human brain does not need large amounts of input data to learn efficiently (or to achieve high classification accuracy). Moreover, the energy consumption and computational cost of its sophisticated calculations are relatively low. To reduce energy consumption and improve efficiency, researchers have intensified the biomimetic study of biological neurons. Accumulated experimental results indicate that the biological nervous system often uses the timing of single action potentials (spikes) to encode information.[3] This led to a neuromorphic computing network model that uses spiking neurons as computational units, namely, the spiking neural network (SNN).

In essence, an SNN is a type of neuromorphic computing architecture. Like second-generation networks, an SNN uses neurons and synapses for computation and transmission, but it also incorporates temporal information into the model. A neuron in an SNN acts only when its membrane potential changes (i.e., when an input spike arrives), rather than transmitting information in every propagation cycle. A typical SNN neuron is shown in Fig.1(c). Within a given time window, the soma weights the spikes arriving at each moment and integrates the signal over the entire window to update the membrane potential. When the membrane potential exceeds the threshold, the soma (neuron) fires and releases a spike downstream.[4] Beyond the information propagating spatially, the past activity of each spiking neuron strongly influences its current state. Because a neuron is activated only when its total membrane potential exceeds the threshold, the overall spike signal is usually sparse. In addition, input spikes take only the values 0 and 1, so MAC operations can be eliminated when the integration time window is set to 1: the weighted sum reduces to adding the weights of the inputs that spiked. Hence, as the third generation of neural networks, SNNs have several advantages over traditional ANNs and DNNs. First, compared with compute-intensive ANNs, SNNs typically consume less power because they process and transmit sparse data, which improves energy efficiency. Second, SNNs use pulse-based encoding that fully exploits spike timing to represent and process signals, which makes them suitable for time-series data. Last but not least, SNNs emulate the working mechanism of biological neural networks, transmitting information in a manner resembling the synaptic transmission of electrical signals between neurons. This suggests that SNNs may handle cognitive problems better than the previous two generations.
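To make the MAC-elimination argument concrete, here is a hedged NumPy sketch comparing a dense weighted sum with an event-driven one for a single time step of binary spikes. The layer size and the roughly 10% spike density are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs = 100
weights = rng.normal(size=n_inputs)                     # synaptic weights (illustrative)
spikes = (rng.random(n_inputs) < 0.1).astype(np.int8)  # sparse 0/1 input, ~10% active

# Dense formulation: one multiply-accumulate (MAC) per synapse, active or not.
dense_sum = float(weights @ spikes)
mac_ops = n_inputs

# Event-driven formulation: spikes are 0 or 1, so the weighted sum is simply the
# sum of the weights of the inputs that spiked (additions only, no multiplications).
active = np.flatnonzero(spikes)
event_sum = float(weights[active].sum())
add_ops = active.size
print(f"MACs: {mac_ops}, additions: {add_ops}")
```

With about 10% of inputs active on average, the event-driven path performs roughly a tenth of the operations, all of them additions. The savings grow as activity becomes sparser.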

Moreover, over 80% of the information humans receive comes through the visual system. Individuals must continuously receive, store, and process a large amount of visual information to coordinate their activities. The retina and brain accomplish this efficiently and with extremely low power consumption. They rely on the exquisite multi-level structure and spiking neuron mechanisms of the retinal neural network. For example, the eye has different sensitivities to different targets, which enables selective reception and transmission of information. The retina also has different receptive fields that pre-process and compress information.[17,18] For the same visual task, the retina is four orders of magnitude more energy-efficient than traditional hardware systems. Therefore, in-sensor computing inspired by retinal neural networks has become one of the current research hotspots in neuromorphic computing.

Building on the rapid development of microelectronic devices, much progress has been made in artificial neuron and synapse devices and circuits.[19,20] It has also been demonstrated that bio-mimicking circuits can be fabricated on a very large scale.[21]

In the following parts, we review SNN models that mimic the mechanisms of biological neurons, together with the supporting functional materials and artificial neuron devices. We then introduce recent progress on in-sensor computing chips, a promising neuromorphic computing technology.

2. SNN

An SNN receives complex data, encodes them into binary (0/1) spike sequences, and then feeds these sequences into its computational units. How the human brain encodes the rich analog information it receives from the outside world is still unknown. However, several mathematical encoding methods have been developed in machine learning. One common approach is rate (frequency-based) coding. The normalized intensity of each original pixel is used as the probability of emitting a spike in each time step, so that the spikes follow a specific probability distribution, such as the Poisson distribution. If the number of time steps is set to 20, 20 spike matrices are produced that together outline the image. The more time steps are used, the more faithfully the original image can be reconstructed. Another method uses an ANN encoder to generate global spike signals. The encoder takes the intensity values of multiple pixels as input and generates spikes as output, which is equivalent to feature extraction. The generated spikes are used in backpropagation to update the weights. Finally, neurons of the SNN architecture process the encoded input signal to realize the SNN function.
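Here is a minimal sketch of the rate-coding scheme, assuming NumPy. Each pixel's min-max normalized intensity is used as its spike probability per time step. This is an independent Bernoulli draw per step, which approximates a Poisson process. The function name `rate_encode` and the 2×2 test image are hypothetical.

```python
import numpy as np

def rate_encode(image, num_steps, rng):
    """Rate (frequency-based) coding of an image into binary spike frames.

    Each pixel's intensity is min-max normalized to [0, 1] and used as the
    probability of a spike in every time step. Returns an array of shape
    (num_steps, *image.shape) with entries 0 or 1.
    """
    image = np.asarray(image, dtype=float)
    span = image.max() - image.min()
    p = (image - image.min()) / span if span > 0 else np.zeros_like(image)
    return (rng.random((num_steps, *image.shape)) < p).astype(np.uint8)

rng = np.random.default_rng(0)
image = np.array([[0, 64], [128, 255]])              # tiny stand-in "image" (hypothetical)
spikes = rate_encode(image, num_steps=20, rng=rng)   # 20 binary spike matrices
reconstruction = spikes.mean(axis=0)                 # firing rate approximates intensity
```

Averaging the spike frames over time recovers the normalized intensities. The estimate's noise shrinks roughly as 1/sqrt(num_steps), which is why longer encodings reconstruct the image more faithfully.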

Based on studies of brain neurons, a variety of mathematical SNN models have been created. These include the spike response model (SRM), the Hodgkin-Huxley (H-H) neuron model, the Wilson model, the Hindmarsh-Rose (H-R) model, the Izhikevich spiking neuron model, and the leaky integrate-and-fire (LIF) model. The SRM specifically describes how a neuron responds to input spikes from other neurons. It captures the temporal response properties and the effect of input spikes on the neuron's own activation. The core premise of the SRM is that the membrane potential of a neuron changes over time in response to inputs from other neurons. The model uses linear kernels to express the change in membrane potential as a function of the input spikes. The SRM can be expressed mathematically by the following equations:

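The H-H neuron model is a classical mathematical model that describes changes in the neuron membrane potential. The cell membrane can store electric charge and is therefore represented as a capacitor Cm in circuits. Each Na+ or K+ ion channel is modeled as a variable conductance g in series with a battery E. Here, E represents the equilibrium potential of the particular ion, and g reflects the permeability of the channel to that ion. The dynamic conductance g depends on the voltage Vm across the membrane. For an applied current I, an H-H model generally requires four ordinary differential equations, as follows:

$$C_m \frac{dV_m}{dt} = I - g_{Na}\, m^3 h\, (V_m - E_{Na}) - g_K\, n^4 (V_m - E_K) - g_l\, (V_m - E_l)$$

$$\frac{dn}{dt} = \alpha_n(V_m)(1 - n) - \beta_n(V_m)\, n$$

$$\frac{dm}{dt} = \alpha_m(V_m)(1 - m) - \beta_m(V_m)\, m$$

$$\frac{dh}{dt} = \alpha_h(V_m)(1 - h) - \beta_h(V_m)\, h$$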

where αi and βi (i = n, m, h) are the voltage-dependent, and hence time-varying, rates at which gates switch from the closed (non-permissive) to the open (permissive) state and back. n is the activation variable of the K+ channel, and m and h are the activation and inactivation variables of the Na+ channel. Parameters with subscript l refer to the leak channel. The SRM is mathematically simple and captures the temporal correlations between neurons. However, it neglects the dynamic properties of neurons and does not fit complicated networks well. The H-H model considers more complex situations through its four ordinary differential equations, so it captures the biological characteristics of the membrane potential accurately. Nonetheless, it is computationally too expensive for large-scale simulations. The most commonly used SNN model is the leaky integrate-and-fire (LIF) model.[22] Its main idea is to emit a spike when the sum of the collected signals (i.e., the membrane potential) exceeds the threshold, and then to return to the resting potential after firing. The model also includes a refractory period after firing, during which the neuron cannot be excited.
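To show the H-H dynamics in action, the sketch below integrates the standard four-equation form with forward Euler. The voltage equation is C_m dV_m/dt = I - g_Na m^3 h (V_m - E_Na) - g_K n^4 (V_m - E_K) - g_l (V_m - E_l), and each gate z = n, m, h follows dz/dt = α_z(1 - z) - β_z z. The rate functions and parameter values are the classic squid-axon fits in the convention with rest near -65 mV. They are assumptions for illustration, not values from this review. The six exponential-based rate evaluations per time step also hint at why H-H is costly for large-scale simulation.

```python
import math

# Classic squid-axon parameters, rest near -65 mV (assumed for illustration).
# Units: mV, ms, uF/cm^2, mS/cm^2, uA/cm^2.
C_M = 1.0
G_NA, G_K, G_L = 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.387

# Voltage-dependent opening (alpha) and closing (beta) rates of the gates n, m, h.
def alpha_n(v): return 0.01 * (v + 55.0) / (1.0 - math.exp(-(v + 55.0) / 10.0))
def beta_n(v): return 0.125 * math.exp(-(v + 65.0) / 80.0)
def alpha_m(v): return 0.1 * (v + 40.0) / (1.0 - math.exp(-(v + 40.0) / 10.0))
def beta_m(v): return 4.0 * math.exp(-(v + 65.0) / 18.0)
def alpha_h(v): return 0.07 * math.exp(-(v + 65.0) / 20.0)
def beta_h(v): return 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))

def simulate_hh(i_ext, t_max=50.0, dt=0.01):
    """Forward-Euler integration of the four H-H ODEs; returns the voltage trace (mV)."""
    v = -65.0
    # Start each gating variable at its steady state for the resting voltage.
    n = alpha_n(v) / (alpha_n(v) + beta_n(v))
    m = alpha_m(v) / (alpha_m(v) + beta_m(v))
    h = alpha_h(v) / (alpha_h(v) + beta_h(v))
    trace = []
    for _ in range(int(round(t_max / dt))):
        i_ion = (G_NA * m**3 * h * (v - E_NA)     # sodium current
                 + G_K * n**4 * (v - E_K)         # potassium current
                 + G_L * (v - E_L))               # leak current
        dv = (i_ext - i_ion) / C_M
        dn = alpha_n(v) * (1.0 - n) - beta_n(v) * n
        dm = alpha_m(v) * (1.0 - m) - beta_m(v) * m
        dh = alpha_h(v) * (1.0 - h) - beta_h(v) * h
        v, n, m, h = v + dt * dv, n + dt * dn, m + dt * dm, h + dt * dh
        trace.append(v)
    return trace

def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the threshold voltage."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)

print(count_spikes(simulate_hh(10.0)), count_spikes(simulate_hh(0.0)))
```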

It is useful to review the main parts of a neuron and their functions. (1) The axon generates and conducts the action potential (pulse) and releases neurotransmitters at its terminals. (2) Dendrites or cell bodies receive neurotransmitters, which create a potential difference between the inner and outer membrane and form an electrical current. (3) Leakage current is a biological concept describing the loss of membrane potential, for example during axonal transmission. In hardware circuits it is represented by a resistor.

Neuronal dynamics can be conceived as a summation process combined with a mechanism that triggers an action potential when the sum of inputs exceeds a threshold voltage.[23] With the resting potential u_res below the threshold u_th, an action potential is triggered when the summed voltage u_i(t) reaches u_th. All emitted pulses have a similar shape, so the transmitted information lies essentially in whether a pulse occurs at a specific time. This process is described by the integrate-and-fire (IF) model. Its key elements are (1) the equation for the membrane potential u_i(t) and (2) the pulse-generation mechanism. The IF model contains only one capacitor.

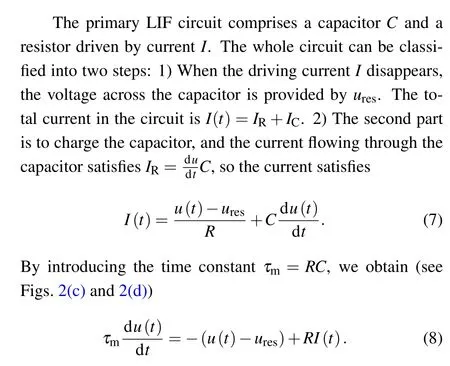
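The IF model can be sketched in a few lines, assuming a constant input current and a single capacitor C that integrates it (C du/dt = I). When u reaches u_th, a spike is recorded and u is reset to u_res. The units and values (nA, nF, mV, ms; u_res = -65 mV, u_th = -50 mV) are assumptions.

```python
def simulate_if(I, C=1.0, u_res=-65.0, u_th=-50.0, t_max=100.0, dt=0.1):
    """Perfect (non-leaky) integrate-and-fire neuron driven by a constant current I.

    Assumed units: I in nA, C in nF, voltages in mV, time in ms (so I/C is in mV/ms).
    Returns the list of spike times in ms.
    """
    u = u_res
    spike_times = []
    for step in range(1, int(round(t_max / dt)) + 1):
        u += dt * I / C          # the capacitor integrates the input: C du/dt = I
        if u >= u_th:            # threshold reached: emit a spike ...
            spike_times.append(step * dt)
            u = u_res            # ... and reset the membrane potential
    return spike_times

print(len(simulate_if(1.5)))     # interval C*(u_th - u_res)/I = 10 ms
```

Because there is no leak, any positive current, however weak, eventually drives this neuron to threshold. The LIF model discussed next removes that unrealistic property.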

In general, the action potential of a neuron arises mainly from the dynamic selectivity of the membrane to Na+ and K+. The classical Hodgkin-Huxley model[22] represents the equilibrium potential of each ion species as a battery, the gated channels (e.g., for sodium) as variable resistances, and the passive membrane as a capacitor. Connecting these elements in parallel gives a model of the neuron's electrical properties. If only the passive membrane properties and the triggering of action potentials are considered, the H-H model simplifies to the leaky integrate-and-fire (LIF) model. The LIF model is a capacitor in parallel with a leak resistor, and the resistor is in series with a battery (see Fig.2(a)).

The LIF model closely resembles a real biological neuron. The cell membrane acts as a capacitor but is not a perfect insulator, so ions are constantly exchanged across it and the charge on the membrane slowly leaks away (see Figs.2(a) and 2(b)).[23] After a single input, the voltage therefore leaks back to the resting state u_res on its own. In the LIF model, it is generally assumed that after firing the potential first drops below u_res and then rises back to the resting potential (see Figs.2(c) and 2(d)).

Fig.2. Leaky integrate-and-fire model. (a) A neuron, enclosed by the cell membrane (big circle), receives a (positive) input current I(t), which increases the electrical charge inside the cell. The cell membrane acts like a capacitor in parallel with a resistor, which is in series with a battery of potential u_res (zoomed inset). (b) The cell membrane responds to a step current (top) with a smooth voltage trace (bottom). (c) Detailed membrane-potential activity during the arrival of one pulse. (d) Simulation of the LIF model with u_res = -60 mV, u_peak = 30 mV, and u_ini = -70 mV.

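Assume that a spike is received at time 0, so that the membrane potential becomes u_res + Δu. If the subsequent input current is 0, the membrane potential relaxes exponentially back to the resting potential, and the solution of the equation is

$$u(t) = u_{\mathrm{res}} + \Delta u \, e^{-t/\tau_m}, \quad t > 0,$$

where τm = RC is the membrane time constant set by the membrane resistance R and capacitance C.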
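As a quick numerical check of the exponential relaxation u(t) = u_res + Δu e^(-t/τ_m), the sketch below integrates τ_m du/dt = -(u - u_res) with forward Euler. It tracks the largest deviation from the closed form. The values τ_m = 10 ms and Δu = 8 mV are assumptions; u_res = -60 mV follows Fig.2(d).

```python
import math

tau_m = 10.0     # membrane time constant R*C in ms (assumed)
u_res = -60.0    # resting potential in mV (as in Fig.2(d))
delta_u = 8.0    # jump produced by the spike received at t = 0, in mV (assumed)
dt = 0.01        # Euler time step in ms

u = u_res + delta_u
max_err = 0.0
for k in range(1, int(round(50.0 / dt)) + 1):
    u += dt * (u_res - u) / tau_m                          # zero input current: pure leak
    exact = u_res + delta_u * math.exp(-k * dt / tau_m)    # closed-form solution
    max_err = max(max_err, abs(u - exact))

print(f"max |Euler - exact| over 50 ms: {max_err:.4f} mV")
```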

To summarize, the core idea of the LIF model is as follows. If the input from presynaptic neurons does not drive the potential above the threshold, the acquired potential returns to the resting state. If instead a neuron receives several pulses and integrates them until the threshold is reached, it emits a pulse that stimulates the postsynaptic neuron. It then enters a refractory period, during which it does not respond even if a stimulus is applied.[24] Building on such models, several SNN functions have been realized with different devices, some of which are described below.
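The following hedged sketch combines these pieces in a discrete-time LIF neuron. It includes leak toward rest, instantaneous jumps for incoming spikes, a threshold, reset below rest, and an absolute refractory period during which inputs are ignored. The values u_res = -60 mV and u_reset = -70 mV mirror Fig.2(d). The threshold, τ_m, refractory time, and input weights are assumptions.

```python
def simulate_lif(inputs, t_max=20.0, dt=0.1, tau_m=10.0, u_res=-60.0,
                 u_th=-50.0, u_reset=-70.0, t_ref=5.0):
    """Discrete-time leaky integrate-and-fire neuron.

    `inputs` is a list of (time_ms, weight_mV) pairs: each input spike instantly
    raises the membrane potential by weight_mV. Returns the output spike times (ms).
    """
    jumps = {}
    for t, w in inputs:
        step = int(round(t / dt))
        jumps[step] = jumps.get(step, 0.0) + w
    ref_steps = int(round(t_ref / dt))
    u = u_res
    refractory_until = -1
    spikes = []
    for step in range(int(round(t_max / dt))):
        if step <= refractory_until:
            u = u_reset                          # refractory: clamp at reset, ignore inputs
            continue
        u += dt * (u_res - u) / tau_m            # leak toward the resting potential
        u += jumps.get(step, 0.0)                # integrate any incoming spike
        if u >= u_th:
            spikes.append(step * dt)             # fire ...
            u = u_reset                          # ... reset below rest ...
            refractory_until = step + ref_steps  # ... and start the refractory period
    return spikes

print(simulate_lif([(1.0, 4.0)]))                          # sub-threshold: no spike
print(simulate_lif([(1.0, 4.0), (2.0, 4.0), (3.0, 4.0)]))  # temporal summation: one spike
print(simulate_lif([(1.0, 11.0), (2.0, 30.0)]))            # 2nd input falls in refractory period
print(simulate_lif([(1.0, 11.0), (2.0, 30.0)], t_ref=0.0)) # without refractoriness: two spikes
```

In the last two calls, the 30 mV input at 2 ms is ignored when it falls inside the 5 ms refractory period. When the refractory period is removed, the same input triggers a second spike.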

2.1. LTP/LTD

2.1.1. Physiological mechanisms

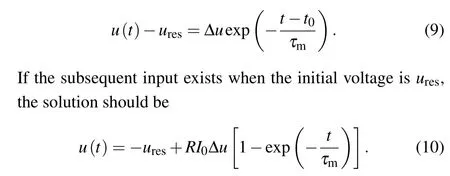

Long-term potentiation (LTP) and long-term depression (LTD) are two important phenomena in neuroscience that underlie synaptic plasticity (see Fig.3(a)). They correspond to the persistent strengthening or weakening of synapses due to recent activity patterns. LTP was first discovered in the rabbit hippocampus. Consider two connected neurons, CA3 and CA1 (see the illustration in Fig.3(b)). When a single spike is applied to the axon of CA3, only a small potential change is recorded at the postsynaptic membrane of CA1. Suppose instead that the presynaptic neuron CA3 is first stimulated with a high-frequency signal. The same single spike applied to its axon then produces a much larger potential change (2-3 times the previous value). Interestingly, this phenomenon lasts for several hours or even a few days, and it is referred to as long-term potentiation.[25] To induce long-term depression, low-frequency stimulation is applied to the presynaptic neuron instead, yielding a smaller potential than the original one.

LTP is usually classified into two stages, early LTP[26] and late LTP.[27] Both show that repeated stimulation of the presynaptic cell can enhance the efficacy of its synapse onto the postsynaptic cell. The physical mechanisms of LTP and LTD are still not fully understood. However, some successful mathematical models have been developed, such as the Hebbian learning model.[28,29] The core of the Hebbian learning model is that continuous, repeated activity tends to induce lasting cellular changes that increase its stability. Suppose the axon of CA3 is near enough to excite CA1 and repeatedly takes part in firing it. Then some metabolic change takes place in one or both cells, such that the efficiency of CA3, as one of the cells firing CA1, is increased.

2.1.2. Mathematical model

Hebbian theory focuses on how neurons might connect themselves to become engrams. From the perspective of ANNs, it can be described as a rule for changing the weight between neurons. The weight increases if both neurons are activated simultaneously and decreases if they are activated separately. For binary neurons (spiking activations), a connection is set to 1 if the connected neurons have the same activation for a pattern. When several training patterns are used, the expression becomes an average over the individual patterns:[30]

$$w_{ij} = \frac{1}{p} \sum_{k=1}^{p} x_i^k x_j^k,$$

where w_ij is the weight of the connection between neurons i and j, x_i^k is the kth input from neuron i, and p is the total number of input patterns. Another classical mathematical model is the Bienenstock-Cooper-Munro (BCM) theory, which modifies the traditional Hebbian model with biological and experimental justification.
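The averaged Hebbian rule w_ij = (1/p) Σ_k x_i^k x_j^k can be computed directly as a matrix product. This NumPy sketch assumes bipolar (+1/-1) activations, which is what makes a single pattern set w_ij to +1 for equal activations and -1 otherwise. The function name `hebbian_weights` and the two example patterns are hypothetical.

```python
import numpy as np

def hebbian_weights(patterns):
    """Averaged Hebbian rule w_ij = (1/p) * sum_k x_i^k * x_j^k.

    `patterns` has shape (p, N) with bipolar entries (+1/-1), an assumption under
    which one pattern sets w_ij = +1 for equal activations and -1 otherwise.
    """
    X = np.asarray(patterns, dtype=float)
    p = X.shape[0]
    return X.T @ X / p

W = hebbian_weights([[1, -1, 1],
                     [1, 1, -1]])
print(W)
```

Neurons that agree in both patterns (here, neurons 1 and 2 are always opposite) get a weight of magnitude 1. Pairs that agree in one pattern and disagree in the other average out to 0.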

The BCM model adopts a sliding threshold for inducing LTP and LTD (see Fig.3(c)). It holds that dynamic adaptation of the threshold to the time-averaged postsynaptic activity stabilizes synaptic plasticity. In the BCM model, a postsynaptic neuron tends to undergo LTP when it is in a highly active state (e.g., when the presynaptic neuron fires at high frequency) and LTD when it is in a less active state.[31] The BCM model can be expressed as follows:

In the above equations, the neuron output is assumed to be a linear superposition of the inputs, and the weights decay exponentially at a rate set by ε. The expressions also define the modification threshold as a power of the expected value of the neuron's output, divided by a constant y0 (see Fig.3(d)).
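The sketch below is one possible discrete-time BCM implementation under explicitly assumed forms. The output is y = w·x, the weight update is Δw = η[y(y - θ)x - εw], and the sliding threshold is θ = ⟨y⟩²/y0, where ⟨y⟩ is a running average; the power in the text is taken to be 2. All parameter values are illustrative. With a constant input, the threshold tracks the activity, so the output settles near y0 instead of growing without bound. This illustrates the stabilizing role of the sliding threshold.

```python
import numpy as np

def bcm_dw(w, x, y, theta, eta=0.01, eps=0.001):
    """BCM weight change (assumed form): eta * (y * (y - theta) * x - eps * w).

    For active inputs, y > theta gives potentiation (LTP) and 0 < y < theta gives
    depression (LTD); the -eps * w term makes the weights decay.
    """
    return eta * (y * (y - theta) * x - eps * w)

def run_bcm(x, w0, steps=5000, y0=1.0, beta=0.1, eta=0.01, eps=0.001):
    """Single linear neuron with constant input x and sliding threshold <y>^2 / y0."""
    w = np.array(w0, dtype=float)
    y_avg = 0.0
    theta = 0.0
    for _ in range(steps):
        y = float(w @ x)                 # output: linear superposition of the inputs
        y_avg += beta * (y - y_avg)      # running estimate of the expected output
        theta = y_avg ** 2 / y0          # sliding modification threshold
        w += bcm_dw(w, x, y, theta, eta, eps)
    return w, float(w @ x), theta

x = np.array([1.0, 1.0])
w, y, theta = run_bcm(x, w0=[0.2, 0.2])
print(w, y, theta)
```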

Fig.3. Long-term potentiation and long-term depression. (a) Schematic of the physiological mechanism of LTP. A. During normal, low-frequency synaptic transmission, glutamate released from CA3 Schaffer collateral axons acts on both NMDA and AMPA receptors. However, Na+ and K+ can flow only through AMPA receptors, because NMDA receptors are blocked by Mg2+. B. During high-frequency tetanus, the large depolarization of the postsynaptic membrane relieves the Mg2+ block, allowing Ca2+, Na+, and K+ to flow through these channels. C. Second-messenger cascades activated during the induction of LTP have two main effects on synaptic transmission. First, phosphorylation enhances current through AMPA receptors. Second, the postsynaptic cell releases retrograde messengers that activate protein kinases to enhance subsequent transmitter release.[32] (b) LTP is the persistent increase in synaptic strength following high-frequency stimulation of a chemical synapse.[33] (c) Sliding threshold for LTP or LTD in the BCM rule. (d) Simulation of the weight, threshold, and output of a 7-input neuron model after 3000 iterations based on the BCM method.

2.2. STD/STF

2.2.1. Physiological mechanisms

Synaptic plasticity refers to changes in the strength, function, and efficiency of synapses. Short-term plasticity (STP), also called dynamic synapses, is the phenomenon in which synaptic efficacy increases or decreases over time in a way that reflects the history of presynaptic activity.[34,35] It has two opposite effects on synaptic efficacy: short-term depression (STD) and short-term facilitation (STF). Unlike LTP, STP acts on shorter time scales, on the order of a hundred milliseconds, after which synaptic efficacy quickly returns to its baseline level.[36]

Signaling at the axon terminal of a presynaptic neuron consumes neurotransmitters. STD occurs when two pulses arrive within a short interval. The neurotransmitter remaining after the first pulse is insufficient to transmit the second signal fully, which reduces the membrane potential response of the postsynaptic neuron. STF, in contrast, is induced by the influx of calcium ions into the axon terminal after a spike arrives, which raises the release probability of neurotransmitters.

STP plays a profound role in brain function. From a computational perspective, the time scale of STP lies between fast neural signaling (on the order of milliseconds) and experience-induced learning (on the order of minutes or more). This matches the time scale of many everyday behaviors, such as motor control, speech recognition, and working memory. STP is therefore a plausible neural substrate for processing temporal information on these time scales. Under STP, the postsynaptic response depends on the history of presynaptic activity, so the synapse effectively extracts and uses historical information. In a large network, STP can greatly enrich the network's dynamic behavior. It gives the neural system information-processing capabilities that would be difficult to implement with static connections.

2.2.2. Mathematical model

According to the theory of Tsodyks and Markram, STD is modeled by a normalized variable x (0 ≤ x ≤ 1), representing the fraction of resources that remain available after neurotransmitter depletion. The STF effect is described by a parameter u, the fraction of available resources ready for use (i.e., the release probability). Each arriving spike induces calcium influx at the presynaptic terminal, which increases the release probability u. An amount ux of neurotransmitter is then consumed to produce the postsynaptic response. Between spikes, u decays back to 0 with time constant τf, and x recovers to 1 with time constant τd.[37] In summary, the dynamics of STP, together with the resulting postsynaptic current I (which decays with time constant τs), can be expressed by

$$\frac{du}{dt} = -\frac{u}{\tau_f} + U\,(1 - u^-)\,\delta(t - t_{sp}) \tag{15}$$

$$\frac{dx}{dt} = \frac{1 - x}{\tau_d} - u^+ x^-\,\delta(t - t_{sp}) \tag{16}$$

$$\frac{dI}{dt} = -\frac{I}{\tau_s} + A\,u^+ x^-\,\delta(t - t_{sp}) \tag{17}$$

where t_sp denotes the arrival time of a spike, and U is the increment of u induced by a spike. The superscripts + and - denote values just after and just before the arrival of a spike, respectively. From Eq.(15), it follows that u+ = u- + U(1 - u-). From Eq.(17), the postsynaptic current generated by a spike arriving at t_sp is ΔI(t_sp) = A u+ x-. Here, A is the response amplitude that would be produced by the total release of all the neurotransmitter (u = x = 1), called the absolute synaptic efficacy of the connection.

When t = t_sp, the release probability u rises, leading to STF. At the same moment, the amount of available neurotransmitter decreases, resulting in STD (see Fig.4(a)). After the spike, u decays back to 0 with time constant τf, and x recovers to 1 with τd. Thus u and x follow opposing kinetics, and their product E = ux characterizes whether synaptic efficacy is dominated by STD or STF. (1) If τd ≫ τf and U is large, the significant drop in x caused by an initial spike takes a long time to recover to 1, so the synapse is STD-dominated (see Fig.4(b)). (2) If τf ≫ τd and U is small, the synaptic efficacy increases gradually as spikes arrive, so the synapse is STF-dominated (see Fig.4(c)).
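Here is an event-driven sketch of the Tsodyks-Markram dynamics described above. Between spikes, u decays to 0 with τf and x recovers to 1 with τd. At each spike, u+ = u- + U(1 - u-), the relative efficacy u+x- is recorded (multiply by A to obtain ΔI), and x drops by u+x-. The two parameter sets and the 20 Hz spike train are illustrative assumptions chosen to fall in the two regimes.

```python
import math

def stp_efficacies(spike_times, U, tau_f, tau_d):
    """Event-driven Tsodyks-Markram STP; returns u+ * x- for each spike (relative efficacy)."""
    u, x = 0.0, 1.0
    last_t = None
    efficacies = []
    for t in spike_times:
        if last_t is not None:
            dt = t - last_t
            u *= math.exp(-dt / tau_f)                    # facilitation decays toward 0
            x = 1.0 - (1.0 - x) * math.exp(-dt / tau_d)   # resources recover toward 1
        u = u + U * (1.0 - u)                             # u+ = u- + U(1 - u-)
        release = u * x                                   # u+ x- (times A gives delta I)
        efficacies.append(release)
        x -= release                                      # x+ = x- - u+ x-
        last_t = t
    return efficacies

train = [50.0 * k for k in range(5)]   # regular 20 Hz spike train, times in ms
std = stp_efficacies(train, U=0.45, tau_f=50.0, tau_d=750.0)   # tau_d >> tau_f, large U
stf = stp_efficacies(train, U=0.15, tau_f=750.0, tau_d=50.0)   # tau_f >> tau_d, small U
print([round(e, 3) for e in std])
print([round(e, 3) for e in stf])
```

The first parameter set yields efficacies that shrink from spike to spike (STD-dominated). The second yields efficacies that grow (STF-dominated).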

STP can change the activity or number of AMPA or NMDA receptors on the postsynaptic membrane according to the history of presynaptic activity, and thereby affect the distribution of potentials on the membrane. STD-dominated synapses are suited to low-frequency signal transmission, whereas STF-dominated synapses are suited to high-frequency transmission. Adding a perturbation term to the firing rate allows the filtering characteristics of dynamic synapses to be derived when the firing rate of the presynaptic population changes arbitrarily with time. These filtering characteristics improve the transmission of neuronal information.

Fig.4. Short-term plasticity and spike-timing-dependent plasticity. (a) The two opposing kinetic processes of STD and STF.[37] (b) The dynamics of an STF-dominated synapse. (c) The dynamics of an STD-dominated synapse. (d) A basic model of STDP and an illustration of the STDP learning window. (e) A simulation of the basic STDP model with 800 excitatory neurons and 200 inhibitory neurons. (f) An illustration of the online implementation of STDP models. Top: a presynaptic spike leaves a trace x_j(t), which is read out (arrow) at the moment of the postsynaptic spike; the weight change is proportional to the value x_j(t_i^post). Bottom: a postsynaptic spike leaves a trace y(t), which is read out (arrow) at the moment of a presynaptic spike.[38]

2.3. STDP

2.3.1. Physiological mechanisms

Spike-timing-dependent plasticity (STDP) is a temporally asymmetric form of Hebbian learning, governed by the tight temporal correlations between the spikes of pre- and postsynaptic neurons. In STDP, if presynaptic pulses repeatedly arrive a few milliseconds before the postsynaptic neuron fires, the synapse undergoes LTP. Conversely, LTD is triggered when presynaptic pulses repeatedly arrive after postsynaptic ones. The change in synaptic strength can be plotted as a function of the time difference between pre- and postsynaptic spikes; this is called the STDP function or learning window (see Fig.4(d)). The function varies with the type of synapse. Its rapid variation with the relative timing of spikes indicates that information may be coded in time on a millisecond scale.

In a typical STDP experiment, short current pulses are injected to stimulate the presynaptic neuron (or presynaptic pathway), and the postsynaptic neuron is made to fire shortly before or after. These pre/post pulse pairs are typically repeated 50-100 times at a fixed frequency. The synaptic weight is measured as the amplitude (or initial slope) of the postsynaptic potential. Its change is plotted as a function of the time between the arrival of the presynaptic spike and the postsynaptic spike (see Fig.4(d)). STDP is considered biologically plausible: in the intact brain, action potentials are usually timed precisely relative to external stimuli, although this does not apply to all brain regions and cell types.[39,40]

2.3.2. Mathematical model

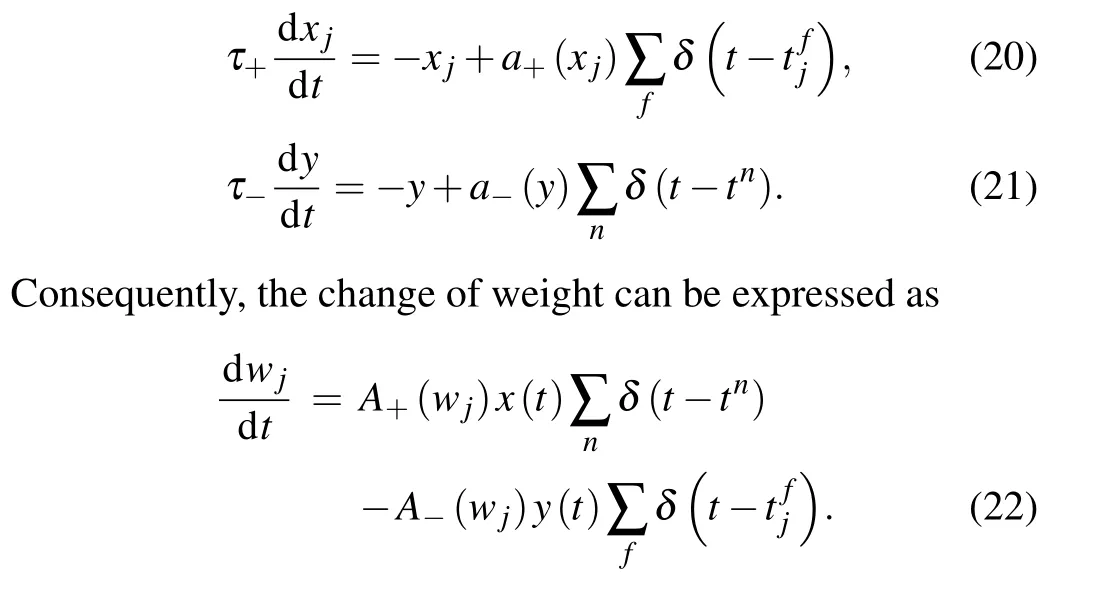

Parameters A+ and A- may depend on the current value of the weight,[39] and the time constant τ is about 10 milliseconds (see Fig.4(e)).
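Below is a hedged sketch of the widely used pair-based exponential learning window. It is an assumed form that may differ in detail from the equation used by the authors: Δw = A+ e^(-Δt/τ) for Δt = t_post - t_pre > 0, and Δw = -A- e^(Δt/τ) for Δt < 0. The value τ = 10 ms follows the text. The A+ and A- values are assumptions and are held constant rather than weight-dependent.

```python
import math

def stdp_window(delta_t, a_plus=0.01, a_minus=0.012, tau=10.0):
    """Pair-based exponential STDP window; delta_t = t_post - t_pre in ms.

    Pre-before-post (delta_t > 0) gives potentiation (LTP);
    post-before-pre (delta_t < 0) gives depression (LTD).
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau)
    return 0.0

for dt in (-20.0, -5.0, 5.0, 20.0):
    print(dt, round(stdp_window(dt), 5))
```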

The STDP function in the equation above can be implemented online under the following assumptions. Each presynaptic spike arrival leaves a trace x_j(t), which is increased by an amount a+(x_j) when the spike arrives and decays exponentially in the absence of spikes. Similarly, each postsynaptic spike leaves a trace y. The postsynaptic trace can represent the voltage of a backpropagating action potential or the calcium influx that it causes.

The weight is therefore increased at the moment a postsynaptic pulse is emitted, by an amount that depends on the presynaptic trace x_j. By the same token, the weight is depressed at the moment of each presynaptic spike, by an amount that depends on the postsynaptic trace y (see Fig.4(f)).
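The online rule can be sketched with two exponentially decaying traces, one on each side of the synapse. In this simplified version, each spike adds 1 to its trace (i.e., a+ is taken as constant rather than a function of x_j), and traces are decayed exactly between events. The names and parameter values are illustrative. For an isolated pre/post pair, the result matches the pair-based exponential window.

```python
import math

def online_stdp(events, w=0.5, a_plus=0.01, a_minus=0.012, tau_pre=10.0, tau_post=10.0):
    """Trace-based (online) STDP for one synapse.

    `events` is a time-sorted list of (time_ms, "pre" | "post") tuples.
    """
    x_pre = y_post = 0.0
    last_t = None
    for t, kind in events:
        if last_t is not None:
            x_pre *= math.exp(-(t - last_t) / tau_pre)    # presynaptic trace decays
            y_post *= math.exp(-(t - last_t) / tau_post)  # postsynaptic trace decays
        if kind == "pre":
            w -= a_minus * y_post   # depression read out from the postsynaptic trace
            x_pre += 1.0
        elif kind == "post":
            w += a_plus * x_pre     # potentiation read out from the presynaptic trace
            y_post += 1.0
        else:
            raise ValueError(f"unknown event type: {kind!r}")
        last_t = t
    return w

print(online_stdp([(10.0, "pre"), (15.0, "post")]))   # pre before post: weight grows
print(online_stdp([(10.0, "post"), (15.0, "pre")]))   # post before pre: weight shrinks
```

The trace formulation needs only two state variables per synapse and local updates at spike times. For that reason it is attractive for hardware, compared with storing and pairing all past spike times.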

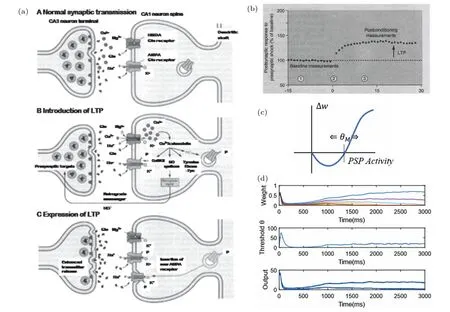

Training, that is, assigning the weights that connect presynaptic and postsynaptic neurons, is essential for constructing SNNs based on synaptic devices. SNN training methods fall into three types: supervised learning, unsupervised learning, and conversion of ANNs into SNN models. (1) Supervised learning: a typical SNN neuron goes through three processes (charging, discharging, and resetting the potential) and releases a pulse when the membrane potential exceeds the threshold. This corresponds to a non-differentiable step function, so the BP algorithm cannot be applied directly to correct the weights. To achieve supervised learning, a sigmoid function is used to approximate the step function so that backpropagation becomes possible. Another path is time-based learning. It replaces the discrete dependence of the potential on time with a continuous function and then differentiates with respect to spike times over the whole spike train to complete backpropagation.[43,44] Both paths rely on gradient-based settings and lack biological plausibility. (2) Unsupervised learning: an algorithm designed to imitate the synaptic plasticity mechanisms of biological neurons can realize unsupervised learning in SNNs.[45,46] Synaptic plasticity here means that the spiking of a pair of pre- and postsynaptic neurons affects the weight of the synapse between them (as in STDP). Plasticity-based algorithms usually use a learning rate A and a time constant τ to characterize the potential information u(t). (3) Conversion from ANN to SNN: Cao et al. proposed tailoring the CNN architecture to meet the requirements of an SNN and training it in the same way as a traditional CNN. The resulting weights of the tailored CNN are then applied to an SNN architecture.[47] A variety of other ANN-to-SNN conversion methods also exist.[48,49] However, converted SNNs often perform poorly during inference, which makes them less desirable.
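The sigmoid-approximation idea can be illustrated with a surrogate gradient. The forward pass keeps the hard threshold, while the backward pass substitutes the derivative of a sigmoid of slope k. The single-neuron, single-time-step task, the slope k = 5, and the learning rate are assumptions for illustration. Practical SNN training unrolls this over many time steps.

```python
import numpy as np

def spike_fn(u, u_th):
    """Forward pass: non-differentiable Heaviside step (spike if u >= u_th)."""
    return (u >= u_th).astype(float)

def surrogate_grad(u, u_th, k=5.0):
    """Backward pass: derivative of a sigmoid of slope k, used instead of the step's derivative."""
    s = 1.0 / (1.0 + np.exp(-k * (u - u_th)))
    return k * s * (1.0 - s)

# Toy task (illustrative): fire for input pattern A, stay silent for pattern B.
X = np.array([[1, 0, 1, 0], [0, 1, 0, 1]], dtype=float)
target = np.array([1.0, 0.0])
w = np.zeros(4)
u_th, lr = 1.0, 0.5

for epoch in range(200):
    u = X @ w                          # membrane potential (single time step)
    out = spike_fn(u, u_th)
    # Chain rule for 0.5 * sum((out - target)**2), with the surrogate replacing d(out)/du.
    grad_w = X.T @ ((out - target) * surrogate_grad(u, u_th))
    w -= lr * grad_w

print(spike_fn(X @ w, u_th), w)
```

The true derivative of the step is zero almost everywhere, which would leave `w` stuck at zero. The surrogate supplies a nonzero gradient that grows as the potential approaches the threshold.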

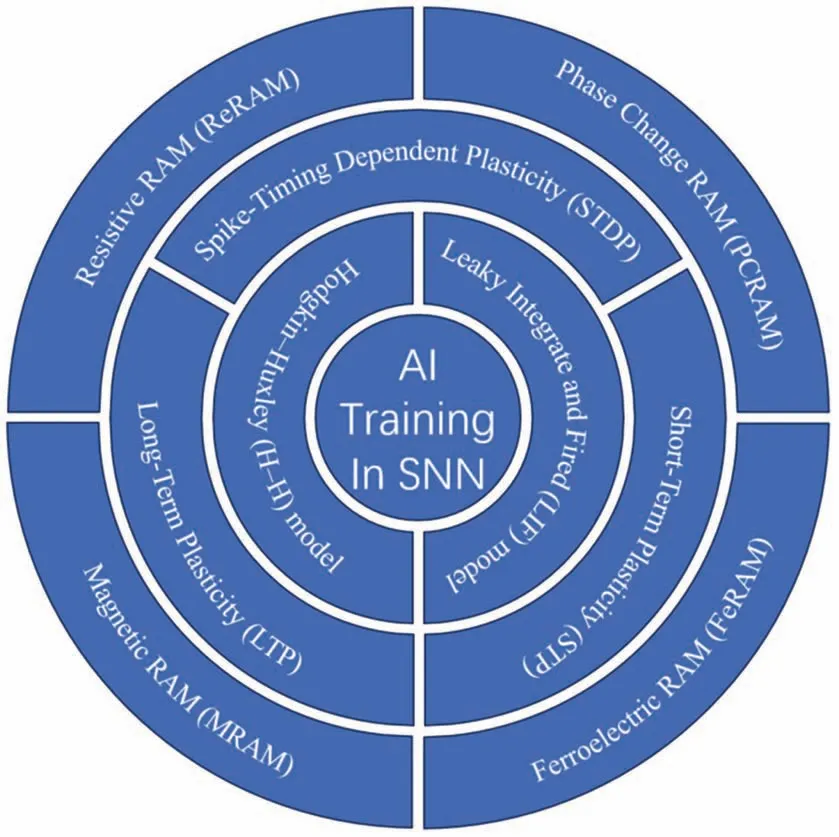

Fig.5. The hardware implementation of the SNN architecture.

The architecture of an SNN is shown in Fig.5. The LIF model, the H-H model, and other models were inspired by biological neural networks, with the aim of achieving neuromorphic computing with high efficiency and low energy consumption. Implementing neuromorphic computing requires neuromorphic devices that mimic synaptic plasticity (such as long-term, short-term, and spike-timing-dependent plasticity). These devices are discussed in the next section.

3. Artificial synapse and neuron device

To realize hardware-based SNNs, it is crucial to develop artificial synapses and neurons. As shown in Fig.6(a), a biological synapse is the connection between two neurons. It stores the synaptic weight and adjusts that weight through synaptic plasticity. The synaptic weight is normally represented as a tunable electrical conductance, whose changes reflect synaptic plasticity. Like a biological synapse, most artificial synapses accept voltage input from presynaptic neurons and deliver current output to postsynaptic neurons. The basic requirement of a synaptic device is therefore that its connection strength, usually expressed as conductance or resistance, can be modulated by external stimuli. Because neuromorphic systems store and compute at the same time, most artificial synapse devices have evolved from memory devices or memory cells. Based on different physical mechanisms, the most promising artificial synapse devices include resistive random access memory (ReRAM), phase change random access memory (PCRAM), ferroelectric random access memory (FeRAM), and magnetic random access memory (MRAM).
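Each synaptic weight is stored as a conductance that turns an input voltage into a current, so an array of synaptic devices computes a vector-matrix product physically. Below is a hedged NumPy sketch of an idealized crossbar read-out. Ohm's law gives each device current, and Kirchhoff's current law sums them along each output column. The conductance and voltage values are illustrative, and non-idealities (wire resistance, device nonlinearity, sneak paths) are ignored.

```python
import numpy as np

def crossbar_currents(G, V):
    """Idealized crossbar read-out.

    G[i, j] is the conductance (S) of the device joining input row i to output column j,
    and V[i] is the read voltage (V) applied to row i. Each device passes G[i, j] * V[i]
    (Ohm's law), and column j collects the sum of its device currents (Kirchhoff's law).
    """
    return G.T @ V

G = np.array([[1e-6, 5e-6],
              [2e-6, 1e-6],
              [4e-6, 3e-6]])      # example conductances in siemens (illustrative)
V = np.array([0.1, 0.2, 0.0])     # read voltages in volts
I = crossbar_currents(G, V)       # column currents in amperes
print(I)
```

Programming a device's conductance is how a synaptic weight update would be realized. Reading the array is a single analog step, regardless of the number of rows.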

Fig. 6. (a) Biological neurons receive input from other neurons through connected synapses.[52] (b) An artificial LIF neuron model with accumulating inputs.[24] (c) An H-H neuron circuit using memristors.[51] (d) Schematic illustration of various artificial neuron devices.[52]

By contrast, a neuron generates a spike signal as its output only when the input transmitted from the synapses exceeds a threshold, as shown in Fig. 6(a). A threshold function judges whether enough signal has been collected from the synapses. Most artificial neurons take the current input from the preceding synapses and produce a voltage output, in the form of spikes, to the following synapses. ReRAM, PCRAM, FeRAM, and MRAM can all switch freely between high and low resistance states, which helps realize the threshold judgement. However, it is difficult to realize the full neuron function in a single device; additional devices or circuits, such as capacitors or reset circuits, are needed to complete it. For example, a threshold switching memristor (TSM) neuron acts as a post-neuron that integrates the signals from multiple inputs via a capacitor.[50] In Fig. 6(b), when the accumulated capacitor potential reaches a certain value, the neuron fires and outputs a spike.[24] An H-H neuron model built with memristors is shown in Fig. 6(c). This circuit can replicate complex neuronal dynamics, such as all-or-nothing spikes, bifurcation thresholds, signal gain, and the refractory period of continuous spiking states.[51] As illustrated in Fig. 6(d), unlike the voltage-input (Vin), current-output (Iout) operation mode of a synapse, artificial neurons follow different operation schemes depending on whether their input and output are voltage or current, because their working principles differ.[52] Considering that a biological neuron receives ions from the synapses and generates a membrane potential in the form of spikes, an artificial neuron device should preferably follow the same operation mode: it receives a current input (Iin) and fires a voltage output (Vout). Notably, many conventional synapses update their weights through analog conductance changes and produce Iout. The Iout collected from the synapses is applied to a neuron as Iin, and the neuron then generates Vout, which becomes the Vin of the next synapses. Conversely, when a neuron device accepts Vin or generates Iout, an additional current-voltage (I-V) converter is needed between the neuron and the synapses because of the reversed operation mode.
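The Iin-to-Vout behavior of a capacitor-based neuron such as the one in Fig. 6(b) can be sketched as a leaky integrate-and-fire model: a capacitor C integrates the summed synaptic current, a leak resistance R discharges it, and a threshold/reset stage emits a voltage spike. This is a generic behavioral model, not the circuit of Refs. [24] or [50]; it uses forward-Euler integration of C dV/dt = Iin − V/R, and all parameter values are hypothetical.

```python
from typing import List

def lif_neuron(i_in: List[float], dt: float = 1e-6, c: float = 1e-9, r: float = 1e5,
               v_th: float = 0.5, v_spike: float = 1.0) -> List[float]:
    """Leaky integrate-and-fire neuron driven by an input current trace.

    i_in    : input current samples Iin (A), e.g. a summed crossbar column current
    dt      : time step (s); c: membrane capacitance (F); r: leak resistance (ohm)
    v_th    : firing threshold on the capacitor voltage (V)
    v_spike : amplitude of the output voltage spike Vout (V)
    Returns the Vout trace: v_spike at firing steps, 0 otherwise.
    """
    v = 0.0
    v_out = []
    for i in i_in:
        v += dt * (i - v / r) / c      # C dV/dt = Iin - V/R (forward Euler)
        if v >= v_th:
            v_out.append(v_spike)      # fire a voltage spike toward the next synapses
            v = 0.0                    # reset the membrane capacitor
        else:
            v_out.append(0.0)
    return v_out

spikes = lif_neuron([10e-6] * 1000)    # 1 ms of constant 10 uA input
rate = sum(1 for x in spikes if x > 0) / 1e-3   # firing rate in Hz
```

With these values the steady-state voltage without firing is Iin·R, so inputs below v_th/R = 5 µA never fire, while larger inputs fire at a rate that grows with the input current; this is the rate-coding behavior the synapse-neuron chain relies on.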

3.1. ReRAM

ReRAM devices, also called memristors, usually have a simple metal/insulator/metal sandwich structure with two terminals (anode and cathode). The insulator layer between the anode and cathode acts as the resistive layer. When an appropriate voltage (a continuous sweep or a pulse) is applied across the electrodes, the conductivity of the resistive layer can change significantly. If the conductance change is retained after the voltage signal is removed, the device is called nonvolatile. In the other case, the resistance changes above a threshold voltage and recovers to its initial value after the voltage is removed; such a device is called volatile, or a threshold switching device. Using this threshold switching, neuronal integrate-and-fire (IF) operation can be achieved. First, when the input is applied in the form of current, charges are integrated in the membrane capacitor because the device is initially in the high resistance state (HRS). The voltage across the threshold switching memristor then rises as charges accumulate in the membrane capacitor. When this voltage reaches the threshold voltage, the device switches to the low resistance state (LRS) and the integrated charges are released, i.e., the neuron fires. This type of LIF operation induces neuronal spiking.[24]
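The threshold-switching integrate-and-fire sequence described above can be sketched with a two-state volatile device placed in parallel with the membrane capacitor. This is a simplified behavioral model under stated assumptions: hypothetical HRS/LRS resistances, a threshold voltage `v_th` at which the device turns on, and a hold voltage `v_hold` below which it spontaneously returns to the HRS. Real threshold switching memristors switch stochastically, which this sketch ignores. The capacitor voltage is updated with the exact exponential solution of each RC segment, which stays stable even when the LRS time constant is short.

```python
import math
from typing import List, Tuple

def ts_memristor_neuron(i_in: float, steps: int, dt: float = 1e-6, c: float = 1e-9,
                        r_hrs: float = 1e7, r_lrs: float = 1e3,
                        v_th: float = 1.0, v_hold: float = 0.2) -> Tuple[List[float], List[int]]:
    """Membrane capacitor in parallel with a volatile threshold-switching device.

    Returns (capacitor voltage trace, step indices at which the neuron fired).
    """
    v, on = 0.0, False
    trace: List[float] = []
    fire_steps: List[int] = []
    for k in range(steps):
        r = r_lrs if on else r_hrs
        v_inf, tau = i_in * r, r * c                   # RC segment: target voltage, time constant
        v = v_inf + (v - v_inf) * math.exp(-dt / tau)  # exact update over one step
        if not on and v >= v_th:
            on = True                  # HRS -> LRS: the stored charge is dumped, a spike
            fire_steps.append(k)
        elif on and v <= v_hold:
            on = False                 # volatile device relaxes back to HRS
        trace.append(v)
    return trace, fire_steps

trace, fires = ts_memristor_neuron(1e-6, 3000)   # 3 ms of constant 1 uA input
```

In the HRS the device barely leaks, so the capacitor charges almost linearly; once the device flips to the LRS, the capacitor discharges within a few microseconds and the device turns itself off, ready for the next integration cycle. An input too weak to lift the capacitor to v_th (here below v_th/r_hrs = 0.1 µA) never fires.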

The “set” operation switches the device from HRS to LRS, and “reset” is the opposite operation. As shown in Fig. 7(a), there are two types of switching characteristics: unipolar and bipolar switching. For unipolar switching, the set and reset operations can be performed with voltage pulses of the same polarity, whereas for bipolar switching the set and reset pulses have opposite polarities.

The mechanism of resistive change is related to the formation and rupture of conductive filaments in the resistive layer, accompanied by the migration of cations or anions. The mechanism can be categorized into the valence change mechanism (VCM), electrochemical metallization (ECM), and the thermochemical mechanism (TCM). As TCM cannot act independently, the following discussion is divided into VCM, ECM, and hybrid mechanisms.

3.1.1. VCM

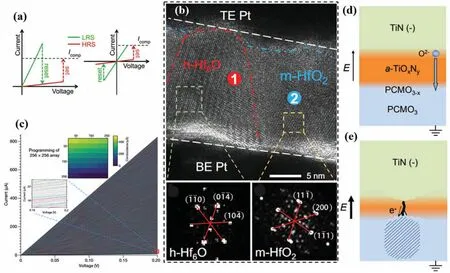

Typically, the resistive layer is a metal oxide film, such as HfO2, Ta2O5, or TiO2. Oxygen atoms can be knocked out of the metal oxide lattice and migrate toward the anode under the electric field induced by the applied voltage. Oxygen anion migration leaves behind oxygen vacancies, which can be viewed as a type of defect. The localized oxygen vacancies lead to the formation of a sub-oxide phase that acts as a conductive filament connecting the anode and cathode, resulting in resistive switching from HRS to LRS. As shown in Fig. 7(b), the conductive filament in an HfO2-based device has a quasi-core-shell structure consisting of a metallic hexagonal-Hf6O phase and its crystalline surroundings (monoclinic or tetragonal HfOx). The metallic hexagonal-Hf6O phase is a typical sub-oxide phase.[53]

To form the conductive filament, enough oxygen ions and oxygen vacancies must be present in the resistive layer. However, the pristine resistive layer, especially in devices fabricated by atomic layer deposition (ALD), contains relatively few defects.[56,57] Therefore, the voltage required for the first resistive switching is usually larger than that of subsequent switching processes, which ensures the migration of enough oxygen ions to form the filament. The switching process from the pristine state to LRS is called forming, while subsequent switching from HRS to LRS is called “set” and the opposite switching is called “reset”. A large forming voltage is undesirable in a practical device, so much effort has been devoted to fabricating “forming-free” devices. The forming voltage has been shown to depend linearly on the thickness of the oxide film;[58] hence, thinner films, e.g., 3 nm, were designed to reduce the forming voltage. In addition, introducing oxygen vacancies into the oxide film by controlling the annealing ambient was also reported to reduce the forming voltage.[59,60]

Fig. 7. VCM devices: (a) typical switching behavior of RRAM; (b) HRTEM image of a complete conductive filament (CF) in an LRS device;[53] (c) 2048 resistance levels of an individual device;[54] (d), (e) schematic models illustrating resistive switching to LRS.[55]

The reset process, switching from LRS to HRS, can be explained by the rupture of the conductive filament. Contrary to the forming or set process, a negative voltage pulse or sweep must be applied to the anode, which drives ions to migrate toward the cathode and neutralize the oxygen vacancies. The sub-oxide phase partially oxidizes, and the conductive filament partially “dissolves” and breaks.[61] The resistance of the device then increases. However, the resistance cannot return to its initial state because the conductive filament cannot be fully dissolved.[62] The remaining defect-rich region, often called the “virtual electrode”, facilitates the formation of conductive filaments in the subsequent set operation.

As mentioned earlier, in SNN applications memristors are required to provide distinct high and low resistance states (for neurons) and stable multiple resistance states (for synapses); the latter are analog devices. Analog behavior is easier to implement with the VCM mechanism, and the multi-resistance-state characteristics are mainly attributed to different numbers or cross-sectional areas of conductive filaments corresponding to different resistance states. Many studies have been carried out to obtain stable and linear analog characteristics.[63-66] A 60 nm thick TaOx thermal enhancement layer was believed to facilitate the formation of multiple conductive filaments, thereby achieving analog resistive switching.[67,68] Recently, 2048 conductance levels in a single memristor were achieved through an electrical operation protocol that denoises the memristors for high-precision operation. The denoising process has been successfully applied to 256×256 crossbars using the on-chip driving circuitry designed for regular reading and programming, without extra hardware.[54]
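Mapping trained weights onto such an analog device amounts to quantizing each weight to one of N conductance levels between a minimum and a maximum conductance; 2048 levels correspond to log2(2048) = 11 bits per device. The sketch below assumes evenly spaced levels and a non-negative weight range, with hypothetical parameter names; real devices have nonuniform, noisy levels, which is what the denoising protocol of Ref. [54] addresses.

```python
import math

def weight_to_conductance(w: float, w_max: float, g_min: float, g_max: float, levels: int) -> float:
    """Map a weight in [0, w_max] to the nearest of `levels` evenly spaced conductances."""
    w = min(max(w, 0.0), w_max)                    # clip to the representable range
    step = (g_max - g_min) / (levels - 1)          # spacing between adjacent levels
    k = round((w / w_max) * (levels - 1))          # index of the nearest level
    return g_min + k * step

bits = math.log2(2048)   # 11.0 bits of weight precision per device

# Worst-case quantization error for weights in [0, 1] mapped onto [0, 1] siemens-equivalent
err_2048 = max(abs(weight_to_conductance(x / 1000, 1.0, 0.0, 1.0, 2048) - x / 1000)
               for x in range(1001))
```

The worst-case error is half a level spacing, so it shrinks in proportion to the number of levels. Signed weights are commonly represented with a differential pair of devices (G+ minus G−), each quantized this way.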

Another type of VCM device has no conductive filament and is based on interfacial redox reactions, which involve oxygen vacancy exchange between two material layers. A typical example is the memristive device based on redox processes at the electrode/oxide interface, which lead to varying thicknesses of the interfacial layer and thus different resistance states. Since such redox processes take place uniformly across the interface, they avoid the stochastic filament formation process and ensure good device-to-device and cycle-to-cycle uniformity. As shown in Figs. 7(d) and 7(e), for a Pr0.7Ca0.3MnO3 (PCMO)/TiN-based device in the high resistance state, a TiOxNy oxide layer is formed at the PCMO/TiN interface, which functions as an electron barrier that limits the current flowing through the device. When a positive voltage is applied to the anode, reduction first occurs inside the TiOxNy layer, reducing its thickness, increasing the device's conductivity to turn it into the low resistance state, and generating mobile oxygen ions in the layer. These oxygen ions then drift from the TiOxNy layer to the PCMO layer under the local electric field and oxidize the PCMO. If a negative voltage is applied to the anode, the reverse redox process takes place, which increases the thickness of the TiOxNy layer and switches the device from LRS to HRS.[55]

3.1.2. ECM

Another primary operation mechanism used for neuromorphic devices is ECM. In devices based on this mechanism, the resistive change is realized by the migration of metal ions and the formation of a metallic conductive filament between the electrodes. Metal ion migration-based devices typically have a two-terminal metal/electrolyte/metal structure. Unlike VCM-based devices, these devices often use active metals as the anode material, or dope them into the electrolyte, to enable metal ion migration under electric-field control. The cathode, in contrast, can still be an inert metal, a conductive nitride, or an oxide. The redox of metal atoms and ions at the electrodes and in the electrolyte is essential for the switching operation.[69,70]

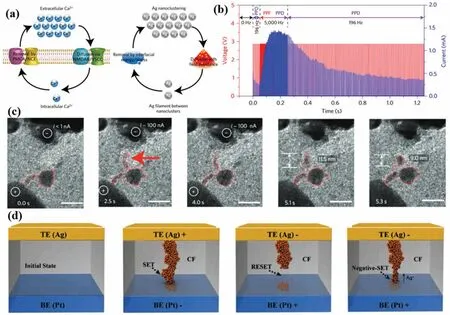

In biological synapses, the accumulation and extrusion of Ca2+ in the pre- and postsynaptic compartments play a critical role in initiating plastic changes, as shown in Fig. 8(a). ECM devices emulate this biological process electronically by using diffusive metal cations (e.g., Ag+ and Cu2+).[20]

In typical ECM devices, a positive voltage is applied to the anode to incorporate cations; this continuously oxidizes the anode surface and provides enough cations to form the conductive filament. At the same time, counter-reactions may occur at the cathode/electrolyte interface to maintain charge neutrality. The cations accumulated at the cathode surface are reduced to metal atoms, which grow into larger clusters. Under the control of the local electric field, the cations migrate and gradually form the conductive filament. As shown in Fig. 8(c), the evolution of the conductive filament has been observed under different biases at different times. Spontaneous rupture of the conductive filament and a subsequent clustering process were also observed, which lead to current decay in the electrical response of the ECM device.[71] Based on such ion dynamics, Wang et al. demonstrated a diffusive memristor that undergoes the same set process from high to low resistance. However, after the set voltage is removed, the device spontaneously relaxes back to a high-resistance state, realizing volatility, namely short-term plasticity for neuromorphic computing. As shown in Fig. 8(b), prolonged or excessive stimulation with high-frequency pulses eventually causes the ECM device to change from facilitation to depression, an effect induced solely by an increased number of stimulation pulses at the same frequency, similar to biological synapses.[20]
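The short-term plasticity of a diffusive memristor can be caricatured as a conductance that jumps by a fixed increment at each pulse and relaxes back toward its resting value with a time constant τ between pulses. Under that assumption (hypothetical increment, resting conductance, τ, and pulse timing), closely spaced pulses accumulate (facilitation) while widely spaced ones do not. The facilitation-to-depression inflection of Fig. 8(b) is not modeled here.

```python
import math
from typing import List

def stp_trace(pulse_steps: List[int], n_steps: int, dt: float = 1e-4,
              g_rest: float = 1e-9, dg: float = 1e-6, tau: float = 5e-3) -> List[float]:
    """Conductance trace (S) of a volatile (diffusive) memristor.

    Each pulse, given as a step index, adds dg (filament growth); between pulses
    the conductance relaxes exponentially back to g_rest with time constant tau (s).
    """
    pulse_set = set(pulse_steps)
    decay = math.exp(-dt / tau)
    g, trace = g_rest, []
    for k in range(n_steps):
        if k in pulse_set:
            g += dg                              # pulse-induced potentiation
        trace.append(g)
        g = g_rest + (g - g_rest) * decay        # spontaneous relaxation (volatility)
    return trace

fast = stp_trace([0, 10, 20, 30, 40], 600)       # 1 ms pulse spacing, tau = 5 ms
slow = stp_trace([0, 100, 200, 300, 400], 600)   # 10 ms pulse spacing
```

With 1 ms spacing the peak conductance reaches roughly 3.5 increments, whereas with 10 ms spacing each pulse has almost fully decayed before the next one arrives, so the peak stays close to a single increment. The pulse frequency, not just the pulse count, therefore sets the synaptic response.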

Generally, the operation of ECM devices also requires a forming process to incorporate metal ions into the electrolyte layer, a set process to drive metal ion migration and form the conductive filament, which switches the device from HRS to LRS, and a reset process to dissolve the filament and switch the device from LRS to HRS.

Fig. 8. ECM devices. (a) Schematic illustration of the analogy between biological Ca2+ and biomimetic Ag+ dynamics.[20] (b) Short-term synaptic plasticity of the ECM device.[20] (c) In situ HR-TEM observation of the Ag diffusion process.[20] (d) Schematic illustrations of the switching mechanism of the ECM device.[71]

In practical operation, negative-set processes may occur. As shown in Fig. 8(d), when a higher negative voltage is applied, the Ag precipitated in the bottom electrode, which acts as a local anode, is oxidized and supplies Ag into the electrolyte.[71] Most of the Ag moves to the tip of the residual filament, where the electric field in the electrolyte layer is maximal. When enough Ag is reduced to Ag atoms in the gap region, the filament reconnects to the bottom electrode and the device switches to LRS again. This is the negative-set process. Both the negative-set and reset processes increase the instability of the ECM cell and can lead to computational failures.

In addition, the key benefits of memristors are their compactness, with a 4F² footprint, and their low operating voltage. These characteristics reduce the hardware area and the power required by the neuromorphic system. Such memristor-based synapses and neurons can be monolithically integrated over pre-existing CMOS circuitry using a back-end-of-line (BEOL) approach. However, this can place a heavy burden on the back-end-of-line process and prolong the turnaround time owing to the increased number of fabrication steps compared with co-integration on a coplanar wafer surface.

3.2. PCRAM

PCRAM devices have a high resistance state and a low resistance state. The set and reset processes in PCRAM devices are based on the crystallization and amorphization of the phase change material. As shown in Fig. 9(a),[72] the set process is realized by applying a low but long voltage pulse: nanocrystals grow gradually until the amorphous region is fully transformed into polycrystalline material, so the device switches gradually from HRS to LRS. During the reset process, a high but short voltage pulse is applied, melting part of the phase change material. The melted material cools down very quickly, forming an amorphous region. This amorphous region has a lower free-carrier density, which results in the transition from LRS to HRS. Stochastic spiking characteristics can also be achieved with this working mechanism. Although a PCRAM neuron has a low operating voltage and a high operating frequency, it requires additional circuits to detect the resistance and provide the appropriate reset pulse.
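The accumulative SET together with the external detection and RESET circuitry can be sketched as a phase-change integrate-and-fire neuron. Each input pulse is treated as a partial SET that moves the conductance a fixed fraction `alpha` toward its crystalline maximum (a saturating-growth assumption); a hypothetical comparator fires when the conductance crosses `g_th`, after which a RESET pulse re-amorphizes the cell. Pulse-to-pulse variability, the origin of stochastic firing, is represented by an optional Gaussian factor of hypothetical width `noise`. All values are illustrative.

```python
import random
from typing import List, Optional

def pcm_neuron(n_pulses: int, g_min: float = 0.1e-6, g_max: float = 20e-6,
               alpha: float = 0.15, g_th: float = 10e-6, noise: float = 0.0,
               rng: Optional[random.Random] = None) -> List[int]:
    """Phase-change integrate-and-fire neuron.

    Returns the indices of the input pulses that triggered an output spike.
    """
    rng = rng or random.Random(0)
    g, spikes = g_min, []
    for k in range(n_pulses):
        step = alpha * (g_max - g)                   # partial SET: gradual crystallization
        if noise:
            step *= max(0.0, 1.0 + rng.gauss(0.0, noise))   # cycle-to-cycle variability
        g += step
        if g >= g_th:                                # external detection circuit
            spikes.append(k)
            g = g_min                                # RESET: melt-quench back to amorphous
    return spikes

print(pcm_neuron(20))   # [4, 9, 14, 19]: one spike every 5 input pulses
```

Without noise the neuron fires deterministically every fifth pulse; with `noise > 0` the inter-spike interval fluctuates, which is one simple way to picture the stochastic firing that PCRAM neurons can exhibit.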

Fig. 9. PCRAM. (a) Schematic illustration of resistive switching in a PCM device.[72] (b) Schematic band structure of GST.[73] (c) Model configurations demonstrating the atomic rearrangements during the phase transition.[75] (d) Crystallization process of SST with a crystalline embryo in the middle at ~600 K.[76] (e) 40 voltage pulses of 0.8 V and 100 ns applied for the cumulative SET.[77] (f) HR-TEM images of the Sb2Te3/TiTe2 phase-change heterostructure before and after irradiation with strong electron beams for 5 min.[77]

Ge2Sb2Te5 (GST) is a typical phase change material; its electronic conduction in both the crystalline and amorphous states is illustrated in Fig. 9(b).[73] In both cases, the Fermi level lies close to the valence band and the conduction is p-type. There is a valence band discontinuity between crystalline and amorphous GST. Structural vacancies in the crystal give rise to acceptor-like traps, while a pronounced valence band tail and a high density of donor/acceptor defect pairs appear in amorphous GST.[73,74] The microscopic origin of crystallization in GST has been reported:[75] fourfold rings of Ge inside GST accelerate nucleation and lead to the relatively high crystallization speed, as shown in Fig. 9(c). Pre-existing nuclei inside the amorphous region of GST allow the incubation process to be bypassed, ensuring sub-ns crystallization speed.

Besides GST, other materials were explored for faster and more stable crystallization. The scandium antimony telluride (Sc0.2Sb2Te3, SST) compound was designed to allow a writing speed of only 700 ps without preprogramming in a large conventional PCM device.[76] This ultrafast crystallization stems from the reduced stochasticity of nucleation enabled by geometrically matched and robust scandium telluride (ScTe) chemical bonds, which stabilize crystal precursors in the amorphous state. In density-functional molecular dynamics (DFMD) simulations, a crystalline precursor made of ScTe cubes (~50 atoms in a four-by-four-by-four SST supercell of 428 atoms) remains robust against thermal fluctuations at ~600 K without any artificial constraint, serving as the center for subsequent crystallization (Fig. 9(d)).

A new phase-change heterostructure consisting of alternately stacked phase-change and confinement nanolayers suppresses noise and drift, allowing reliable iterative RESET and cumulative SET operations for high-performance neuro-inspired computing, as shown in Fig. 9(e).[77] To observe the phase change evolution in the W/Sb2Te3/TiN device, it was characterized by HR-TEM under intense electron-beam irradiation. The HR-TEM results in Fig. 9(f) reveal distinguishable crystalline and amorphous regions; that is, the conductance of the W/Sb2Te3/TiN phase change device was reconfigured by electron-beam irradiation, and crystal growth in the amorphous region was governed by the irradiation time. The resistance of the W/Sb2Te3/TiN memristor as a function of the number of 0.8 V, 100 ns bias pulses showed a gradual SET behavior, whose dynamic process is diagrammed in Fig. 9(e). The iterative RESET and gradual SET of the W/Sb2Te3/TiN device demonstrate that its information storage is nonvolatile, making it a desirable emerging device for neuromorphic computing.

PCRAM is a promising candidate for neuromorphic computing owing to its technological maturity, excellent stability, low operating power, and good scalability. Intel's 3D XPoint memory, a PCRAM with a double-stacked storage-selector memory cell based on GST materials, demonstrated the technical feasibility of large-scale integration.

3.3. FeRAM

A ferroelectric tunnel junction (FTJ) consists of two electrodes separated by an ultrathin ferroelectric tunnel barrier about 3-4 nm thick, thin enough to allow electrons to tunnel through yet thick enough to retain its ferroelectricity. Reversal of the spontaneous polarization in the barrier, combined with the different charge-screening characteristics of the two electrodes made of different metals, alters the internal electric field profile across the FTJ and consequently changes the tunneling current. As shown in Fig. 10(a),[78] assuming the initial electronic potential barrier is rectangular, polarization charges induce an asymmetric modulation of the electronic potential profile. This asymmetry results in a higher average barrier height (Φ) when the polarization points to the left (Φ+) than when it points to the right (Φ−). Since the tunneling transmission depends exponentially on the square root of the barrier height, the junction resistance depends strongly on the polarization direction. The asymmetry between the two ferroelectric/electrode interfaces is thus essential for modulating the current transmission through the ferroelectric barrier.
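To see why a modest change in barrier height produces a large resistance contrast, one can use the standard WKB estimate for a rectangular barrier of height Φ and width d, T ∝ exp(−2d√(2mΦ)/ħ). The sketch below compares Φ+ and Φ− for a 3 nm barrier. The barrier heights are hypothetical, the free-electron mass is assumed, and image-charge and bias effects are ignored, so the result is only an order-of-magnitude illustration.

```python
import math

M_E = 9.1093837e-31       # electron rest mass (kg), free-electron assumption
HBAR = 1.054571817e-34    # reduced Planck constant (J s)
Q_E = 1.602176634e-19     # elementary charge (C), converts eV to J

def wkb_transmission(phi_ev: float, d_m: float) -> float:
    """WKB transmission through a rectangular barrier of height phi (eV) and width d (m)."""
    kappa = math.sqrt(2.0 * M_E * phi_ev * Q_E) / HBAR   # decay constant (1/m)
    return math.exp(-2.0 * kappa * d_m)

def ter_ratio(phi_plus_ev: float, phi_minus_ev: float, d_m: float) -> float:
    """R(Phi+) / R(Phi-): junction resistance scales as 1 / transmission."""
    return wkb_transmission(phi_minus_ev, d_m) / wkb_transmission(phi_plus_ev, d_m)

# Hypothetical average barrier heights for the two polarization directions
ratio = ter_ratio(0.5, 0.3, 3e-9)   # about 1.3e2 for a 3 nm barrier
```

Because the barrier width multiplies the square-root term in the exponent, the same polarization-induced change in Φ gives a larger resistance ratio in a thicker barrier, at the cost of a smaller absolute current.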

Ferroelectric materials such as BiFeO3 and (Ba,Sr)TiO3, together with the electrode material (La,Sr)MnO3, have been extensively studied for ferroelectric switching mechanisms and applications. As shown in Fig. 10(b),[76] experimental results demonstrated that the resistance change of the BaTiO3/La0.67Sr0.33MnO3 (LSMO) junction was dominated by the fraction of domains with a specific polarization direction in the tunneling layer.[81] For BiFeO3, combining scanning probe imaging (Fig. 10(c)), electrical transport measurements, and atomic-scale molecular dynamics, the conductance variations can be modeled by nucleation-dominated domain reversal. The 3D polarization configuration of the center-type domain structure in a BiFeO3 nano-island is diagrammed in Fig. 10(d), where the colored arrows denote the different polarization vectors.

Square BiFeO3 nano-islands were fabricated to further study the polarization direction and domain wall (DW) switching behavior; BiFeO3 (001) thin films self-assemble into ordered nano-islands. The STEM image in Fig. 10(e) also reveals that a BiFeO3 nano-island with a vertical 71° DW has a head-to-head (H-H) polarization configuration, where CDW denotes the charged domain wall. The CDW, with a width of ~3-5 nm, is considerably wider than neutral DWs. Low- and high-conductance states of the BiFeO3 nano-island were obtained after applying a bias voltage of 1.5 V. Switching between the two conductance states is very stable over 100 cycles, and a resistance ratio of >10³ is well maintained. Thus, crossbar arrays based on BiFeO3 nano-islands with nonvolatile data storage can be used as synaptic devices.[79]

A ferroelectric neuron was also demonstrated with a leaky FeFET.[80] In contrast to the nonvolatile devices used as artificial synapses, the leaky FeFET exploits accelerated polarization degradation in a leaky ferroelectric hafnium zirconium oxide (HfZrOx) layer, which makes it suitable for neuron devices with leaky characteristics. Voltage pulses applied to the gate of a leaky FeFET change the polarization of the ferroelectric layer, and a drain current flows in the form of Iout once a certain number of pulses has been applied. When no voltage pulse is applied (the resting state), Iout decreases naturally owing to the rapid polarization degradation in the leaky ferroelectric layer. Therefore, the device can act as an LIF neuron in an SNN.

Fig. 10. FeRAM. (a) Band diagrams and schematics of an FTJ in the ON, intermediate, and OFF states.[78] (b) Resistance versus the relative fraction of downward polarization domains for the BaTiO3/La0.67Sr0.33MnO3 (LSMO) junction.[76] (c) In-plane PFM image (left) and annular dark-field image (right) showing a square-shaped BiFeO3 (BFO) nano-island containing four sections.[79] (d) Schematic of the 3D polarization configuration of the center-type domain structure in the BFO nano-island.[79] (e) HAADF STEM image of the H-H DW region.[79]

HfZrOx is a commonly used material for ferroelectric devices and is highly compatible with conventional CMOS technology, because HfO2 has been widely used as a high-k gate dielectric in current CMOS processes. Hence, it is considered a promising candidate for future large-scale SNNs.

3.4. MRAM

Devices based on ferromagnetic materials have been systematically studied and show excellent potential for artificial neuromorphic applications. As synaptic devices, MRAMs offer relatively good read and write performance, with high speed (~ns), low energy consumption (~pJ), and excellent endurance (>10⁸ cycles). When input pulses are applied, spike trains can be generated with a bias-dependent duty cycle, allowing the device to behave as a stochastic neuron in an SNN. Among MRAMs, spin-transfer torque (STT) magnetic tunnel junctions (MTJs) and spin-orbit torque (SOT) devices have attracted significant attention over the past few decades as promising candidates for neuromorphic computing.
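The bias-dependent duty cycle that lets an MTJ act as a stochastic neuron is often abstracted as a switching probability that rises smoothly with the input. The sketch below assumes a sigmoidal probability with a hypothetical midpoint `i_50` and width `s`, draws one Bernoulli spike per time step, and shows that the measured firing fraction tracks the input bias. It is a behavioral abstraction, not a magnetization-dynamics model.

```python
import math
import random
from typing import List

def switch_probability(i_in: float, i_50: float = 50e-6, s: float = 5e-6) -> float:
    """Assumed sigmoidal probability (per time step) that the free layer switches, i.e. a spike."""
    x = (i_in - i_50) / s
    # Numerically stable logistic function
    return 1.0 / (1.0 + math.exp(-x)) if x >= 0 else math.exp(x) / (1.0 + math.exp(x))

def stochastic_spike_train(i_in: float, n_steps: int, seed: int = 0) -> List[int]:
    """One Bernoulli trial per step; 1 marks a spike."""
    rng = random.Random(seed)
    p = switch_probability(i_in)
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]

duty = {}
for bias in (40e-6, 50e-6, 60e-6):
    train = stochastic_spike_train(bias, 10000)
    duty[bias] = sum(train) / len(train)   # roughly 0.12, 0.50, 0.88
```

A population of such neurons encodes an analog input as a firing probability, which is why this kind of stochasticity can be a resource rather than only a source of noise.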

3.4.1. STT

A unit MTJ has two metallic ferromagnetic layers separated by a tunnel barrier, as shown in Fig. 11(a).[78] The tunnel barrier is a thin insulating layer. The conductance of the MTJ is determined by the relative magnetization directions of the two ferromagnetic layers. The thicker ferromagnetic layer serves as the fixed layer, also termed the pinned layer (PL), whose magnetization is pinned in a particular direction. The other, thinner layer is the free layer (FL), whose magnetization direction can be changed by current injection. Importantly, intermediate resistance states between the parallel and antiparallel magnetization configurations can be obtained for the free layer with injected current. The parallel configuration offers a higher probability of electron tunneling through the barrier of the MTJ than the antiparallel configuration. Thus, two distinct resistance states, the HRS for the antiparallel and the LRS for the parallel configuration, are observed in STT devices.[84]
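The contrast between the two resistance states is usually quoted as the tunneling magnetoresistance ratio, TMR = (R_AP − R_P)/R_P. As a swapped-in textbook estimate not used in the text, Julliere's model relates it to the spin polarizations P1 and P2 of the two ferromagnetic layers as TMR = 2P1P2/(1 − P1P2); it is only a rough guide for crystalline MgO barriers, where coherent tunneling yields much larger values. The numbers below are hypothetical.

```python
def tmr_from_resistance(r_p: float, r_ap: float) -> float:
    """Tunneling magnetoresistance ratio from parallel (LRS) and antiparallel (HRS) resistances."""
    return (r_ap - r_p) / r_p

def tmr_julliere(p1: float, p2: float) -> float:
    """Julliere estimate of TMR from the spin polarizations of the two ferromagnets."""
    return 2.0 * p1 * p2 / (1.0 - p1 * p2)

print(tmr_from_resistance(2e3, 5e3))   # 1.5, i.e. 150 %
print(tmr_julliere(0.5, 0.5))          # about 0.667, i.e. about 67 %
```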

The ferromagnetic fixed layer is magnetized along a defined direction m̂. Electrons injected through the PL become spin-polarized along m̂. If the density of injected electrons is too low to switch the magnetization of the FL, the MTJ remains in the HRS. If it is high, a large number of electrons spin-polarized along m̂ tunnel through the oxide insulator layer, and a torque arises from the misalignment between m̂ and the FL magnetization. In this case, the FL magnetization gradually rotates toward m̂. These intermediate conductance states of the MTJ are useful for neural networks and analog computing. When the FL magnetization becomes aligned with m̂, i.e., in the parallel configuration, the injected electrons tunnel freely through the MTJ and the device switches from HRS to LRS.[85]

The tunnel barrier is an insulator about 1 nm thick made of crystalline MgO, which is the most suitable tunnel barrier material to date.[86,87] CoFeB alloys are the state-of-the-art ferromagnets employed in MTJs.[88] In these devices, the CoFeB free layer must be thinner than about 1.5 nm to favor out-of-plane orientation of its magnetization.

Although the read power consumption of an MTJ can be extremely low, almost zero, the write power is much higher, bringing the energy consumption up to ~100 fJ/spike, which offers no significant advantage over other neuromorphic devices. Hence, many efforts have been made to reduce the relatively large write power.

An electric field can modify the exchange coupling between two ferromagnetic layers through a nonmagnetic spacer, which enables magnetization switching without an external magnetic field. As shown in Fig. 11(c), electric-field-assisted reversible switching in CoFeB/MgO/CoFeB MTJs can be controlled by voltage pulses with much smaller current densities.[82]

3.4.2. SOT

SOT-induced magnetization switching is promising for realizing ultrafast and reliable spintronic devices. Typical SOT devices employ a nonmagnet/ferromagnet (NM/FM) bilayer. The spin Hall effect (SHE) and the Rashba effect, which originate from spin-orbit coupling within the NM layer and at the FM/NM interface, respectively, are responsible for the switching. Insulator-ferromagnet-heavy metal (I-FM-HM) multilayer structures, shown in Fig. 11(b), have much greater spin injection efficiencies owing to strong spin-orbit interaction. When a charge current flows through the underlying HM, an SOT is generated at the FM-HM interface.[78] Although SOT can be attributed to these two possible origins, the SHE is usually the dominant underlying physical phenomenon. As the charge current flows through the HM, electrons with opposite spins are scattered toward the top and bottom surfaces of the HM, with the spin polarization orthogonal to both the charge current and the injected spin current. These electrons repeatedly undergo spin scattering while traveling through the HM, transferring multiple units of spin angular momentum to the FM on top. The magnitude of the injected spin current density ($J_s$) is proportional to the magnitude of the input charge current density ($J_q$), $J_s = \theta_{SH} J_q$, with the proportionality factor defined as the spin Hall angle ($\theta_{SH} < 1$). Hence, the conversion from input charge current to spin current is governed by the following relation:

$$ I_s = \theta_{SH}\,\frac{W_{FM}}{t_{HM}}\,I_q $$

where $I_s$ and $I_q$ are the injected spin current and the input charge current, respectively, $W_{FM}$ is the width of the FM on top of the HM, and $t_{HM}$ is the HM thickness. By ensuring $W_{FM} \gg t_{HM}$ (more precisely, $W_{FM}/t_{HM} > 1/\theta_{SH}$), spin injection efficiencies greater than 100% ($I_s > I_q$) can be achieved.
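Plugging numbers into the charge-to-spin conversion $I_s = \theta_{SH}(W_{FM}/t_{HM})I_q$ shows why the geometry matters. The values below are hypothetical (θ_SH = 0.3, W_FM = 60 nm, t_HM = 3 nm, I_q = 100 µA); the sketch simply evaluates the relation and the minimum aspect ratio 1/θ_SH needed for I_s > I_q.

```python
def spin_current(i_q: float, theta_sh: float, w_fm: float, t_hm: float) -> float:
    """Injected spin current I_s = theta_SH * (W_FM / t_HM) * I_q (all currents in A, lengths in m)."""
    return theta_sh * (w_fm / t_hm) * i_q

i_q = 100e-6
i_s = spin_current(i_q, 0.3, 60e-9, 3e-9)   # 0.3 * 20 * 100 uA = 600 uA
efficiency = i_s / i_q                      # about 6, i.e. ~600 % spin injection efficiency
min_aspect = 1 / 0.3                        # W_FM / t_HM must exceed ~3.3 for I_s > I_q
```

The gain comes purely from geometry: the spin current is collected over the whole FM footprint, while the charge current only passes through the thin HM cross-section.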

This mechanism has been suggested to induce switching of the perpendicular magnetic component within in-plane CoFeB/Ti/perpendicular CoFeB trilayers (Fig. 11(d)).[83] Meanwhile, when additional crystal or magnetic symmetry breaking is introduced, spins with perpendicular and longitudinal polarization can also be generated, e.g., in low-symmetry crystals, in noncollinear antiferromagnetic crystals with magnetic asymmetry or the magnetic SHE, and in some collinear antiferromagnetic crystals through spin conversion or the spin splitting effect.

In addition, because MRAM must be fabricated in the back-end-of-line, it adds fabrication complexity there and prolongs the turnaround time owing to the increased number of fabrication steps.

4. In-sensor computing

With the rapid development of information technology, the volume of data has grown explosively, placing higher demands on computing power. The demand for applying AI in various fields is also increasing, especially for complex perception- and decision-related tasks, which require more complex and efficient computational models. However, as Moore's law gradually fails, more efficient computing architectures must be proposed. Biological neural systems, with their powerful parallel computing and adaptive learning capabilities, can process large numbers of complex and changing environmental signals quickly and in real time with low energy consumption and high computing power, which makes neuromorphic computing a possible solution for the development of AI.

Inspired by biological neural systems, in-sensor computing architectures have been proposed and artificial neurons have been successfully fabricated. Researchers have successfully emulated the biological senses through which most information is acquired, including touch, smell, hearing, and vision, to process complex signals.

In 2017, Shulaker et al. from Stanford University fabricated a 3D vertically stacked chip that interconnects sensors and processing circuits in the vertical direction, integrating sensing, storage, and computing in a single chip. The vertical interconnections shorten the data transfer distance and enable in-situ data processing, and the chip ultimately succeeded in distinguishing nitrogen from six types of vapors: lemon juice, white vinegar, rubbing alcohol, vodka, wine, and beer.[89] In 2012, Hsieh et al. from National Tsing Hua University, Taiwan, China developed a low-power SNN chip with an odor recognition function, which used circuits to emulate the functions of relevant cells and structures in the olfactory system, such as mitral cells, STDP synapses, sebaceous cells, etc. The chip was fabricated in TSMC's 0.18-µm CMOS process with a chip area of 1.78 mm². Its average power consumption was 3.6 µW, and its test accuracy was 87.59%.[90]

In 2017, TermehYousefi et al. at Kyushu Institute of Technology, Japan, fabricated a hemispherical artificial fingertip based on nanocomposites to enhance the tactile sensing system of a humanoid robot. Ripe and unripe tomatoes were classified by recording their metabolic growth as a function of resistivity change under a controlled indentation force.[91] In 2018, Yeongin et al. from Stanford University simulated tactile perception using flexible electronics. A ring oscillator converts pressure information (1–80 kPa) into action potentials (0–100 Hz), and the layered structure of the device detects the movement of an object. Combined with analysis of the pressure signals, the system could distinguish Braille characters.[92]
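The pressure-to-spike conversion performed by the ring oscillator can be pictured as a rate encoder. The sketch below is a minimal illustration and not the device model from [92]. It assumes a linear mapping from the stated 1–80 kPa range onto 0–100 Hz and a perfectly periodic spike train. The names `pressure_to_rate` and `spike_train` are hypothetical.

```python
def pressure_to_rate(p_kpa, p_min=1.0, p_max=80.0, f_max=100.0):
    """Map pressure (kPa) to a firing rate (Hz).

    Assumption: a linear response over the 1-80 kPa sensing range; the
    real oscillator response need not be linear.
    """
    p = min(max(p_kpa, p_min), p_max)  # clamp to the sensing range
    return f_max * (p - p_min) / (p_max - p_min)


def spike_train(rate_hz, duration_s):
    """Spike times (s) of a periodic train, as an oscillator would emit."""
    if rate_hz <= 0:
        return []
    period = 1.0 / rate_hz
    n_spikes = int(duration_s * rate_hz + 1e-9)  # guard against float round-off
    return [k * period for k in range(1, n_spikes + 1)]


# A light touch fires slowly, a firm press fires quickly.
for p in (1.0, 20.0, 80.0):
    rate = pressure_to_rate(p)
    print(f"{p:5.1f} kPa -> {rate:6.2f} Hz, {len(spike_train(rate, 1.0))} spikes in 1 s")
```

Because the information is carried by spike rate, an array of such encoders (one per tactile element) yields spike trains that a downstream SNN could classify, for instance as Braille dot patterns.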

In 2018, Wang et al. reported a nanoscale device that exhibits synaptic plasticity and proposed a brain-inspired spatiotemporal algorithm that exploits the sensitivity of SNNs to the arrival time of spikes. With it, they successfully simulated sound azimuth detection, emulating biological hearing.[93] In 2018, Wang et al. from Zhengzhou University proposed a novel developmental network (DN) that simulates the human auditory system and constructed an artificial auditory model for speech recognition. The highest recognition rate for spoken English words reached 93.60%.[94]
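Spike-timing-based azimuth detection is easiest to see in the classic Jeffress coincidence-detection model. This model is substituted here purely for illustration and is not the algorithm of [93]. A source off to one side reaches the nearer ear first, and the interaural time difference (ITD) is roughly d·sin θ / c. A bank of detectors, each with its own internal delay, reports the angle whose delay makes the two spikes coincide. The 0.2 m sensor spacing, the 5° angular grid, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 °C
MIC_SPACING = 0.2       # m, hypothetical distance between the two "ears"


def itd(azimuth_deg):
    """Interaural time difference t_left - t_right (s) for a far-field source.

    Azimuth is 0 straight ahead and positive toward the right ear, so a
    source on the right reaches the right ear first (positive ITD).
    """
    return MIC_SPACING * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND


def estimate_azimuth(t_left, t_right, step_deg=5):
    """Jeffress-style coincidence detection over a bank of delay lines.

    Each candidate azimuth owns a detector that delays the right-ear spike
    by that azimuth's ITD; the detector whose delayed spike coincides best
    with the left-ear spike wins.
    """
    best_angle, best_err = None, float("inf")
    for angle in range(-90, 91, step_deg):
        err = abs((t_right + itd(angle)) - t_left)
        if err < best_err:
            best_angle, best_err = angle, err
    return best_angle


# A source 30 degrees to the right: its spike reaches the right ear first.
t_right = 0.0
t_left = itd(30)
print(estimate_azimuth(t_left, t_right))  # -> 30
```

Because sin θ flattens near ±90°, equal timing errors translate into larger angle errors at the sides. Biological and SNN-based localizers share this limitation.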

In 2021, Tan et al. from Aalto University, Finland introduced a bio-inspired spiking multisensory neural network that integrates artificial vision, touch, hearing, and simulated olfaction and gustation, with cross-modal learning via artificial neural networks. In its artificial olfactory and gustatory system, olfaction and taste are simulated by nine (ethereal, aromatic, sweet, spicy, oily, burnt, sulfuric, rancid, metallic) and five (sweet, sour, salty, bitter, umami) receptor potentials, respectively. Spike encoders convert the analog voltages into optical spikes, and phototransistors decode and memorize the sensory information.[95]

4.1.Human vision system structure

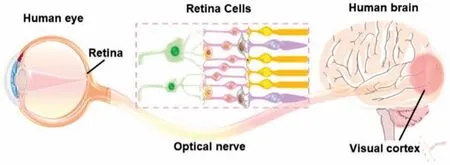

About 80% of the information the human body acquires comes through the visual system, so the importance of vision cannot be overstated. The biological visual system consists of the retina, the optic nerve, and the brain. Rod and cone cells in the retina capture visual information and convert light signals into electrical signals. The optic nerve then transmits these electrical signals to the brain, which acts as the information processor and performs classification, recognition, learning, and memory. Through selective attention, the brain processes information efficiently.

As mentioned above, the retina plays a vital role in vision. When light arrives, photoreceptors in the retina are activated and generate an electrical signal, which is conveyed to the optic nerve and then to the brain, as shown in Fig.12. These functions are carried out by specific cells in the retina. Cone cells sense light and distinguish color. According to their sensitive wavelengths, they can be broadly divided into three categories: red, green, and blue. Rod cells are sensitive to dim light, and even a single photon can excite them. Horizontal cells play a connecting role: they adjust signal brightness and enhance the outlines at the edges of the visual image. Bipolar cells convert sustained graded potentials into transient neural activity. Ganglion cells encode visual information and transmit it as electrical impulses.
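Edge enhancement by horizontal cells is often described as lateral (surround) inhibition, in which each point is suppressed in proportion to the activity of its neighbors. The one-dimensional sketch below is a crude discrete stand-in, not a biophysical retina model. The inhibition gain `k` and the function name are hypothetical.

```python
def lateral_inhibition(signal, k=0.5):
    """Subtract a fraction k of the mean of each sample's two neighbours.

    A crude stand-in for horizontal-cell surround inhibition; the ends are
    padded by repeating the boundary sample.
    """
    n = len(signal)
    out = []
    for i in range(n):
        left = signal[max(i - 1, 0)]
        right = signal[min(i + 1, n - 1)]
        out.append(signal[i] - k * (left + right) / 2)
    return out


# A luminance step from dark (1) to bright (5).
step = [1, 1, 1, 1, 5, 5, 5, 5]
print(lateral_inhibition(step))
# -> [0.5, 0.5, 0.5, -0.5, 3.5, 2.5, 2.5, 2.5]
```

Uniform regions are flattened to the same level. The dark side of the edge dips and the bright side overshoots, which exaggerates the outline, in the spirit of the edge enhancement attributed to horizontal cells above.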

Fig.12.Human vision system.[96]

Within the cerebral cortex, the visual cortex is primarily responsible for processing visual information, and it contains five critical areas. V1 processes visual features such as direction, motion, spatial frequency, binocular disparity, and color. V2 performs slightly more complex modulation of position, spatial frequency, color, etc., as well as moderate modulation of complex shapes. V3 is the second most important motion-processing area and handles motion across the entire lateral field of view. V4 handles simple geometric shapes but not complex shapes such as faces. V5 processes complex visual motion stimuli, tracking the complex motion of individual points in the visual field caused by movement relative to the environment.

4.2.Sensor pre-processing structure

Part of the information is pre-processed in the retina and then transmitted to the brain via the optic nerve, as shown in Fig.13. In this way, the human eye accomplishes complex tasks such as recognition and classification efficiently and with low energy consumption. Inspired by this, researchers have moved part of the data preprocessing into the sensor unit. The pre-processed data are then passed over a bus to a back-end processor, which performs advanced functions such as the recognition and classification of images and audio.

In 2018, Li et al. designed a one-transistor-one-memristor (1T1R) structure and formed a 128×64 array that achieves analog signal and image processing.[97] In 2019, Chu et al. used 2D integration based on a CMOS image sensor to assemble sensing, storage, and computing functions on the same PCB. The system simulates LIF neurons and realizes simple image recognition with SNNs.[98] In 2022, Valenzuela proposed a smart image sensor with in-pixel memory based on a 0.35-µm CMOS process, achieving object localization.[99]
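The LIF neurons emulated by Chu et al. [98] follow a simple rule. The membrane potential leaks toward rest, integrates the input current, and fires and resets when it crosses a threshold. The forward-Euler sketch below uses normalized, hypothetical parameters (time constant, threshold, resistance) rather than the circuit values of [98]. It shows how a brighter pixel, modeled as a larger input current, produces a higher spike rate.

```python
def simulate_lif(current, dt=1e-3, tau=20e-3, r=1.0,
                 v_rest=0.0, v_th=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron integrated with forward Euler.

    Dynamics: tau * dV/dt = -(V - v_rest) + r * I(t). When V reaches v_th
    the neuron emits a spike and V is reset to v_reset.
    `current` holds one input value per time step; returns spike step indices.
    """
    v = v_rest
    spikes = []
    for step, i_in in enumerate(current):
        v += dt / tau * (-(v - v_rest) + r * i_in)
        if v >= v_th:
            spikes.append(step)
            v = v_reset
    return spikes


# Brighter pixels -> larger input current -> higher spike rate.
for pixel_current in (0.9, 1.5, 3.0):
    n = len(simulate_lif([pixel_current] * 1000))  # 1 s at dt = 1 ms
    print(f"I = {pixel_current}: {n} spikes")
```

An input whose steady-state potential r·I stays below the threshold (here 0.9) never fires. This thresholding is what lets a rate-coded SNN ignore weak background signals.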

The architectures above, which process each detector unit's data with its own logic circuit, share a common problem: larger pixels and a smaller fill factor, which seriously limit the resolution of the imaging chip. Worse, the more complex the functions to be accomplished, the more components the logic circuits contain, and the more limited the resolution becomes. To solve this problem, and to further reduce the power and latency caused by transmitting large amounts of data, three-dimensional integrated vision chip architectures have been proposed as an alternative to the two-dimensional planar architecture.
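The trade-off between in-pixel logic, fill factor, and resolution is simple arithmetic. Fill factor is the light-sensitive area divided by the total pixel area. For a fixed array size, a larger pixel pitch means fewer pixels. The photodiode area, logic areas, and 5 mm array side below are hypothetical numbers chosen only to show the trend, and the periphery circuitry is ignored.

```python
import math


def fill_factor(pd_area_um2, logic_area_um2):
    """Fraction of a pixel's area that is light-sensitive."""
    return pd_area_um2 / (pd_area_um2 + logic_area_um2)


def pixels_per_side(array_side_um, pd_area_um2, logic_area_um2):
    """Square pixels that fit along one side of a square array (no periphery)."""
    pitch_um = math.sqrt(pd_area_um2 + logic_area_um2)
    return int(array_side_um // pitch_um)


# Hypothetical 25 um^2 photodiode in a 5 mm x 5 mm array, with growing in-pixel logic.
for logic in (0, 75, 300):
    ff = fill_factor(25, logic)
    side = pixels_per_side(5000, 25, logic)
    print(f"logic {logic:3d} um^2: fill factor {ff:.2f}, {side} x {side} pixels")
```

Adding 75 µm² of logic per pixel already cuts the fill factor to 0.25 and halves the pixel count per side. Stacking the logic on a separate tier under the photodiode, as in 3D integration, removes that term from the pixel pitch, provided the logic fits within the pixel footprint.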

Fig.13.Retina-inspired sensor preprocessing structures.

In 2018, Nose et al. developed a high-speed vision chip using 3D stacking technology and combined it with a column-parallel processing architecture, realizing target localization with high sensitivity, high resolution, and high accuracy.[100] In 2017, Shulaker et al. used three-dimensional integration to realize an integrated sensing-storage-computing chip consisting of more than one million resistive random-access memory cells and more than two million carbon nanotube field-effect transistors, capable of large-scale parallel, in-situ data processing.[101] In 2022, Hirata et al. designed a back-illuminated stacked sensor that achieves a frame rate of 1000 fps at a resolution of 4K×4K.[102]

Although 3D stacking greatly reduces the data transmission distance and improves the transmission bandwidth, bandwidth still limits data transport, creating the so-called “transport wall”, because the architecture itself has not been fundamentally changed. 3D stacking also complicates the manufacturing process.
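A back-of-the-envelope calculation shows why frame-based readout runs into the transport wall even with 3D stacking. It uses the 4K×4K, 1000 fps figures quoted for Hirata et al. [102]. Reading "4K" as 4096 pixels and assuming a 10-bit ADC are assumptions made here, not values taken from [102].

```python
def raw_data_rate_gbps(width, height, fps, bits_per_pixel):
    """Uncompressed pixel data rate (Gbit/s) leaving the sensor."""
    return width * height * fps * bits_per_pixel / 1e9


# 4K x 4K read as 4096 x 4096 and a 10-bit ADC are assumptions, not values from [102].
rate = raw_data_rate_gbps(4096, 4096, 1000, 10)
print(f"{rate:.1f} Gbit/s")  # -> 167.8 Gbit/s
```

Every one of those bits must be moved each frame, whether or not the scene changed. This redundancy is the motivation for sensing only changes, in the way the retina does.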

4.3.Integrated sensing–memory–processing structure

Based on the analysis above, a new architecture that is closer to the operating mode of the human eye is needed to further increase speed and reduce energy consumption. Notably, the human eye processes pulsed signals, and every cell in the retina is capable of both data acquisition and processing. Moreover, data transport in the retina is highly parallel. This highly parallel, in-situ working mode, which senses changes in the scene, has two significant advantages: high speed and low energy consumption. A human eye-inspired imaging chip structure is shown in Fig.14.[103]
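The change-sensing working mode can be illustrated with the well-known dynamic vision sensor (DVS) principle. This technique is named here for illustration and is not the chip of [103]. Each pixel remembers its last log intensity and emits an event only when the change exceeds a contrast threshold, so static pixels produce no data. The threshold value and function name are hypothetical.

```python
import math


def events_from_frames(frames, threshold=0.2):
    """Convert intensity frames into change events, DVS-style.

    frames: list of frames, each a list of positive pixel intensities.
    A pixel emits (t, x, polarity) only when its log intensity has moved by
    at least `threshold` since its last event; unchanged pixels stay silent.
    """
    ref = [math.log(v) for v in frames[0]]  # per-pixel reference level
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for x, v in enumerate(frame):
            delta = math.log(v) - ref[x]
            if abs(delta) >= threshold:
                events.append((t, x, 1 if delta > 0 else -1))
                ref[x] = math.log(v)
    return events


# A bright spot moving right across a 4-pixel row over 3 frames.
frames = [[10, 100, 10, 10],
          [10, 10, 100, 10],
          [10, 10, 10, 100]]
print(events_from_frames(frames))
# -> [(1, 1, -1), (1, 2, 1), (2, 2, -1), (2, 3, 1)]
```

The two frame transitions produce 4 events instead of 8 pixel readouts, and a completely static scene produces none. The saving grows with array size and scene stillness.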

Fig.14.Retina-inspired integrated sensing-memory-processing structure.[103]

In 2021, inspired by biological eyes, Meng et al. fabricated an artificial retina based on 2D Janus MoSSe, which simulates visual perception functions through electronic/ionic and optical co-modulation. Synaptic weight changes are realized through the formation of a faradic electric double layer (EDL) at the metal-oxide/electrolyte interface, and the 2D MoSSe-based device integrates sensing, memory, and processing of visual information.[96] In 2022, Yu et al. fabricated programmable ferroelectric bionic vision hardware with selective attention from quantum dots (QDs) and ferroelectric material, achieving high-precision image classification.[104] In 2022, Lee et al. demonstrated a heterogeneously integrated 1-photodiode-1-memristor (1P-1R) crossbar for in-sensor visual cognitive processing. The trained weight values are applied as input voltages to the crossbar array in which the image is stored, realizing the in-sensor computing paradigm.[105] In 2018, Seo et al. demonstrated an optic-neural synaptic device by implementing synaptic and optical-sensing functions together on an h-BN/WSe₂ heterostructure. Arranged in an optic-neural network, the device mimics the colored and color-mixed pattern recognition capabilities of the human vision system.[106] In 2023, Wang et al. presented a retina-inspired mid-infrared (MIR) optoelectronic device based on a two-dimensional (2D) heterostructure for simultaneous data perception and encoding.[103] In 2017, Wang et al. used the dispersion and aggregation behavior of silver atoms to mimic the influx and extrusion of Ca²⁺, thus simulating the behavior of biological synapses.[20] In 2023, Tan et al. used a photoresistor array to process input pulse signals and achieve dynamic vision. This in-sensor dynamic processing eliminates redundant data streams and enables real-time perception of moving objects in dynamic machine vision.[107]

A general trend is that researchers are now focusing on photonic synaptic devices, attempting to advance retinal simulation by emulating synaptic properties. Metal oxides, sulfide heterojunctions, two-dimensional materials, and similar materials are widely used to realize photonic synaptic devices because of their great potential. These devices successfully model the short-term and long-term plasticity of synapses, achieving effective modulation of optical signals and real-time image processing. However, some drawbacks remain, such as limited perception of wavelengths outside the visible range and high energy consumption caused by the large light intensity required.[108,109,111-116] A more specific performance comparison of the AI vision chips mentioned above is shown in Table 1.
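The in-sensor computation in the 1P-1R crossbar of Lee et al. [105] rests on the generic crossbar principle. Each cell passes a current V·G (Ohm's law), and each column wire sums its cells' currents (Kirchhoff's current law), so the column outputs form a vector-matrix product. The sketch below is idealized. The row/column layout (rows as pixels, columns as stored images), the conductance and voltage values, and the function name are illustrative assumptions, not the configuration of [105].

```python
def crossbar_currents(voltages, conductances):
    """Column currents of an ideal crossbar array.

    Each cell passes I = V * G (Ohm's law) and each column wire sums its
    cells' currents (Kirchhoff's current law): I_j = sum_i V_i * G[i][j].
    Wire resistance, sneak paths and device nonlinearity are ignored.
    """
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances))
            for j in range(n_cols)]


# Rows = 4 pixels, columns = 2 stored images (conductance in uS, hypothetical).
stored = [[1.0, 0.0],
          [0.0, 1.0],
          [1.0, 0.0],
          [0.0, 1.0]]
weights_as_volts = [0.2, 0.0, 0.2, 0.0]  # trained weights applied as row voltages (V)
print(crossbar_currents(weights_as_volts, stored))  # column currents in uA -> [0.4, 0.0]
```

The whole multiply-accumulate happens in one analog step where the image is stored, which is why no image data needs to be shipped to a separate processor.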

The comparison in the table shows that current AI vision chips generally suffer from low sensitivity and require substantial incident optical power to respond. Improving sensor sensitivity is therefore particularly important for improving AI vision chip performance. Among the many candidates, the single-photon avalanche diode (SPAD) stands out for its excellent weak-light detection capability. A SPAD can detect optical signals at the single-photon level and can also process pulsed signals. These features are well suited to neuromorphic computing and important for developing high-performance AI vision chips.

Table 1.AI vision chip performance comparison.

4.4.Single-photon avalanche diode device

An avalanche detector is good at detecting weak light signals because of its internal gain mechanism. Detectors operating in Geiger mode, in particular, can achieve single-photon-level detection with an internal gain of up to 10⁶. This result makes the goal of single-photon imaging appear achievable. SPAD arrays can operate with a high degree of parallelism, overcoming the slow readout speed of CCDs. If high-quality, large-scale SPAD arrays can be fabricated, artificial intelligence imaging systems based on them could make a significant breakthrough in faint-light imaging, which is of great significance for surpassing the limits of the human eye.
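Two quick calculations make the Geiger-mode argument concrete. First, a gain of 10⁶ turns one photoelectron into roughly 10⁶ carriers, about 0.16 pC, which is an easily detected pulse. Second, for Poisson-distributed photon arrivals with mean μ and photon detection efficiency η, the probability of at least one avalanche is 1 − exp(−ημ). The sketch below assumes ideal conditions (no dark counts, no dead time). The efficiency value of 0.3 and the function names are hypothetical.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # C, exact in the 2019 SI


def avalanche_charge(gain):
    """Charge (C) delivered by one avalanche with the given internal gain."""
    return gain * ELEMENTARY_CHARGE


def detection_probability(mean_photons, pde):
    """P(at least one avalanche) for Poisson photon arrivals.

    pde: photon detection efficiency (0-1). Dark counts and dead time are ignored.
    """
    return 1.0 - math.exp(-pde * mean_photons)


print(f"charge per avalanche at gain 1e6: {avalanche_charge(1e6):.3e} C")
for mu in (0.1, 1.0, 10.0):
    print(f"mean {mu:4.1f} photons -> P(detect) = {detection_probability(mu, 0.3):.3f}")
```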

A SPAD operates above its breakdown voltage, where the depletion region sustains a strong electric field (on the order of hundreds of kV/cm). When a photon hits the detector, the photoelectric effect generates an electron-hole pair. The electron (hole) is accelerated by the high electric field, gains enough energy, and collides with the lattice to create a new electron-hole pair. This chain reaction, known as impact ionization, repeats continuously, so the number of carriers grows exponentially until the current becomes macroscopically detectable. This is the multiplication process. However, the avalanche is self-sustaining and cannot quench itself. A continued high current through the depletion region of the PN junction will damage the detector. In addition, a detector in the self-sustaining state cannot sense newly incident photons. A quenching mechanism must therefore be introduced to terminate the multiplication and return the detector to its initial state. Because of this avalanche-and-quench mode of operation, a SPAD outputs a pulsed signal regardless of whether the input signal is pulsed. The time required for quenching and recovery is not negligible, which poses a challenge for high-speed imaging, so efficient quenching mechanisms are needed to improve the detector response speed.
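The cost of the quench-and-recovery time can be estimated with the two standard dead-time models from particle counting. These models are used here as illustrative assumptions and are not taken from this section's references. In the non-paralyzable model, photons arriving during the dead time are simply lost, and the count rate saturates at 1/τ. In the paralyzable model, such arrivals restart the dead time, and the count rate eventually collapses. Which model fits better depends on the quenching and recharge circuit. The 50 ns dead time below is hypothetical.

```python
import math


def nonparalyzable_rate(true_rate_hz, dead_time_s):
    """Counts/s when each avalanche blinds the SPAD for a fixed dead time;
    photons arriving during the dead time are simply lost."""
    return true_rate_hz / (1.0 + true_rate_hz * dead_time_s)


def paralyzable_rate(true_rate_hz, dead_time_s):
    """Counts/s when a photon arriving during the dead time restarts it."""
    return true_rate_hz * math.exp(-true_rate_hz * dead_time_s)


dead_time = 50e-9  # s, hypothetical quench + recharge time
for n in (1e5, 1e6, 1e7, 1e8):
    print(f"true {n:.0e}/s -> non-paralyzable {nonparalyzable_rate(n, dead_time):.3e}/s, "
          f"paralyzable {paralyzable_rate(n, dead_time):.3e}/s")
```

With τ = 50 ns, no pixel can report more than 2×10⁷ counts/s. Shortening the quench-and-recovery time directly raises this ceiling.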

Another effect that can trigger an avalanche is known as after-pulsing. When an avalanche occurs, the PN junction is flooded with charge carriers, and trap levels between the valence and conduction bands become occupied to a degree much greater than expected for a thermal-equilibrium distribution of charge carriers. After the SPAD has been quenched, there is some probability that a carrier in a trap level receives enough energy to be released from the trap and promoted to the conduction band, triggering a new avalanche. Thus, depending on the quality of the process and the exact layers and implants used to fabricate the SPAD, a significant number of extra pulses can arise from a single originating thermal or photo-generation event. The degree of after-pulsing can be quantified by measuring the autocorrelation of the arrival times between avalanches in a dark-count measurement. Thermal generation produces Poissonian statistics with an impulse-function autocorrelation, whereas after-pulsing produces non-Poissonian statistics.
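The difference between Poissonian dark counts and after-pulsing can be reproduced in a small Monte Carlo simulation. The statistic used is a simplified proxy for the autocorrelation measurement: the fraction of inter-avalanche intervals shorter than a window Δt, compared with the Poisson expectation 1 − exp(−rΔt). The model is hypothetical. It assumes that every avalanche releases a trapped carrier with probability `p_after` after an exponentially distributed delay, and it ignores dead time. All parameter values and function names are assumptions.

```python
import math
import random


def simulate_avalanches(duration_s, dark_rate_hz, p_after, trap_lifetime_s, seed=0):
    """Avalanche times (s): Poisson dark counts plus trap-induced after-pulses.

    After every avalanche, with probability p_after a trapped carrier is
    released after an exponentially distributed delay and triggers another
    avalanche (which may itself after-pulse). Dead time is ignored.
    """
    rng = random.Random(seed)
    times = []
    t = rng.expovariate(dark_rate_hz)
    while t < duration_s:
        times.append(t)
        t_ap = t
        while rng.random() < p_after:  # cascade of after-pulses
            t_ap += rng.expovariate(1.0 / trap_lifetime_s)
            times.append(t_ap)
        t += rng.expovariate(dark_rate_hz)
    return sorted(x for x in times if x < duration_s)


def short_interval_fraction(times, window_s):
    """Fraction of successive inter-avalanche intervals shorter than window_s."""
    gaps = [b - a for a, b in zip(times, times[1:])]
    return sum(g < window_s for g in gaps) / len(gaps)


rate, window = 1e3, 5e-6  # hypothetical 1 kHz dark-count rate, 5 us window
poisson_expectation = 1.0 - math.exp(-rate * window)
for p in (0.0, 0.3):
    times = simulate_avalanches(10.0, rate, p, trap_lifetime_s=1e-6)
    print(f"p_after={p}: short-interval fraction {short_interval_fraction(times, window):.4f} "
          f"(Poisson expectation {poisson_expectation:.4f})")
```

With `p_after=0` the measured fraction stays close to the Poisson value of about 0.005. With after-pulsing, a large excess of short intervals appears, which is the non-Poissonian signature described above.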