A Novel Clutter Suppression Algorithm for Low-Slow-Small Targets Detecting Based on Sparse Adaptive Filtering

2024-03-18ZeqiYangShuaiMaNingLiuKaiChangXiaodeLyu

Zeqi Yang, Shuai Ma, Ning Liu, Kai Chang, Xiaode Lyu

Abstract: Passive detection of low-slow-small (LSS) targets is easily interfered by direct signal and multipath clutter, and the traditional clutter suppression method has the contradiction between step size and convergence rate.In this paper, a frequency domain clutter suppression algorithm based on sparse adaptive filtering is proposed.The pulse compression operation between the error signal and the input reference signal is added to the cost function as a sparsity constraint, and the criterion for filter weight updating is improved to obtain a purer echo signal.At the same time, the step size and penalty factor are brought into the adaptive iteration process, and the input data is used to drive the adaptive changes of parameters such as step size.The proposed algorithm has a small amount of calculation, which improves the robustness to parameters such as step size, reduces the weight error of the filter and has a good clutter suppression performance.

Keywords: passive radar; interference suppression; sparse representation; adaptive filtering

1 Introduction

In recent years, the rapid development of small aircraft, such as unmanned aerial vehicles, has brought potential threats to the surveillance of low-altitude areas and air traffic safety, and the research on the detecting, tracking and positioning system of low-slow-small (LSS) targets has gradually become a hot topic [1].The echoes of LSS targets are vulnerable to ground clutter and multipath interference due to their low flying altitude, slow flying speed and small radar cross section (RCS), and the Doppler frequency is small, and the target echo is weak, so it is necessary to detect weak targets in strong clutter environment.It is of great significance to develop the passive detection technology for the efficient detection of LSS targets [2].Passive radar usually uses the third-party non-cooperative radiation sources such as digital television (TV) signals and communication base station signals to detect targets.Illuminators of opportunity are various and widely distributed, which has the advantages of anti-interference, low cost and no occupation of spectrum resources.It is an effective method to detect low-altitude targets by using illuminators of opportunity [3,4].

In the passive radar detection of LSS targets, due to the complex low-altitude environment and the small RCS of targets, the echo of LSS targets is weak, and it is more vulnerable to interference from ground buildings.The echo of coherent targets is covered by direct signal and multipath clutter, which makes it difficult to detect targets.Therefore, clutter suppression before target detection is an important means to improve the detection performance of the system[5].The commonly used clutter suppression algorithm is mainly adaptive filtering algorithm, and the adaptive filter achieves the best filtering performance by iteratively adjusting the coefficients of the filter.Widrow et al.[6] proposed an adaptive filter represented by least mean square(LMS), which suppresses the clutter signal in the received signal through multiple iterations, with slow convergence rate and poor clutter suppression performance; The extensive cancellation algorithm (ECA) and its improved algorithm proposed in [7,8] complete the interference suppression of direct signal by taking the interference signal as an orthogonal projection matrix,which has high computational complexity and is not conducive to practical application.Reference[9] adopts recursive least square (RLS) algorithm, and its convergence rate is restricted by forgetting factor.The frequency-domain block least mean square filter (FBLMS) [10,11] has the characteristics of fast convergence and real-time processing, and has been widely used in clutter suppression of passive radar.However, the traditional LMS algorithm has the contradiction between step size and convergence rate.To solve this problem, many improved adaptive filtering algorithms have been proposed.For example,variable step size LMS algorithm (VSS-LMS).Previous scholars focused on using the error signal in the adaptive process, trying to adjust the step size by using the relationship between the error signal and the step size [12].In recent years, Baydin et al.[13,14] introduced the idea of super-optimization to adjust the step size parameters, optimized the step size while optimizing the model parameters, and incorporated the optimization of the step size parameters into the filtering operation.This method improved the convergence rate and increased the robustness of the algorithm to the selection of initial super-parameters.At the same time, compared with the traditional adaptive filtering algorithm, it does not need additional gradient calculation, and the calculation and storage efficiency are higher.Rubio et al.[15] analyzes the convergence of the supergradient descent algorithm, and the simulation proves its advantages in practice.

In many application scenarios, the radar echo signal is sparse in a certain transform domain.In recent years, inspired by the sparse signal processing theory of compressive sensing, a series of penalty LMS algorithms have appeared,which use norm to add sparsity constraints to the criterion function of updating filter weight coefficients, so that the filter coefficients approach zero and improve the convergence rate of the algorithms, such as ZA-LMS [16], RZA-LMS [17],l0-LMS [18] and so on.This kind of algorithm is affected by random gradient noise in the process of convergence, so fast convergence and small steady-state error cannot be achieved at the same time.When the adaptive process convergences,the algorithm exerts too much attraction on the small coefficient near the zero point, which leads to the increase of the misalignment error.

In this paper, an LSS passive detection system based on sparse adaptive filtering is proposed.Firstly, a hypergradient descentl0-LMS adaptive filtering model with sparse representation is established.Based on the sparsity of the distance dimension of the target after matched filtering, the results of pulse compression operation between reference signal and error signal in iterative process is constrained by sparsity to construct a new cost function.In the iteration, it improves the filter’s weight updating criterion by restricting the sparsity of the target distance dimension, and obtains a purer echo signal.At the same time, the step parameters and balance factors are brought into the adaptive loop process, and the input data is used to drive the adaptive changes of parameters such as step size.Its convergence and steady-state error are analyzed theoretically.Compared with the traditional clutter suppression algorithm, the improved algorithm with sparse super-optimization idea has better clutter suppression performance and stronger robustness to step parameters and input data.Then the range and velocity information of the target is estimated by piecewise range Doppler processing [19,20].

2 Algorithm

2.1 Adaptive Filtering Algorithm for Sparse Representation

The echo signal model received by the surveillance channel of passive radar is defined as

wheres(t) is a direct signal;Ad,Ai,Birepresent signal amplitude;τiandτdirepresent multipath delay and target delay respectively;M1andM2represent multipath number and target number respectively;fdirepresents Doppler frequency of target;ns(t) represents zero mean noise, and is independent of the signal.

The signal received by the reference channel is defined as

whereArrepresents signal amplitude;nr(t) represents zero mean noise of the reference channel.

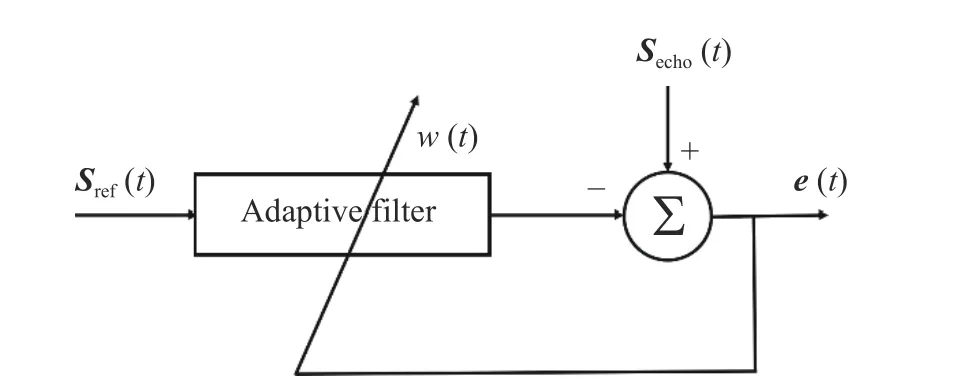

Direct signal and multipath clutter in echo signals are clutter signals to be cancelled.Passive detection systems based on illuminators of opportunity usually use pulse compression operation to coherently accumulate the echo signals received by the main channel and the direct signal received by the auxiliary channel.The target echo signals obtained after correlation processing are only distributed in a few distance points under ideal conditions, which is sparse.However,the signal intensity of interference such as direct signal and multipath clutter in the echo signal received by the main channel is much greater than the echo signal of the target, so it is necessary to suppress clutter first and establish an adaptive filtering algorithm model with sparse representation.Adaptive filter is shown in Fig.1.

Fig.1 Schematic diagram of adaptive filter

Let the input vector of the filter beS(t)=[Sref(t),Sref(t-1),···,Sref(t-M+1)]Tand the tap weight vector beWˆ (t)=[wˆ0(t),wˆ1(t),···,wˆM-1(t)]T.Mis the filter order andtis the response time.The output of the adaptive filter is

In the echo signal, the interference of direct signal and multipath clutter with strong amplitude will affect the detection of LSS targets.Ideally, the pulse compression result |R(τ)| of signale(t) and reference signalSref(t) after the direct signal and multipath clutter are filtered by adaptive filtering in the echo signal has a nonzero value only where the target exists, which is sparse.

whereTis the length of the integration time, *indicates the complex conjugate form of the signal, andτis the time-delay variable in the function.The time-domain data after coherent accumulation of the two signals are constrained by sparsity, and the cost function of the filter is constructed.The cost function of the adaptive clutter suppression algorithm defined in this paper is

whereris the penalty factor,‖*‖0is thel0norm, andnis the discrete time.

l0norm is the number of non-zero elements in the vector.l0norm makes the values of most mutual ambiguity functions converge to zero in the iterative process, thus ensuring the sparsity of the solution.Its numerical solution is NP-hard problem, andl0norm is not derivable.Reference[16] gives the approximate value ofl0norm of a typical sparse system, namely,

whereRi(n) is thei-th pulse compression result attdiscrete time,βis the expansion coefficient.

The approximate value ofl0norm is brought into the cost function of this paper, and it is concluded that

Derivation ofwis

where

Letfβ(Ri(n))be

Update criteria for obtaining weights is

whereµis the step size parameter,κ=µ×ris the balance factor between the constraint term and the estimation error.

By adding sparsity constraint, the adaptive filter can suppress clutter components better.The algorithm is affected by random gradient noise in the convergence process, which makes it impossible to possess both fast convergence and small steady-state error.The convergence rate is related to the step size and the eigenvalue of the input data correlation matrix.When the adaptive process convergences, the algorithm exerts too much attraction on the small coefficient near the zero point, which leads to the increase of the misalignment error.Therefore, based on the idea of super-optimization, this paper iteratively updates the step size parameterµand the penalty factorrin the adaptive loop, and brings the step size and the penalty factor into the filtering operation to obtain information from the data, thus adjusting the super-parameters.Namely,

By adjusting the step size and penalty factor, the convergence rate is not affected by the initial value of step size.And this process can exploit the results of the last iteration without increasing the amount of calculation.If the initial value of the step size is large, the algorithm is iteratively updated with a larger step size to improve the convergence rate of the algorithm.When the step size is close to the steady state,the step size update criterion keeps the step size smaller, and the penalty factor update criterion reduces the constraint on the weight vector near the steady state, thus maintaining a smaller steady-state error.If the initial step size is small,the algorithm will adaptively adjust to the appropriate step size for iteration.

The updated formula of step size parameter and penalty factor is as follows

And weight vector updating formula

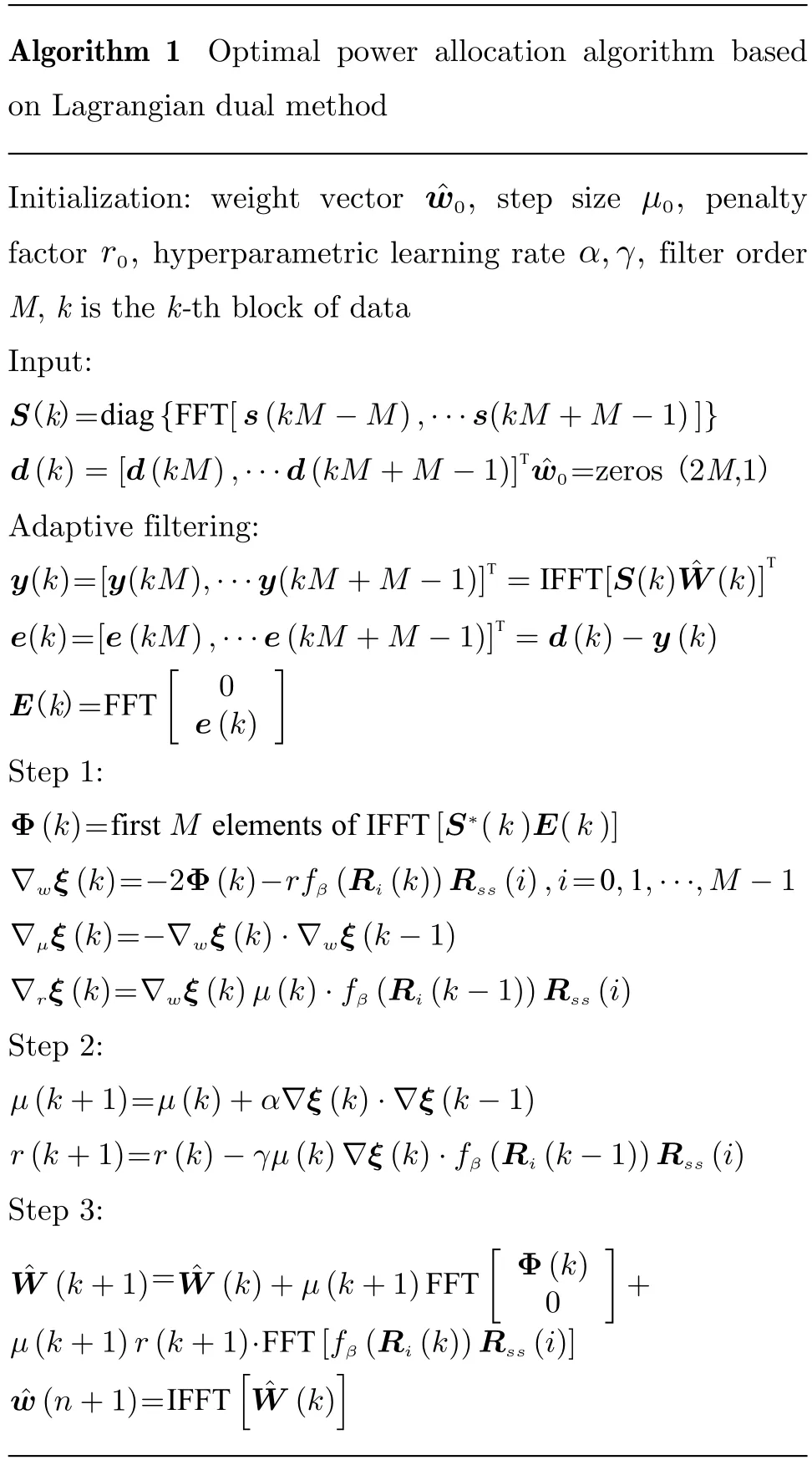

In order to reduce the calculation amount of the proposed algorithm, this paper adopts the method of fast calculation in frequency domain,blocks the data and calculates it by fast Fourier transform (FFT).The specific calculation flow is shown as follows.

Algorithm 1 Optimal power allocation algorithm based on Lagrangian dual method ˆw0, µ0,r0, α,γ,Initialization: weight vector step size penalty factor hyperparametric learning rate filter order M, k is the k-th block of data Input:diag{FFT[s(kM -M),···s(kM +M -1)]}S(k)=d(k)=[d(kM),···d(kM +M -1)]T ˆw0=zeros (2M,1)Adaptive filtering:y(k) [y(kM),···y(kM +M -1)]T =IFFT[S(k) ˆW(k)]T=e(k) [e(kM),···e(kM +M -1)]T =d(k)-y(k)=]FFT[0 e(k)E(k)=Step 1:Φ(k) first M elements of IFFT[S*(k)E(k)]=∇wξ(k) -2Φ(k)-rfβ(Ri(k))Rss(i),i=0,1,···,M -1=∇µξ(k) -∇wξ(k)·∇wξ(k-1)=∇rξ(k) ∇wξ(k)µ(k)·fβ(Ri(k-1))Rss(i)=Step 2:µ(k+1) µ(k)+α∇ξ(k)·∇ξ(k-1)=r(k+1) r(k)-γµ(k)∇ξ(k)·fβ(Ri(k-1))Rss(i)=Step 3:ˆW (k+1) ˆW (k)+µ(k+1)FFT]=[Φ(k)0+µ(k+1)r(k+1) FFT[fβ(Ri(k))Rss(i)]·ˆw(n+1) IFFT[ˆW (k)]=

2.2 Convergence Analysis

Letε(n)=wopt-wˆ(n),woptis the optimal weight vector of adaptive filtering, then

When the step size is small, the solution ofε(n+1)can be replaced by the solution after the expectation of the above formula.

Sincefβ(Ri(n))Rss(i) is bounded, the unitary similarity transformation is applied to the correlation matrixR:QHRQ=Λ.WhereQis a unitary matrix, its columns are eigenvectors related to the eigenvalues ofR, and Λ is a diagonal matrix composed of eigenvalues.

Assuming that the step size is very small,the instantaneous value can be used instead of the set average value.

Thus, the convergence condition can be obtained

2.3 Steady State Error Analysis

Su et al.[21] put forward two indexes to evaluate the steady-state performance.

1) Instantaneous mean square deviation(MSD): defined as the square of 2 norm based on the weight error vector, i.e.

2) Excess mean square error (EMSE):

When the step size is small, the cost function of the algorithm will approach a minimum value with the increase ofn.Because the value ofµ(n+1)r(n+1)is small, it is assumed to be close to 0.

Therefore, the steady-state error is related to the upper limit of step size and the eigenvalue of input matrix.Because at this time

Whenn →∞, the steady-state error of the learning curve is

Then

The steady-state MSD is

whereLis the filter length andNis the number of rows of the correlation matrix.

3 Simulation Results

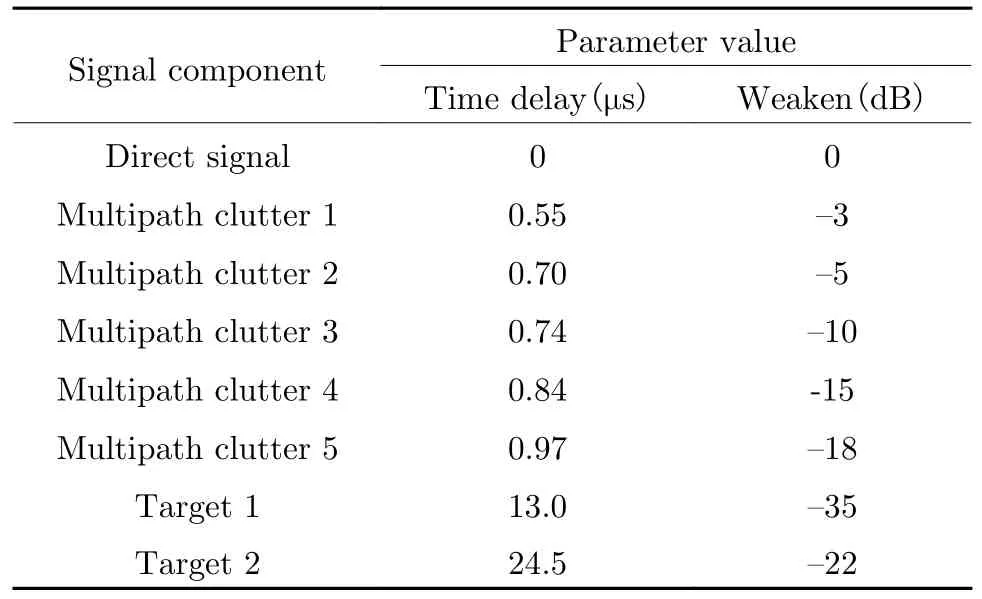

The following simulation analysis is carried out.It is assumed that the echo channel contains the target signal, multipath clutter and direct signal,and the reference channel contains the purified direct signal.The system parameters used in the simulation are shown in Tab.1.The simulation parameters of the echo channel are shown in Tab.2.

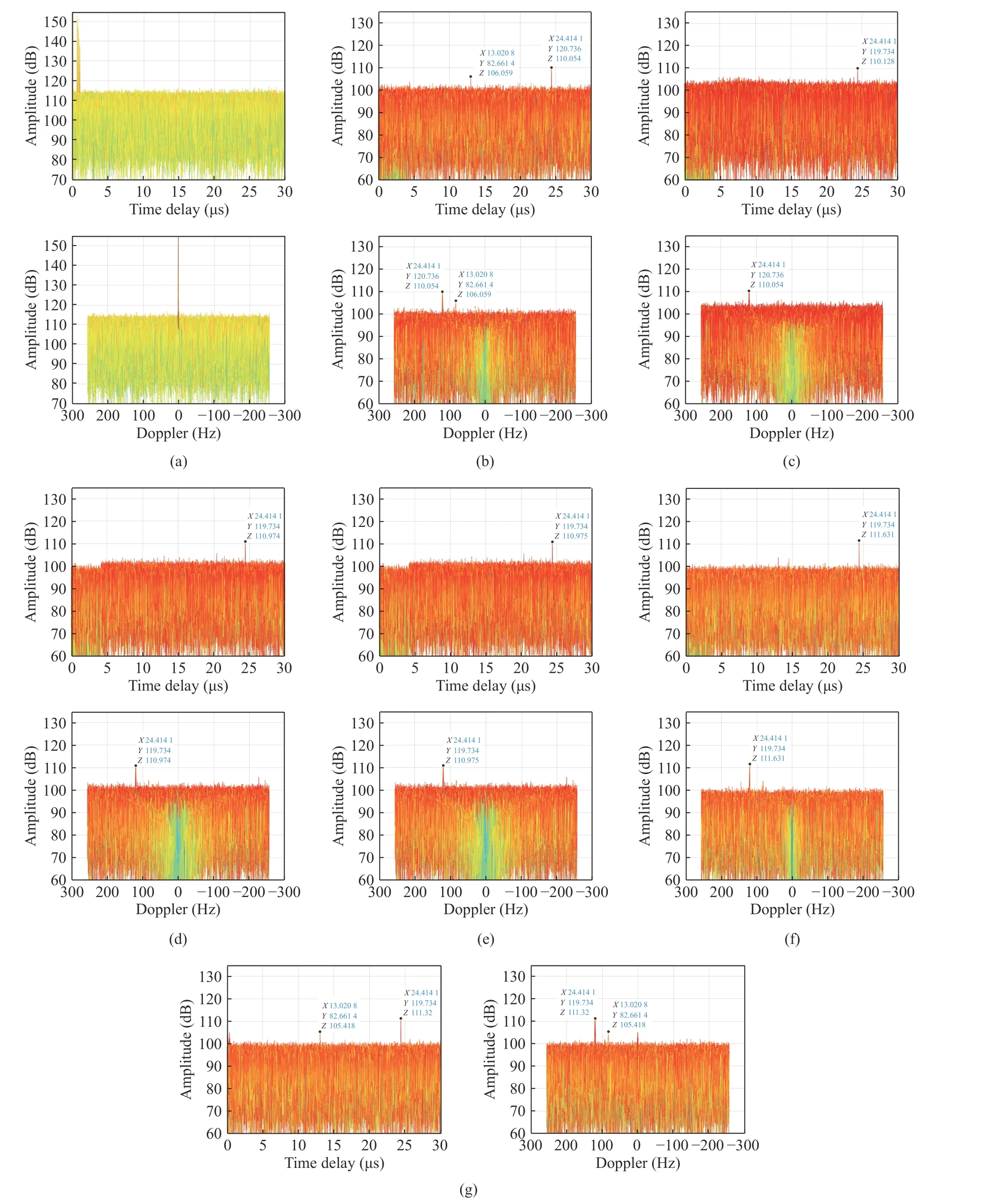

Fig.2 shows the time delay-Doppler diagram before clutter suppression, and compares the clutter suppression performance of the proposed algorithm, FBLMS algorithm,l0-LMS algo-rithm, NLMS algorithm, ECA-B algorithm and VSS-LMS algorithm [22].For adaptive filtering algorithms, when the initial step size is large, the step size is set to 5.5×10-3, and the time delaydoppler diagram filtered by several algorithms is shown in Fig.3.

Tab.1 The system parameters used in the simulation

Tab.2 The simulation parameters of the echo channel

Fig.2 Time delay-Doppler diagram without clutter suppression

Fig.3 Time delay-Doppler diagram after clutter suppression: (a) before processing; (b) the proposed algorithm; (c) FBLMS algorithm; (d) l0-LMS algorithm; (e) NLMS algorithm; (f) ECA-B algorithm; (g) VSS-LMS algorithm

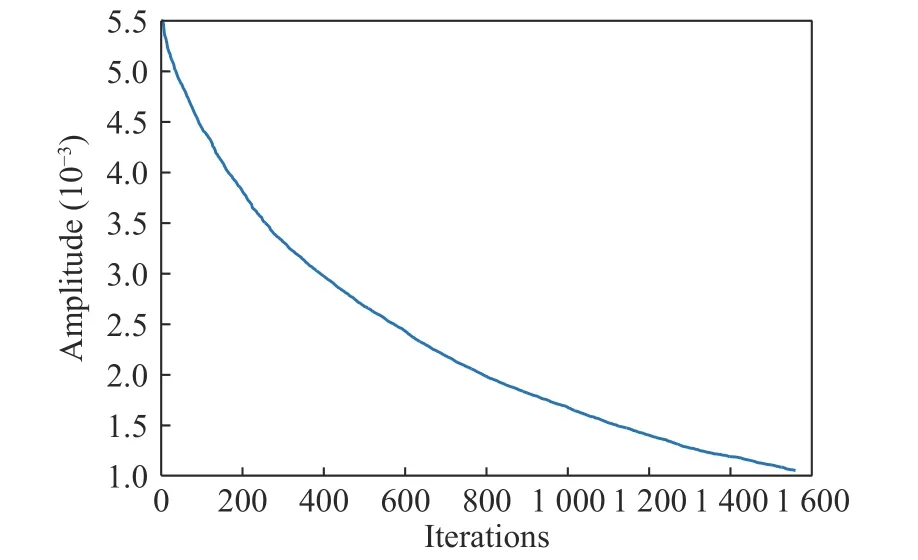

As can be seen from Fig.3, when the step size is large, several algorithms can detect strong target.At the same time, the proposed algorithm can also detect weak target, and ECA-B algorithm produced false peaks.The step size of VSS-LMS algorithm changes with the iterative process.In the initial stage, a larger step size is adopted to speed up the convergence, and in the later stage, a smaller step size is adopted to reduce the steady-state error.However, the stability of the algorithm is easily affected by input noise, and the step size range needs to be limited,so there is still a small amount of clutter energy residual after clutter suppression.The SNR of the proposed algorithm, FBLMS,l0-LMS, NLMS,ECA-B and VSS-LMS after clutter suppression are 9.07 dB, 5.32 dB, 7.76 dB, 7.74 dB, 9.02 dB,8.93 dB, respectively.The proposed algorithm has a good clutter suppression effect, and the step change curve at this time is shown in Fig.4.

Fig.4 Step size change curve

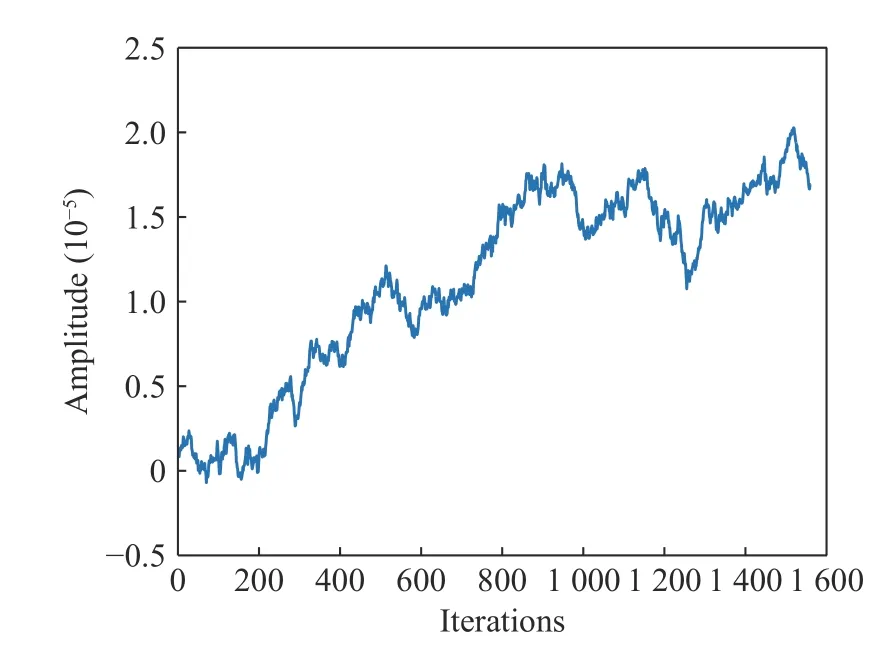

When the step size is small, the step size is set to 1×10-6, the clutter suppression performance of several algorithms is shown in Fig.5.FBLMS algorithm,l0-LMS algorithm and NLMS algorithm fail to detect the target, and the noise floor is only reduced by 3 dB compared with the time delay-doppler diagram without clutter suppression.However, the proposed algorithm has sparsity constraints, and the step size is adjusted adaptively in the filtering process, so the clutter suppression effect is better.The noise floor is reduced by 24 dB.The SNR is 10.37 dB after clutter suppression.

At this point, the adaptive change curve of step size as Fig.6.

Fig.6 Step size change curve

By comparing the weights obtained by iteration of the algorithms with the optimal weights,it can be seen from Fig.7 that the proposed algorithm is close to the true values of the weights,and the clutter suppression effect is better.

Fig.7 Comparison between the weights obtained by six algorithms and the optimal weights

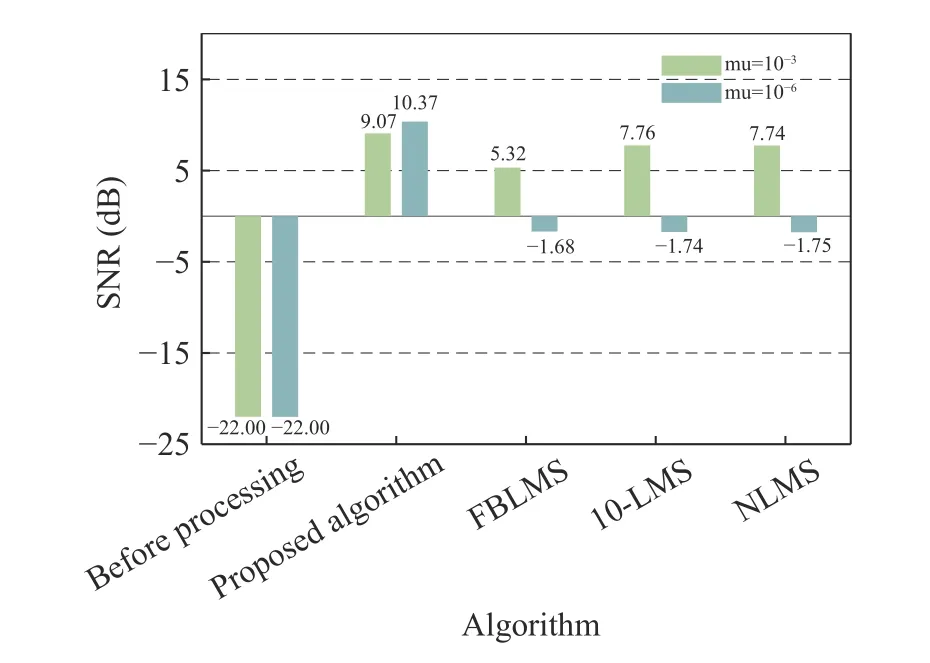

Fig.8 is the SNR comparison diagram of the several algorithms before and after clutter suppression.It can be seen that the algorithm in this paper has certain advantages, no matter whether it is a big step or a small step, and the SNR after clutter suppression is the highest.Especially under the condition of small step size, the proposed algorithm can still show good clutter suppression result when other algorithms fail.

Fig.8 Comparison of clutter suppression effects of algorithms with different step sizes

Comparing the computational complexity of the proposed algorithm with other classical algorithms, the proposed algorithm is implemented in frequency domain.It is the same as the highest power of the multiplication times required by FBLMS, and the computational complexity is low.When the filter order isMand the data length isN(M <N), the computational complexity of several algorithms is shown in Tab.3.

Tab.3 Comparison of computational complexity of algorithms

4 Conclusion

The sparse adaptive filtering algorithm proposed in this paper adds sparsity constraint to the cost function and incorporates the step size into the adaptive filtering process, improving the robustness of the traditional clutter suppression algorithm.

Through simulation analysis, compared with the traditional clutter suppression algorithm, the proposed algorithm can use the sparsity of the target in the distance dimension to obtain a purer echo signal, thus achieving better clutter suppression performance.At the same time,through adaptive iteration of the step size and other parameters, the algorithm can enhance the robustness of the input data without increasing the computational complexity.The ability of passive radar to detect LSS targets in strong clutter scenario is improved.

杂志排行

Journal of Beijing Institute of Technology的其它文章

- A Fusion Localization Method Based on Target Measurement Error Feature Complementarity and Its Application

- Robust Space-Time Adaptive Track-Before-Detect Algorithm Based on Persymmetry and Symmetric Spectrum

- Detection of UAV Target Based on Continuous Radon Transform and Matched Filtering Process for Passive Bistatic Radar

- An Efficient Radar Detection Method of Maneuvering Small Targets

- Equalization Reconstruction Algorithm Based on Reference Signal Frequency Domain Block Joint for DTMB-Based Passive Radar

- WSN Mobile Target Tracking Based on Improved Snake-Extended Kalman Filtering Algorithm