Towards resilient average consensus in multi-agent systems:a detection and compensation approach∗&#

2024-03-01ChongrongFANGWenzheZHENGZhiyuHEJianpingHEChengchengZHAOJingpeiWANG

Chongrong FANG ,Wenzhe ZHENG ,Zhiyu HE ,Jianping HE ,Chengcheng ZHAO ,Jingpei WANG

1Department of Automation, Shanghai Jiao Tong University, Shanghai 200240, China

2State Key Laboratory of Industrial Control Technology, Zhejiang University, Hangzhou 310027, China

3Technology and Engineering Center for Space Utilization, Chinese Academy of Sciences, Beijing 100094, China

Abstract: Consensus is one of the fundamental distributed control technologies for collaboration in multi-agent systems such as collaborative handling in intelligent manufacturing.In this paper,we study the problem of resilient average consensus for multi-agent systems with misbehaving nodes.To protect consensus value from being influenced by misbehaving nodes,we address this problem by detecting misbehaviors,mitigating the corresponding adverse impact,and achieving the resilient average consensus.General types of misbehaviors are considered,including attacks,accidental faults,and link failures.We characterize the adverse impact of misbehaving nodes in a distributed manner via two-hop communication information and develop a deterministic detection compensation based consensus(D-DCC) algorithm with a decaying fault-tolerant error bound.Considering scenarios wherein information sets are intermittently available due to link failures,a stochastic extension named stochastic detection compensation based consensus (S-DCC)algorithm is proposed.We prove that D-DCC and S-DCC allow nodes to asymptotically achieve resilient accurate average consensus and unbiased resilient average consensus in a statistical sense,respectively.Then,the Wasserstein distance is introduced to analyze the accuracy of S-DCC.Finally,extensive simulations are conducted to verify the effectiveness of the proposed algorithms.

Key words: Resilient consensus;Multi-agent systems;Malicious attacks;Detection;Compensation

1 Introduction

Industrial cyber-physical systems (ICPSs) are systems tightly integrating computing,network,and control technologies,and are broadly applied in multiple areas such as intelligent manufacturing.Cooperative tasks in ICPSs,such as collaborative handling in smart factories,could be accomplished by multi-agent systems through assigning well-designed subtasks to multiple nodes in a distributed manner.This is an important cooperation paradigm for ICPSs,especially in dynamic environments.Formation control is one of the key functions for multi-agent systems to accomplish such tasks,where consensus plays a key role in collaboration (Wang et al.,2014).Consensus aims to achieve global agreements through exchanges of local information among multiple agents by predefined consensus protocols.Recently,the security of multi-agent systems has attracted much attention,because these systems usually run in an open environment and are vulnerable to attack or failure(He et al.,2013;Ma et al.,2022).Even minor failures or malicious attacks on consensus will have an unexpected impact on formation control,thereby affecting the execution of cooperative tasks.Hence,resilient consensus algorithms have been widely investigated to ensure the security of distributed multi-agent systems under faults or malicious attacks.

Against this backdrop,there are a series of studies focusing on resilient consensus (LeBlanc et al.,2013).On one hand,a representative kind of research is studies developed based on meansubsequence reduced (MSR) algorithms (Kieckhafer and Azadmanesh,1994).The main idea of these studies is that each agent ignores the extreme states collected from neighbors,while updating its own state with the remaining information.Differently,weighted-mean-subsequence reduced (W-MSR) algorithms(LeBlanc et al.,2013)are developed which do not remove all extreme states as those in the MSR algorithm,but rather adopt a system wherein each agent discards only the extreme states that are strictly larger or smaller than their own states.In addition,Dibaji et al.(2018) proposed a quantized version of the W-MSR algorithm to deal with asynchronous and time-varying time delays.While the abovementioned MSR-based algorithms enable the consensus to be achieved within the range or convex hull of the initial states of normal agents,the accurate average consensus is difficult to guarantee.Additionally,the resilient consensus control strategies are investigated.These involve the development of resilient controllers for ensuring toleration in the event of attacked data or agents,thereby guaranteeing the survivability of the multi-agent systems under misbehaviors.For example,Ge et al.(2023)developed a resilient and safe platooning control strategy for connected automated vehicles under intermittent denial-of-service (DoS) attacks based on a heterogeneous and uncertain vehicle longitudinal dynamic model.Xie et al.(2022) proposed a proportional-integral-observer-based controller to achieve the desired platooning performance under replay attacks.Such resilient studies are also applied to specific practical scenarios,e.g.,the dispatch problem in a smart grid (Wen et al.,2021).Yang et al.(2021) tackled the economic dispatch problem of a smart grid under DoS attacks by leveraging a novel distributed event-triggered scheme and an improved multi-agent consensus protocol.

On the other hand,detection-isolation-based methods are also adopted to achieve resilient consensus.The main idea of such studies is to detect anomalous agents while the predefined detection criteria are breached and then to isolate the identified abnormal agents in case they continue to affect the execution of collaboration tasks.Specifically,observer-based detection methods are effective for the detection of abnormal behaviors in the system.Pasqualetti et al.(2012)proposed an observer-based method for synchronous consensus in directed networks,where networks need to be highly connected and agents require global knowledge.Zhao et al.(2018) proposed a mobile-detector-based method,which exploits mobile agents as observers.This approach extends the number of tolerable attacks that are decided by network connectivity.Observer-based techniques are also used in fault detection for interconnected second-order systems (Shames et al.,2011).Gentz et al.(2016) investigated the detection and mitigation in randomized gossiping algorithms based on the observation of temporal or spatial differences in systems of data injection attacks.Multiple communication-information-based approaches,e.g.,two-hop information (He et al.,2013;Yuan and Ishii,2021;Ramos et al.,2022),also contribute to detection.Specifically,Ramos et al.(2022) proposed methods to achieve consensus in multiple distinct subsets of agents,where each normal agent crosschecks its state among different subsets.There will be malicious agents among the subsets with the same values.Based on majority voting,Yuan and Ishii (2021) designed a detection scheme with two-hop information for which the constraint on the graph structure is less stringent than that for MSR algorithms.However,the above-mentioned detection-isolation-based algorithms might mistakenly identify faulty agents as malicious ones when faults occasionally appear,which results in loss of information and system capacity.Additionally,those detection-isolation-based methods cannot remove adverse impacts introduced before isolation by malicious agents.The research into resilient average consensus (Hadjicostis et al.,2012) considers unreliable heterogeneous communication links,but does not consider misbehaving agents including malicious and faulty ones.

Therefore,in this paper,we are motivated to design resilient average consensus algorithms for multiagent systems to defend against the adverse impact on the consensus that is brought by misbehaving agents,including malicious and faulty ones.To achieve better performance of resilient average consensus,we adopt the idea of detecting and compensating for the adverse impact of misbehaving agents with two-hop communication information and providing tolerance for faulty agents.In this work,we propose a method to detect misbehaving agents and estimate the corresponding adverse impact,followed by a compensation scheme to mitigate or eliminate the adverse impact.Specifically,the main contributions are summarized as follows:

1.We investigate the problem of resilient average consensus under misbehaving agents.Based on two-hop communication information,we design detection–isolation–mitigation-based methods to detect and isolate misbehaving agents and compensate for the detected errors,thereby achieving resilient accurate average consensus and unbiased resilient average consensus in a statistical sense for deterministic and stochastic scenarios,respectively.

2.We design a deterministic detection compensation based consensus (D-DCC) algorithm for normal agents using two-hop communication information.We prove that the D-DCC algorithm can detect and compensate for the impact of misbehaving agents on consensus,thereby achieving resilient average consensus exactly.

3.We further consider the scenario wherein the communication links could fail due to accidents.In this case,we propose a stochastic detection compensation based consensus (S-DCC) algorithm correspondingly.We also prove that S-DCC can achieve unbiased resilient average consensus in a statistical sense.Moreover,we analyze the accuracy of S-DCC by the Wasserstein distance.We present extensive evaluations to demonstrate the effectiveness of the proposed methods.

Compared with our conference version (Zheng et al.,2021),in this work,we have enriched design details and performance analysis of the resilient consensus algorithm for the deterministic scenario (i.e.,D-DCC algorithm) with illustrative examples and discussions.Additionally,for the stochastic scenario,we have relaxed the requirement that the expectation of faults should be zero and provided proof of the resilient consensus performance of the S-DCC algorithm.We have also theoretically analyzed the performance of S-DCC by the Wasserstein distance to show the accuracy of the S-DCC algorithm.Further,we have presented in-depth discussions on assumption relaxation and offered more numerical results in terms of comparisons with related studies.

Notations: R denotes the set of real numbers,and 1 denotes the vector of all ones with proper dimension.Given a matrixM,MTis its transpose matrix.Given a setV,|V|is the number of elements in the set andV/{i}is the set removingifromV.We let E and D denote expectation and variance operations,respectively.

2 Problem statement

2.1 Network description

In this paper,we consider a multi-agent system,and its network is modeled by an undirected graphG=(V,E),whereV={1,2,...,N}andE ⊆V×Vare the vertex set and edge set,respectively.The edge (i,j)∈Emeans that agentsiandjcan communicate mutually.The termNi={j|(i,j)∈E}represents the set of neighbors for agenti.We denote byAG=[aij]N×NandL=DG-AGthe adjacency matrix and Laplacian matrix,respectively,where we have thatDG=diag(d1,d2,...,dN) anddi=.Letdm=max{d1,d2,...,dN}.Without loss of generality,we denote byVs={1,2,...,n}andVm={n+1,n+2,...,N}the set of normal agents and the set of misbehaving agents,respectively.Note that we haveVs∩Vm=∅andVs∪Vm=V.

2.2 Consensus protocol

The state vector of the system at timekis given byx(k)=[x1(k),x2(k),...,xN(k)]T,wherexi(k)∈R is the state of agenti.The consensus protocol aims to drive all items in the state vector to the same value.Specifically,we say that the consensus is achieved when limk→∞xi(k)=c,∀i ∈V,where the valuecis called the consensus value.Particularly,the average consensus can be reached if it holds that.The basic discrete-time linear average consensus is represented as

where the weight matrixWis doubly stochastic.For each weightwijinW,we havewij0 when agentsiandjare neighboring.By Eq.(1),the system will achieve the average consensus exponentially if the undirected graphGis connected.Typical weight matrices,which can guarantee asymptotic convergence,include Metropolis weights(Xiao et al.,2005) and Perron weights,i.e.,W=I-γL,where 0<γ<1/dm.Under both Metropolis weights and Perron weights,the weights of agenti(namely,wij,∀j ∈Ni) are available to neighbors if the number of neighbors|Ni|andNare known by neighbors.

2.3 Information set and misbehavior model

Information set: As indicated in Eq.(1),agents will update their states in a distributed manner based on their own state and those of their adjacent agents.Such two-hop information (i.e.,the state of an agent and those of the agent’s neighbors) assists in effective detection of misbehaving agents(He et al.,2013;Zhao et al.,2018;Yuan and Ishii,2021).Specifically,the information set of agentiat timek,Ψi(k),is given by

in which the term(k-1) represents the state of agentjat timek-1,which will be sent by agentito its neighbors at timek.The termεi(k) indicates the compensation value injected by normal agents or the adverse impact brought by misbehaving agents,which will be discussed in detail later.The binary attack detection indicatorπi(k)=1 orπi(k)=0 represents “attack” or “no attack,” respectively,where the former indicates that the agentihas detected misbehaving agents among its neighbors.Note that for normal agents,εi(k-1)is allowed to be non-zero whenπi(k)=1.This implies that the compensation will be added when a misbehavior is detected.Every time an information transmission is made,all agents transmit their own information setΨi(k) (i ∈N) to neighbors.Therefore,each agent can easily obtain the two-hop information.

Misbehavior model: As for the misbehavior model,we consider that the misbehaving agents can be either faulty or malicious.Faulty agents may cause adverse impacts on the system because of accidental faults,e.g.,miscalculations.Malicious agents aim to disrupt the network functions by manipulating the information set,but such an agent can send only the same information to all of its neighbors at each time slot.This is common,especially when the network is implemented by broadcast communication.In the following,we make four assumptions regarding misbehaviors and misbehaving agents:

Assumption 1No two misbehaving agents are adjacent.

Assumption 2Malicious agents can modify the information set by changing their own state values as well as those of neighbors,but will not add any entries.

Assumption 3A normal agent will no longer communicate with the agent(s) that is (are) to be isolated.

Assumption 4The subgraphGs=(Vs,Es) is connected,whereEs⊆Edenotes the edge set between the agents inVs.

Remark 1Assumption 1 must hold if there is only one misbehaving agent,and it holds with high probability when misbehaving agents are very sparsely distributed in the network(He et al.,2013).Additionally,it is common to make such an assumption on network connectivity.Note that MSR-based methods assume that the network is(2F+1)-robust for theF-local malicious model and (F+1,F+1)-robust for theF-total malicious model (Kieckhafer and Azadmanesh,1994;LeBlanc et al.,2013).These assumptions present the requirement for network connectivity among normal agents.Assumption 1 indicates the requirement for connectivity among misbehaving agents.Nevertheless,we make further discussion on relaxing Assumption 1 in Section 5,with detection and compensation solutions for typical cases.

Remark 2Assumption 2 specifies the attacker capabilities.Malicious agents are usually reluctant to add any entries since they will be detected easily if normal agents cross-check the data in the twohop information set.By Assumption 3,misbehaving agents will be isolated effectively by all normal agents,which can be achieved especially when there are mobile agents in the network(Zhao et al.,2018).As for the update of the weight matrix after isolation,when an agent decides to cut offcommunication with another agent,it will adjust its weight by transferring the weight of the isolated agent to itself.As for the misbehaving agent,it needs to do a similar adjustment because it will no longer receive the corresponding information set.In this way,the weight matrixWwill still be doubly stochastic.Any method of recalculation of the degrees to ensure thatW{i}remains a doubly stochastic matrix is feasible.Note that the abovementioned strategy requires only local adjustment of the weight matrix and the information set would constitute a source of referral for neighbors for ascertaining the recalculation of the degrees.Assumption 4 is common in resilient-consensus-related literature and can always be satisfied if the number of malicious agents is below the network robustness threshold (Kieckhafer and Azadmanesh,1994).

Deterministic scenario: Consider that misbehaving agents will cause adverse impacts while normal agents will add compensation values as a confrontation.The state update rule in Eq.(1) can be reformulated as

whereε(k)=[ε1(k),ε2(k),...,εN(k)]Tis the input vector.The termεi(k) is the error input for misbehaving agenti ∈Vm,whileεj(k)is the compensation input for normal agentj ∈Vs.

Stochastic scenario: Given the fact that the possibility for link failure during communication is considered in the present study,we adopt the understanding of link failures as the phenomenon with the potential for preventing the information set from being received at each needed time slot.pdenotes the probability of connection between agents;i.e.,link failure occurs with probability 1-pbetween each pair of agents independently.Under scenarios where communication link failures may occur,the matrixWwill be time-dependent,i.e.,W(k).Moreover,due to link failures,some information sets are not available to normal agents,which will lead to undetected errors.Without loss of generality,the errors caused by misbehaving agents could be characterized as obeying an unknown distribution (Marano et al.,2009).Misbehaving agentiaffects the system(or the error equals zero)with probabilityθi ∈[0,1].We denote byXi(k)the random indicator for misbehavior,i.e.,Xi(k)∼B(1,θi),whereBis the Bernoulli distribution.Additionally,when misbehaving agents affect the system,the error inputsYi(k) (i ∈Vm)are added.We reasonably assume thatYi(k)follows a specific distribution with meanμiand variance.We consider that the probability ofYi(k)=0 is 0 since misbehaving agents aim to affect the system,andXi(k)andYi(k)are mutually independent.Hence,we haveεi(k)=Xi(k)Yi(k) for misbehaving agents inVm.Then,it holds that

Nevertheless,εi(k)could be zero and it can obey an arbitrary distribution because we do not pose any restriction on the distributions ofXi(k)andYi(k),and do not need to know their expectation and variance,which poses a situation different from the one characterizing the faults.The difference between malicious agents and faulty agents is that malicious agents will attack the system continuously,while faulty agents will cause only accidental disturbances in a limited period.

2.4 Problem formulation

In this paper,we consider a resilient average consensus problem in a multi-agent system with misbehaving nodes,which include faulty and malicious ones.Each agent has an initial state that can be expressed asxi(0),i ∈V,and the system updates the states according to Eq.(2).The goal of this work is to design a detection and compensation method to realize resilient average consensus in a distributed manner.Besides,given that the communication in distributed multi-agent systems could be unreliable due to link failures (especially in the wireless communication scenario),the consensus process will be influenced if the information set is intermittently unavailable.Therefore,we further consider link failures and aim to solve the following two issues:

1.Resilient accurate average consensus: Considering deterministic scenarios where the communication links are reliable,we aim to develop a misbehavior-resilient algorithm to achieve resilient accurate average consensus among the agents in the set after isolationVrfor the system under misbehaviors,namely

where the termVris a subset ofVcontaining the agents that are not isolated.

2.Resilient unbiased average consensus in a statistical sense: Considering stochastic scenarios where the communication links between agents are interrupted randomly,we aim to improve our detection and compensation algorithm to realize resilient unbiased average consensus in a statistical sense,i.e.,

3 Deterministic detection compensation based consensus

In this section,we propose a detection algorithm to detect misbehaving agents and then compensate for the negative impact caused by these misbehaviors.The underlying design idea is to extract abnormal behaviors from the redundant information in the two-hop information set,so as to detect and compensate for the adverse impact.Using the abovementioned detection and compensation methods,we present the D-DCC algorithm.The design details and performance analysis are provided in the following.

3.1 Detection strategies of D-DCC

The first step for each normal agent is to determine whether there are misbehaving agents in the neighborhood and to estimate the amount of error injected by them.Based on the two-hop information sets,we propose the following two detection strategies.Without loss of generality,we conduct an analysis in a subsystem composed of misbehaving agentiand its neighborsj ∈Niin the following.

Detection strategy I: The normal agentj ∈Niwill detect whether misbehaving agentimodifies the states of agentjin the information set,which amounts to checking whether(k)=xj(k) holds.Note that if a malicious agentiremoves the ID and state of agentj,it can be considered that the corresponding state is changed to zero.

Detection strategy II: The normal agentjwill perform the detection by checking whether the update rule in Eq.(1) is followed for each neighboring agenti,i ∈Nj.Specifically,it checks whetherholds.

Concerning the misbehaviors mentioned in Section 2.3,these could be detected with the use of detection strategy I or II or both (see Lemma 2 in Section 3.3).For misbehaving agenti,it holds that

Note that each neighbor of misbehaving agentiwill detect agentiwith the same error by detection strategy II,since misbehaving agents will send the same information to all neighbors.Hence,(k) is used,instead of(k).

Remark 3For the above two detection strategies,all information needed for agentjto detect agentican be obtained from its own statexj(k)and the available two-hop information setsΨi(k+1)andΨi(k) from agenti.Note that for all normal neighbors of agenti,both detection strategies I and II will be executed.In addition,by detection strategy I,each neighbor agentjcan check only its own state(k)in the information set.

3.2 Compensation schemes of D-DCC

To achieve resilient average consensus,the following lemma is given which provides a sufficient condition for consensus on dynamical systems:

Lemma 1(He et al.,2019) Considering system(2),the average consensus can be exponentially achieved if the input vectors are bounded;namely,we have||ε(k)||∞≤αρkfor certain parametersα>0 andρ ∈[0,1) and the sum of the input vectors satisfies

Obviously,the extra data injected by misbehaving agents will make Eq.(7) unsatisfied;i.e.,the average consensus is affected.From Lemma 1,to achieve resilient accurate average consensus,we need to design and add compensation inputsεj(k) for all normal agentsj ∈Vsto compensate for the impact of misbehaviors.In this way,Eq.(7)can still be satisfied under misbehaviors.Since the compensation cannot be completed at once,we introduce an error compensatorηjfor each normal agentjto store the amount of error that needs to be compensated for.At each time slot,every normal agentjwill determine the compensation inputεj(k)from the compensatorηj.Actually,the errors that need to be compensated for come from three aspects: the errors identified by detection strategies I and II,and the error introduced by isolation if the misbehaving agent injects malicious data beyond the tolerance for consensus.To cope with different situations,we propose three compensation schemes as follows:

1.Compensation scheme I: When the misbehavior of agentiin modifying(k) is captured by detection strategy I,compensation scheme I will be employed by the normal agentj ∈Nito compensate for the impact on consensus.The corresponding error to be compensated for is determined by

2.Compensation scheme II: When the misbehaving agentidoes not follow the update rule in Eq.(1),the normal neighboring agentj ∈Nican detect it by detection strategy II.Correspondingly,the error that needs to be compensated for is(k).Since all|Ni|adjacent agents of agentiwill detect the same error,each neighboring agentj ∈Niwill compensate for the detected error averagely.Therefore,the compensation value of each normal agentj ∈Niis given by

Isolation scheme: Considering that misbehaving agents cause finite errors such as accidental computation errors and actuator errors,compensation schemes I and II can accurately compensate for them.However,the malicious agenticould be too aggressive such that the adverse impact caused by it is severe enough to breach the conditions such as||ε(k)||∞≤αρk.As a result,the consensus process will be seriously affected and the convergence may not be achieved according to Lemma 1.In this case,the misbehaving agentishould be isolated(i.e.,the normal neighboring agentsj ∈Niwill cut offthe communication with agenti) to avoid a future adverse impact from agenti.From Lemma 1,we design a distributed exponentially decaying error bound (i.e.,αj,j ∈Vs) as the criterion for isolation.Letα=Nmaxi∈V αiandρ=maxi∈V ρi.Then,we have||ε(k)||∞≤αρk.Specifically,the normal agentj ∈Nican estimate the error of its neighboriby Eq.(6).If the obtained error is within the bound,i.e.,if,then agentjcan compensate for the error according to compensation schemes I and II.Otherwise,agentiwill be isolated and the following compensation scheme III will be employed to remedy the historical bad impact from the misbehaving agenti.In this way,an accurate average consensus with the remaining agents(after isolation)can be guaranteed.

3.Compensation scheme III: The main idea of compensation scheme III is to ensure that the summation of the remaining agents’states after isolation is the same as that of the initial states of the remaining agents.In other words,the historical adverse impact on consensus that is attributable to misbehaving agentishould be removed.Therefore,each normal agentj ∈Niwill equally compensate for the historical adverse impact.The specific compensation value for isolation is designed as

Remark 4The detailed D-DCC algorithm steps are summarized in the supplementary materials.Note that both normal and misbehaving agents have the input termεi(k)(i.e.,the error or compensation input) in their information sets.If malicious agents have full knowledge of detection and compensation methods,then they can easily masquerade as normal ones.To avoid this issue,the attack detection indicatorπi(k) in the information setΨi(k)could help.A normal agent is allowed to add nonzero compensation input only when it has detected misbehaviors in the neighborhood.Then,we adopt a steady compensation sequence that restricts the changes of compensation,i.e.,|εi(k)-εi(k-1)|≤δ,which is reasonable in practice.We also reasonably assume that malicious agents cannot change the attack detection indicator or have no knowledge ofδ.With the two abovementioned methods,it will be easy to distinguish malicious agents with errors and normal agents with compensation input.

3.3 Performance analysis of D-DCC

The following lemma is given first which demonstrates the effectiveness of D-DCC:

Lemma 2If Assumptions 1–4 hold,then all misbehaviors mentioned in Section 2.3 will be detected by detection strategies I and II,and some malicious agents will be isolated.

The proof is omitted.Please see Zheng et al.(2021).For ease of understanding,we provide an example in the supplementary materials to illustrate the effectiveness of the proposed detection method.

Next,the consensus performance of D-DCC is analyzed in Theorem 1.

Theorem 1If Assumptions 1–4 hold,then DDCC achieves resilient average consensus among the agents in the set after isolationVr,i.e.,Eq.(3)holds,where

The proof is omitted.See Zheng et al.(2021).

Remark 5The error bound can be used to a certain extent to distinguish between ever-present attacks and accidental faults and to provide tolerance for faults.The parametersαiandρiare designed to cover the fault,which depends mainly on the prior information of faults in the practical system.Note that a largerαiρican increase fault tolerance but slow down convergence.Though the fault tolerance bound may mistakenly classify malicious agents as faulty agents if malicious agents inject false data within the bound,the attack is also restricted to zero as time goes to infinity,which hardly harms the consensus process.

4 Stochastic detection compensation based consensus

4.1 Algorithm design

In this subsection,we further investigate the resilient average consensus problem against misbehaviors while considering the possible link failures among agents.We assume that the link failure occurs between any two agents with the same probabilitypat each time slot(see Section 2.3).This stochasticity property brings extra challenges compared with the problems in the deterministic scenario (see Section 3);i.e.,the corresponding information set of neighbors is not available when a link failure occurs.As a result,not only may state updating be affected,but also some misbehaviors among relevant agents will not be detected.First,to handle the effect on state updating,agentiwill adjust its update weight by transferring the weight of agentjto itself at timekif link failures between agentsiandjoccur,i.e.,w(k)ij=0,w(k)ii=wii+wij.Similarly,agentjwill perform the corresponding weight adjustment.In this way,W(k) will be doubly stochastic and state updating would still function normally.Second,if link failures occur between misbehaving agentiand normal agentj,agentjis not able to detect the errors of agenti.Such a situation would cause undetected errors,necessitating further compensation.To ensure the resilience performance against misbehaving agents when the information set is randomly unavailable,we propose an S-DCC algorithm to achieve unbiased resilient average consensus in a statistical sense.The detailed S-DCC algorithm is given in the supplementary materials.Specifically,we further propose compensation scheme IV based on the estimation of the average detected errors:

Compensation scheme IV:Considering the compensation for the adverse impact of undiscovered misbehaviors due to unreliable communication links,the compensation value is designed as

whereis the last detection time before agentiis first detected by agentjas a misbehaving agent,which is treated as the last time before a misbehavior occurs.Additionally,we have

wheremj(k) is the number of times that agentjdetects agentiafter time.The insight behind compensation scheme IV is that the average value of detected errors could characterize the average impact of misbehaving agents over a period of time.Specifically,the information set with probabilitypis available at each occasion of information transmission,with which the normal agentjcan perform the detection and compensation for potential errors.To estimate the average impact of misbehaving agents,agentjwill store the detected error(k) in the set(k)for agenti,and then use the average detected error to compensate for undetected errors.

4.2 Performance analysis

Before analysis of the S-DCC algorithm,we define several notations.Summing up the compensation input of all neighbors of misbehaving agenti,the compensation ofεi(k) is given by,where(k)is the average of the errors of misbehaving agentidetected by agentj ∈Ni.Note that there could be faulty agents non-isolated when they appear accidentally and their errors are within the error bounds.Therefore,we assume that the misbehaviors of the faulty agentioccur in a period from,which is reasonable.We denote bythe isolation time of agenti,and subsetsVfandVMare the sets of faulty and malicious agents,respectively.The following theorem is provided to demonstrate the performance of S-DCC:

Theorem 2If Assumptions 1–4 are satisfied,then S-DCC can achieve unbiased resilient average consensus in a statistical sense among the agents in the set after isolationVr;namely,∀j ∈Vr,we have

In addition,the consensus value is bounded,i.e.,

ProofFirst,we illustrate that all misbehaving agents will be detected.For each malicious agenti,the system is affected with a probability ofθi,where 0<θi ≤1.For each normal agentj ∈Ni,it detects the misbehavior of agentiwith probabilityp,0

By taking the limit on both sides of Eq.(15),we have limk→∞P(k)=limk→∞(1-(1-pθi)k)=1.Hence,all misbehaving agents will be detected as time goes to infinity.Similarly,all malicious agents will be isolated with a probability of 1 because the boundαρk=0 ask →∞.

Second,we prove that average consensus is achieved.SinceGsis connected and the remaining misbehaving agents must have normal neighbors,Grwill be connected,whereGr=(Vs,Es)andEr(Er⊆E) denotes the edge set between the agents inVr.Due to the detection and isolation,we have||ε(k)||∞ ≤ αρk.WhenW(k) is timedependent,it holds that E[w(k)ij]=pwijifi≠j,andHence,E[W(k)]is doubly stochastic(since it is the expected value of matrices,each matrix satisfies this property).Then,we have

Next,we will prove that

First,we have

For simplicity,we perform analysis on a subsystem composed of the misbehaving agentiand its neighbors inNihere.Recall that we setεi(l)=Xi(l)Yi(l) in Section 2.3,whereXi(l) andYi(l) are independent.

Case 1: Considering a malicious agenti,the expectation of the sum of its error within timekis

Without loss of generality,we consider that all neighbor agents inNidetect the misbehavior of agentiat the same time.The expectation ofsatisfies

The expectation of the sum of compensation values from compensation schemes I,II,and IV is

Hence,we have

Then,we have

With the two abovementioned cases,we have Eq.(17)for the general setVr.Hence,Eq.(4) holds and the unbiased resilient average consensus in a statistical sense among the agents inVris achieved.

According to the proof of Theorem 1(see Zheng et al.(2021)),ε(k)satisfies the condition||ε(k)||∞≤αρk.Then,for misbehaving agents,we have

Hence,inequality(14)is proved.

Remark 6Theorem 2 ensures the unbiased resilient average consensus in a statistical sense.Meanwhile,the expectation of undetected errors is the same as the mean of detected errors.For faulty agents,the compensation periodhas the same expectation as that of errors,i.e.,Hence,misbehaviors can be unbiasedly compensated for in a statistical sense.For normal agents,the larger attack probabilityθiof neighboring misbehaving agents and detection probabilitypwill reduce the number of expected steps for detection.On one hand,a larger attack probability will improve the attack capability of malicious agents.On the other hand,the detection probability based on the reliable link will be close to 1.Hence,the detection performance will be improved.Furthermore,it is not actually necessary for malicious agents to attack with a constant probability and a certain distribution.The detection method will be effective as long as the attack probability is larger than 0,and the errors may follow a certain attack method.Hence,to simplify the statement,we assume a constant attack probability and present the attack errors by a time-invariant probabilistic model.

Next,we analyze the accuracy of the meanbased compensation scheme IV,i.e.,the distance between the mean-based compensation and actual errors.The actual errors may consist of multiple uncertainties.LetFYi(k)(x) be the cumulative distribution function (CDF) ofYi(k).We adopt the Gaussian mixture model (GMM) to represent the error variableYi(k),because any distribution can be generally modeled by GMM with arbitrary precision.GMM is defined as a convex combination ofNlGaussian distributions with expectationsμland variancesσlfor the integer 1≤l ≤Nl,namely,

whereΦ(·)is the CDF of the standard normal distribution.Sinceεi(k)=Xi(k)Yi(k),the CDF ofεi(k)is

Without loss of generality,we consider that all the detection numbersmj(k) (j ∈Ni) are the same.When the detection number is large enough,the following holds according to the central limit theorem(Grimmett and Stirzaker,2020):

whereN(·,·) is the Gaussian distribution andMi=minj∈Ni mj().The CDF ofεiis given by

The close proximity between the statistical distribution of the error and that of the compensation value will guarantee not only the close final consensus value but also the stationarity of the consensus process.The characteristic of proximity of two probability distributions can be described by the Wasserstein distance (Vallender,1974).The Wasserstein distanceR(P,Q) between the two distributionsPandQis defined as follows:

whered(·,·) is the function of the metric space and the mathematical operation inf is taken over all possible pairs of random variablesξandηwith distributionsPandQ,respectively.In the case of onedimensional space with the Euclidean metric,the Wasserstein distance is calculated by

whereF(x) andQ(x) are the CDFs ofPandQ,respectively (Vallender,1974).Hence,we have the Wasserstein distance betweenεi(k)and(k)as

We can use the Wasserstein distance to show the expectation of the absolute error between the meanbased compensation and actual errors.The following theorem is provided to illustrate the bound ofR(εi(k),(k)):

Theorem 3WhenYi(k) is modeled by GMM,we have

ProofSee the supplementary materials.

5 Discussion on assumption relaxation

In this paper,Assumption 1 makes a strong assumption about the connection relationship between agents;i.e.,any two misbehaving agents are not adjacent to each other.This limits the interconnection between misbehaving agents.Although Assumption 1 is more likely to hold when misbehaving agents are sparsely distributed compared to normal agents,it still cannot be ruled out that some misbehaving agents could be adjacent to each other.In this regard,we discuss the relaxation of Assumption 1 in this section by analyzing a typical structure wherein two misbehaving agents are adjacent,followed by the corresponding detection and compensation strategies.Note that we do not fully solve the problem of misbehaving agent adjacency,which is worthy of further research.

A common assumption in related detection methods is that each pair of neighboring misbehaving agents must have at least one common normal neighbor (Zhao et al.,2018).Consider the situation in which two misbehaving agents have common normal neighbor(s).A representative topology is shown in Fig.1.When two malicious agents (agents 2 and 3 in Fig.1) are neighbors,they do not need to use detection strategies for each other.However,normal agent 1 may not be able to detect whether agent 2 or 3 is abnormal according to the previously proposed detection strategy.This is because as long as agent 2 forges the information of agent 3 according to the information set so that it satisfies the update rule in the eyes of agent 1,it can prevent agent 1 from identifying agent 2 as a misbehaving agent according to detection strategy II.Agent 3 can perform similar operations to prevent agent 1 from detecting it through detection strategy II.In this case,previous detection strategies may not work well.In other words,we need a new detection strategy to deal with it,which is given as follows.

Detection strategy III:Consider a normal agentjand two misbehaving agentsiandh,where the three agents are mutually adjacent to each other.To prevent agentjfrom detecting malicious agentsiandh,agentsiandhwill collaborate to bypass detection strategy II by carefully modifying the information set.Owing to the fact that both malicious agentsiandhwant to bypass detection strategy II in agentj,the information set of agentireceived by agentjmay be different from the part about agentiin the information set that is sent by agenthto agentj.This renders the possibility of detection of agentsiandhby agentj.In essence,agentjdetects agentiusing the common neighboring agenth.Specifically,at timek,the normal agentjsaves the state values of all neighbor agents(xh(k),h ∈Nj).At timek+1,the normal agentjchecks whether the states of each neighboring agenti’s neighbors in the information set are correct,i.e.,whetherxh(k)=x(i)h(k) holds,wherei ∈Nj,h ∈Nj,h ∈Ni.In this way,the detection can be achieved.

Compensation scheme V:After the detection,it is necessary to consider the means to compensate for the error.If the common neighborhof agentsiandjis normal,then agenthwill detect misbehaving agentiat the same time,and will compensate for misbehaviors according to compensation scheme I.Hence,agentidoes not need to add compensation.If agenthhas been marked as a misbehaving agent,it is necessary to add compensationfor the error of agenti.If agentsiandhhave multiple common normal neighbors(i.e.,|Ni ∩Nh ∩Vs|)and all normal neighbors can detect and compensate for misbehaviors,then the compensation value of each neighbor will be given as follows:

Since the main idea of the compensation is the same as that of compensation scheme I,the theoretical proof is omitted.

6 Simulation results

In this section,a series of simulations are conducted to illustrate the effectiveness of the proposed D-DCC and S-DCC algorithms.We consider a multiagent system withN=10 nodes,where its network is described by an Erdös–Rényi random graph.Each edge is generated with a probability of 0.7.The system updates states by Eq.(2)with the weight matrixWdesigned by Perron weights.All agents’ initial states are randomly selected from the interval[0,2].We set two misbehaving agents in the system,which are not adjacent.Specifically,agent 1 is malicious aiming to affect the consensus process spitefully and agent 5 is a faulty node.Here,the parameters are set asρi=0.9,αi=5,∀i ∈V.

6.1 Resilient consensus under D-DCC

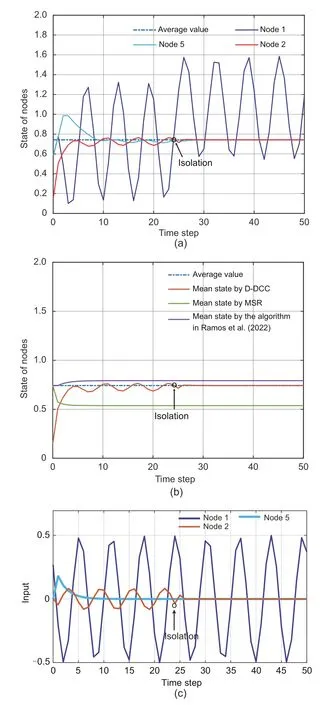

In this subsection,for malicious agent 1 and faulty agent 5,the adverse impacts injected are given byε1(k)=0.5 coskandε5(k)=0.5×0.6k,respectively.Note that these misbehaviors start at time 0.Then,D-DCC is deployed and the state evolution and extra input of the system are shown in Fig.2.From Fig.2a,it can be seen that accurate average consensus is achieved with all agents except agent 1.This is because the error of agent 1 exceeds the predefined decaying error bound and agent 1 is isolated at time 24.The final convergence value (i.e.,the consensus value) coincides with the average of the initial states of the agents that are not isolated(see the blue dotted line in Fig.2a).For comparison,we deploy the MSR algorithm adopted in Kieckhafer and Azadmanesh (1994) and the resilient consensus algorithm used in Ramos et al.(2022),as shown in Fig.2b.Obviously,both existing algorithms fail to achieve accurate average consensus.The MSR algorithm does not remove the injected adverse impact.The resilient consensus algorithm in Ramos et al.(2022) cannot detect agent 5 as misbehaving,because agent 5 injects only false data but does not aim at deviating the consensus to a specific value.Hence,its consensus process will be affected by agent 5,leading to a deviation from the average consensus.In Fig.2c,we show the error inputs of agents 1 and 5.Note that agent 5 is not isolated since its error decays exponentially and is always within the error bound.

Fig.2 Resilient consensus performance of D-DCC:(a) state evolution of the system;(b) system state under different methods;(c) error inputs of agents 1 and 5 and compensation of agent 2 (References to color refer to the online version of this figure)

Note that the parametersαjandρjplay a key role in trading offthe fault tolerance and algorithm convergence.Here,we show the impact of different parameter selections on system performance.Obviously,if the values of parametersαjandρjare too small,the fault tolerance will be low.Hence,we only show the impact on the algorithm convergence with different parameters.Without loss of generality,letαjbe constant andρjchange.The results are given in Fig.3.The mean state in the figure represents the average state.It can be seen that the mean state converges faster asρdecreases.

Fig.3 Convergence of system states with varying ρ(References to color refer to the online version of this figure)

6.2 Resilient consensus under S-DCC

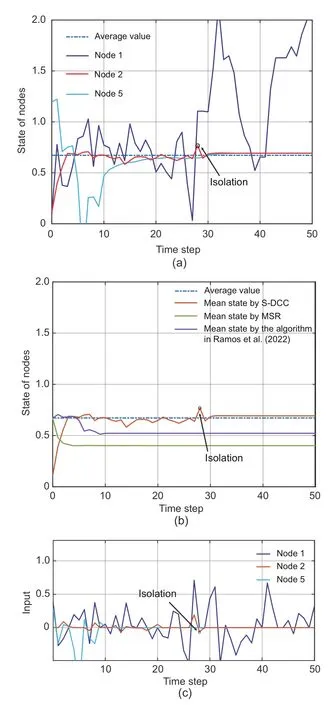

In this subsection,the error input of malicious agent 1 follows a GMM model when an attack is adopted,i.e.,In addition,the error input of faulty agent 5 follows a normal distribution.Here,the attack probability and connection probability are set to beθ1=0.8 andp=0.8,respectively.The performance of resilient consensus under S-DCC is provided in Fig.4.Specifically,Fig.4a demonstrates that the consensus is achieved with all agents except agent 1,since agent 1 causes errors continuously and exceeds the fault-tolerant bound,being isolated at time 28.Faulty agent 5 misbehaves only during the first 10 time slots and its error inputs are always within the fault-tolerant bound.Hence,agent 5 is not isolated and its errors are compensated for by neighbors.Here,the true average of the initial states of non-isolated agents is 0.672,while the similarly obtained number corresponding to the use of our methods is 0.693.We accordingly see that the latter is close to the true one.This is because the final consensus value varies randomly in practice,though the unbiased average consensus can be achieved theoretically.We also compare the S-DCC algorithm with the MSR algorithm in Kieckhafer and Azadmanesh(1994) and the consensus algorithm in Ramos et al.(2022).From Fig.4b,we see that the consensus values of the MSR algorithm and the algorithm in Ramos et al.(2022) are 0.401 and 0.522,respectively.The consensus achieved by S-DCC is more accurate.In Fig.4c,we show the error inputs of agents 1 and 5 and the compensation input of agent 2 under S-DCC.

Fig.4 Resilient consensus performance of S-DCC:(a) system state evolution;(b) system state under different methods;(c) error inputs of agents 1 and 5 and compensation of agent 2 (References to color refer to the online version of this figure)

7 Conclusions

In this work,we have studied the issue of resilient average consensus under misbehaving agents.First,considering scenarios with reliable communication,we have designed the D-DCC algorithm to eliminate the adverse impacts introduced by misbehaviors.We have proved that the resilient average consensus can be achieved by D-DCC.Furthermore,the S-DCC algorithm,as proposed in the present research,is imbued with the capability to adapt to scenarios wherein communication link failures may occur.It has been proved that the unbiased resilient average consensus in a statistical sense is achieved by S-DCC,and the absolute error between meanbased compensation and actual adverse impact has been analyzed using the Wasserstein distance.Finally,simulations have been conducted to illustrate the effectiveness of the proposed algorithms.In the future,it would be worthy to study the resilient consensus over time-varying and directed networks or high-dimensional systems.Resilient distributed optimization is also an interesting issue that can be investigated.

Contributors

Chongrong FANG,Wenzhe ZHENG,and Zhiyu HE designed the research.Chongrong FANG and Wenzhe ZHENG processed the data.Chongrong FANG,Wenzhe ZHENG,and Jianping HE drafted the paper.Chengcheng ZHAO and Jingpei WANG helped organize the paper.Jianping HE and Chengcheng ZHAO revised and finalized the paper.

Compliance with ethics guidelines

Chongrong FANG,Wenzhe ZHENG,Zhiyu HE,Jianping HE,Chengcheng ZHAO,and Jingpei WANG declare that they have no conflict of interest.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

List of supplementary materials

1 Algorithm 1 (D-DCC algorithm)

2 Illustrating example of the D-DCC algorithm

3 Algorithm 2 (S-DCC algorithm)

4 Proof of Theorem 3

Fig.S1 Example: a ring network with a single misbehaving agent 2

杂志排行

Frontiers of Information Technology & Electronic Engineering的其它文章

- Secure control and filtering for industrial metaverse

- The engineering of circular causality for specialization and design of complex systems:cad2CAS and casCAD2∗

- A low-noise,high-gain,and large-dynamic-range photodetector based on a JFET and a charge amplifier*

- A novel topology with controllable wideband baseband impedance for power amplifiers∗

- Empowering smart city situational awareness via big mobile data∗

- Asynchronous gain-scheduled control of deepwater drilling riser system with hybrid event-triggered sampling and unreliable communication∗#