A Discontinuity and Cusp Capturing PINN for Stokes Interface Problems with Discontinuous Viscosity and Singular Forces

2023-12-10YuHauTsengandMingChihLai

Yu-Hau Tseng and Ming-Chih Lai

1 Department of Applied Mathematics, National University of Kaohsiung,Kaohsiung 81148, Taiwan, China

2 Department of Applied Mathematics, National Yang Ming Chiao Tung University, 1001 Ta Hsueh Road, Hsinchu 300, Taiwan, China

Abstract.In this paper,we present a discontinuity and cusp capturing physicsinformed neural network (PINN) to solve Stokes equations with a piecewiseconstant viscosity and singular force along an interface.We first reformulate the governing equations in each fluid domain separately and replace the singular force effect with the traction balance equation between solutions in two sides along the interface.Since the pressure is discontinuous and the velocity has discontinuous derivatives across the interface,we hereby use a network consisting of two fully-connected sub-networks that approximate the pressure and velocity,respectively.The two sub-networks share the same primary coordinate input arguments but with different augmented feature inputs.These two augmented inputs provide the interface information,so we assume that a level set function is given and its zero level set indicates the position of the interface.The pressure sub-network uses an indicator function as an augmented input to capture the function discontinuity,while the velocity sub-network uses a cusp-enforced level set function to capture the derivative discontinuities via the traction balance equation.We perform a series of numerical experiments to solve two-and three-dimensional Stokes interface problems and perform an accuracy comparison with the augmented immersed interface methods in literature.Our results indicate that even a shallow network with a moderate number of neurons and sufficient training data points can achieve prediction accuracy comparable to that of immersed interface methods.

Key words: Stokes interface problem,immersed interface method,level set function,physics-informed neural network,discontinuity capturing shallow neural network,cuspcapturing neural network.

1 Introduction

The incompressible two-phase flow problems have a wide range of applications in various scientific and engineering fields;see [7] and the references therein.Very often,this kind of problem involves solving Stokes equations with a discontinuous viscosity and singular force (such as surface tension force) along the fluid interface.For simplicity,we call this problem the Stokes interface problem hereafter.Solving Stokes interface problems numerically is known to be quite challenging in the scientific computing community,giving rise to several difficulties.One major difficulty is to handle the coupling between two adjacent fluids and the interfacial boundary conditions (arising from the singular force).Another difficulty comes from that the pressure is,in fact,discontinuous,and the partial derivatives of the velocity are also discontinuous across the interface.Thus,careful numerical treatments must be adopted for accurate discretizations near the interface,regardless of using the finite difference or finite element method.

In order to address the above issues,Peskin proposed the immersed boundary(IB) method [16],which introduces a regularized version of the discrete delta function to discretize the singular force in a Cartesian grid setting.The discontinuous viscosity can also be regularized by a smoothed version of the Heaviside function.In this framework,the problem becomes smooth so that regular finite difference discretizations such as the MAC scheme can be applied to solve the Stokes equations.However,it is known that the IB method achieves only first-order accuracy [12]for the solution variables.To improve the accuracy,an alternative approach is to solve the Stokes equations within each fluid domain separately and reformulate the singular force term into the traction balance equation on the interface.The above two formulations will be given in Section 2.In this paper,we shall introduce a neural network methodology to solve the problem based on the second formulation.Since the present goal here is to make comparisons with traditional methods such as immersed interface method(IIM,also based on the second formulation)from the implementation and accuracy aspects,we provide a brief review of the IIM in the following.

The immersed interface method proposed by LeVeque and Li [9] was originally designed to solve elliptic interface problems with discontinuous coefficients.Their approach incorporates the derived jump conditions via local coordinates into the finite difference discretization so that the local truncation error can be reduced appropriately to achieve the solution in second-order accuracy in theL∞norm.Lai et al.[5,8] later proposed a simplified version of the IIM that directly utilizes jump conditions to achieve second-order accuracy in theL∞norm without resorting to local coordinates.Of course,there are other variants of solving elliptic interface problems using the jump conditions,such as the ghost fluid method[1,11],or meshless method [15].However,it is not our intention to give an exhaustive review here so readers who are interested in the recent results can refer to [15],and the references therein.Comparing with the well-developed solvers for elliptic interface problems,there are relatively few works to investigate the present Stokes interface problems with discontinuous viscosity and singular forces.As we mentioned earlier,the major difficulty is that the solution and derivative discontinuities are all coupled,making it hard to develop accurate numerical methods.In [10],Li et al.developed a new IIM-based method for the two-dimensional problem that decouples the jump conditions of the fluid variables by introducing two augmented velocity variables.Although the new augmented system of equations can be respectively written as three Poisson interface problems for the pressure and the augmented velocity,they are still coupled through the augmented variable jumps defined on the interface.As a result,the GMRES iterative method is used to solve the Schur complement system for the augmented variable jumps.Recently,Wang et al.[24] used the similar idea but a simple version of IIM and extended the method to solve the three-dimensional problems.

In addition to traditional grid-based methods,the scientific computing community has shown increasing interest in utilizing deep neural networks to tackle elliptic interface problems.One advantage of the neural network approach is its mesh-free nature,enabling us to handle problems with complex interfaces or irregular domains more easily.However,with the common usage of the smooth activation function in neural network methods,the network solution is naturally smooth and cannot capture the solution behaviors (function discontinuity or derivative discontinuity)sharply.One simple way to circumvent such difficulty is to decompose the computational domain into two sub-domains and employs separate networks for each sub-domain such as the networks in [2,4,23].Recently,the authors have developed a discontinuity capturing shallow neural network to solve the elliptic interface problems [6].The main idea is to represent ad-dimensional discontinuous function by a single(d+1)-dimensional continuous neural network function in which the extra augmented variable labels the sub-domains.Once the function representation is chosen,the rest of the method follows the physics-informed neural network (PINN) framework developed in [20].Meanwhile,we have also developed a (d+1)-dimensional cusp capturing neural network[21]to represent ad-dimensional continuous function but with discontinuous partial derivatives across the interface.In such a case,the extra augmented variable uses the cusp-enforced level set function instead.As we mentioned earlier,in the present Stokes interface problem,the pressure is usually discontinuous,and the partial derivatives of velocity are discontinuous,so they are well represented by the discontinuity capturing and cusp capturing networks,respectively.Both network function representations will be given in detail in Section 3.

The remainder of the paper is organized as follows.In Section 2,we first present the Stokes equations with a discontinuity viscosity and singular force.Then we reformulate the governing equations in each sub-domain and introduce the traction balance equation to replace the singular force effect.In Section 3,we introduce the discontinuity and cusp capturing PINN for solving the model problems.Numerical experiments demonstrating the accuracy of the proposed method compared with the existing augmented immersed interface methods are shown in Section 4.Some concluding remarks are given in Section 5.

2 Stokes equations with a discontinuous viscosity and singular force

In this paper,we aim to solve ad-dimensional incompressible Stokes system with a piecewise-constant viscosity and singular force on an embedded interface.This problem arises from the simulation of the flow of two adjacent fluids with different viscosity in the presence of interfacial force.We start by writing down the governing equations in the immersed boundary (IB) formulation [16],which regards the interface as a singular force generator so that the equations can be described in the whole fluid domain Ω.To begin with,let Ω⊂Rd,whered=2 or 3,be a bounded domain,and Γ be an embedded co-dimensional oneC1-interface that separates Ω into two subdomains,Ω-and Ω+,such that Ω=Ω-∪Ω+∪Γ.This Stokes interface problem can be written as in [10,24]

Here,u=(u1,···,ud)∈Rdis the fluid velocity,pis the pressure,and g=(g1,···,gd)is an external force,which are all functions of x=(x1,···xd)∈Ω.The viscosityμ(x)is defined piecewise-constantly such thatμ(x)=μ±if x∈Ω±.The force term f is a singular concentrated body force expressed in the IB formulation as f(x)=F(X)δ(x-X)dS,where the interfacial force F=(F1,...,Fd) andδ(x) is thed-dimensional Dirac delta function.Due to the delta function singularity,one can immediately see that the pressure and velocity should not be smooth mathematically.In fact,one can reformulate the governing equations (2.1a)-(2.1c) in each fluid domain Ω±separately,but the solutions are linked by the traction balance equation (2.2c) on the interface Γ as follows.

The unit outward normal vector,n=(n1,···,nd),points from Ω-to Ω+along the interface Γ.This above traction balance equation (2.2c) can be derived in [19].

Since the fluid is viscous,the velocity is continuous across the interface.However,the traction balance equation (2.2c) shows that the pressure is discontinuous and the velocity has discontinuous partial derivatives.Indeed,we can rewrite Eq.(2.2c)component-wisely as

In the next section,we shall present a neural network learning methodology based on PINN [20] to solve Eqs.(2.2a)-(2.2b) with the traction balance equation (2.4),subject to the boundary condition(2.2d).One can see from Eq.(2.4)that there aredessential interface jump conditions in the present neural network method.On the contrary,the implementation of IIM in [10] requires 6 interface jump conditions for solving the problem with the dimensiond=2 as follows.

Here,τis the tangential vector along the interface Γ.For the three-dimensional case,one needs to use even 9 interface jump conditions of velocity and pressure in order to implement the augmented IIM in [24].Thus,it is more advantageous to use the present neural network method to solve the Stokes interface problems than the augmented IIMs regarding the interface jump conditions needed,not to mention the latter ones need to solve the resultant linear systems that lack good symmetric properties.

3 A discontinuity and cusp capturing PINN

In this section,we present a discontinuity and cusp capturing physics-informed neural network to solve Stokes equations (2.2a)-(2.2b) with the traction balance equation (2.4),subject to the boundary condition (2.2d).To proceed,we assume there exists a smooth level set functionφ(x) such that the interior and exterior domains are defined as Ω-={x∈Ω|φ(x)<0}and Ω+={x∈Ω|φ(x)>0},respectively,with the interface position given by the zero level set,Γ={x∈Ω|φ(x)=0}.

Since the pressure has jump discontinuity across the interface Γ,we follow the idea proposed in[6]and represent thed-dimensional discontinuous functionp(x)by a (d+1)-dimensional continuous neural network functionP(x,y),where x∈Ω andy∈R.We then set the augmented variableyto be the indicator functionI(x) as

So the pressurep(x)in Ω±can be represented by the neural network functionP(x,y=±1).We point out that the neural networkPis a smooth extension in Rd+1if a smooth activation function is used.The pressurep(x)in Ω-and Ω+can be regarded as the network functionPrestricted on two disjointd-dimensional hyperplanes,S-={(x,-1)|x∈Ω-}⊂Rd+1andS+={(x,1)|x∈Ω+}⊂Rd+1,respectively.Consequently,one can rewrite the pressure jumpas the difference of the continuous network functionPevaluated at the interface position on the two disjoint hyperplanes,S±.That is,for any xΓ∈Γ,

The gradient ofpin Ω±can be written by

where∇xP ∈Rdrepresents a vector consisting of the partial derivatives ofPwith respect to the components in x,and∂yPis the shorthand notation of partial derivative ofPwith respect to the augmented variabley.

Similarly,following the idea in [21],we represent thed-dimensional non-smooth velocity function u(x) by a (d+1)-dimensional smooth neural network function U(x,z)=(U1(x,z),···Ud(x,z)).We then set the augmented variablezto bez=φa(x)=|φ(x)| so the velocity u(x) in Ω can be represented by a neural network U(x,z=φa(x)).Here,we callφa(x) as a cusp-enforced level set function since it exhibits a gradient jump defined as(xΓ)=2∇φ(xΓ) for xΓ∈Γ.Based on the above property,one can immediately see that the gradient of velocity∇uk=∇xUk+∂zUk∇φa,k=1,...,din Ω±has discontinuity jump across the interface which inherits the cusp-like solution behavior of the velocity.More precisely,the jump ofμ∇ukacross the interface can be derived directly as

fork=1,...,d.Similarly,the jump ofcan be calculated by

By multiplying the normal vector n=∇φ/‖∇φ‖to the above equations (3.4) and(3.5),respectively;we obtain the following derivative jumps of u as follows

fork=1,...,d.Therefore,the interfacial velocity derivative jump terms in Eq.(2.4)can be expressed by the smooth network function U(x,z) with the assistance of the level set functionφ.

After careful calculation,the Laplacian ofukin Ω±can also be explicitly expressed in terms ofUkandφaas

where Δxrepresents the Laplace operator applied only to the variable x.Since the neural network function U is smooth,the continuity of velocity vector holds automatically,and calculating the derivatives of the network U with respect to its input variables x andzvia automatic differentiation [3] is straightforward.

Next,we express the Stokes equations (2.2a)-(2.2b) in terms ofPand U as follows.To simplify,we introduce the notationLUkto represent the right-hand side ofμΔukin Eq.(3.7) so that Eqs.(2.2a) and (2.2b) are written as

We also denote the sum of the right-hand sides of Eqs.(3.6a)-(3.6b)byDkU,so the traction balance equation (2.4) has the form as

Regarding the boundary condition Eq.(2.2d),we use xBto represent the points located on∂Ω so it becomes

Next,we aim to show the network architecture for the pressureP(x,I(x)) and the velocity U(x,φa(x)).Fig.1 illustrates the present neural network structure,where (x,I(x))∈Rd+1represents thed+1 feature input for the sub-networkP,while (x,φa(x))∈Rd+1represents the input for the sub-network U.Although the two sub-networks have separate structures,their output layers are fed into the same loss function,Loss(θ),which will be given in detail later.For simplicity,we assume that both sub-networks have the same depth number,L,and we use the subscripts★=’p’,’u’ to distinguish which sub-network is present.In each sub-network,we label the input layer as layer 0 and denote the feature input as=(x,I(x))Tor(x,φa(x))T,a column vector in Rd+1.The output at theℓ-th hidden layer,consisting

where the residual errorLI,incompressibility constraint errorLD,traction balance condition errorLΓ,and boundary condition errorLB,are shown respectively as follows:

The constantscBandcΓappeared in the loss function (3.14) are chosen to balance the contribution of the terms related to the traction balance equation(3.9),and the boundary condition (3.10),respectively.

4 Numerical results

In this section,we perform a series of accuracy tests for the proposed discontinuity and cusp capturing neural network method on solving two-and three-dimensional Stokes interface problems described by Eqs.(2.2a)-(2.2d).We set the penalty constants in the loss function,cΓ=cB=1.This approach enables the use of smooth neural network functions defined in Rd+1,denoted byP(x,y) and U(x,z),to learn discontinuous and non-smooth solutions in Rd,p(x) and u(x),respectively.We denote the network-predicted solutions asPNand UN=(UN,1,···,UN,d).Throughout this section,we represent the interface Γ using the zero level set of a suitable level set functionφ(x).We use the indicator functionI(x) and the cusp-enforced level set functionφa(x)=|φ(x)| as the augmented input for the network ofPNand UN,respectively.

To ensure theC2-regularity of u(x) in each subdomain,we employ the sigmoid functionσ(x)=as the activation function.For the numerical examples presented here(except the last example whose solution is not available,so deeper neural network approximations are used),we adopt a completely shallow network structure(L=1)withNpandNuneurons for the pressure and velocity,respectively,as shown in the network architecture Fig.1.So the number of total hyper-parameters to be trained isNθ=(d+3)Np+2(d+1)Nu.The training and test data points are generated using the Latin hypercube sampling algorithm[14].This algorithm effectively avoids clustering data points at specific locations,resulting in a nearly random sampling.To assess the accuracy of the network solution,we selectMtestpoints(distinct from the training points) within Ω and calculate theL∞errors as follows:

Here,theL∞error of a functionfis defined as‖f‖∞=We set the number of test points for each experiment to beMtest=100M,whereMrepresents the total number of training points used.Since the predicted results will vary slightly due to the randomness of training and test data points and the initialization of trainable parameters,we report the average value of errors and losses over 5 trial runs.

During the training process,we employ the Levenberg-Marquardt (LM) algorithm[13]as the optimizer to train the network and update the damping parameterνusing the strategies outlined in[22].The training process is terminated either when the loss value Loss(θ)falls below a threshold∈θ=10-14or the maximum iteration stepEpochmax=3000 is reached,unless otherwise stated.All experiments are performed on a desktop computer equipped with a single NVIDIA GeForce RTX3060 GPU.We implement the present network architecture using PyTorch (v1.13) [18],and all trainable parameters(i.e.,weights and biases)are initialized using PyTorch’s default settings.We have made the source code used in the paper available on GitHub at https://github.com/yuhautseng/.

Let us remark on the choice of test examples in the following.As mentioned earlier,we aim to emphasize the ease of code implementation for the problem compared with the traditional IIM;thus,we have taken the test examples in 2D and 3D directly from the literature [10] and [24],respectively.One might wonder why the interfaces Γ chosen there are in circular (2D) or spherical (3D) form.The reason is quite apparent since it is easy to construct the analytic solution under such a circumstance (the solution must satisfy the jump conditions (2.4) fork=1,...d).Nevertheless,this kind of simple interface shape cannot be regarded as a limitation of the present neural network methodology since it is completely mesh-free.Indeed,it can be applied to more complex interface cases when the analytic solutions are not available (see Example 4.5).

Notice that,it is not completely fair to make a running time comparison between the present neural network method with IIM since the former method is mesh-free while the latter one is grid-based.The present PINN method learns the solution directly while the IIM solves the solution on uniform grids.Besides,the results in[10]and[24]were run in different computing hardware and it is unlikely for us to reproduce the results.To provide some idea of the time performance of the present PINN method,we rerun our code in a slightly faster desktop machine equipped with a single NVIDIA GeForce RTX4090 GPU.The total training time up to 3000 epochs for the two-dimensional case with (Np,M0)=(20,30) (as shown in second row of Table 1) cost approximately 59 seconds while the three-dimensional case with (Np,Nu)=(40,100) (as shown in third row of Table 3) cost approximately 253 seconds,respectively.

Table 1: The L∞errors of PN and UN for Example 4.1.Left panel: the present network result.Here,Nθ=17Np, M=M0(M0+7), ∈θ=10-14,and Epochmax=3000.Right panel: IIM results in [10] with grid resolutions 1282,2562,and 5122.

Example 4.1.We start by solving a two-dimensional Stokes interface problem with a piecewise-constant viscosity and a singular force in a domain Ω=[-2,2]2.This example is taken directly from Example 4.2 in[10]so that we can compare the results with the augmented immersed interface method developed there.The interface Γ is simply a unit circle defined as the zero level set of the functionφ(x)=+-1.We specify the viscosityμ(x),and the exact solutionsp(x) and u(x)=(u1(x),u2(x)) as

The external force g(x)=(g1(x),g2(x)) thus can be derived accordingly as

and the interfacial force F(xΓ)=(F1(xΓ),F2(xΓ))=(-x1-2x2,-x2+2x1)can also be obtained from Eq.(2.4).

As mentioned earlier,we use a completely shallow network structure (L=1)withNpandNuneurons for the pressurePNand velocity UN,respectively.Here,we chooseNp=10,20,30,andNu=2Npso that the total training parameters isNθ=17Np.We also vary the training points accordingly by introducing a grid parameterM0=20,30,40 (can be regarded as the grid number used in traditional grid-based methods) so that the training dataset consists ofMI=points in Ω-∪Ω+,MΓ=3M0points on the interface Γ,andMB=4M0points on the boundary∂Ω.The total number of training points isM=M0(M0+7).

Table 1 shows theL∞errors ofPNand UN,as well as the corresponding training losses.We also show the results obtained by IIM in[10]using various grid resolutions in the right panel for comparison.It can be observed that the proposed network method achieves prediction accuracy on the order ofO(10-5)toO(10-8)as(Np,M0)varies from (10,20) to (30,40),with the loss dropping fromO(10-10) toO(10-15)accordingly.Meanwhile,Table 1 can be considered as an informal convergence analysis associated with the number of learnable parameters and training points used in the network.As seen,using a resolution of 5122uniform mesh,the IIM yields the accuracyandof the magnitudeO(10-5) that is at least two orders of magnitude greater than the one obtained by the present network using relatively fewer training pointsM=1880.

Example 4.2.In the second example (also referred to as Example 4.3 in [10]),we keep the domain Ω and interface Γ same as shown in Example 4.1.However,we choose the pressure jump no longer as a constant and it is defined by

The exact solution of velocity u(x)=(u1(x),u2(x)) is chosen as

and the external force g(x)=(g1(x),g2(x)) is derived as

The interfacial force,F(xΓ)=(F1(xΓ),F2(xΓ)),can be derived from the jump condition (2.4) as

For the network structure,we choose the exactly same setup as in Example 4.1.That is,we use the same values ofNpandM0but vary the viscosity contrast as(μ-,μ+)=(1,0.1),(10-3,1),(1,10-3).Table 2 shows theL∞norm errors ofPNand UN,and the corresponding training losses in the left panel.The corresponding results from IIM [10] are shown in the right panel for comparison.In the first case(μ-,μ+)=(1,0.1),by varying (Np,M0) from (10,20) to (30,40),we again observe that the present network predictionsPNand UNcan achieve the accuracy withL∞errors ranging from the orderO(10-5) toO(10-7) and the loss drops fromO(10-10) toO(10-14) accordingly.As we increase the neuronsNpand the training pointsM0,we again see an informal evidence for the numerical convergence of the present method.The results in [10] have=1.54×10-4and=6.49×10-5under the resolution using a 512×512 uniform mesh.Meanwhile,we also show the capability of the present method for interface problems with high viscosity contrastas(μ-,μ+)=(10-3,1)and(1,10-3).The present method achieves accuracy withL∞errors ranging from=O(10-4) to=O(10-7) which outperforms the results obtained from IIM significantly.

Table 2: The L∞errors of PN and UN for Example 4.2.Left panel: the present network result.Here,Nθ=17Np, M=M0(M0+7), ∈θ=10-14,and Epochmax=3000.Right panel: IIM results in [10] with grid resolutions 1282,2562 and 5122.

Example 4.3.We now consider solving a three-dimensional Stokes interface problem with a piecewise-constant viscosity (μ-=1 andμ+=0.1) and singular force in a domain Ω=[-2,2]3.The interface Γ is a simple unit sphere so it can be described by the zero level set of the functionφ(x)=-1.This example is taken directly from Example 6.2 in [24] so that we can compare the results with the augmented immersed interface method developed there.We list the exact solutions of pressurep(x) and velocity u(x) as follows:

Here,the velocity componentsu1(x),u2(x),andu3(x)are all smooth functions over the entire Ω,andp(x) is a piecewise constant.Accordingly,the external source g(x)=(g1(x),g2(x),g3(x)) can be derived as

Again,the interfacial force can be derived from the jump condition (2.4) as

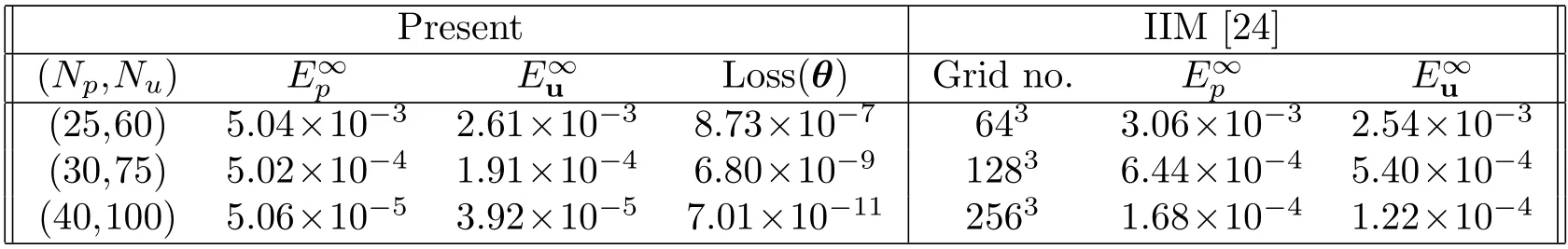

Unlike the two-dimensional cases in previous examples,we now fix the number of training points but vary the number of neuronsNpandNuto ease our computations.For the sampling of training data points,we generateMIdata points in the region Ω-∪Ω+,MBon the domain boundary (MB/6 on each face of∂Ω),andMΓdata points on the surface Γ generated using DistMesh[17].The total number of training points used in each of the following numerical runs isM=6216(MI=3000,MB=2400,andMΓ=816).In the left panel of Table 3,we present theL∞errors ofPNandUNobtained using the proposed method under three different (Np,Nu) pairs.Note that the total number of parameters can be computed asNθ=6Np+8Nu.As the network size increases,the proposed method achieves accurate predictions withL∞errors ranging from the order ofO(10-3) toO(10-5).The right panel of Table 3 lists the results generated from the augmented IIM method [24].One can see that,the present method can achieve comparably accurate results with the augmented IIM even far less the grid resolutions used.

Table 3: The L∞errors of PN and UN for Example 4.3.Left panel: the present network results.Here, μ-=1 and μ+=0.1, M=6216 training data points.Right panel: IIM results in [24] with grid resolutions 643,1283,and 2563.

Example 4.4.As the fourth example (also referred to as Example 6.3 in [24]),we keep the domain Ω and interface Γ the same as shown in Example 4.3.The piecewise constant viscosity is also chosen asμ-=1 andμ+=0.1.However,we choose the pressure jump no longer as a constant and the exact solutions of the pressurep(x)and velocity u(x)=(u1(x),u2(x),u3(x)) are given as follows.

and the external force g is given

Therefore,the interfacial force has the form F=(F1,F2,F3) as

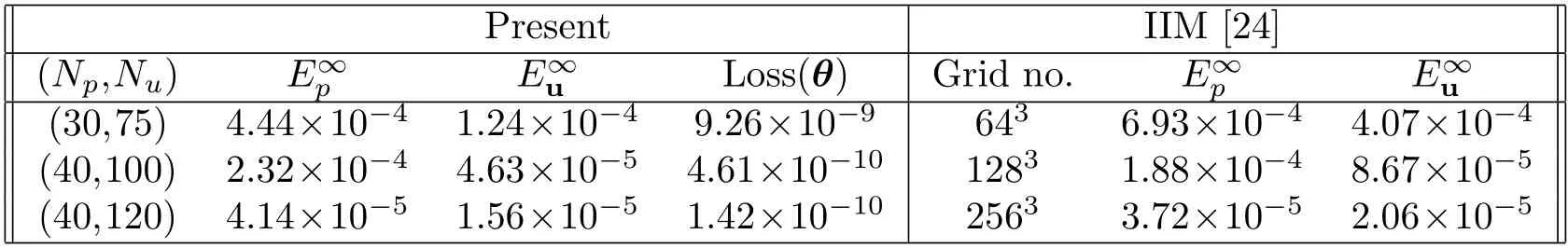

Since the domain and the interface are exactly the same as in Example 4.3,we use the same number of training pointsM.However,we use slightly different neuron pairs (Np,Nu)=(30,75),(40,100),(40,120) to generate the solution predictions.Table 4 shows the results for different (Np,Nu) values.One can see that using far less learnable parameters (maximalNθ=1200 parameters to be learned) and training points (M=6216),we are able to produce accurate results comparable to the augmented IIM proposed in [24].

Table 4: The L∞errors of PN and UN for Example 4.4.Left panel: the present network results.Here, μ-=1 and μ+=0.1, M=6216 training data points.Right panel: IIM results in [24] with grid resolutions 643,1283,and 2563.

Example 4.5.Unlike the previous examples with analytic solutions in regular domains,here,we consider the Stokes interface problem with a piecewise-constant viscosity (μ-=10,μ+=1) in the two-dimensional irregular circular domain Ω={x∈R2|‖x‖2≤2}whose solution is not available.The embedded interface Γ is parametrized as the polar function xΓ(α)=r(α)(cosα,sinα),wherer(α)=1-0.2cos(3α) withα ∈[0,2π),representing a flower shape with three petals.We useφ(x)=+-r(αx)as the level set function,whereαx=tan-1so the augmented feature inputs,I(x) andφa(x),can be obtained as before accordingly.Along the interface,we now define the surface tension force F(α)=-γκ(α)n(α) to mimic the stationary two-phase flow at some time instant.Here,γis the surface tension andκis the signed curvature of the curve.For simplicity,we set the surface tensionγ=1,the external force g=0,and the boundary condition ub=0.

Since the interface is more complex and the solution is more complicated,we hereby employ a four-hidden-layer deep neural network with (Np,Nu)=(15,20) in each hidden layer,resulting in a total number ofNθ=2175 trainable parameters used.(A comparison with a shallow network will be provided later.) The overall number of training points is chosen asM=7816,consisting ofMI=4816 points in Ω-∪Ω+,MΓ=1000 points on the interface Γ,andMB=2000 points on the boundary∂Ω.We finish the training by either the loss falling below a threshold∈θ=10-8or reaching the maximumEpochmax=5000 epochs.Since the analytic solution is unavailable,we investigate the flow behavior by plotting the pressure value and velocity quiver over the whole domain Ω in Fig.2.

Fig.2(a)shows the pressure predictionPNin Ω with the interface labeled by the red dashed line.Since the surface tension force acts in the normal direction along the interface,one can clearly see the pressure jump across the interface.The pressure jump is significantly larger,especially near the tip of each petal,where the curvature is relatively large.Meanwhile,near the tip part of each petal (positive curvature region),the pressure inside is higher than the outside one.On the contrary,the pressure inside is lower than the outside one near the neck part (negative curvature region).Fig.2(b) displays the corresponding velocity quiver UNin the domain Ω.It is observed that the flow tends to reduce the absolute magnitudes of curvature along the interface and relaxes to a circular shape.This instant flow tendency matches well with the simulation when the interface dynamics is taken into account.Meanwhile,since the fluid is incompressible,we can see three vortex dipoles (or six counter-rotating vortices) appearing at the petals.

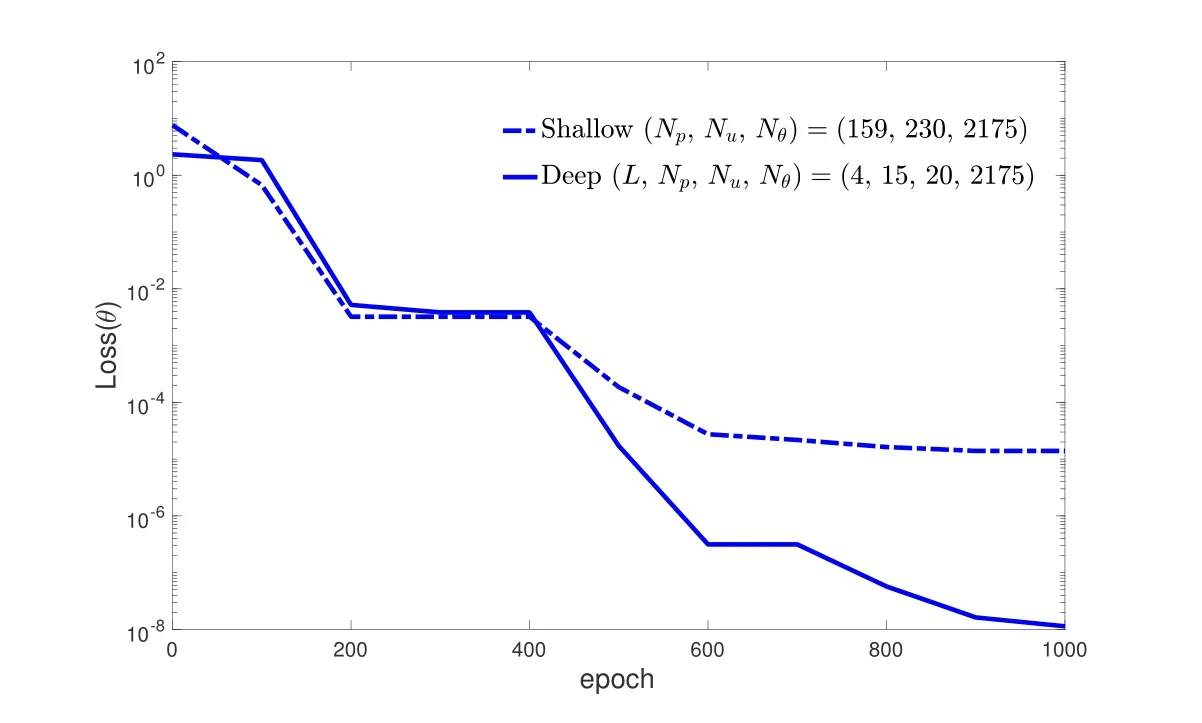

Figure 3: Evolution of training losses using shallow (dash-dotted line) and deep (solid line) networks with the same numbers of trainable parameters (Nθ=2175) and training points (M=7816).

We conclude this example by showing that the deeper neural network used here indeed performs better than a shallow one.To demonstrate that,we use a shallow network structure with neurons (Np,Nu)=(159,230) so the total number of trainable parametersNθ=2175 is the same as the one used in present four-hidden-layer network with(Np,Nu)=(15,20)in each hidden layer.Also we fix the training pointsM=7816 and train both networks up to 1000 epochs.The training time for both networks are almost the same since we use the same numbers of trainable parameters and training points.Fig.3 shows both evolution of training losses.One can see that,using the four-hidden-layer network,the training loss can drop down to the order of 10-8within 1000 epochs,while the training loss of the shallow one only drops to the order of 10-5.This illustration shows an appropriate network architecture can indeed impact the efficiency of the training process.

5 Conclusions

Solving Stokes equations with a piecewise-constant viscosity and singular force on an interface using traditional grid-based methods encounter the major challenge of computing the non-smooth solutions numerically.One can reformulate the governing equations by solving the Stokes equations in two sub-domains separately;however,the pressure and velocity from both sub-domains are coupled together by the traction balance equation on the interface.This makes the grid-based methods hard to discretize accurately near the interface and the resultant linear system hard to solve.In this paper,we provide a neural network approach to solve the equations.Our approach is based on adopting a discontinuity capturing sub-network to approximate the pressure and a cusp-capturing sub-network to approximate the velocity so that the network predictions can retain the inherent properties of the solutions.Since the present neural network method is completely mesh-free,the implementation is much easier than the traditional grid-based methods,such as immersed interface method,which needs careful and laborious efforts to discretize the equations accurately near the interface.A series of numerical experiments are performed to test the accuracy against the results obtained from the immersed interface methods in the existing literature.A completely shallow(one-hidden-layer)network with a moderate number of neurons and sufficient training data points can obtain comparably accurate results obtained from the traditional methods.We shall apply the present network to solve realistic two-phase flow applications in the future.

Acknowledgements

Y.-H.Tseng and M.-C.Lai acknowledge the supports by National Science and Technology Council,Taiwan,under research grants 111-2115-M-390-002 and 110-2115-M-A49-011-MY3,respectively.

杂志排行

Annals of Applied Mathematics的其它文章

- Specht Triangle Approximation of Large Bending Isometries

- A Review of Process Optimization for Additive Manufacturing Based on Machine Learning

- Fast High Order and Energy Dissipative Schemes with Variable Time Steps for Time-Fractional Molecular Beam Epitaxial Growth Model

- Connections between Operator-Splitting Methods and Deep Neural Networks with Applications in Image Segmentation

- Preface

- The Acoustic Scattering of a Layered Elastic Shell Medium