Picking Location and Navigation Methods for Vision-based Picking Robots

2023-11-09

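Abstract: Autonomous navigation and picking localization are key tasks of picking robots, and automating them can effectively reduce manual labor intensity and improve operational accuracy and efficiency. This paper describes and analyzes vision-based picking localization and autonomous navigation methods for picking robots, covering drivable-area detection for visual navigation, fruit target recognition, and picking-point localization. Based on the state of research in China and abroad, it reviews the latest developments in machine vision and discusses future trends.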

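Keywords: picking robot; machine vision; autonomous navigation; drivable area detection; fruit target recognition; picking point localization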

CLC number: TP391.4    Document code: A    Article ID: 1674-2605(2023)05-0001-07

DOI:10.3969/j.issn.1674-2605.2023.05.001


MENG Hewei (1), ZHOU Xinzhao (1, 2), WU Fengyun (3, 4), ZOU Tianlong (2)

(1.College of Mechanical and Electrical Engineering, Shihezi University, Shihezi 832000, China

2.Foshan-Zhongke Innovation Research Institute of Intelligent Agriculture, Foshan 528010, China

3.Guangzhou College of Commerce, Guangzhou 511363, China

4.College of Engineering, South China Agricultural University, Guangzhou 510642, China)



0 Introduction

在世界各地,水果在農业经济中占有越来越重要的地位。根据联合国粮食及农业组织的统计数据,自1991年至2021年以来,葡萄、苹果、柑橘等水果的生产总值呈现稳步增长的趋势[1]。水果收获具有工作周期短、劳动密集、耗时等特点。随着人口老龄化和

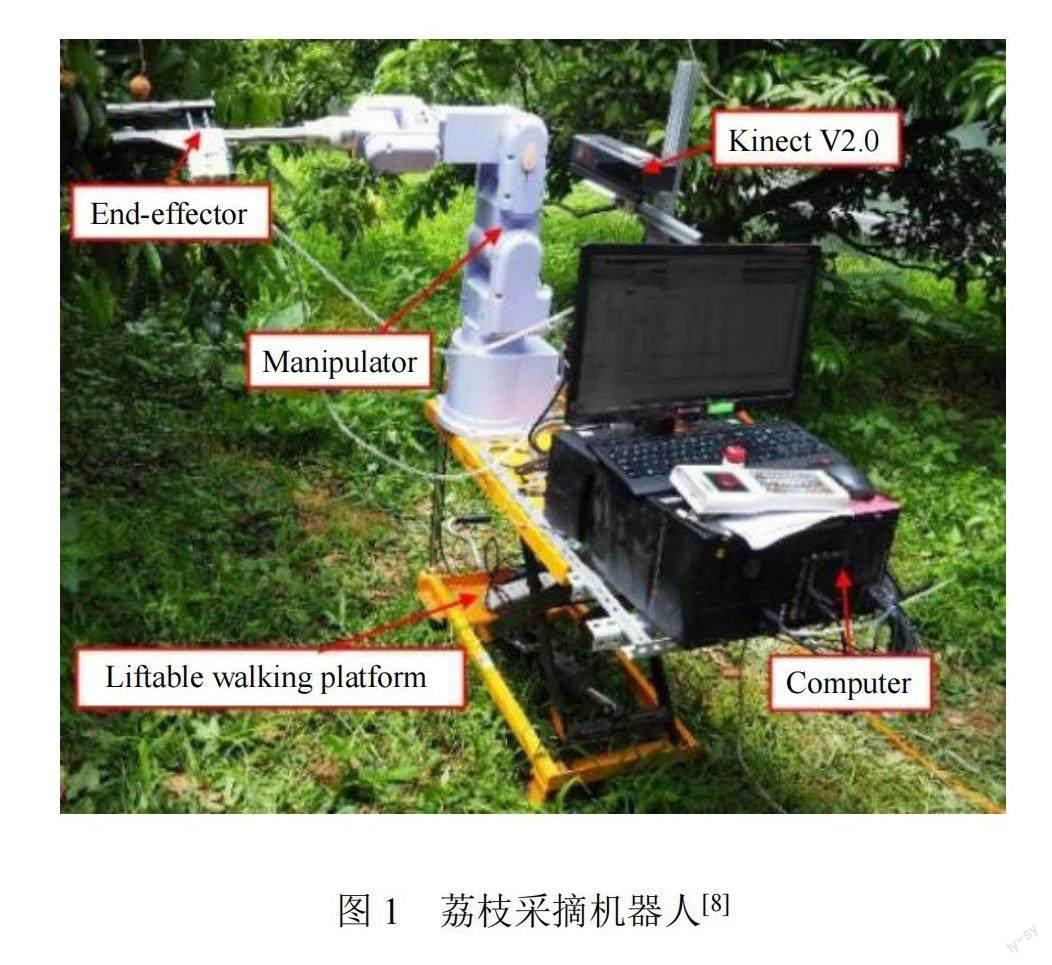

农村劳动力的短缺,人工成本逐年增加,劳动力需求与人工成本之间的矛盾日益突出,制约了中国传统农业的发展。随着现代信息技术、人工智能技术的快速发展,面向苹果[2-3]、番茄[4-5]、荔枝[6-8]、火龙果[9]、茶叶[10-11]、甜椒[12-13]等多种作物的采摘机器人及相关技术[14-15]得到了国内外学者的关注。采摘机器人的应用对提高生产力、作业效率以及农业可持续性发展具有重要的意义。荔枝采摘机器人如图1所示。

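Compared with industrial robots, picking robots operate in more complex environments with many interfering factors, numerous obstacles, and a high degree of irregularity, and occlusion by fruit-tree foliage degrades the positioning accuracy of the global positioning system (GPS). Machine vision offers low cost, simple operation, and rich information, which makes it better suited to complex environments such as hillsides and farmland where GPS signals are blocked. The key technology in machine-vision navigation is drivable-area detection, for which research methods are usually divided into machine learning-based segmentation methods and image feature-based segmentation methods.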

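In addition to traveling autonomously in orchards, picking robots must also pick fruit automatically in complex environments. Achieving low-damage, intelligent, human-like picking is the central challenge in applying picking robots. Current research concentrates mainly on fruit target recognition and picking-point localization.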

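This paper analyzes research progress on vision-based autonomous navigation and picking localization for picking robots. After summarizing and analyzing machine learning-based and image feature-based drivable-area segmentation methods, it describes the state of development of fruit target recognition and picking-point localization methods, and finally, in the context of unmanned farms and smart agriculture, discusses future application scenarios for picking-robot localization and navigation technology.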

1 Machine Vision-based Autonomous Navigation

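The main purpose of drivable-area detection is to extract obstacle-free drivable areas from complex scenes, which lays the foundation for determining the navigation path. By their characteristics, drivable areas fall into two categories: structured and unstructured. Structured drivable areas resemble standardized roads such as urban streets and highways, with clear lane markings, regular road edges, and distinct geometric features. Unstructured drivable areas resemble orchard and rural roads and the spaces between crop rows, with irregular edges, unclear boundaries, and no lane markings. Compared with structured drivable areas, unstructured ones have more complex backgrounds: most have uneven surfaces with randomly distributed weeds.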

1.1 Machine Learning-based Drivable Area Segmentation Methods

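Machine learning-based drivable-area segmentation methods include clustering [16], support vector machines (SVM) [17], and deep learning [18]. YANG et al. [19] proposed a visual navigation path extraction method based on neural networks and pixel scanning, introducing the SegNet and U-Net networks to improve the segmentation of orchard road conditions from the background environment; the final navigation path was then fitted with a sliding filter algorithm, a scanning method, and a weighted average method. LEI et al. [20] combined an improved seed SVM with 2D lidar point-cloud data to detect and recognize unstructured roads. WANG et al. [21] used an illumination-invariant image and jointly analyzed a probability map and gradient information to extract roads in complex scenes. KIM et al. [22] used a lightweight patch-based convolutional neural network (CNN) to recognize paths autonomously in semi-structured orchard environments. ALAM et al. [23] combined a nearest neighbor (NN) classification algorithm with soft-voting aggregation to extract roads in both structured and unstructured environments. Some researchers [24-26] have applied machine learning to road extraction from remote-sensing imagery, but these methods are not applicable to picking robots.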
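The path-fitting stage described for YANG et al. [19] (scan the segmented drivable area row by row, smooth, then fit with a weighted average) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a segmentation network has already produced a binary mask, takes the midpoint between the leftmost and rightmost drivable pixels in each row, smooths the midpoints with a moving average, and fits a straight line with weights that favor rows near the bottom of the image (closest to the robot). The function name `extract_navigation_line`, the window length, and the weighting scheme are hypothetical choices.

```python
import numpy as np


def extract_navigation_line(mask, window=5):
    """Fit a navigation line x = a * y + b to a binary drivable-area mask.

    mask   -- 2-D array, nonzero where a pixel was labelled drivable
              (e.g. by a segmentation network; assumed to be given).
    window -- length of the moving-average (sliding) filter on midpoints.
    Returns (a, b) in pixel units, where y is the image row index.
    """
    mask = np.asarray(mask, dtype=bool)
    rows, mids = [], []
    for y in range(mask.shape[0]):
        xs = np.flatnonzero(mask[y])          # drivable columns in row y
        if xs.size == 0:
            continue                          # skip rows with no drivable pixels
        rows.append(y)
        mids.append((xs[0] + xs[-1]) / 2.0)   # centre between left and right edge
    if len(rows) < 2:
        raise ValueError("need at least two drivable rows to fit a line")

    rows = np.asarray(rows, dtype=float)
    mids = np.asarray(mids, dtype=float)

    # Sliding filter: average midpoints and their row positions over a window,
    # keeping at least two smoothed points so a line can still be fitted.
    k = max(1, min(window, len(mids) - 1))
    kernel = np.ones(k) / k
    mids_s = np.convolve(mids, kernel, mode="valid")
    rows_s = np.convolve(rows, kernel, mode="valid")

    # Weighted least-squares fit; rows nearer the bottom of the image
    # (larger y, closer to the robot) get larger weights (an assumption).
    weights = (rows_s + 1.0) / (rows_s.max() + 1.0)
    a, b = np.polyfit(rows_s, mids_s, deg=1, w=weights)
    return float(a), float(b)


# Usage: a road band that drifts one pixel to the right per row.
demo = np.zeros((40, 64), dtype=bool)
for y in range(40):
    demo[y, y:y + 21] = True                  # drivable columns y .. y+20
print(extract_navigation_line(demo))          # slope about 1.0, intercept about 10.0
```

Smoothing the row positions with the same kernel as the midpoints keeps each averaged midpoint paired with the row it actually represents, even when some rows are skipped.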

1.2 Image Feature-based Drivable Area Segmentation Methods

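Image feature-based drivable-area segmentation methods build models that use features such as color and texture to distinguish road from non-road regions. ZHOU et al. [27] used the H (hue) component to extract the target path from the sky region. CHEN et al. [28-29] used an improved grayscale factor and Otsu's method (maximum between-class variance) to obtain grayscale images of soil and plants, achieving soil-plant segmentation in greenhouse environments. ZHOU et al. [30] optimized the grayscale factor on top of an image preprocessing algorithm, extracted unstructured roads through dual-space fusion, and on this basis recognized unstructured roads and roadside fruit synchronously, as shown in Fig. 2.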
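Otsu's method, used by CHEN et al. [28-29], picks the gray level that maximizes the between-class variance of the image histogram. The sketch below pairs it with the widely used excess-green index 2G - R - B as a stand-in grayscale factor; the improved factor of [28-29] is not reproduced here, so the ExG choice and the function names are assumptions for illustration only.

```python
import numpy as np


def excess_green(rgb):
    """Excess-green index 2G - R - B for an H x W x 3 RGB array.

    Assumed stand-in for the 'grayscale factor'; not the improved factor of [28-29].
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 2.0 * g - r - b


def otsu_threshold(gray, bins=256):
    """Return the threshold that maximizes the between-class variance."""
    gray = np.asarray(gray, dtype=float).ravel()
    hist, edges = np.histogram(gray, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                      # pixel count of the lower class
    w1 = w0[-1] - w0                          # pixel count of the upper class
    m0 = np.cumsum(hist * centers)            # cumulative intensity mass
    mu0 = m0 / np.maximum(w0, 1)              # mean of the lower class
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)   # mean of the upper class
    between = w0 * w1 * (mu0 - mu1) ** 2      # unnormalized between-class variance
    k = int(np.argmax(between))
    return edges[k + 1]                       # upper edge of the last lower-class bin


def segment_plants(rgb):
    """True where a pixel is classified as vegetation, False for soil/background."""
    exg = excess_green(rgb)
    return exg >= otsu_threshold(exg)


# Usage: left half soil-coloured, right half leaf-coloured.
img = np.zeros((4, 10, 3))
img[:, :5] = (120, 90, 60)                    # soil: ExG = 0
img[:, 5:] = (40, 160, 40)                    # plant: ExG = 240
mask = segment_plants(img)                    # left half False, right half True
```

Because `np.histogram` assigns a value equal to a bin edge to the bin on its right, the comparison `exg >= threshold` puts exactly the upper-class pixels in the foreground.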

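QI et al. [31] segmented road regions with a graph-based manifold ranking method and fitted the road-region model with a binomial function, achieving road recognition in rural environments. Some researchers have incorporated spatial-structure features such as the vanishing point into road extraction. For example, SU et al. [32] extracted roads using Dijkstra's algorithm combined with a single-line lidar, building on an illumination-invariant image and a vanishing-point constraint; PHUNG et al. [33] detected pedestrian lanes using an improved vanishing-point estimation method that combines geometry and color. However, vanishing-point detection is time-consuming [34] and is mostly applied to structured roads, which makes it unsuitable for unstructured roads.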
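Graph-based manifold ranking, as used by QI et al. [31], scores each node of a graph by how strongly it is connected to a set of seed (query) nodes. A standard closed form is f = (I - alpha * S)^-1 y, where S = D^-1/2 W D^-1/2 is the symmetrically normalized affinity matrix, D is the diagonal degree matrix of W, and y marks the seeds. The sketch below assumes each node is a feature vector (for example, the mean color of a superpixel) on a fully connected Gaussian-affinity graph; the graph construction, alpha, sigma, and seed selection used in [31] may well differ.

```python
import numpy as np


def manifold_ranking(features, seeds, alpha=0.99, sigma=0.1):
    """Rank graph nodes by relevance to seed nodes: f = (I - alpha*S)^-1 y.

    features -- N x D array, one feature vector per node (e.g. mean colour of a
                superpixel; this node definition is an assumption).
    seeds    -- indices of nodes assumed to be road (the query).
    """
    X = np.asarray(features, dtype=float)
    n = len(X)
    # Fully connected graph with Gaussian affinities (simplifying assumption).
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-d2 / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)
    # Symmetric normalization S = D^-1/2 W D^-1/2.
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(W.sum(axis=1), 1e-12))
    S = d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    y = np.zeros(n)
    y[list(seeds)] = 1.0
    # Solve the linear system instead of forming the inverse explicitly.
    return np.linalg.solve(np.eye(n) - alpha * S, y)


# Usage: three grey "road" nodes and three green "vegetation" nodes (RGB in [0, 1]).
nodes = [(0.50, 0.50, 0.50), (0.52, 0.49, 0.51), (0.48, 0.51, 0.50),
         (0.10, 0.60, 0.10), (0.12, 0.62, 0.09), (0.09, 0.58, 0.11)]
scores = manifold_ranking(nodes, seeds=[0])
road = scores > scores.mean()                 # simple split of the ranking scores
```

With alpha below 1 the matrix I - alpha * S is always invertible, since the eigenvalues of S lie in [-1, 1].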

2 Machine Vision-based Picking Localization

2.1 Fruit Target Recognition

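Fruit target recognition methods fall mainly into two categories: methods based on traditional image feature analysis and methods based on deep learning.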

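Methods based on traditional image feature analysis recognize fruit mainly by color [35], shape and texture [36], or a combination of features [37-38]. For example, PÉREZ-ZAVALA et al. [39] used shape and texture information to separate regions of clustered pixels into grape bunches, achieving an average detection precision of 88.61% and an average recall of 80.34%. ZHOU et al. [40] segmented grape berries from the image background with the K-nearest neighbor algorithm and Otsu's method, and then recognized the berries with a circular Hough transform. LIU et al. [41] separated and counted grape bunches using color and texture information together with an SVM. WU et al. [42] extracted potential foreground regions of bayberry fruit with a CbCr color-difference method and a region-growing strategy.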
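ZHOU et al. [40] recognized grape berries with a circular Hough transform. For a known radius r, every edge pixel votes for all candidate centers at distance r from it, and true centers collect the most votes. The sketch below implements only this fixed-radius case with NumPy; a practical berry detector would scan a range of radii and start from an edge map produced by an edge detector, neither of which is shown. The function name and the number of sampled angles are illustrative assumptions, not details from [40].

```python
import numpy as np


def hough_circle_fixed_radius(edges, radius, n_angles=180):
    """Vote for centres of circles with a known radius.

    edges  -- 2-D boolean edge map (e.g. from an edge detector; assumed given).
    radius -- circle radius in pixels (assumed known, e.g. a typical berry size).
    Returns an accumulator array with the same shape as `edges`.
    """
    edges = np.asarray(edges, dtype=bool)
    h, w = edges.shape
    acc = np.zeros((h, w), dtype=np.int64)
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    offsets = np.stack([np.round(radius * np.sin(theta)),
                        np.round(radius * np.cos(theta))], axis=1).astype(int)
    offsets = np.unique(offsets, axis=0)      # each (dy, dx) offset votes only once
    ys, xs = np.nonzero(edges)
    for dy, dx in offsets:
        cy, cx = ys - dy, xs - dx             # candidate centre for each edge pixel
        inside = (cy >= 0) & (cy < h) & (cx >= 0) & (cx < w)
        np.add.at(acc, (cy[inside], cx[inside]), 1)
    return acc


# Usage: draw one circle of radius 8 centred at row 20, column 25.
img = np.zeros((50, 50), dtype=bool)
t = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
img[20 + np.round(8 * np.sin(t)).astype(int),
    25 + np.round(8 * np.cos(t)).astype(int)] = True
acc = hough_circle_fixed_radius(img, radius=8)
centre = np.unravel_index(np.argmax(acc), acc.shape)   # expected at or near (20, 25)
```

Deduplicating the rounded offsets keeps the vote count per cell bounded by the number of distinct offsets, so accumulator peaks are comparable across radii if the sketch is extended to a radius search.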

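Methods based on traditional image feature analysis have limitations in variable environments, so deep learning-based methods have developed rapidly and are widely used in smart agriculture [43-47]. They have received considerable attention in crop growth-morphology recognition [48-53], classification and localization [54-57], tracking and counting [58-60], and pest and disease identification [61-64], and deep learning techniques have also been studied in depth for fruit and vegetable detection and recognition. LI et al. [65] used a Faster R-CNN model together with color difference and color-difference ratio to detect and segment apples against cluttered orchard backgrounds. WANG et al. [66] developed an apple fruitlet detection method based on a channel-pruned YOLOv5s algorithm; the model is small and fast. FU et al. [67] modified the YOLOv4 model to rapidly detect banana bunches and stalks in natural orchard environments. HAYDAR et al. [68] built a deep learning-enabled machine-vision control system based on the OpenCV AI Kit (OAK-D) and the YOLOv4-tiny model, which detects fruit height and automatically adjusts the position of the picking-tooth rake on the harvester header. LI et al. [69] proposed Strawberry R-CNN, based on an improved Faster R-CNN, and designed a strawberry recognition and counting evaluation method by constructing a strawberry counting-error set. SUNIL et al. [70] classified tomato plant leaf images using ResNet50, a multilevel feature fusion network (MFFN), and an adaptive attention mechanism combining channel, spatial, and pixel attention, achieving MFFN-based tomato plant disease classification. CHENG et al. [71] segmented fruit from background regions with a deep object-detection network and obtained the fruit's 3D point cloud and spatial position using stereo matching and triangulation.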
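Detectors such as Faster R-CNN and the YOLO family used in [65-69] typically output many overlapping candidate boxes, which are pruned by non-maximum suppression (NMS) based on intersection over union (IoU). The minimal sketch below shows the common greedy NMS step on boxes given as (x1, y1, x2, y2) with positive area; the 0.5 IoU threshold is a common default, not a value taken from the cited works, and each cited model may use its own variant.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]          # indices by descending score
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        overlap = iou(boxes[i], boxes[rest])
        order = rest[overlap <= iou_thresh]   # discard boxes that overlap too much
    return keep


# Usage: two overlapping detections of one fruit plus a separate fruit.
boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)                     # [0, 2]
```

The first two boxes share an 81-pixel intersection out of a 119-pixel union (IoU of about 0.68), so the lower-scoring one is suppressed.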

2.2 Picking Point Localization

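After fruit targets are recognized, the picking point is located according to the characteristics of the fruit. This provides operational information to the picking end-effector and enables low-damage, accurate fruit harvesting.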

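ZHANG et al. [72] coarsely segmented tomato peduncles with a YOLACT model, refined the segmentation using the positional matching relationships of regions of interest (ROI), a thinning algorithm, dilation, and peduncle morphological features, and finally computed the picking-point coordinates from depth information. XU et al. [73] detected tea buds and leaves with an improved YOLOv4-Dense algorithm and OpenCV image processing, and then located the picking points on the principle that the intersection of the fusing line with the target picking region is the ideal picking point. SONG et al. [74] built RMHSA-NeXt, a semantic segmentation algorithm that combines a multi-head self-attention mechanism with multi-scale feature fusion, to segment tea picking points with high accuracy and fast inference. NING et al. [75] recognized grape stems using a mask region-based convolutional neural network (Mask R-CNN) and threshold segmentation, and took the stem centroid as the picking point. DU et al. [76] used an improved Mask R-CNN model and a set-logic algorithm to detect table grapes and locate clamping points for flower thinning. LIANG et al. [77] determined the peduncle ROI from the centroid and contour boundary of the tomato fruit cluster, and located the picking point from the first fruit bifurcation point and the corner point of the peduncle skeleton. BI et al. [78] estimated strawberry pose by segmenting the point cloud of ripe strawberries, and determined the picking point from the pose centroid and the strawberry height. ZHAO et al. [79], building on the idea that the picking point is subordinate to the bounding box, used an improved YOLOv4 to detect grapes and predict picking points simultaneously. ZHANG et al. [80] screened pickable tomato clusters using YOLOv4 and the connectivity between tomato clusters and their peduncles, and determined picking points from depth information and color features. WU et al. [81] proposed a top-down grape stem localization approach that integrates object detection and keypoint detection to locate stems and their picking points. JIN et al. [82] built a far-close-range stereo vision system to recognize and locate grape clusters and stems, and located picking points with a stem centroid recognition algorithm. TANG et al. [83] improved the YOLOv4-tiny model with k-means++ anchor-box clustering and proposed a binocular stereo matching strategy based on the extracted target ROI, which reduces computational cost while detecting Camellia oleifera fruit and locating picking points in complex environments. To enable intelligent banana harvesting, WU et al. [84-85] proposed an improved YOLOv5-B model, built an experimental stereo-vision robot platform for cutting banana male flower clusters, and obtained the 3D coordinates of the inflorescence-axis cutting point. These studies lay the foundation for unmanned operation and low-damage picking by picking robots.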
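Several of the methods above take the centroid of a segmented stem as the picking point ([75], [82]) and recover its 3D position from depth or stereo data ([72], [80], [83], [84-85]). Under an ideal pinhole model with rectified stereo, depth is Z = f * B / d (focal length f in pixels, baseline B, disparity d in pixels), and a pixel (u, v) back-projects to X = (u - c_x) * Z / f_x and Y = (v - c_y) * Z / f_y. The sketch below chains these steps; the function names and the camera intrinsics in the usage example are hypothetical, and real systems add calibration, lens-distortion correction, and outlier handling that are omitted here.

```python
import numpy as np


def mask_centroid(mask):
    """Pixel centroid (u, v) = (column, row) of a binary stem mask."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("empty stem mask")
    return cols.mean(), rows.mean()


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Rectified-stereo depth Z = f * B / d (metres when B is in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def pixel_to_camera(u, v, z, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth z into camera coordinates (X, Y, Z)."""
    return np.array([(u - cx) * z / fx, (v - cy) * z / fy, z])


# Usage with hypothetical intrinsics: fx = fy = 800 px, principal point (320, 240),
# baseline 0.06 m, and a stem mask spanning rows 230-249 at columns 399-401.
stem = np.zeros((480, 640), dtype=bool)
stem[230:250, 399:402] = True
u, v = mask_centroid(stem)                    # (400.0, 239.5)
z = depth_from_disparity(24.0, focal_px=800.0, baseline_m=0.06)   # about 2.0 m
point = pixel_to_camera(u, v, z, fx=800.0, fy=800.0, cx=320.0, cy=240.0)
```

The resulting point is in the camera frame; a hand-eye calibration transform would still be needed to express it in the robot arm's base frame.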

3 Conclusions and Outlook

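This paper has reviewed the application of machine vision to picking localization and autonomous navigation in picking robots, covering machine vision-based autonomous navigation, fruit target recognition, and picking-point localization.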

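Although artificial intelligence and deep learning methods have improved the accuracy and reliability of picking-robot operations, the complexity and uncertainty of agricultural environments mean that machine-vision applications still suffer from considerable localization errors. It is therefore necessary to combine the control systems of picking robots with innovative mechanism design and to develop active error-tolerance mechanisms between machine vision and the end-effector, so as to reduce target localization and operation errors.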

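During autonomous travel, picking robots are subject to dynamic disturbances such as uneven ground and vibration, which degrade image quality and reduce navigation accuracy. Image preprocessing techniques should therefore be combined with navigation algorithms featuring real-time denoising and multi-source information fusion to improve the robustness and reliability of picking robots operating in field orchards. In addition, coordinated multi-behavior decision-making between picking and travel is a direction worth studying.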

References


[1]FAO. Value of agricultural production [EB/OL]. (2023-09-23) [2023-09-23]. https://www.fao.org/faostat/en/#data/QV/visualize.

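[2]DING Y, JI W, XU B, et al. Parameter self-tuning impedance control for compliant grasping of apple picking robots[J]. Transactions of the Chinese Society of Agricultural Engineering, 2019,35(22):257-266. (in Chinese)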

[3]LI T, XIE F, ZHAO Z, et al. A multi-arm robot system for efficient apple harvesting: Perception, task plan and control[J]. Computers and Electronics in Agriculture, 2023,211:107979.

[4]LI Y, FENG Q, LIU C, et al. MTA-YOLACT: Multitask-aware network on fruit bunch identification for cherry tomato robotic harvesting[J]. European Journal of Agronomy, 2023,146:126812.

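[5]YU F, ZHOU C, YANG X, et al. Design and experiment of a tomato picking robot for solar greenhouses[J]. Transactions of the Chinese Society for Agricultural Machinery, 2022,53(1):41-49. (in Chinese)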

[6]ZHONG Z, XIONG J, ZHENG Z, et al. A method for litchi picking points calculation in natural environment based on main fruit bearing branch detection[J]. Computers and Electronics in Agriculture, 2021,189:106398.

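[7]CHEN Y, JIANG Z, LI J, et al. Design and performance test of a litchi picking end-effector with integrated clamping and cutting[J]. Transactions of the Chinese Society for Agricultural Machinery, 2018,49(1):35-41. (in Chinese)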

[8]LI J, TANG Y, ZOU X, et al. Detection of fruit-bearing branches and localization of litchi clusters for vision-based harvesting robots[J]. IEEE Access, 2020,8:117746-117758.

[9]ZHANG F, CAO W, WANG S, et al. Improved YOLOv4 recognition algorithm for pitaya based on coordinate attention and combinational convolution[J]. Frontiers in Plant Science, 2022,13:1030021.

[10]CHEN C, LU J, ZHOU M, et al. A YOLOv3-based computer vision system for identification of tea buds and the picking point[J]. Computers and Electronics in Agriculture, 2022,198:107116.

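[11]YANG H, ZHONG Y, JIANG Y, et al. Hybrid trajectory planning strategy for a parallel tea-picking robot based on time-jerk optimality[J]. Journal of Mechanical Engineering, 2022,58(9):62-70. (in Chinese)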

[12]BARTH R, HEMMING J, VAN HENTEN E J. Angle estimation between plant parts for grasp optimisation in harvest robots[J]. Biosystems Engineering, 2019,183:26-46.

[13]HESPELER S C, NEMATI H, DEHGHAN-NIRI E. Non-destructive thermal imaging for object detection via advanced deep learning for robotic inspection and harvesting of chili peppers[J]. Artificial Intelligence in Agriculture, 2021,5:102-117.

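[14]LIN J, WANG H, ZOU X, et al. Obstacle avoidance path planning and simulation of a mobile picking robot based on DPPO[J]. Journal of System Simulation, 2023,35(8):1692-1704. (in Chinese)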

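[15]HUO H, ZOU X, CHEN Y, et al. Obstacle avoidance planning and simulation for vision-based robots using obstacle point-cloud mapping[J/OL]. Journal of System Simulation: 1-12 [2023-09-20]. http://kns.cnki.net/kcms/detail/11.3092.V.20230823.0932.002.html. (in Chinese)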

[16]ZHANG Z, ZHANG X, CAO R, et al. Cut-edge detection method for wheat harvesting based on stereo vision[J]. Computers and Electronics in Agriculture, 2022,197:106910.

[17]LIU Y, XU W, DOBAIE A M, et al. Autonomous road detection and modeling for UGVs using vision-laser data fusion[J]. Neurocomputing, 2018,275:2752-2761.

[18]LI Y, HONG Z, CAI D, et al. A SVM and SLIC based detection method for paddy field boundary line[J]. Sensors, 2020,20(9):2610.

[19]YANG Z, OUYANG L, ZHANG Z, et al. Visual navigation path extraction of orchard hard pavement based on scanning method and neural network[J]. Computers and Electronics in Agriculture, 2022,197:106964.

[20]LEI G, YAO R, ZHAO Y, et al. Detection and modeling of unstructured roads in forest areas based on visual-2D lidar data fusion[J]. Forests, 2021,12(7):820.

[21]WANG E, LI Y, SUN A, et al. Road detection based on illuminant invariance and quadratic estimation[J]. Optik, 2019,185:672-684.

[22]KIM W S, LEE D H, KIM Y J, et al. Path detection for autonomous traveling in orchards using patch-based CNN[J]. Computers and Electronics in Agriculture, 2020,175:105620.

[23]ALAM A, SINGH L, JAFFERY Z A, et al. Distance-based confidence generation and aggregation of classifier for unstructured road detection[J]. Journal of King Saud University - Computer and Information Sciences, 2022,34(10):8727-8738.

[24]XIN J, ZHANG X, ZHANG Z, et al. Road extraction of high-resolution remote sensing images derived from DenseUNet[J]. Remote Sensing, 2019,11(21):2499.

[25]GUAN H, LEI X, YU Y, et al. Road marking extraction in UAV imagery using attentive capsule feature pyramid network[J]. International Journal of Applied Earth Observation and Geoinformation, 2022,107:102677.

[26]YANG M, YUAN Y, LIU G. SDUNet: Road extraction via spatial enhanced and densely connected UNet[J]. Pattern Recognition, 2022,126:108549.

[27]ZHOU M, XIA J, YANG F, et al. Design and experiment of visual navigated UGV for orchard based on Hough matrix and RANSAC[J]. International Journal of Agricultural and Biological Engineering, 2021,14(6):176-184.

[28]CHEN J, QIANG H, WU J, et al. Extracting the navigation path of a tomato-cucumber greenhouse robot based on a median point Hough transform[J]. Computers and Electronics in Agriculture, 2020,174:105472.

[29]CHEN J, QIANG H, WU J, et al. Navigation path extraction for greenhouse cucumber-picking robots using the prediction-point Hough transform[J]. Computers and Electronics in Agriculture, 2021,180:105911.

[30]ZHOU X, ZOU X, TANG W, et al. Unstructured road extraction and roadside fruit recognition in grape orchards based on a synchronous detection algorithm[J]. Frontiers in Plant Science, 2023,14:1103276.

[31]QI N, YANG X, LI C, et al. Unstructured road detection via combining the model-based and feature-based methods[J]. IET Intelligent Transport Systems, 2019,13(10):1533-1544.

[32]SU Y, ZHANG Y, ALVAREZ J M, et al. An illumination-invariant nonparametric model for urban road detection using monocular camera and single-line lidar[C]//2017 IEEE International Conference on Robotics and Biomimetics (ROBIO). IEEE, 2017:68-73.

[33]PHUNG S L, LE M C, BOUZERDOUM A. Pedestrian lane detection in unstructured scenes for assistive navigation[J]. Computer Vision and Image Understanding, 2016,149:186-196.

[34]XU F, HU B, CHEN L, et al. An illumination robust road detection method based on color names and geometric information[J]. Cognitive Systems Research, 2018,52:240-250.

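[35]WANG Y, ZHANG X. Melon fruit segmentation algorithm under complex backgrounds[J]. Transactions of the Chinese Society of Agricultural Engineering, 2014,30(2):176-181. (in Chinese)

[36]TIAN Y, LI T, LI C, et al. Image recognition method for grape diseases based on support vector machine[J]. Transactions of the Chinese Society of Agricultural Engineering, 2007(6):175-180. (in Chinese)

[37]XIE Z, JI C. Color fruit image segmentation method based on color model and texture features[J]. Journal of Xihua University (Natural Science Edition), 2009,28(4):41-45. (in Chinese)

[38]LU J, SANG N. Detection of on-tree citrus targets and recovery of occluded contours under varying illumination[J]. Transactions of the Chinese Society for Agricultural Machinery, 2014,45(4):76-81;60. (in Chinese)

[39]PÉREZ-ZAVALA R, TORRES-TORRITI M, CHEEIN F A, et al. A pattern recognition strategy for visual grape bunch detection in vineyards[J]. Computers and Electronics in Agriculture, 2018,151:136-149.

[40]ZHOU W, ZHA Z, WU J. Maturity discrimination of Red Globe grape bunches in the field using an improved circular Hough transform[J]. Transactions of the Chinese Society of Agricultural Engineering, 2020,36(9):205-213. (in Chinese)

[41]LIU S, WHITTY M. Automatic grape bunch detection in vineyards with an SVM classifier[J]. Journal of Applied Logic, 2015,13(4):643-653.

[42]WU L, LEI H, CHEN Z, et al. Bayberry recognition and localization method based on local sliding-window technique[J]. Automation & Information Engineering, 2021,42(6):30-35;48. (in Chinese)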

[43]TANG Y, CHEN M, WANG C, et al. Recognition and localization methods for vision-based fruit picking robots: A review[J]. Frontiers in Plant Science, 2020,11:510.

[44]SANAEIFAR A, GUINDO M L, BAKHSHIPOUR A, et al. Advancing precision agriculture: The potential of deep learning for cereal plant head detection[J]. Computers and Electronics in Agriculture, 2023,209:107875.

[45]WENG S, TANG L, QIU M, et al. Surface-enhanced Raman spectroscopy charged probes under inverted superhydrophobic platform for detection of agricultural chemicals residues in rice combined with lightweight deep learning network[J]. Analytica Chimica Acta, 2023,1262:341264.

[46]KHAN S, ALSUWAIDAN L. Agricultural monitoring system in video surveillance object detection using feature extraction and classification by deep learning techniques[J]. Computers and Electrical Engineering, 2022,102:108201.

[47]GUO R, XIE J, ZHU J, et al. Improved 3D point cloud segmentation for accurate phenotypic analysis of cabbage plants using deep learning and clustering algorithms[J]. Computers and Electronics in Agriculture, 2023,211:108014.

[48]YU S, FAN J, LU X, et al. Deep learning models based on hyperspectral data and time-series phenotypes for predicting quality attributes in lettuces under water stress[J]. Computers and Electronics in Agriculture, 2023,211:108034.

[49]PAN Y, ZHANG Y, WANG X, et al. Low-cost livestock sorting information management system based on deep learning[J]. Artificial Intelligence in Agriculture, 2023,9:110-126.

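[50]LIANG J, HUANG B, PAN D. Design of a logistics parking-lot guidance system based on image recognition[J]. Mechanical & Electrical Engineering Technology, 2022,51(11):163-166. (in Chinese)

[51]CHEN C, JIA W, LIU S, et al. Qualitative identification and quantitative analysis of silicon species in high-salinity wastewater[J]. China Measurement & Test, 2023,49(2):87-92. (in Chinese)

[52]GUO L, SHEN D, MAO H, et al. Research on a big-data analysis method based on morphological similarity recognition for well-logging lithology identification[J]. Computer Knowledge and Technology, 2023,19(3):54-56. (in Chinese)

[53]GONG Z, DONG C, YU H, et al. Development of machine vision-based software for measuring winter wheat leaf morphology[J]. Chinese Journal of Agrometeorology, 2022,43(11):935-944. (in Chinese)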

[54]SUNIL G C, ZHANG Y, KOPARAN C, et al. Weed and crop species classification using computer vision and deep learning technologies in greenhouse conditions[J]. Journal of Agriculture and Food Research, 2022,9:100325.

[55]PUTRA Y C, WIJAYANTO A W. Automatic detection and counting of oil palm trees using remote sensing and object-based deep learning[J]. Remote Sensing Applications: Society and Environment, 2023,29:100914.

[56]LIU B, LONG J, CHENG F, et al. Research on image classification of logistics goods based on convolutional neural network[J]. Mechanical & Electrical Engineering Technology, 2021,50(12):79-82;175. (in Chinese)

[57]LIN J, XU Y. Design of an automatic acquisition, training and detection instrument for fruit pose images[J]. China Measurement & Test, 2021,47(7):119-124. (in Chinese)

[58]WU Z, SUN X, JIANG H, et al. NDMFCS: An automatic fruit counting system in modern apple orchard using abatement of abnormal fruit detection[J]. Computers and Electronics in Agriculture, 2023,211:108036.

[59]WU F, YANG Z, MO X, et al. Detection and counting of banana bunches by integrating deep learning and classic image-processing algorithms[J]. Computers and Electronics in Agriculture, 2023,209:107827.

[60]CHENG H, CHEN H, CAO H, et al. Design and implementation of a UAV target tracking system[J]. Mechanical & Electrical Engineering Technology, 2020,49(11):165-167. (in Chinese)

[61]ALSHAMMARI H H, TALOBA A I, SHAHIN O R. Identification of olive leaf disease through optimized deep learning approach[J]. Alexandria Engineering Journal, 2023,72:213-224.

[62]GIAKOUMOGLOU N, PECHLIVANI E M, SAKELLIOU A, et al. Deep learning-based multi-spectral identification of grey mould[J]. Smart Agricultural Technology, 2023,4:100174.

[63]KAUR P, HARNAL S, GAUTAM V, et al. An approach for characterization of infected area in tomato leaf disease based on deep learning and object detection technique[J]. Engineering Applications of Artificial Intelligence, 2022,115:105210.

[64]ZHU D J, XIE L Z, CHEN B X, et al. Knowledge graph and deep learning based pest detection and identification system for fruit quality[J]. Internet of Things, 2023,21:100649.

[65]LI T, FANG W, ZHAO G, et al. An improved binocular localization method for apple based on fruit detection using deep learning[J]. Information Processing in Agriculture, 2023,10(2):276-287.

[66]WANG D, HE D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning[J]. Biosystems Engineering, 2021,210:271-281.

[67]FU L, WU F, ZOU X, et al. Fast detection of banana bunches and stalks in the natural environment based on deep learning[J]. Computers and Electronics in Agriculture, 2022,194:106800.

[68]HAYDAR Z, ESAU T J, FAROOQUE A A, et al. Deep learning supported machine vision system to precisely automate the wild blueberry harvester header[J]. Scientific Reports, 2023,13(1):10198.

[69]LI J, ZHU Z, LIU H, et al. Strawberry R-CNN: Recognition and counting model of strawberry based on improved faster R-CNN[J]. Ecological Informatics, 2023,77:102210.

[70]SUNIL C K, JAIDHAR C D, PATIL N. Tomato plant disease classification using multilevel feature fusion with adaptive channel spatial and pixel attention mechanism[J]. Expert Systems with Applications, 2023,228:120381.

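[71]CHENG J, ZOU X, CHEN M, et al. A general 3D perception framework for multiple classes of complex fruit targets[J]. Automation & Information Engineering, 2021,42(3):15-20. (in Chinese)

[72]ZHANG Q, PANG Y, LI B. Visual localization and picking pose estimation of tomato clusters based on instance segmentation[J/OL]. Transactions of the Chinese Society for Agricultural Machinery: 1-13 [2023-09-18]. http://kns.cnki.net/kcms/detail/11.1964.S.20230808.1632.024.html. (in Chinese)

[73]XU F, ZHANG K, ZHANG W, et al. A method for recognizing and locating picking points of tea buds and leaves based on an improved YOLOv4 algorithm[J]. Journal of Fudan University (Natural Science), 2022,61(4):460-471. (in Chinese)

[74]SONG Y, YANG S, ZHENG Z, et al. Semantic segmentation algorithm for tea picking points based on a multi-head self-attention mechanism[J]. Transactions of the Chinese Society for Agricultural Machinery, 2023,54(9):297-305. (in Chinese)

[75]NING Z, LUO L, LIAO J, et al. Grape stem recognition and optimal picking localization based on deep learning[J]. Transactions of the Chinese Society of Agricultural Engineering, 2021,37(9):222-229. (in Chinese)

[76]DU W, WANG C, ZHU Y, et al. Locating clamping points for flower thinning of table grapes using an improved Mask R-CNN algorithm[J]. Transactions of the Chinese Society of Agricultural Engineering, 2022,38(1):169-177. (in Chinese)

[77]LIANG X, JIN C, NI M, et al. Acquisition and experiments of picking-point position information for tomato fruit clusters[J]. Transactions of the Chinese Society of Agricultural Engineering, 2018,34(16):163-169. (in Chinese)

[78]BI S, WEI P, LIU R. Spatial pose recognition and picking-point localization method for elevated-cultivation strawberries in greenhouses[J]. Transactions of the Chinese Society for Agricultural Machinery, 2023,54(9):53-64;84. (in Chinese)

[79]ZHAO R, ZHU Y, LI Y. An end-to-end lightweight model for grape and picking point simultaneous detection[J]. Biosystems Engineering, 2022,223:174-188.

[80]ZHANG Q, CHEN J, LI B, et al. Recognition and localization method of tomato cluster picking points based on RGB-D information fusion and object detection[J]. Transactions of the Chinese Society of Agricultural Engineering, 2021,37(18):143-152. (in Chinese)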

[81]WU Z, XIA F, ZHOU S, et al. A method for identifying grape stems using keypoints[J]. Computers and Electronics in Agriculture, 2023,209:107825.

[82]JIN Y, YU C, YIN J, et al. Detection method for table grape ears and stems based on a far-close-range combined vision system and hand-eye-coordinated picking test[J]. Computers and Electronics in Agriculture, 2022,202:107364.

[83]TANG Y, ZHOU H, WANG H, et al. Fruit detection and positioning technology for a Camellia oleifera C. Abel orchard based on improved YOLOv4-tiny model and binocular stereo vision[J]. Expert Systems with Applications, 2023,211:118573.

[84]WU F, DUAN J, AI P, et al. Rachis detection and three-dimensional localization of cut off point for vision-based banana robot[J]. Computers and Electronics in Agriculture, 2022,198:107079.

[85]WU F, DUAN J, CHEN S, et al. Multi-target recognition of bananas and automatic positioning for the inflorescence axis cutting point[J]. Frontiers in Plant Science, 2021,12:705021.

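About the authors:

MENG Hewei, male, born in 1982, Ph.D., professor and doctoral supervisor. Main research interests: agricultural mechanization, agricultural electrification and automation. E-mail: mhw_mac@126.com

ZHOU Xinzhao, female, born in 1997, Ph.D. Main research interests: smart agriculture, machine vision, agricultural electrification and automation. E-mail: zxinzhao@126.com

WU Fengyun (corresponding author), female, born in 1988, Ph.D. Main research interests: smart agriculture, machine vision. E-mail: fyseagull@163.com

ZOU Tianlong, male, born in 1986, junior college diploma. Main research interest: integrated application of measurement and control systems. E-mail: 84174619@qq.com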