Process Monitoring Based on Temporal Feature Agglomeration and Enhancement

2023-03-27

Xiao Liang, Weiwu Yan, Yusun Fu, and Huihe Shao

Dear Editor,

This letter proposes a process-monitoring method based on temporal feature agglomeration and enhancement, in which a novel feature extractor called the contrastive feature extractor (CFE) extracts the temporal and relational features among process parameters. The feature representations are then enhanced by maximizing the separation among different classes while minimizing the scatter within each class.

Process monitoring is a popular research area in the process industries. As manufacturing processes become more complex and intelligent, the demand for safety and product quality is growing significantly. Process monitoring plays a crucial role in maintaining efficient and safe operating conditions in large-scale industrial production. Data-driven process monitoring has been one of the most fruitful research areas and has been widely used in industrial applications over the last two decades [1] and [2].

Data-driven process monitoring is usually implemented using machine learning methods and multivariate statistical analyses. The key issue in data-based process monitoring is to extract feature representations from industrial process data, so that the statistics of the monitoring model can be constructed in the feature-representation space. Various supervised-learning methods have been introduced to learn feature representations for building process-monitoring models. PCA is the most widely used linear process-monitoring method. Nonlinear and robust methods, such as nonlinear PCA, the principal curve method, multimodal methods, manifold learning, and kernel-based methods (KPCA, KPLS, KICA, KFDA, etc.), have been proposed to address nonlinearity and uncertainty in complex industrial processes [1]. Some methods, such as manifold learning and graphical models, have been proposed to extract complicated relational features of industrial process data. These process-monitoring methods extract static relationships among process variables without regard to their temporal information. Because temporal variation can indicate changes in dynamic performance, process-monitoring methods that consider temporal behavior are becoming a focus of process-monitoring research. Dynamic latent variable (DLV) methods have been studied for time-series monitoring models by exploiting dynamic relations among process variables. To make full use of label information, supervised approaches such as Bayesian networks (BN) and support vector machines (SVM) are used to guide the training of feature extraction [2]. Recently, deep learning has been introduced into process monitoring for its outstanding performance in extracting robust features and learning nonlinear feature representations. Deep learning-based process-monitoring models show excellent and promising performance.

Process-monitoring research for industrial processes has made considerable progress in the past few decades. However, it remains extremely challenging to create high-performance process-monitoring models for large-scale, time-variant and complex industrial processes. In such time-variant and complex industrial production systems, the temporal and relational features of the process data contain important information.

The motivation of this letter is to exploit temporal and relational information from time-variant and complex industrial process data to create process-monitoring models. A Transformer-based feature extractor is introduced to abstract feature representations from time-variant process data through temporal and relational information agglomeration. Feature evaluation and feature enhancement are used to produce robust and interpretable feature representations by maximizing the separation among different classes while minimizing the scatter within each class.

The contributions of the letter are summarized as follows.

1) This letter proposes a process-monitoring framework based on temporal feature agglomeration and enhancement for time-variant and complex industrial processes.

2) A Transformer-based feature extractor abstracts feature representations with long-term dependencies and relational information from time-variant process data.

3) The feature representations are enhanced by feature evaluation and contrastive learning to improve the robustness and generalization of the process-monitoring model.

4) The experimental results show the prominent performance of the proposed method in fault diagnosis and fault detection on the additional TE process dataset.

Related work: Some process-monitoring research focuses on extracting temporal and relational information from industrial process data.

To address temporal feature representation and dynamic modelling, researchers have developed several extensions of traditional principal component methods. Ku et al. [3] proposed a dynamic PCA (DPCA) model, which performs classical PCA on measurements augmented with certain time lags. Li et al. [4] improved PCA by introducing spatiotemporal methods to integrate spatial and temporal priors into feature representations. A recurrent neural network (RNN) is used by Kiakojoori and Khorasani [5] to represent temporal information through state inheritance. The aforementioned methods attempt to derive serial correlations between current and previous observations employing settling time. However, it is difficult for those methods to build temporal process-monitoring models with long-term dependencies.
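The time-lag augmentation that DPCA [3] applies before classical PCA can be illustrated with a minimal sketch. The function name `lagged_matrix`, the newest-first column ordering and the sample values are assumptions made for illustration; the PCA step itself is omitted.

```python
def lagged_matrix(samples, lags):
    """Stack each observation with its `lags` predecessors (newest first).

    samples: list of observations, each a list of process-variable values.
    Returns one augmented row per time step t >= lags.
    """
    rows = []
    for t in range(lags, len(samples)):
        row = []
        for k in range(lags + 1):
            row.extend(samples[t - k])  # observation at time t - k
        rows.append(row)
    return rows


# Three process variables observed at five time steps (made-up numbers).
X = [[1, 10, 100], [2, 20, 200], [3, 30, 300], [4, 40, 400], [5, 50, 500]]
X_aug = lagged_matrix(X, lags=2)
```

Each augmented row now carries the current observation and its two predecessors, so a static method applied to `X_aug` can capture short-range serial correlation. The row width grows linearly with the number of lags, which is one reason long-term dependencies are hard to cover this way.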

Robustness and interpretability are significant requirements for process-monitoring models. Several robust process-monitoring models employ some form of prior knowledge and expert knowledge to generate relational feature representations. The signed directed graph (SDG) is a knowledge-based fault diagnosis method that can efficiently represent relationships among process variables and determine the root cause of a fault [6]. Because a graphical model has an easily interpreted physical meaning, it has been introduced into process monitoring of complex industrial processes. Several graphical models, such as decision trees and causal graphical models [7] and [8], have been applied in the process-monitoring field. Deep learning-based process-monitoring models show good robustness and generalization [9]. However, those deep learning-based process-monitoring methods lack interpretability.

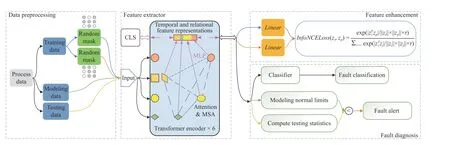

Fig. 1. The process diagram of the contrastive feature extractor.

Method: Aiming for robust and interpretable feature representations, the letter proposes a process-monitoring framework based on temporal feature agglomeration and enhancement. As shown in Fig. 1, the proposed framework mainly consists of four parts: data preprocessing, feature extractor, feature enhancement and fault diagnosis. In data preprocessing, augmentations such as random masking and added Gaussian noise generate diversified input data. The feature extractor abstracts and condenses feature representations with temporal and relational information. Then the feature representation is enhanced by implementing feature evaluation and contrastive learning. Finally, the enhanced features are used to build the process-monitoring model.
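The two augmentations named above, random masking and additive Gaussian noise, can be sketched as follows. This is a minimal illustration rather than the letter's implementation: the function name `rand_aug`, the default values of `mask_prob` and `noise_std`, and the choice to mask with zeros (the mean of standardized data) are all assumptions.

```python
import random


def rand_aug(window, mask_prob=0.1, noise_std=0.05, rng=None):
    """Return an augmented copy of a (timestamps x variables) window.

    Each entry is either masked to 0.0 with probability `mask_prob`
    or perturbed with zero-mean Gaussian noise of std `noise_std`.
    """
    rng = rng or random.Random()
    augmented = []
    for row in window:
        new_row = []
        for value in row:
            if rng.random() < mask_prob:
                new_row.append(0.0)  # masked entry (assumed fill value)
            else:
                new_row.append(value + rng.gauss(0.0, noise_std))
        augmented.append(new_row)
    return augmented


# Two timestamps of three standardized process variables (made-up values).
window = [[0.5, -1.2, 0.3], [0.4, -1.0, 0.2]]
view_a = rand_aug(window, rng=random.Random(0))
view_b = rand_aug(window, rng=random.Random(1))
```

Calling the function twice on the same window yields two different views of one sample, which is the kind of input pair that contrastive training consumes.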

The temporal and relational information in industrial process data is valuable for industrial process monitoring. The Transformer [10] is employed to extract temporal and relational features of process variables in process monitoring. In the Transformer encoder, attention mechanisms establish the connections between timestamps and variables of the diversified input data. MLP-based feedforward layers are used to agglomerate relational information from the variable connections. A classification vector (CLS) is used to agglomerate the timestamp connections through backpropagation. The temporal and relational information agglomeration provides interpretable and comprehensive feature representations for process-monitoring modelling.

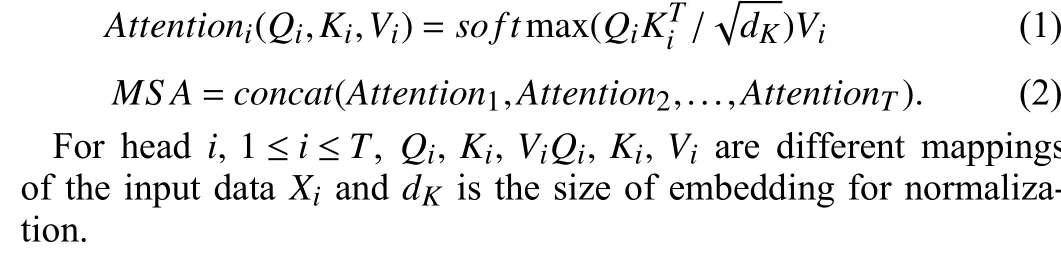

In RNN-based process monitoring, the temporal and relational information is represented by sequential hidden states. However, it is difficult for sequential hidden states to build long-term dependencies because deviations easily accumulate over time. Instead of building sequential dependencies, attention mechanisms obtain long-term dependencies by calculating connections between distant nodes. Multi-head self-attention (MSA) generates robust feature representations of process data by integrating multiple sets of attention. Attention and MSA are calculated as follows:

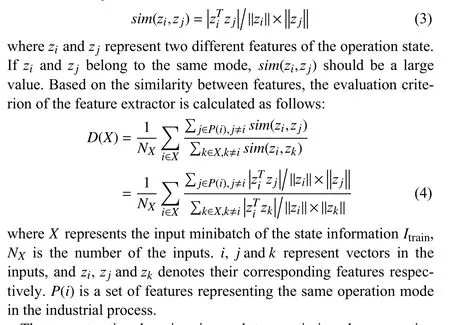
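As an illustration of the attention computation, the sketch below implements single-head scaled dot-product attention in the standard form of the Transformer [10], softmax(QKᵀ/√d_k)V, on plain Python lists. It assumes the letter follows that standard formulation. The learned query, key and value projections and the multi-head concatenation of MSA are omitted, and the input values are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for lists of row vectors.

    Returns the attended outputs and the attention weights per query.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(w_j * v[c] for w_j, v in zip(w, V)) for c in range(len(V[0]))]
        )
    return outputs, weights


# Self-attention over a window of 3 timestamps with 2 features each.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = attention(X, X, X)
```

Every query attends to every timestamp in one step, so the connection between two distant timestamps does not have to pass through intermediate hidden states as it does in an RNN.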

In industrial processes, process data from the same operation mode share similar features, while process data from different operation modes differ significantly. Therefore, ideal feature representations for process monitoring should maximize the separation among different classes and minimize the scatter within each class. Feature evaluation and contrastive learning are used to enhance the discrimination and interpretability of features.

First, an evaluation criterion based on similarity is introduced for feature enhancement. The similarity between features is calculated by cosine similarity as follows:

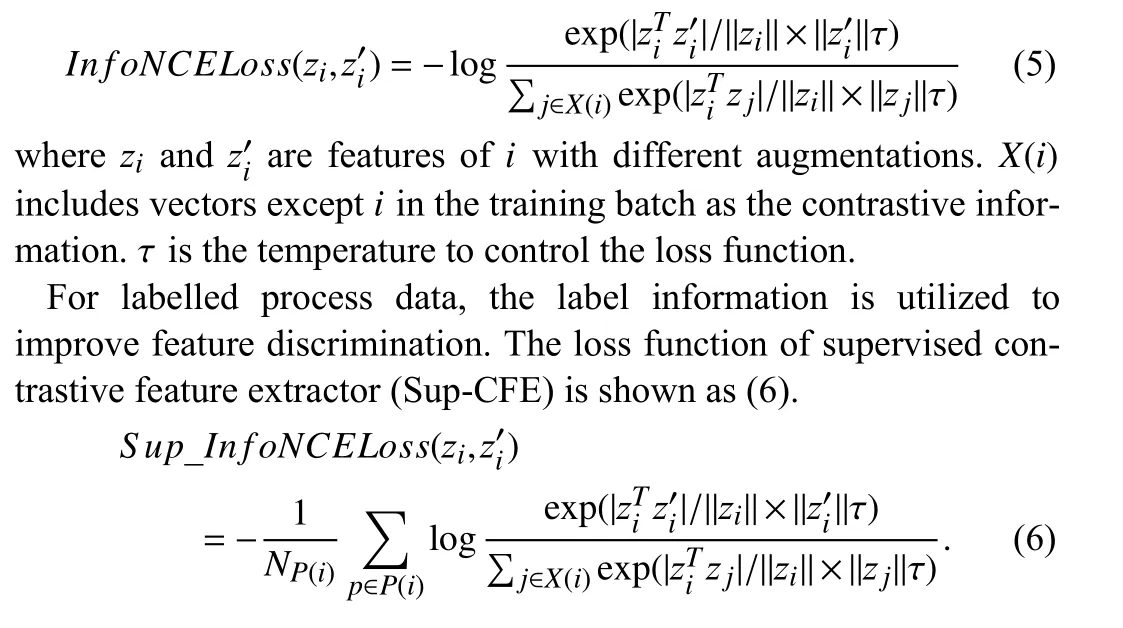
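Cosine similarity itself is the normalized inner product of two feature vectors. A minimal sketch follows, with a hypothetical function name and made-up vectors; how the letter aggregates these similarities into its evaluation criterion is not reproduced here.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


same_direction = cosine_similarity([1.0, 2.0], [2.0, 4.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
opposite = cosine_similarity([1.0, 0.0], [-1.0, 0.0])
```

The value depends only on direction, not on magnitude, so features of the same class score close to 1 when they point the same way, regardless of their scale.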

Then, contrastive learning is used to maximize the separation among different classes and minimize the scatter within each class, which enhances the discrimination of features. Contrastive learning is widely used in image classification to distil information by self-supervision [11] and [12]. The loss function of contrastive learning is derived from the evaluation criterion D(x) and is specially designed for different types of process data.

For unlabeled process data, the self-supervised method is used to generate robust features and eliminate human cognitive bias. The loss function of the self-supervised contrastive feature extractor (Self-CFE) is shown as follows:

Self-CFE and Sup-CFE enhance feature discrimination by exploiting unlabeled and labeled process data, respectively.

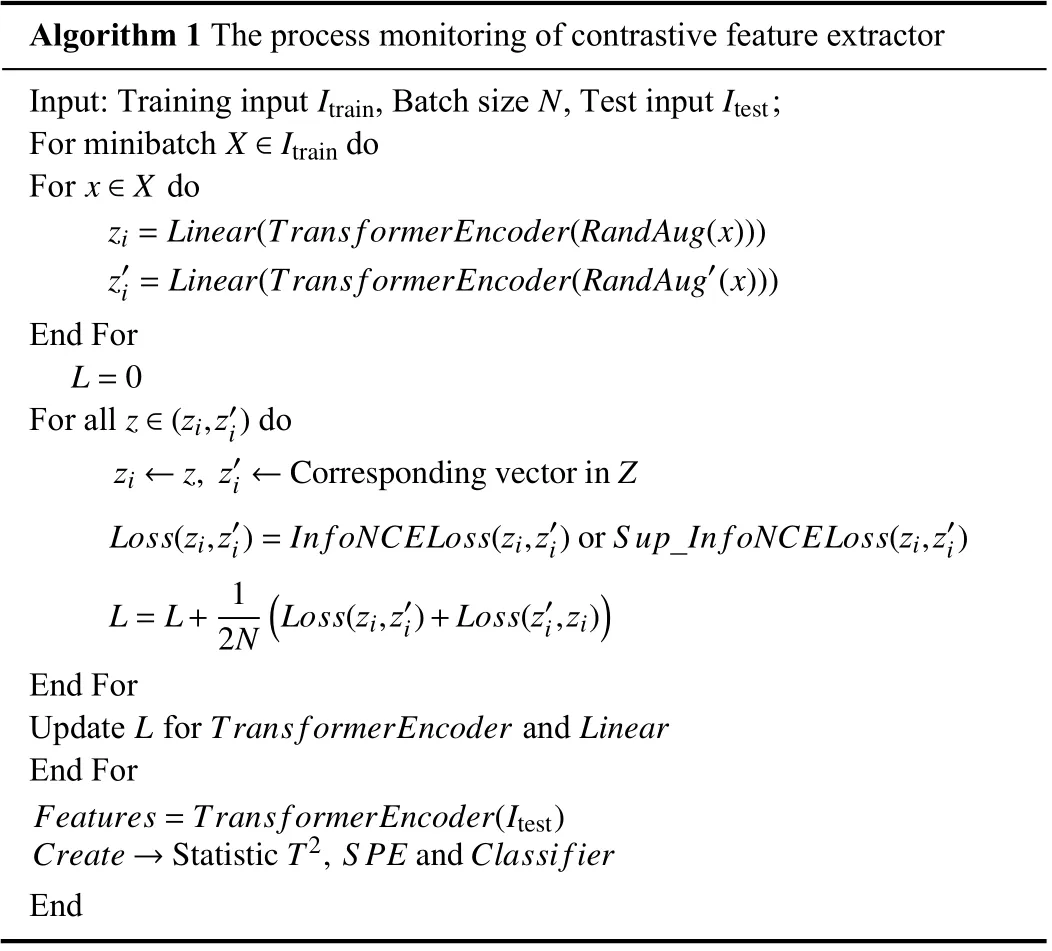
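For orientation, the sketch below shows a generic InfoNCE-style contrastive loss built on cosine similarity. It is not the letter's exact loss: the temperature parameter, the function names and the toy vectors are assumptions. In a self-supervised setting, the positive would typically be the other augmented view of the same sample; a supervised variant would typically also treat samples with the same label as positives.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(anchor, positive, negatives, temperature=0.5):
    """Negative log of the positive pair's share of exponentiated similarities."""
    pos = math.exp(cosine(anchor, positive) / temperature)
    neg = sum(math.exp(cosine(anchor, n) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Toy embeddings: z_pos is close to z, the negatives point elsewhere.
z = [1.0, 0.1]
z_pos = [0.9, 0.2]
z_negs = [[-1.0, 0.3], [0.0, -1.0]]

loss_good = info_nce(z, z_pos, z_negs)
# Swapping in a dissimilar vector as the "positive" raises the loss.
loss_bad = info_nce(z, z_negs[0], [z_pos, z_negs[1]])
```

Minimizing such a loss pulls the positive pair together and pushes the negatives away, which matches the stated goal of small within-class scatter and large between-class separation.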

The complete training process of the contrastive feature extractor is shown in Algorithm 1.

Algorithm 1 The process monitoring of contrastive feature extractor

    Input: Training input I_train, Batch size N, Test input I_test; X ∈ I_train
    For minibatch x ∈ X do
        For each sample i in x do
            z_i = Linear(TransformerEncoder(RandAug(x)))
            z′_i = Linear(TransformerEncoder(RandAug′(x)))
        End For
        L = 0
        For all z ∈ (z_i, z′_i) do
            z_i ← z, z′_i ← Corresponding vector in Z
            Loss(z_i, z′_i) = InfoNCELoss(z_i, z′_i) or Sup_InfoNCELoss(z_i, z′_i)
            L = L + (1/(2N)) (Loss(z_i, z′_i) + Loss(z′_i, z_i))
        End For
        Update TransformerEncoder and Linear for L
    End For
    Features = TransformerEncoder(I_test)
    Create → Statistic T², SPE and Classifier
    End

Experiment: The Tennessee Eastman (TE) process is a well-known process dataset that has been widely used as a benchmark in process monitoring and fault diagnosis. We use the Additional TE dataset from Harvard, which has larger training and testing datasets.

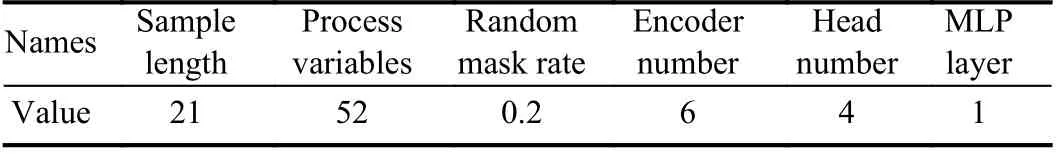

An additional linear classifier is trained to classify faults from the feature representations. Self-CFE and Sup-CFE are tested on the TE process data. The main parameters of the process-monitoring model are shown in Table 1.

Table 1. Parameters of Contrastive Feature Extractor

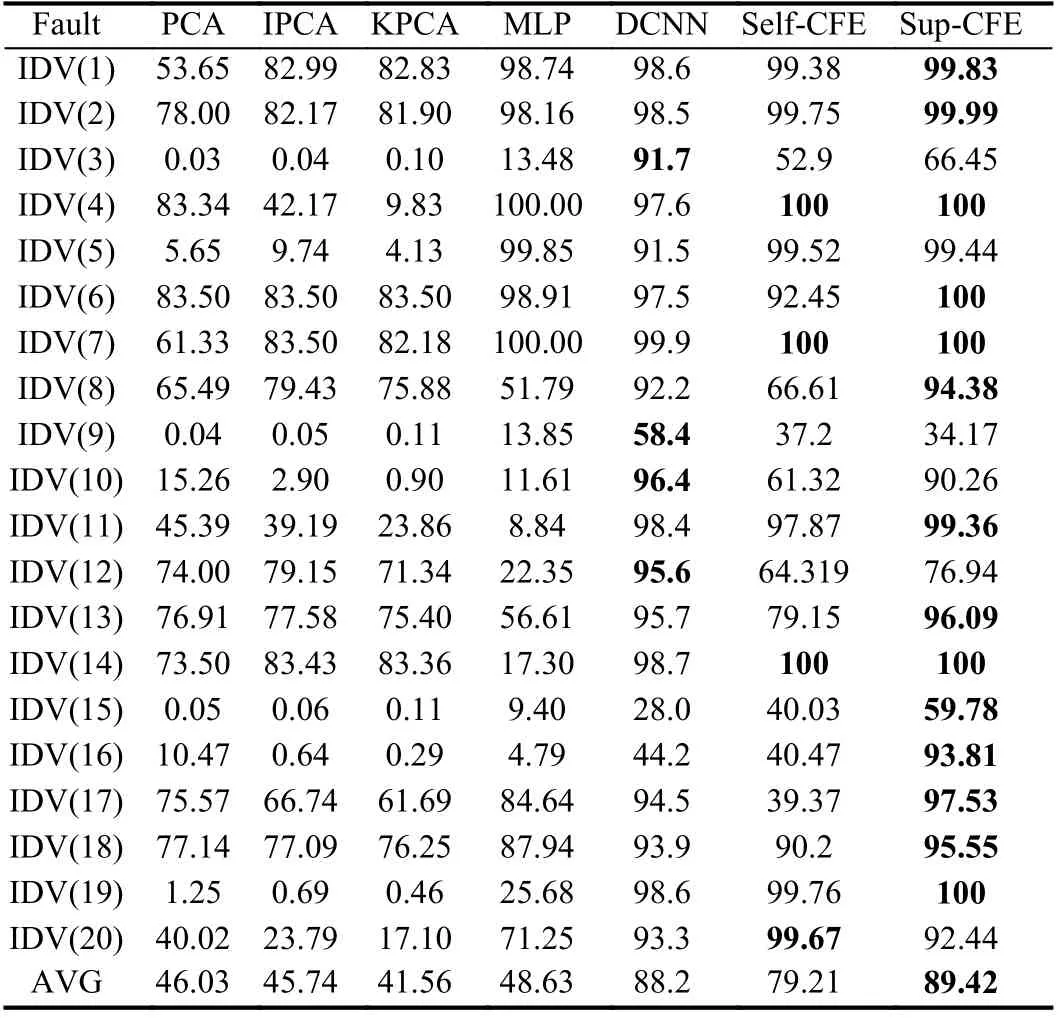

Diagnosis accuracies (%) for faults 1–20 obtained by different methods on the additional TE dataset are shown in Table 2. CFE-based methods clearly achieve strong performance on most of the faults. Sup-CFE achieves the best average accuracy and significantly improves the classification results for fault 16. Self-CFE also performs well on faults 4, 7, 14 and 20 with unlabeled data. It should be mentioned that DCNN [13] achieves good accuracy for faults 3, 9, 10 and 12.

Conclusion: The letter discusses a process-monitoring framework based on temporal feature agglomeration and enhancement. The Transformer is introduced as the feature extractor to extract temporal and relational information from time-variant process data. Feature evaluation and contrastive learning are used to enhance the discrimination of features. The proposed method provides a promising monitoring framework for large-scale, time-variant and complex modern industrial processes.

Acknowledgment: This work was partially supported by the China national R&D Key Research Program (2019YFB1705702) and the National Natural Science Foundation of China (62273233).

Table 2. Diagnosis Accuracy (%) of Fault 1–20 Based on Different Methods on Additional TE Dataset

IEEE/CAA Journal of Automatica Sinica