Radar emitter multi-label recognition based on residual network

2022-03-29

Yu Hong-hai, Yan Xiao-peng*, Liu Shao-kun, Li Ping, Hao Xin-hong

a Science and Technology on Electromechanical Dynamic Control Laboratory, School of Mechatronical Engineering, Beijing Institute of Technology, Beijing, 100081, China

b Beijing Institute of Telemetry Technology, Beijing, 100081, China

Keywords: Radar emitter recognition; Image processing; Parallel; Residual network; Multi-label

ABSTRACT In low signal-to-noise ratio (SNR) environments, traditional radar emitter recognition (RER) methods struggle to recognize multiple radar emitter signals in parallel. This paper proposes a multi-label classification and recognition method for multiple radar-emitter modulation types based on a residual network. The method can quickly classify and recognize multi-modulation radar time-domain aliasing signals in parallel under low SNRs. First, we perform time-frequency analysis on the received signal, extracting the normalized time-frequency image through the short-time Fourier transform (STFT). The time-frequency distribution image is then denoised using a deep normalized convolutional neural network (DNCNN). Second, a multi-label classification and recognition model for multi-modulation radar emitter time-domain aliasing signals is established and trained by learning the characteristics of a dataset of radar signal time-frequency distribution images. Finally, the time-frequency image is recognized and classified by the model, completing the automatic classification and recognition of the time-domain aliasing signal. Simulation results show that the proposed method can classify and recognize radar emitter signals of different modulation types in parallel under low SNRs.

1.Introduction

Radar emitter recognition (RER) is used to obtain radar operating parameters and performance information by comparing the characteristic parameters of radar signals intercepted by reconnaissance receivers with those of known radiation sources [1]. The parallel classification and identification of multiple radar radiation sources is a key technology in radar information countermeasures. RER methods have developed through several stages: basic parameter comparison [2], machine learning combined with basic parameters [3], intra-pulse feature analysis [4], and deep learning [5-8]. In a low signal-to-noise ratio (SNR) environment, the recognition performance of these methods is not ideal, and they cannot recognize multiple modulation types within time-domain aliasing signals in parallel.

The use of intra-pulse feature analysis to identify radar emitters is generally suitable for recognizing a single signal, or for cases in which the waveform is simple and the number of radiation sources is small. This is a serial classification and recognition method. The automatic recognition of radar emitters based on scale-invariant feature transform (SIFT) position and scale features [9] uses support vector machines (SVMs) to classify radar emitter signals, identifying signal components of different modulation types by extracting SIFT features. Reference [10] proposed an automatic modulation classification technique in which independent random variables are superposed to achieve multi-modulation-type signal classification. References [11,12] used blind signal separation to separate the different components of multi-modulation-type time-domain aliased signals, and then employed a traditional single-target recognition method to identify the modulation type of each component. These methods are not effective for the parallel classification and recognition of multi-modulation-type time-domain aliasing signals. Some scholars have introduced Visual Geometry Group (VGG) networks from the field of computer vision, as well as residual networks (ResNets), into the recognition of signal modulation types [13-15], and proposed deep learning-based methods of recognizing the radio modulation type. These methods improve the signal recognition performance significantly. However, the classification and recognition of the signal is completed in the time domain, and the performance is not ideal at low SNR. In Refs. [16-18], the classification and identification of radar emitters is treated as a multi-classification problem, and the time-domain aliasing signals are identified by training multiple classifiers. Each combination of signals is regarded as a new category, and the recognition result of each category corresponds to a predicted label. For N types of modulation signals, there are $2^N-1$ different combinations, which correspond to $2^N-1$ prediction labels. Here N denotes the number of all signal modulation types that may exist for a radar signal source, and it has the same meaning throughout the paper. The number of prediction labels therefore increases exponentially with the number of modulation types. The generalization ability of this method is poor, and it is essentially a form of serial recognition and classification.

To solve the problem of parallel classification and recognition of multi-modulation radar emitters in a low SNR environment, this paper describes a multi-label classification and recognition method for multi-modulation radar emitters based on a ResNet. The recognition results for the individual labels are mutually independent. In the proposed method, the time-domain aliasing of radar emitter signals containing N modulation types corresponds to $2^N-1$ combinations, but the number of predicted labels in the recognition result is only N. Thus, the parallel classification of multiple radiation source signals can be achieved. The simulation results show that the combination of a ResNet, a denoising model, and multi-label classification greatly reduces the model training time, allows a deeper network, and improves the efficiency and recognition accuracy. The proposed method achieves good autonomous parallel classification and recognition in low SNR environments.

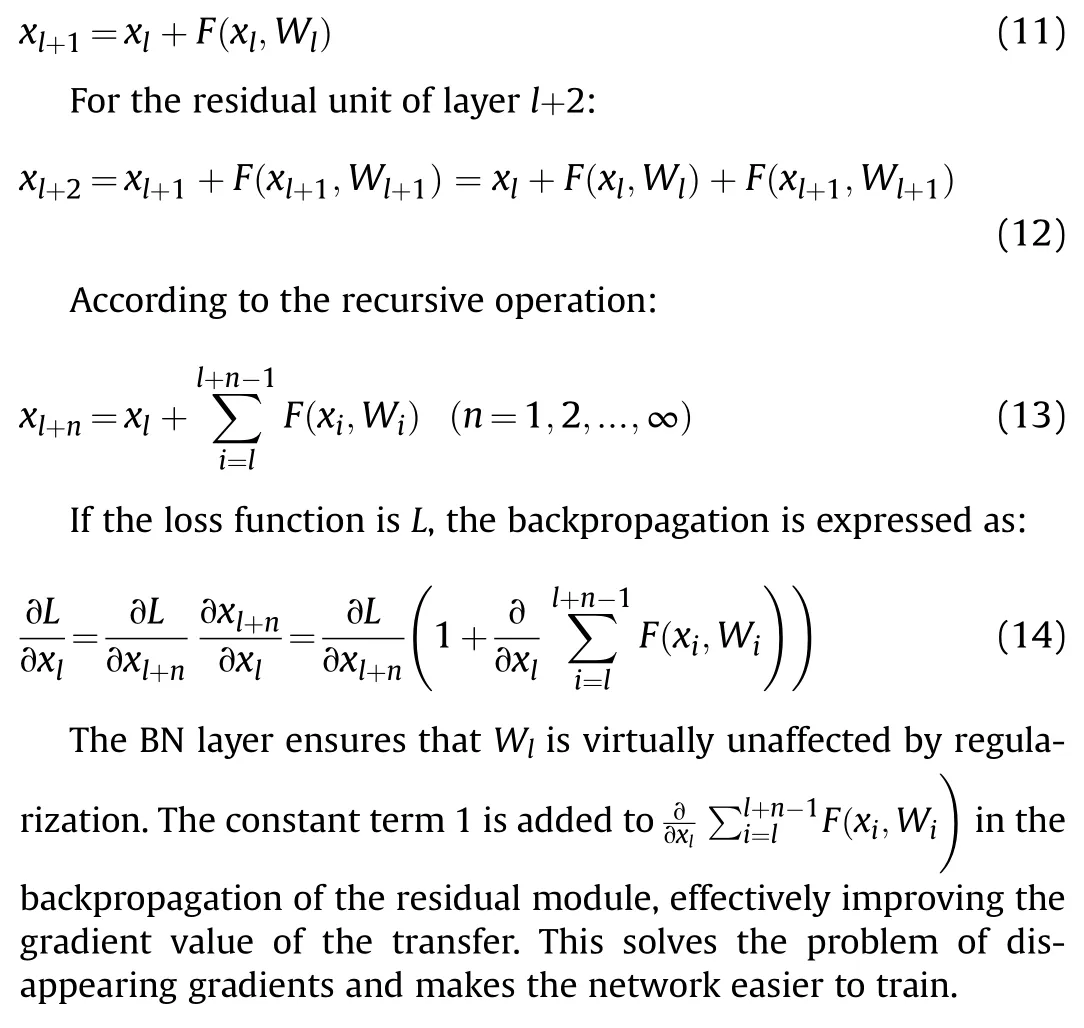

2.Construction of multi-label recognition model for multi-modulation radar emitters

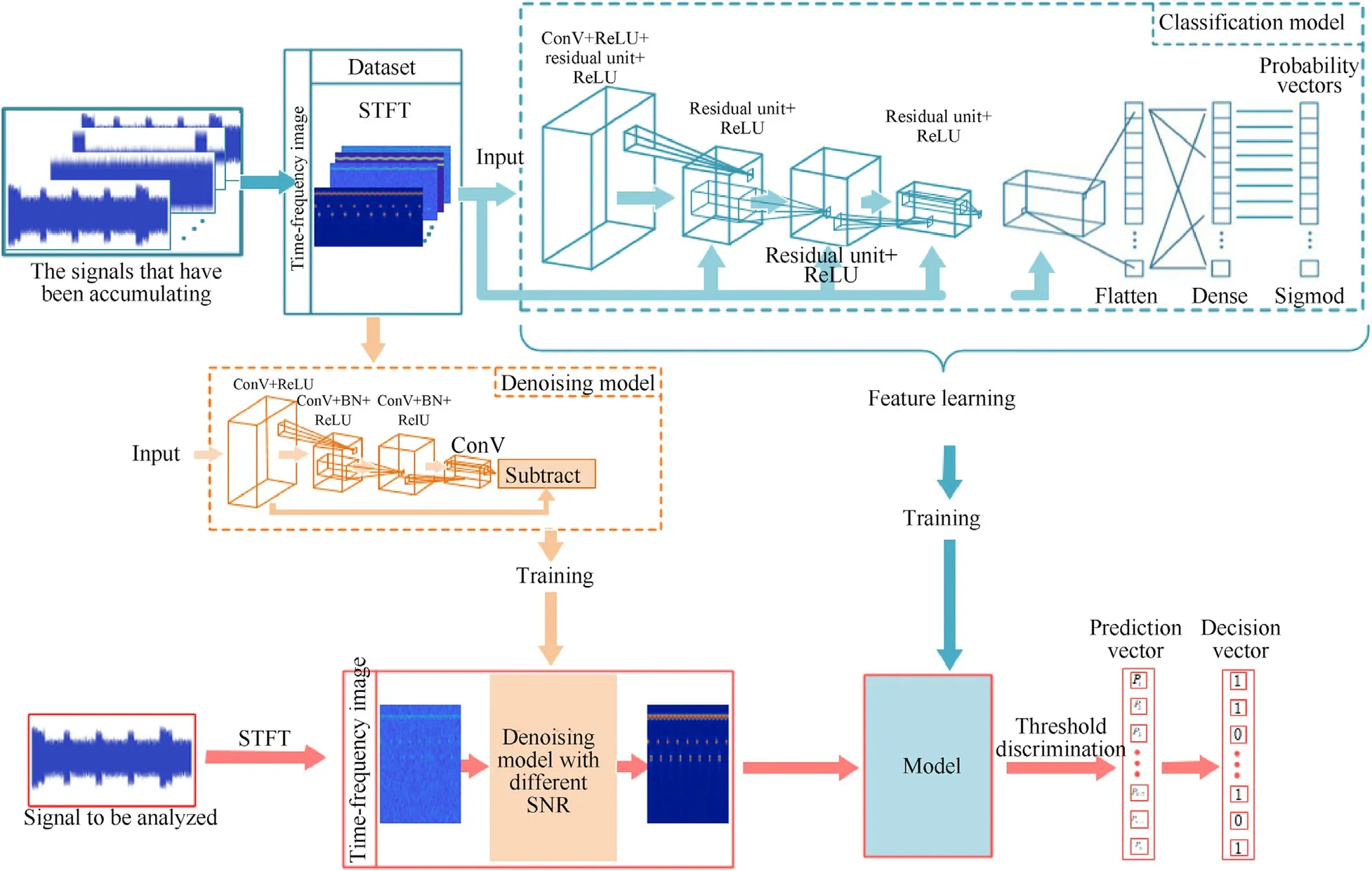

This method is divided into three main steps. First, we apply time-frequency analysis to the received signals to extract the normalized time-frequency image. The STFT is used for the time-frequency analysis of the signal. The time-frequency image is then denoised by a deep normalized convolutional neural network (DNCNN), which improves the method's adaptability in low SNR environments. Unlike traditional denoising methods, the DNCNN can perform blind denoising. Second, a multi-label classification and recognition model for multi-modulation radar emitter time-domain aliasing signals is established and trained by learning the characteristics of a dataset of radar signal time-frequency distribution images. The multi-label method is used to mark the data samples. The samples in the dataset are collected from reconnaissance data accumulated earlier. Finally, the model recognizes and classifies the time-frequency image of the time-domain aliasing signals obtained in step 1, and outputs the classification results in the form of vectors.

2.1.Signal model

We assume that the receiver detects radar emitter signals consisting of n modulation types and treats these signals as time-domain aliasing signals. The signal can be expressed by the following formula:

$$R(t)=\sum_{i=1}^{n}A_i x_i(t)+\omega(t),\quad 1\le n\le N$$

where n represents the number of modulation types of radar radiation source signals received while the receiver is working, N represents the number of all signal modulation types that may exist for the radar signal source, $R(t)$ is the time-domain aliasing signal detected and received by the receiver, and $A_i x_i(t)$ is the i-th radar signal with amplitude $A_i$. In addition to the radar emitter signals, the receiver also receives additive white Gaussian noise $\omega(t)$ with mean 0 and variance $\delta$. We assume that each radar source signal contains only one modulation parameter.

The signal detected by the receiver contains up to N radar radiation source signals. These signals are aliased in the time domain to form the received signal. Letting n range from 1 to N, the number of possible time-domain aliasing combinations can be expressed as $\sum_{n=1}^{N}\binom{N}{n}=2^{N}-1$:

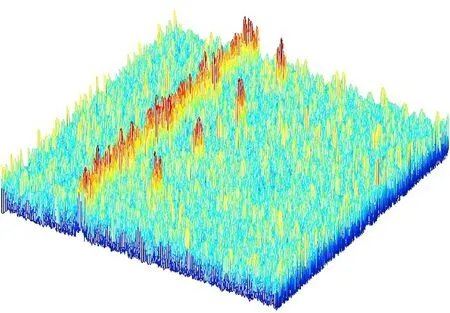
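The combination count can be checked by direct enumeration. The following is a minimal sketch, not code from the paper; the helper name `aliasing_combinations` is hypothetical, and the list of modulation types is taken from the five signals used later in the simulations.

```python
from itertools import combinations

# The five modulation types used later in the simulations.
MODULATIONS = ["CWFM", "SinFM", "TriFM", "BPSK", "QPSK"]

def aliasing_combinations(types):
    """Return every non-empty subset of `types`, for subset sizes n = 1..N."""
    return [c for n in range(1, len(types) + 1) for c in combinations(types, n)]

combos = aliasing_combinations(MODULATIONS)
print(len(combos))  # 31, i.e. 2**5 - 1
```

Each added modulation type doubles the number of combinations (plus one), which is why treating every combination as its own class scales poorly.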

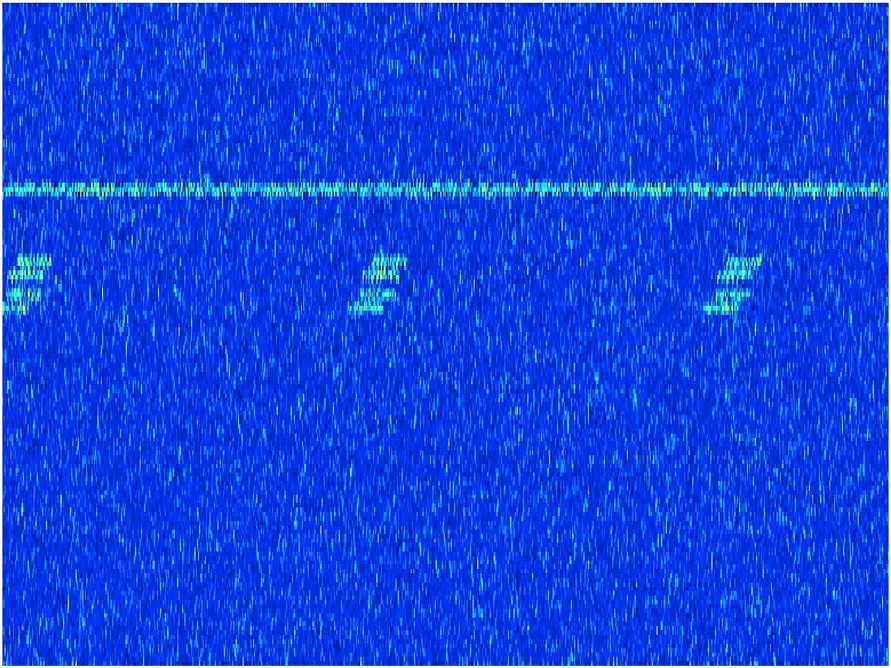

Fig. 1. 3D-STFT time-frequency images.

2.2.Signal preprocessing

2.2.1.Time-frequency analysis

The time-domain aliased signal enters the receiver through the antenna. Fig. 1 shows a three-dimensional time-frequency image of the time-domain aliasing signal. The signal comprises continuous wave frequency modulation (CWFM), sinusoidal frequency modulation (SinFM), triangular frequency modulation (TriFM), binary phase shift keying (BPSK), and quadrature phase shift keying (QPSK) components aliased in the time domain. The coordinate axes in the image represent time, frequency, and amplitude.

It is clear that the signal components in the time-domain aliased signal change with time and frequency, and that the signal energy differs from the noise. However, different signal components are aliased in both the time and frequency domains. It is difficult to distinguish the signal components of mixed signals from different radar emitters using traditional time-domain analysis methods. As the number of types of detected signals increases, it becomes difficult to complete the corresponding classification and recognition tasks in parallel.

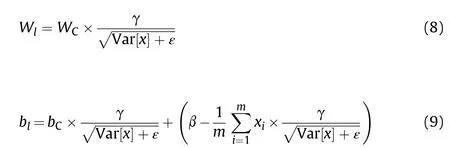

Therefore, a deep learning-based signal classification method is needed to classify and recognize time-frequency images. We use a deep ResNet to learn the different features of time-frequency images such as the one shown in Fig. 2, and use these features to train the classification and recognition model. This enables parallel classification and recognition in low SNR environments. Fig. 2 shows the time-frequency distribution image extracted from Fig. 1; the SNR of the received signal is -10 dB.

Fig. 2. Normalized time-frequency image at SNR = -10 dB.

The short-time Fourier transform (STFT) is one of the most widely used time-frequency analysis methods, and is useful for the analysis of time-domain aliased signals. In addition to its simple form, the STFT is not affected by cross-terms. The mathematical expression of the STFT is defined as follows:

$$\mathrm{STFT}_R(t,f)=\int_{-\infty}^{+\infty}R(\tau)\,\gamma^{*}(\tau-t)\,e^{-j2\pi f\tau}\,\mathrm{d}\tau$$

where $R(t)$ represents the time-domain aliasing signal of the radar radiation source to be analyzed, $*$ denotes the complex conjugate, and $\gamma(t)$ represents the window function. Due to the uncertainty principle, the time-frequency resolution of the STFT is affected by the width of the window function. The time-frequency resolution directly affects the recognition accuracy. To prevent the features from varying with the input signal power, the amplitude of the short-time Fourier time-frequency image is normalized. The RGB value of the image reflects the energy of the signal component of the radar emitter. The time-frequency distribution image is resized to 128×128×3 and then passed to the subsequent models for denoising and classification recognition (128×128 represents the length×width format of the image, and 3 represents the number of RGB channels).
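The preprocessing described above (windowed transform followed by amplitude normalization) can be sketched in a few lines. This is an illustrative discrete-time version using only the Python standard library, not the authors' implementation: the Hann window, the window length of 32, the hop of 8, and the function names are all assumptions, and the colour mapping and resizing to 128×128×3 are omitted.

```python
import cmath
import math

def stft_magnitude(signal, win_len=32, hop=8):
    """Return |STFT| as a list of frames; each frame holds win_len // 2 + 1 bins."""
    # Hann window, one common choice for the window function gamma(t).
    window = [0.5 - 0.5 * math.cos(2 * math.pi * k / (win_len - 1))
              for k in range(win_len)]
    frames = []
    for start in range(0, len(signal) - win_len + 1, hop):
        seg = [signal[start + k] * window[k] for k in range(win_len)]
        # Direct DFT of the windowed segment (non-negative frequencies only).
        frames.append([
            abs(sum(seg[k] * cmath.exp(-2j * math.pi * m * k / win_len)
                    for k in range(win_len)))
            for m in range(win_len // 2 + 1)
        ])
    return frames

def normalize(image):
    """Scale all amplitudes to [0, 1] so the image does not depend on input power."""
    peak = max(max(row) for row in image)
    return [[v / peak for v in row] for row in image]

# Toy input: a single tone at 0.25 cycles per sample.
tone = [math.cos(2 * math.pi * 0.25 * n) for n in range(256)]
tf_image = normalize(stft_magnitude(tone))
```

A longer window sharpens the frequency axis and blurs the time axis, which is the resolution trade-off mentioned above.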

2.2.2.The noise reduction processing

Using the idea of deep ResNets, Ref. [19] proposed a feed-forward denoising convolutional neural network (DnCNN) for image denoising. Traditional denoising models are trained for additive white Gaussian noise at a specific noise level. The DnCNN model can handle Gaussian denoising with an unknown noise level, which is called blind Gaussian denoising. Because the SNR of a received signal is unknown, the DnCNN model is selected here for denoising.

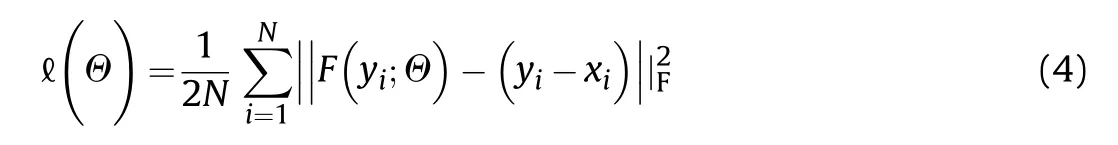

This method adopts residual learning and batch normalization. Unlike the ResNet in the classification model, however, the denoising model works with a clean picture x and a noisy picture y. The input to the network is y = x + p, where p is the residual picture, and the residual learning formulation is used to train the residual mapping F(y) ≈ p. The optimization goal of the denoising module is to minimize the mean square error between the real residual image and the network output.

The module uses the time-frequency distribution image of the radar radiation source signal as the training set. The network is then trained and the trained model is saved.
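The residual-learning objective described above can be written compactly. The following is a sketch in the notation of this section; the parameter set $\Theta$, the number of training pairs $M$, and the $1/(2M)$ scaling are assumptions that follow the usual DnCNN formulation rather than symbols defined in this paper.

```latex
% Training: fit the predicted residual F(y_i; \Theta) to the true residual p_i = y_i - x_i
\ell(\Theta) = \frac{1}{2M} \sum_{i=1}^{M}
  \left\| F(y_i;\Theta) - (y_i - x_i) \right\|_F^{2}

% Inference: subtract the predicted residual from the noisy image
\hat{x} = y - F(y;\Theta)
```

Because the network predicts the noise rather than the clean image, the denoised time-frequency image is obtained by a single subtraction.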

2.3.Multi-label recognition model of multi-modulation radar emitters

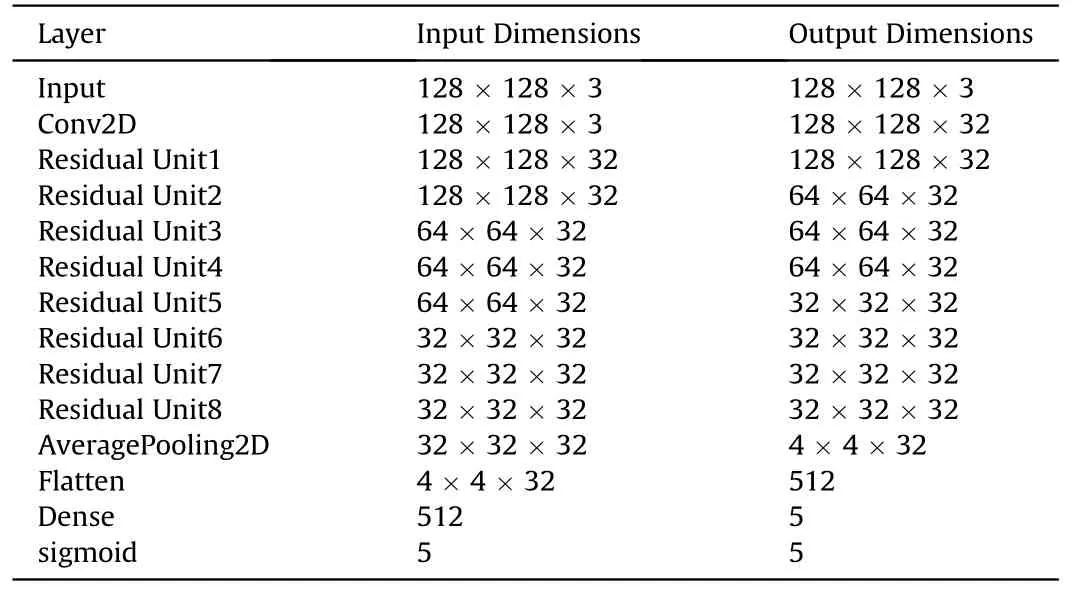

In this study, a multi-label recognition model based on the ResNet is developed for multi-modulation radar emitter signals. The model classifies and recognizes the time-frequency distribution image of the time-domain aliased signal. The principle framework of the model is shown in Fig. 3, which illustrates that the model treats the recognition of multi-modulation radar emitter signals as a multi-label classification problem.

The time-frequency images are generated by applying the STFT to the signals in the existing dataset. These images constitute the training set used to train the classification and recognition model. When a time-domain signal is received by the receiver, its time-frequency image is obtained by the STFT. The time-frequency image is passed to the denoising model for denoising and then to the classification model for classification. Finally, the classification results are output.

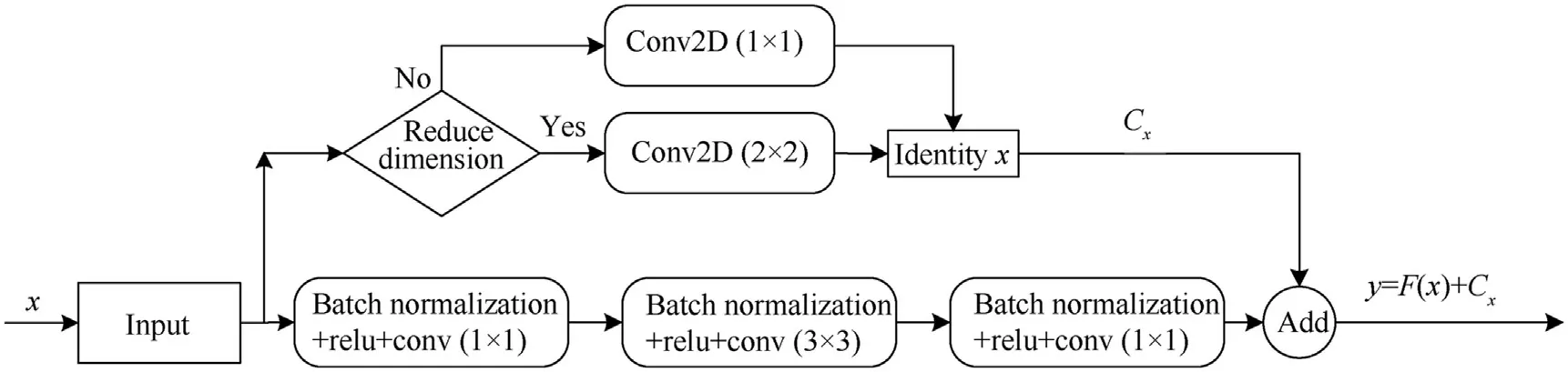

In traditional deep neural networks, data are passed from each layer to the adjacent layer, and the data vector passes through the convolution layer and the pooling layer to produce a down-sampling effect. As the network deepens, problems such as vanishing gradients can be alleviated, but degradation of accuracy can still occur. In the proposed method, the selection of a ResNet increases the depth of the network without suffering this degradation, allowing deeper features to be learned. The residual unit is illustrated in Fig. 4.

2.3.1.Residual unit

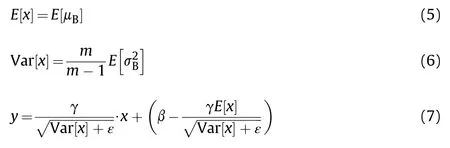

Batch normalization (BN) standardizes the input data, thus alleviating the internal covariate shift problem. The BN layer ensures that the data as a whole have a standard normal distribution with a mean of 0 and a variance of 1. This solves the problem of lost linear expressiveness in the network. During training, the BN layer obtains the mean E(x) and variance Var(x) from all training data, which allows the global statistics to be calculated. The model is trained to obtain scaling factors and translation factors, which are passed to the next layer. The calculation process is as follows:
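The standard batch-normalization computation, which matches the description above, standardizes each feature with its mean and variance and then applies the learned scaling and translation factors. The sketch below is a minimal illustration for a single feature; the names `gamma`, `beta`, and the small constant `eps` are assumed notation, not symbols taken from this paper.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Standardize one feature over a batch, then scale by gamma and shift by beta."""
    mean = sum(batch) / len(batch)
    var = sum((v - mean) ** 2 for v in batch) / len(batch)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]

out = batch_norm([1.0, 2.0, 3.0, 4.0])
```

With `gamma = 1` and `beta = 0` the output has approximately zero mean and unit variance; training adjusts the two factors so that the layer can recover other scales when needed.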

The data pass through the BN layer and, after a nonlinear function, enter the convolution. The weight W and bias b of the model are as follows:

Fig. 3. Multi-label recognition model of radar emitter based on deep ResNet.

Fig. 4. Residual unit.

where W is the weight and b is the bias of the convolution.

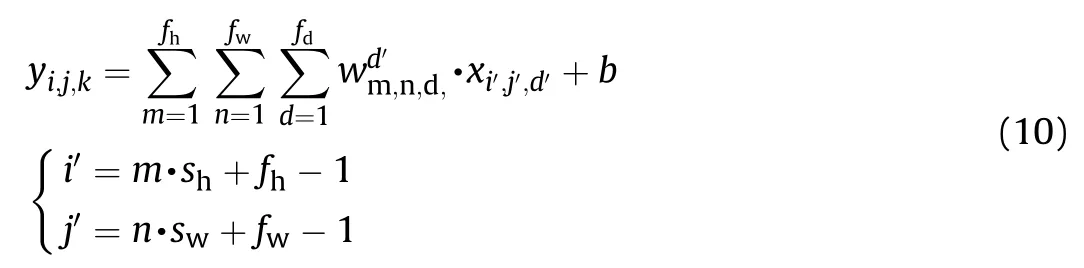

Each convolution kernel produces a feature map. The convolution operation is defined as the integral of the product of two functions after one is reversed and shifted, with the integral evaluated for all values of the shift. The number of convolution kernels in the depth direction determines the dimensions of the output data. Each convolution kernel is used to calculate the dot product of its weights and the input. The calculation process can be expressed as:

In the residual unit, the required underlying mapping is y, and the input to a given layer is x. Consider another mapping fitted by the stacked nonlinear layers: F(x) = y - Cx. The output mapping of the convolutional layer is then y = F(x) + Cx. To ensure that F(x) matches x in dimension, and depending on whether the dimension of the network needs to change, the identity mapping is subjected to a convolution operation, Cx. When the dimension of the network needs to be reduced, the parameter C takes a value of 2×2; when the dimension does not need to be reduced, C = 1×1. The output is passed to the next module through the ReLU activation function f, and can be expressed as f(F(x) + Cx). This generates a residual unit. For convenience of calculation, the biases b and C are ignored, and the model of the residual element in a given layer is as follows:
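The structure f(F(x) + Cx) can be illustrated with a toy example. The sketch below is an assumption-laden simplification: small dense matrices stand in for the convolutions, F(x) is taken to be two weight layers with a ReLU in between (the usual ResNet form), and the names `w1`, `w2`, `c`, and `residual_unit` are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def matvec(m, v):
    return [sum(w * a for w, a in zip(row, v)) for row in m]

def residual_unit(x, w1, w2, c):
    """y = f(F(x) + Cx) with F(x) = W2 f(W1 x) and f = ReLU (biases ignored)."""
    fx = matvec(w2, relu(matvec(w1, x)))       # residual branch F(x)
    shortcut = matvec(c, x)                    # shortcut branch Cx
    return relu([a + b for a, b in zip(fx, shortcut)])

identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
# With F(x) = 0 the unit reduces to the shortcut: positive inputs pass unchanged.
y = residual_unit([1.0, 2.0], zeros, zeros, identity)
print(y)  # [1.0, 2.0]
```

Because the shortcut carries the input forward unchanged, a unit that has learned nothing useful behaves like an identity mapping instead of degrading the signal, which is what allows the network to be deepened.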

2.3.2.Multi-label samples

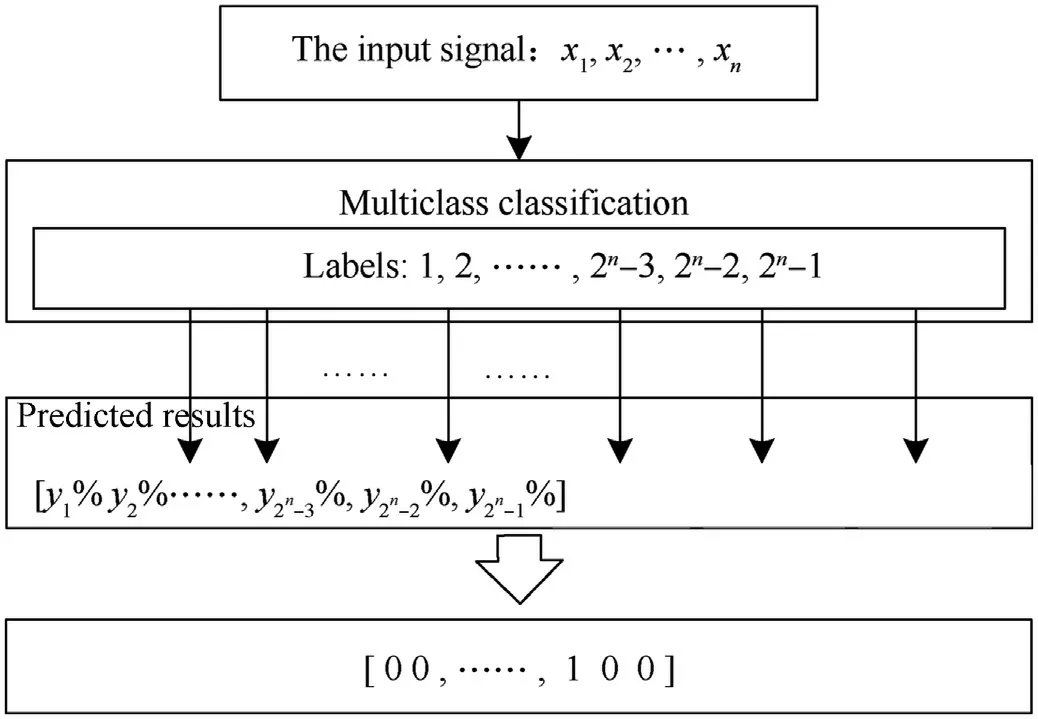

Multi-label samples have multiple labels, all of which should be recognized by the computer at the same time. This differs from the multi-classification problem, which is essentially a single-label problem in which each sample corresponds to only one label. For example, suppose that a radar emitter signal received in the time domain is an alias signal containing SinFM and BPSK. In multi-label classification, the sample carries two labels, SinFM and BPSK; that is, one sample corresponds to multiple labels. In multi-classification, by contrast, each type of time-domain aliasing is regarded as a new signal type. Thus, n types of signals aliased in the time domain give $2^n-1$ labels, and the number of predicted labels in the model output is $2^n-1$.

This is illustrated in Fig. 5 for $y_1+y_2+\dots+y_{2^n-1}=100$. If the $(2^n-3)$-th label is predicted to exist, the $(2^n-3)$-th bit of the corresponding prediction matrix is 1, and no other label results exist.

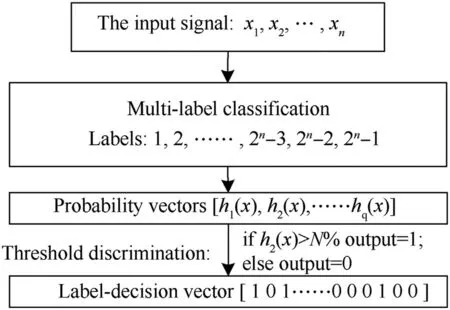

The multi-label classification problem is defined as follows [20]: Let $\chi=\{x_1,x_2,\ldots,x_n\}$ represent the n-dimensional input sample space. The sample labels contained in the $\chi$ data can be expressed as $\mathcal{Y}=\{y_1,y_2,\ldots,y_q\}$, the label output space of q possible classes. Each subset of $\mathcal{Y}$ is called a label set, and there are $2^q-1$ label subsets in total. In multi-label learning, the task is to learn the function mapping $H:\chi\rightarrow 2^{\mathcal{Y}}$ from $D=\{(X_i,Y_i)\mid 1\le i\le m\}$, where D is a multi-label training set containing m samples and $X_i=(x_{i1},\ldots,x_{in})\in\chi$ in $(X_i,Y_i)$ is an n-dimensional feature vector. The true label set of x is represented as $H(x)\subseteq\mathcal{Y}$. The predicted label set, obtained where the output exceeds the set threshold, can also be expressed as a q-dimensional binary vector in which an element is 1 if the corresponding label is present and 0 otherwise. Given the input sample x, the multi-label classifier returns a set of predicted labels $y\in Y$ and the complementary set of irrelevant labels $y\notin Y$. In addition, we define a function $f:\chi\times\mathcal{Y}\rightarrow\mathbb{R}$, where $f(x,y)$ is the confidence that y is a correct label of x. Based on the relevance of the labels to the given instance, all possible label orderings $f(x,y_1)>f(x,y_2)$ are returned. The classification function is defined as $h(x)=\{y\mid f(x,y)>t(x)\}$, where $t(x)$ is the threshold function. The threshold selected in this study is 0.5. The target data are assigned a reasonable label through the classification function; that is, a label set $H=[h_1(x),h_2(x),\ldots,h_q(x)]$ is assigned to each sample $x\in\chi$. The multi-label classification process is shown in Fig. 6.
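The q-dimensional binary label vector can be made concrete for the five modulation types used in this paper. The sketch below is illustrative only; the label order and the helper name `encode` are assumptions.

```python
# Assumed label order; one bit per modulation type.
LABELS = ["CWFM", "SinFM", "TriFM", "BPSK", "QPSK"]

def encode(present):
    """Multi-hot vector: bit k is 1 when LABELS[k] is present in the signal."""
    return [1 if name in present else 0 for name in LABELS]

print(encode({"SinFM", "BPSK"}))  # [0, 1, 0, 1, 0]

# Output sizes: one unit per label versus one class per non-empty combination.
multi_label_outputs = len(LABELS)           # 5
single_label_classes = 2 ** len(LABELS) - 1  # 31
```

The multi-label output grows linearly with the number of modulation types, whereas the single-label formulation grows exponentially.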

Fig. 5. Single label multiple-classification.

Fig. 6. Multi-label classification.

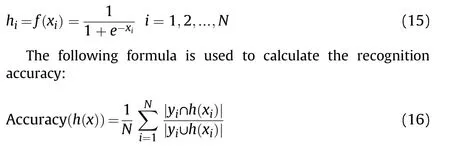

In multi-label classification, the output is a probability vector containing the probability of each label. The traditional convolutional neural network uses the softmax function as the output layer, and the output is the predicted probability of each category for a sample. With the softmax function, the probability of one category depends on the probabilities of the other categories, because the probabilities of all categories sum to 1. As this function is not applicable to multi-label classification, the sigmoid function is used as the output layer in the proposed model. Thus, the outputs are the prediction probabilities of the individual labels and are independent of each other. The sum of the output prediction probabilities for the n labels is not necessarily equal to 1. This is the essential difference between multi-classification and multi-label classification. A label set $H=[h_1(x),h_2(x),\ldots,h_q(x)]$ is assigned to each sample $x\in\chi$ to achieve parallel classification and recognition of aliased signals.

In the multi-label recognition model for multi-modulation-type radar radiation sources, the sigmoid function used as the output function can be expressed as:

where h(x) is the recognition probability of the input sample assigned by the classification function, and y is the sample label of the data.
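The difference between the two output layers is easy to see numerically. The following sketch applies both functions to the same made-up scores (the `logits` values are arbitrary and not taken from the paper).

```python
import math

def softmax(z):
    """Normalize scores so they compete: the outputs always sum to 1."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def sigmoid(z):
    """Score each label on its own: every output lies in (0, 1) independently."""
    return [1.0 / (1.0 + math.exp(-v)) for v in z]

logits = [3.0, 2.5, -4.0]
print(sum(softmax(logits)))  # sums to 1, so two labels cannot both be near 1
print(sigmoid(logits))       # the first two labels can both exceed 0.5
```

With the sigmoid output, two simultaneously present modulation types can both receive a high probability, which the softmax output cannot represent.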

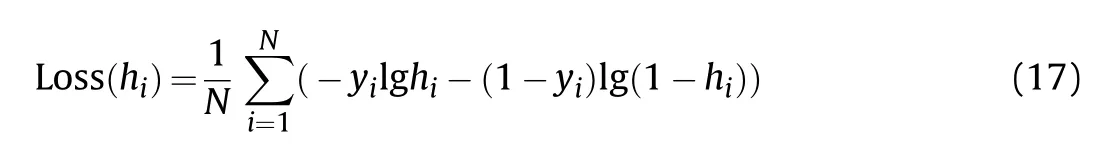

When implementing multi-label classification, the binary cross-entropy is used to train the model. The loss function is calculated as follows:
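A common form of the binary cross-entropy averages the per-label term $-[y\log h+(1-y)\log(1-h)]$ over all labels. The sketch below implements that standard form; the averaging over labels and the clipping constant `eps` are assumptions, since the exact normalization used by the authors is not given in the text.

```python
import math

def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean over labels of -[y log h + (1 - y) log(1 - h)]."""
    total = 0.0
    for y, h in zip(y_true, y_prob):
        h = min(max(h, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(h) + (1 - y) * math.log(1.0 - h)
    return total / len(y_true)

target = [0, 1, 1, 1, 1]
good = binary_cross_entropy(target, [0.1, 0.9, 0.9, 0.8, 0.8])
bad = binary_cross_entropy(target, [0.9, 0.1, 0.1, 0.2, 0.2])
```

Each label contributes its own term, so an error on one modulation type is penalized without affecting the terms of the others.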

3.Simulation analysis

3.1.Selection of simulation and model parameters

To simulate and verify the effectiveness of the multi-label recognition model for multi-modulation radar emitter signals based on a deep ResNet, five radar emitter signals were selected, namely CWFM, SinFM, TriFM, BPSK, and QPSK. The workflow of the multi-label recognition model for multi-modulation radar emitters is shown in Fig. 7.

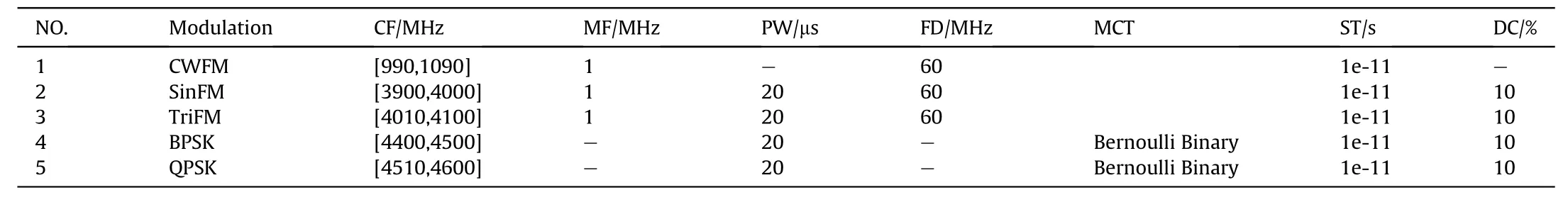

The Label Powerset method [20] was used to form the training set; that is, in building the dataset, each combination of the original labels corresponds to a new class of labels. The multi-label problem was then transformed into a single-label classification problem with $2^5-1=31$ possible combinations of signals. We selected appropriate parameters for the five radar emitter signals. It was assumed that each radar radiation source signal had only one modulation parameter. In the range of 10 dB to -11 dB, each combination of radar source signals produced 450 samples. We used 80% of the data as the training set and the remaining 20% as the validation set. The signal parameters of the five kinds of radar radiation sources are listed in Table 1, where CF denotes the carrier frequency, MF the modulation frequency, PW the pulse width, FD the frequency deviation, MCT the modulation code type, ST the sample time, and DC the duty cycle.

The simulation environment was Google Colab, the number of training epochs was set to 100, and the learning rate was set to 0.001. The threshold was set to 0.5. According to the simulation results, the expected recognition rate can be achieved with eight residual modules.
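The dataset sizes implied by these figures can be worked out directly. The sketch below assumes that the 450 samples are the total per combination across the whole SNR range, which the text does not state explicitly; under a different reading the totals would change.

```python
NUM_TYPES = 5
SAMPLES_PER_COMBINATION = 450   # assumed to be the total per combination
TRAIN_FRACTION = 0.8

num_combinations = 2 ** NUM_TYPES - 1
total = num_combinations * SAMPLES_PER_COMBINATION
train = round(total * TRAIN_FRACTION)
val = total - train
print(num_combinations, total, train, val)  # 31 13950 11160 2790
```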

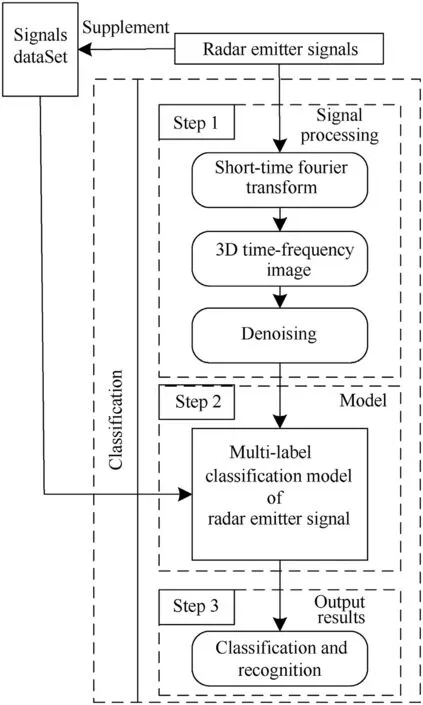

The parameters of the training model are presented in Table 2.

Table 1 Parameters of the radar signals.

Table 2 Parameters of the training model.

3.2.Model performance evaluation

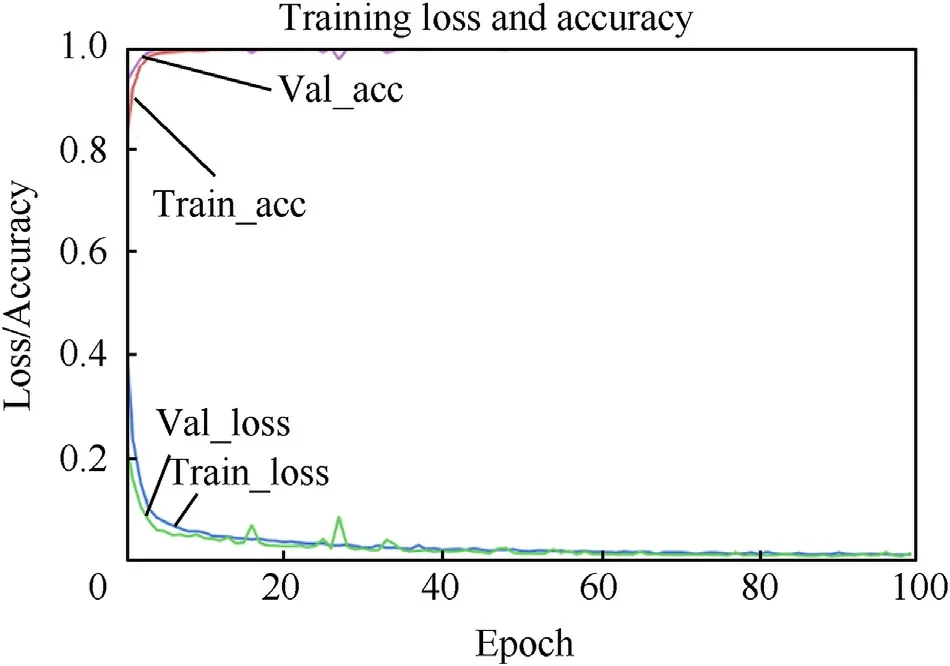

The training loss curve (train_loss), validation loss curve (val_loss), training accuracy curve (train_acc), and validation accuracy curve (val_acc) generated in the training process are shown in Fig. 8. The figure plots the values of the loss function and the accuracy calculated in each training epoch, so as to visualize the performance of the training model. The loss converges rapidly, and the training and validation loss curves gradually stabilize while still decreasing. The results show that the model parameters are reasonable and the model is effective. In the training model, the residual unit does not use dropout, but directly uses BN and global average pooling for regularization, which accelerates training. Each epoch in the training process takes 91 s, a significant reduction from the 141 s of the method reported in Ref. [15].

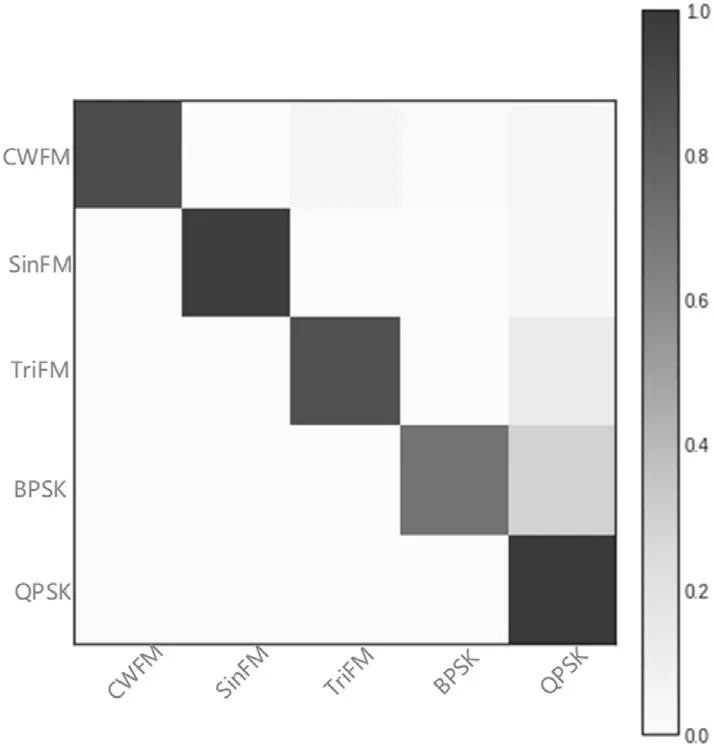

After training, the confusion matrix was obtained, as shown in Fig. 9.

Fig. 7. Workflow of classification model.

3.3.Model classification performance evaluation

With an SNR of -10 dB, we selected a time-domain alias signal to test the model. The time-domain alias signal included four types of signals: SinFM, TriFM, BPSK, and QPSK. CWFM was not included. The real label coding matrix of the time-domain aliasing signal is therefore [0 1 1 1 1]. We applied an STFT to the time-domain aliased signal and obtained a time-frequency distribution image. The time-frequency image was then denoised and entered into the recognition model for classification and recognition. The recognition probabilities for CWFM, SinFM, TriFM, BPSK, and QPSK were 0.8%, 97.25%, 97.61%, 79.85%, and 81.46%, respectively. After threshold discrimination, the prediction matrix of the model output is [0 1 1 1 1], the same as the real label encoding matrix, which indicates that the prediction result is correct.
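The threshold discrimination step for this example can be reproduced as follows. The probabilities are the values reported above; the variable names are illustrative.

```python
LABELS = ["CWFM", "SinFM", "TriFM", "BPSK", "QPSK"]
probabilities = [0.008, 0.9725, 0.9761, 0.7985, 0.8146]  # values reported above
THRESHOLD = 0.5

# A label is declared present when its probability exceeds the threshold.
prediction = [1 if p > THRESHOLD else 0 for p in probabilities]
detected = [name for name, bit in zip(LABELS, prediction) if bit]
print(prediction)  # [0, 1, 1, 1, 1]
print(detected)    # ['SinFM', 'TriFM', 'BPSK', 'QPSK']
```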

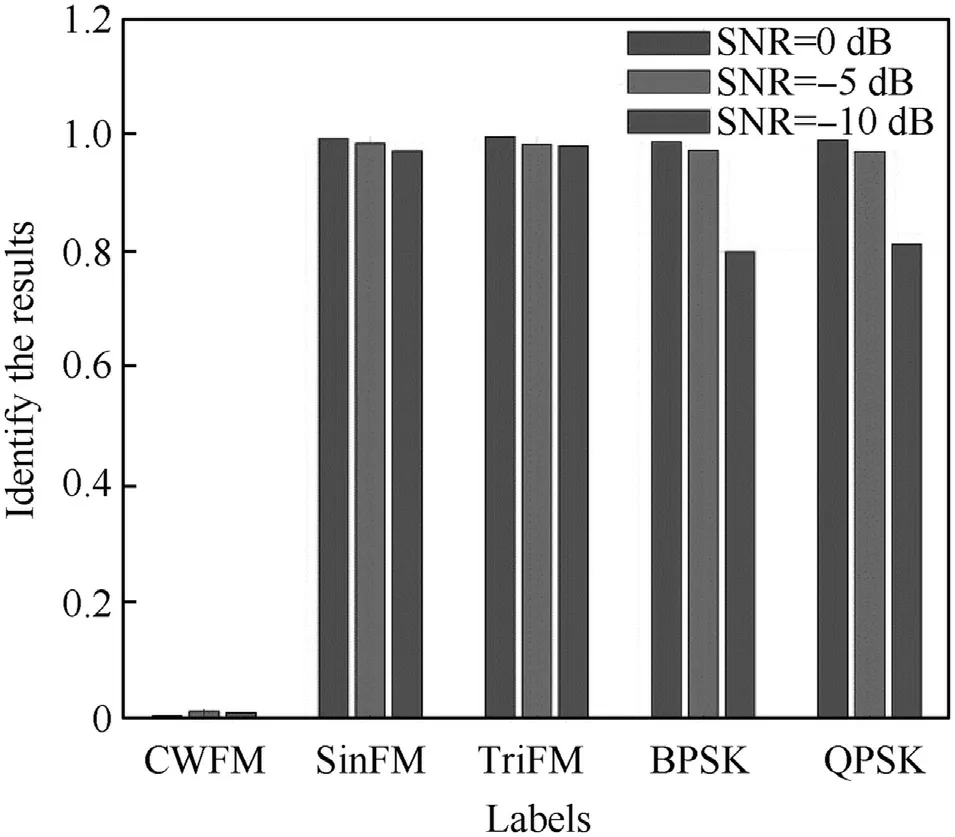

We verified the recognition probability of the model at SNRs of -10 dB, -5 dB, and 0 dB. The accuracy for each possible radar emitter signal is shown in Fig. 10, where SinFM and TriFM are relatively easy to identify. At -10 dB, the accuracy for SinFM and TriFM remains at around 95%, while the recognition rates of BPSK and QPSK are greatly affected by the SNR. At -10 dB, the recognition rates of the BPSK and QPSK signals have dropped to 80%.

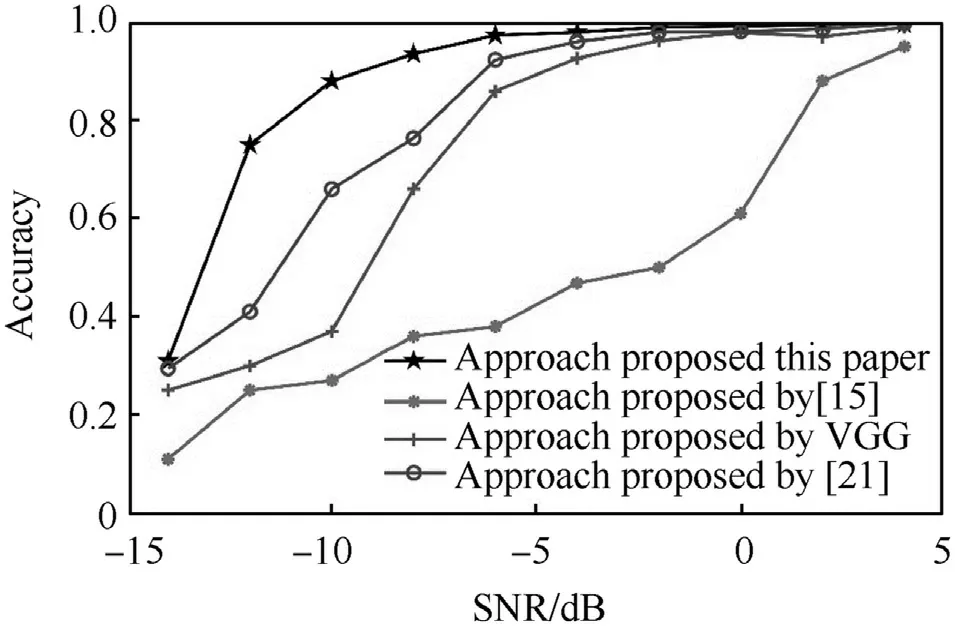

Next, we verify the model's accuracy. For SNRs from -14 dB to 4 dB, each combination of radar emitter signals generated a set of data at 2 dB intervals, and the combinations of the five signals generated 310 samples for testing the recognition rate of the model. The model simultaneously outputs the recognition probabilities of the five radar emitter signals. When the output recognition probability is greater than the threshold of 0.5, the signal component is considered to exist; when it is less than 0.5, the signal component is considered to be absent. The accuracy of the model can be calculated using formula (16), and the accuracy under different SNRs (from 5 dB to -15 dB) is shown in Fig. 11. Fig. 11 also lists the simulation results of the methods in Refs. [15,21] and of the VGG network under the same dataset and SNR conditions. The figure shows that, compared with these methods, the proposed model gives relatively good recognition results under low SNR conditions.
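The figure of 310 test samples is consistent with one sample per signal combination at each SNR point, as the short check below shows. The one-sample-per-point reading is an assumption inferred from the numbers, not something stated explicitly.

```python
snr_points = list(range(-14, 5, 2))      # -14, -12, ..., 4 dB
num_combinations = 2 ** 5 - 1            # non-empty subsets of five signals
test_samples = num_combinations * len(snr_points)
print(len(snr_points), test_samples)     # 10 310
```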

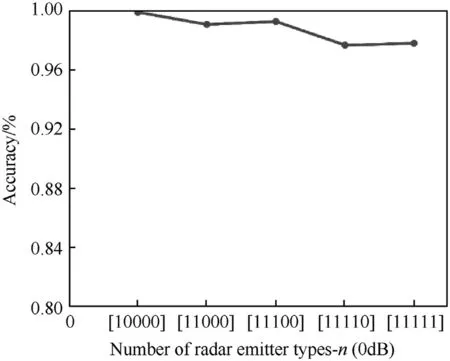

To test the effect of different signal aliasing on the model at a fixed SNR, we sequentially increased the number of signal components of the radar radiation source at an SNR of -10 dB, where [1 0 0 0 0] indicates that the signal contains only CWFM; [1 1 0 0 0] indicates the time-domain aliasing signal of CWFM and SinFM; [1 1 1 0 0] indicates the time-domain aliasing signal of CWFM, SinFM, and TriFM; [1 1 1 1 0] indicates a signal containing CWFM, SinFM, TriFM, and BPSK; and [1 1 1 1 1] represents the time-domain aliasing signal of all five radar radiation sources. The corresponding model accuracy is shown in Fig. 12. It can be seen that the accuracy is affected by the number of signal components. The simulation results also indirectly show that the model adapts better to CWFM, SinFM, and TriFM signals than to BPSK and QPSK signals.

Fig. 8. Training loss and accuracy change curve.

Fig. 9. Confusion matrix.

It can be seen from Figs. 10-12 that the proposed method can autonomously identify, in parallel and in low SNR environments, the signal components contained in the time-domain aliasing signal of multi-modulation radar sources. Additionally, the proposed method can achieve a high level of accuracy.

4.Conclusion

This paper has described a multi-label recognition method for multi-modulation radar emitter signals based on a deep ResNet. The proposed method performs time-frequency analysis on the detected time-domain aliasing signal to extract the normalized time-frequency image, and performs denoising through the DNCNN network to improve its adaptability in low SNR environments. We then used residual units to build a ResNet classification model, and transformed the RER problem into a form of multi-label classification. The time-frequency image is passed into the model for classification, and the classification result is output. Under low SNR conditions, we realized fast parallel classification and recognition of multi-modulation radar time-domain aliasing signals.

Fig. 10. Accuracy of each radar emitter signal with SNRs of 0 dB, -5 dB, and -10 dB.

Fig. 11. Accuracy at different SNRs.

Fig. 12. Accuracy of different signal types.

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

The authors would like to acknowledge the National Natural Science Foundation of China for funding the experiments under Grant 61973037 and Grant 61673066.

Journal: Defence Technology