A Visual-Based Gesture Prediction Framework Applied in Social Robots

2022-01-26

Bixiao Wu, Junpei Zhong, and Chenguang Yang

Abstract—In daily life, people use their hands in various ways for most activities. Many applications are based on the position, direction, and joints of the hand, including gesture recognition, gesture prediction, and robotics. This paper proposes a gesture prediction system that uses hand joint coordinate features collected by the Leap Motion to predict dynamic hand gestures. The model is applied to the NAO robot to verify the effectiveness of the proposed method. First, to reduce the jitter or jumps generated during data acquisition by the Leap Motion, a Kalman filter is applied to the original data. Then new feature descriptors are introduced: the length feature, angle feature, and angular velocity feature are extracted from the filtered data. These features are fed into a long short-term memory recurrent neural network (LSTM-RNN) in different combinations. Experimental results show that the combination of coordinate, length, and angle features achieves the highest accuracy of 99.31% and can run in real time. Finally, the trained model is applied to the NAO robot to play the finger-guessing game. Based on the predicted gesture, the NAO robot can respond in advance.

I. INTRODUCTION

Currently, computers are becoming more and more popular, and the demand for human-robot interaction is increasing. Researchers are paying more attention to new technologies and methods for human-robot interaction [1]–[3]. The ultimate goal is to make human-robot interaction as natural as everyday human-human interaction. Gestures have long been considered an interaction technology that can give computers more natural, creative, and intuitive input methods. Gestures have different meanings in different disciplines. In terms of interaction design, gestures differ clearly from devices such as the mouse and keyboard: they are more acceptable to people, more comfortable, less constrained by interactive devices, and able to convey more information. Compared with traditional keyboard and mouse control, controlling a computer directly by hand movement is natural and intuitive.

Gesture recognition [4] refers to the process of recognizing the representation of dynamic or static gestures and translating them into meaningful instructions. It is an extremely significant research direction in human-robot interaction technology. Gesture recognition methods can be divided into two types: visual-based [5], [6] and non-visual-based. The study of non-vision approaches began in the 1970s. Non-vision methods typically rely on wearable devices [7] to track or estimate the orientation and position of fingers and hands. Gloves are very common devices in this field, and they contain sensory modules with a wired interface. The advantage of gloves is that their data do not need to be preprocessed. Nevertheless, they are very expensive for virtual reality applications, and their wires make them uncomfortable to wear. With the development of technology, current research on non-visual gesture recognition is mainly focused on EMG signals [8]–[11]. However, EMG signals are greatly affected by noise, which makes them difficult to process.

Vision-based gesture recognition is less intrusive and contributes to a more natural interaction. It refers to the use of cameras [12]–[16], such as the Kinect [17], [18] and Leap Motion [19], [20], to capture images of gestures. Algorithms are then used to analyze and process the acquired data to obtain gesture information, so that the gesture can be recognized. Because it is more natural and easier to use, it has become the mainstream approach to gesture recognition. However, it is also a very challenging problem.

By using the results of gesture recognition, the subsequent gesture of a performer can be predicted. This process is called gesture prediction, and it has wider applications. In recent years, with the advent of deep learning, many deep neural networks (DNNs) have been applied to gesture prediction. Zhang et al. [21] used an RNN model to predict gestures from raw sEMG signals. Wei et al. [22] combined a 3D convolutional residual network and a bidirectional LSTM network to recognize dynamic gestures. Kumar et al. [23] proposed a multimodal framework based on hand features captured from Kinect and Leap Motion sensors to recognize gestures, using a hidden Markov model (HMM) and a bidirectional long short-term memory (LSTM) model. The LSTM [24] has become an effective model for solving learning problems related to sequence data. Hence, inspired by these previous works, we adopt the LSTM to predict gestures in our proposed framework.

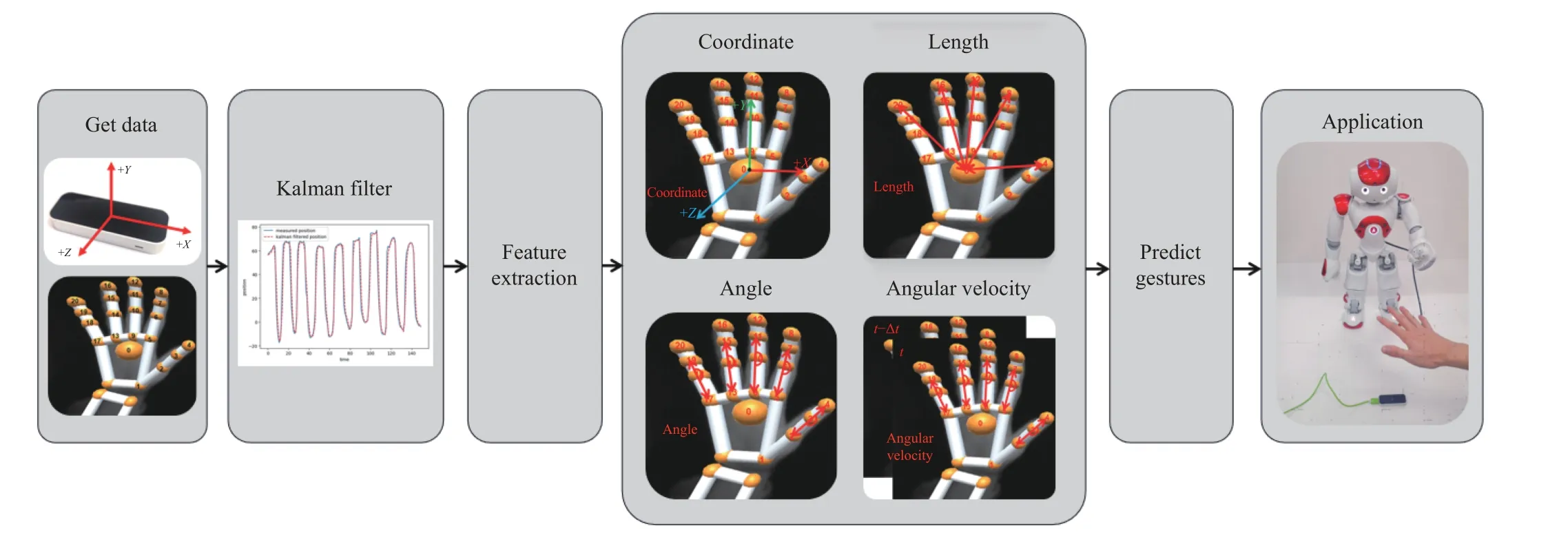

Fig. 1. Pipeline of the proposed approach.

In gesture prediction, hand key point detection is one of the most important steps. In the early stage of technological development, researchers mainly used color filters to segment the hands for detection. However, this type of method relies on skin color, and the detection performance is poor when the hand is in a complex scene. Therefore, researchers proposed detection methods based on 3D hand key points. The goal of 3D hand key point estimation is to locate the 3D coordinates of the hand joints in a depth image frame; it is mostly used in immersive virtual games, interactive tasks [25], [26], and so on. The Leap Motion is a vision-based device for 3D data extraction. It can extract the positions of the hand joints, their orientation, and the speed of fingertip movement. Recently, the Leap Motion has often been used by researchers for gesture recognition and prediction. For example, some scholars use it to recognize American Sign Language (ASL) [27], [28] with high recognition accuracy. Moreover, Zeng et al. [29] proposed a gesture recognition method based on deterministic learning and joint calibration of the Leap Motion, and Marin et al. [30] developed a method that combines the Leap Motion and Kinect to calculate different hand features and obtained a higher accuracy. In this work, we use the hand key point data detected by the Leap Motion to predict gestures and use the prediction results to play the finger-guessing game. This game contains three gestures: rock, paper, and scissors. The winning rules are: scissors beats paper, paper beats rock, and rock beats scissors. Based on these rules, this paper proposes a method to judge a gesture in advance, before the player has completed the action.

The combination of the Leap Motion and the LSTM can significantly improve human-robot interaction. The Leap Motion can track each joint of the hand directly, which makes it suitable for recognizing or predicting gestures. Moreover, compared with other devices, the Leap Motion has higher localization precision. The LSTM, in turn, solves prediction problems well in most cases and is one of the important algorithms of deep learning (DL). This work combines the strengths of the LSTM and the Leap Motion to predict gestures. The Leap Motion captures 21 three-dimensional joint coordinates in each frame, and the LSTM network is trained and tested on these features. The novel contributions of this work are as follows:

1) A method for predicting gestures based on the LSTM is proposed. The gesture data are collected by the Leap Motion.

2) To reduce or eliminate the jitter or jumps generated while acquiring data with the Leap Motion, the Kalman filter is applied.

3) We propose a reliable feature extraction method, which extracts coordinate features, length features, angle features, and angular velocity features, and combines these features to predict gestures.

4) We apply the trained model to the NAO robot and make it play the finger-guessing game with players, which verifies the real-time performance and accuracy of the proposed approach.

The rest of this paper is structured as follows. Section II describes the data processing. Section III introduces the experiments in detail and verifies the effectiveness of the method. Finally, Section IV gives a summary. The framework of this paper is shown in Fig. 1.

II. DATA PROCESSING

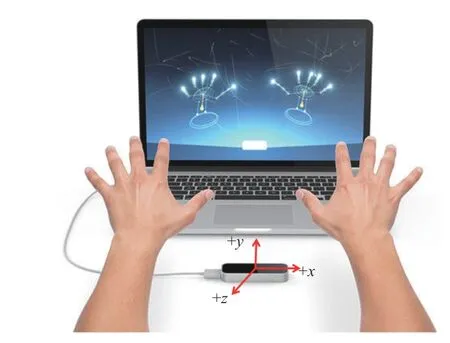

A. Leap Motion Controller

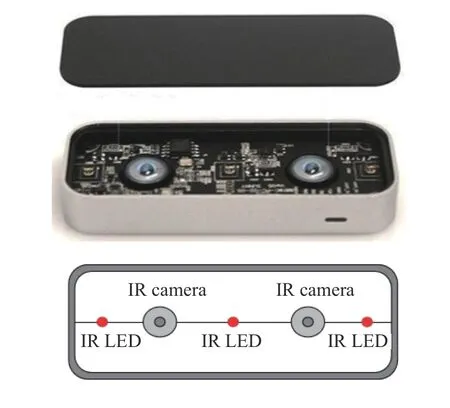

The structure of the Leap Motion is not complicated, as shown in Fig. 2. The main part of the device includes two cameras and three infrared LEDs. They track infrared light outside the visible spectrum, with a wavelength of 850 nanometers. Compared with other depth cameras, such as the Kinect, the information obtained from the Leap Motion is limited (only a few key points rather than complete depth information), and it works in a smaller three-dimensional area. However, the data acquired by the Leap Motion are more accurate. Moreover, the Leap Motion provides software that can recognize some movement patterns, including swipe and tap. Developers can access some functions of the Leap Motion through the application programming interface (API) to create new applications. For example, they can obtain information about the position and length of the user's hand to recognize different gestures.

Fig. 2. The structure of the Leap Motion.

Although the manufacturer states that the accuracy of the Leap Motion in position measurement is around 0.01 mm, [31] shows that it is in fact about 0.2 mm for static measurements and 0.4 mm for dynamic measurements. In addition, the finger joint coordinates extracted by the Leap Motion exhibit jitter or even jumps, which can affect the accuracy of the experimental results. To reduce or eliminate these phenomena, this work uses the Kalman filter to correct the predicted position of the hand joints.

B. Data Acquisition

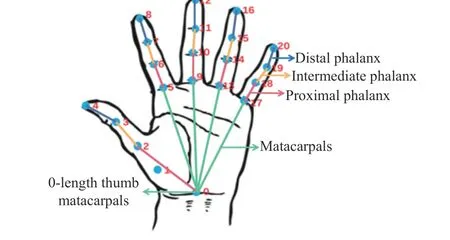

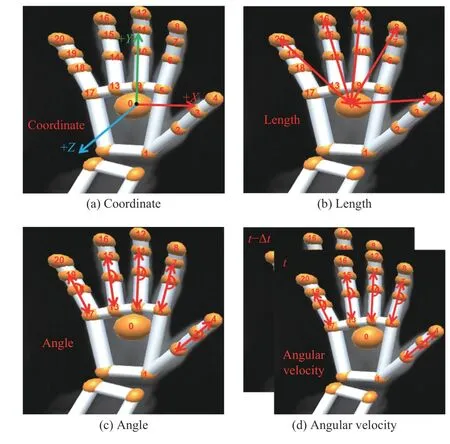

Each finger is labeled with a name (thumb, index, middle, ring, and pinky) and consists of four bones, except for the thumb. As shown in Fig. 3, the bones of a finger are the metacarpal, proximal phalanx, middle phalanx, and distal phalanx. The thumb has only three, one fewer than the other fingers. In the algorithm design, we set the length of the thumb metacarpal bone to 0 so that all five fingers have the same number of bones, which simplifies programming. In this work, the main data acquired by the Leap Motion are as follows:

1) Number of Detected Fingers: $Num \in [1, 5]$ is the number of fingers detected by the Leap Motion.

2) Position of the Finger Joints: $P_i$, $i = 1, 2, 3, \ldots, 20$, contains the three-dimensional position of each finger joint. The Leap Motion provides a one-to-one map between coordinates and finger joints.

3) Palm Center: $P_c(x_0, y_0, z_0)$ represents the three-dimensional coordinates of the center of the palm area.

4) Fingertips Movement Speed: $V$ represents the speed, in each of the three dimensions, of each fingertip detected by the Leap Motion.

Fig. 3. Definition of endoskeleton in Leap Motion.

C. Kalman Filter

1) Problem Formulation: During gesture changes, the fingertips have the largest range of motion and are more prone to jitter or jumps than other joints; therefore, the Kalman filter is used to process the fingertip data. Compared with other filters, such as the particle filter and the Luenberger observer, the Kalman filter has sufficient accuracy and can effectively remove Gaussian noise. In addition, its low computational complexity meets the real-time requirements of this work.

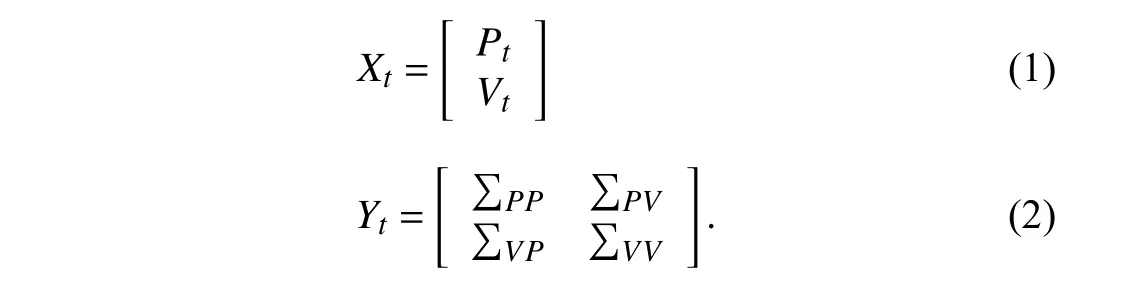

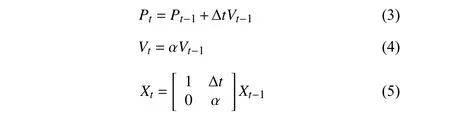

Suppose that the current position of the fingertips obtained by the Leap Motion is $P_t$ and the speed is $V_t$. The Kalman filter assumes that these two variables obey a Gaussian distribution, each with mean $\mu$ and variance $\sigma^2$. For clarity, $X_t$ denotes the best estimate at time $t$, and $Y_t$ denotes the covariance matrix. The equations of $X_t$ and $Y_t$ are as follows:

2) The Prediction Process: We need to predict the current state (time $t$) from the state at the previous time step (time $t-1$). This prediction process can be described as follows:

where $\Delta t$ is the time interval, which depends on the data acquisition rate of the Leap Motion, and $\alpha$ is the rate of speed change.

The matrix $F_t$ is used to represent the prediction matrix, so (5) can be represented as follows:

and, through the basic operations of covariance, $Y_t$ can be expressed by the following equation:

3) Refining the Estimate With Measurements: From the measured sensor data, the current state of the system can be roughly estimated. However, due to uncertainty, some states may be closer to the real state than the measurements acquired directly from the Leap Motion. In this work, the covariance $R_t$ is used to express the uncertainty (such as sensor noise), and the mean of the distribution is defined as $Z_t$.

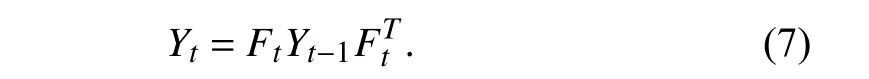

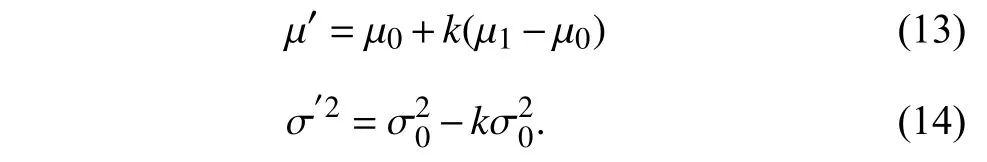

Now there are two Gaussian distributions, one around the predicted value and the other around the measured value. These two distributions are multiplied to calculate the optimal estimate between the predicted value and the measured value of the Leap Motion, as shown in the following equations:

where $\mu_0$ and $\sigma_0^2$ represent the mean and variance of the predicted values, $\mu_1$ and $\sigma_1^2$ represent the mean and variance of the measured values, and $\mu'$ and $\sigma'^2$ represent the mean and variance of the calculated values.

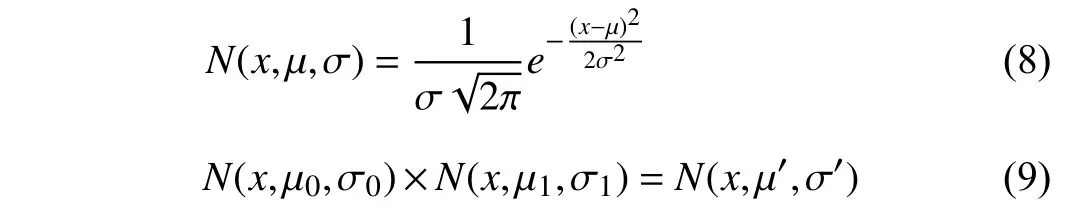

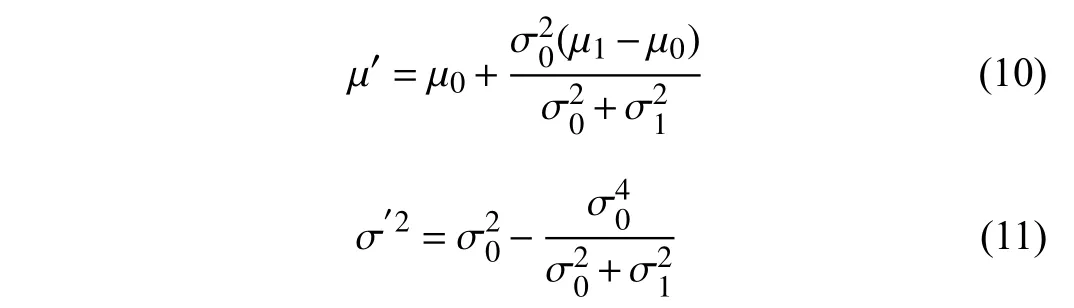

By substituting (8) into (9), we obtain the following equations:

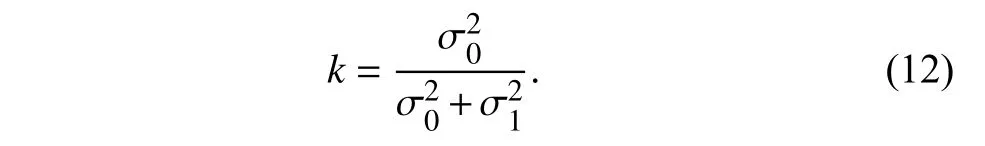

The common factor of (10) and (11) is denoted by $k$:

Therefore, (10) and (11) can be rewritten as follows:
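For reference, the fusion of two one-dimensional Gaussians described above has a well-known closed form. The block below is a sketch of that textbook result in the notation of this section, assuming $\sigma_0^2$ and $\sigma_1^2$ denote the variances of the predicted and measured values; the paper's own numbered equations may be arranged differently.

```latex
\begin{aligned}
\mathcal{N}(x;\mu_0,\sigma_0^2)\,\mathcal{N}(x;\mu_1,\sigma_1^2)
  &\propto \mathcal{N}(x;\mu',\sigma'^2),\\
\mu' &= \mu_0 + \frac{\sigma_0^2\,(\mu_1-\mu_0)}{\sigma_0^2+\sigma_1^2},\\
\sigma'^2 &= \sigma_0^2 - \frac{\sigma_0^4}{\sigma_0^2+\sigma_1^2},\\
k &= \frac{\sigma_0^2}{\sigma_0^2+\sigma_1^2},\\
\mu' &= \mu_0 + k\,(\mu_1-\mu_0),\qquad
\sigma'^2 = \sigma_0^2 - k\,\sigma_0^2 .
\end{aligned}
```

When the measurement is much noisier than the prediction ($\sigma_1^2 \gg \sigma_0^2$), $k$ approaches 0 and the fused mean stays near the prediction; in the opposite case $k$ approaches 1 and the fused mean follows the measurement.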

4) Integrate All the Equations: In this section, the equations of the Kalman filter used in this paper are integrated.

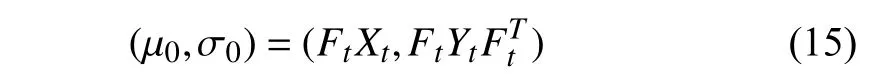

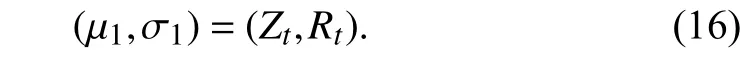

There are two Gaussian distributions, the prediction part

and the measurement part

We then put them into (13) and (14) to get the following equation:

According to (12), the Kalman gain is as follows:

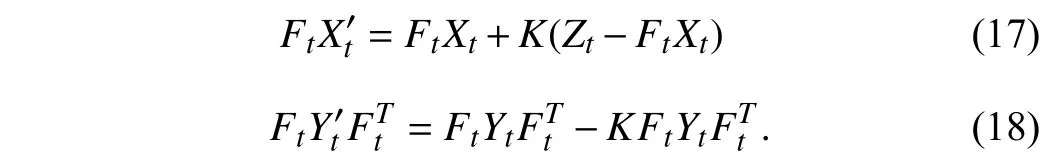

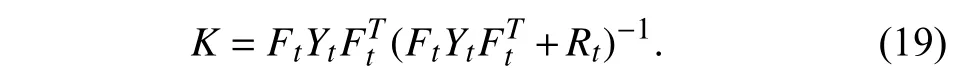

Next, the above three formulas are simplified. On both sides of (17) and (18), we left-multiply by the inverse matrix of $F_t$. On both sides of (18), we right-multiply by the inverse matrix of. We can then get the following simplified equation:

The result is the new optimal estimate of the data collected by the Leap Motion, which we feed, together with its covariance, into the next prediction and update step, iterating continuously. Through the above steps, the data collected by the Leap Motion become more accurate.
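The predict-and-update loop described in this subsection can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: it assumes a constant-velocity model for a single coordinate of a single fingertip, a position-only measurement, and hypothetical tuning values (`q`, `r`, and the 0.01 s sampling interval are all assumptions).

```python
from dataclasses import dataclass


@dataclass
class KalmanCV1D:
    """Constant-velocity Kalman filter for one coordinate of one fingertip."""

    dt: float            # sampling interval (assumed; depends on the sensor rate)
    q: float = 1e-3      # process-noise variance added per step (assumed)
    r: float = 0.16      # measurement-noise variance (assumed, e.g. (0.4 mm)^2)
    p: float = 0.0       # position estimate
    v: float = 0.0       # velocity estimate
    c00: float = 1.0     # covariance entries of [[c00, c01], [c01, c11]]
    c01: float = 0.0
    c11: float = 1.0

    def predict(self) -> None:
        """Propagate state and covariance with F = [[1, dt], [0, 1]]."""
        dt = self.dt
        self.p += dt * self.v
        # F * Y * F^T + Q, written out for the symmetric 2x2 case (Q = q * I)
        c00 = self.c00 + 2.0 * dt * self.c01 + dt * dt * self.c11 + self.q
        c01 = self.c01 + dt * self.c11
        c11 = self.c11 + self.q
        self.c00, self.c01, self.c11 = c00, c01, c11

    def update(self, z: float) -> float:
        """Fuse a position measurement z (measurement matrix H = [1, 0])."""
        s = self.c00 + self.r          # innovation variance
        k0 = self.c00 / s              # Kalman gain for position
        k1 = self.c01 / s              # Kalman gain for velocity
        resid = z - self.p
        self.p += k0 * resid
        self.v += k1 * resid
        # (I - K H) * Y, written out for the symmetric 2x2 case
        c00 = (1.0 - k0) * self.c00
        c01 = (1.0 - k0) * self.c01
        c11 = self.c11 - k1 * self.c01
        self.c00, self.c01, self.c11 = c00, c01, c11
        return self.p


def smooth(measurements, dt=0.01):
    """Filter a sequence of scalar position samples and return the estimates."""
    kf = KalmanCV1D(dt=dt, p=measurements[0])
    out = []
    for z in measurements:
        kf.predict()
        out.append(kf.update(z))
    return out
```

Calling `smooth` on a trace with a single spike, for example `smooth([0.0, 0.0, 0.0, 5.0, 0.0, 0.0])`, returns a value at the spike that lies strictly between the previous estimate and the raw sample, which is the damping of jumps that this subsection aims for. Running one such filter per coordinate per fingertip is one possible arrangement.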

D. Feature Extraction

After filtering the original data, we analyze four features extracted from the filtered data. These features are introduced in the rest of this section.

● Coordinate: The $x$, $y$, and $z$ coordinates of the hand joints obtained by the Leap Motion.

● Length: The distance from each fingertip to the center of the palm.

● Angle: The angle between the proximal phalanx and intermediate phalanx of each finger (except the thumb).

● Angular velocity: The rate of change of the joint angle.

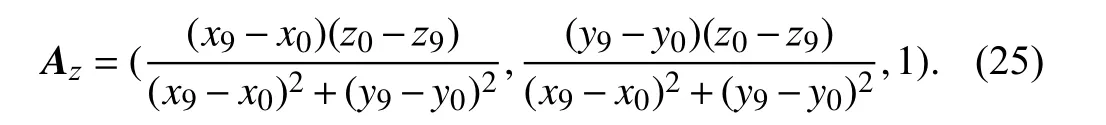

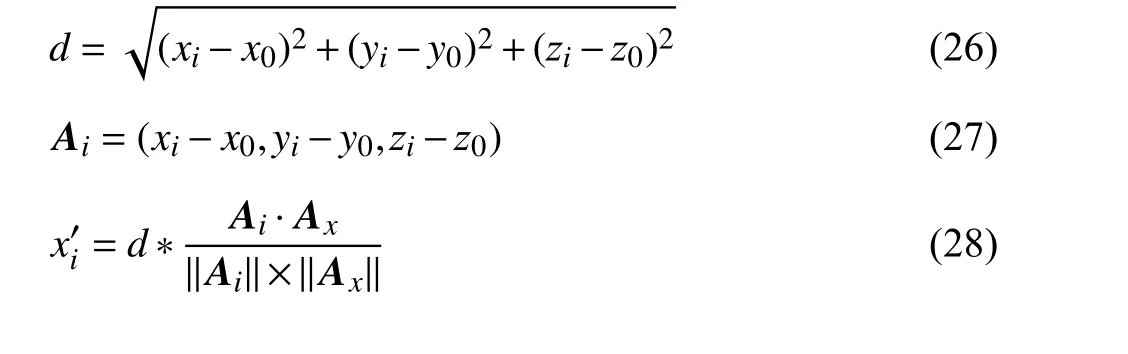

1) Coordinate Feature: As shown in Fig. 4(a), this feature set represents the position of the finger joints in three-dimensional space. The original data take the Leap Motion as the coordinate origin, as shown in Fig. 5. As the hand moves, the obtained data can change considerably, which affects the experimental results. To eliminate the influence of different coordinate systems, the coordinate origin is moved to the palm center, as shown in Fig. 4(a). Taking the palm of the hand as the plane, the direction from the palm center to the root of the middle finger is the positive direction of the $y$-axis. The positive direction of the $x$-axis is perpendicular to the $y$-axis and points to the right. The $z$-axis passes through the coordinate origin and is perpendicular to this plane.

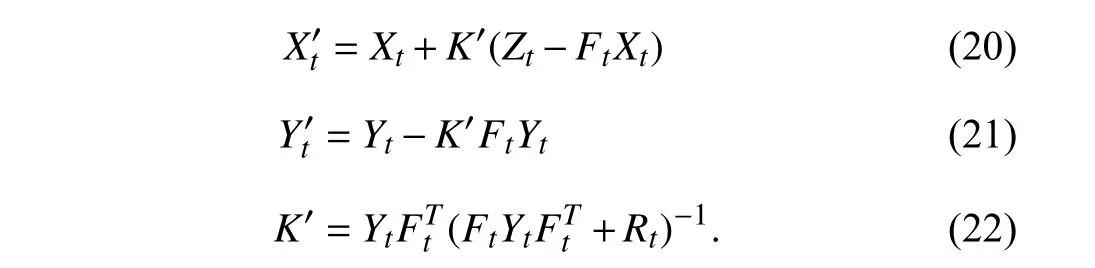

The positive direction of the $y$-axis in the new coordinate system can be represented by the following vector:

Similarly, the positive direction of the $x$-axis in the new coordinate system can be expressed by the following vector:

And the positive direction of the $z$-axis in the new coordinate system can be expressed by the following vector:

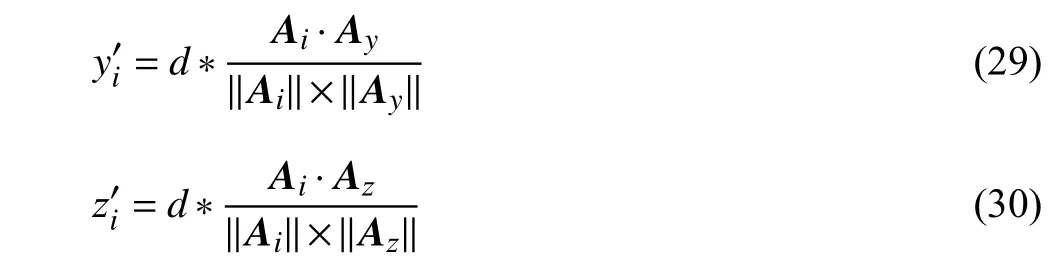

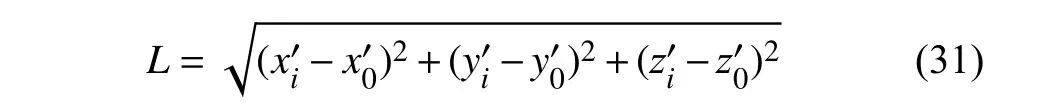

The coordinate representation in the new coordinate system is

Fig. 4. Four different types of features extracted from the Leap Motion.

Fig. 5. The coordinate system of the Leap Motion.

where the resulting triple represents the new coordinates after the conversion, and $i = 1, 2, \ldots, 20$ indexes the points corresponding to the finger joints. Through the above equations, we obtain new coordinates with the palm center as the origin of the coordinate system. Because each three-dimensional coordinate is an array of length 3, the actual dimension of the coordinate feature is 3 × 20 = 60.
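The change of origin described above can be sketched as follows. This is an illustrative example under stated assumptions rather than the paper's exact equations: it assumes a palm normal vector is available to fix the $z$-axis, builds a right-handed frame with $x = y \times z$, and uses hypothetical helper names (`palm_frame`, `to_palm_coords`). Whether $x$ then points "to the right" depends on the sign convention of the supplied normal.

```python
import math


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a):
    n = math.sqrt(dot(a, a))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return (a[0] / n, a[1] / n, a[2] / n)


def palm_frame(palm_center, middle_root, palm_normal):
    """Return unit axes (x, y, z) of the palm-centered frame."""
    # y-axis: from the palm center towards the root of the middle finger
    y = normalize(sub(middle_root, palm_center))
    # z-axis: palm normal with its component along y removed
    d = dot(palm_normal, y)
    z = normalize(tuple(n - d * c for n, c in zip(palm_normal, y)))
    # x-axis: completes a right-handed frame (assumed convention)
    x = cross(y, z)
    return x, y, z


def to_palm_coords(joints, palm_center, middle_root, palm_normal):
    """Express each joint position in the palm-centered frame."""
    x, y, z = palm_frame(palm_center, middle_root, palm_normal)
    out = []
    for joint in joints:
        r = sub(joint, palm_center)
        out.append((dot(r, x), dot(r, y), dot(r, z)))
    return out
```

With 20 joints, flattening the returned list gives the 60-dimensional coordinate feature mentioned above.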

2) Length Feature: As shown in Fig. 4(b), this feature is the distance from each fingertip to the center of the palm. The joint coordinates collected from the Leap Motion are used to calculate it. Because the fingertips are the most variable joints, (31) is used to calculate the distance between the palm center and the fingertips.

where $i = 4, 8, 12, 16, 20$ index the points corresponding to the fingertips in Fig. 4(b), and the dimension of the length feature is 5.
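As a small illustration of the length feature, the sketch below computes the Euclidean distance from each fingertip to the palm center. It assumes the joints are stored in a dictionary keyed by the 1-based indices used in the text and that the coordinates are already palm-centered, so the palm center defaults to the origin; the function name is hypothetical.

```python
import math

# 1-based joint indices of the five fingertips, as listed in the text
FINGERTIP_IDS = (4, 8, 12, 16, 20)


def length_feature(joints, palm_center=(0.0, 0.0, 0.0)):
    """Return the five fingertip-to-palm distances.

    joints: dict mapping joint index (1..20) to an (x, y, z) tuple.
    """
    return [math.dist(joints[i], palm_center) for i in FINGERTIP_IDS]
```

For a clenched fist all five distances are small, and they grow as the fingers open, which is why this feature is informative for distinguishing rock from paper.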

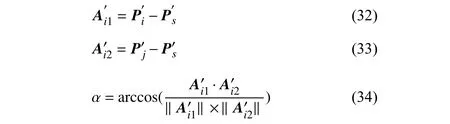

3) Angle Feature: As shown in Fig. 4(c), this feature represents the angle between the proximal phalanx and intermediate phalanx of each finger (except the thumb); for the thumb, the angle is taken between the intermediate phalanx and distal phalanx. The calculation process is as follows:

4) Angular Velocity Feature: As shown in Fig. 4(d), this feature represents the rate of change of the joint angle, as given by the following equation:

where $t$ is the current time and $\Delta t$ is the time interval, which depends on the Leap Motion's sampling time. The dimension of the angular velocity feature is 5.
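The angle and angular velocity features can be illustrated with a short sketch. It assumes the angle is measured between the direction vectors of two consecutive bones (so a straight finger gives 0), computed from three consecutive joint positions, and that the angular velocity is a backward finite difference; the paper's exact convention may differ, and the function names are hypothetical.

```python
import math


def bone_angle(a, b, c):
    """Angle in radians between bone a->b and bone b->c."""
    u = [q - p for p, q in zip(a, b)]
    v = [q - p for p, q in zip(b, c)]
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("degenerate bone of zero length")
    cos_angle = sum(x * y for x, y in zip(u, v)) / (nu * nv)
    # clamp to guard against rounding slightly outside [-1, 1]
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def angular_velocity(theta_now, theta_prev, dt):
    """Backward-difference estimate of the rate of change of a joint angle."""
    return (theta_now - theta_prev) / dt
```

Applying `bone_angle` once per finger and `angular_velocity` to consecutive frames yields two 5-dimensional vectors per frame, matching the dimensions stated in the text.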

E. Gesture Prediction

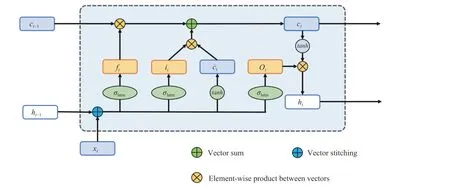

The method in the previous section produces four different features, each of which carries information related to the performed gesture. In this section, the LSTM network [24] used for gesture prediction is described in detail. The internal structure of the LSTM is shown in Fig. 6, where $x_t$ denotes the input of the LSTM network and $h_t$ denotes its output. $f_t$, $i_t$, and $o_t$ are the forget gate, input gate, and output gate variables, respectively. The subscripts $t$ and $t-1$ represent the current time and the previous time. $c_t$ denotes the memory cell state, and a second variable denotes the memory gate. $\sigma_{lstm}$ and $\tanh$ denote the sigmoid and hyperbolic tangent activation functions, as shown in (36) and (37).

The relevant parameters of the LSTM can be calculated by the following equations:

Fig. 6. The internal structure of the LSTM.

Fig. 7. Collecting data for the paper gesture using the Leap Motion.

where the subscripts $f$, $i$, $o$, and $c$ refer to the parameters of the forget gate, input gate, output gate, and memory cell. The parameters $W_f$, $W_i$, $W_c$, and $W_o$ denote the weight matrices of the corresponding subscripts. Similarly, $b_f$, $b_i$, $b_c$, and $b_o$ are the corresponding biases of the LSTM network. The notation $*$ denotes the element-wise product between vectors.
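For orientation, a commonly used formulation of the LSTM cell, written with the symbols defined in this section, is sketched below. It assumes that each gate acts on the concatenation $[h_{t-1}, x_t]$ and writes the memory gate as $\tilde{c}_t$; both are notational assumptions, and the paper's own equations may be arranged differently.

```latex
\begin{aligned}
\sigma_{lstm}(x) &= \frac{1}{1+e^{-x}}, \qquad
\tanh(x) = \frac{e^{x}-e^{-x}}{e^{x}+e^{-x}},\\
f_t &= \sigma_{lstm}\!\left(W_f\,[h_{t-1}, x_t] + b_f\right),\\
i_t &= \sigma_{lstm}\!\left(W_i\,[h_{t-1}, x_t] + b_i\right),\\
\tilde{c}_t &= \tanh\!\left(W_c\,[h_{t-1}, x_t] + b_c\right),\\
c_t &= f_t * c_{t-1} + i_t * \tilde{c}_t,\\
o_t &= \sigma_{lstm}\!\left(W_o\,[h_{t-1}, x_t] + b_o\right),\\
h_t &= o_t * \tanh(c_t).
\end{aligned}
```

The forget gate $f_t$ scales how much of the previous cell state is kept, while the input gate $i_t$ scales how much of the new candidate is written, which is what lets the network carry information across the frames of a gesture.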

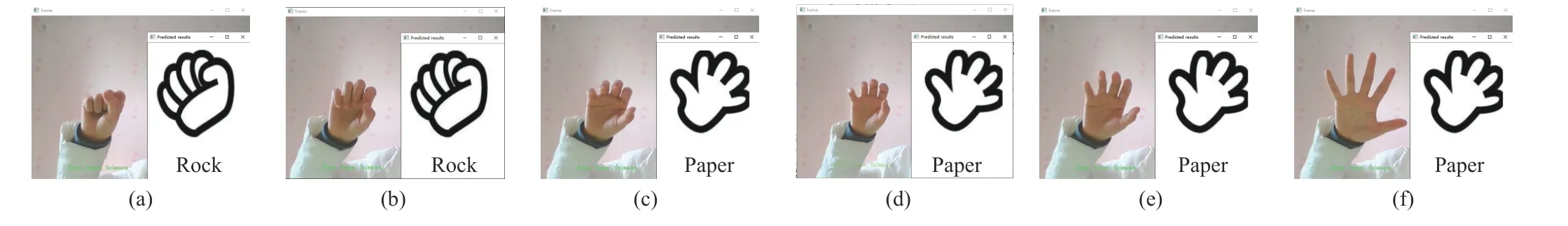

During data collection, the change of a gesture can be divided into three stages, as shown in Fig. 7. For a clearer description, we take the process of turning rock into paper as an example to explain these three stages:

1) The Original Stage: As shown in Figs. 7(a) and 7(b), the gestures at this stage are close to the original state, that is, the gesture is similar to a rock.

2) The Intermediate Stage: As shown in Figs. 7(c) and 7(d), the gestures at this stage change significantly compared with the original stage, that is, the five fingers clearly show different degrees of openness.

3) The Completion Stage: As shown in Figs. 7(e) and 7(f), the gestures at this stage are close to completion, that is, the gestures approach paper.

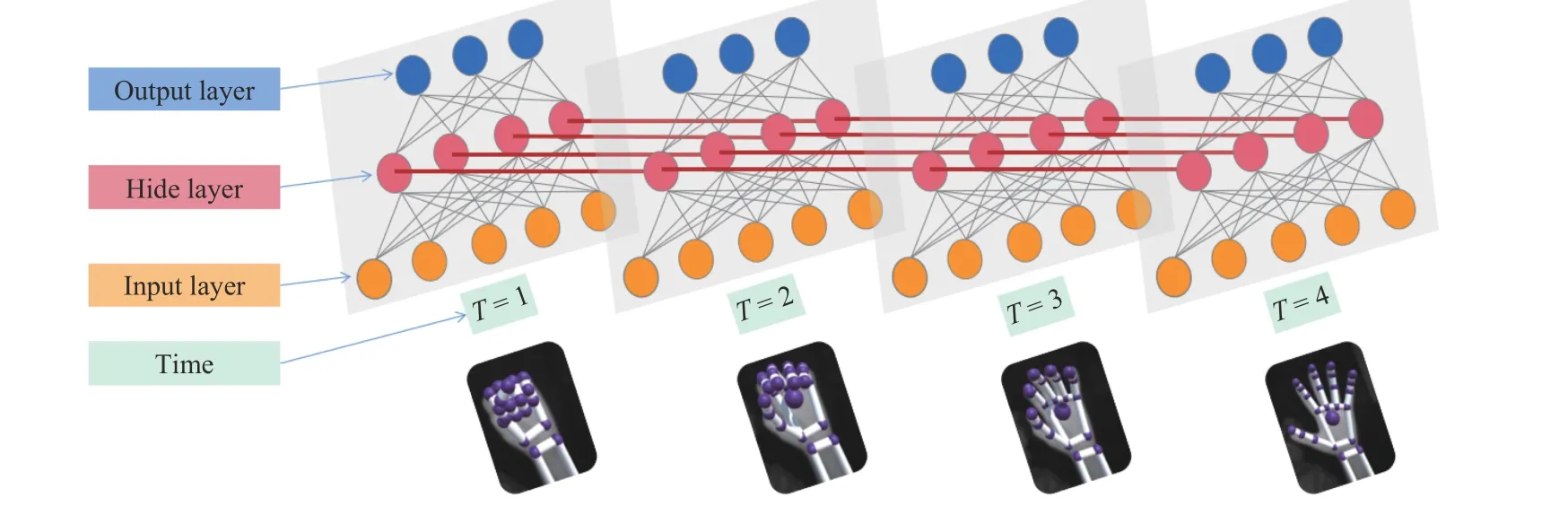

Since different players perform actions at different speeds, each action contains 2–6 frames. For uniformity, the sequence length $T$ of the LSTM is set to 4, that is, 4 frames of data are input into the LSTM network for prediction. This process is shown in Fig. 8. The input layer of the LSTM network takes the features obtained from the Leap Motion. These features are the coordinate, length, angle, and angular velocity features calculated in Section II-D, with dimensions 60, 5, 5, and 5, respectively. In addition, the hidden layer of the LSTM network contains 100 nodes. The output of the LSTM network is the gesture prediction result with a dimension of 3, corresponding to rock, scissors, and paper. With the LSTM network, we can predict the gestures accurately, and the classification results are sent to a social robot for interaction and reaction.
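To make the input shape concrete, the sketch below assembles one per-frame feature vector and brings a variable-length action to $T = 4$ frames. The text does not say how actions of 2–6 frames are made uniform, so the strategy here (keep the first four frames, repeat the last frame when there are fewer) is purely an assumption, as are the function names.

```python
T = 4  # number of frames fed to the LSTM, as stated in the text


def frame_vector(coord, length, angle, ang_vel=None):
    """Concatenate per-frame features into one input vector.

    coord: 60 values, length: 5 values, angle: 5 values,
    ang_vel: optional 5 values (omitted for the best-performing combination).
    """
    vec = list(coord) + list(length) + list(angle)
    if ang_vel is not None:
        vec += list(ang_vel)
    return vec


def to_fixed_length(frames, t=T):
    """Truncate or pad a list of frame vectors to exactly t frames.

    Assumed strategy: keep the earliest t frames; if the action is shorter,
    repeat its last frame.
    """
    if not frames:
        raise ValueError("an action needs at least one frame")
    fixed = list(frames[:t])
    while len(fixed) < t:
        fixed.append(fixed[-1])
    return fixed
```

Under these assumptions, the coordinate, length, and angle combination gives a 4 × 70 input per action, and adding the angular velocity gives 4 × 75.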

Fig. 8. The process of the LSTM for predicting gestures.

III. EXPERIMENT

A. Experimental Setup

In this section, the performance and efficiency of the proposed framework are tested. The experiments were carried out on a laptop with an Intel Core i5-6200U CPU. The dynamic gestures of rock, paper, and scissors were collected from five different players; each player repeated each gesture 300 times at fast, medium, and slow speeds, for a total of 4500 data samples. The experimental results of the network trained on the four features and on their combinations are compared.

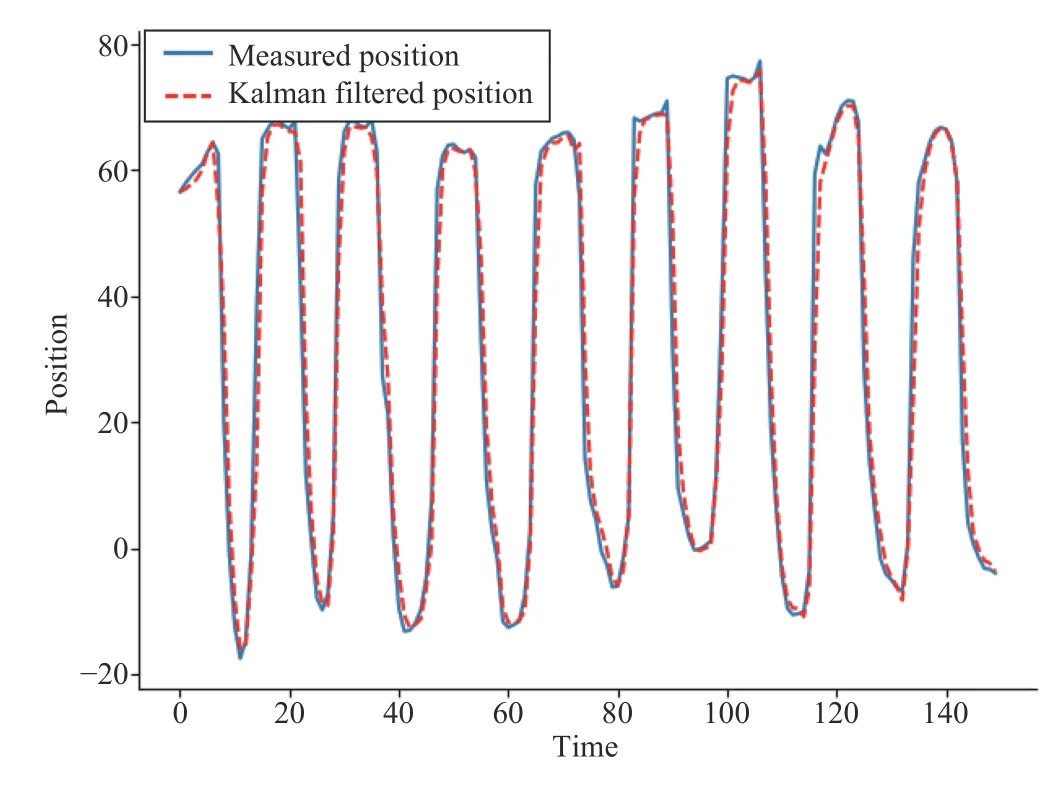

B. Kalman Filter

In Section II-C, the Kalman filter is introduced in detail. In this section, it is verified experimentally, with the measured position obtained directly from the Leap Motion. The Kalman filter is used to process the original coordinate data so that the processed data are closer to the real values. As can be seen from Fig. 9, the processed data are much smoother.

Fig. 9. Data processed by Kalman filter.

C. Experimental Result

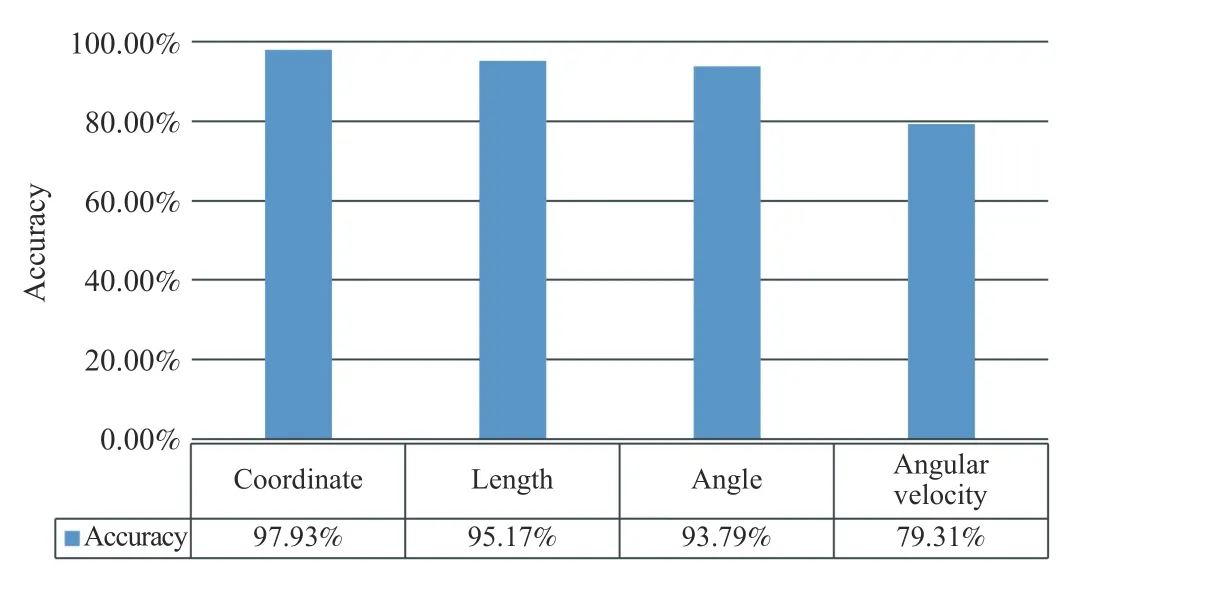

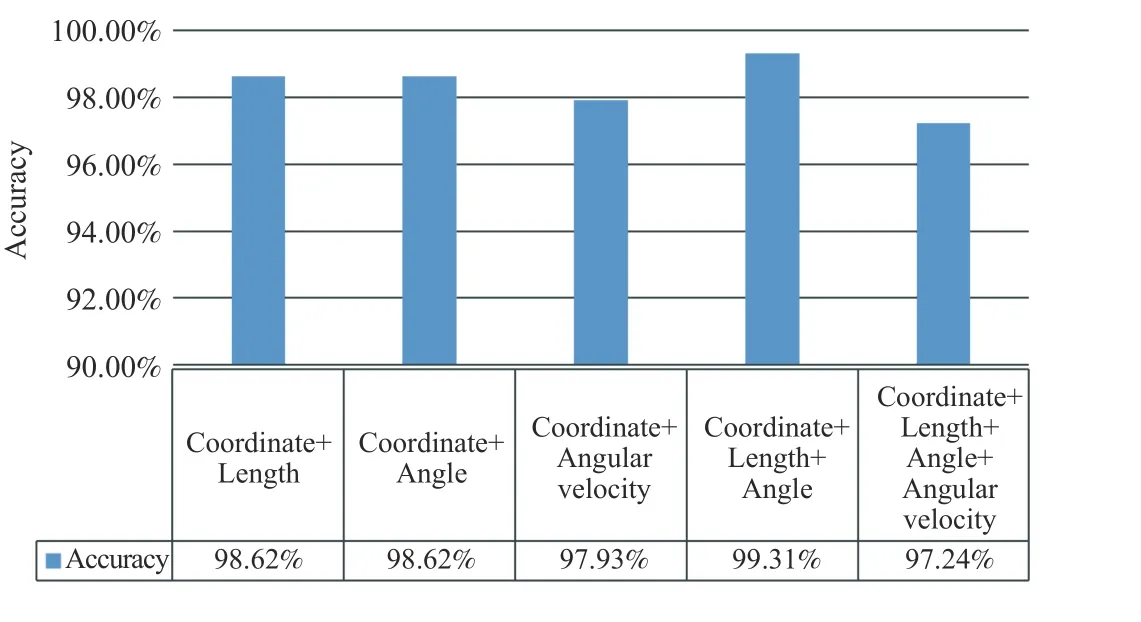

Following the description in Section II-D, we extract the three-dimensional coordinate feature, length feature, angle feature, and angular velocity feature from the filtered data and train the network on them. Figs. 10 and 11 show the accuracy obtained with these features using the classification algorithm of Section II-E.

Using the three-dimensional positions of the finger joints, the accuracy of gesture prediction is 97.93%. The length feature and the angle feature have accuracies of 95.17% and 93.79%, respectively. The angular velocity feature performs worse, with an accuracy of 79.31%. Because it is affected by the speed of the player's movement, it is not reliable enough on its own to make an accurate prediction.

The combination of multiple features can enrich the input of the neural network, and in some cases it may improve the prediction performance. As can be seen from Fig. 11, the combination of coordinate, length, and angle features achieves the highest accuracy of 99.31%, better than any of the three features alone. These results suggest that different features represent different attributes of the hand and contain complementary information.

Fig. 10. The experimental results of four features.

Fig. 11. The experimental results of the combination of four features.

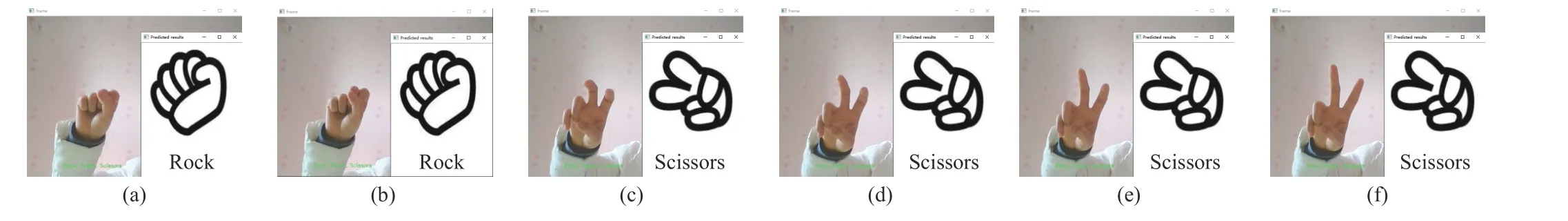

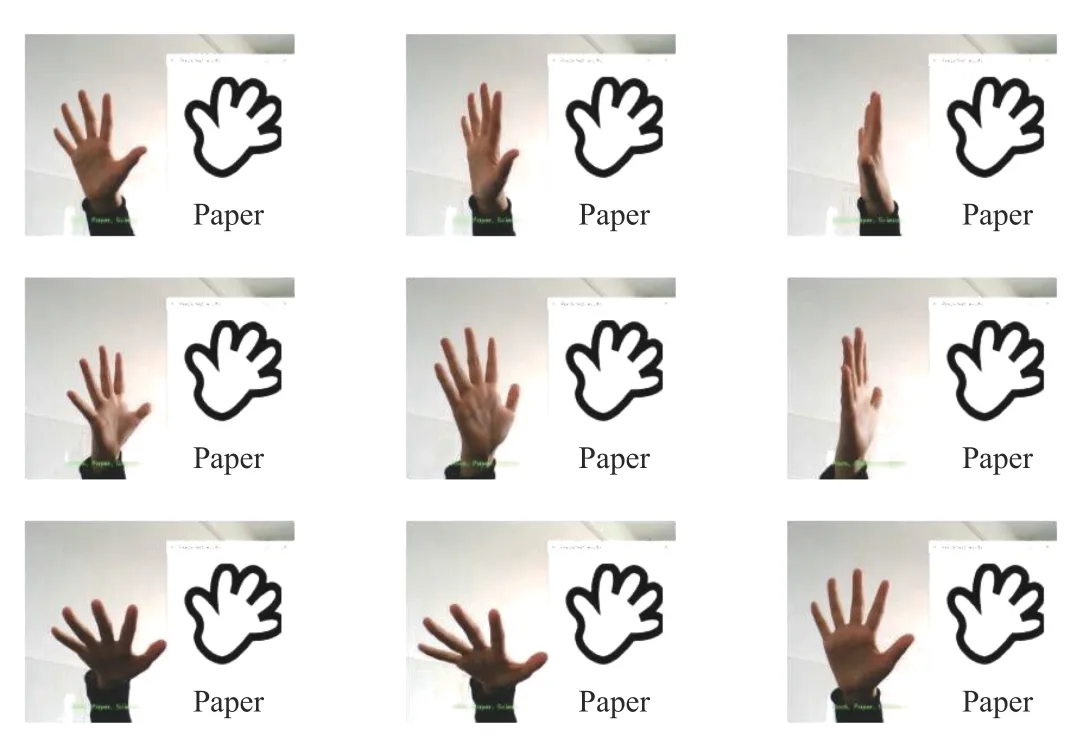

We examine whether the proposed method is able to achieve real-time gesture recognition and prediction. As shown in Figs. 12 and 13, the proposed method predicts the gestures of the finger-guessing game very well. For example, when the player's gesture changes from rock to paper, the proposed method can predict that the gesture is paper before all fingers are fully open. In addition, we verify the prediction results of the proposed method for different angles between the Leap Motion and the hand, as shown in Fig. 14.

D. Application

Fig. 12. The prediction process of turning rock into paper.

Fig. 13. The prediction process of turning rock into scissors.

Fig. 14. Predicted results from different angles of the Leap Motion to hand.

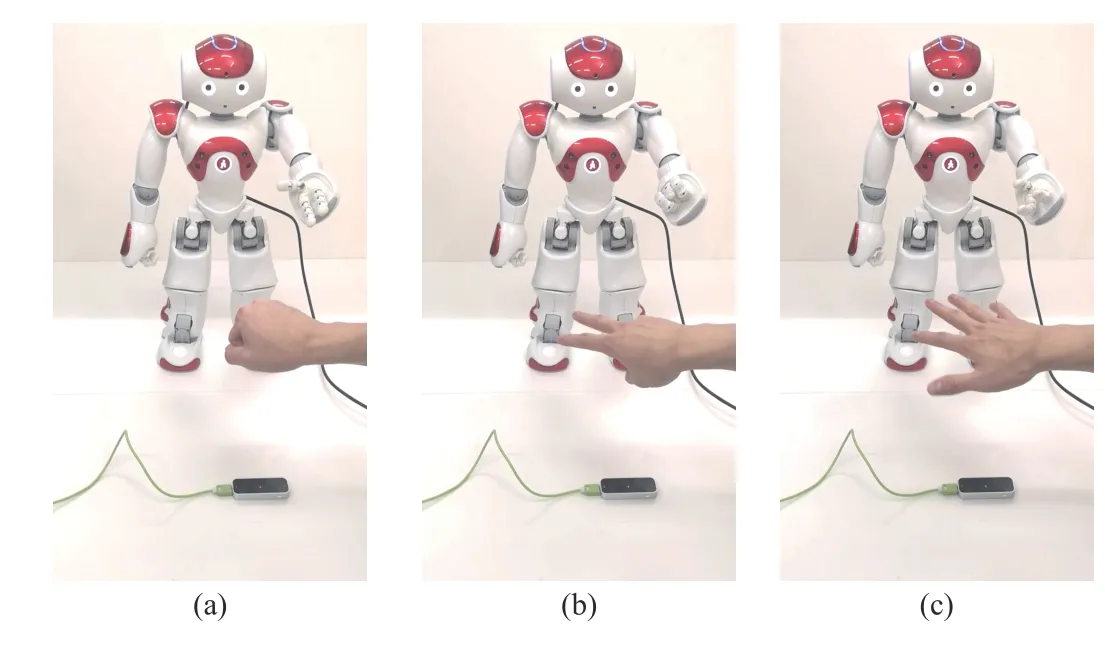

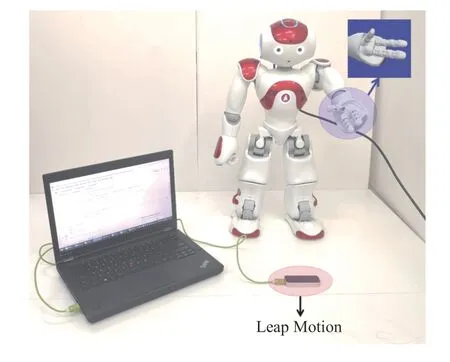

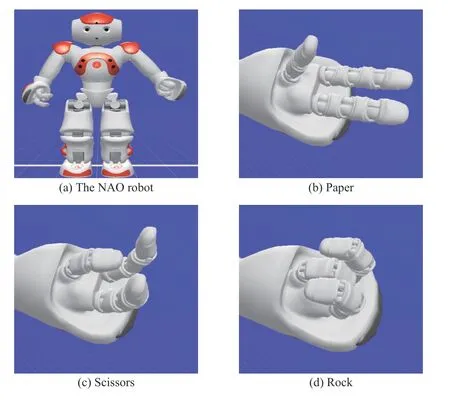

To further demonstrate the effectiveness of the proposed method, the trained network is applied to the humanoid robot NAO, as shown in Fig. 15. The NAO is an autonomous, programmable humanoid robot designed by Aldebaran Robotics [32]. It is 573.2 mm tall and weighs 4.5 kg. It has two cameras, voice recognition, and voice synthesis, and is powered by a LiPo battery. It is also equipped with four microphones, two sonar emitters and receivers, two IR emitters and receivers, and three tactile sensors on the top of the head.

In this work, we mainly use the NAO robot's left hand to play the finger-guessing game with the player. As shown in Fig. 16, the NAO robot has only three fingers, and they are mechanically linked. Therefore, we define the fully open robot hand as paper, the half-open hand as scissors, and the clenched hand as rock.

Then, the trained model is applied to the NAO robot, and the experimental results are shown in Fig. 17. The Leap Motion data are used to predict the player's gesture, and the computer sends the result to the NAO robot, which can then choose to win or lose the game through some simple judgments.
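The "simple judgments" mentioned above can be illustrated with a small lookup based on the game rules and the hand-opening convention defined for the NAO. This is a hypothetical sketch: the function names and the numeric opening values (1.0 for fully open, 0.5 for half open, 0.0 for clenched) are assumptions for illustration, and no robot API calls are shown.

```python
# Game rules from the text: each key beats its value
BEATS = {"scissors": "paper", "paper": "rock", "rock": "scissors"}

# Assumed encoding of the three-finger NAO hand: fraction of full opening
NAO_HAND_OPENING = {"paper": 1.0, "scissors": 0.5, "rock": 0.0}


def winning_move(predicted):
    """Return the gesture that beats the player's predicted gesture."""
    for move, loser in BEATS.items():
        if loser == predicted:
            return move
    raise ValueError(f"unknown gesture: {predicted!r}")


def losing_move(predicted):
    """Return the gesture that loses to the player's predicted gesture."""
    return BEATS[predicted]


def robot_response(predicted, want_win=True):
    """Pick the robot's gesture and the corresponding hand opening."""
    gesture = winning_move(predicted) if want_win else losing_move(predicted)
    return gesture, NAO_HAND_OPENING[gesture]
```

For instance, if the player is predicted to show rock, `robot_response("rock")` selects paper with a fully open hand, and `robot_response("rock", want_win=False)` selects scissors with a half-open hand.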

Fig. 17. The experimental results with the NAO robot.

IV. CONCLUSION

Fig. 15. Experimental equipment and platform.

Fig. 16. The NAO robot and rock-paper-scissors gesture.

In this paper, a gesture prediction framework based on the Leap Motion is proposed. During data acquisition by the Leap Motion, jumps or jitter may occur; therefore, the Kalman filter is used to address these problems. Then, based on the original coordinate features collected by the Leap Motion, we extract three new features, namely the length feature, angle feature, and angular velocity feature. The LSTM network is used to train the model for gesture prediction. In addition, the trained model is applied to the NAO robot to verify the real-time performance and effectiveness of the proposed method.
