Understanding Nonverbal Communication Cues of Human Personality Traits in Human-Robot Interaction

2020-11-05

Zhihao Shen, Armagan Elibol, and Nak Young Chong

Abstract—With the increasing presence of robots in our daily life, there is a strong need and demand for the strategies to acquire a high quality interaction between robots and users by enabling robots to understand users’ mood, intention, and other aspects. During human-human interaction, personality traits have an important influence on human behavior, decision, mood, and many others. Therefore, we propose an efficient computational framework to endow the robot with the capability of understanding the user’s personality traits based on the user’s nonverbal communication cues represented by three visual features including the head motion, gaze, and body motion energy, and three vocal features including voice pitch, voice energy, and mel-frequency cepstral coefficient (MFCC). We used the Pepper robot in this study as a communication robot to interact with each participant by asking questions, and meanwhile, the robot extracts the nonverbal features from each participant’s habitual behavior using its on-board sensors. On the other hand, each participant’s personality traits are evaluated with a questionnaire. We then train the ridge regression and linear support vector machine (SVM) classifiers using the nonverbal features and personality trait labels from a questionnaire and evaluate the performance of the classifiers. We have verified the validity of the proposed models that showed promising binary classification performance on recognizing each of the Big Five personality traits of the participants based on individual differences in nonverbal communication cues.

I. Introduction

With population aging and sub-replacement fertility becoming increasingly prominent problems, many countries have started promoting robotic technology to assist people toward a better life. Various types of robotic solutions have proven useful in performing dangerous and repetitive tasks that humans are unable or unwilling to do. In elderly care provision, assistive robots could replace and/or help human caregivers in supporting the elderly socially in their home or residential care environments.

Researchers gradually realized that the interactions between a human user and a robot involve far more than sending commands to the robot or reprogramming it, as a new class of social robots is emerging in our daily life. It is now widely understood that not only the robot’s appearance but also its behaviors are important for human-robot interaction [1], [2]. Therefore, synchronized verbal and nonverbal behaviors [3] were designed and applied to a wide variety of humanoid robots, such as Pepper, NAO, ASIMO, and many others, to improve the user’s engagement in human-robot interaction. For instance, the Honda ASIMO robot can perform various movements of arms and hands including metaphoric, iconic, and beat gestures [4]. Likewise, some researchers have designed such gestures using the SAIBA framework [5] for virtual agents. The virtual agents were interfaced with the NAO robot to model and perform the combined synchronized verbal and nonverbal behavior. In [6], the authors tested the combined verbal and nonverbal gestures on a 3D virtual agent, MAX, to make the agent act like humans. Meanwhile, cultural factors are also considered to be crucial components in human-robot interaction [7]. In [8], the authors designed emotional bodily expressions for the Pepper robot and enabled the robot to learn the emotional behaviors from the interacting person. Further investigations on the influence of the robot’s nonverbal behaviors on humans were conducted in [9]. These efforts were made to enable robots to act like humans. However, the synchronized behaviors are unilateral movements with which robots track the person’s attention. Therefore, the authors in [10] claimed that social robots need to act or look like humans, but more importantly, they need to be capable of responding to the person with synchronized verbal and nonverbal behavior based on his/her personality traits.
Inspired by their insight in [10], we aim to develop a computational framework that allows robots to understand the user’s personality traits through their habitual behavior. Eventually, it would be possible to design a robot that is able to adapt its combined verbal and nonverbal behavior toward enhancing the user’s engagement with the robot.

A. Why Are the Personality Traits Important During the Interaction?

In [11], the authors investigated how personality traits affect humans throughout their lives. Personality traits encompass relatively enduring patterns of human feelings, thoughts, and behaviors, which make each person different from one another. In human-human conversational interaction, the speaker’s behavior is affected by the speaker’s personality traits, and the listener’s personality traits also affect their attitude toward the speaker. If their behaviors make each other feel comfortable and satisfied, they would enjoy talking to each other. In social science research, there have been different views on the importance of interpersonal similarity and attraction. Some people tend to be attracted to other people with similar social skills, cultural background, personality, attitude, and so on [12], [13]. Interestingly, in [14], the authors addressed complementary attraction, where some people prefer to talk with other people whose personality traits are complementary to their own. Therefore, we believe that if the robot is able to understand the user’s coherent social cues, it would improve the quality of human-robot interaction, depending on the user’s social behavior and personality.

In previous studies, the relationships between the user’s personality traits and the robot’s behavior were investigated. It was shown in [15] that humans are able to recognize the personality of a voice synthesized by digital systems and computers. Also, [16] explored a compelling question to better understand people’s personality: whether or not they are willing to trust a robot in an emergency scenario. Along these lines, a strong correlation between the personality traits of users and the social behavior of a virtual agent was presented in [17]. In [18], the authors designed robots with personalities to interact with a human, and significant correlations between human and robot personality traits were revealed. Their results showed how the participants’ technological background affected the way they perceived the robot’s personality traits. Also, the relationship between profession and personality was investigated in [19]. The result conforms with common sense, for example that doctors and teachers tend to be more introverted, while managers and salespersons tend to be more extroverted. Furthermore, the authors of [20] investigated how humans perceive the NAO robot with different personality traits (introversion or extroversion) when the robot plays different roles in human-robot interaction. However, their results were not in accordance with common sense. The robot seemed smarter to the human when it acted as an introverted manager or an extroverted teacher. On the contrary, the extroverted manager and introverted teacher robots were not perceived as intelligent by the participants. These two results conflict with each other. This could be because people treat and perceive robots differently from humans in the aforementioned settings. Another reason could be that the introverted manager robot looked more deliberate because it took more time to respond, while the extroverted teacher robot looked more erudite because it took less time to respond during the interaction. Even though these two studies found conflicting results, the results imply the importance of robot personality traits in designing professional roles for human-robot interaction.

The findings of previous studies on personality matching in human-robot interaction are not fully consistent with each other. The result in [21] indicated that participants enjoyed interacting with the AIBO robot more when the robot had a personality complementary to their own, whereas the conclusions from [22] showed that participants were more comfortable when they interacted with a robot whose personality was similar to theirs. Similarly, engagement and its relation to personality traits were analyzed during human-robot interaction in [23], where the participants’ personality traits played an important role in evaluating individual engagement. The best result was achieved when the participant and the robot were both extroverted, and the worst when both were introverted. Although the complementary and similar attraction theories may need further exploration, these studies clearly show how important personality traits are in human-robot interaction.

On the other hand, personality traits have been shown to have a strong connection with human emotion. In [24], it was discussed how the personality and mind model influence human social behavior. A helpful analogy for explaining the relationship between personality and emotion is “personality is to emotion as the climate is to weather” [25]. Therefore, theoretically, once the robot is able to understand the user’s personality traits, it would be very helpful for the robot in predicting the user’s emotional fluctuations.

Fig. 1 illustrates our final goal by integrating the proposed model of inferring human personality traits with the robot’s speech and behavioral generation module. The robot will be able to adjust its voice volume, speed, and body movements to improve the quality of human-robot interaction.

Fig. 1. Integrating proposed model of inferring human personality traits into robot behavior generation.

B. Architecture for Inferring Personality Traits in Human-Robot Interaction

In the subsection above, the importance of personality traits in human-human and human-robot social interactions was discussed. Here we propose our computational framework for enabling the robot to recognize the user’s personality traits based on their visual and vocal nonverbal behavior cues. This paper builds upon our preliminary work in [26].

In this study, the Pepper robot [27], equipped with two 2D cameras and four microphones, interacts with each participant. In previous research on the Emergent LEAder corpus (ELEA) [28], the camera was set in the middle of the desk to capture each participant’s facial expression and upper body movement when recording the video of a group meeting. Reference [23] also used an external camera to record the individual and interpersonal activities for analyzing the engagement of human-robot interaction. However, we do not use any external devices, for two reasons. First, we attempt to make sure that all audio-visual features are captured from the first-person perspective, ensuring that the view from the robot is closely similar to that from the human. Second, if the position and pose of an external camera changed for some reason, it would yield a significant difference in the visual features. Thus, we use Pepper’s forehead camera only.

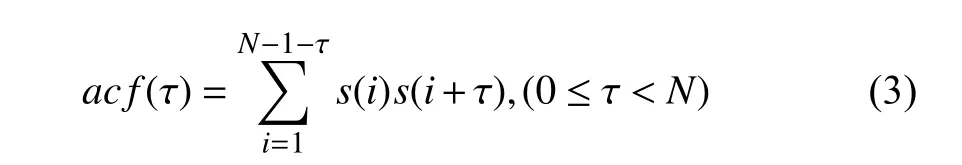

Fig. 2 briefly illustrates our experimental protocol, which consists of nonverbal feature extraction and machine learning model training. Part a): all participants, recruited from the Japan Advanced Institute of Science and Technology, were asked to communicate with the Pepper robot. The robot keeps asking questions related to the participant, and each participant answers the questions. The participants are supposed to reply to the robot’s questions with their habitual behavior. Before or after interacting with the robot, each participant was asked to fill out a questionnaire to evaluate their personality traits. The personality trait scores were binarized to perform the classification task. Part b): we extracted the participants’ audio-visual features, which include the head motion, gaze, body motion energy, voice pitch, voice energy, and MFCC, during the interaction. Part c): the nonverbal features and personality trait labels are used to train and test our machine learning models.

Fig. 2. Experimental protocol for inferring human personality traits.

To the best of our knowledge, this is the first work that shows how to extract the user’s visual features from the robot’s first-person perspective, as well as the prosodic features, in order to infer the user’s personality traits during human-robot interaction. In [29], the nonverbal cues were extracted from the participant’s first-person perspective and used to analyze the relationship between the participant and robot personalities. With our framework, the robot is endowed with the capability of understanding human personalities during face-to-face interaction. Because it does not use any external devices, the proposed system can be conveniently applied to any type of environment.

The rest of this paper is organized as follows. Section II explains the personality traits model used, corresponding to Part a). Section III explains why we used nonverbal features and which nonverbal features were used for recognizing the participants’ personality traits, corresponding to Part b). Section IV presents the technical details of our experiments. Section V is devoted to experimental results and analysis, corresponding to Part c). Section VI draws conclusions.

II. Personality Traits

Based on the definition in [30], [31], personality traits have a strong long-term effect in generating the human’s habitual behavior: “the pattern of collective character, behavioral, temperamental, emotional, and mental traits of an individual that has consistency over time and situations”.

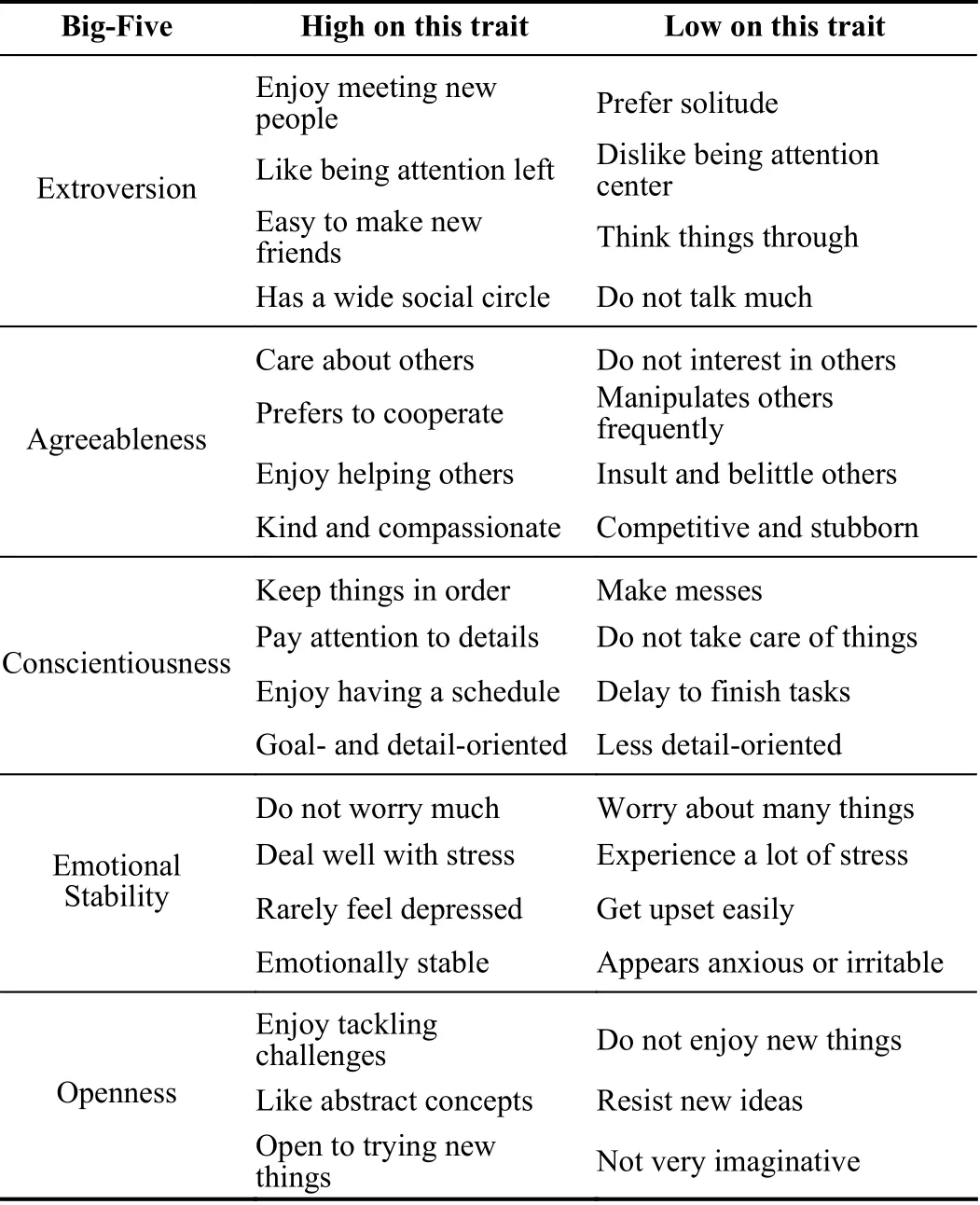

Existing studies on personality traits have proposed many different personality models, including Myers-Briggs (extroversion-introversion, judging-perceiving, thinking-feeling, and sensation-intuition) [32]; the Eysenck model of personality (PEN) (psychoticism, extroversion, and neuroticism) [33]; and the Big-Five personality model (extroversion, openness, emotional stability, conscientiousness, agreeableness) [34], [35]. The Big-Five personality traits are a very common descriptor of human personality in psychology. In [36], [37], the authors investigated the relationship between the Big-Five personality traits model and nonverbal behaviors. We also use the Big-Five personality traits model in this study. Table I gives intuitive expressions for the Big-Five personality traits.

TABLE I Big-Five Personality Traits

As personality traits have become more popular in the last few decades [38], various questionnaires have been proposed in the literature for the assessment of the Big-Five personality traits. The most popular questionnaire format is the Likert scale. Examples include the ten-item personality inventory (TIPI), which has 10 items, each on a 7-point scale [39]; the revised NEO personality inventory (NEO PI-R), which contains 240 items [40]; the NEO five-factor inventory (NEO-FFI), a shortened version of NEO PI-R, which comprises 60 items [41]; and the international personality item pool (IPIP) Big-Five Factor Markers, which has been simplified to 50 questions [42]. We used the IPIP questionnaire in this paper, and all participants were asked to fill out the questionnaire to evaluate their Big-Five personality traits. The IPIP questionnaire is relatively easy to answer and does not take much time to complete.

Specifically, the participants are asked to rate the extent to which they agree or disagree with the questionnaire statements on a five-point scale. The 50 questions are divided into ten questions for each of the Big-Five traits, and they include both reverse-scored and positive-scored items. For the reverse-scored items, strongly disagree equals 5 points, neutral equals 3 points, and strongly agree equals 1 point; for the positive-scored items, strongly disagree equals 1 point, neutral equals 3 points, and strongly agree equals 5 points. After the participants rate themselves on each question, each personality trait is represented by the mean score of its 10 questions. We did not use the 1–5 scale directly to represent the participant’s personality traits. Instead, the personality traits are binarized using the mean score of all participants as a cutoff point to indicate whether the participant has a high or low level of each of the Big-Five traits. For instance, if a participant’s extroversion was rated 2, which is less than the average value of 2.8, this participant is regarded as an introvert and his/her trait score is reassigned to 0. We then used the binary labels to train our machine learning models and evaluate the classification performance accordingly.
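The scoring and binarization procedure described above can be sketched in a few lines of Python. This is only an illustration: the function names and the example scores are hypothetical, and treating a score exactly equal to the cutoff as "low" is an assumption, since the text does not say how ties are handled.

```python
from statistics import mean

def score_item(response, reverse):
    """Map a 1-5 Likert response to points; reverse-scored items are flipped."""
    if not 1 <= response <= 5:
        raise ValueError("response must be in 1..5")
    return 6 - response if reverse else response

def trait_score(responses, reverse_flags):
    """Mean score over the items belonging to one trait (ten items in IPIP-50)."""
    return mean(score_item(r, rev) for r, rev in zip(responses, reverse_flags))

def binarize(scores):
    """Label each participant 1 (high) or 0 (low), using the group mean as cutoff.

    Assumption: a score equal to the cutoff is labeled 0.
    """
    cutoff = mean(scores)
    return [1 if s > cutoff else 0 for s in scores]

# Hypothetical extroversion scores for five participants (group mean is 2.8).
extroversion = [2.0, 3.4, 3.0, 3.6, 2.0]
labels = binarize(extroversion)
```

With these made-up scores, a participant rated 2.0 falls below the group mean and is labeled 0, which mirrors the introvert example in the text.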

III. Feature Representation

It is known that personality traits encompass the human’s feelings, thoughts, and behaviors. The question to be investigated then arises: “how can human personality traits be inferred from verbal and nonverbal behaviors?”

A. Related Work on Verbal and Nonverbal Behaviors

The influence of personality traits on linguistic speech production has been addressed in previous works [43], [44]. The user’s daily habits were investigated to ascertain whether they are related to the user’s personality traits. Changes in facial expression were also used to infer personality traits, as proposed in [45]. In [46], the participants were asked to use the electronically activated recorder (EAR) to record their daily activities, which included locations, moods, language, and many others, to verify the manifestations of personality. Moreover, the authors of [47] investigated how written language reflects human personality style based on daily diaries, assignments, and journal abstracts. More specific details were presented in [48]. In that study, two corpora containing 2479 essays and 15 269 utterances, more than a million words, were categorized and used to analyze the relation to each participant’s Big-Five personality traits. Although the participant’s verbal information can be used to analyze their personality traits based on Pennebaker and King’s work [47], categorizing so many words would be an arduous task. In [49], the authors pointed out that language differences could influence the annotator’s impressions of the participants. Therefore, they asked three annotators to watch the video recorded in the meeting without audio and to annotate the personality traits of each participant. Notably, the issue of conversational error was addressed in [50], where errors caused a loss of trust in the robot during human-robot interaction. In light of the aforementioned studies, the participants in our study were free to use any language to talk with the robot, and we consider nonverbal behavior to be a better choice for this study.

On the other hand, it is desirable that the robot can change its distance from the user depending on a variety of social factors, which was achieved in [51] by leveraging a reinforcement learning technique. In [52], the author also used the changes in the distance between the robot and the participant as one of the features for predicting the participant’s extroversion trait. Similarly, the authors of [53] proposed a model for automatic assessment of human personality traits using body postures, head pose, body movements, proximity information, and facial expressions. The results in [54] also revealed that extroverts accept people coming closer than introverts do. However, the proxemics feature was not considered in our study, as the human-robot distance remains unchanged in our communicative interaction settings.

In the related research on inferring human personality traits, a variety of multimodal features have been proposed. In [36], [55], the authors used vocal features to infer personality traits. In [37], vocal and simple visual features were used to recognize the personality traits based on the MS-2 corpus (Mission Survival 2). References [49] and [56] detailed how to infer personality traits in a group meeting. They used the ELEA corpus, and the participants’ personality traits were annotated by external observers. Meanwhile, the participants’ vocal and visual features, such as voice pitch, voice energy, head movement, body movement, and attention, were extracted from audio and videos. Similar features were used in [57] to infer personality traits from YouTube video blogs. Convolutional neural networks were also applied to predict human personality traits based on an enormous database that contains video, audio, and text information from YouTube vlogs [58], [59]. In [60], [61], the authors explained a nonverbal feature extraction approach for identifying emergent leaders. The nonverbal features used to infer the emergent leaders included prosodic speech features (pitch and energy), visual features (head activity and body activity), and motion template-based features. In [62], [63], the audio and visual nonverbal features frequently used in existing research were summarized for predicting the emergent leader or personality traits. Similarly, a method was proposed in [64] for identifying the human’s confidence during human-robot interaction using sound pressure, voice pitch, and head movement.

In the previous studies [37], [49], [60], [62], the authors used statistical features and activity length features. Since personality traits are long-term characteristics that affect people’s behaviors, they believed that statistical features can represent the participants’ behaviors well. Similar nonverbal features were used in our study. However, we believe that the state transitions of the nonverbal behaviors or features are also important for understanding the human’s personality traits. The study in [56] proposed co-occurrent features to indicate movements of other participants that happened at the same time. Hence, in our study, the raw form and time-series based features of the visual and vocal nonverbal behavior were used to train the machine learning models.

B. Nonverbal Feature Representation

Taking into account the findings of the aforementioned studies, we intend to extract similar features from the participant’s nonverbal behaviors. Nonverbal behaviors include vocal and visual behaviors. Table II shows the three visual features, including the participant’s head motion, gaze score, and upper body motion energy, as well as the three vocal features, including the voice pitch, voice energy, and mel-frequency cepstral coefficient (MFCC).

TABLE II Nonverbal Feature Representation

In our basic human-robot interaction scenario, it is assumed that the participant talks to the robot using gestures the way a person talks to another person. Therefore, the participant’s visual features can be extracted using the robot’s on-board camera while the participant or the robot talks. Note that, in Table II, the visual features HM2, GS2, and ME2 are extracted when the participant listens to the robot asking four simple questions. The total time duration was too short to capture sufficient data to train our machine learning models. Therefore, we did not use these three features in our study.

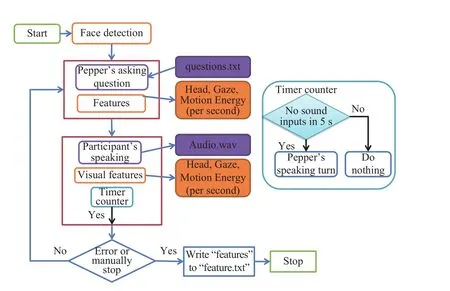

1) Head Motion: An approach to analyzing head activity was proposed in [60]. The authors applied optical flow on the detected face area to decide whether the head was moving or not. Based on the head activity states, they were able to understand when and for how long the head moved. We followed the method proposed in [65]. First, every frame captured by Pepper’s forehead camera was scanned to extract sub-windows. The authors in [65] trained 60 detectors based on the left-right rotation-out-of-plane and rotation-in-plane angles, and each detector contains many layers that are able to estimate the head pose and detect a human face. Each sub-window was used as an input to each detector, which was trained on a set of faces with a specific angle. The output provides the 3D head pose (pitch, yaw, and roll), as shown in the left image of Fig. 3. In this study, the pitch angle covers [–90°, 90°], the roll angle covers [–45°, 45°], and the yaw angle covers [–20°, 20°]. The Manhattan distance between every two adjacent head poses was then used to represent the participant’s head motion. Let $\alpha$, $\beta$, and $\gamma$ denote the pitch, yaw, and roll angles, respectively. Then the head motion (HM1) can be calculated by the following equation:

$$HM1_i = |\alpha_i - \alpha_{i+1}| + |\beta_i - \beta_{i+1}| + |\gamma_i - \gamma_{i+1}| \tag{1}$$

where $i$ and $i+1$ are two consecutive frames at a 1 s time interval.
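As a small illustration of the head motion feature, the Manhattan distance between consecutive head poses can be computed as follows. The function name and the sample poses are hypothetical, and the poses are assumed to be already sampled once per second as (pitch, yaw, roll) tuples in degrees.

```python
def head_motion(angles):
    """Manhattan distance between each pair of consecutive head poses.

    angles: list of (pitch, yaw, roll) tuples in degrees, one per second.
    Returns a list with len(angles) - 1 entries.
    """
    return [
        sum(abs(b - a) for a, b in zip(prev, curr))
        for prev, curr in zip(angles, angles[1:])
    ]

# Hypothetical head poses at three consecutive seconds.
poses = [(0.0, 0.0, 0.0), (5.0, -3.0, 1.0), (5.0, 2.0, 1.0)]
hm1 = head_motion(poses)  # one value per pair of adjacent poses
```

A still head yields zeros, so the later binarization of the feature (value larger than 0 or not) simply marks the seconds in which the head moved.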

Fig. 3. Visual features (the left image illustrates the 3D head angles, and the right image shows the different pixels obtained by overlapping two consecutive frames).

2) Gaze Score: In [66], the influence of gaze in small group human interaction was investigated. Previous studies used the visual focus of attention (VFOA) to represent the participant’s gaze direction [61] in group discussion. However, a high-resolution image is required for the analysis of the gaze direction, which tremendously increases the computational cost. In our experiment, the participant sits at a table in front of the robot positioned 1.5 m to 1.7 m away. In practice, the calculation of gaze direction might not be feasible given the image resolution and the distance, since the eye occupies only a few pixels in the image. As the head pose and gaze direction are highly related to each other [67], an efficient way of calculating the gaze direction based on the head orientation was proposed in [68]. Therefore, we used the head direction, given by the head yaw and pitch angles, to estimate the gaze direction. In the real experimental environment, we found that the face was hardly detected when the facial plane exceeds $\pm 20^\circ$. When the participant faces the robot’s forehead camera, the tilt/pan angle is $0^\circ$. Therefore, we measure the Euclidean distance from $0^\circ$ to the head yaw and pitch angles. Then, the full range (distance) of tilt/pan angles [0°, 20°] is normalized to 0 to 1. Finally, the normalized score between 0 and 1 is used as the gaze score, which indicates the confidence that the participant is looking at the robot. If we denote by $\alpha$ and $\beta$ the head pitch and yaw angles, respectively, the gaze score of frame $i$ can be calculated by the following equation:

$$GS1_i = 1 - \frac{\sqrt{\alpha_i^2 + \beta_i^2}}{\sqrt{\alpha_{\max}^2 + \beta_{\max}^2}} \tag{2}$$

where $\alpha_{\max}$ and $\beta_{\max}$ represent the maximum degree of the head pitch and yaw angle, respectively.
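The gaze score can be sketched as a normalized Euclidean distance of the head pitch and yaw from the frontal pose. The sketch below assumes that the score is one minus the normalized distance, so that 1 means the participant faces the camera directly, and that both maximum angles are 20°; the function name and defaults are hypothetical.

```python
import math

def gaze_score(pitch, yaw, pitch_max=20.0, yaw_max=20.0):
    """Confidence in [0, 1] that the participant is looking at the robot.

    Assumption: score = 1 - (distance from the frontal pose) / (largest distance),
    clipped at 0 for poses beyond the assumed maximum angles.
    """
    dist = math.hypot(pitch, yaw)
    full = math.hypot(pitch_max, yaw_max)
    return max(0.0, 1.0 - dist / full)

frontal = gaze_score(0.0, 0.0)      # facing the camera
averted = gaze_score(20.0, 20.0)    # at the edge of the detectable range
partial = gaze_score(10.0, 0.0)     # somewhere in between
```

Clipping at 0 is a defensive choice for angles outside the assumed range; it is not taken from the text.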

3) Motion Energy: Motion energy images [69], [70] were used in previous studies to describe body motion. The basic idea is to count the number of pixels that differ between every two consecutive frames. We applied the same idea to calculate the ratio of differing pixels between every two frames. The right image of Fig. 3 shows an example of the differing pixels between two frames. This method is simple and effective. However, it requires the image to have a stationary background and a constant distance between the robot and each participant. Otherwise, changes in the background will be perceived as the participant’s body movement, and the number of differing pixels will increase if the participant sits closer to the robot. All three visual features were calculated and normalized over the whole database, denoted by HM1, GS1, and ME1. The binary features mentioned in Table II are the binarized HM1, GS1, and ME1, which were obtained simply by checking whether the value is larger than 0 or not.
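A minimal sketch of the motion energy feature is given below, assuming grayscale frames stored as NumPy arrays of equal shape. The `threshold` parameter is a hypothetical addition for suppressing sensor noise; with the default of 0, any changed pixel counts, which matches the plain description in the text.

```python
import numpy as np

def motion_energy(prev_frame, curr_frame, threshold=0):
    """Ratio of pixels whose grayscale value differs between two frames."""
    prev = np.asarray(prev_frame, dtype=np.int16)  # avoid uint8 wrap-around
    curr = np.asarray(curr_frame, dtype=np.int16)
    if prev.shape != curr.shape:
        raise ValueError("frames must have the same shape")
    changed = np.abs(curr - prev) > threshold
    return float(changed.mean())

# Two tiny synthetic 4x4 frames: two of the sixteen pixels change.
frame_a = np.zeros((4, 4), dtype=np.uint8)
frame_b = frame_a.copy()
frame_b[0, :2] = 255
me1 = motion_energy(frame_a, frame_b)
me1_binary = int(me1 > 0)  # binarized feature: moved or not
```

Casting to a signed type before subtracting matters in practice, because subtracting `uint8` images directly wraps around instead of producing negative differences.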

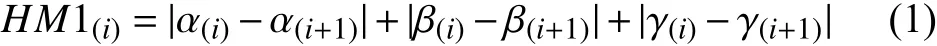

4) Voice Pitch and Energy: Vocal behavior is another important feature when humans express themselves. Pitch and energy are two well-known vocal features that are very commonly used in emotion recognition. Pitch, which is generated by the vibration of the vocal cords, is perceived as the fundamental frequency of the voice. There are many different methods for tracking the voice pitch. For instance, the average magnitude difference function (AMDF) [71], simple inverse filter tracking (SIFT) [72], and the auto-correlation function (ACF) [73] are time-domain approaches, while the harmonic product spectrum (HPS) [74] is a frequency-domain approach. We used the auto-correlation function given in (3) to calculate the pitch.

In (5), T is the time duration of the audio signal in one frame. Since the frame size used in this study is 800, the time duration T is 50 milliseconds.
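For readers who want to experiment, the following is a generic auto-correlation pitch tracker for a single frame. It does not reproduce equations (3) to (5); it is a common textbook formulation. The 16 kHz sampling rate is inferred from the stated frame size of 800 samples lasting 50 ms, and the 75 to 400 Hz search range is an assumption introduced only for this sketch.

```python
import numpy as np

def acf_pitch(frame, sample_rate=16000, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) of one frame via auto-correlation."""
    x = np.asarray(frame, dtype=float)
    x = x - x.mean()                                    # remove DC offset
    acf = np.correlate(x, x, mode="full")[len(x) - 1:]  # lags 0 .. N-1
    lag_min = int(sample_rate / fmax)                   # shortest period searched
    lag_max = min(int(sample_rate / fmin), len(x) - 1)  # longest period searched
    lag = lag_min + int(np.argmax(acf[lag_min:lag_max + 1]))
    return sample_rate / lag

# Synthetic 50 ms frame (800 samples at 16 kHz) containing a 200 Hz tone.
t = np.arange(800) / 16000.0
frame = np.sin(2.0 * np.pi * 200.0 * t)
f0 = acf_pitch(frame)
```

Restricting the lag search to a plausible pitch range avoids picking the trivial maximum at lag 0 and reduces octave errors.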

Now the average of the short-term energy can be calculated by the following equation:

5) Mel-Frequency Cepstral Coefficient: MFCC [75] is a vocal feature well known for its good performance in speech recognition [76]. The procedure for calculating MFCC is closely related to the principle of vocalization and is also able to discard redundant information that the voice carries, e.g., background noise, emotion, and many others. We intend to test this pure and essential feature, which reflects how the sound was generated. We calculated the MFCC based on the following steps.

First, we calculate the power spectrum by computing the fast Fourier transform (FFT) of each frame. The motivating idea comes from how our brain understands sound. The cochlea in the ear converts sound waves, which cause vibrations at different spots, into electrical impulses that inform the brain that certain frequencies are present. Usually, only 256 points are kept from the 512 points of the FFT.

Second, 20–40 (usually 26) triangular filters of the mel-spaced filterbank were applied to the power spectrum. This step simulates how the cochlea perceives sound frequencies. The human ear is less sensitive to closely spaced frequencies, and discrimination becomes even harder as the frequency increases. This is why the triangular filters become wider as the frequency increases.

Third, the logarithm was applied to the 26 filtered energies. This is also motivated by human hearing: we need to put in 8 times more energy to double the loudness of a sound. Therefore, we used the logarithm to compress the features much closer to what humans actually hear.

Finally, we compute the discrete cosine transform (DCT) of the logarithmic energies. In the previous step, the filterbanks partially overlap, which yields highly correlated filtered energies. The DCT was used to decorrelate the energies. Only 13 coefficients were kept as the final MFCC.
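The four steps above can be sketched with NumPy as follows. This is an illustrative implementation under several assumptions that are not stated in the text: a 16 kHz sampling rate, the common 2595·log10(1 + f/700) mel formula, an unnormalized DCT-II, a 400-sample input frame, and no windowing or pre-emphasis. All function names are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters equally spaced on the mel scale, shape (n_filters, n_fft // 2)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):       # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / (center - left)
        for k in range(center, right):      # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / (right - center)
    return fbank

def dct_ii(x):
    """Unnormalized DCT-II, used here to decorrelate the log filterbank energies."""
    n = len(x)
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    return np.cos(np.pi * k * (2 * i + 1) / (2 * n)) @ x

def mfcc_frame(frame, sample_rate=16000, n_fft=512, n_filters=26, n_coeffs=13):
    # Step 1: power spectrum, keeping 256 of the 512 FFT points.
    spectrum = np.fft.rfft(frame, n_fft)[: n_fft // 2]
    power = (np.abs(spectrum) ** 2) / n_fft
    # Step 2: mel-spaced triangular filterbank energies.
    energies = mel_filterbank(n_filters, n_fft, sample_rate) @ power
    # Step 3: logarithm (small constant avoids log(0)).
    log_energies = np.log(energies + 1e-10)
    # Step 4: DCT, keeping the first 13 coefficients.
    return dct_ii(log_energies)[:n_coeffs]

# Synthetic 25 ms frame (400 samples, zero-padded to 512 by the FFT).
t = np.arange(400) / 16000.0
coeffs = mfcc_frame(np.sin(2.0 * np.pi * 300.0 * t))
```

Production pipelines usually add pre-emphasis, a window function, and liftering; they are omitted here to keep the sketch aligned with the four steps listed in the text.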

IV. Experimental Design

The experiment was designed around a scenario in which the robot asks questions when it meets the participant. In the following, we introduce the experimental environment and the machine learning methods used.

A. Experimental Setup

The relationship between people’s professions and personality traits was investigated in [19]. In our study, all the participants were recruited from the Japan Advanced Institute of Science and Technology. Therefore, the relationship between professions and personality traits was not considered. On the other hand, the interactions between the participants and the robot were assumed to be casual everyday conversations. Specifically, each participant sits at a table with his/her forearms resting on the tabletop and talks with the robot. The participants did not do any strenuous exercise before they were invited to the experiment.

The experimental setup is shown in Fig. 4. Each participant was asked to sit at a table in front of the robot, which stood 1.5 m to 1.7 m away, in a separate room. Only the upper part of the participant’s body was observable from the robot’s on-board camera, which is used to extract the visual features. The robot keeps asking questions one after another. The participant was asked to respond to each question using his/her habitual gestures. As mentioned in Section III, the participants were free to use any language (such as English, Italian, Chinese, and Vietnamese) to communicate with the robot.

Fig. 4. Details of experimental setup.

We recruited 15 participants for the study; however, 3 of the participants were too nervous during the experiment and looked at the experimenter frequently. They were therefore excluded, and our database contains the data of 12 participants with a total duration of 2000 s. One convenient way to infer personality traits is to use a fixed time length: once the robot has enough data, it would be able to infer the personality traits. Therefore, we divided the data into 30-s long clips. A 30-s clip may contain data from different sentences. When we divided the data, each clip overlapped with the previous one, which allowed us to generate more data.
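The clip generation can be pictured as a sliding window over the per-second feature sequence. In the sketch below, the 15 s step (50% overlap) is an assumption, since the amount of overlap is not stated; the function name and the sample data are hypothetical.

```python
def split_into_clips(features, clip_len=30, step=15):
    """Cut a per-second feature sequence into overlapping fixed-length clips.

    features: list with one entry per second.
    clip_len: clip length in seconds (30 s in the study).
    step:     shift between clip starts in seconds (assumed value).
    """
    if clip_len <= 0 or step <= 0:
        raise ValueError("clip_len and step must be positive")
    last_start = len(features) - clip_len
    return [features[s:s + clip_len] for s in range(0, last_start + 1, step)]

# Hypothetical 100 s recording with one feature value per second.
samples = list(range(100))
clips = split_into_clips(samples)
```

Because overlapping clips from the same participant share data, a careful evaluation would keep all clips of one participant on the same side of a train/test split.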

The flow chart in Fig. 5 shows the architecture for extracting features. The robot first detects whether there is a person to talk to. Then the robot sequentially selects a question from a file “questions.txt” and uses its speech synthesizer to start the conversation. Meanwhile, the robot also extracts the visual features every second. Even after the robot finishes asking its question, the vocal and visual feature extraction continues while the participant is responding to the question. The participants were instructed to stop talking for 5 s to let the robot know that it may ask the next question.
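The 5-s pause rule above can be illustrated with a simple check on per-second voice energy. This is only a sketch: the function name `answer_end_index`, the energy threshold of 0.01, and the use of per-second energy values as the silence cue are assumptions, not details taken from the robot's actual implementation.

```python
def answer_end_index(energy_per_second, silence_threshold=0.01, silence_secs=5):
    """Return the second at which the answer is considered finished.

    The answer ends at the first second of a run of `silence_secs`
    consecutive seconds whose energy is below `silence_threshold`.
    Returns None if no such run exists. The threshold is hypothetical.
    """
    quiet_run = 0
    for t, energy in enumerate(energy_per_second):
        if energy < silence_threshold:
            quiet_run += 1
            if quiet_run == silence_secs:
                return t - silence_secs + 1
        else:
            # Any voiced second resets the silence counter.
            quiet_run = 0
    return None


# Example: a short pause (2 s) is ignored, a 5-s pause ends the answer.
energy = [0.5, 0.4, 0.0, 0.0, 0.3, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]
end = answer_end_index(energy)
```

Requiring a full run of quiet seconds keeps brief hesitations inside an answer from triggering the next question.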

Fig. 5. The pipeline for feature extraction.

TABLE III Averaged Accuracies for Big Five Personality Traits (Ridge Regression Classifier)

TABLE IV Averaged Accuracies for Big Five Personality Traits (Linear SVM Classifier)

B. Classification Model

In [63] and [77], the authors summarized different methods used for the prediction of the leadership style, such as logistic regression [49], [56], [78], rule-based methods [79], the Gaussian mixture model [80], and the support vector machine [49], [56], [81]. Ridge regression and linear SVM were both used in [49], [56]. We opted to apply the same methods in our study to make a simple comparison. Cross-validation was used to find the optimal regression parameters. The following formulas were used to calculate the regression parameters:

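$$\beta = (X^{T}X + \lambda I)^{-1}X^{T}y$$

where $\beta$ is the vector of regression parameters, $X$ is the feature matrix, $I$ is an identity matrix, $y$ is the binarized label of the personality traits, and $\lambda$ is the ridge parameter calculated using the following equation: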

In (9), $i$ is an integer indicating that each regression model is executed 30 times to optimize the regression parameter. As we used the regression model to perform a classification task, we evaluated it with the accuracy rather than the mean squared error, as the accuracy gives more meaningful results.
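To make the use of ridge regression as a binary classifier concrete, the following dependency-free sketch computes the standard closed-form ridge solution and thresholds the regression output. Several points are assumptions for illustration: the function names, the 0.5 decision threshold, the bias column in the toy data, and the value `lam=0.1`. The mapping from $i$ to the ridge parameter in (9) is not assumed here, so the parameter is passed in directly.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, copied so the inputs are not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def ridge_fit(X, y, lam):
    """Closed-form ridge solution beta = (X^T X + lam * I)^-1 X^T y."""
    d = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(d)]
    return solve_linear(xtx, xty)


def ridge_classify(beta, x, threshold=0.5):
    """Binarize the regression output; the 0.5 cut-off is an assumption."""
    score = sum(b * v for b, v in zip(beta, x))
    return 1 if score >= threshold else 0


# Toy data: a bias column plus one feature, with binarized labels.
X = [[1.0, 0.1], [1.0, 0.2], [1.0, 0.8], [1.0, 0.9]]
y = [0, 0, 1, 1]
beta = ridge_fit(X, y, lam=0.1)          # lam value is hypothetical
preds = [ridge_classify(beta, x) for x in X]
beta_big = ridge_fit(X, y, lam=100.0)    # stronger shrinkage
```

Because the model is judged by whether the thresholded output matches the binary label, accuracy is the natural measure here, while a larger ridge parameter shrinks the coefficients toward zero.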

SVM performs linear or nonlinear classification by using different types of kernel functions. It requires a longer time to train an SVM classifier than a ridge regression model. From Table III, it can be noticed that the binary features did not show an advantage in ridge regression. Therefore, the binary features were discarded for the SVM. We then trained an SVM classifier with a linear kernel, with the penalty parameter of the error term chosen from [0.1, 0.4, 0.7, 1]. Each SVM classifier was therefore trained 4 times based on the formulation given in [82], which is shown in the following equation:
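For reference, the standard soft-margin formulation that a linear-kernel SVM with a penalty parameter conventionally solves can be written as follows. That [82] states it in exactly this form is an assumption, and the symbols $w$, $b$, $\xi_k$, $C$, and the index $k$ are introduced here only for illustration, with labels $y_k \in \{-1, +1\}$ and feature vectors $x_k$.

$$\min_{w,\,b,\,\xi}\ \frac{1}{2}w^{T}w + C\sum_{k=1}^{n}\xi_k \quad \text{s.t.}\quad y_k\left(w^{T}x_k + b\right) \ge 1 - \xi_k,\quad \xi_k \ge 0,\quad k = 1,\dots,n$$

Under this reading, $C$ is the penalty parameter of the error term, so the four candidate values trade margin width against training errors to different degrees.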

The leave-one-out method was used to evaluate the performance of ridge regression and linear SVM. The results of the linear SVM are presented in Table IV.
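The leave-one-out procedure can be sketched generically as below. This is an illustrative sketch only: the helper names are hypothetical, the nearest-class-mean classifier is a stand-in for the ridge and SVM models, and holding out one sample at a time (rather than, say, one participant) is an assumption.

```python
def leave_one_out_accuracy(X, y, fit, predict):
    """Train on all samples but one, test on the held-out one, and repeat."""
    correct = 0
    for i in range(len(X)):
        train_X = X[:i] + X[i + 1:]
        train_y = y[:i] + y[i + 1:]
        model = fit(train_X, train_y)
        if predict(model, X[i]) == y[i]:
            correct += 1
    return correct / len(X)


def fit_nearest_mean(X, y):
    """Stand-in classifier: store the mean of the first feature per class."""
    means = {}
    for label in set(y):
        pts = [x[0] for x, t in zip(X, y) if t == label]
        means[label] = sum(pts) / len(pts)
    return means


def predict_nearest_mean(means, x):
    """Predict the class whose stored mean is closest to the sample."""
    return min(means, key=lambda label: abs(x[0] - means[label]))


# Toy one-feature data with binarized trait labels.
X = [[0.1], [0.2], [0.3], [0.7], [0.8], [0.9]]
y = [0, 0, 0, 1, 1, 1]
acc = leave_one_out_accuracy(X, y, fit_nearest_mean, predict_nearest_mean)
```

Passing `fit` and `predict` as arguments lets the same loop evaluate any classifier on identical splits.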

V. Experimental Results

A. Classification Results

B. Regression

For the ridge regression model, we used the average personality trait scores that were calculated from the questionnaire, ranging from 1 to 5. For evaluating the regression model, we calculated the mean squared error (MSE) values and $R^2$, which is known as the coefficient of determination and is used to evaluate the goodness of fit of the regression model [49]. The maximum $R^2$ values of conscientiousness and openness are smaller than 0.1. Therefore, we only presented the $R^2$ values of extroversion, agreeableness, and emotional stability in Table V. We calculated $R^2$ based on

In Table V, the best classification results of the three personality traits were inferred by the features with the highest $R^2$ values, marked in bold.
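The two evaluation measures used in this subsection can be sketched as follows. The sketch assumes the conventional definition of the coefficient of determination, one minus the ratio of the residual sum of squares to the total sum of squares; the function names and the toy scores are hypothetical.

```python
def mse(y_true, y_pred):
    """Mean squared error between true and predicted trait scores."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Coefficient of determination, assuming R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Toy questionnaire scores on the 1-to-5 scale and hypothetical predictions.
y_true = [1.0, 2.0, 3.0, 4.0, 5.0]
y_pred = [1.5, 2.0, 3.0, 4.0, 4.5]
err = mse(y_true, y_pred)
fit_quality = r_squared(y_true, y_pred)
```

Under this definition, a model that always predicts the mean score has an $R^2$ of 0, so values below 0.1 indicate a fit barely better than that baseline.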

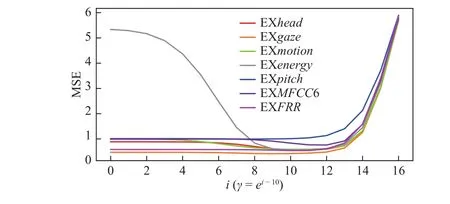

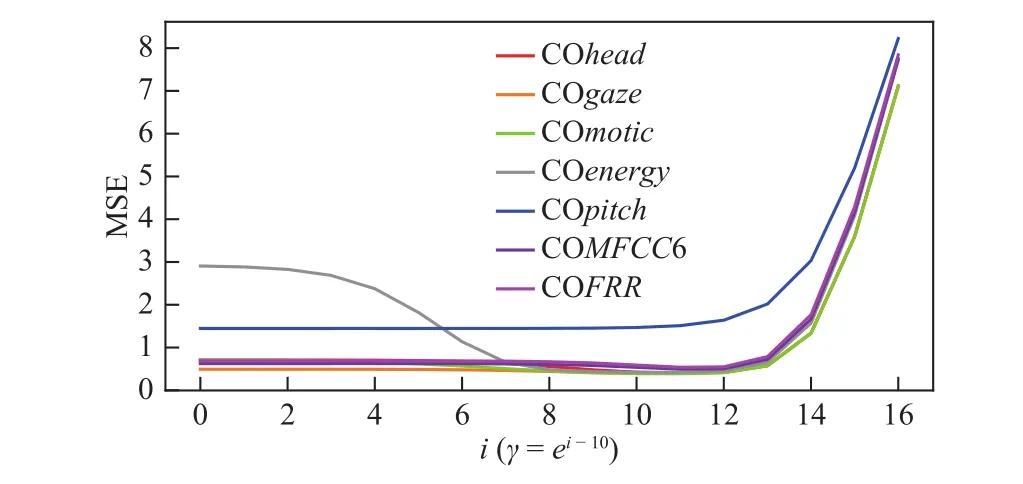

The MSE values are given in Figs. 6–10. In order to show the changes of the MSE values more clearly, we only show $i$ (the parameter for calculating the ridge parameter from (9)) from 0 to 16. The variables shown in Figs. 6–10 are named using two capital letters abbreviating the personality trait, followed by the feature name (refer to Table II; MFCC6 is the 6th MFCC vector). Figs. 6, 7, and 9 for extroversion, agreeableness, and emotional stability also show that the feature with the smallest MSE value achieved the best classification result. The differences for the other two traits, conscientiousness and openness, were not as obvious as for the aforementioned three traits.

VI. Conclusion and the Future Works

In this paper, we have proposed a new computational framework to enable a social robot to assess the personality traits of the user it is interacting with. First, the user’s nonverbal features were defined to be as easily obtainable as possible and were extracted from video and audio collected with the robot’s on-board camera and microphone. By doing so, we decreased the computational cost of the feature extraction stage, yet the features provided promising results in the estimation of the Big Five personality traits. Moreover, the proposed framework is generic and applicable to a wide range of off-the-shelf social robot platforms. To the best of our knowledge, this is the first study to show how the visual features can be extracted from the first-person perspective, which could be the reason that our system outperformed the previous studies. Notably, the MFCC feature was beneficial for assessing each of the Big Five personality traits. We also found that extroversion appeared to be the hardest trait to assess. One reason could be the current experimental settings, where the participants sat at a table with their forearms resting on the tabletop, which limited their body movements. Another reason could be the confusing relationship between the participants and the robot, which made the participants hesitate to express themselves as naturally as they do in everyday situations.

TABLE V The Maximum Values of $R^2$ of the Regression Results for Extroversion, Agreeableness, and Emotional Stability

Fig. 6. MSE values of the ridge regression for inferring extroversion.

Fig. 7. MSE values of the ridge regression for inferring agreeableness.

Fig. 8. MSE values of the ridge regression for inferring conscientiousness.

Fig. 9. MSE values of the ridge regression for inferring emotional stability.

Fig. 10. MSE values of the ridge regression for inferring openness.

Each feature showed its advantage in a different aspect. However, there is no standard way of drawing a conclusion that declares the user’s personality traits. Therefore, one direction for future work is to find an efficient way to fuse the multi-modal features. On the other hand, personality traits can be better understood through frequent and long-term interaction. This means that the system should be able to update its understanding of the user’s personality traits whenever the robot interacts with its user. It is also necessary to evaluate the engagement between the human and the robot, and the attitude of the human toward the robot, since the user’s behavior can become unpredictable when the user loses interest in interacting with the robot. Finally, in order to achieve the best possible classification performance, more sophisticated machine learning models need to be incorporated.
