Functional Neuroimaging in the New Era of Big Data

2019-02-08

Xing Li, Ning Guo, Qunzheng Li*

Massachusetts General Hospital and Harvard Medical School, Boston, MA 02114, USA

Abstract The field of functional neuroimaging has substantially advanced as a big data science in the past decade, thanks to international collaborative projects and community efforts. Here we conducted a literature review on functional neuroimaging, with a focus on three general challenges in big data tasks: data collection and sharing, data infrastructure construction, and data analysis methods. The review covers a wide range of literature types including perspectives, database descriptions, methodology developments, and technical details. We show how each of the challenges was proposed and addressed, and how these solutions formed the three core foundations for functional neuroimaging as a big data science and helped to build the current data-rich and data-driven community. Furthermore, based on our review of recent literature on the upcoming challenges and opportunities toward future scientific discoveries, we envision that the functional neuroimaging community needs to advance from the current foundations to better data integration infrastructure, methodology development toward improved learning capability, and a multi-discipline translational research framework for this new era of big data.

KEYWORDS Big data; Neuroimaging; Machine learning; Health informatics; fMRI

Introduction

Functional neuroimaging techniques, including functional magnetic resonance imaging (fMRI) acquired both during task (tfMRI) and at rest (rsfMRI), electroencephalography (EEG), magnetoencephalography (MEG), and other modalities, provide powerful tools to study the human brain and serve as methodological foundations for quantitative cognitive neuroscience [1]. Neuroimaging analysis of all these modalities has a long history of close ties with the data science, statistics, and medical imaging communities. As with data analysis tasks in other applications, earlier work in functional neuroimaging analysis usually involved a very limited number of subjects. For example, the resting-state network study in [2] inferred the networks from datasets of only 10 subjects in two separate scans, as did the study of functional connectivity in tfMRI in [3] (10 subjects in two experiments). The decomposition of multi-subject fMRI data in [4] used 5 datasets, each consisting of 3 subjects, as the model input. As more functional neuroimaging data have become available and, consequently, more data-driven analytical approaches have been adopted, the past few decades have seen growing awareness of the need for, and significance of, a big data perspective on neuroscience research. A series of publications discussed the new aims and characteristics of general and functional neuroimaging, both prospectively and retrospectively. Roberts et al. proposed that the brain functional fluctuation signal (both fMRI and EEG) follows a non-Gaussian distribution and is intrinsically “heavy-tailed” [5], which is a common characteristic (“endogeneity”) of big data analytics [6], thus precluding the use of simple statistical models to fully capture the data distribution. In the special issue “Dealing with Data” of Science, Akil et al. [7] addressed the need for a neuroinformatics system (as a “prelude to new discoveries”) to support analytics on neuroimaging big data and enable coordination across multicentered efforts under the Neuroscience Information Framework (NIF). They also proposed the concept of both macroscopic (e.g., MRI and behavioral data) and microscopic (function and structure of individual neurons) connectomes to achieve the goal of deciphering the “neural choreography” of the brain at multiple spatial and temporal scales. From the machine learning perspective under a big data premise, traditional methods for functional neuroimaging analysis, which have proven successful in multiple applications including functional network analysis and functional dynamics modeling [8], need adaptation [9] to handle the growing amount of data in terms of both computational capacity and speed [10] (e.g., via parallel computing [11]). Later, the perspective articles [12] and [13] proposed that neuroimaging was an emerging example of discovery-oriented science based on big data, and thus faced a broad range of challenges and risks commonly present in data science: challenges in handling the growing amount of data, challenges in sharing and archiving the data, and challenges in exploring and mining the data. These challenges and the corresponding solutions were also discussed in the perspective article on big data in microscale connectomics [14], which called for better computation, better data sharing, and better data management. In the following sections we review the community perspectives and efforts on addressing these three challenges.
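The heavy-tailed property attributed to brain fluctuation signals above can be made concrete with a simple diagnostic. The following is a minimal sketch, assuming synthetic signals rather than real fMRI or EEG data: it computes the sample excess kurtosis, which is close to zero for Gaussian data and positive for heavy-tailed data. The function name and the choice of a Laplace distribution as the heavy-tailed example are illustrative assumptions, not the analysis used in [5].

```python
import random
import statistics

def excess_kurtosis(samples):
    """Sample excess kurtosis: about 0 for Gaussian data, positive for heavy tails."""
    mean = statistics.fmean(samples)
    centered = [x - mean for x in samples]
    m2 = sum(c ** 2 for c in centered) / len(samples)  # second central moment
    m4 = sum(c ** 4 for c in centered) / len(samples)  # fourth central moment
    return m4 / (m2 ** 2) - 3.0

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n = 20000

# Synthetic Gaussian signal (theoretical excess kurtosis 0)
gaussian = [rng.gauss(0.0, 1.0) for _ in range(n)]

# Synthetic heavy-tailed signal: the difference of two exponentials is
# Laplace-distributed (theoretical excess kurtosis 3)
laplace = [rng.expovariate(1.0) - rng.expovariate(1.0) for _ in range(n)]

print("Gaussian excess kurtosis:", excess_kurtosis(gaussian))
print("Laplace excess kurtosis:", excess_kurtosis(laplace))
```

A clearly positive value for a measured signal would suggest that a Gaussian model underestimates the frequency of large fluctuations, which is the sense in which simple statistical models fall short.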

Better data availability

In the past decades we have seen rapid growth in both the quantity and quality of neuroimaging data on various scales, thanks to a series of collaborative brain science projects that collected huge amounts of functional neuroimaging data [15] (see also [16] for an extended review of neuroimaging projects). These include the European Human Brain Project (reconstructing the brain’s multi-scale organization) [17], the U.S. Brain Activity Map (establishing the functional connectome of the entire brain), the Allen Institute for Brain Science (developing cellular, gene, and structural atlases of the brain), and the U.S. Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative (investigating the interaction among individual cells and complex neural circuits in the brain) [18]. As the data volume and the number of investigation sites grow, social and logistical challenges in data sharing arise [19]. As pointed out in [20] by analogy to the Human Genome Project, although the increasing quantity of neuroimaging data can help us better model brain function and structure, the difficulties in data sharing, including the issues mentioned above as well as ethical concerns, motivations, and cultural shifts [21], can be more complex than expected. To address these challenges, a series of data sharing projects were established over the past decades, which benefited the community by enhancing reproducibility, improving research value, and reducing research cost [22]. One of the data collection and neuroinformatics projects mentioned in [7], the ongoing Human Connectome Project (HCP) [23], collected neuroimaging (functional and structural MRI, EEG, and MEG) and behavioral data from 1200 subjects, with the data freely available to the community. The milestone paper initiating the 1000 Functional Connectomes Project (1000FCP) [24] demonstrated the feasibility of collecting imaging data from a large number of subjects (1414 volunteers at 35 international centers) and analyzing them to establish a universal functional architecture and to identify putative boundaries between functional networks in the brain. The subsequent project, the International Neuroimaging Data-sharing Initiative (FCP/INDI) [25], provided open access to imaging datasets of thousands of subjects from both clinical and non-clinical populations. The Laboratory of Neuro Imaging (LONI) pipeline not only provided data access to nearly 1000 subjects, including a large population with Alzheimer’s disease (AD) [26], but also established an integrated data center for big data mining and knowledge discovery [27]. The Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC) image repository [28] hosted 14 projects with neuroimaging data from 6845 subjects. Subsequent data collection projects resulted in a substantial number of publicly available neuroimaging databases, covering schizophrenia [29,30], attention deficit/hyperactivity disorder (ADHD) [31], autism spectrum disorder (ASD) [32,33], development [34], mild cognitive impairment (MCI), AD [35], and aging [36,37], as well as healthy controls for various research purposes [38-42]. The ongoing UK Biobank study [43] aims to acquire multi-modal imaging data from 100,000 participants in various age groups, with data from 5000 participants currently released. A summary of current prominent large databases that are publicly available is listed in Table 1. While most of the databases feature rsfMRI data (mainly for the purpose of connectivity analysis), some of them also include tfMRI scans with task designs for specific purposes [44] (e.g., memory and motor control). In addition to these large data sharing efforts, which usually consist of healthy individuals and people with common diseases, more heterogeneous small datasets produced by individual groups are equally important to the community [45].

It should also be noted that along with the growing size (i.e., more subjects) of new functional neuroimaging databases, there have also been community efforts to improve the quality of the images acquired, which include but are not limited to: (1) higher image resolution (both spatial and temporal) to support more precise localization and temporal characterization of brain function; (2) more imaging modalities; (3) more imaging sessions; and (4) specific target populations and/or specific cognitive processes to identify new functional biomarkers [46]. These efforts are highly valuable to the community, especially in the emerging field of precision psychiatry [47].

Table 1 List of current publicly available functional neuroimaging databases

Better informatics infrastructure

Informatics infrastructure is a fundamental element supporting large-scale data sharing and analytical work on neuroimaging big data. It is expected to enhance “data collection, analysis, integration, interpretation, modeling, and dissemination” as well as to enable easier and more secure data sharing and collaboration [48]. Further requirements of the informatics infrastructure include management facilities for better long-term data integrity, distributed computing and storage, and services for statistical computation, visualization, and interaction with the neuroimaging data [49]. The main archive and dissemination platform of HCP [23], the ConnectomeDB database [50], implements extensive automated validation for data quality control (QC), complemented by manual examination of the data following standard operating procedures (SOPs), and allows multiple approaches for access (browser-based, hard drive, and Amazon S3). Both ConnectomeDB and NITRC utilize the infrastructure of the Extensible Neuroimaging Archive Toolkit (XNAT) software platform proposed in [51]. The platform provides data archiving, QC, and secure access for the neuroimaging data. It follows a three-tier architecture of (1) relational database, (2) Java-based middleware engine, and (3) browser-based user interface, implemented by XML schema. Multiple large-scale data sharing projects were developed on the XNAT platform. For example, the Northwestern University Neuroimaging Data Archive (NUNDA) [52] hosted 131 projects with 4783 subjects and 7972 imaging sessions. The Washington University Central Neuroimaging Data Archive (CNDA) [53] hosts more than 1000 research studies with 36,000 subjects and 60,000 imaging sessions. XNAT was later extended by the data dictionary service [54] to provide a flexible and multi-use data repository for the user. Various other neuroinformatics infrastructures were also developed to meet specific needs and scenarios, including the Data Processing & Analysis for Brain Imaging (DPABI) [55], which focused on test-retest reliability, QC, and streamlined preprocessing; the Holistic Atlases of Functional Networks and Interactomes (HAFNI)-enabled large-scale platform for neuroimaging informatics (HELPNI) [56], which specifically enables dictionary learning analysis on user-uploaded data based on XNAT; the work in [57], which can perform co-analysis between neuroimaging and genome-wide genotypes; and the BROCCOLI framework [58], which supports GPU acceleration.

Better analytical methods

Early recognition of the need for a data-driven (rather than model-driven), discovery-oriented neuroimaging analysis framework came from [59], where the author envisioned that functional neuroimaging studies should merge the traditional, widely used, hypothesis-based experiments with larger-scale discovery methods developed in data science fields. In the special issue “The Heavily Connected Brain” of Science [60], a similar argument was made about neuroimage analytics: the oversimplified assumptions of the traditional study protocols need to be replaced by unbiased, large-scale machine learning approaches to account for massive, dynamic interactions between brain regions. In the past decades, a large number of machine learning techniques have been developed in the functional neuroimaging analysis community [8], for various purposes including classification, recognition, signal processing, and pattern discovery [61]. Methods for classifying abnormal brain functional patterns were developed for schizophrenia [62], AD [63,64], MCI [65,66], social anxiety [67], depression [68,69], Parkinson’s disease (PD) [70], post-traumatic stress disorder (PTSD) [71], ADHD [72], as well as other diseases [73]. Machine learning-based recognition/decoding of brain responses is another important task, analyzing brain processing of language [74], visual stimuli with both low-level [75] and high-level context [76], emotion [77], and auditory stimuli [78]. These studies also constitute fundamentals for the development of brain-computer interfaces [79]. To discover brain functional patterns, especially their multi-level network structures, discovery models were applied to fully leverage the great detail and depth of the current neuroimaging data [80], while various approaches have been further developed for characterizing the dynamic network behavior of the brain [81,82].

Shifting toward the big data perspective of functional neuroimaging on the basis of both higher image quality [83] and a growing number of subjects [84], a series of modeling methods for large-scale functional neuroimaging data have been developed [85], mainly focused on unsupervised or semi-supervised matrix decomposition algorithms [86]. Earlier work in [87] proposed a data reduction scheme that performs principal component analysis (PCA) before independent component analysis (ICA) for group-wise functional network inference (GIFT toolbox). A similar scheme was later adopted by the extended GIFT [88], based on PCA compression and projection with various heuristics, such as storing only the lower triangular portion of the covariance matrix [89]. Further, Rachakonda et al. [90] utilized subsampled eigenvalue decomposition techniques to approximate the subspace of the target decomposition, which makes it possible to run group-wise PCA of 1600 subjects on a regular desktop computer. Other methods such as the group-wise PCA proposed in [91] can also achieve a near-constant memory requirement for large datasets (>10,000 subjects) by iterative optimization. Dictionary learning-based methodologies for functional network inference have achieved similar performance. The HAFNI system [92,93] found the simultaneous, spatially overlapping presence of both task-evoked and resting-state networks in the brain through sparse representation of whole-brain voxel-wise signals.
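One of the memory-saving heuristics mentioned above, storing only the lower triangular portion of a symmetric covariance matrix, can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the GIFT implementation: the function names and the toy data are hypothetical, and the accumulated quantity is the cross-product matrix, which equals the covariance up to scaling only when the data have been demeaned. Subjects are processed one at a time, so memory use depends on the number of features rather than the number of subjects.

```python
def packed_index(i, j):
    """Position of entry (i, j) of a symmetric matrix in packed lower-triangular storage."""
    if i < j:
        i, j = j, i  # symmetry: (i, j) and (j, i) share one stored value
    return i * (i + 1) // 2 + j

def accumulate_cross_products(packed, data):
    """Add data^T data for one subject (rows = time points, columns = features)."""
    n_features = len(data[0])
    for row in data:
        for i in range(n_features):
            for j in range(i + 1):  # lower triangle only, including the diagonal
                packed[packed_index(i, j)] += row[i] * row[j]

n_features = 3
# n(n+1)/2 = 6 stored values instead of the 9 of a full 3 x 3 matrix
packed = [0.0] * (n_features * (n_features + 1) // 2)

# Hypothetical toy "subjects"; each one can be discarded after it is processed
subjects = [
    [[1.0, 2.0, 0.0], [0.0, 1.0, 1.0]],
    [[2.0, 0.0, 1.0], [1.0, 1.0, 1.0]],
]
for data in subjects:
    accumulate_cross_products(packed, data)

def entry(i, j):
    """Read entry (i, j) of the full symmetric matrix from packed storage."""
    return packed[packed_index(i, j)]

print(packed)
```

For a matrix with n features, packed storage needs n(n+1)/2 values instead of n², roughly halving memory when n is large.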

As the growth of neuroimaging dataset size quickly outpaced the computational capacity of a single workstation, the need for parallel, computationally scalable frameworks became an important topic. The Thunder library [94] is an Apache Spark/MapReduce-based platform (https://spark.apache.org) for large-scale distributed computing on neuroimaging data. Also based on Spark, distributed Singular Value Decomposition (SVD) [95] and low-rank matrix decomposition [96,97] have been developed for large-scale functional neuroimaging data. Decentralized and cloud computing, which are closely related to parallel computing, have also been applied to neuroimaging analytics, including the decentralized data ICA [98] and the Canadian Brain Imaging Research Platform (CBRAIN) [99].
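The map-reduce pattern behind such distributed decompositions can be illustrated without a cluster. The sketch below is plain Python standing in for a Spark job, not the Thunder API or the method of [95]: each hypothetical worker computes the Gram matrix of its own chunk of rows (map), the partial results are summed (reduce), and the largest singular value is then obtained by power iteration on the small combined matrix. All function names and the toy data are assumptions made for illustration.

```python
from functools import reduce
import math

def partial_gram(chunk):
    """Map step: X_k^T X_k for the row-chunk held by one worker."""
    n = len(chunk[0])
    return [[sum(row[i] * row[j] for row in chunk) for j in range(n)] for i in range(n)]

def add_matrices(a, b):
    """Reduce step: element-wise sum of two partial Gram matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def top_singular_pair(gram, iterations=100):
    """Power iteration on X^T X; returns (largest singular value, right singular vector)."""
    n = len(gram)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        w = [sum(gram[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v^T G v gives the top eigenvalue of X^T X
    eigenvalue = sum(v[i] * sum(gram[i][j] * v[j] for j in range(n)) for i in range(n))
    return math.sqrt(eigenvalue), v

# Hypothetical tall matrix split into two row-chunks, as if stored on two workers
chunks = [
    [[3.0, 0.0], [0.0, 1.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
gram = reduce(add_matrices, map(partial_gram, chunks))
sigma, v = top_singular_pair(gram)
print(sigma, v)
```

The design choice worth noting is that only the small feature-by-feature matrices travel between workers, so the approach suits data with many rows (e.g., voxels or time points across subjects) and comparatively few columns.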

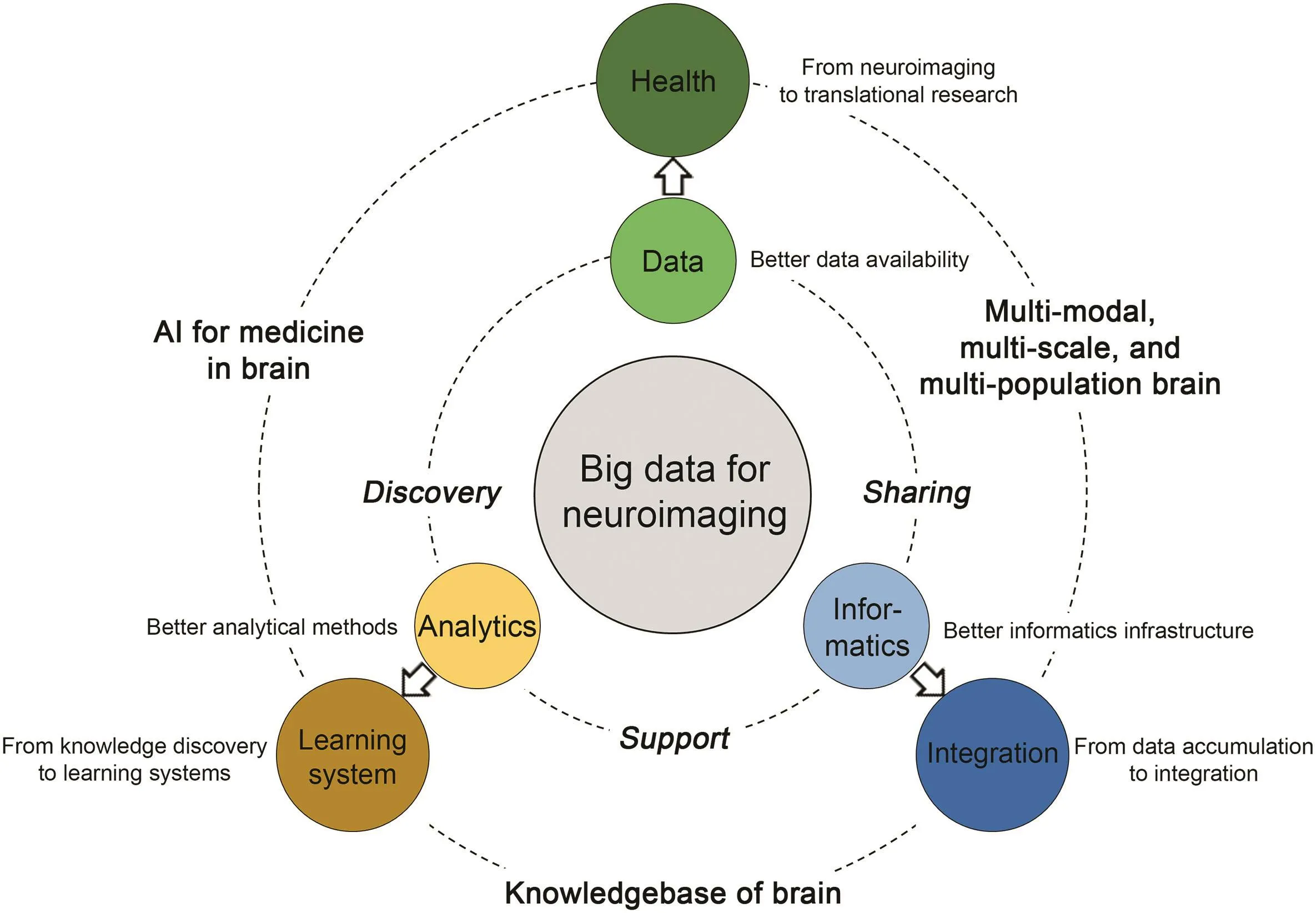

Led by international projects and collaborative efforts, functional neuroimaging has advanced as a big data science. At the same time, the past decade has also brought a tremendous paradigm shift in scientific research as a whole, toward data-intensive, discovery-oriented approaches, as envisioned in the book “The Fourth Paradigm” [100]. Data have been recognized not only as a valuable resource for research, but also as the research itself. Thus, we refer to the current stage of scientific research as a “new era” of big data. The field of functional neuroimaging, after years of effort in amassing data, building infrastructure, and developing methodologies, is beginning this transformation and entering the new paradigm as well. In the following sections of this paper we review the literature focusing on potential advancement of the three core foundations (and challenges) discussed above, as illustrated in Figure 1.

From neuroimaging to translational research

Figure 1 Illustration of current and perspective core foundations of functional neuroimaging

One major challenge of neuroimaging recently recognized by the field lies in its (relative) lack of impact on clinical practice and public health [101], caused by limitations in both data and analytical methods [102]. “Translational neuroimaging” has thus become an important goal for the field and has been addressed in multiple neuroscience research projects. For example, the China Brain Project specifically listed two translational aspects of brain science and neuroimaging in its framework: supporting better brain disease diagnosis and therapy, and developing brain-machine intelligence [16]. In the BRAIN Initiative, it was also proposed that a deeper understanding of the human brain will bring corresponding clinical benefits, although not necessarily immediately [18]. Previous studies on computational methods for functional neuroimaging data (such as those based on network characterization) have shown potential capability in early diagnosis [103], intervention [104], and drug discovery [105,106]. From a clinical perspective, as summarized in [107], functional neuroimaging has proven useful in clinical practice for treatment planning and as a diagnostic supplement.
The translational value of functional neuroimaging [108], especially from its big data perspective, is particularly important for precision psychiatry [47], where it was argued that the availability of massively acquired data from multiple sources is the foundation for considering diverse patient characteristics, thus achieving “precision” during diagnosis and treatment. As also recognized in [47], in addition to imaging data, the path to more practical clinical application is to introduce more biological data (“-omics”) into the analytics. Studies on imaging genomics have shown that the structure of subcortical brain regions can be altered by genetic variants [109], which might lead to neuropsychiatric dysfunction. Perspective work in [110] proposed the need for an integrated bioinformatics approach to correctly characterize bipolar disorder (BD) based on meta-analysis, addressing the insufficient predictive value of a single phenotype. It suggested utilizing multiple biomarkers from a series of personal profiling data to establish computational models, including neuroimaging, transcriptomics, proteomics, and metabolomics. For the investigation of other common psychiatric disorders including ASD and ADHD, it was proposed in [111] that in addition to MRI-based imaging features (e.g., functional connectivity and gray matter volume), other imaging modalities such as EEG, MEG, or functional near-infrared spectroscopy (fNIRS) should also be considered. Ashar et al. [112] mapped imaging features to multi-output behavioral scores for AD and schizophrenia studies in a transfer learning manner, with the potential to combine heterogeneous data sources and imaging modalities. A recent review of studies on brain developmental disorders [113] shows that structural and functional imaging characteristics, combined with genetic and environmental influences, can provide early biomarkers with improved predictive power. Utilization of other data types, such as physiological and mechanistic data [114], can also help reveal the underlying mechanisms of neuroimaging for its translational applications.

From data accumulation to integration

While registration to a common template has been a standard practice for MRI-based studies (as is the case for EEG), establishing a common ground for neuroimaging studies across different populations, scales, and imaging modalities is still a tremendous challenge. The Allen Brain Atlas showed a promising architecture for characterizing and localizing the mouse brain on different scales, integrating MRI, serial two-photon (STP) tomography [115], and gene expression data [116] in a single atlas. For collaboration across institutions on neuroscience research, BigNeuron [117] proposed a new module of neuroimaging informatics to integrate community efforts, in terms of both data and methodology, into a standard framework for the study of neural circuits and connectomics, especially single-neuron morphology and reconstruction. For human brain studies, the multi-modal parcellation framework proposed in [118] provided a way to obtain an accurate areal map of the brain based on parcellation of cortical architecture, function, connectivity, and topography. A cortical parcellation framework based only on individual rsfMRI data but guided by a population-level functional atlas was also proposed [119] and demonstrated a similarly high level of reproducibility. Also based on rsfMRI, the “connectivity domain” framework proposed in [120] established a new common space to represent individual fMRI data, which has demonstrated advantages for data-driven analysis. In addition, studies based on meta-analysis to integrate functional neuroimaging findings have been proposed to form a large-scale brain map [121].

From knowledge discovery to learning systems

In the book in which Goodfellow et al. laid the foundations of deep learning [122], the authors stated that the purpose of machine learning is to “discover not only the mapping from representation to output but also the representation itself”, which is known as representation learning. In other words, knowledge discovered from big data can also be massive and needs to be organized into practical models. The authors argued that learned representations, rather than hand-crafted ones, can achieve better performance with greater robustness to new tasks. This paradigm shift has also been recognized by medical and biological researchers. As proposed in [123], there is a need to transform the traditional “expert systems”, which were pre-defined by domain knowledge, into learning-based systems that can identify the most discriminative/predictive combinations (representations) from an enormous number of factors. Similarly, perspective work in [124] proposed that advanced machine learning systems are urgently needed to leverage the vast volume of neuroimaging data. The capability of deep learning to exploit hierarchical feature representations has led to superior performance in medical image analysis for the tasks of detection, classification, and prognosis [125]. One of the promising methodologies in deep learning for modeling 4-D functional neuroimaging data is the recurrent neural network (RNN) [126] (see also [127] for its current implementation) based on long short-term memory (LSTM) [128], which utilizes cyclic connections to model sequences (e.g., time frames). Various preliminary attempts have been made to apply RNNs to functional neuroimaging data, including functional network identification [129], psychiatric disorder diagnosis [130], and estimation of long-term dependencies for the hemodynamic responses [131]. Other deep learning-based methods applied to neuroimaging studies include unsupervised autoencoder modeling of fMRI data [132,133] and the classical convolutional neural network [134].
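To make the role of the cyclic connections concrete, the following is a minimal sketch of the forward pass of a single-unit LSTM cell applied to a scalar time series. It assumes the standard LSTM gate equations; the weights are hypothetical hand-picked values (a real model would learn them by training), the toy series merely stands in for one voxel's signal, and none of this reproduces the models in [129-131].

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell with scalar input x."""
    gates = {}
    for name in ("i", "f", "o", "g"):
        w_x, w_h, b = params[name]
        # w_h * h_prev is the recurrent (cyclic) connection from the previous frame
        gates[name] = w_x * x + w_h * h_prev + b
    i = sigmoid(gates["i"])     # input gate
    f = sigmoid(gates["f"])     # forget gate
    o = sigmoid(gates["o"])     # output gate
    g = math.tanh(gates["g"])   # candidate cell update
    c = f * c_prev + i * g      # cell state carries information across time frames
    h = o * math.tanh(c)        # hidden state passed on to the next time frame
    return h, c

def run_lstm(series, params):
    """Run the cell over a whole sequence, starting from zero hidden and cell states."""
    h, c = 0.0, 0.0
    outputs = []
    for x in series:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs

# Hypothetical weights (w_x, w_h, b) for each gate, chosen only for illustration
params = {
    "i": (0.5, 0.1, 0.0),
    "f": (0.0, 0.0, 1.0),
    "o": (0.3, 0.2, 0.0),
    "g": (1.0, 0.5, 0.0),
}
voxel_series = [0.2, 0.8, -0.4, 0.1]  # toy time series standing in for one voxel
hidden = run_lstm(voxel_series, params)
print(hidden)
```

Because each output depends on the cell state accumulated over earlier frames, the same input value can produce different outputs at different points in the sequence, which is what allows such models to capture temporal dependencies.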

Concluding remarks

In this work, we reviewed studies and efforts on the advancement of functional neuroimaging as a big data science in the past decade. The field has been rapidly growing in data quantity, infrastructure, and analytical methods. At the same time, there are also growing concerns about the translational limitations of functional neuroimaging (e.g., in clinical practice and drug discovery) and about the difficulty of fully leveraging the ever-growing amount of data. We then conducted a focused review of recent progress in both methodologies (specifically in deep learning) and research frameworks (in data integration and multi-type data collection) that address these concerns. We envision that in the new era of big data, the field of functional neuroimaging could be greatly enhanced by the tools and concepts of the new paradigm of scientific discovery, with the promise of a better understanding of the brain's functional architecture and more useful discoveries from the imaging data.

Competing interests

The authors have declared no competing interests.

Acknowledgments

This work was supported by the National Institutes of Health, United States (Grant No. RF1AG052653).
