Challenges of Processing and Analyzing Big Data in Mesoscopic Whole-brain Imaging

2019-02-08

Anan Li, Yue Guan, Hui Gong, Qingming Luo*

1 Britton Chance Center for Biomedical Photonics, Wuhan National Laboratory for Optoelectronics, Huazhong University of Science and Technology, Wuhan 430074, China

2 MOE Key Laboratory for Biomedical Photonics, School of Engineering Sciences, Huazhong University of Science and Technology, Wuhan 430074, China

3 HUST-Suzhou Institute for Brainsmatics, JITRI Institute for Brainsmatics, Suzhou 215125, China

The brain is recognized as the most complex organ on earth. The neuronal network in the human brain consists of approximately 86 billion neurons and more than one hundred trillion connections [1]. Even the mouse brain, which is commonly used as a model in neuroscience studies, contains more than 71 million neurons. Despite the great efforts made by neuroscientists, our knowledge about the brain is still limited, and studies on small model animals have provided only preliminary results [2-4]. To improve the situation, brainsmatics studies try to accelerate neuroscience research by providing a standard whole-brain spatial coordinate system and standardized labeling tools, imaging the brain, and discovering new neuronal cell types, neuronal circuits, and brain vascular structures.

Neuroscience studies at mesoscopic scale

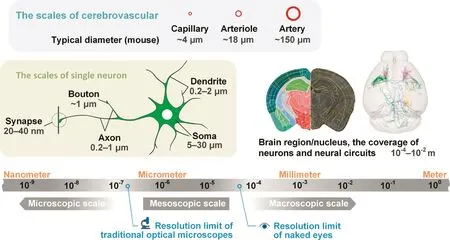

Brain structures span several orders of magnitude in spatial scale, from synapse, single neuron, and neural circuit to brain nucleus, brain region, and whole organ [5], as shown in Figure 1. Structures at the macroscopic scale include brain regions and white-matter fibers, which are usually larger than 100 μm and can be seen by the naked eye without magnifying optical instruments. Structures at the microscopic scale can be viewed only with the help of a microscope, which in neuroanatomy is usually an electron microscope. For example, individual synapses and their vesicles are micro-structures on a spatial scale of approximately 100 nm. Between the macroscopic and microscopic scales lies the mesoscopic scale [9]; mesoscopic structures are typically observable with an optical microscope [10].

Figure 1 The various scales in neuroscience research

Structures at the mesoscopic scale include somas, neurites, and neural circuits, whose diameters are between 100 nm and 100 μm. Neurons and neural circuits at the mesoscopic scale also have macroscopic spatial distribution characteristics [8]. Studies on neurons and neural circuits need to be performed with single-neuron three-dimensional spatial resolution across the entire brain [10]; however, reconstructing an entire human brain neuronal network at the mesoscopic scale is similar to taking three-dimensional pictures of a forest with a trillion trees [12], in which we need to see the whole forest as well as each twig and leaf of every single tree. Consequently, constructing whole-brain datasets is a massive undertaking that relies not only on technological advances but also on massive investments and extensive cooperation [12]. Nonetheless, the data obtained by this approach may help to improve our understanding of the brain. For example, a comprehensive whole-brain atlas of the cholinergic system was obtained from a mesoscopic whole-brain dataset [13], which helps in understanding the role of the cholinergic system in sensory and motor functions in addition to cognitive behaviors.

Brain research projects focus on mesoscopic scale

Driven by strong community demand, scientists have proposed several global-scale brain research projects [14,15]. The European Human Brain Project (HBP) focuses on collecting and integrating different types of neuroscientific data, reconstructing the brain structure, and simulating brain functions on supercomputers [16,17]. In 2016, HBP released a platform on its official website, providing researchers with brain simulation, visualization, and computational tools. The Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative in the United States first develops new methodologies and tools and then attempts to answer scientific questions [18]. The two highest-priority goals of the BRAIN Initiative are to perform a brain-cell-type census and to build an atlas of cross-scale neural circuits [5,11,19]. To advance toward these goals, the NIH kicked off a pilot program in 2014 and started the BRAIN Initiative Cell Census Network (BICCN) in 2017. The task of BICCN is to construct a common whole-mouse-brain cell atlas, a main focus of which is to collect information on mesoscopic neuron anatomy [20].

Brain science is also well recognized by the Chinese government and the Chinese research community. “Brain science and cognition” was included in the “Guidelines on National Medium- and Long-term Program for Science and Technology Development” a decade ago. Support is provided through the National Natural Science Foundation of China (NSFC) and the National Basic Research Program of China (973 Program). For example, the key research plan “Neural Circuit Foundation of Emotion and Memory” was supported by NSFC [21]. A Chinese brain project is also in the planning stages, and “brain science and brain-inspired intelligence research” is included as one of the key science and technology innovation programs and projects in the “Thirteenth five-year development plan” and is one of four pilot programs of the “science and technology innovation 2030 key program” [22]. Some institutions have been established for Chinese brain projects, such as the Center for Excellence in Brain Science and Intelligence Technology of the Chinese Academy of Sciences [23] and the Chinese Institutes for Brain Research in Beijing and Shanghai [24].

Big data in mesoscopic whole-brain imaging

These projects will generate an enormous quantity of data at an ever-increasing rate; thus, processing and analysis of brain big data will likely become a bottleneck [25]. In 2010, we used the Micro-Optical Sectioning Tomography (MOST) system to obtain a Golgi-stained whole-mouse-brain dataset [26]. At a voxel resolution of 0.3 μm × 0.3 μm × 1 μm, this dataset contains more than 15,000 coronal images, and the total size of the raw data exceeds 8 TB. The MouseLight project at the Janelia Research Campus uses serial two-photon (STP) tomography to obtain whole-mouse-brain images at a voxel resolution of 0.3 μm × 0.3 μm × 1 μm; the raw data from each channel are approximately 10 TB [27]. Osten’s group at Cold Spring Harbor Laboratory also uses STP tomography to study cell-type-dependent neural distribution and projection and to build a brain architecture database [28]. They recently used a new oblique light-sheet tomography method to obtain a whole-mouse-brain dataset with a raw data size of 11 TB [29].

The volume of the human brain is approximately 3500 times that of a mouse brain [1]. Thus, mesoscopic-scale imaging of the human brain will generate a dataset at the 10 PB scale, as shown in Figure 2. This is equivalent to hundreds of thousands of 4K movies and equals the storage capacity of the Sunway TaihuLight supercomputer [30], one of the most powerful supercomputers in existence today. In addition, as imaging techniques advance, featuring higher resolutions, more color channels, and wider dynamic ranges, systems will generate even larger volumes of data.
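
The scaling argument above can be checked with a back-of-the-envelope calculation. The sketch below is only illustrative: the voxel size and the 3500-fold volume ratio come from the text, whereas the mouse brain volume (about 500 mm³) and the 16-bit voxel depth are assumptions introduced here, and the function name is hypothetical.

```python
# Rough estimate of raw dataset size from voxel resolution.
# From the text: voxel size 0.3 x 0.3 x 1 um, human/mouse volume ratio ~3500.
# ASSUMED for illustration: mouse brain volume ~500 mm^3, 16-bit voxels.

VOXEL_UM = (0.3, 0.3, 1.0)            # voxel edge lengths in micrometers (from text)
HUMAN_TO_MOUSE_VOLUME_RATIO = 3500    # from text
MOUSE_BRAIN_VOLUME_MM3 = 500.0        # assumption, not from the text
BYTES_PER_VOXEL = 2                   # assumption: 16-bit grayscale, one channel


def raw_size_bytes(volume_mm3, voxel_um, bytes_per_voxel):
    """Uncompressed size of a single-channel volume sampled at the given voxel size."""
    volume_um3 = volume_mm3 * 1e9                     # 1 mm^3 = 1e9 um^3
    voxel_um3 = voxel_um[0] * voxel_um[1] * voxel_um[2]
    return volume_um3 / voxel_um3 * bytes_per_voxel


mouse_bytes = raw_size_bytes(MOUSE_BRAIN_VOLUME_MM3, VOXEL_UM, BYTES_PER_VOXEL)
human_bytes = mouse_bytes * HUMAN_TO_MOUSE_VOLUME_RATIO

print(f"mouse brain, one channel: {mouse_bytes / 1e12:.1f} TB")
print(f"human brain, one channel: {human_bytes / 1e15:.1f} PB")
```

Under these assumptions the mouse estimate lands near the roughly 10 TB per channel reported above, and the human estimate reaches tens of petabytes, the same order of magnitude as the 10 PB scale quoted in the text.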

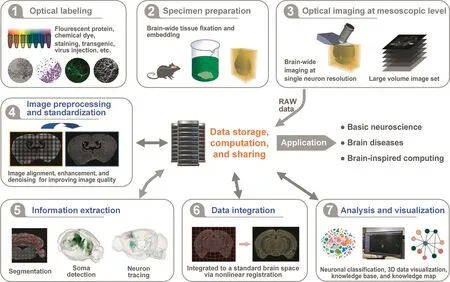

Biology labs need a mature and comprehensive solution for managing mesoscopic whole-brain images when studying neuron types and circuit connections. In 2014, Nature Neuroscience published a special issue on big data in neuroscience that stated, “Neuroscientists have to learn to manage and take advantage of the big waves of data that are being generated” [31]. To distill brain science knowledge from the raw images, the processing pipeline typically includes image processing, image registration, information extraction, quantitative analysis, and visualization, as shown in Figure 3. Big data therefore bring a range of challenges to data processing, storage, analysis, management, and sharing, because the size of the research target data increases severalfold. A decade of systematic studies on whole-brain imaging by several groups has established a self-contained system to support applied studies [27,32-37]. Nevertheless, we foresee that there is a long way to go to fully utilize mesoscopic whole-brain images. We identify the challenges listed in Table 1 and discuss them in the following sections.

The challenge of information extraction and neuron identification

Data segmentation and identification are often recognized as the bottleneck for studies involving big data. Mesoscopic brain-image segmentation and identification target brain regions/nuclei with sizes ranging from hundreds of micrometers to millimeters, neuron somas and micro-vessels with sizes ranging from a few micrometers to 10 micrometers, and boutons with sizes ranging from sub-micrometers to a few micrometers. Axon tracing is one of the most difficult tasks in whole-brain data processing. One reason is that the spatial structures of neurons are complex: neural fibers can be densely distributed and can cover large distances while having only a small diameter [38]. Another is that axons and neurites are small structures with weak fluorescent signals that are often difficult to distinguish from strong background noise. These factors make manual labeling a time-consuming process and degrade the accuracy of automated algorithmic labeling. Thus, manual or semiautomatic axon tracing is still the standard solution in high-quality neuron morphology studies. In 2017, Nature reported three giant neurons whose fibers cover almost the entire mouse brain, all of which were manually reconstructed [39]. Because of the low reliability of automatic reconstruction software, mapping a single neuron with highly complex morphology may require a day, a week, or even longer.

Figure 2 Data size comparison among different species

Figure 3 The pipeline of whole-brain data processing and analysis at the mesoscopic scale

Table 1 Challenges of the whole-brain big data processing and analysis at mesoscopic scale

Deep learning may help improve neuron reconstruction. However, deep learning currently requires large quantities of labeled training data, comparable to ImageNet [40], before the models are applicable to neuron reconstruction. Another approach attempts to accelerate the semiautomatic process using techniques such as virtual reality [41,42]. Additionally, crowdsourcing may help solve the human resource problem [43] in data segmentation and neuron fiber identification. Examples include the Fold.it project for protein studies [44] and OpenWorm for electron microscope data [45].

The challenge of data integration

Data from different samples and different experimental batches need to be integrated; even data obtained through different methodologies and different “omics” can be integrated for neuroscientific research [46-49]. A whole-brain mesoscopic-scale data integration process can employ local, sparse, and cell-type data to build a systematic and comprehensive neuronal network [13,50-52]. However, data from different sources may have nonlinear displacements in spatial coordinates [53,54], which jeopardizes possible spatial correlations between data samples. Thus, we need a powerful, standardized framework for integrating multi-scale, multi-mode whole-brain big data [15,48,55].

We face the following challenges when building such a framework. First, the data mode often varies, the metadata are scattered, and usually no standard approach exists for describing the metadata and the data format. The lack of a standard approach introduces unnecessary barriers to integration and reduces the level of confidence in the integrated data. Second, no high-resolution whole-brain reference atlas yet exists for mesoscopic research. For example, the Waxholm brain coordinate system is based on magnetic resonance imaging (MRI) data, and its resolution is approximately tens of micrometers [56]. The highest-resolution three-dimensional atlas available is the Allen Institute’s Allen Mouse Common Coordinate Framework version 3 (CCFv3), whose isotropic resolution is 10 micrometers. The CCFv3 is based on 1675 genetically engineered mouse brains [34]; the atlasing process eliminated individual differences by averaging the brain tissue’s auto-fluorescence signals [57]. Finally, no registration method currently exists that can accurately map mesoscopic whole-brain datasets to a standard brain space. While some existing registration methods work well on small regions of mesoscopic-scale data, to our knowledge no current method can work at the 10-TB data scale [58]. An ideal anatomical datum is useful because it can define a standard brain-space coordinate system despite variation between samples and developmental stages. However, the existing conserved anatomical data are limited and subject to manual bias.
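
The resolution gap between a 10 μm atlas and sub-micrometer raw data can be made concrete with a little arithmetic. The following sketch is a simplified illustration, not a registration method: it assumes the raw volume is already aligned with the atlas axes and origin, so it ignores the nonlinear displacements discussed above. The function names and the example index are hypothetical.

```python
# How coarse is a 10 um isotropic atlas relative to 0.3 x 0.3 x 1 um raw voxels?
# Voxel sizes are taken from the text; everything else is illustrative.

RAW_VOXEL_UM = (0.3, 0.3, 1.0)   # raw image voxel size (x, y, z)
ATLAS_VOXEL_UM = 10.0            # CCFv3 isotropic resolution


def raw_voxels_per_atlas_voxel(raw_voxel_um, atlas_voxel_um):
    """Number of raw voxels that fall inside a single atlas voxel."""
    count = 1.0
    for edge in raw_voxel_um:
        count *= atlas_voxel_um / edge
    return count


def raw_index_to_atlas_index(raw_index, raw_voxel_um, atlas_voxel_um):
    """Pure rescaling of a raw voxel index to an atlas voxel index.

    ASSUMPTION: both volumes share the same origin and orientation.
    Real data additionally needs affine and nonlinear registration.
    """
    return tuple(int((i * edge) // atlas_voxel_um)
                 for i, edge in zip(raw_index, raw_voxel_um))


ratio = raw_voxels_per_atlas_voxel(RAW_VOXEL_UM, ATLAS_VOXEL_UM)
atlas_index = raw_index_to_atlas_index((1010, 2020, 505), RAW_VOXEL_UM, ATLAS_VOXEL_UM)

print(f"raw voxels per atlas voxel: {ratio:.0f}")
print(f"atlas index for raw voxel (1010, 2020, 505): {atlas_index}")
```

Each atlas voxel covers on the order of ten thousand raw voxels, which illustrates why an atlas at this resolution cannot by itself localize mesoscopic structures such as individual neurites.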

The challenge of data storage, computation, and sharing

The data used in neuroscience research can be categorized by quality level. The BICCN project classifies the data into five categories: raw, quality assurance/quality control (QA/QC), linked, featured, and integrated (https://biccn.org). The mesoscopic whole-brain data size in the raw, QA/QC, and linked categories is quite large, imposing a huge burden on data storage, computation, and sharing. One important approach for confronting this whole-brain big data challenge is to reduce the data size when not all the raw data are required. For example, we can try to capture raw data with a high signal-to-noise ratio in the imaging stage, skip imaging of areas that are not of interest, and downsample, denoise, and compress the data. Data compression in particular may be a valid option because considerable redundant information exists in raw three-dimensional whole-brain image data [59]. The data compression techniques used in video compression [27] and compressed sensing [60] may also be applicable to brain imaging data. Due to the large volume of mesoscopic-scale whole-brain data, even small improvements in data compression can have large effects in relieving the requirements for data storage, computation, and transmission.
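
The redundancy argument can be illustrated with a toy experiment. The sketch below uses general-purpose lossless compression (`zlib` from the Python standard library) as a stand-in; it is not the video-compression or compressed-sensing approach cited above. The synthetic block, with 1% bright voxels on a perfectly dark background, is an idealized assumption; real images contain background noise and compress less well.

```python
import random
import zlib


def synthetic_sparse_block(n_voxels, signal_fraction, seed=0):
    """Build a toy 8-bit image block: dark background with sparse bright voxels.

    ASSUMPTION: noiseless background, which real fluorescence images do not have.
    """
    rng = random.Random(seed)
    block = bytearray(n_voxels)                       # zeros = dark background
    for _ in range(int(n_voxels * signal_fraction)):
        block[rng.randrange(n_voxels)] = rng.randrange(128, 256)
    return bytes(block)


def compression_ratio(data, level=6):
    """Original size divided by losslessly compressed size."""
    return len(data) / len(zlib.compress(data, level))


block = synthetic_sparse_block(n_voxels=1_000_000, signal_fraction=0.01)
ratio = compression_ratio(block)
restored = zlib.decompress(zlib.compress(block))      # lossless round trip

print(f"compression ratio on the toy block: {ratio:.1f}x")
print(f"round trip is lossless: {restored == block}")
```

Even this generic codec shrinks the sparse toy block substantially, which suggests why codecs tuned to three-dimensional image structure are worth investigating at the terabyte scale.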

Another concern in mesoscopic whole-brain data processing is big data QC, as studies increasingly involve international cooperation and data integration efforts. Quality standards for both raw and derived data are prerequisites for productive data exchange and cooperation between research teams. Scientists need easy-to-use processing, analysis, and QA/QC tools to adhere to and benefit from these quality standards [61-63]. The International Neuroinformatics Coordinating Facility (INCF) is a non-profit scientific organization established in 2005 that acts as the most important facilitator for standards, tools, and sharing of big data in neuroscience among the large international brain initiatives [64]. Individual scientific software applications can register with INCF Central to identify their file formats, transformations, standard query formats, and other essential metadata [48].

In addition, an increasing number of desktop applications will migrate to cloud platforms, especially those involving biomedical big data at PB/EB scales (1024 PB equals 1 EB) [65,66]. On a cloud platform, users send commands from client applications; a cloud server then executes the requested data manipulations, computations, and visualizations. Centralizing the data in this way obviates the need to transport the big data; thus, this mode reduces the transmission burden and fosters unified data formats and quality standards. However, building and running big data cloud platforms requires a huge upfront investment, coordination with existing computation facilities, and financial and management support from the community.
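
To see why avoiding bulk transfer matters, consider how long it would take to move one whole-brain dataset over a network. The sketch below is a rough calculation under stated assumptions: the link speeds are hypothetical examples, the link is assumed to be fully utilized, and binary units are used to match the 1024-based convention in the text.

```python
# Time needed to move a dataset over a network link, ignoring protocol overhead.
# ASSUMPTIONS: example link speeds of 1 and 10 Gbit/s, 100% sustained utilization.

TB = 1024 ** 4   # bytes per terabyte, using the 1024-based convention from the text


def transfer_time_hours(size_bytes, link_bits_per_second):
    """Hours needed to send size_bytes over a fully utilized link."""
    seconds = size_bytes * 8 / link_bits_per_second
    return seconds / 3600


dataset_bytes = 10 * TB                               # one mouse-brain channel (from text)
hours_1g = transfer_time_hours(dataset_bytes, 1e9)    # hypothetical 1 Gbit/s link
hours_10g = transfer_time_hours(dataset_bytes, 10e9)  # hypothetical 10 Gbit/s link

print(f"10 TB over 1 Gbit/s:  {hours_1g:.1f} h")
print(f"10 TB over 10 Gbit/s: {hours_10g:.1f} h")
```

Under these idealized conditions a single 10 TB dataset occupies a 1 Gbit/s link for about a day, whereas a command sent to a cloud server and the rendered result it returns are many orders of magnitude smaller.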

The challenge of data and knowledge visualization

Eventually, mesoscopic whole-brain image data are refined into vectorized graphic data via preprocessing, segmentation, labeling, and integration processes [67]. These graphic data contain precise spatial positioning information, forming the basic data needed for further studies, such as neuron cell typing and connections between brain regions [68,69]. Visualization is a necessary aspect of these studies. Visualizations present complex data using graphics tools, including symbols, colors, and textures. These graphics tools can deliver information effectively and promote data salience. Using visualization tools makes interpreting, manipulating, and processing data simpler and allows patterns, characteristics, correlations, and anomalies to be recognized or constructed. Although visualization has been widely applied in biomedical studies, the large-volume, cross-scale, high-dimensional, and complex mesoscopic whole-brain data bring new challenges to existing visualization techniques. For example, both main memory and graphics memory become bottlenecks when three-dimensional data at 10 TB or PB scales need to be rendered [33,70,71]. Visualization applications should support rendering from 10,000 to millions of neurons, allowing both mesoscopic neuron morphological detail and macroscopic brain region connections to be viewed. Visualization should also support various types of data manipulation and presentation, including oblique reslicing [29], immersive display [72], neuron reconstruction analysis [35], brain connection atlas presentation [34], and visualizations distributed to clients by clouds [73].
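
One common way to work around the memory bottleneck is a multiresolution pyramid, in which each level halves the sampling along every axis. The sketch below estimates how many such levels are needed before a whole-brain overview fits in graphics memory. It is an illustration under assumptions rather than a description of the cited systems: the 8 GiB memory budget, the factor of 2 per axis, and the function name are all hypothetical choices made here.

```python
# Number of pyramid levels needed before a downsampled volume fits in memory.
# ASSUMPTIONS: 8 GiB graphics memory budget, 2x downsampling per axis per level.

TB = 1024 ** 4
GIB = 1024 ** 3


def pyramid_levels_to_fit(data_bytes, memory_bytes, factor_per_axis=2, dims=3):
    """Count downsampling steps until the volume is no larger than the budget."""
    shrink = factor_per_axis ** dims      # each level is 8x smaller for a 3D volume
    levels = 0
    size = float(data_bytes)
    while size > memory_bytes:
        size /= shrink
        levels += 1
    return levels


levels = pyramid_levels_to_fit(10 * TB, 8 * GIB)
# Effective voxel size at that level, starting from 0.3 x 0.3 x 1 um (from text).
coarse_voxel_um = tuple(edge * 2 ** levels for edge in (0.3, 0.3, 1.0))

print(f"levels needed for a 10 TB volume in 8 GiB: {levels}")
print(f"voxel size at that level (um): {coarse_voxel_um}")
```

The whole-brain overview is then rendered from the coarse level, while full-resolution blocks are loaded only for the region currently being inspected, which is how a viewer can show both macroscopic connections and mesoscopic detail.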

Promote brain-inspired artificial intelligence

Artificial intelligence (AI) has achieved remarkable progress after more than six decades of development. The defeat of the human Go champion by Google’s AlphaGo in 2016 ushered in a new wave of intelligent techniques [74]. However, current AI remains limited: it exhibits only weak intelligence, and models cannot be applied in scenarios other than the ones they were designed to address. One reason may be that AI studies have not yet fully learned how brain mechanisms work, and many are not brain-inspired [75]. The Blue Brain Project at the École polytechnique fédérale de Lausanne (EPFL) tries to simulate mouse brain cortex activity based on neuron morphology data, electrophysiology data, and so on [76,77]. The BRAIN Initiative launched the Machine Intelligence from Cortical Networks (MICrONS) program to reconstruct neural circuits in a 1-cubic-millimeter brain volume, simulate cortical function, and develop next-generation machine intelligence systems [78]. The forthcoming China Brain Initiative also prioritizes brain-inspired AI over other, lower-priority long-term subjects [75]. Neurons are the basic information processing units in the brain, and neural circuits are the basic structures involved in brain function. Thus, the fine spatial-temporal structure and functional data collected via mesoscopic studies can provide clues to how brains function and promote brain-inspired AI efforts [79].

The research and application of mesoscopic whole-brain image data are just beginning, and many challenges and difficulties lie ahead. We hope to see increasing cooperation between scientists and engineers in the various brain projects in the future.

Competing interests

The authors have declared that no competing interests exist.

Acknowledgments

This study was supported by the Science Fund for Creative Research Group of the National Natural Science Foundation of China (Grant No. 61721092) and the National Natural Science Foundation of China (Grant No. 61890954).
