Traveling trajectory prediction method and experiment of autonomous navigation tractor based on trinocular vision

2018-10-11

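Tian Guangzhao1, Gu Baoxing1※, Irshad Ali Mari2, Zhou Jun1, Wang Haiqing1

(1. College of Engineering, Nanjing Agricultural University, Nanjing 210031; 2. Khairpur College of Engineering and Technology, Sindh Agriculture University, Pakistan, Khairpur 66020)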

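To allow an autonomous navigation tractor to keep working reliably when satellite positioning is unavailable, this paper proposes a tractor trajectory prediction method based on trinocular vision. The method splits the trinocular camera into two binocular vision systems, one with a long baseline and one with a short baseline, which work independently in a time-sharing manner. The tractor's motion vector in the horizontal plane is inferred from the coordinate changes of the same feature points in the agricultural environment between adjacent instants, future motion vectors are predicted with a grey model, and error models for the forward direction are finally established at different speeds. The tests showed that at a travel speed of 0.2 m/s the forward-direction error exceeded 0.1 m after 46.5 s, corresponding to a travel distance of 9.3 m. At 0.5 m/s, the time and travel distance dropped to 17.2 s and 8.6 m. At 0.8 m/s, they dropped quickly to 8.5 s and 6.8 m. The higher the travel speed, the faster the forward-direction error grows. The method can be used for short-term prediction of the tractor's trajectory and provides a basis for autonomous navigation control.

tractor; autonomous navigation; machine vision; trajectory prediction; grey model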

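0 Introduction

To reduce labor costs, raise operating efficiency and improve operating quality, agricultural machinery with autonomous navigation is increasingly used in agricultural production. For example, autonomous navigation tractors can serve as traction machines for field seeding, fertilizing and tillage[1-7]. Autonomous navigation combine harvesters can harvest wheat, rice and maize without human intervention[8-14]. Autonomous navigation rice transplanters can transplant seedlings precisely in paddy fields, greatly improving operating accuracy, with an efficiency 50 times that of manual work[15-18].

The vision system is an important component of autonomous navigation agricultural equipment. It is mainly used to identify crop rows, furrows and ridges, or obstacles, and is an important tool for perceiving the external environment and the machine's own attitude during intelligent operation[19-21]. In particular, when GPS or the BeiDou positioning system is disturbed and cannot work normally, the vision system can provide auxiliary relative positioning so that navigation can continue[22-27]. The vision system can also be used to predict future states, and the prediction results provide a data basis for navigation decisions and control. Because navigation control of agricultural machinery has a serious time lag, references [28] and [29] both report that prediction data can significantly improve conventional PID control, which is of strong practical engineering significance.

Most existing studies discuss navigation control methods on the premise that GPS or BeiDou works reliably. This paper instead addresses how to predict the travel trajectory of an autonomous navigation tractor using the vision system alone when GPS or BeiDou fails, and proposes a trajectory prediction method based on grey theory.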

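1 Hardware of the vision system

In this study the vision system consists of a BBX3 trinocular camera from Point Grey, a 1394B acquisition card and an industrial personal computer (IPC).

The trinocular camera consists of three sub-cameras: right, middle and left. The right and middle sub-cameras form the short-baseline binocular vision system, and the right and left sub-cameras form the long-baseline binocular vision system. The trinocular vision system is the superposition of these two binocular systems. The two systems share the same coordinate origin and axis directions: the origin is at the optical center of the right camera, the positive x axis points horizontally to the right, the positive y axis points vertically downward, and the positive z axis points horizontally forward. To improve development efficiency, Point Grey has built image capture by the second camera of each binocular system and the system's visual measurement functions directly into the API[30]. Users do not need to go through the traditional sequence of binocular image capture, feature point detection and matching, and disparity-based ranging; depth information of the environment can be obtained directly from a single right-camera image.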
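The effect of baseline length on ranging can be illustrated with the standard rectified-stereo relation Z = fB/d. The following is a minimal sketch, not the camera vendor's API: the focal length and the two baseline values are assumed numbers chosen only for illustration, and the function names are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth along the optical axis for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the camera")
    return focal_px * baseline_m / disparity_px


def depth_error_per_pixel(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Approximate depth change caused by a one-pixel disparity error: dZ ~= Z^2 / (f * B)."""
    return depth_m ** 2 / (focal_px * baseline_m)


# All three constants below are assumptions for illustration, not values from the paper.
FOCAL_PX = 1000.0
SHORT_BASELINE_M = 0.12   # right-middle pair
LONG_BASELINE_M = 0.24    # right-left pair

for name, baseline in (("short", SHORT_BASELINE_M), ("long", LONG_BASELINE_M)):
    err = depth_error_per_pixel(FOCAL_PX, baseline, depth_m=5.0)
    print(f"{name} baseline: about {err:.3f} m of depth error per pixel at 5 m")
```

Under these assumptions the longer baseline halves the depth error per pixel of disparity error, which is one reason the two systems can return slightly different coordinates for the same physical point.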

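Fig. 1 Structure of the trinocular camera

The 1394B acquisition card receives the digital images returned by the camera at high speed. The IPC is the core image processing component: it controls image acquisition by the camera, runs the image processing program and outputs the computed results.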

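2 Detection and prediction of the tractor motion vector

2.1 Detection of the tractor motion vector

The basic principle of tractor motion vector detection is to infer the tractor's motion vector from the coordinate changes, in the camera coordinate system, of the same group of stationary feature points between adjacent instants. The detection procedure is shown in Fig. 2.

Fig. 2 Flow chart of tractor motion vector detection

Because the coordinate origins of the two vision systems coincide, the coordinates of the same physical point in the two systems should in theory be identical. However, since the baseline lengths differ, the measurement results deviate slightly. To obtain accurate measurements, the long- and short-baseline vision systems run the same motion detection method, and the mean of their results is taken.

The steps are as follows:

1) In an agricultural environment with a complex background, the right camera captures an image, the image index is incremented by 1, and the image is stored.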

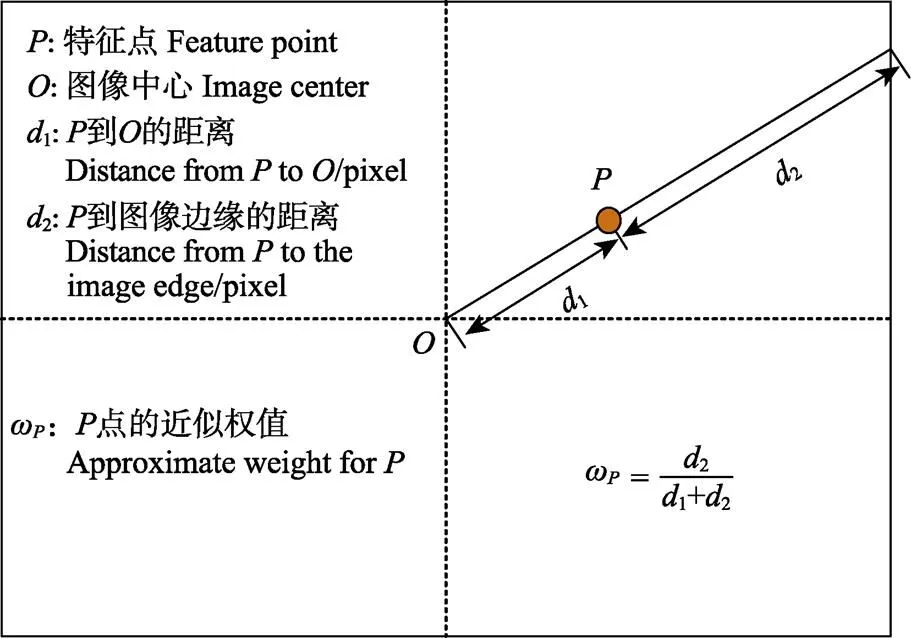

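2) SIFT feature point detection is performed on the image captured by the right camera, and the image coordinates of all feature points in the environment are computed[31]. Because camera images are inevitably distorted, an approximate weight must also be estimated for each feature point. Fig. 3 illustrates the approximate weight of a feature point, which is estimated according to Eq. (1).

3) Using the image coordinates of the feature points and the depth image acquired in step 1), the image coordinates are converted to camera coordinates with the API functions provided by the camera.

4) Determine whether the current image is the first one. If it is, store the image coordinates, camera coordinates and weight of each valid feature point in an array, then repeat steps 1) to 4). If it is not the first image, store these data in a second array.

5) Perform SIFT feature point matching against the previous image, and store the image coordinates of the successfully matched feature point pairs in an array.

Fig. 3 Calculation of the approximate weight of a feature point

6) Traverse the array of matched pairs and look up, in the two arrays filled in step 4), the camera coordinates and approximate weights of each matched feature point pair, then store them in an array.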

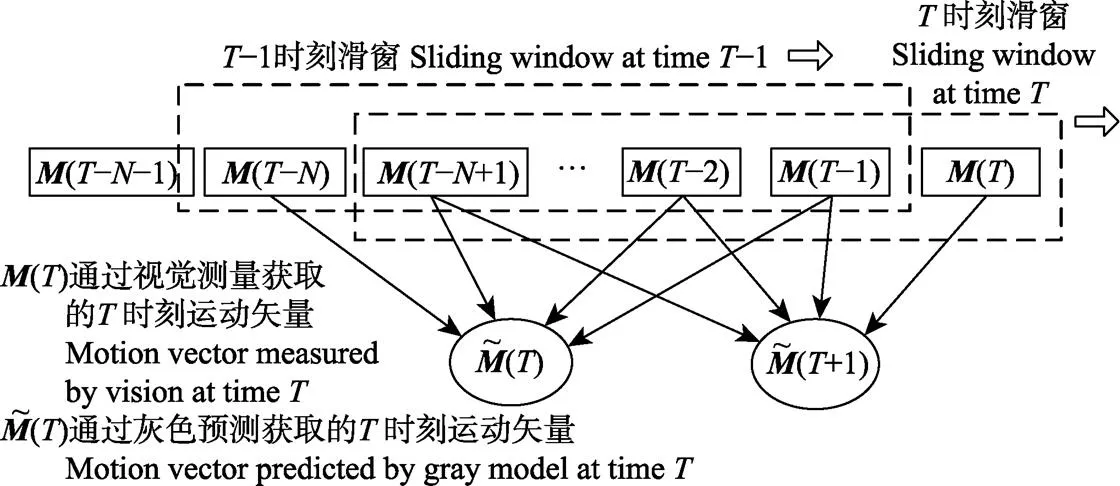

Here the count term in the expression is the total number of valid feature points.

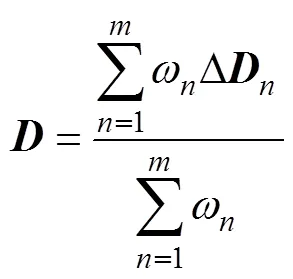
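The idea behind the steps above can be sketched in a few lines. This is a simplified illustration, not the paper's own formula: it assumes pure translation between adjacent frames (no rotation), assumes a plain weighted mean over the matched points, and averages the short- and long-baseline estimates as described earlier. The function names are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the camera frame, in metres


def motion_vector(prev_pts: Sequence[Point],
                  curr_pts: Sequence[Point],
                  weights: Sequence[float]) -> Tuple[float, float]:
    """Weighted mean displacement of the vehicle in the horizontal (x, z) plane.

    A stationary point appears to move opposite to the vehicle, so the
    vehicle displacement is (previous - current) for each matched point.
    """
    if not prev_pts or not (len(prev_pts) == len(curr_pts) == len(weights)):
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dx = sum(w * (p[0] - c[0]) for p, c, w in zip(prev_pts, curr_pts, weights)) / total
    dz = sum(w * (p[2] - c[2]) for p, c, w in zip(prev_pts, curr_pts, weights)) / total
    return dx, dz


def fuse(short_vec: Tuple[float, float], long_vec: Tuple[float, float]) -> Tuple[float, float]:
    """Mean of the short-baseline and long-baseline estimates."""
    return ((short_vec[0] + long_vec[0]) / 2.0, (short_vec[1] + long_vec[1]) / 2.0)


# Illustrative data: two static points seen 0.1 m closer in the current frame.
prev = [(1.0, 0.0, 5.0), (-1.0, 0.0, 6.0)]
curr = [(1.0, 0.0, 4.9), (-1.0, 0.0, 5.9)]
print(motion_vector(prev, curr, weights=[1.0, 0.5]))
```

With these made-up points the estimate is roughly 0.1 m of forward motion and no lateral motion. Handling rotation would require a full rigid-body fit, which this sketch deliberately leaves out.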

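2.2 Prediction of the tractor motion vector

Most agricultural tractors operate at a low, constant speed. Based on this operating characteristic, a trajectory prediction scheme based on grey theory was designed.

Fig. 4 Obtaining prediction data through a sliding window

Suppose that at a given instant the motion vectors inside the sliding window form the sample $X^{(0)}$, where $X^{(0)}$ has the form of Eq. (4).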

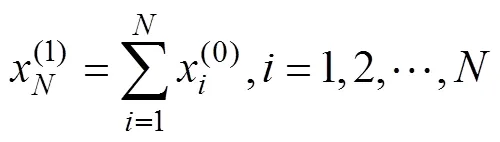

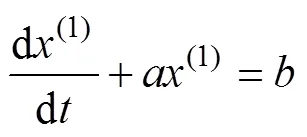

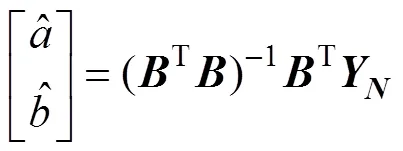

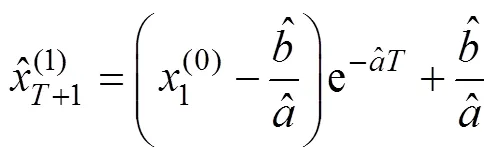

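To reduce the influence of disturbance data on the valid data, $X^{(0)}$ is accumulated once to obtain its 1-AGO sequence $X^{(1)}$, as given by Eq. (5).

The GM(1,1) model is then expressed as a first-order differential equation, as given by Eq. (6).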

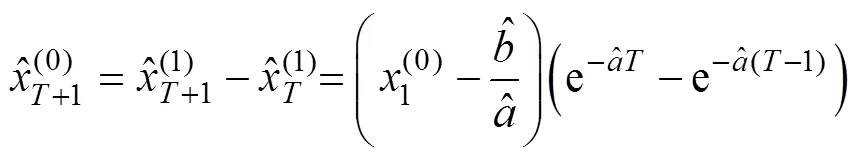
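For orientation, the textbook form of the GM(1,1) derivation is sketched here. The notation ($a$, $u$, $n$, $k$, $z^{(1)}$, $B$, $Y$) is the generic one from the grey-model literature and is an assumption; it may differ from the symbols and numbering of Eqs. (4) to (9) in the paper. Note that $z^{(1)}(k)$ is the background value of the model, not the z coordinate axis.

```latex
\begin{align}
% Raw sample and its first-order accumulated (1-AGO) sequence
X^{(0)} &= \{x^{(0)}(1),\, x^{(0)}(2),\, \ldots,\, x^{(0)}(n)\} \\
x^{(1)}(k) &= \sum_{i=1}^{k} x^{(0)}(i), \qquad k = 1, 2, \ldots, n \\
% Whitening equation of GM(1,1)
\frac{\mathrm{d}x^{(1)}}{\mathrm{d}t} + a\,x^{(1)} &= u \\
% Least-squares estimate of the development coefficient a and grey input u
\begin{bmatrix} a \\ u \end{bmatrix} &= (B^{\mathsf{T}}B)^{-1}B^{\mathsf{T}}Y, \quad
B = \begin{bmatrix} -z^{(1)}(2) & 1 \\ \vdots & \vdots \\ -z^{(1)}(n) & 1 \end{bmatrix}, \quad
Y = \begin{bmatrix} x^{(0)}(2) \\ \vdots \\ x^{(0)}(n) \end{bmatrix} \\
z^{(1)}(k) &= \tfrac{1}{2}\left(x^{(1)}(k) + x^{(1)}(k-1)\right) \\
% Time response of the accumulated sequence and restored prediction
\hat{x}^{(1)}(k+1) &= \left(x^{(0)}(1) - \frac{u}{a}\right)e^{-ak} + \frac{u}{a} \\
\hat{x}^{(0)}(k+1) &= \hat{x}^{(1)}(k+1) - \hat{x}^{(1)}(k)
\end{align}
```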

Discretizing Eq. (8) gives the prediction model of the once-accumulated sequence for the next time step, as given by Eq. (9).
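A minimal GM(1,1) forecaster over a sliding window might look like the sketch below. It follows the standard textbook formulation rather than the paper's exact equations; the window length, the sample values and the function name `gm11_forecast` are assumptions made for illustration. It is applied to one scalar component of the motion vector at a time.

```python
import math
from collections import deque
from typing import List, Sequence


def gm11_forecast(sample: Sequence[float], steps: int = 1) -> List[float]:
    """Forecast the next `steps` values of a short, positive sequence with GM(1,1)."""
    x0 = list(sample)
    n = len(x0)
    if n < 4:
        raise ValueError("GM(1,1) is usually fitted on at least 4 samples")
    # 1-AGO: cumulative sum of the raw sequence.
    x1, running = [], 0.0
    for value in x0:
        running += value
        x1.append(running)
    # Background values: mean of consecutive accumulated values.
    background = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, n)]
    y = x0[1:]
    # Closed-form least squares for y = -a * background + u.
    m = n - 1
    sb, sy = sum(background), sum(y)
    sbb = sum(b * b for b in background)
    sby = sum(b * v for b, v in zip(background, y))
    denom = m * sbb - sb * sb
    if denom == 0:
        raise ValueError("degenerate sample: background values are all equal")
    slope = (m * sby - sb * sy) / denom
    a = -slope
    u = (sy - slope * sb) / m
    if abs(a) < 1e-12:
        # Essentially constant sequence: the model reduces to x0(k) = u.
        return [u] * steps

    def x1_hat(j: int) -> float:
        # Time response of the accumulated sequence, j = 0 for the first sample.
        return (x0[0] - u / a) * math.exp(-a * j) + u / a

    # Restore raw-sequence forecasts by differencing the accumulated forecasts.
    return [x1_hat(n - 1 + s) - x1_hat(n - 2 + s) for s in range(1, steps + 1)]


# Illustrative sliding window over per-frame forward displacements (made-up values, metres).
window = deque(maxlen=5)
for dz in (0.050, 0.051, 0.049, 0.050, 0.052, 0.051):
    window.append(dz)
    if len(window) == window.maxlen:
        print(f"next displacement forecast: {gm11_forecast(window)[0]:.4f} m")
```

GM(1,1) is normally fitted to positive sequences, so a lateral component that changes sign would need an offset or a different model; that case is outside this sketch.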

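3 Tractor trajectory prediction tests and analysis of results

3.1 Test design

The test platform was a Dongfanghong SG250 tractor. The BBX3 trinocular camera was mounted horizontally at the front end of the counterweight beam at the head of the tractor, 0.6 m above the ground, as shown in Fig. 5. An RTK-GPS system with centimeter-level accuracy was installed on top of the tractor. The tests were carried out on a sunny morning with good lighting, on a hard road surface with a large amount of sand and gravel. Visual detection and RTK-GPS detection were synchronized, both at 10 Hz. The tractor traveled in a straight line at low speeds of 0.2, 0.5 and 0.8 m/s. The IPC collected the GPS data and the visual prediction data, the measured and predicted trajectories were plotted, and valid data over the same travel distance were extracted to analyze prediction accuracy. The IPC was an Advantech ARK3500P with an i7-3610 CPU and 4 GB of memory. Because the GPS used real-time carrier-phase differential (RTK) technology and reached centimeter-level positioning accuracy, the GPS positioning data were taken as the reference standard for verifying the motion detection and prediction accuracy of the trinocular vision system.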
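As a quick worked figure derived from the stated 10 Hz rate and the three test speeds, the distance the tractor covers between two consecutive frames can be computed as follows. The function name is hypothetical; the numbers come directly from the test design.

```python
RATE_HZ = 10.0                 # detection frequency stated in the test design
SPEEDS_M_S = (0.2, 0.5, 0.8)   # test speeds stated in the test design


def displacement_per_frame(speed_m_s: float, rate_hz: float) -> float:
    """Distance travelled between two consecutive frames at constant speed."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return speed_m_s / rate_hz


for speed in SPEEDS_M_S:
    step_cm = 100.0 * displacement_per_frame(speed, RATE_HZ)
    print(f"{speed:.1f} m/s -> {step_cm:.0f} cm per frame")
```

The inter-frame displacement grows from 2 cm to 8 cm across the three speeds, so each individual motion-vector measurement spans a larger scene change at higher speed.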

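Fig. 5 Mounting position of the trinocular camera

During the tests, the global GPS position at the initial instant was taken as the baseline. Following the method described above, the incremental data obtained by the vision system for the next instant were added to the GPS baseline to form the vision system's measurement data (also in absolute coordinates). Vision system prediction data were then formed from multiple vision measurements. At any instant there is inevitably some error between the vision prediction and the GPS position. This error was decomposed along the forward direction (z direction) and the lateral direction (x direction) to give the error components in the two directions.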
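The bookkeeping described in this paragraph can be sketched as below. The sketch assumes a planar east-north frame for the GPS data, a constant heading (straight-line travel) measured counter-clockwise from east, and hypothetical function names; none of these details are specified in the paper.

```python
import math
from typing import Iterable, List, Tuple

Vec2 = Tuple[float, float]


def heading_axes(heading_rad: float) -> Tuple[Vec2, Vec2]:
    """Unit vectors of the forward (z) and right (x) axes in the east-north frame."""
    forward = (math.cos(heading_rad), math.sin(heading_rad))
    right = (math.sin(heading_rad), -math.cos(heading_rad))
    return forward, right


def accumulate_track(origin: Vec2, increments: Iterable[Vec2], heading_rad: float) -> List[Vec2]:
    """Add per-frame visual increments (dx, dz) to the initial GPS position."""
    forward, right = heading_axes(heading_rad)
    east, north = origin
    track = []
    for dx, dz in increments:
        east += dz * forward[0] + dx * right[0]
        north += dz * forward[1] + dx * right[1]
        track.append((east, north))
    return track


def decompose_error(predicted: Vec2, reference: Vec2, heading_rad: float) -> Vec2:
    """Split the position error into lateral (x) and forward (z) components."""
    forward, right = heading_axes(heading_rad)
    d_east = predicted[0] - reference[0]
    d_north = predicted[1] - reference[1]
    err_z = d_east * forward[0] + d_north * forward[1]
    err_x = d_east * right[0] + d_north * right[1]
    return err_x, err_z


# Illustrative values: heading due north, ten frames of 5 cm forward motion.
heading = math.pi / 2
track = accumulate_track((100.0, 200.0), [(0.0, 0.05)] * 10, heading)
print(decompose_error(track[-1], (100.01, 200.48), heading))
```

In this made-up case the vision track ends about 2 cm ahead of and 1 cm to the left of the reference position.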

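3.2 Results and analysis

The tractor traveled in a straight line at constant speeds of 0.2, 0.5 and 0.8 m/s. The results of the trajectory prediction tests are shown in Figs. 6 and 7 and Table 1.

In Figs. 6a, 6c and 6e, the solid line is the tractor trajectory plotted from the GPS data, and the dashed line is the trajectory predicted by the trinocular vision method described above. The predicted trajectory largely agrees with the GPS-measured trajectory, but the accumulated prediction error becomes increasingly evident as the travel distance grows.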

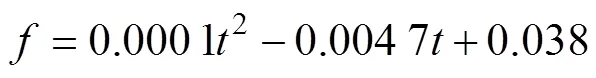

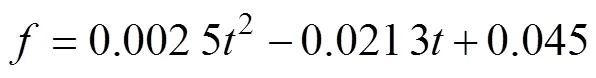

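Figs. 6b, 6d and 6f show that the error in the z direction is the main reason the deviation between the predicted and measured trajectories keeps growing. The z-direction error increases steadily while oscillating. From the test data, quadratic polynomial models of the cumulative z-direction error were established for each speed. For travel speeds of 0.2, 0.5 and 0.8 m/s, the models are given by Eqs. (11) to (13), with coefficients of determination R² of 0.93, 0.97 and 0.98, respectively. In Eqs. (11) to (13), the first derivative of the error with respect to time reflects how the cumulative z-direction error changes. Because the quadratic coefficients are all greater than 0, the first derivatives are all increasing functions, that is, the rate of change of the z-direction error increases linearly. The computed slopes of this linear increase are 0.000 2, 0.002 6 and 0.005 0, respectively, which shows that the higher the travel speed, the faster the z-direction error grows. The models can be used to estimate the error state at the current instant.

In these equations the independent variable is the travel time and the dependent variable is the cumulative error in the z direction.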
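The fitting procedure can be reproduced along the following lines. For a quadratic e(t) = a·t² + b·t + c the first derivative is 2a·t + b, a straight line whose slope is 2a. The sketch below fits a quadratic by ordinary least squares and reports R² and that slope; the data are synthetic placeholders rather than the paper's measurements, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: need at least 3 distinct times")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_quadratic(ts: Sequence[float], es: Sequence[float]) -> Tuple[float, float, float, float]:
    """Least-squares fit of e(t) = a*t^2 + b*t + c; returns (a, b, c, R^2)."""
    power_sums = [sum(t ** k for t in ts) for k in range(5)]
    rhs = [sum(e * t ** k for t, e in zip(ts, es)) for k in range(3)]
    normal = [[power_sums[i + j] for j in range(3)] for i in range(3)]
    c, b, a = solve_linear(normal, rhs)
    mean_e = sum(es) / len(es)
    ss_res = sum((e - (a * t * t + b * t + c)) ** 2 for t, e in zip(ts, es))
    ss_tot = sum((e - mean_e) ** 2 for e in es)
    return a, b, c, 1.0 - ss_res / ss_tot


# Synthetic cumulative-error samples (placeholders, not measured data).
times = [float(i) for i in range(10)]
errors = [0.001 * t * t + 0.002 * t + 0.01 for t in times]
a, b, c, r2 = fit_quadratic(times, errors)
print(f"a={a:.4f}, b={b:.4f}, c={c:.4f}, R^2={r2:.3f}, derivative slope={2 * a:.4f}")
```

With real measurements the oscillation of the error around the trend would lower R² below 1, as in the values reported above.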

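Fig. 7 shows that the error in the x direction changes very little at all speeds. The main reason is that the tractor traveled in a straight line during the tests, so the displacement in the x direction was small and the cumulative error was also small, within ±5 cm.

Fig. 7 Cumulative error in the x direction at different constant speeds

Table 1 Cumulative error data in the x and z directions

Table 1 quantifies the changes in cumulative error in the x and z directions at different speeds. The faster the travel speed, the faster the cumulative z-direction error rises. At 0.2 m/s it takes 46.5 s for the cumulative z-direction error to exceed 0.1 m; at 0.8 m/s this time shortens to 8.5 s. The x-direction error shows no obvious regularity. Nonlinear fitting of the data in Table 1 gives the relationship between the rate of change of the cumulative z-direction error (in m/s) and the travel speed, expressed as Eq. (14).

Eq. (14) can be used directly to compute the rate of change of the cumulative z-direction error at different speeds.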
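The reported threshold-crossing times can be turned into travel distances and mean error growth rates with simple arithmetic. The sketch below uses only the figures stated in the text (0.1 m threshold and the three crossing times); the mean rate it computes is an average up to the threshold, not the fitted relationship of Eq. (14).

```python
THRESHOLD_M = 0.1
# Travel speed (m/s) -> time (s) at which the z-direction error exceeded 0.1 m.
CROSSING_TIME_S = {0.2: 46.5, 0.5: 17.2, 0.8: 8.5}


def summarize(speed_m_s: float, crossing_time_s: float) -> tuple:
    """Return (distance travelled, mean error growth rate) up to the threshold."""
    distance_m = speed_m_s * crossing_time_s
    mean_rate_m_s = THRESHOLD_M / crossing_time_s
    return distance_m, mean_rate_m_s


for speed, t_cross in CROSSING_TIME_S.items():
    distance, rate = summarize(speed, t_cross)
    print(f"{speed:.1f} m/s: {distance:.1f} m travelled, mean error rate {rate * 1000:.2f} mm/s")
```

The distances reproduce the 9.3, 8.6 and 6.8 m quoted in the text, and the mean rate rises from roughly 2 mm/s to roughly 12 mm/s between the lowest and highest speed.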

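Among recent similar studies in China and abroad, reference [32] used particle filtering for 60 m of straight-line tracking with a modified agricultural robot, with a lateral deviation of 4±0.7 cm. Reference [33] reported vision navigation of a modified Maoyuan 250 tractor at 0.58 m/s, with a maximum error of 18 cm and a mean error of 4.8 cm. The main difference between these recent results on machine vision for agricultural machinery navigation and the present work lies in the research content. Those studies combined machine vision with other sensors for straight-line tracking, whereas this paper studies the deviation between the predicted and actual trajectories. Because this paper detects the motion trajectory with the vision sensor alone and then predicts on that basis, cumulative error is unavoidable.

The error arises mainly from the influence of natural light and the time delay of image processing. To reduce the cumulative error and obtain better test results, a higher-performance IPC and a camera with a faster shutter are recommended.

4 Conclusions

1) A trinocular vision system built by superimposing a long-baseline and a short-baseline binocular vision system, combined with a grey prediction algorithm, can predict the tractor's motion trajectory in the plane.

2) There is a cumulative error between the trajectory predicted by the vision system and the true trajectory. This error is mainly caused by the measurement error in the forward direction.

3) The higher the tractor's travel speed, the faster the cumulative forward-direction error grows. At 0.2 m/s, the time and travel distance at which the cumulative forward-direction error exceeded 0.1 m were 46.5 s and 9.3 m, respectively. At 0.5 m/s, the time and travel distance dropped to 17.2 s and 8.6 m. At 0.8 m/s, they dropped quickly to 8.5 s and 6.8 m.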

[1] Adam J L, Piotr M, Seweryn L, et al. Precision of tractor operations with soil cultivation implements using manual and automatic steering modes[J]. Biosystems Engineering, 2016, 145(5): 22-28.

[2] Gan-Mor S, Clark R L, Upchurch B L. Implement lateral position accuracy under RTK-GPS tractor guidance[J]. Computers and Electronics in Agriculture, 2007, 59(1/2): 31-38.

[3] Timo O, Juha B. Guidance system for agricultural tractor with four wheel steering[J]. IFAC Proceedings Volumes, 2013, 46(4): 124-129.

[4] Karimi D, Henry J, Mann D D. Effect of using GPS auto steer guidance systems on the eye-glance behavior and posture of tractor operators[J]. Journal of Agricultural Safety and Health, 2012, 18(4): 309-318.

[5] Liu Kenan, Wu Pute, Zhu Delan, et al. Autonomous navigation of solar energy canal feed sprinkler irrigation machine[J]. Transactions of the Chinese Society for Agricultural Machinery, 2016, 47(9): 141-146. (in Chinese with English abstract)

[6] Cordesses L, Cariou C, Berducat M. Combine harvester control using real time kinematic GPS[J]. Precision Agriculture, 2000, 2(2): 147-161.

[7] Jongmin C, Xiang Y, Liangliang Y, et al. Development of a laser scanner-based navigation system for a combine harvester[J]. Engineering in Agriculture, Environment and Food, 2014, 7(1): 7-13.

[8] Zhang Meina, Lü Xiaolan, Tao Jianping, et al. Design and experiment of automatic guidance control system in agricultural vehicle[J]. Transactions of the Chinese Society for Agricultural Machinery, 2016, 47(7): 42-47. (in Chinese with English abstract)

[9] Ji Changying, Zhou Jun. Current situation of navigation technologies for agricultural machinery[J]. Transactions of the Chinese Society for Agricultural Machinery, 2014, 45(9): 44-54. (in Chinese with English abstract)

[10] Zhang Man, Xiang Ming, Wei Shuang, et al. Design and implementation of a corn weeding-cultivating integrated navigation system based on GNSS and MV[J]. Transactions of the Chinese Society for Agricultural Machinery, 2015, 46(Supp.1): 8-14. (in Chinese with English abstract)

[11] Xie Bin, Li Jingjing, Lu Qianqian, et al. Simulation and experiment of virtual prototype braking system of combine harvester[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2014, 30(4): 18-24. (in Chinese with English abstract)

[12] Ren Shuguang, Xie Fangping, Wang Xiushan, et al. Gas-solid two-phase separation operation mechanism for 4LZ-0.8 rice combine harvester cleaning device[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2015, 31(12): 16-22. (in Chinese with English abstract)

[13] Jiao Youzhou, Tian Chaochao, He Chao, et al. Thermodynamic performance of waste heat collection for large combine harvester with different working fluids[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(5): 32-38. (in Chinese with English abstract)

[14] Wei Liguo, Zhang Xiaochao, Wang Fengzhu, et al. Design and experiment of harvest boundary online recognition system for rice and wheat combine harvester based on laser detection[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(Supp.1): 30-35. (in Chinese with English abstract)

[15] Yoshisada N, Katsuhiko T, Kentaro N, et al. A global positioning system guided automated rice transplanter[J]. IFAC Proceedings Volumes, 2013, 46(18): 41-46.

[16] Tamaki K, Nagasaka Y, Nishiwaki K, et al. A robot system for paddy field farming in Japan[J]. IFAC Proceedings Volumes, 2013, 46(18): 143-147.

[17] Hu Lian, Luo Xiwen, Zhang Zhigang, et al. Design of distributed navigation control system for rice transplanters based on controller area network[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2009, 25(12): 88-92. (in Chinese with English abstract)

[18] Hu Jingtao, Gao Lei, Bai Xiaoping, et al. Review of research on automatic guidance of agricultural vehicles[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2015, 31(10): 1-10. (in Chinese with English abstract)

[19] Song Yu, Liu Yongbo, Liu Lu, et al. Extraction method of navigation baseline of corn roots based on machine vision[J]. Transactions of the Chinese Society for Agricultural Machinery, 2017, 48(2): 38-44. (in Chinese with English abstract)

[20] Leemans V, Destain M F. Line cluster detection using a variant of the Hough transform for culture row localisation[J]. Image and Vision Computing, 2006, 24(5): 541-550.

[21] Gee C, Bossu J, Jones G, et al. Crop weed discrimination in perspective agronomic image[J]. Computers and Electronics in Agriculture, 2007, 58(1): 1-9.

[22] Jiang Guoquan, Ke Xing, Du Shangfeng, et al. Crop row detection based on machine vision[J]. Acta Optica Sinica, 2009, 29(4): 1015-1020. (in Chinese with English abstract)

[23] Han Y H, Wang Y M, Kang F. Navigation line detection based on support vector machine for automatic agriculture vehicle[C]// International Conference on Automatic Control and Artificial Intelligence (ACAI 2012), Xiamen, 2012: 1381-1385.

[24] English A, Ross P, Ball D, et al. Vision based guidance for robot navigation in agriculture[C]// 2014 IEEE International Conference on Robotics & Automation (ICRA), Hong Kong, 2014: 1693-1698.

[25] Cariou C, Lenain R, Thuilot B, et al. Motion planner and lateral-longitudinal controllers for autonomous maneuvers of a farm vehicle in headland[C]// 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, USA, 2009: 5782-5787.

[26] Lin Guichao, Zou Xiangjun, Zhang Qing, et al. Visual navigation for automatic guided vehicles based on active contour model[J]. Transactions of the Chinese Society for Agricultural Machinery, 2017, 48(2): 20-26. (in Chinese with English abstract)

[27] Xiang Ming, Wei Shuang, He Jie, et al. Development of agricultural implement visual navigation terminal based on DSP and MCU[J]. Transactions of the Chinese Society for Agricultural Machinery, 2015, 46(Supp.1): 21-26. (in Chinese with English abstract)

[28] Ren Junru. The Research and Design of Improved Predictive PID Controller[D]. Wuhan: Wuhan University of Science and Technology, 2011. (in Chinese with English abstract)

[29] Yu Tianming, Zheng Lei, Li Song. Gray prediction PID control technology of automated mechanical transmission clutch[J]. Transactions of the Chinese Society for Agricultural Machinery, 2011, 42(8): 1-6. (in Chinese with English abstract)

[30] Point Grey Research, Inc. Triclops software kit Version 3.1 user’s guide and command reference [EB/OL]. [2018-08-25]. https://www.ptgrey.com/support/downloads

[31] Chen Hanjing. Research and Application of SIFT Feature Point Technology[D]. Nanjing: Nanjing University of Science and Technology, 2017. (in Chinese with English abstract)

[32] Hiremath S, Evert F K V, Braak C T, et al. Image-based particle filtering for navigation in a semi-structured agricultural environment[J]. Biosystems Engineering, 2014, 121(5): 85-95.

[33] Shen Wenlong, Xue Jinlin, Wang Dongming, et al. Visual navigation control system of agricultural vehicle[J]. Journal of Chinese Agricultural Mechanization, 2016, 37(6): 251-254. (in Chinese with English abstract)

Traveling trajectory prediction method and experiment of autonomous navigation tractor based on trinocular vision

Tian Guangzhao1, Gu Baoxing1※, Irshad Ali Mari2, Zhou Jun1, Wang Haiqing1

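(1. College of Engineering, Nanjing Agricultural University, Nanjing 210031; 2. Khairpur College of Engineering and Technology, Sindh Agriculture University, Pakistan, Khairpur 66020)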

To enable autonomous navigation tractors to work steadily and continuously without a satellite positioning system, a traveling trajectory prediction system and method based on trinocular vision were designed in this paper. The system was composed of a trinocular vision camera, an IEEE 1394 acquisition card and an embedded industrial personal computer (IPC). The right and left sub-cameras constituted a binocular vision system with a long baseline. The right and middle sub-cameras constituted another binocular vision system with a short baseline. To obtain more precise measurement results, the two binocular vision systems worked independently in a time-sharing manner. The motion vectors of the tractor in the horizontal plane were then calculated from the coordinate changes of feature points in the tractor's working environment. Finally, the motion vectors of the tractor were predicted with a grey model, and error models in the forward direction were established at different velocities. Comparative experiments were carried out with a modified Dongfanghong SG250 tractor at speeds of 0.2, 0.5 and 0.8 m/s. During the experiments, the IPC was used to collect RTK-GPS data and predict movement tracks. The RTK-GPS used in the experiments was a high-precision measuring device with a measuring precision of 1-2 cm. Therefore, the RTK-GPS location data were taken as the standard against which the data from the trinocular vision system were compared. The experimental results showed that the method could predict the trajectory of the tractor in the plane, with an inevitable error caused mainly by the visual measurement error in the forward direction (z direction). When the tractor travelled at 0.2 m/s, the time and distance at which the error in the forward direction exceeded 0.1 m were 46.5 s and 9.3 m, respectively. When the speed increased to 0.5 m/s, the time and distance decreased to 17.2 s and 8.6 m, respectively. When the speed increased to 0.8 m/s, the time and distance quickly decreased to 8.5 s and 6.8 m, respectively. The higher the traveling speed of the tractor, the faster the error in the forward direction increased. The relationship between the error in the forward direction and traveling time was then obtained by nonlinear data fitting. In addition, the experimental results showed that the trend of the lateral error (x direction), perpendicular to the forward direction, was not regular. When the speed was 0.2 m/s, the average error was 0.002 5 m with a standard deviation (STD) of 0.003 9. When the speed increased to 0.5 m/s and 0.8 m/s, the average lateral error was 0.008 2 m with an STD of 0.012 4 and 0.003 6 m with an STD of 0.006 4, respectively. The lateral error was therefore very small and almost invariable, and the errors of the trinocular vision system were mainly caused by the errors in the forward direction. The root causes of the error were natural light and the time delay of image processing. According to the experimental data and results, the system and method proposed in this paper could be used to measure and predict the traveling trajectory of a tractor in a dry agricultural environment for a short period after a sudden loss of the satellite signal. The measured and predicted data could provide temporary help for the operation of autonomous tractors.

tractor; automatic guidance; machine vision; trajectory prediction; gray model

doi: 10.11975/j.issn.1002-6819.2018.19.005

CLC number: S219.1

Document code: A

Article ID: 1002-6819(2018)-19-0040-06

Received: 2018-06-13

Revised: 2018-08-27

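Fundamental Research Funds for the Central Universities (KYGX201701); National Natural Science Foundation of China (31401291); Natural Science Foundation of Jiangsu Province (BK20140729)

Tian Guangzhao, lecturer, Ph.D., mainly engaged in research on agricultural machinery navigation and control. Email: tgz@njau.edu.cn

Gu Baoxing, lecturer, Ph.D., mainly engaged in research on intelligent agricultural equipment. Email: gbx@njau.edu.cn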

Tian Guangzhao, Gu Baoxing, Irshad Ali Mari, Zhou Jun, Wang Haiqing. Traveling trajectory prediction method and experiment of autonomous navigation tractor based on trinocular vision [J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(19): 40-45. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2018.19.005 http://www.tcsae.org