Facial expression recognition with contextualized histograms

2015-04-22

YUE Lei(岳雷), SHEN Ting-zhi(沈庭芝)1, DU Bu-zhi(杜部致)1, ZHANG Chao(张超)1, ZHAO Shan-yuan(赵三元)2

(1. School of Information and Electronics, Beijing Institute of Technology, Beijing 100081, China; 2. School of Computer Science and Technology, Beijing Institute of Technology, Beijing 100081, China)


A new algorithm that takes the spatial context of local features into account by means of contextualized histograms was proposed for facial expression recognition. The contextualized histograms were built on two widely used descriptors: the local binary pattern (LBP) and the Weber local descriptor (WLD). The LBP and WLD feature histograms were extracted separately from each facial image, and the contextualized histograms generated from them were used as feature vectors to feed the classifier. In addition, to improve the recognition rate, the face was divided into sub-blocks and each sub-block was assigned a weight according to its contribution to the intensity of facial expressions. With the support vector machine (SVM) as the classifier, experimental results on the 2D texture images from the 3D-BUFE dataset indicated that contextualized histograms improved facial expression recognition performance when local features were employed.

facial expression recognition; local binary pattern; Weber local descriptor; spatial context; contextualized histogram

Facial expressions play a key role in non-verbal face-to-face communication and have a paramount impact on human interaction: about 55 percent of the effectiveness of a conversation relies on facial expressions, 38 percent is conveyed by voice intonation, and 7 percent by the spoken words[1]. Therefore, automatic facial expression recognition is essential to unleash the potential of many applications such as intelligent human-computer interaction (HCI) and social analysis of human behavior.

During the past decades, many visual features have been proposed to characterize facial expressions[2-4]. In particular, local image descriptors such as the local binary pattern (LBP)[5] and the Weber local descriptor (WLD)[6] have demonstrated great effectiveness in facial expression recognition[7]. Ojala et al. initially proposed LBP to characterize texture units by exploring the spatial context around a given pixel. Inspired by Weber's Law, Chen et al. proposed WLD, which characterizes local features with two components, namely differential excitation and orientation, to handle tasks such as texture classification, action recognition, and face recognition. However, these two descriptors only explore the first-order context at the pixel intensity level, rather than at the pattern level. As a result, the contextual information among local patterns or descriptors does not contribute to the discrimination of the extracted descriptors, and recognition performance could be compromised.

In this paper, we explored the spatial context among local descriptors during the formation of histograms with the recently introduced contextualized histogram[8] technique. That is, instead of simply calculating the distribution of each local pattern for a given image, each pattern was split into sub-patterns at a finer granularity to form a higher dimensional and more discriminative histogram. In order to further improve recognition performance, a weighted partition method was investigated.

1 Local features

1.1 LBP

LBP was initially proposed by Ojala et al.[5] to characterize texture units by exploring the spatial context around a given pixel. That is, each pixel is converted into an LBP by referring to its neighborhood, and the binary patterns of a given image are formed into a histogram which describes the distribution of those LBPs. In detail, the operator labels the pixels of an image by thresholding the 3×3 neighborhood of each pixel with the center value and considering the 8-bit result as a binary number. A 256-bin histogram of the LBP labels computed over a region is then used as a texture descriptor. Due to its effectiveness as well as its simplicity, LBP has been widely used in many pattern classification problems such as face recognition and facial expression recognition.

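Formally, given a pixel at $(x_c,y_c)$, the resulting LBP can be expressed in decimal form as

$$\mathrm{LBP}_{P,R}(x_c,y_c)=\sum_{p=0}^{P-1}s(i_p-i_c)\,2^p \tag{1}$$

where $i_c$ and $i_p$ are the gray-level values of the central pixel and of one of the $P$ neighbor pixels at radius $R$, respectively. The function $s(x)$ is defined as

$$s(x)=\begin{cases}1, & x\ge 0\\ 0, & x<0\end{cases} \tag{2}$$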

In addition, the dimensionality of the operator was further reduced by introducing the notion of a uniform LBP, which contains at most two bitwise transitions from 0 to 1 or vice versa when the binary string is considered circular. As a result, each image can be represented with a 59-dimensional histogram where each bin represents the distribution of one LBP among the image.

Fig.1 Illustration of the LBP operator
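As an illustration of the operator described in this subsection, the following is a minimal NumPy sketch, not the authors' implementation. It thresholds the 8 neighbors of the 3×3 window against the center, packs the results into an 8-bit code, and maps the codes to the 59-bin uniform histogram. The neighbor ordering in `OFFSETS`, the skipping of border pixels, and all function names are assumptions made here for the example.

```python
import numpy as np

# Offsets (dy, dx) of the 8 neighbours in a 3x3 window, in circular order.
# The starting neighbour and the direction are arbitrary choices of this sketch.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(img):
    """Return the 8-bit LBP code of every interior pixel (borders are skipped)."""
    img = np.asarray(img, dtype=np.int64)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for p, (dy, dx) in enumerate(OFFSETS):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # s(i_p - i_c) * 2^p: bit p is set when the neighbour is >= the center
        codes += (neighbour >= center).astype(np.int64) << p
    return codes


def transitions(code):
    """Number of 0/1 changes in the circular 8-bit string of `code`."""
    bits = [(code >> p) & 1 for p in range(8)]
    return sum(bits[p] != bits[(p + 1) % 8] for p in range(8))


# The 58 uniform codes get one bin each; all remaining codes share the last bin.
UNIFORM = [c for c in range(256) if transitions(c) <= 2]
LOOKUP = np.full(256, len(UNIFORM), dtype=np.int64)
LOOKUP[UNIFORM] = np.arange(len(UNIFORM))


def uniform_lbp_histogram(img):
    """59-bin histogram of uniform LBP labels over the whole image."""
    labels = LOOKUP[lbp_codes(img)]
    return np.bincount(labels.ravel(), minlength=len(UNIFORM) + 1)
```

Calling `uniform_lbp_histogram(image)` on a 2D gray-level array returns a vector of length 59; the array `LOOKUP[lbp_codes(image)]` is the per-pixel label map from which such a histogram is counted.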

1.2 WLD

WLD[6] is based on the fact that human perception of a pattern depends not only on the change of a stimulus (such as sound or lighting) but also on the original intensity of the stimulus. Specifically, WLD consists of two components, differential excitation and orientation, computed for each reference pixel with respect to its neighbors (i.e. for the central pixel of a 3×3 neighborhood). The differential excitation component is a function of the ratio between two terms: the relative intensity difference between a reference pixel and its neighbors, and the intensity of the reference pixel. The orientation component is the gradient orientation of the reference pixel.

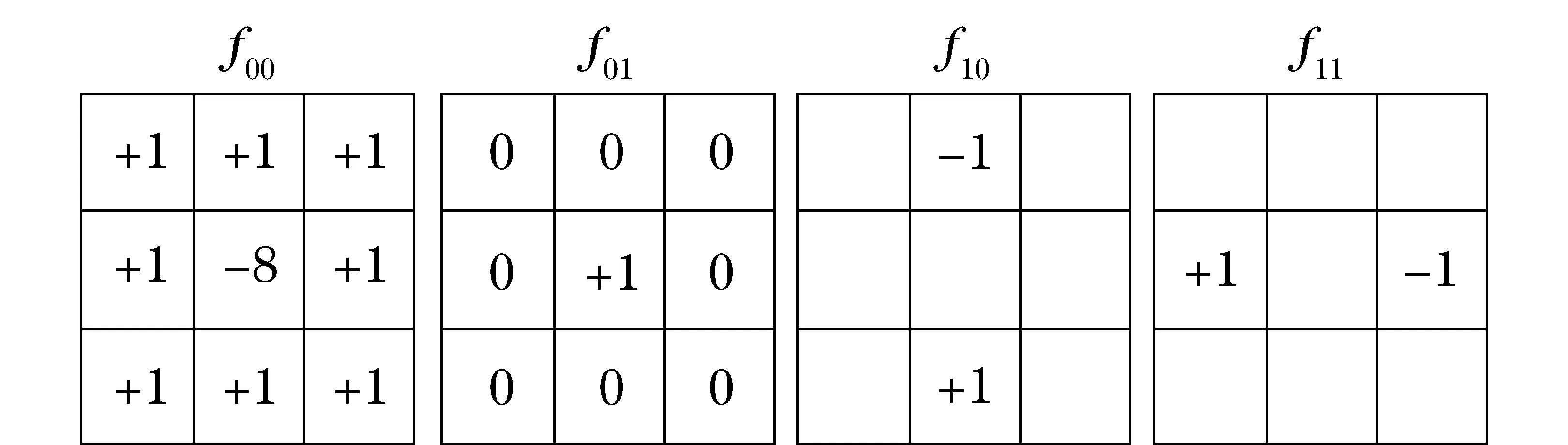

Specifically, the differential excitation ξ(xc) of a reference pixel xc is computed in three steps. First, the differences between the reference pixel and its neighbors are calculated after the input image is filtered with f00 as shown in Fig.2.


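$$v_s^{00}=\sum_{i=0}^{p-1}\Delta x_i=\sum_{i=0}^{p-1}(x_i-x_c) \tag{3}$$

where $x_i$ $(i=0,1,\ldots,p-1)$ denotes the $i$th neighbor of $x_c$ and $p$ is the number of neighbors. Second, the ratio of these differences to the intensity of the reference pixel, which is the output $v_s^{01}=x_c$ of the filter f01, is computed. Third, the arctangent function is applied to this ratio, following Ref.[6]:

$$\xi(x_c)=\arctan\left[\frac{v_s^{00}}{v_s^{01}}\right]=\arctan\left[\sum_{i=0}^{p-1}\frac{x_i-x_c}{x_c}\right] \tag{4}$$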

Fig.2 Masks of the four filters: f00, f01, f10 and f11

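The orientation component of WLD, i.e. the gradient orientation, is computed as

$$\theta(x_c)=\arctan\left(\frac{v_s^{11}}{v_s^{10}}\right) \tag{5}$$

where $v_s^{10}$ and $v_s^{11}$ are the outputs of the filters f10 and f11, respectively.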

As a result, each image can be represented with a WLD histogram built from the 2D histogram of differential excitations and dominant orientations. The dimensionality of the 2D histogram is T×C, where T is the number of dominant orientations and C is the number of cells. Each cell is further evenly divided into M×S segments, where M is the number of sub-histograms for each orientation and S is the number of bins of each sub-histogram. That is, the dimension of the WLD histogram is T×S×M.
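The two WLD components and the resulting T×S×M histogram can be sketched as follows. This is a simplified illustration under stated assumptions rather than the descriptor exactly as defined in Ref.[6]: the differential excitation is quantized uniformly into M·S levels, the orientation is taken from vertical and horizontal neighbor differences with `arctan2` and quantized into T levels, border pixels are skipped, and a small `eps` (introduced here) avoids division by zero. The function name and defaults (T=8, M=6, S=4, the values reported in the experimental settings) are choices of this sketch.

```python
import numpy as np


def wld_histogram(img, T=8, M=6, S=4, eps=1e-6):
    """Simplified WLD histogram of length T*M*S for a 2D gray-level image."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    center = img[1:-1, 1:-1]

    # Differential excitation: arctan of sum_i (x_i - x_c) / x_c over the
    # 8 neighbours of the 3x3 window.
    neigh_sum = sum(img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                    if (dy, dx) != (0, 0))
    xi = np.arctan((neigh_sum - 8.0 * center) / (center + eps))  # in (-pi/2, pi/2)

    # Gradient orientation from vertical and horizontal neighbour differences.
    v10 = img[2:, 1:-1] - img[:-2, 1:-1]    # pixel below minus pixel above
    v11 = img[1:-1, :-2] - img[1:-1, 2:]    # pixel left minus pixel right
    theta = np.arctan2(v11, v10) + np.pi    # shifted into [0, 2*pi]

    # Uniform quantization (an assumption of this sketch).
    n_xi = M * S
    t = np.minimum((theta / (2 * np.pi) * T).astype(int), T - 1)
    k = np.minimum(((xi + np.pi / 2) / np.pi * n_xi).astype(int), n_xi - 1)

    # Joint index: orientation level t, excitation level k.
    return np.bincount((t * n_xi + k).ravel(), minlength=T * n_xi)
```

With the default parameters the returned vector has 8×6×4 = 192 entries, and its entries sum to the number of interior pixels.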

2 Histogram contextualization

Assume that an image is represented with a K-bin histogram h={hi} (i=1,2,…,K), where h is a feature histogram and K is its number of bins. Histogram contextualization constructs a so-called contextualized histogram hs={hi(sj)} (i=1,2,…,K; j=1,2,…,M) based on a set of predefined local contextual structures denoted as {sj} (j=1,2,…,M), where hi(sj) is the number of pixels which take the ith local feature value and are surrounded by the local contextual structure sj. That is, for each given local feature pattern (e.g. LBP), there are M sub-patterns in terms of its spatial context. As a result, the contextualized histogram is of dimension M×K.

For a local contextual structure with only two pixels, there are two types of homogeneities, namely homogeneous and inhomogeneous. For a ternary local contextual structure, there are 5 types of local homogeneities. Therefore, the ternary local contextual structures are able to encode more complicated and informative local contextual information. Feng et al.[8] defined 30 ternary contextual structures by combining 5 types of homogeneities and 6 shapes (Fig.3). Note that the order shown in the figure means the number of pixels belonging to the same histogram bin, and different colors represent different histogram bins. As a result, the dimension of the contextualized histogram is M×K=30×K.

Fig.3 Histogram contextualization with 30 ternary local contextual structures combining 5 homogeneities and 6 different shapes
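To make the construction concrete, the sketch below implements only the simplest case mentioned above: a two-pixel contextual structure with the two homogeneity types (homogeneous and inhomogeneous), using a single horizontal shape. It is not the 30-structure ternary scheme of Feng et al.[8], whose shapes are given in Fig.3 and are not reproduced here. The input `labels` is assumed to be a 2D array holding the histogram bin index (0 to K-1) of every pixel; the function name and the choice of the right-hand neighbor are hypothetical.

```python
import numpy as np


def contextualized_histogram_pairs(labels, K):
    """Contextualized histogram for one two-pixel structure shape.

    Returns a vector of length 2*K: entries [0, K) count reference pixels whose
    right-hand neighbour falls in the same bin (homogeneous), entries [K, 2K)
    count those whose neighbour falls in a different bin (inhomogeneous).
    """
    labels = np.asarray(labels)
    ref = labels[:, :-1]      # reference pixels (last column has no right neighbour)
    right = labels[:, 1:]     # their right-hand neighbours
    structure = (ref != right).astype(np.int64)   # 0 = homogeneous, 1 = inhomogeneous
    return np.bincount((structure * K + ref).ravel(), minlength=2 * K)
```

Summing the two halves of the result, `hs[:K] + hs[K:]`, gives back the ordinary K-bin histogram of the reference pixels, which shows that contextualization refines each bin into sub-bins rather than replacing it.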

3 Experiments

We conducted experiments in four steps with the 2D texture images from the 3D-BUFE dataset: ① extracted the local feature patterns of LBP and WLD separately from the original expression images; ② divided the images into sub-blocks; ③ assigned weights to each sub-block; ④ applied the contextualized histogram to the feature vectors from the third step. We obtained expression classification results from all four trials and compare the recognition rates in this section.

3.1 Experimental settings

We used the 2D texture apex expression images from the widely used 3D-BUFE dataset[10]. One hundred subjects participated in the face scans, including undergraduates, graduates and faculty from various departments of Binghamton University (e.g. Psychology, Arts, and Engineering) and State University of New York (e.g. Computer Science, Electrical Engineering, and Mechanical Engineering). The majority of participants were undergraduates from the Psychology Department. The resulting database consists of about 60% female and 40% male subjects with a variety of ethnic/racial ancestries, including White, Black, East-Asian, Middle-East Asian, Hispanic Latino, and others. Each subject performed seven expressions in front of the 3D face scanner. With the exception of the neutral expression, each of the six prototypic expressions (i.e. anger, disgust, fear, happiness, sadness, and surprise) includes four levels of intensity. Therefore, there are 25 3D expression models for each subject. As a result, the dataset contains 2 500 3D facial expression models in total, and each model has its corresponding 2D texture image. Seven sample expressions of a subject are shown in Fig.4. We randomly picked 80 subjects as the training set and the other 20 subjects as the testing set.
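The subject-wise split and the model counts stated above can be checked with a few lines of standard-library Python. This is only a sketch: the subject identifiers (0 to 99), the seed and the function name are hypothetical, and the text does not say which random procedure was actually used.

```python
import random


def split_subjects(n_subjects=100, n_train=80, seed=0):
    """Randomly split subject ids so that no subject appears in both sets."""
    ids = list(range(n_subjects))
    random.Random(seed).shuffle(ids)
    return sorted(ids[:n_train]), sorted(ids[n_train:])


# One neutral model plus six prototypic expressions at four intensity levels.
MODELS_PER_SUBJECT = 1 + 6 * 4
TOTAL_MODELS = 100 * MODELS_PER_SUBJECT

train_ids, test_ids = split_subjects()
```

Splitting by subject rather than by image keeps every image of a given person on one side of the split, so the classifier is always tested on unseen faces.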

Fig.4 Samples of seven expressions (from left to right): anger, disgust, fear, happiness, neutral, sadness, and surprise

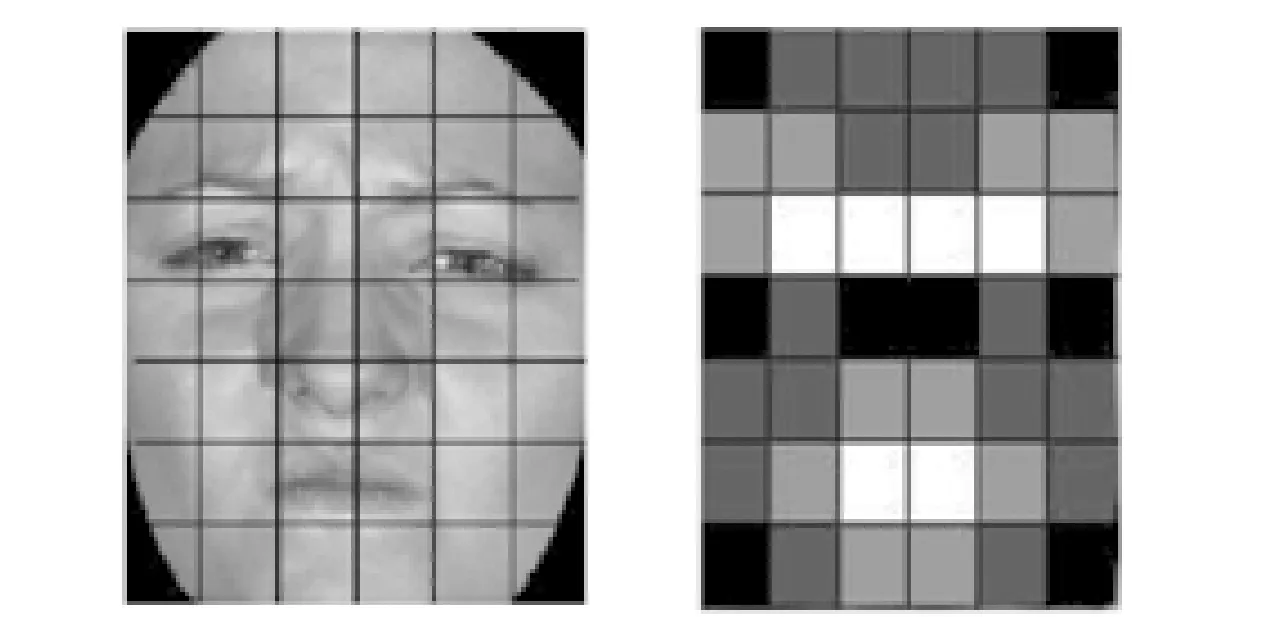

Fig.5 Illustration of weighted sub-block based representation

In order to take the global spatial context into account, we also investigated the impact of the sub-block based representation with and without weights. As shown in Fig.5, each face image is partitioned into 42 (6×7) sub-blocks, and squares colored black, dark gray, light gray and white indicate weights of 0.0, 1.0, 2.0 and 4.0, respectively[7]. As a result, the feature vector of each image has dimension 42×K, where K is the dimension of the histogram derived from a local descriptor (e.g. 59 for LBP or 192 for WLD, where T=8, S=4, and M=6). The values of T, S and M were chosen by trying different combinations to reach a compromise between computational efficiency and recognition rate.
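A weighted sub-block representation of this kind might be sketched as below. Several points are assumptions of the example and not taken from the paper: the 6×7 grid is read as 7 rows by 6 columns, each block's histogram is simply multiplied by its weight before concatenation, and the weight map must be supplied by the caller because the layout of Fig.5 is not reproduced in the text. `labels` is again a 2D array of per-pixel bin indices in the range 0 to K-1.

```python
import numpy as np


def weighted_block_feature(labels, K, weights):
    """Concatenate per-block K-bin histograms, each scaled by its block weight.

    `weights` is a (rows, cols) array; the image is cut into rows*cols blocks
    of near-equal size, scanned row by row.
    """
    labels = np.asarray(labels)
    weights = np.asarray(weights, dtype=np.float64)
    rows, cols = weights.shape
    row_edges = np.linspace(0, labels.shape[0], rows + 1).astype(int)
    col_edges = np.linspace(0, labels.shape[1], cols + 1).astype(int)

    parts = []
    for r in range(rows):
        for c in range(cols):
            block = labels[row_edges[r]:row_edges[r + 1],
                           col_edges[c]:col_edges[c + 1]]
            hist = np.bincount(block.ravel(), minlength=K).astype(np.float64)
            parts.append(weights[r, c] * hist)   # weight 0.0 silences a block
    return np.concatenate(parts)
```

For a 7×6 weight map and K=59 the result has 42×59 = 2 478 entries; with K=192 it has 42×192 = 8 064.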

Due to the sub-block representation and histogram contextualization, the feature dimension is quite high. For example, the feature dimension of a contextualized WLD histogram is 241 920 (=192×42×30). Therefore, we performed dimension reduction with principal component analysis (PCA). We employed the widely used support vector machine (SVM)[11] as the classifier. Although many new kernels are being proposed by researchers, the most widely used kernel functions are the linear function, polynomial function, radial basis function (RBF), and sigmoid function. In this paper, we used the RBF kernel function to carry out the experiments.
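The dimension arithmetic, the PCA projection and the RBF kernel can be illustrated with plain NumPy. This is a minimal sketch under assumptions: PCA is computed through a singular value decomposition of the centered data, the kernel is the standard form exp(-γ‖x-y‖²), and the function names and the value of `gamma` are chosen here for illustration. The paper's actual classifier is the SVM of Ref.[11]; its training step is not reproduced.

```python
import numpy as np

# 192 WLD bins x 42 sub-blocks x 30 contextual structures
CONTEXTUALIZED_WLD_DIM = 192 * 42 * 30


def pca_fit(X, n_components):
    """Return the mean and the leading principal directions of the rows of X."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]   # at most min(n_samples, n_features) rows


def pca_transform(X, mean, components):
    """Project the rows of X onto the principal directions."""
    return (X - mean) @ components.T


def rbf_kernel(A, B, gamma):
    """Kernel matrix with entries exp(-gamma * ||a - b||^2)."""
    sq = (A ** 2).sum(axis=1)[:, None] + (B ** 2).sum(axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))   # clip tiny negative round-off
```

In use, the projection would be fitted on the training features only, for example `mean, comps = pca_fit(X_train, n_components)`, and then applied unchanged to the test features with `pca_transform(X_test, mean, comps)` before the kernel is evaluated.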

3.2 Experimental results and discussions

Experiments were conducted in four steps to evaluate the performance of the contextualized histograms derived from local descriptors. The four LBP-based histograms are the global histogram (LBP), the sub-block based representation (LBP with sub-block), the weighted sub-block based representation (LBP with weighted sub-block), and the contextualized histogram over the weighted sub-block based representation (LBP with weighted sub-block and context). As shown in Tab.1, the contextualized histogram achieved the highest recognition accuracy, indicating that local contextual structures were helpful in characterizing spatial context. The sub-block based representation improved recognition performance significantly, which demonstrated that the use of global spatial context could improve the recognition rate as well. Assigning different weights to different sub-blocks was also helpful for facial expression recognition. The best performance for the contextualized histogram was obtained when the reduced feature dimension was 200, after several values were tried.

Tab.1 Comparison of recognition accuracy among LBP based histograms

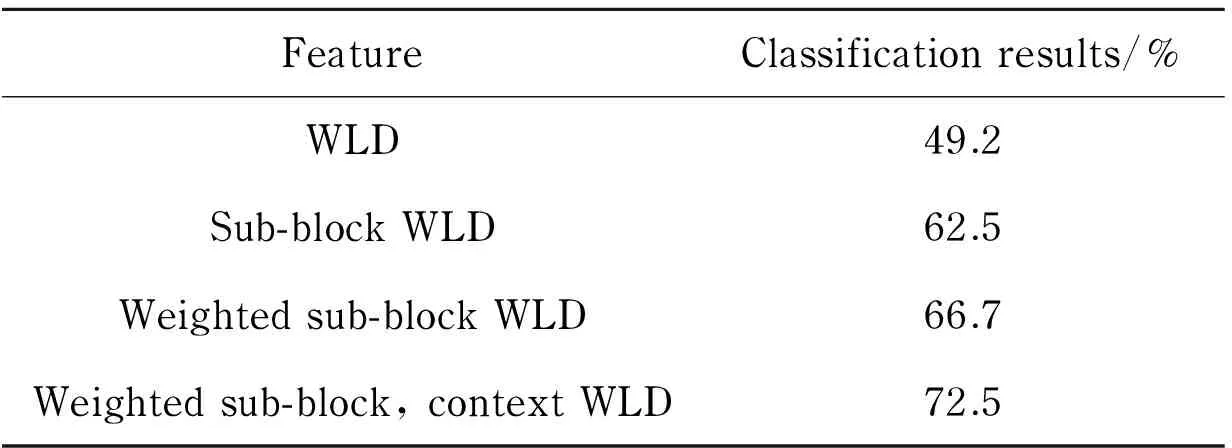

A similar result was observed for the WLD-based histograms, as shown in Tab.2. Classification based on the original WLD histograms did not show competitive recognition capability. However, when we first divided the face into sub-blocks and then assigned different weights to the corresponding sub-blocks, the recognition rate improved significantly. With WLD features, the best results were obtained when the contextualized histogram was applied to the vectors generated from the weighted sub-blocks of the face.

Tab.2 Comparison of recognition accuracy among WLD based histograms

WLD outperformed LBP when the histogram was obtained globally (without partitioning an image into sub-blocks). This could be explained by the superiority of WLD as a descriptor. However, in the other settings LBP slightly outperformed WLD. This could be explained by the high dimensionality of the WLD-based histograms, since the curse of dimensionality remains an imperfectly solved problem. Therefore, extracting the useful information from the very long WLD feature vectors more efficiently may help to further improve the recognition rate.

4 Conclusion

In this paper we presented a solution to contextualize the histograms derived from local features such as LBP and WLD and to utilize them for facial expression recognition. Since local contextual structures were taken into account when a histogram was derived from local features, the contextualized histogram was more discriminative for facial expression recognition. Experimental results on the 2D texture images from the 3D-BUFE dataset demonstrated the effectiveness of the proposed solution: the recognition rate increased when weighted sub-blocks and contextualized histograms were added to the original local feature patterns. In the future, we will further investigate adaptive weighting strategies at both the sub-block level and the local contextual structure level, and try to introduce better dimension reduction algorithms to improve the performance.

[1] Mehrabian A. Communication without words[J]. Psychology Today, 1968, 2(4):53-56.

[2] Fasel B, Luettin J. Automatic facial expression analysis: a survey[J]. Pattern Recognition, 2003, 36(1):259-275.

[3] Krinidis S, Buciu I, Pitas I. Facial expression analysis and synthesis: a survey[C]∥The 10th International Conference on Human-Computer Interaction, Crete, Greece, 2003.

[4] Pantic M, Rothkrantz L J. Automatic analysis of facial expressions: the state of the art[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2000, 22(12):1424-1445.

[5] Ojala T, Pietikäinen M, Harwood D. A comparative study of texture measures with classification based on featured distributions[J]. Pattern Recognition, 1996, 29(1):51-59.

[6] Chen J, Shan S, He C, et al. WLD: a robust local image descriptor[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2010, 32(9):1705-1720.

[7] Shan C, Gong S, McOwan P. Facial expression recognition based on local binary patterns: A comprehensive study[J]. Image and Vision Computing, 2009, 27(6):803-816.

[8] Feng J, Ni B, Xu D, et al. Histogram contextualization[J]. IEEE Transactions on Image Processing, 2012, 21(2):778-788.

[9] Ojala T, Pietikäinen M, Mäenpää T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2002, 24(7):971-987.

[10] Yin L, Wei X, Sun Y, et al. A 3D facial expression database for facial behavior research[C]∥International Conference on Automatic Face and Gesture Recognition, Southampton, UK, 2006.

[11] Chang C C, Lin C J. LIBSVM: a library for support vector machines[J]. ACM Transactions on Intelligent Systems and Technology, 2011, 2(3):27.

(Edited by Cai Jianying)

DOI: 10.15918/j.jbit1004-0579.201524.0317

TP 37 Document code: A Article ID: 1004-0579(2015)03-0392-06

Received 2014-02-20

Supported by the National Natural Science Foundation of China(60772066)

E-mail: napoylei@163.com
