Visuospatial properties of caudal area 7b in Macaca fascicularis

2013-09-20

Hui-Hui JIANG, Ying-Zhou HU, Jian-Hong WANG, Yuan-Ye MA, Xin-Tian HU

1. State Key Laboratory of Brain and Cognitive Sciences, Kunming Institute of Zoology, the Chinese Academy of Sciences, Kunming, Yunnan 650223, China;

2. University of the Chinese Academy of Sciences, Beijing 100049, China;

3. State Key Laboratory of Brain and Cognitive Sciences, Institute of Biophysics, Chinese Academy of Sciences, Beijing 100101, China;

4. Kunming Primate Research Center, Kunming Institute of Zoology, the Chinese Academy of Sciences, Kunming 650223, China

When we attempt to grasp or reach for an object, our motor system requires that our sensory system provide information about the location of the target. However, different sensory stimuli are encoded in different reference frames: visual stimuli are captured by the retina, auditory stimuli are received within the head, and tactile stimuli arise from the body. Movement must be directed towards the target depending on where it is positioned with respect to the body part. To proceed from sensation to movement, our brain needs to integrate information from the different senses and transform it into a non-retinocentric frame of reference, such as a body-part-centered frame of reference. The purpose of this study was to investigate whether caudal area 7b is part of this transformation system.

To construct a body-part-centered visual receptive field, retinal signals must be integrated with other signals. For example, by combining retinocentric visual signals with eye position signals, the head-centered visual receptive field of the cells can be constructed (Graziano et al, 1997). The arm-centered visual receptive field of cells requires integration of not only retinocentric visual and eye position signals but also head and arm position signals. In addition to visual signals, eye position, head position, and vestibular signals converge on neurons in area 7a (Andersen et al, 1985; Andersen et al, 1990b; Andersen et al, 1997). These signals are combined in a systematic fashion using the “gain field” mechanism, in which other signals modify the retinocentric visual signal.

Anatomically, area 7a projects to area 7b and the ventral intraparietal cortex (VIP), which in turn connects to the ventral premotor cortex (PMv). Although other sensory signals modulate retinocentric visual signals, the visual receptive fields in area 7a are still eye-centered (Andersen et al, 1985). In the PMv, most neurons are bimodal cells, i.e., they respond to both tactile and visual stimuli (Fogassi et al, 1992; Fogassi et al, 1996; Gentilucci et al, 1983; Graziano et al, 1994; Graziano et al, 1997). Most visual receptive fields encode nearby space in a body-part-centered reference frame (Fogassi et al, 1992; Fogassi et al, 1996; Gentilucci et al, 1983; Graziano et al, 1994; Graziano et al, 1997). Additionally, most neurons in the VIP are bimodal (Colby et al, 1993; Duhamel et al, 1998) and employ a hybrid reference frame (Duhamel et al, 1997).

On the basis of previous studies, it is known that area 7b receives visual and somatosensory inputs from cortical and subcortical brain structures (Andersen et al, 1990a; Cavada & Goldman-Rakic, 1989a) and is also the target of cerebellar output, which suggests that it may be important in movement (Clower et al, 2001; Clower et al, 2005). Damage to area 7b causes spatial deficits, such as neglect of contralateral peripersonal space (Matelli et al, 1984), which suggests that this area is involved in spatial information processing. Area 7b contains cells with visual and tactile properties that resemble those of PMv cells (Dong et al, 1994; Leinonen et al, 1979). These results suggest that area 7b may be one component of the receptive field transformation system. Do neurons in this area have the characteristics of the transformation system? Does the area employ a retinocentric reference frame similar to area 7a, a hybrid system similar to VIP, or some other reference frame? To date, no quantitative studies on these issues have been conducted.

In the present study, we first qualitatively tested the sensory properties of neurons in the caudal portion of area 7b. If a neuron showed a clear visual response, the reference frame of the neuron was tested quantitatively. We plotted the visual receptive fields of neurons when the eyes were held still. The receptive fields were then replotted when the eyes were in a new position. In this way, we could determine whether the visual receptive fields were centered on the eyes.

MATERIALS AND METHODS

Ethics statement

All animal care and surgical procedures were performed in accordance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals and approved by the Institutional Animal Care and Use Committee at the Kunming Institute of Zoology.

Two adult male Macaca fascicularis (4.2–6.0 kg) were used in the present research. They were single-housed under standard conditions (a 12-h light/dark cycle with lights on from 07:00 to 19:00, humidity at 60%, and temperature at 21 ± 2 °C) in the animal colony. Their body weights were closely monitored during the experiments.

Surgical procedures

All surgeries were performed under strict aseptic conditions and, unless otherwise indicated, under ketamine hydrochloride (10 mg/kg) and acepromazine (0.4 mg/kg) anesthesia. Under deep anesthesia, one stainless steel recording chamber for chronic single-unit recording, 2.5 cm in diameter, was embedded in dental acrylic over the right parietal lobe of each monkey. A steel bolt for holding the monkey’s head stationary during experiments was also embedded in the acrylic. During the same surgery session, one scleral search coil (Fuchs & Robinson, 1966) was implanted to record eye position signals.

Post-surgical recovery lasted approximately one week. Both monkeys were given two additional weeks before behavioral training started to allow the skull to grow tightly around the skull screws.

After the behavioral training was completed, the recording chamber was opened under aseptic conditions and a ~4 mm hole was drilled through the dental acrylic and bone to expose the dura mater. All data were collected from this region.

Behavioral training

Before experimental task training began, each monkey’s ad libitum daily water intake was measured. Based on this measurement, the monkeys were placed on a restricted water schedule whereby each monkey received most of its water as a reward for performing the task. If the monkey received less water than its normal daily amount during the experimental session, a post-session supplement was given. Each monkey also received ad libitum water for two consecutive days every weekend during which training and recording sessions were not conducted. Each monkey’s body weight was closely monitored during the time it was on the restricted water schedule.

At the beginning of the task training, the monkey sat in the chair with its head and left arm fixed, and its eye movements were constantly monitored using a standard eye coil technique (Fuchs & Robinson, 1966; Graziano et al, 1997). As shown in Figure 1, three LEDs were placed 57 cm in front of the monkey at eye level, 15 visual degrees apart. First, the monkeys were trained to perform the fixation task without a peripheral visual stimulus. The central LED was set to blink at a frequency of 6 Hz. If the monkey fixated on the central LED within a specified round spatial window (fixation window), the LED stopped blinking and remained lit. If the monkey maintained fixation for the remainder of the trial, the LED was turned off and the monkey received a drop of juice as a reward. A 3–10-second intertrial interval (ITI) commenced at the same time that the reward was given. If the monkey broke fixation at any time during the trial, the LED was turned off, the ITI was restarted, and the monkey was not rewarded. As the training progressed, the size of the spatial fixation window was decreased and the fixation period was increased. At the final stage, the monkeys were able to fixate within a round window of 3 visual degrees in diameter for a 4-second fixation period. After they learned to fixate on the central LED, the monkeys were trained to fixate on the two lateral LEDs using the same procedure.
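The fixation criterion described above can be illustrated with a minimal sketch. It assumes that gaze and LED positions are expressed in visual degrees in a common coordinate system; the function names and the sample format are hypothetical and are not taken from the original experimental software.

```python
import math


def within_fixation_window(gaze_deg, target_deg, window_diameter_deg=3.0):
    """Return True if the gaze point lies inside a round window centered on the target.

    gaze_deg and target_deg are (horizontal, vertical) positions in visual degrees.
    The 3-degree default matches the final training stage described in the text.
    """
    dx = gaze_deg[0] - target_deg[0]
    dy = gaze_deg[1] - target_deg[1]
    return math.hypot(dx, dy) <= window_diameter_deg / 2.0


def fixation_held(gaze_samples, target_deg, window_diameter_deg=3.0):
    """Return True only if every gaze sample in the trial stays inside the window."""
    return all(
        within_fixation_window(sample, target_deg, window_diameter_deg)
        for sample in gaze_samples
    )


# Hypothetical LED layout: three LEDs at eye level, 15 visual degrees apart.
LED_POSITIONS_DEG = {"left": (-15.0, 0.0), "middle": (0.0, 0.0), "right": (15.0, 0.0)}
```

In this sketch, a single sample outside the window marks the trial as a broken fixation, which corresponds to the unrewarded outcome described in the training procedure.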

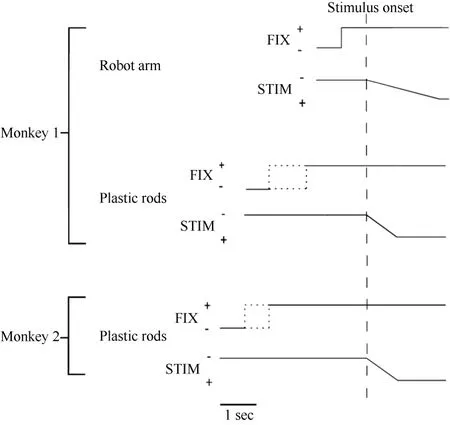

Having learned the fixation task, the monkeys were next trained to perform the same task when a peripheral visual stimulus was presented (Figure 1 and Figure 2).

Recording procedure

The recording sessions started after the monkey was able to perform the task for more than 700 trials in one day. During the daily recording sessions, a hydraulic microdriver was mounted to the top of the recording chamber. A steel guide cannula (22-gauge syringe needle) was slowly lowered through the small hole in the chamber until its tip was close to the surface of the dura mater. Then, a varnish-coated tungsten microelectrode (Frederick Haer, impedance 0.8–1.0 MΩ) was advanced slowly from the guide cannula through the dura mater and into the cortex. After the electrode penetrated the surface of the cortex, as indicated by the appearance of background neuronal signals, the electrode was withdrawn 1.5 mm, and the brain was allowed to recover from the indentation caused by the advance of the electrode for about one hour. The electrode was then slowly moved up and down to isolate single-unit activity. As the experiment progressed, the cannula and a new electrode were inserted into a slightly different location in the small hole.

Tests

When a single neuron was isolated, its activity was constantly monitored with an oscilloscope and a loudspeaker. Each neuron was first tested to determine if it had tactile, visual, and/or auditory responses. The tactile response was tested by manual palpation, gentle pressure, and stroking with a cotton swab. Tactile responses on the face were studied in the dark or when the eyes were covered. Visual responses were studied with hand-held objects, such as cotton swabs, a bunch of plastic grapes, monkey dolls, or a metal rod. The visual stimulus was moved into the visual receptive field from above, below, or the side. The receptive field was measured with the most effective stimulus when the response became stable and clear. By presenting stimuli at different distances from the monkey, we also tested the depth extension of visual RFs.

If a cell showed clear visual responses, it was then tested quantitatively with one of the following two tests:

Figure 1 Two experimental tests were used in the present study. A: robot arm test. In each trial, the monkey fixated on one of the three LEDs (FIX A, B, C), and the visual stimulus was advanced along one of the five trajectories indicated by the arrows. B: plastic rod test. In each trial, the animal fixated on one of the three LEDs (A, B, C). During the fixation, one of the five plastic rods indicated by the filled circles (stimulus positions 3 to 7) descended into view from behind a panel. The circles on the monkey’s head show the recording locations.

Figure 2 Time course of the two experimental tests used in the present study. The change in the vertical position of the stimulus time course indicates stimulus movement. The dotted line indicates that the duration was random. FIX = fixation light; STIM = stimulus.

Robot arm test

Only monkey 1 was tested with this type of stimulus, as shown in Figure 1A. At 0.5 seconds after the onset of fixation, a visual stimulus (a piece of blue Styrofoam, 2 cm in diameter) was advanced towards the animal by a mechanized track at a speed of 8 cm/sec along one of five equally spaced parallel trajectories at the same level, 15 visual degrees apart. The trajectories were 12 cm long and their locations were adjusted to match the visual receptive field being studied. The stimulus stayed at the end of the trajectory for 0.2 seconds before the ITI started. The stimulus was moved to the starting position of the next trajectory during the ITI. The time course of this test is demonstrated in Figure 2. The combination of three eye fixation positions and five stimulus trajectories resulted in fifteen conditions. These fifteen conditions were tested in an interleaved fashion, and each condition typically had ten trials.

However, there were some problems with the stimulus. Firstly, in the robot arm test, the Styrofoam stimulus was always in view and some area 7b neurons quickly habituated to it. Secondly, the five trajectories used in the robot arm test were parallel to each other and moved straight towards the monkey. When the stimulus was advanced towards the monkey along a trajectory located peripheral to the visual receptive field, it covered a much larger visual angle before reaching its end point than it did along trajectories in the middle of the visual receptive field. When a larger visual angle was covered, a larger area on the retina was stimulated, which made it more difficult to directly compare results from different trajectories. To address these problems, we designed a plastic rod test in which the stimulus suddenly came into view and the visual angle of the visual stimulus was kept constant.
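The geometric point about parallel trajectories can be made concrete with a short sketch. It assumes a simplified top-down geometry in which each trajectory is described by its lateral offset from the line of sight and by its starting depth in front of the eye; the 20 cm starting depth used in the usage note is a hypothetical value chosen for illustration, since the text gives only the 12 cm trajectory length.

```python
import math


def eccentricity_deg(lateral_cm, depth_cm):
    """Visual eccentricity (degrees) of a point at a lateral offset and depth from the eye."""
    return math.degrees(math.atan2(lateral_cm, depth_cm))


def swept_angle_deg(lateral_cm, start_depth_cm, travel_cm=12.0):
    """Visual angle swept by a stimulus approaching the eye along a straight, parallel path.

    The stimulus keeps a fixed lateral offset while its depth shrinks by travel_cm.
    """
    end_depth_cm = start_depth_cm - travel_cm
    if end_depth_cm <= 0:
        raise ValueError("the trajectory must end in front of the eye")
    start = eccentricity_deg(lateral_cm, start_depth_cm)
    end = eccentricity_deg(lateral_cm, end_depth_cm)
    return abs(end - start)
```

Under these assumptions, `swept_angle_deg(0.0, 20.0)` is zero for a trajectory aimed straight at the eye, whereas `swept_angle_deg(5.0, 20.0)` is larger than `swept_angle_deg(2.0, 20.0)`, so the more peripheral path sweeps more of the retina for the same 12 cm of travel.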

Plastic rod test

This test was used on both monkeys. An array of five small plastic rods, arranged 15 degrees apart along a semicircle and controlled by five different stepping motors, served as visual stimuli. The center of the semicircle was located at the midline of the monkey’s face, and its radius was 5 cm. There were a total of ten possible locations on the semicircle (labeled 1 to 10) for the five rods so that the locations of the stimuli could be adjusted according to the location of the visual receptive field being tested.

Each trial began when the animal fixated on one of the three LEDs. After an initial fixation period of 1.25–2.0 seconds for monkey 1 and 2.0–2.5 seconds for monkey 2, one of the five rods descended at a speed of 10 cm/sec for 6.0 cm, emerging from behind a head-level panel into the monkey’s view. The rod remained stationary for 1.0 second in front of the animal. The trial was then ended and the animal received 0.2 ml of juice as a reward. A variable ITI began (4.0–7.0 seconds for monkey 1 and 2.0–3.0 seconds for monkey 2), and the rod was withdrawn to behind the panel. Figure 2 shows the time course for the test. The combination of three eye fixation positions and five stimuli (rods) yielded fifteen conditions. These fifteen conditions were run in an interleaved fashion, and each condition typically contained ten trials.
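One way to generate such an interleaved design is sketched below. The text does not state how the interleaving was implemented, so shuffling the fifteen conditions within each repetition block is an assumption, and the function name and condition labels are hypothetical (the rod positions 3 to 7 follow the Figure 1 caption).

```python
import itertools
import random


def build_trial_schedule(
    fixations=("A", "B", "C"),
    stimulus_positions=(3, 4, 5, 6, 7),
    trials_per_condition=10,
    seed=None,
):
    """Return a list of (fixation LED, stimulus position) pairs in interleaved order.

    Each block contains every condition exactly once in shuffled order, so the
    fifteen conditions stay interleaved across the whole session.
    """
    rng = random.Random(seed)
    conditions = list(itertools.product(fixations, stimulus_positions))
    schedule = []
    for _ in range(trials_per_condition):
        block = conditions[:]  # copy so the master list is never reordered
        rng.shuffle(block)
        schedule.extend(block)
    return schedule
```

With the defaults this yields 150 trials, ten for each of the fifteen fixation-by-stimulus conditions. Shuffling within blocks, rather than across the whole session, keeps the number of completed trials per condition balanced if a recording ends early.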

Statistical procedures

Two analysis windows were chosen for each cell. The first, termed the "baseline window" and used to calculate the pre-stimulus baseline activity, consisted of the time period from 0.6 to 0.0 s before the onset of the stimulus. The other window, termed the "response window", was used to examine the neuron’s response to visual stimuli and consisted of the time period from the onset of the neuron’s main visual response (i.e., three standard deviations above the baseline for three consecutive bins, with a 50-ms bin length) to 1.5 s after stimulus onset because all recorded neurons continued their responses to the end of the trial. We chose not to use a fixed response window as in previous studies because the size and location of the receptive fields differed among the neurons, and as a result, the exact time when the stimulus moved into the receptive field varied from neuron to neuron. Because cells did not respond to the stimulus at the same time across different locations, we chose the response window from the earliest onset (which was usually also the best response) location and applied this window to all fifteen experimental conditions. The response window was shortened and lengthened for several tested neurons; very similar results were obtained, which demonstrated the robustness of this method of analysis.
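The onset criterion for the response window can be sketched as follows. This is an illustration under stated assumptions rather than the original analysis code: the baseline mean and standard deviation are assumed to be computed across the 50-ms bins of the baseline window, and the function name and inputs are hypothetical.

```python
import statistics


def response_onset_bin(baseline_rates, post_stimulus_rates, n_sd=3.0, n_consecutive=3):
    """Return the index of the first post-stimulus bin that starts a run of
    n_consecutive bins above baseline mean + n_sd standard deviations.

    baseline_rates: firing rates (spikes/s) in the 50-ms bins of the baseline window.
    post_stimulus_rates: firing rates in consecutive 50-ms bins after stimulus onset.
    Returns None if no such run exists.
    """
    threshold = statistics.fmean(baseline_rates) + n_sd * statistics.stdev(baseline_rates)
    run_length = 0
    for index, rate in enumerate(post_stimulus_rates):
        run_length = run_length + 1 if rate > threshold else 0
        if run_length == n_consecutive:
            return index - n_consecutive + 1
    return None
```

Multiplying the returned bin index by the 0.05-s bin length gives the onset time relative to stimulus onset; the response window would then run from that time to 1.5 s after stimulus onset.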

Qualitative analysis

All neurons were classified qualitatively. Firstly, neurons without a clear peak (as judged by the existence of a response location in the curve that was significantly different from the response for at least one location on both sides of the peak, according to a t-test) in three tuning curves were not included in the data set for the spatial shift analysis. Secondly, before calculating the shift index, we compared the shapes of the tuning curves by moving two of the tuning curves until the peaks of three tuning curves overlapped, because shift index values can be affected by shapes of the tuning curves. We classified neurons into the following three types based on this comparison: (1) Neurons without obvious shape changes (as judged by the overlap of the standard deviations at each stimulus location) and receptive fields that did not shift with the eyes were classified as nonretinocentric neurons. (2) Neurons without obvious shape changes and receptive fields that shifted with the eyes were defined as retinocentric neurons. (3) Neurons with a partial spatial shift, which included two groups of neurons: the first group had no obvious shape changes but had partially shifted receptive fields; the second group had obvious shape changes and was also classified as partial spatial shift neurons.

Quantitative analysis

Based on the qualitative analysis, neurons with clear peaked responses were analyzed quantitatively. A shift index (SI) was calculated to measure how much the visual receptive field shifted when eye position changed as the monkey fixated on different LEDs (Graziano, 1999; Schlack et al, 2005).

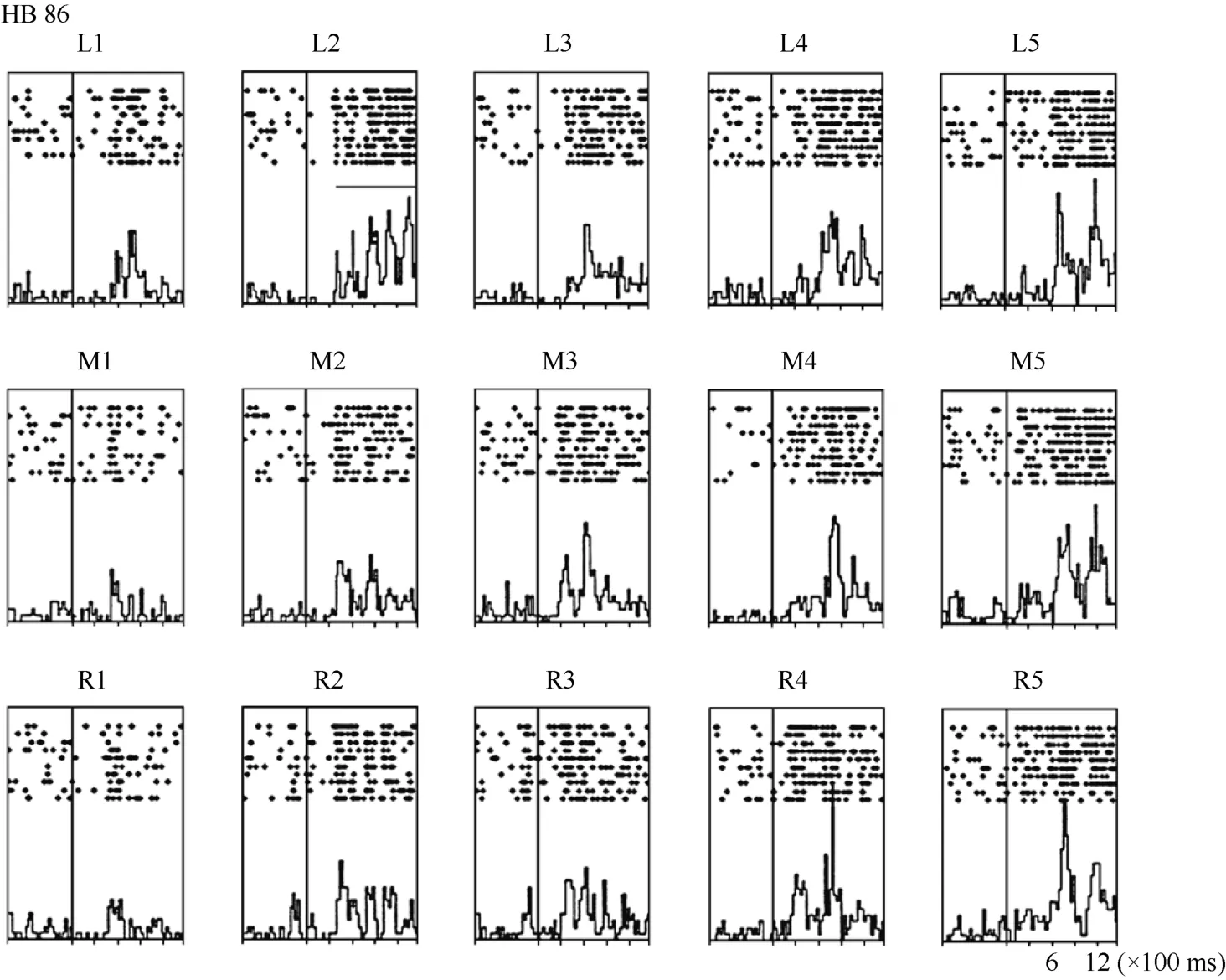

First, a center of mass (CM) was calculated for each of the tuning curves corresponding to the right (CMR) and left (CML) fixation LEDs. When the animal fixated on the right LED, the responses (number of spikes per second) from each of the five stimulus locations were numbered from the monkey’s left to right as R1 to R5. The formula used to calculate the CMR of the right fixation LED tuning curve is

$$\mathrm{CM_R} = \frac{R_1 + 2R_2 + 3R_3 + 4R_4 + 5R_5}{R_1 + R_2 + R_3 + R_4 + R_5}.$$

The CML of the left LED could be calculated in the same way. From the CMs, the shift index (SI) was calculated as

$$\mathrm{SI} = \mathrm{CM_R} - \mathrm{CM_L}.$$

A positive SI indicated that the visual receptive field shifted in the same direction as the change in eye position in the orbit. A negative SI indicated that the visual receptive field was displaced in the opposite direction from the change in eye position. An SI value close to zero indicated a stationary visual receptive field.
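The center-of-mass and shift index calculations can be expressed as a short sketch. The function names are hypothetical, and the firing rates in the usage note are made-up values for illustration, not recorded data.

```python
def center_of_mass(rates):
    """Center of mass of a tuning curve.

    rates: firing rates (spikes/s) at the stimulus locations, ordered from the
    monkey's left to right and weighted by position numbers 1, 2, 3, ...
    """
    total = sum(rates)
    if total == 0:
        raise ValueError("center of mass is undefined when all responses are zero")
    weighted = sum(position * rate for position, rate in enumerate(rates, start=1))
    return weighted / total


def shift_index(rates_right_fixation, rates_left_fixation):
    """Shift index: CM for right-LED fixation minus CM for left-LED fixation."""
    return center_of_mass(rates_right_fixation) - center_of_mass(rates_left_fixation)
```

For example, a hypothetical tuning curve that peaks at position 3 during right fixation (`[0, 0, 10, 0, 0]`) and at position 2 during left fixation (`[0, 10, 0, 0, 0]`) gives a positive SI, meaning that the receptive field moved in the same direction as the eyes, while two identical curves give an SI of zero.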

Histology

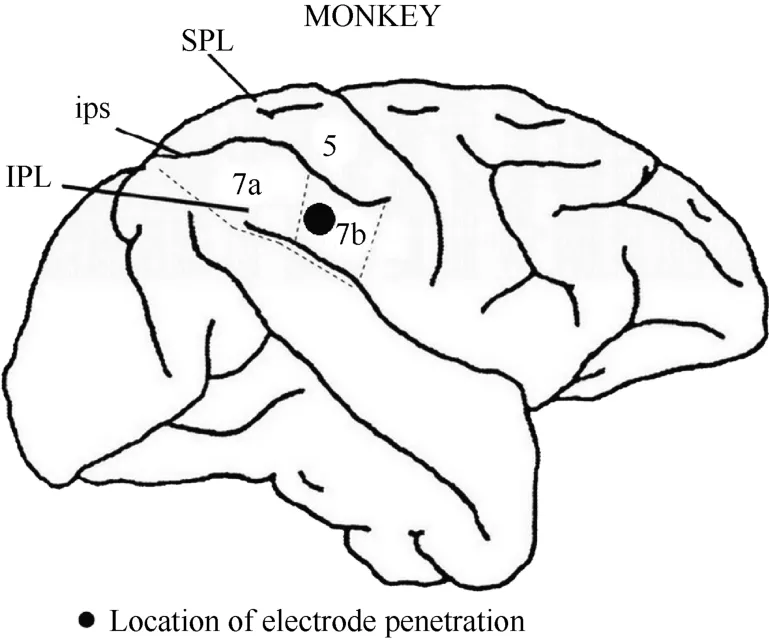

After the series of experiments was completed, monkey 1 was injected with an overdose of pentobarbital sodium (100 mg/kg) and perfused with saline and 4% paraformaldehyde. The head was removed from the body and placed in a stereotactic apparatus. The skull and dura mater were removed to expose the brain. The intraparietal, superior temporal and lateral sulci were mapped stereotactically. Figure 3 shows the entry location of the electrode in relation to the sulci for monkey 1. On this basis, we confirmed that the recording sites were located in the caudal portion of area 7b.

Figure 3 Locations of area 7b and electrode penetration. Black dot: the recording area was ~4 mm in diameter and ~2 mm in depth. IPL, the inferior parietal lobe; SPL, the superior parietal lobe; ips, the intraparietal sulcus.

RESULTS

Response categories

In total, 291 neurons in caudal area 7b were examined in the right hemispheres of two awake monkeys. In these cells, neuronal responses were classified as somatosensory, visual, or bimodal (somatosensory + visual). We also tested 82 cells in this area using auditory stimuli and found that only one (1%) responded. Table 1 shows the proportions of the different response types.

Table 1 Categories of neurons based on their response type

Somatosensory cells

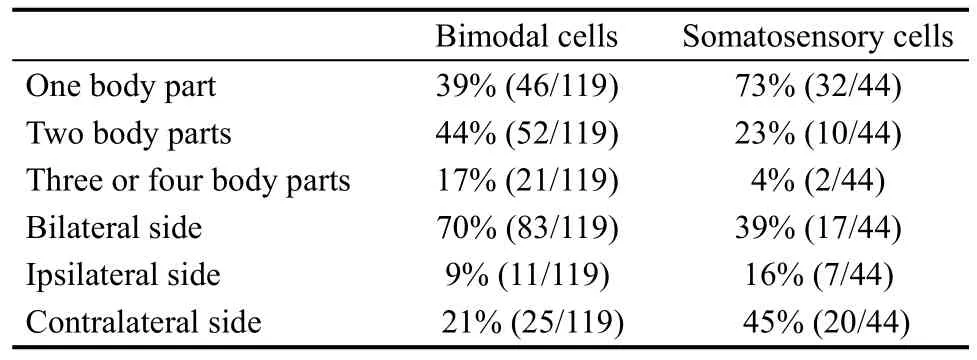

To classify the tactile receptive fields, we arbitrarily divided the monkey body into four parts: head, arms (including the hands), trunk, and legs (including the feet). The tactile receptive fields were classified into three types according to the number of body parts encompassed by the receptive fields. The tactile receptive fields were also classified into three types: ipsilateral, contralateral, and bilateral according to the recording hemisphere. The distribution of the receptive fields of the somatosensory cells is shown in Table 2.

Table 2 The distribution of tactile receptive fields

Visual cells

In total, 35 of the 42 purely visual cells were recorded long enough to test the location of the visual receptive fields, whereas the remaining seven cells were only studied long enough to determine that they had visual responses. The visual receptive fields were divided in two ways. According to recording hemisphere, the visual receptive fields could be divided into three categories: ipsilateral, contralateral, and bilateral to the recording hemisphere. By presenting stimuli at different distances from the monkey, neurons were classified into two types: distance > 1 m and distance < 1 m. Only five visual neurons were tested with stimuli at different distances. Table 3 shows the results.

Table 3 The distribution of visual receptive fields

Bimodal cells

We assessed the locations of the tactile and visual receptive fields for 119 of the 122 bimodal cells. The remaining three cells were only recorded long enough to demonstrate the existence of visual and tactile responses. The tactile and visual receptive fields of the bimodal cells were classified into different types in the same way as the somatosensory and visual cells, and the results are shown in Tables 2 and 3, respectively.

One interesting finding is that the visual receptive field in bimodal neurons always surrounds the tactile receptive field. Overall, 76% (90/119) of the bimodal cells had tactile receptive fields that spatially matched their visual receptive fields.

We also tested the visual response to perceptual depth in 45 out of the 119 bimodal cells. More than half (64%) of these cells responded clearly to the stimuli presented more than 1 m away from the monkey’s face, and the remaining 36% of the visual receptive fields were confined to a depth of 1 m or less, with a mean inner-limit distance of 0.4 m.

In summary, the qualitative tests showed that there were three major types of responsive neurons in caudal area 7b: visual neurons (14%), somatosensory neurons (15%), and bimodal neurons (42%). The receptive fields of these three categories of neurons were usually large: 52% (85/163) of all tactile receptive fields (including the somatosensory cells and bimodal cells) encompassed two or more parts of the body surface, 61% (100/163) of all tactile receptive fields were bilateral, and 66% (101/154) of the visual receptive fields (including the visual cells and bimodal cells) were bilateral. Most visual receptive fields extended more than 1 m away from the body part. Most of the bimodal cells had spatially matched tactile and visual receptive fields.

Reference frames for encoding visual stimuli

If a neuron showed a clear visual response, it was further studied to determine whether the receptive field moved with the eye. As described in the methods section, two types of stimuli were used and three different types of neurons were found.

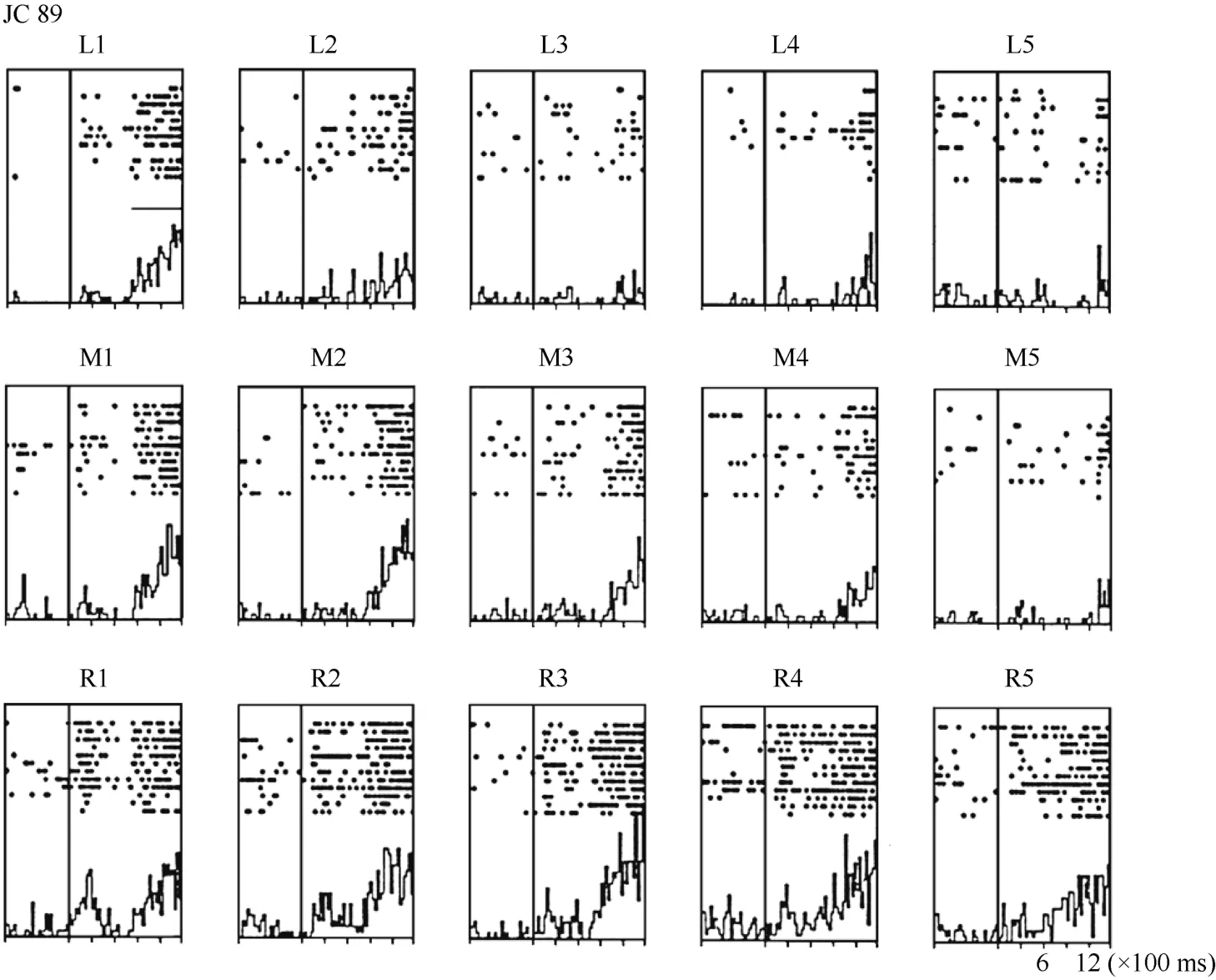

For some neurons, such as neuron JC89 (Figure 4 and Figure 5) tested with the plastic rod stimulus, the visual receptive fields moved with the eye. As seen in the figures, when the monkey shifted its fixation point from the right to the middle LED and then to the left LED, the position at which neuron JC89 had the best response shifted from position 3 to position 2 and then to position 1. These results suggest that the visual receptive field moves with the eyes.

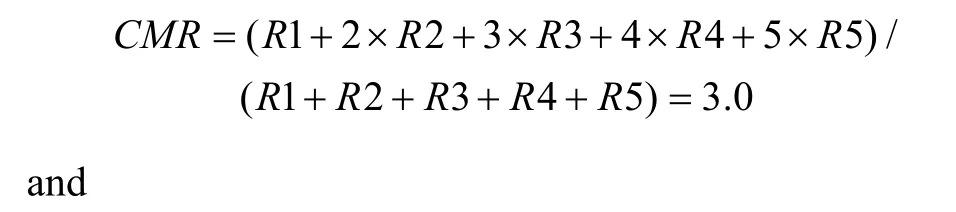

Some neurons had visual receptive fields that did not shift with eye movement. Neuron HB16 (Figure 6 and Figure 7), which was tested with the robot arm stimulus, belonged to this type of neuron. Figure 7 shows that regardless of the LED on which the monkey was fixating, the position with the best response did not change for this neuron; it always showed the strongest response to a stimulus presented at position 4, and the three tuning curves were similar and overlapping. These results demonstrate that the visual receptive fields do not move with the eyes.

Figure 4 Histograms of activity from neuron JC89 in area 7b. The upper row of the histograms shows the visual responses to the stimuli presented at the five stimulus positions when the monkey was fixating on the left LED (marked as L). The middle row of the histograms shows the visual responses when the monkey was fixating on the center LED (marked as M). The lower row of the histograms shows the visual responses when the monkey was fixating on the right LED (marked as R). For each histogram, the x-axis shows the time in ms, and the y-axis shows the neuronal responses in spikes/second. The vertical line inside each histogram indicates the time at which the visual stimulus began to move into view. The horizontal line inside histogram L1 indicates the temporal window used to calculate the neuronal response to the stimulus.

Figure 5 Three tuning curves for the activity of neuron JC89. The x-axis shows the stimulus position, and the y-axis shows the neuronal responses in spikes/second. The dashed line shows the average pre-stimulus activity. During right fixation (circles), the cell responded most strongly to the stimulus presented at position 3. During middle fixation (filled diamonds), the cell responded most strongly to the stimulus presented at position 2. During left fixation (stars), the cell responded most strongly to the stimulus presented at position 1. This result indicates that the receptive field of this cell shifted with changes in eye position.

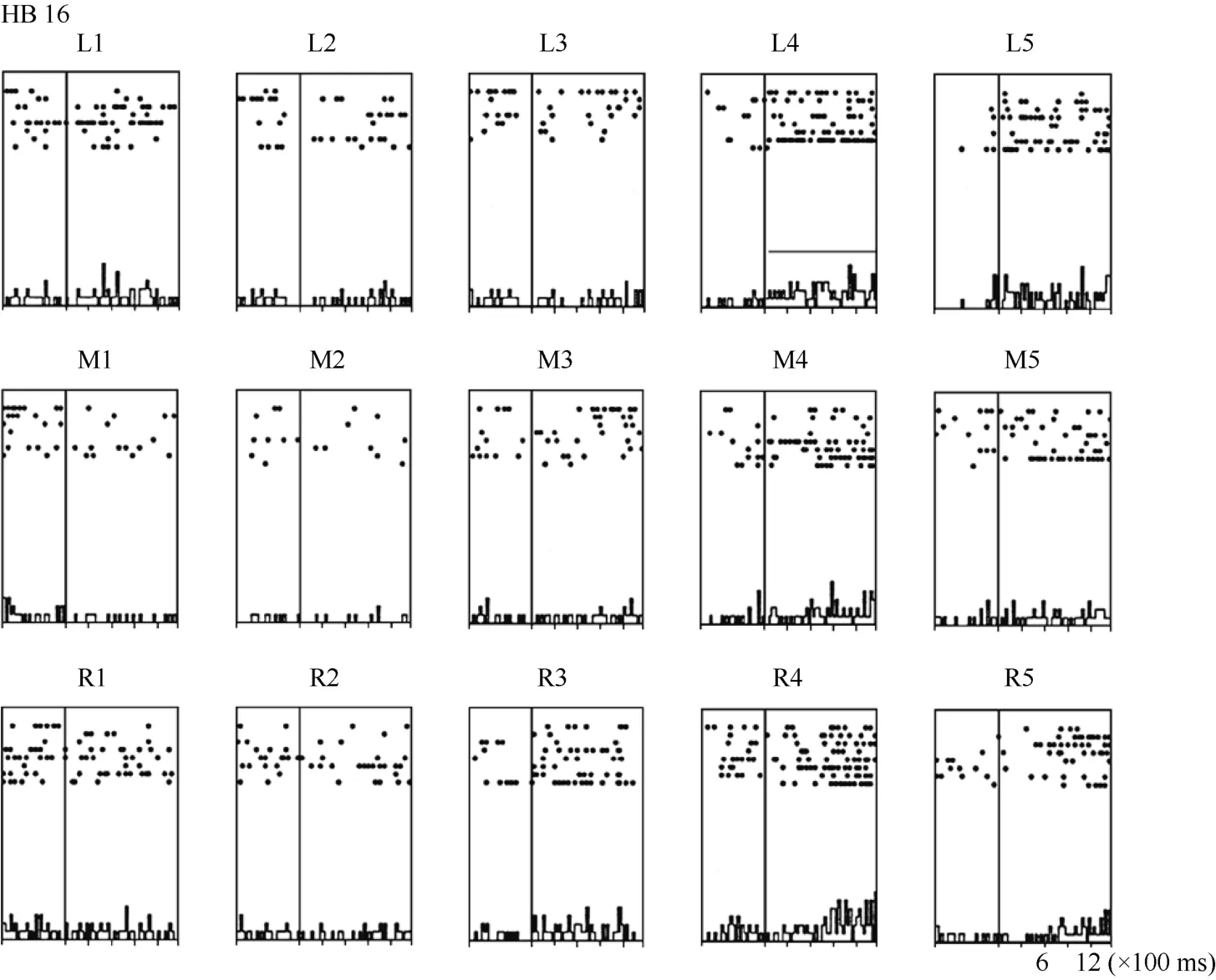

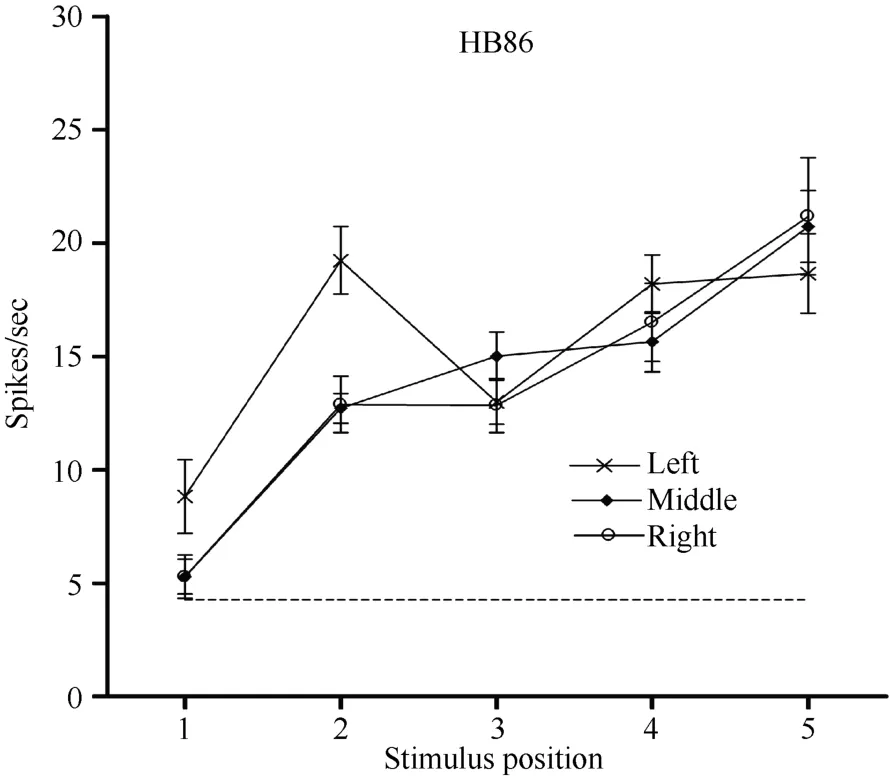

Neuron HB86 (Figure 8 and Figure 9), which was tested with the plastic rod stimulus, is an example of a neuron whose properties were intermediate between those of neurons with visual receptive fields that did not move with the eyes and those of neurons with visual receptive fields that did. That is, these neurons had visual receptive fields that moved slightly. As seen from the three tuning curves of neuron HB86 in Figure 9, when the monkey’s eyes shifted from the right LED to the middle LED, the two tuning curves overlapped, i.e., the visual receptive field did not shift with the eyes. In contrast, when the eye moved from the middle LED to the left LED, the visual receptive field partially shifted to the left as indicated by increased responses to stimuli at positions 1 and 2.

To quantify the results, a shift index (SI) was calculated for each neuron to measure how much the visual receptive field shifted when the eye position changed because the monkey was fixating on different LEDs. An example SI calculation for neuron JC89 is as follows: $\mathrm{SI}(\mathrm{JC89}) = \mathrm{CM_R} - \mathrm{CM_L} = 3.0 - 2.2 = 0.8$.

Figure 6 Histograms of the activity of neuron HB16. Axes are the same as in Figure 4. The horizontal line inside histogram L4 indicates the temporal window used to calculate the neuronal responses to the stimulus.

Figure 7 Three tuning curves for the activity of neuron HB16. Axes are the same as in Figure 5. During right LED fixation (circles), middle LED fixation (filled diamonds), and left LED fixation (stars) the cell responded most strongly to the stimulus presented at position 4. This result indicates that the receptive field of neuron HB16 did not shift with changes in eye position.

Using the same formula, the shift indices for the other two example neurons are as follows: neuron HB16 = 0.1 and neuron HB86 = 0.3.

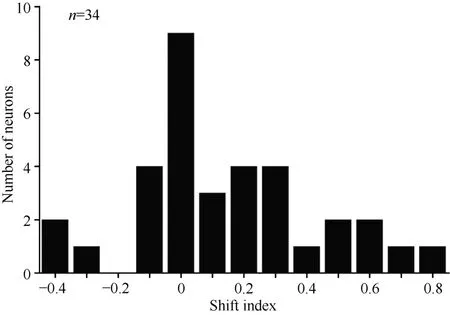

Overall, 34 neurons showing clear responses to the automatically presented stimuli demonstrated peak responses. We calculated the shift index for each of the 34 neurons. Figure 10 shows that the shift indices ranged from –0.4 to 0.8. The distribution of the shift index was significantly greater than zero (χ² = 9.23, P < 0.01). On average, the visual receptive fields moved when the eyes moved. Thus, some neurons had visual receptive fields that moved with eye movement, some neurons had partially moving visual receptive fields, and some had receptive fields that did not move with the eyes.

Effect of eye position on the neuronal firing rate

In addition to the location of the visual receptive field, the visual response magnitude of caudal area 7b neurons was sometimes significantly influenced by the position of the eyes in the orbit, which is termed the modulation effect. For example, regardless of the LED on which the monkey fixated, HB169 responded with almost equal strength at each of the five positions, although its response was slightly stronger when the stimulus was at position 5 (Figure 11). However, the response magnitude of the cell decreased monotonically as the eye moved from the right LED to the middle LED and from the middle LED to the left LED. A 5 × 3 within-subjects two-way ANOVA (5 stimulus positions × 3 eye positions) showed that the modulation of the cell response was significant (P < 0.001). Neuron JC89 showed the same type of response modulation (P < 0.05).

Figure 8 Histograms of the activity of neuron HB86. Axes are the same as in Figure 4. The horizontal line inside histogram L2 indicates the temporal window used to calculate the neuronal response to the stimulus.

Figure 9 Three tuning curves for the activity of neuron HB86. Axes are the same as in Figure 5. During right LED fixation (circles) and middle LED fixation (filled diamonds), the two corresponding tuning curves overlapped. The cell showed the weakest response to the stimulus presented at position 1 and the strongest response to the stimulus at position 5. In contrast, when the eyes moved from the middle LED to the left LED (stars), the visual receptive field partially shifted to the left as indicated by the increased responses to stimuli at positions 1 and 2. Overall, the visual receptive field of neuron HB86 partially shifted with changes in eye position.

Figure 10 Distribution of shift indices for 34 neurons. The distribution of shift indices was significantly above zero (P < 0.01).

In contrast, neuron HB16 responses were not modulated by eye position (P > 0.5). This cell responded best to the stimulus presented at position 4. The magnitude of the visual response did not change significantly when the eyes were at different positions in the orbit.

Figure 11 Three tuning curves for the activity of neuron HB169. Axes are the same as in Figure 5. Regardless of the LED on which the monkey fixated, neuron HB169 responded to the stimulus with almost equal strength when presented at each of the five positions, although the response was slightly stronger at position 5. However, the response magnitude decreased monotonically as the eyes moved from the right LED (circles) to the middle LED (filled diamonds) and from the middle LED to the left LED (stars). This result indicates that the visual response magnitude of neuron HB169 was significantly modulated by changes in eye position (P < 0.001).

The ANOVA for the 34 neurons showed that the visual response magnitudes of 12 cells (35%) were significantly modulated by eye position, and the response magnitudes of the remaining 22 cells (65%) were not significantly modulated by eye position.

DISCUSSION

Visual and tactile responses in caudal area 7b

In this study, we qualitatively tested the sensory properties of neurons in caudal area 7b. Three major types of responsive neurons were found: visual neurons (14%), somatosensory neurons (15%), and bimodal neurons (42%). The bimodal, visual-somatosensory cells usually responded to visual stimuli positioned close to the tactile receptive field. Some cells responded only to stimuli within centimeters of the body whereas others responded to stimuli presented more than 1 m away.

Other studies of the general properties of neurons in area 7b have also demonstrated that it contains visual cells, somatosensory cells, and bimodal cells (Dong et al, 1994; Leinonen et al, 1979). The proportions of cells across these three categories varied from study to study, with the percentage of bimodal neurons varying from 6% to 33%. The present study showed 42% bimodal neurons.

One possible reason for these quantitative differences is that the stimuli and the way in which they are presented may vary across labs. For example, we found that the visual response to a stimulus delivered from behind the animal and then moved into its view was usually stronger than the visual response observed when the same stimulus was delivered from in front of the animal. It is possible that the stimulus coming from behind was unexpected and therefore drew more attention. Other labs using stimuli presented from the front may therefore report a lower percentage of visual responses. Another possible reason for the quantitative differences is that the recording sites (i.e., the sampled populations) in other studies may differ from the caudal part of area 7b recorded in the present study. Furthermore, the monkeys used in our study were awake, whereas other studies were conducted on anesthetized monkeys. As described previously, the wakefulness of animals can affect the response properties of area 7b neurons (Robinson & Burton, 1980). When the monkey is asleep, the receptive fields of some somatosensory neurons become smaller, the borders become more difficult to define, and the somatic activations become less clear.

Transformation of reference frames

It has been suggested that body-part-centered receptive fields provide a general solution to the central problem of sensory-motor integration (Graziano et al, 1994) and that this type of reference frame can encode the distance and direction from a body part to a nearby visual stimulus (Graziano et al, 1997). However, proceeding from a retinocentric reference frame to a body-part-centered reference frame requires a complicated transformation system that involves several brain areas.

Only one study has reported that, in some cases, the visual responses of neurons in area 7b do not depend on the direction of the gaze (Leinonen et al, 1979). Our present research is the first quantitative study to explore whether the visual receptive fields of neurons in caudal area 7b move with the eyes. We found that neurons in caudal area 7b encoded space in a hybrid reference frame, and some neurons encoded space in a retinocentric reference frame. We did not further differentiate the reference frames employed by the non-retinocentric neurons, e.g., head-centered, body-centered, or world-centered, but showed that the caudal part of area 7b was one component of the reference frame transformation system.
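The question of whether a visual receptive field moves with the eyes can be illustrated with a toy calculation. The sketch below is not the procedure used in this study. It assumes hypothetical Gaussian tuning curves sampled at five stimulus positions, assumes that the three fixation points are spaced one stimulus position apart, and uses a simple least-squares slope of peak position against eye position as an index. A slope near 1 corresponds to a receptive field that moves with the eyes (retinocentric), a slope near 0 to a receptive field that stays fixed in space (non-retinocentric), and intermediate values to a partial shift.

```python
import math


def gaussian_tuning(positions, center, width=1.0, peak_rate=40.0):
    """Hypothetical tuning curve: firing rate at each stimulus position."""
    return [peak_rate * math.exp(-0.5 * ((p - center) / width) ** 2) for p in positions]


def peak_position(positions, rates):
    """Stimulus position that evokes the largest response (coarse estimate)."""
    best = max(range(len(rates)), key=lambda i: rates[i])
    return positions[best]


def shift_slope(eye_positions, peak_positions):
    """Least-squares slope of receptive-field peak against eye position."""
    n = len(eye_positions)
    mean_eye = sum(eye_positions) / n
    mean_peak = sum(peak_positions) / n
    cov = sum((e - mean_eye) * (p - mean_peak)
              for e, p in zip(eye_positions, peak_positions))
    var = sum((e - mean_eye) ** 2 for e in eye_positions)
    return cov / var


positions = [1, 2, 3, 4, 5]   # five stimulus positions
eye_positions = [-1, 0, 1]    # left, middle, right fixation (assumed spacing)

# Retinocentric cell: the tuning curve is displaced together with the eyes.
retino_peaks = [peak_position(positions, gaussian_tuning(positions, 3 + e))
                for e in eye_positions]
# Non-retinocentric cell: the tuning curve stays at the same position in space.
fixed_peaks = [peak_position(positions, gaussian_tuning(positions, 3))
               for _ in eye_positions]

retino_slope = shift_slope(eye_positions, retino_peaks)
fixed_slope = shift_slope(eye_positions, fixed_peaks)
```

Taking the peak from only five sampled positions is a coarse estimate; with real data, a fitted or interpolated tuning curve would give a finer measure of the shift.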

Recent studies on the inferior parietal lobe (IPL) challenge the widely used bipartite subdivision of the IPL convexity into caudal and rostral areas (7a and 7b, respectively). These studies provide strong support for a subdivision of the IPL convexity into four distinct areas (Opt, PG, PFG, and PF) and indicate that these four areas are not only cytoarchitectonically distinct but also distinct in connectivity (Gregoriou et al, 2006; Rozzi et al, 2006). In our study, the recording site was located in the caudal portion of area 7b, corresponding to area PFG. The present results provide evidence that caudal area 7b may be one component of the reference frame transformation system, which is consistent with the proposed function based on the connectivity pattern of area PFG (Borra et al, 2008; Fogassi & Luppino, 2005; Rozzi et al, 2006).

Eye-position-independent visual receptive fields in other brain areas

The anterior intraparietal (AIP) area is located rostrally in the lateral bank of the intraparietal sulcus and is known to play a crucial role in visuomotor transformations for grasping. Anatomically, the AIP is connected to the IPL and the rostral ventral premotor area F5 (Borra et al, 2008; Luppino et al, 1999). Reversible lesion experiments have shown that AIP inactivation produces grasping impairment (Gallese et al, 1994). Visual, motor, and visuomotor neurons have been recorded during the execution of grasping movements (Murata et al, 1996; Murata et al, 2000; Sakata et al, 1995; Taira et al, 1990).

Another parietal area suggested to have eye-position-independent visual receptive fields is the parieto-occipital visual area (V6A). Studies have shown that although most of the visual receptive fields are eye-centered, there is a small portion of neurons in this area with visual receptive fields that remain static when the eyes move and another small group with visual receptive fields that partially move with the eyes (Fattori et al, 1992; Galletti et al, 1993; Galletti et al, 1995).

Area 7b, VIP, and PMv are monosynaptically interconnected and all project to the putamen (Cavada & Goldman-Rakic, 1989a; Cavada & Goldman-Rakic, 1989b; Cavada & Goldman-Rakic, 1991; Matelli et al, 1986). Studies of the putamen of anesthetized and awake macaque monkeys have shown that about 30% of the cells with tactile responses also respond to visual stimuli. For these bimodal neurons, the visual receptive fields are anchored to the tactile receptive fields, moving as the tactile receptive fields move (Graziano & Gross, 1993).

Comparing these brain areas quantitatively by calculating the fraction of each cell type in each area might provide more information about how the system works. More experiments should be conducted in which all of these areas are tested using identical procedures.

In summary, we tested the response properties and the spatial encoding coordinates of neurons in caudal area 7b. Three major kinds of responsive neurons were found in this area: visual neurons, somatosensory neurons, and bimodal neurons. The receptive fields were usually large, and visual receptive fields of the bimodal neurons were always located near tactile receptive fields. Neurons in this area encoded space in a hybrid reference frame, which may be a characteristic of the reference frame transformation system. In addition, the responses of some neurons were modulated by eye position.

Andersen RA, Essick GK, Siegel RM. 1985. Encoding of spatial location by posterior parietal neurons. Science, 230(4724): 456-458.

Andersen RA, Asanuma C, Essick G, Siegel RM. 1990a. Corticocortical connections of anatomically and physiologically defined subdivisions within the inferior parietal lobule. J Comp Neurol, 296(1): 65-113.

Andersen RA, Bracewell RM, Barash S, Gnadt JW, Fogassi L. 1990b. Eye position effects on visual, memory, and saccade-related activity in areas LIP and 7a of macaque. J Neurosci, 10(4): 1176-1196.

Andersen RA, Snyder LH, Bradley DC, Xing J. 1997. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci, 20(1): 303-330.

Borra E, Belmalih A, Calzavara R, Gerbella M, Murata A, Rozzi S, Luppino G. 2008. Cortical connections of the macaque anterior intraparietal (AIP) area. Cereb Cortex, 18(5): 1094-1111.

Cavada C, Goldman-Rakic PS. 1989a. Posterior parietal cortex in rhesus monkey: II. Evidence for segregated corticocortical networks linking sensory and limbic areas with the frontal lobe. J Comp Neurol, 287(4): 422-425.

Cavada C, Goldman-Rakic PS. 1989b. Posterior parietal cortex in rhesus monkey: I. Parcellation of areas based on distinctive limbic and sensory corticocortical connections. J Comp Neurol, 287(4): 393-421.

Cavada C, Goldman-Rakic PS. 1991. Topographic segregation of corticostriatal projections from posterior parietal subdivisions in the macaque monkey. Neuroscience, 42(3): 683-696.

Clower DM, West RA, Lynch JC, Strick PL. 2001. The inferior parietal lobule is the target of output from the superior colliculus, hippocampus, and cerebellum. J Neurosci, 21(16): 6283-6291.

Clower DM, Dum RP, Strick PL. 2005. Basal ganglia and cerebellar inputs to 'AIP'. Cereb Cortex, 15(7): 913-920.

Colby CL, Duhamel JR, Goldberg ME. 1993. Ventral intraparietal area of the macaque: anatomic location and visual response properties. J Neurophysiol, 69(3): 902-914.

Dong WK, Chudler EH, Sugiyama K, Roberts VJ, Hayashi T. 1994. Somatosensory, multisensory, and task-related neurons in cortical area 7b (PF) of unanesthetized monkeys. J Neurophysiol, 72(2): 542-564.

Duhamel JR, Bremmer F, BenHamed S, Graf W. 1997. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature, 389(6653): 845-848.

Duhamel JR, Colby CL, Goldberg ME. 1998. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol, 79(1): 126-136.

Fattori P, Galletti C, Battaglini PP. 1992. Parietal neurons encoding visual space in a head-frame of reference. Boll Soc Ital Biol Sper, 68(11): 663-670.

Fogassi L, Gallese V, di Pellegrino G, Fadiga L, Gentilucci M, Luppino G, Matelli M, Pedotti A, Rizzolatti G. 1992. Space coding by premotor cortex. Exp Brain Res, 89(3): 686-690.

Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G. 1996. Coding of peripersonal space in inferior premotor cortex (area F4). J Neurophysiol, 76(1): 141-157.

Fogassi L, Luppino G. 2005. Motor functions of the parietal lobe. Curr Opin Neurobiol, 15(6): 626-631.

Fuchs AF, Robinson DA. 1966. A method for measuring horizontal and vertical eye movement chronically in the monkey. J Appl Physiol, 21(3): 1068-1070.

Gallese V, Murata A, Kaseda M, Niki N, Sakata H. 1994. Deficit of hand preshaping after muscimol injection in monkey parietal cortex. Neuroreport, 5(12): 1525-1529.

Galletti C, Battaglini PP, Fattori P. 1993. Parietal neurons encoding spatial locations in craniotopic coordinates. Exp Brain Res, 96(2): 221-229.

Galletti C, Battaglini PP, Fattori P. 1995. Eye position influence on the parieto-occipital area PO (V6) of the macaque monkey. Eur J Neurosci, 7(12): 2486-2501.

Gentilucci M, Scandolara C, Pigarev IN, Rizzolatti G. 1983. Visual responses in the postarcuate cortex (area 6) of the monkey that are independent of eye position. Exp Brain Res, 50(2-3): 464-468.

Graziano MS, Gross CG. 1993. A bimodal map of space: somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Exp Brain Res, 97(1): 96-109.

Graziano MS, Yap GS, Gross CG. 1994. Coding of visual space by premotor neurons. Science, 266(5187): 1054-1057.

Graziano MS, Hu XT, Gross CG. 1997. Visuospatial properties of ventral premotor cortex. J Neurophysiol, 77(5): 2268-2292.

Graziano MS. 1999. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proc Natl Acad Sci U S A, 96(18): 10418-10421.

Gregoriou GG, Borra E, Matelli M, Luppino G. 2006. Architectonic organization of the inferior parietal convexity of the macaque monkey. J Comp Neurol, 496(3): 422-451.

Leinonen L, Hyvärinen J, Nyman G, Linnankoski I. 1979. I. Functional properties of neurons in lateral part of associative area 7 in awake monkeys. Exp Brain Res, 34(2): 299-320.

Luppino G, Murata A, Govoni P, Matelli M. 1999. Largely segregated parietofrontal connections linking rostral intraparietal cortex (areas AIP and VIP) and the ventral premotor cortex (areas F5 and F4). Exp Brain Res, 128(1-2): 181-187.

Matelli M, Gallese V, Rizzolatti G. 1984. Neurological deficit following a lesion in the parietal area 7b in the monkey. Boll Soc Ital Biol Sper, 60(4): 839-844.

Matelli M, Camarda R, Glickstein M, Rizzolatti G. 1986. Afferent and efferent projections of the inferior area 6 in the macaque monkey. J Comp Neurol, 251(3): 281-298.

Murata A, Gallese V, Kaseda M, Sakata H. 1996. Parietal neurons related to memory-guided hand manipulation. J Neurophysiol, 75(5): 2180-2186.

Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. 2000. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol, 83(5): 2580-2601.

Robinson CJ, Burton H. 1980. Organization of somatosensory receptive fields in cortical areas 7b, retroinsula, postauditory and granular insula of M. fascicularis. J Comp Neurol, 192(1): 69-92.

Rozzi S, Calzavara R, Belmalih A, Borra E, Gregoriou GG, Matelli M, Luppino G. 2006. Cortical connections of the inferior parietal cortical convexity of the macaque monkey. Cereb Cortex, 16(10): 1389-1417.

Sakata H, Taira M, Murata A, Mine S. 1995. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cereb Cortex, 5(5): 429-438.

Schlack A, Sterbing-D'Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. 2005. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci, 25(18): 4616-4625.

Taira M, Mine S, Georgopoulos AP, Murata A, Sakata H. 1990. Parietal cortex neurons of the monkey related to the visual guidance of hand movement. Exp Brain Res, 83: 29-36.