A Video Captioning Method by Semantic Topic-Guided Generation

2024-03-12

Ou Ye, Xinli Wei, Zhenhua Yu, Yan Fu and Ying Yang

College of Computer Science and Technology, Xi’an University of Science and Technology, Xi’an, 710054, China

ABSTRACT In video captioning methods based on an encoder-decoder architecture, an encoder extracts a limited set of visual features and a decoder generates a natural sentence describing the video content. However, such methods depend on a single video input source and few visual labels, and they suffer from poor semantic alignment between the video contents and the generated sentences, which hinders accurate comprehension and description of the video contents. To address this issue, this paper proposes a video captioning method based on semantic topic-guided generation. First, a 3D convolutional neural network is used to extract the spatiotemporal features of videos during encoding. Then, the semantic topics of the video data are extracted using visual labels retrieved from similar video data. In the decoding phase, a decoder is constructed by combining a novel Enhance-TopK sampling algorithm with a Generative Pre-trained Transformer-2 deep neural network; by jointly decoding a baseline caption and the semantic topics of the video content, it reduces the influence of “deviation” in the semantic mapping between videos and texts. During this process, the designed Enhance-TopK sampling algorithm alleviates the long-tail problem by dynamically adjusting the probability distribution of the predicted words. Finally, experiments are conducted on two public datasets, Microsoft Research Video Description and Microsoft Research-Video to Text. The experimental results demonstrate that the proposed method outperforms several state-of-the-art approaches. Specifically, compared with existing video captioning methods, the proposed method improves Bilingual Evaluation Understudy, Metric for Evaluation of Translation with Explicit Ordering, Recall Oriented Understudy for Gisting Evaluation-longest common subsequence, and Consensus-based Image Description Evaluation by 1.2%, 0.1%, 0.3%, and 2.4% on the Microsoft Research Video Description dataset, and by 0.1%, 1.0%, 0.1%, and 2.8% on the Microsoft Research-Video to Text dataset, respectively. As a result, the proposed method generates video captions that are more closely aligned with human natural language expression habits.

KEYWORDS Video captioning; encoder-decoder; semantic topic; jointly decoding; Enhance-TopK sampling

1 Introduction

At present, videos have become an essential carrier of information dissemination and an important source for daily life, learning, and knowledge acquisition, with applications such as object detection, video surveillance, and film entertainment [1–4]. Video captioning has become a hotspot in computer vision and cross-modal content cognition [5,6]. Its purpose is to enhance the intelligent understanding and analysis of video data by using natural language to describe and interpret video content, aiming to achieve structured summarization and re-expression of visual content. In recent years, video captioning has gained attention for its applications in navigation assistance, human-computer interaction, automatic interpretation, and video monitoring [7].

Since 2010, there have been two primary types of video captioning methods: template-based video captioning [8] and retrieval-based video captioning [9]. Template-based methods require many manually designed annotations, resulting in a single syntactic structure and limited sentence diversity; in contrast, retrieval-based methods are easily limited by the retrieval samples, which makes it difficult to generate accurate natural sentences. With in-depth studies of deep learning in image processing [10] and machine translation [11], deep encoder-decoder models have been applied to video captioning [12,13]. This kind of model regards video captioning as a process of “translation”. In the encoding phase, an encoder encodes the visual contents of video data into feature vectors; in the decoding phase, a decoder maps these feature vectors to semantically aligned natural sentences. In general, encoder-decoder video captioning methods can generate captions that are more in line with human language habits through cross-media semantic alignment. However, because video content is rich and diverse in form, relying solely on the visual features extracted by an encoder can easily miss details and is affected by noise and changes in appearance, which hinders accurate comprehension and description of video contents. To address this issue, an attention mechanism is introduced into an encoder-decoder video captioning method in [14]. This method improves the quality of generated sentences by assigning weights to optimize and select temporal features. Although attention mechanisms can improve the accuracy of generated captions, the semantic alignment issue caused by a single input source still limits accurate video captioning.

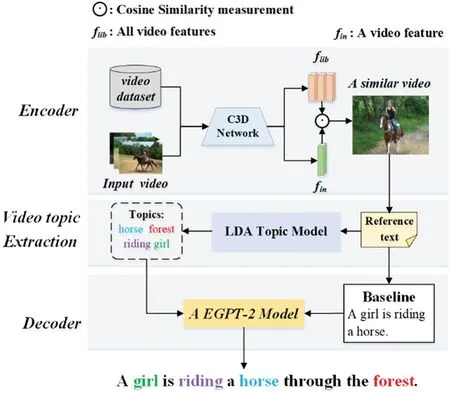

Semantic topics are not limited to textual information but can also convey visual information; they can therefore bring together cross-modal semantic elements in the visual and linguistic domains and alleviate the limitations of limited visual features. Furthermore, incorporating the semantic topics of video data can effectively guide caption generation. Therefore, a video captioning method based on semantic topic-guided generation is proposed, which guides the generation of video captions using external semantic topic information. This method uses the Convolutional 3D (C3D) model to extract high-level spatiotemporal features of video data, and then generates video captions with a decoder, Enhance-TopK Generative Pre-trained Transformer-2 (EGPT-2), based on the extracted semantic topics of the video data. Taking Fig. 1 as an example, given an input video of “a woman rides a horse through the forest”, even though the scene “forest” and the action “through” are not easy to capture in the encoding and decoding phases, a Latent Dirichlet Allocation model can still construct a semantic topic of the video data by retrieving “woman”, “horse”, “riding”, and other keywords from a video with similar semantics, and this topic guides the prediction of the video content “girl riding through the forest”. In this process, to alleviate the long-tail problem in the decoding phase and make the generated captions more accurate, a novel sampling algorithm named Enhance-TopK is designed, which uses a topic correlation coefficient to adjust the probability distribution of the predicted words, further ensuring accurate caption generation. As shown in the decoding phase of Fig. 1, the generated caption is “a girl is riding a horse through the forest” instead of riding through “fields” or “wilderness”.

Figure 1: The general idea of a video captioning method based on semantic topic-guided generation

This paper’s contributions are summarized as follows: (1) an innovative idea of using video semantic topics as a “bridge” to guide video caption generation is proposed, which improves the semantic alignment between the generated natural sentences and the video content, thereby reducing the “deviation” effect in the semantic mapping between video and text; (2) in the decoding phase, a baseline caption of a video and its semantic topic are jointly decoded by an EGPT-2 deep network model. In this process, a sampling algorithm named Enhance-TopK is designed to strengthen the influence of the topic on the next predicted word by adjusting the probability distribution of the predicted words, so that the generated caption is consistent with the video topic while the natural sentences remain fluent; (3) the proposed method is verified on the Microsoft Research Video Description (MSVD) and Microsoft Research-Video to Text (MSR-VTT) datasets, which differ significantly in size, to evaluate the effects of semantic topics on video caption generation, providing a basis for in-depth studies on how the topic information of video content can guide video caption generation.

The remainder of this paper is organized as follows. Section 2 briefly reviews related work on video captioning. Section 3 introduces the video captioning method based on semantic topic-guided generation. Section 4 verifies the performance of this method through experiments. Finally, Section 5 concludes the paper and discusses future work.

2 Related Works

In the early stages of video captioning research, methods mainly consisted of template-based and retrieval-based approaches. Template-based methods involve two phases: syntax generation and semantic constraints. Syntax generation refers to detecting the attributes, concepts, and relations of objects in videos using manually designed features; semantic constraint refers to presetting fixed sentence templates or syntax rules into which the detected objects are combined to form the final natural language descriptions [15]. For example, a study in [16] first detects objects in images and predicts the attributes of the objects and the prepositional relations between them; then a Conditional Random Field (CRF) model is constructed to predict labels in the form of

With in-depth studies of video captioning, encoder-decoder models combining convolutional neural networks with recurrent neural networks [20] have been widely used to generate video captions. For example, Venugopalan et al. [21] used an AlexNet encoder to extract video features, which were then fed into a long short-term memory (LSTM) network to generate natural sentences. However, this method ignores the influence of temporal features on the semantic representation of video data. To address this issue, the study in [22] constructed a long-short-term graph (LSTG) to capture the short-term spatial semantic relationships and long-term transformation dependencies among multiple visual objects, thereby fully exploring their spatiotemporal relationships; it then performs object-relationship inference on the LSTG through a global gated module, further improving the quality of the generated captions. Zheng et al. [23] observed that existing methods prioritize the accuracy of object category prediction in generated captions but often overlook object interactions. Hence, they designed a Syntax-Aware Action Targeting model that detects semantic objects and dynamic information to learn the actions in videos, improving the accuracy of action prediction in the generated captions. The work in [24] proposed a semantic-based video keyframe method that extracts initial video keyframes using a convolutional neural network model and feature windows. The keyframes are then automatically annotated by an image captioning network. Finally, a pre-interactive LSTM network model is proposed to generate video captions, which can fully extract the semantic features of a video from its keyframes. However, the quality of the generated captions depends on the accuracy of the keyframe annotations. Liu et al. [25] proposed an Unpaired Video Captioning with Visual Injection system to address the lack of sufficient paired data for many target languages. However, the semantic information available for video captioning is still limited. The study in [26] presented a novel global-local encoder that produces a rich semantic vocabulary across frames for video captioning. However, this method only enriches the semantic vocabulary by encoding different visual features, so its source of semantic information remains relatively narrow. Recent video captioning studies use an encoder to extract visual features from video data and a decoder to turn these features into human-like sentences that semantically match the video content. However, video content is diverse and complex, so an encoder may lose critical visual information during encoding, and this loss makes it difficult to convey the video content accurately during decoding.

Several studies have addressed this issue. For example, the work in [27] proposed a dual-stream learning method with multiple instances and labels, which minimizes the semantic gap between the original video and the generated captions through a dual-learning mechanism of caption generation and video reconstruction. Furthermore, a Textual-Temporal Attention Model was proposed in [28]. This model introduces pre-detected visual labels from the videos and selects the most relevant labels based on the context. Subsequently, a temporal attention mechanism is used to enhance the semantic consistency between the visual content and the generated sentences. Nevertheless, whether by simple label extraction or by stacking multi-layer attention mechanisms to strengthen the semantic alignment between video and text, these approaches may introduce irrelevant semantic noise, which can further amplify the impact of “semantic deviation” on video caption generation.

3 The Proposed Method

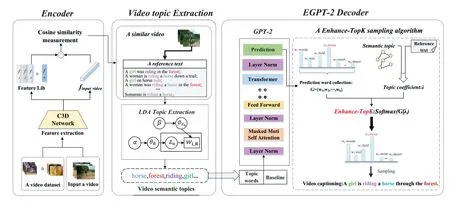

To alleviate the problem of “semantic bias” caused by limited visual information, a video captioning method based on semantic topic-guided generation is proposed; its overall framework is shown in Fig. 2. The approach comprises an encoder, a semantic topic extraction module, and a decoder. In the encoding phase, the encoder uses a C3D deep network model to capture spatiotemporal features. In the semantic topic extraction phase, video reference sentences are retrieved and semantic topics are extracted from them. Because the video reference sentences are visually consistent with the video contents, topic words related to the contents of an input video are extracted from the reference text R in this phase. A probability set of topic words is then obtained, denoted as P_topic = {p_w1, p_w2, ..., p_wn}, where p_wi denotes the topic probability of the ith topic word. Finally, the top n topic words in the ranking of P_topic (n = 6 in this paper), T_v = {t_1, t_2, ..., t_n}, are selected as the semantic topic of the input video. In the decoding phase, to strengthen the semantic alignment between the video contents and the generated natural sentences under the guidance of the semantic topics, an EGPT-2 decoder is constructed to jointly decode a baseline caption C_b and the semantic topics of the input video. In this process, to improve the accuracy of the predicted words, an Enhance-TopK sampling algorithm is designed that uses a topic correlation coefficient λ to adjust the probability distribution of the predicted words, strengthening the influence of the video semantic topics on the word predicted at the next time step.

3.1 Construction of a C3D Deep Neural Network Encoder

Since video data are both temporal and spatial, and the C3D deep network model can extract appearance and motion features from videos simultaneously, this paper uses a pre-trained C3D deep network model with eight convolution layers, five pooling layers, two fully connected layers, and a softmax output layer. Specifically, we take any ith video clip v_i in a video dataset V = {v_1, v_2, ..., v_N} as the input to the C3D deep network model and extract the spatiotemporal features of the video. Suppose a video clip v_i has l video frames, each of size w×h with c channels. We set w = h = 112 and c = 3. In addition, following the parameter settings in [15], we set the convolution kernel size to 3×3×3 and the stride to 1×1×1. To preserve the spatiotemporal features of the videos, we set both the kernel size and the stride of the first pooling layer to 1×2×2, and both the kernel size and the stride of the remaining 3D max-pooling layers to 2×2×2. In the convolution operation, the C3D deep network model convolves a 3D kernel with the cube formed by stacking multiple consecutive frames. Therefore, each feature map generated by the current convolution layer captures spatiotemporal features of multiple consecutive frames from the previous layer. Formally, the value at position (x,y,z) on the jth feature map of the ith layer in the C3D deep network model can be computed using Eq. (1):

where Relu(·) denotes the Rectified Linear Unit activation function; m indexes the feature maps in the previous layer that are connected to the current feature map; S_i, T_i, and R_i are the height, width, and temporal size of the 3D convolution kernels, respectively; the kernel value at position (s,t,r) is the weight connected to the mth feature map in the previous layer; and b_ij denotes the bias of the current feature map. After eight convolutional layers and five max-pooling operations, two fully connected layers produce a spatiotemporal feature vector f_vin of size [1, 4096] that represents the global features of the input video. Moreover, the C3D deep network model uses the Rectified Linear Unit activation function and applies dropout with a probability of 0.5 to prevent overfitting.
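A direct-loop sketch of the 3D convolution in Eq. (1) follows. It assumes Eq. (1) takes the standard 3D-CNN form ReLU(b_ij + Σ_m Σ_s Σ_t Σ_r w·v), in which the kernel weight at offset (s,t,r) multiplies the previous-layer value at (x+s, y+t, z+r). Nested lists stand in for tensors, no padding or stride is applied, and the function names are illustrative; a real C3D model would use an optimised library convolution instead.

```python
def relu(x):
    """Rectified Linear Unit."""
    return max(0.0, x)


def conv3d_value(prev_maps, kernels, bias, x, y, z):
    """Value at (x, y, z) of one output feature map (assumed form of Eq. (1)).

    prev_maps: list over m of 3D arrays (nested lists) from the previous layer
    kernels:   list over m of 3D kernels of size S x T x R, one per input map
    bias:      the bias b_ij of the current feature map
    """
    total = bias
    for v_m, w_m in zip(prev_maps, kernels):      # sum over connected maps m
        for s, plane in enumerate(w_m):           # kernel height offset
            for t, row in enumerate(plane):       # kernel width offset
                for r, w in enumerate(row):       # kernel temporal offset
                    total += w * v_m[x + s][y + t][z + r]
    return relu(total)
```

Stacking such values over every position and output map gives one convolution layer; the pooling layers then shrink the temporal and spatial extent as described above.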

Figure 2: The video captioning method based on semantic topic-guided generation

3.2 Semantic Topic Extraction of Videos

In this paper, the reference sentences of a video are natural sentences related to its content, derived from the descriptions of other videos with similar content. Since the reference sentences of an input video contain valuable semantic information that helps describe the video's topics, the semantic topic distribution of the video data can be extracted from these reference sentences.

First, the matching pairs of “video clip–reference sentences” in a video dataset are defined over all N videos of the dataset, where each pair associates the ith video in the video dataset V with its jth reference sentence. Then, the feature vector of the input video is used to retrieve video data with similar semantics, and the reference sentences of the retrieved video are concatenated to form a reference text R. Subsequently, a sentence is randomly selected from the reference text R as the baseline caption of the video v_i. During the test phase of topic extraction, the input video v_in does not necessarily have corresponding reference sentences, so the video v_res in the video dataset V that is most similar to v_in is retrieved using a similarity measure between videos, and the semantic topic of v_in is learned from the reference text R of v_res.

Before retrieving similar video data, each video in the dataset is represented as a high-dimensional feature vector by the C3D deep network model. Similar video data are then retrieved by calculating the similarities between the input video v_in and all other videos in the dataset. According to [29], video retrieval methods based on Euclidean distance can be significantly affected by the dimensionality of the visual representation, whereas cosine similarity maintains accurate similarity calculation in high dimensions. Moreover, Wang et al. [30] measured the similarity of different videos with a weighted cosine distance between the global feature vectors of video data and the corresponding features of text captions. Therefore, this paper calculates the similarities between an input video and all other videos in the dataset using cosine similarity, as shown in Eq. (2):

where f_vin denotes the feature vector of the input video; f_vi denotes the feature vector of any video v_i in the dataset; and sim(·) denotes the cosine similarity between the input video v_in and the ith video v_i. The video v_res with the highest cosine similarity is selected as the video most similar to v_in.
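A minimal sketch of this retrieval step in plain Python is given below. It assumes each video is already represented by a fixed-length feature vector (for example the 4096-d C3D output); the function names, the `exclude` argument (used to skip the input video when it belongs to the dataset), and the choice of one random reference sentence as the baseline caption are illustrative assumptions rather than the authors' code.

```python
import math
import random


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve_reference_text(f_in, features, captions, exclude=None, rng=random):
    """Find v_res, splice its captions into R, and pick a baseline caption.

    features: one feature vector per dataset video
    captions: list of caption lists, aligned with `features`
    exclude:  index of the input video itself, if it belongs to the dataset
    """
    candidates = [i for i in range(len(features)) if i != exclude]
    res = max(candidates, key=lambda i: cosine_similarity(f_in, features[i]))
    sentences = captions[res]
    reference_text = " ".join(sentences)  # R: concatenated reference sentences
    baseline = rng.choice(sentences)      # assumption: one random sentence of R
    return res, reference_text, baseline
```

Because cosine similarity ignores vector length, two clips with the same feature direction but different activation magnitudes are treated as equally similar, which matches the motivation given for preferring it over Euclidean distance.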

Through the above steps, the video v_res most similar to the input video v_in is retrieved and its reference text R is obtained. This reference text can then be used to extract the semantic topic of the input video. Since a topic model based on Latent Dirichlet Allocation [31] represents the topics of documents as probability distributions and has been used in text clustering [32] and automatic summarization [33], this paper extracts the semantic topics of videos with an LDA-based topic model. Topic extraction from video semantics can be regarded as a generation process of “videos → texts → topics → words”. Note that since the reference text R comes from the captions of similar video data, the words extracted from R by the topic model can serve as visual labels that accurately describe the semantic topics of the video data.

Specifically, the reference text R of a video is obtained first, and then the topic probability of this reference text is calculated according to Eq. (3):

where Z_R,u denotes the uth topic probability of the reference text R and follows a multinomial distribution parameterized by θ_R. Here, θ_R denotes the topic probability distribution of the reference text R and follows a Dirichlet distribution θ_R ∼ Dirichlet(α), where α is the prior parameter of the Dirichlet distribution, set to α = 1/U in this paper, and U denotes the number of topics. Since all videos in a video dataset V can be divided into several categories (for example, MSR-VTT is divided into 20 categories), U is set to 20 for this dataset.

On this basis, the probability distribution of topic words under the uth topic can be obtained through Eq. (4):

where the ith topic word of the reference text R under the uth topic follows a multinomial distribution whose parameter is the topic-word distribution of the uth topic. This topic-word distribution, which generates only the topic words of the uth topic, in turn follows a Dirichlet distribution Dirichlet(β), where β is the prior parameter of the Dirichlet distribution, set to 0.01.
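The generative story behind Eqs. (3) and (4) can be sketched as a toy sampler. This is the textbook LDA generative process, not the paper's inference code: the vocabulary, seed, and function names are illustrative assumptions, while α = 1/U and β = 0.01 follow the values given above. In practice the topic and word distributions are inferred from R (for example by Gibbs sampling or variational inference) rather than sampled forward like this.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw from Dirichlet(alpha) by normalising independent Gamma(a, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    if total == 0.0:  # guard against underflow when every concentration is tiny
        return [1.0 / len(alpha)] * len(alpha)
    return [d / total for d in draws]


def generate_reference_text(vocab, num_topics, length, beta=0.01, seed=0):
    """Toy LDA generative story for one reference text R: topics -> words."""
    rng = random.Random(seed)
    alpha = [1.0 / num_topics] * num_topics  # alpha = 1/U, as in the text
    # phi[u]: word distribution of topic u, drawn from Dirichlet(beta)
    phi = [sample_dirichlet([beta] * len(vocab), rng) for _ in range(num_topics)]
    theta = sample_dirichlet(alpha, rng)     # theta_R ~ Dirichlet(alpha)
    words = []
    for _ in range(length):
        u = rng.choices(range(num_topics), weights=theta)[0]  # topic ~ Mult(theta_R)
        words.append(rng.choices(vocab, weights=phi[u])[0])   # word ~ Mult(phi[u])
    return theta, phi, words
```

A small β such as 0.01 makes each topic's word distribution sparse, so each topic concentrates on a few words, which is what allows a handful of top words to summarise a video.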

Once the topic words under all topics in the reference text R have been extracted, the semantic topic of the input video v_in can be obtained by Eq. (5):

The elements of each row or column of the matrix E are sorted in descending order. Hence, the top n topic words are obtained as the semantic topic T_v = {w_1, w_2, ..., w_n} of the input video v_in.
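The top-n selection can be sketched as follows, under an explicit assumption about Eq. (5): each candidate word is scored by its marginal probability under R, p(w|R) = Σ_u θ_R,u · p(w|u), and the n highest-scoring words (n = 6 in the paper) form T_v. The paper's matrix E may combine the topic and word probabilities differently, and the function name is illustrative.

```python
def top_n_topic_words(theta, phi, vocab, n=6):
    """Rank words by p(w | R) = sum_u theta[u] * phi[u][w] and keep the top n.

    theta: topic distribution of the reference text R (length U)
    phi:   per-topic word distributions (U rows, one column per vocab entry)
    """
    scores = [sum(theta[u] * phi[u][k] for u in range(len(theta)))
              for k in range(len(vocab))]
    order = sorted(range(len(vocab)), key=lambda k: scores[k], reverse=True)
    return [vocab[k] for k in order[:n]]
```

For example, with θ_R = [0.8, 0.2] and two topics favouring "woman"/"horse" and "ride"/"forest", the words are ranked woman, horse, ride, forest.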

3.3 The Construction of an EGPT-2 Decoder

Inspired by open-domain question answering [34], video captions are not generated directly from visual features; instead, a GPT-2 language model [35] is introduced to generate them. Since GPT-2 has been pre-trained on a large amount of unlabeled data, it has learned rich semantic information from external text and reduces the dependence on labeled data. In the decoding phase, to obtain external semantic information from the video semantic topics and enrich the semantics of the captions, the visual semantic topics and a baseline caption of the video content are jointly decoded by the GPT-2 language model. In addition, to alleviate the long-tail problem in the decoding phase, a novel Enhance-TopK sampling algorithm is combined with the GPT-2 language model to construct an EGPT-2 decoder. This decoder adjusts the probability distribution of the predicted words using the topic correlation coefficient λ, reducing the impact of repeated, irrelevant predicted words on the accuracy of the generated captions.

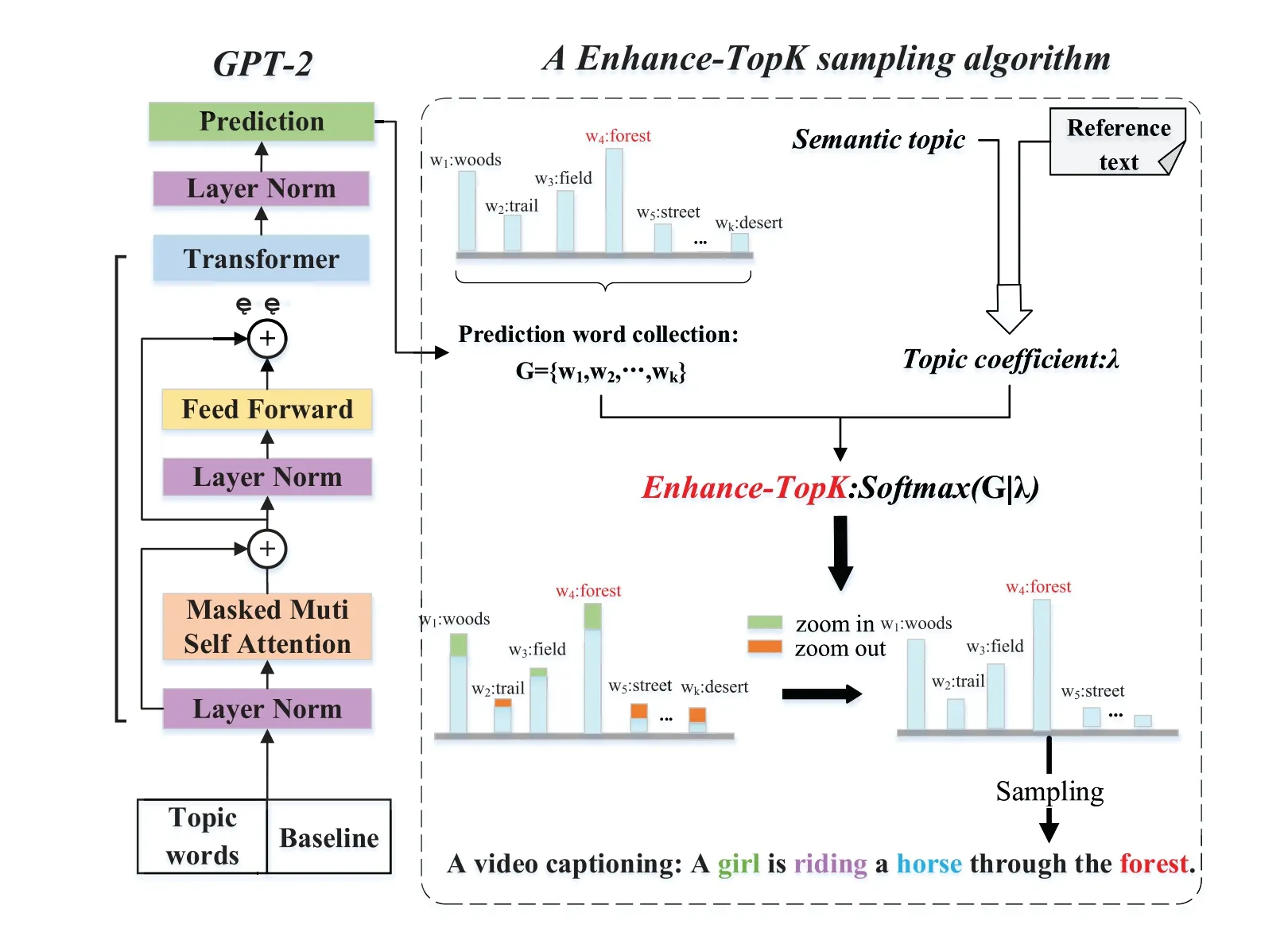

As shown in Fig. 3, the EGPT-2 decoder contains a stack of 12 Transformer layers. Specifically, the input data f_in of the EGPT-2 decoder is defined in the form of a

where head_i denotes the semantic features learned by the self-attention mechanism in the ith feature subspace, i ∈ [1, m]; selfAttention(·) denotes the self-attention function; F_in denotes the word embedding matrix of all tokens in the input data; μ_Q, μ_K, and μ_V are learnable parameters; and the “·” operator denotes matrix multiplication. Transformer(·) denotes the output of the jth Transformer layer (j = 12 and m = 8 in this paper); concat(·) denotes the concatenation of the semantic features learned by self-attention in the different subspaces; and μ_s is a transformation matrix that keeps the size of the semantic features unchanged.
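The multi-head self-attention described above can be sketched in plain Python. The sketch uses the standard scaled dot-product form softmax(QKᵀ/√d_k)V with Q = F_in·μ_Q, K = F_in·μ_K, V = F_in·μ_V, plus a GPT-2-style causal mask; the scaling factor and the mask come from the standard Transformer/GPT-2 design and are assumptions about the exact equations here. Lists stand in for tensors, layer normalisation, residual connections, and the feed-forward sublayer are omitted, and the toy sizes are far smaller than the paper's 768-dimensional embeddings and m = 8 heads.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(xs):
    """Numerically stable softmax; -inf entries receive probability 0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(f_in, mu_q, mu_k, mu_v, causal=True):
    """One head: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(f_in, mu_q), matmul(f_in, mu_k), matmul(f_in, mu_v)
    d_k = len(k[0])
    out = []
    for i, q_i in enumerate(q):
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        if causal:  # GPT-2 style mask: token i only attends to tokens 0..i
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        out.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return out


def multi_head(f_in, heads, mu_s):
    """concat(head_1, ..., head_m) · mu_s, where heads = [(mu_q, mu_k, mu_v), ...]."""
    outs = [self_attention(f_in, *h) for h in heads]
    concat = [sum((o[t] for o in outs), []) for t in range(len(f_in))]
    return matmul(concat, mu_s)
```

The projection μ_s maps the concatenated head outputs back to the model width, which is what keeps the feature size unchanged from layer to layer.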

Figure 3: The designed process of an EGPT-2 decoder

Subsequently, the feature vector f_t is multiplied by the word embedding matrix D_emb of the vocabulary D, and the result is normalized to obtain an initial probability set of predicted words G_p = {p_w1, p_w2, ..., p_wn}, where p_wi denotes the predicted probability of the ith word in the vocabulary D and p_wn that of the nth word. The calculation is shown in Eq. (9):

where softmax(·) denotes the softmax normalization function and D_emb has a size of [768, 50257]. When a greedy sampling algorithm is used for word prediction, the predicted word x_t at time step t is obtained using Eq. (10):

According to Eq. (10), the greedy sampling algorithm selects the word with the highest probability at each time step. However, this method often leads to repetitive or meaningless video descriptions. With pure random sampling, the predicted word set G_p has 50257 candidates, and the many low-probability words in its long tail can make the generated sentences illogical and difficult to read. To alleviate this issue, references [36] and [37] used decoding methods based on beam search, which can improve the accuracy of the generated sentences. However, such methods are prone to falling into local optima, producing rigid, incoherent, or repetitive sentences. To suppress the long-tail effect and make the generated captions diverse in expression while keeping the sentences fluent, a novel Enhance-TopK sampling algorithm is constructed in this paper, as shown in Fig. 4. This sampling algorithm uses the feature vectors of the video data to calculate a topic correlation coefficient, which adjusts the probability distribution of the predicted words so that words related to the semantic topic of the video are generated.
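The contrast between greedy decoding and truncated sampling can be sketched in a few lines. The sketch shows the standard softmax, greedy argmax, and plain Top-K sampling (not yet the Enhance-TopK variant); the toy logits stand in for the product of f_t and D_emb, and the function names are illustrative.

```python
import math
import random


def softmax(logits):
    """Normalise raw scores into probabilities (cf. Eq. (9))."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy(probs):
    """Greedy choice (cf. Eq. (10)): index of the highest-probability word."""
    return max(range(len(probs)), key=probs.__getitem__)


def top_k_sample(probs, k, rng):
    """Plain Top-K sampling: keep the k most probable words, renormalise, sample."""
    top = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)[:k]
    mass = sum(probs[i] for i in top)
    weights = [probs[i] / mass for i in top]
    return rng.choices(top, weights=weights)[0]
```

Truncating to the K most probable words discards the long tail of the 50257-word distribution before sampling, while still leaving some variety among plausible candidates; with K = 1 the procedure reduces to greedy decoding.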

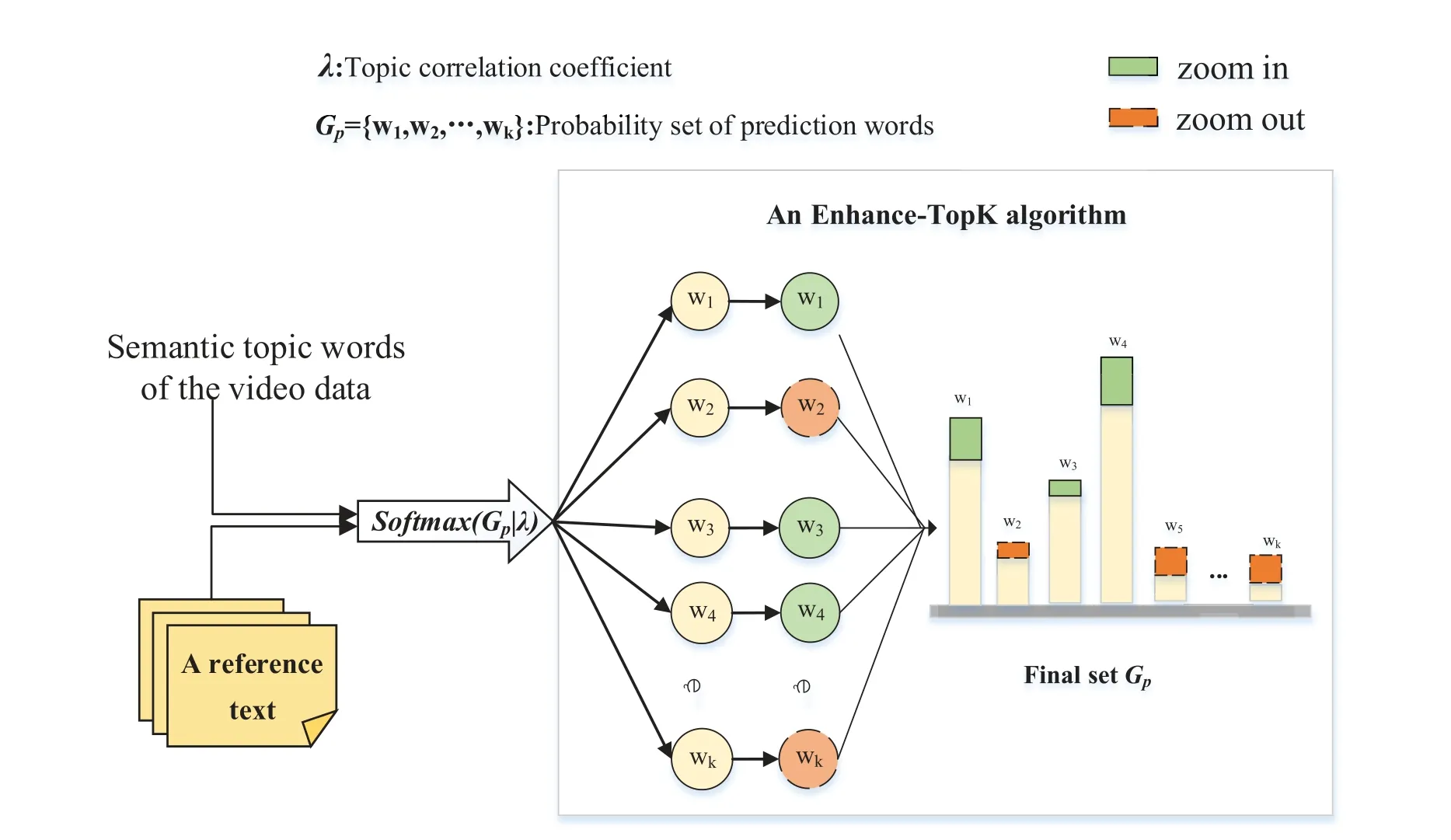

Figure 4: The idea of an Enhance-TopK sampling algorithm

Compared with existing Top-K sampling algorithms, the Enhance-TopK sampling algorithm considers the influence of topics on the probability distribution of predicted words. The “zoom in” in Fig. 4 shows the words in G_p that are strongly affected by the topic through the topic correlation coefficient λ. For instance, the occurrence probabilities of the words “w1”, “w3”, and “w4” in G_p are further amplified by the topics under the reinforcement of λ. Conversely, the “zoom out” shows that the occurrence probabilities of words in G_p that are insensitive to the semantic topics at this moment, such as “w2” and “wk”, are further reduced.

Specifically, the Enhance-TopK sampling algorithm first concatenates the topic words of an input video v_in to form the semantic topic T_v, as shown in Eq. (11):

where t_i denotes the ith topic word of a video and “⊕” denotes the concatenation of different topic words. Then, a pre-trained Sentence-Transformer deep network model [38] is used to compute embeddings of the semantic topic T_v and a reference sentence y_i, as shown in Eq. (12):

where f_yi denotes the feature vector of the reference sentence y_i; sentenceTransformer(·) denotes the function of the Sentence-Transformer deep network model, whose input is the word embedding of each wth word in y_i; head_j denotes the semantic feature output in the jth subspace; and θ denotes the hyperparameters of the Sentence-Transformer deep network model. Similarly, the feature vector f_Tv of the semantic topic T_v can be obtained.

On this basis, the topic correlation coefficient λ of the input video v_in is calculated from the semantic topic T_v and the reference sentences y_i, as shown in Eq. (13):

where m is the number of sentences in the reference text R.
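The only stated detail of Eq. (13) is that it aggregates over the m sentences of R. A natural reading, assumed in the sketch below, is the mean cosine similarity between the topic embedding f_Tv and each sentence embedding f_yi. The embeddings would come from a Sentence-Transformer model; here they are plain lists, and both the averaging form and the function names are illustrative assumptions.

```python
import math


def cosine(a, b):
    """Cosine similarity of two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def topic_correlation(f_topic, sentence_features):
    """Assumed form of Eq. (13): mean cosine similarity between the topic
    embedding f_Tv and the embeddings f_yi of the m sentences in R."""
    m = len(sentence_features)
    return sum(cosine(f_topic, f_y) for f_y in sentence_features) / m
```

Under this reading λ is high when the extracted topic words agree with most reference sentences, and low when the retrieved captions are topically scattered.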

Then, the top K words (K = 30 in this paper) in the vocabulary D, ranked by predicted probability in descending order, are selected as the initial sampling set G_w at time step t. To enhance the influence of the semantic topics on word prediction, the topic correlation coefficient λ is used to renormalize the sampling set G_w, as shown in Eq. (14):

where K is the number of predicted words in the sampling set G_w. In addition, the probabilities of predicted words outside the sampling set G_w are set to 0. On this basis, the predicted word at time step t is obtained using Eq. (15):

Finally, the predicted words at successive time steps are concatenated until the next predicted word is marked with
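The renormalisation in Eqs. (14) and (15) can be sketched as follows. The exact form of Eq. (14) is not available here, so the sketch assumes one simple rule: words of the semantic topic T_v that fall inside the Top-K set G_w have their probability multiplied by (1 + λ), the K survivors are renormalised, and every word outside G_w is set to 0. The function name and this boosting rule are illustrative assumptions, not the authors' formula.

```python
import random


def enhance_top_k(probs, vocab, topic_words, lam, k=30, rng=random):
    """Hypothetical Enhance-TopK step over one next-word distribution.

    Assumed rule: inside the Top-K set G_w, words that belong to the semantic
    topic T_v are scaled by (1 + lam); the K survivors are renormalised and
    every word outside G_w gets probability 0.
    Returns the sampled word and the adjusted distribution over the vocabulary.
    """
    top = sorted(range(len(probs)), key=probs.__getitem__, reverse=True)[:k]
    boosted = {i: probs[i] * (1.0 + lam) if vocab[i] in topic_words else probs[i]
               for i in top}
    total = sum(boosted.values())
    dist = [boosted.get(i, 0.0) / total for i in range(len(probs))]
    idx = rng.choices(top, weights=[dist[i] for i in top])[0]  # sample only from G_w
    return vocab[idx], dist
```

With λ close to 0 this step reduces to plain Top-K sampling; a larger λ shifts more probability mass toward topic words such as “girl” or “horse”, which corresponds to the “zoom in” and “zoom out” effects in Fig. 4.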

3.4 Training

In the encoding phase, a pre-trained C3D deep network model is used to extract the spatiotemporal features of the videos, with an input size of 3×16×112×112. In the semantic topic extraction phase, the reference sentences of each video in the training set are concatenated to form a video reference text R. After word segmentation and stop-word removal, the semantic topics of the videos are extracted using Latent Dirichlet Allocation. In the decoding phase, an EGPT-2 deep network model is constructed to jointly decode the baseline captions and semantic topics of the videos, and to predict words with the help of the semantic topics to generate video captions. In the training phase, the input f_in of the EGPT-2 deep network model is a

where the preceding sequence consists of all words predicted before time step t.

Finally, the EGPT-2 network model is trained by minimizing the negative log-likelihood loss in Eq. (17):

where |S| denotes the size of the training set, and T_i denotes the length of the ith sentence.
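Assuming Eq. (17) takes the usual form L = −(1/|S|) Σ_i Σ_{t=1}^{T_i} log p(w_t^i | w_{<t}^i, ·), that is, a sum over the T_i time steps of each caption averaged over the |S| training captions, the loss can be computed as below. Normalising by |S| rather than by the total number of tokens is an assumption consistent with the definitions above; the function name is illustrative.

```python
import math


def caption_nll(batch_token_probs):
    """Average negative log-likelihood over a set of captions (cf. Eq. (17)).

    batch_token_probs[i][t] is the probability the model assigned to the
    ground-truth word at step t of the i-th caption, given the words before it.
    """
    total = 0.0
    for token_probs in batch_token_probs:                # i = 1..|S|
        total -= sum(math.log(p) for p in token_probs)   # t = 1..T_i
    return total / len(batch_token_probs)
```

A caption predicted with probability 1 at every step contributes zero loss, and every halving of a ground-truth word's probability adds log 2 to that caption's term.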

In addition, the model is trained with a batch size of 8 for the MSVD dataset and 16 for the MSR-VTT dataset. The number of training iterations is set to 20, and the learning rate is set to 1×10⁻⁴. To avoid overfitting, the dropout rate is set to 0.1, and the Adam optimizer, a stochastic gradient descent-based method [39], is used for optimization. After training, the parameters with the minimum loss on the training set are selected as the best parameters of the EGPT-2 deep network model.
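For reference, the training settings reported above can be collected in one place. The dataclass below is only a hypothetical container: its field names are illustrative, `iterations` keeps the paper's wording rather than assuming epochs or optimizer steps, and K = 30 and n = 6 are taken from Section 3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EGPT2TrainConfig:
    """Training hyperparameters as reported in the text (field names are illustrative)."""
    dataset: str
    batch_size: int
    iterations: int = 20          # "number of training iterations"
    learning_rate: float = 1e-4
    dropout: float = 0.1
    optimizer: str = "adam"
    top_k: int = 30               # K in Enhance-TopK
    num_topic_words: int = 6      # n top topic words forming T_v


MSVD = EGPT2TrainConfig(dataset="MSVD", batch_size=8)
MSR_VTT = EGPT2TrainConfig(dataset="MSR-VTT", batch_size=16)
```

Only the batch size differs between the two datasets, so every other field carries a shared default.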

4 Experimental Evaluation

4.1 Dataset and Evaluation Indicator

This paper evaluates the proposed method through extensive experiments on the MSVD [40] and MSR-VTT [41] datasets. MSVD is a collection of 1970 open-domain video clips from YouTube covering various topics such as sports and cooking. Each video has around 40 natural sentences as labels. The dataset is partitioned into a training set of 1200 video clips, a validation set of 100 video clips, and a test set of 670 video clips. MSR-VTT is a larger dataset consisting of 10,000 videos and 200,000 captions, with an average of 20 annotation sentences per video. It is divided into a training set of 6513 video clips, a validation set of 609 video clips, and a test set of 2878 video clips.

The evaluation metrics Bilingual Evaluation Understudy (BLEU) [42], Metric for Evaluation of Translation with Explicit Ordering (METEOR) [43], Recall Oriented Understudy for Gisting Evaluation-longest common subsequence (ROUGE-L) [44], and Consensus-based Image Description Evaluation (CIDEr) [45] are widely used to evaluate the quality of generated video captions. Since the number of n-grams shared by the generated and reference sentences reflects caption quality well, the experiments focus in particular on BLEU-4.
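To make the n-gram overlap idea concrete, here is a compact sentence-level BLEU-4 in plain Python: clipped n-gram precisions for n = 1 to 4, their geometric mean, and a brevity penalty against the closest reference length. It is an illustrative sketch without smoothing; reported scores are normally computed at corpus level with a standard evaluation toolkit, so values from this function are not comparable to the tables.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu4(candidate, references):
    """Sentence-level BLEU-4: clipped n-gram precisions (n = 1..4), their
    geometric mean, and a brevity penalty. No smoothing, so any n-gram order
    with zero matches yields 0.0."""
    log_precision = 0.0
    for n in range(1, 5):
        cand = ngrams(candidate, n)
        max_ref = Counter()
        for ref in references:
            max_ref |= ngrams(ref, n)  # keep the highest count seen in any reference
        clipped = sum(min(count, max_ref[g]) for g, count in cand.items())
        total = sum(cand.values())
        if clipped == 0 or total == 0:
            return 0.0
        log_precision += 0.25 * math.log(clipped / total)
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_precision)
```

For instance, “a girl is riding a horse” against the reference “a woman is riding a horse through the forest” shares n-grams at every order but is penalised for being shorter than the reference, so its score lies strictly between 0 and 1.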

The experiments are implemented in Python 3.8 with the PyTorch 1.7.0 framework on a Linux operating system. The GPU is an RTX A5000, and the environment provides 42 GB of memory and 100 GB of hard disk space. CUDA 11.0 with cuDNN 8.0 is used to accelerate the computation of the proposed method.

4.2 Experimental Results Analysis

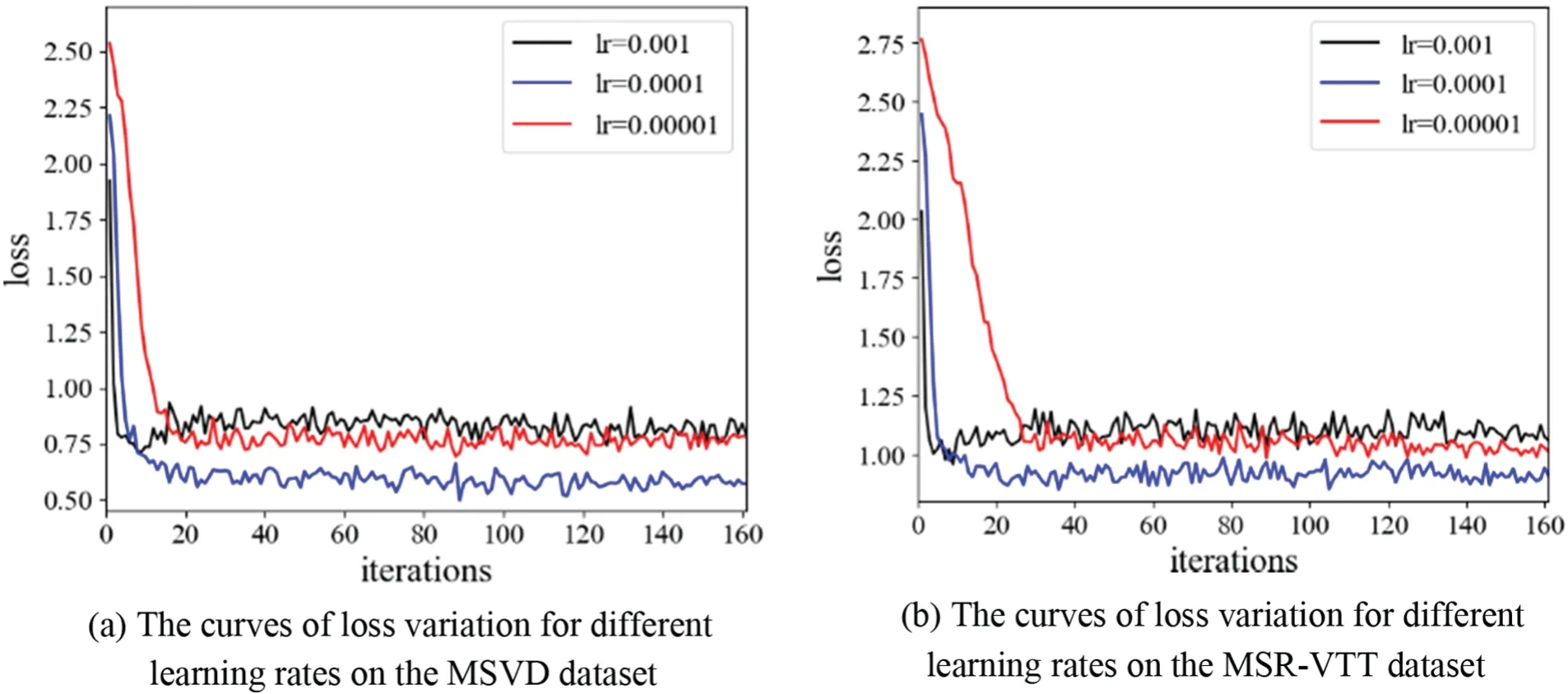

1) Training analysis of the proposed method

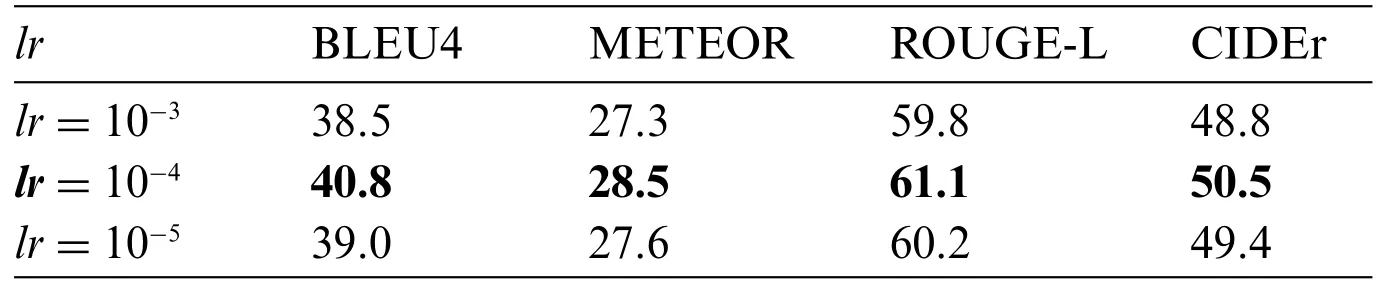

The proposed method uses 48000 and 130260 reference sentences for model training on the MSVD and MSR-VTT datasets, respectively. To find the learning rate that yields the best performance, the learning rate is set to 1×10⁻³, 1×10⁻⁴, and 1×10⁻⁵ in turn. The experimental results are shown in Fig. 5. After 20 iterations, the loss converges smoothly, and a learning rate of 1×10⁻⁴ gives the lowest loss on both datasets. Table 1 likewise shows that all indicators of the proposed method are best when the learning rate is 1×10⁻⁴. A likely explanation is that a very small learning rate can trap the method in a local optimum. Conversely, if the learning rate is too high, the output error has a larger influence on the network parameters during backpropagation, so the parameters update too rapidly and the model struggles to converge. With a learning rate of 1×10⁻⁴, the negative log-likelihood loss defined in Eq.(17) converges as the network parameters are updated in each iteration, giving the best training results.
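As a quick consistency check, the training-sentence counts of 48000 and 130260 follow from the splits in Section 4.1 if each MSVD training clip contributes 40 reference sentences and each MSR-VTT training clip contributes 20. Those per-clip figures are an assumption based on the stated averages ("around 40" and "an average of 20"); the paper does not say exactly how the counts were obtained.

```python
# Split sizes stated in Section 4.1: (training, validation, test) clips.
splits = {"MSVD": (1200, 100, 670), "MSR-VTT": (6513, 609, 2878)}
# Assumed references per training clip, taken from the per-video figures in Section 4.1.
refs_per_clip = {"MSVD": 40, "MSR-VTT": 20}

total_clips = {name: sum(sizes) for name, sizes in splits.items()}
train_refs = {name: splits[name][0] * refs_per_clip[name] for name in splits}

print(total_clips)  # {'MSVD': 1970, 'MSR-VTT': 10000}
print(train_refs)   # {'MSVD': 48000, 'MSR-VTT': 130260}
```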

Table 1: Comparison of results with different learning rates on the MSR-VTT dataset

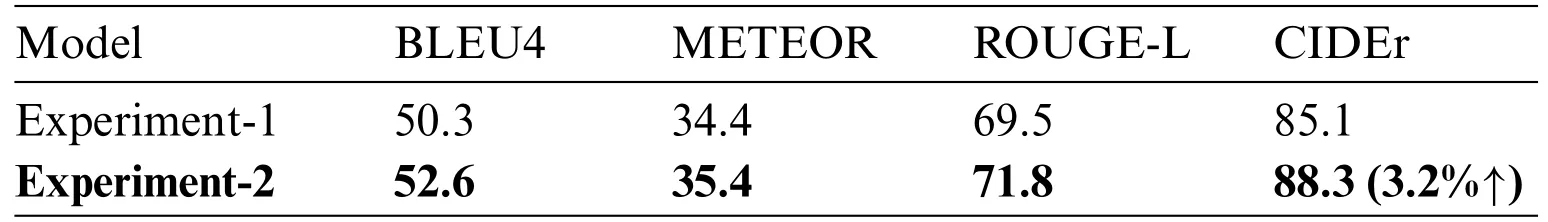

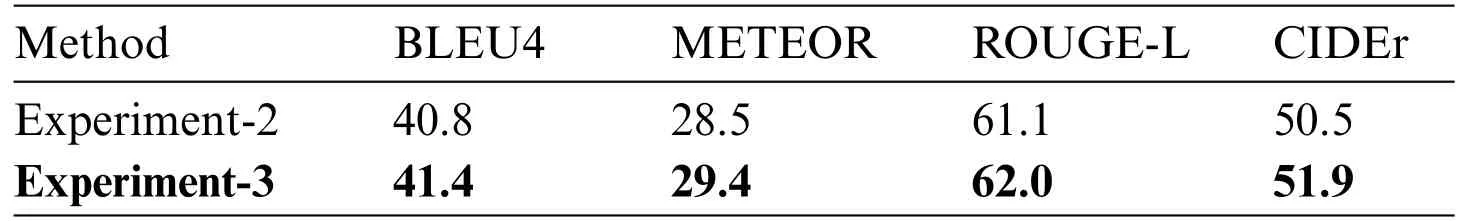

Table 2: Comparison of results of ablation experiments on the MSVD dataset

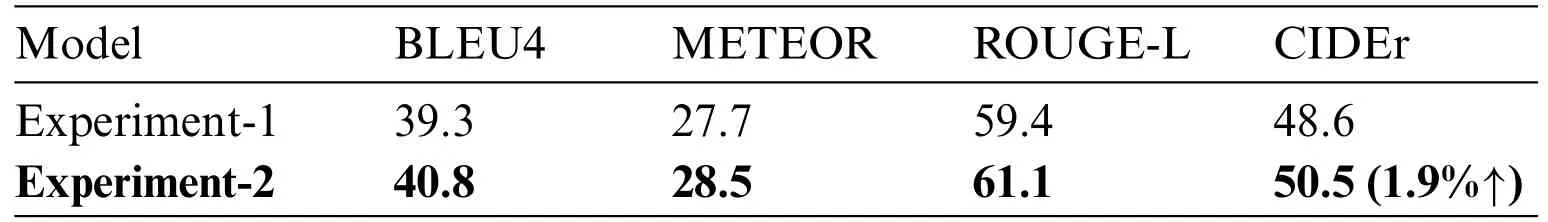

Table 3: Comparison of results of ablation experiments on the MSR-VTT dataset

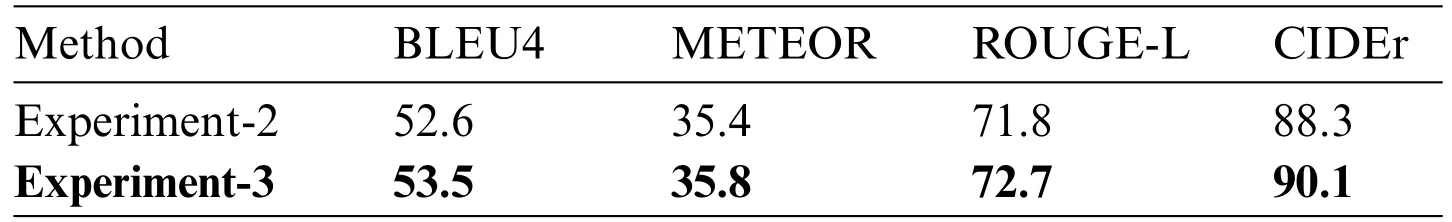

Table 4: Results of Experiment-3 on the MSVD dataset

Table 5: Results of Experiment-3 on the MSR-VTT dataset

Figure 5: Loss curves of the EGPT-2 deep network model during training on different datasets

To further evaluate the proposed method, its performance indicators at different learning rates are compared on the MSR-VTT dataset. The experimental results are presented in Table 1.

The results in Table 1 indicate that, with a suitable learning rate, the proposed method generates video captions that are coherent with the video content without overfitting.

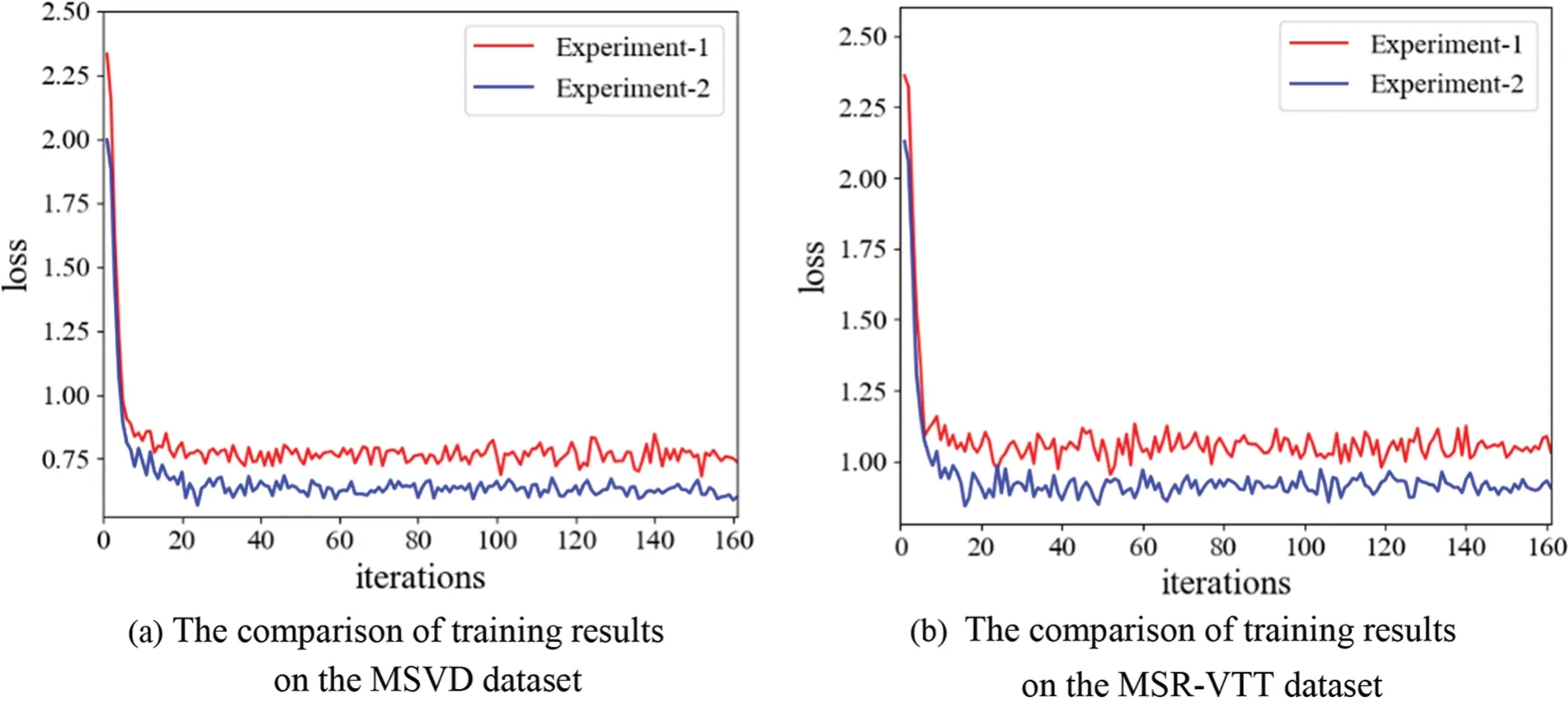

2) Ablation experiments

To clarify the respective contributions of the video semantic topics and the Enhance-TopK sampling algorithm to word prediction, the following ablation experiments are conducted on both datasets.

Note that, to ensure the training effect of the EGPT-2 deep network model, Experiment-1 and Experiment-2 both use the standard greedy sampling algorithm in the training phase. Experiment-3 builds on these experiments, is used only to verify the effectiveness of the Enhance-TopK sampling algorithm, and does not involve the training phase. Therefore, the comparison of training the EGPT-2 deep network model covers only Experiment-1 and Experiment-2, as shown in Fig. 6.

Figure 6: Comparison of the training results of the EGPT-2 deep network model

Fig. 6 shows that Experiment-1 and Experiment-2 both converge smoothly after 20 iterations, and the loss of Experiment-2 is markedly lower than that of Experiment-1. A likely reason is that Experiment-2 embeds the semantic topic features of videos in the encoding and decoding process, so it can predict target words with the help of semantic topics, which improves the accuracy of video captioning. For example, when the semantic topics of an input video include “slicing” and “cucumber”, the generated caption becomes more specific and avoids vague descriptions such as “A person is cooking”. To further verify the impact of the semantic topics on model performance, comparative experiments are conducted separately on the two datasets, and the experimental results are shown in Tables 2 and 3.

Tables 2 and 3 report ablation experiments on the impact of semantic topics on model performance. All indicators of Experiment-2 are better than those of Experiment-1 on both datasets, most notably CIDEr, which improves by 3.2% on MSVD and 1.9% on MSR-VTT. A likely reason is that Experiment-2 captures the topic information of the video content through video semantic topics. During decoding, the video semantic topic features provide additional information for predicting the sentence, so the generated captions are both consistent with the video content and accurate. CIDEr in particular weights n-grams by TF-IDF, which gives high weight to n-grams that occur frequently in the reference sentences of a given video but rarely across the dataset, and low weight to n-grams that appear in the references of many videos. A higher CIDEr score therefore indicates that, with the help of video semantic topics, the decoder captures the distinctive semantic information of each video more accurately, so the generated sentences agree more closely with human consensus.
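The TF-IDF weighting behind CIDEr can be sketched as follows, following the original CIDEr definition: per n-gram order, the cosine similarity between TF-IDF vectors of the candidate and each reference, averaged over references and then over n = 1 to 4. This minimal sketch omits the clipping, Gaussian length penalty, and ×10 scaling of the CIDEr-D variant that many caption evaluation toolkits report, so its values are not comparable with Tables 2 and 3. The function names and toy sentences are illustrative.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def tfidf_vector(tokens, n, doc_freq, num_videos):
    """TF-IDF weights of a sentence's n-grams (term frequency x log inverse document frequency)."""
    counts = ngram_counts(tokens, n)
    total = sum(counts.values())
    return {
        gram: (count / total) * math.log(num_videos / max(1.0, doc_freq[gram]))
        for gram, count in counts.items()
    }


def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * b.get(gram, 0.0) for gram, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0


def cider(candidate, video_refs, corpus_refs, max_n=4):
    """Original CIDEr of one candidate (no CIDEr-D clipping, length penalty, or x10 scaling).

    video_refs: reference token lists of the candidate's video.
    corpus_refs: reference token lists of every video, used for document frequency.
    """
    num_videos = len(corpus_refs)
    score = 0.0
    for n in range(1, max_n + 1):
        # Document frequency: number of videos whose references contain the n-gram.
        doc_freq = Counter()
        for refs in corpus_refs:
            doc_freq.update({gram for ref in refs for gram in ngram_counts(ref, n)})
        cand_vec = tfidf_vector(candidate, n, doc_freq, num_videos)
        sims = [cosine(cand_vec, tfidf_vector(ref, n, doc_freq, num_videos)) for ref in video_refs]
        score += (sum(sims) / len(sims)) / max_n  # uniform weight 1/N over n-gram orders
    return score


video0 = [["a", "man", "is", "slicing", "a", "cucumber"]]
video1 = [["the", "dog", "runs", "on", "grass"]]
corpus = [video0, video1]
print(round(cider(video0[0], video0, corpus), 6))  # 1.0
```

An n-gram found in the references of every video gets an IDF of log(1) = 0, which is why generic phrases contribute nothing and video-specific words such as “cucumber” dominate the score.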

In addition, the Enhance-TopK sampling algorithm used in the decoding phase is also effective. As shown in Tables 4 and 5, Experiment-3 achieves good results on both datasets. A likely reason is that the Enhance-TopK sampling algorithm uses the topic correlation coefficient λ to increase the probabilities of topic-related words in the predicted word set G. Adjusting the probability distribution of the predicted words in this way suppresses the long-tail effect in the decoding stage and further improves the accuracy of the generated video captions.
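The internals of Enhance-TopK are not given in this section. Purely as a hypothetical sketch, assuming the enhancement multiplies the probability of each topic word in the candidate set G by (1 + λ) and then renormalizes, the adjustment could look like the code below. The function name, the multiplicative form, and the example probabilities are all assumptions, not the authors' algorithm.

```python
def enhance_topic_words(candidates, topic_words, lam):
    """HYPOTHETICAL topic enhancement over a candidate word set G.

    candidates: dict mapping each word in G to its predicted probability.
    topic_words: set of semantic topic words of the video.
    lam: assumed topic correlation coefficient (lambda >= 0).
    """
    # Scale up topic words; other candidates keep their original probability.
    boosted = {
        word: p * (1.0 + lam) if word in topic_words else p
        for word, p in candidates.items()
    }
    total = sum(boosted.values())
    # Renormalize so the adjusted scores form a probability distribution again.
    return {word: p / total for word, p in boosted.items()}


G = {"cooking": 0.4, "slicing": 0.2, "the": 0.3, "cucumber": 0.1}
adjusted = enhance_topic_words(G, {"slicing", "cucumber"}, lam=1.0)
print(adjusted)  # "slicing" now ties with "cooking"; non-topic words lose probability mass
```

Under this assumption, a larger λ shifts more mass toward topic words such as “slicing”, which is the mechanism the paragraph credits for avoiding vague captions.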

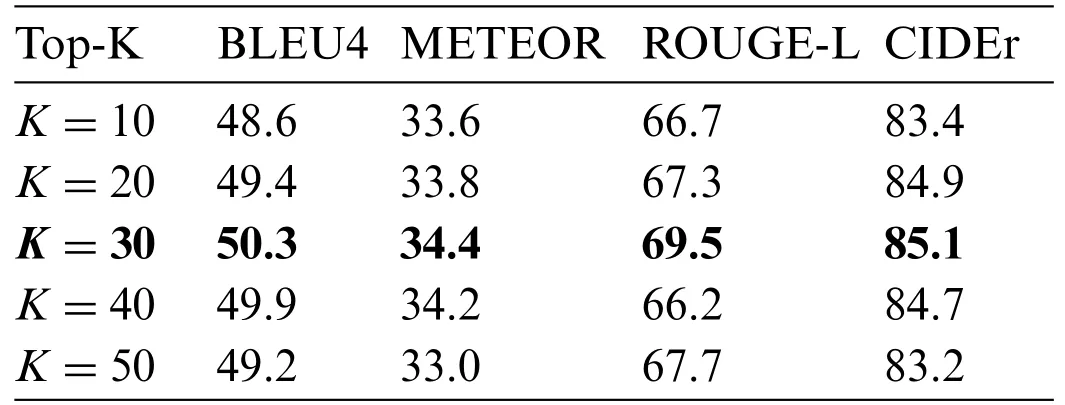

3) Parameter setting

To ensure that the generated video captions conform to the video content while keeping the natural language fluent and plausible, the novel Enhance-TopK sampling algorithm is applied in the decoding stage. To determine the optimal initial sampling interval K of this algorithm, K is set to 10, 20, 30, 40, and 50 for experimental verification on the MSVD dataset. The experimental results are shown in Table 6.

Table 6: Comparison of experimental results on different sampling intervals of Top-K

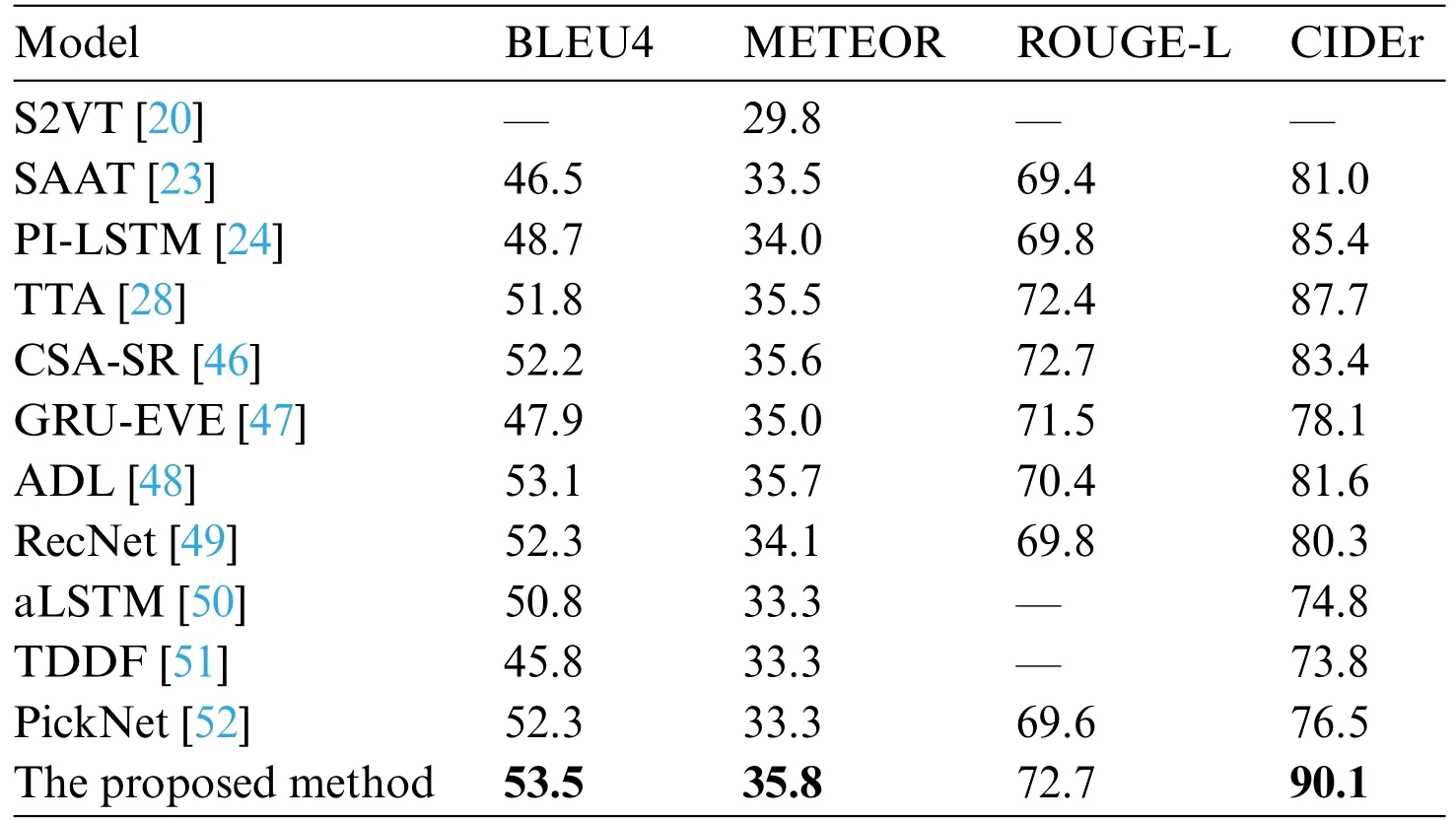

Table 7: Comparison of experimental results of multiple methods on the MSVD dataset

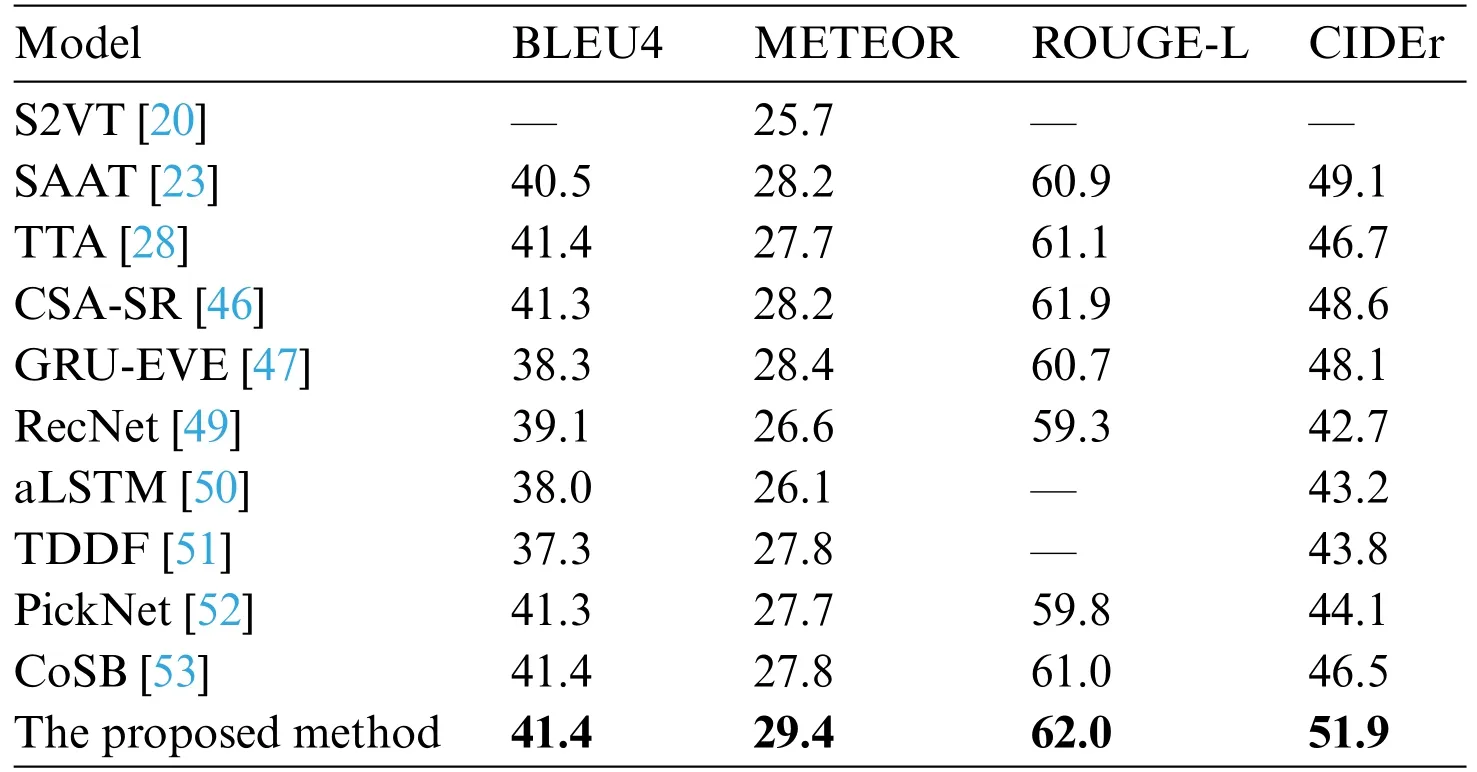

Table 8: Comparison of experimental results of multiple methods on the MSR-VTT dataset

The results in Table 6 show that every indicator is highest when the sampling interval K equals 30. A likely explanation is that when K is less than 30, the sampling interval is small, so the next word is likely to be the maximum-probability word, which produces repetitive or flat sentences such as “A woman is riding horse riding horse” and weakens the semantics of the generated captions. When K is greater than 30, the sampling interval is too large, which may cause the model to sample long-tail words and make the generated sentences incoherent. Therefore, the initial sampling interval K is set to 30.
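The role of K can be illustrated with plain Top-K sampling: keep the K most probable words, renormalize, and sample. This generic sketch is not the Enhance-TopK algorithm itself; with K = 1 it reduces to greedy decoding, which is the repetition-prone regime described above. The toy distribution and function names are made up for illustration.

```python
import random


def top_k_filter(probs, k):
    """Keep the k most probable words and renormalize their probabilities."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {word: p / total for word, p in top}


def sample_top_k(probs, k, rng):
    """Draw one word from the renormalized top-k distribution."""
    dist = top_k_filter(probs, k)
    words = list(dist)
    return rng.choices(words, weights=[dist[w] for w in words], k=1)[0]


probs = {"riding": 0.5, "horse": 0.3, "galloping": 0.15, "banana": 0.05}
rng = random.Random(0)
print(top_k_filter(probs, 1))                              # {'riding': 1.0}
print(sample_top_k(probs, 2, rng) in {"riding", "horse"})  # True
```

A larger K admits lower-probability words such as “banana” into the sampling pool, which corresponds to the long-tail risk when K exceeds 30.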

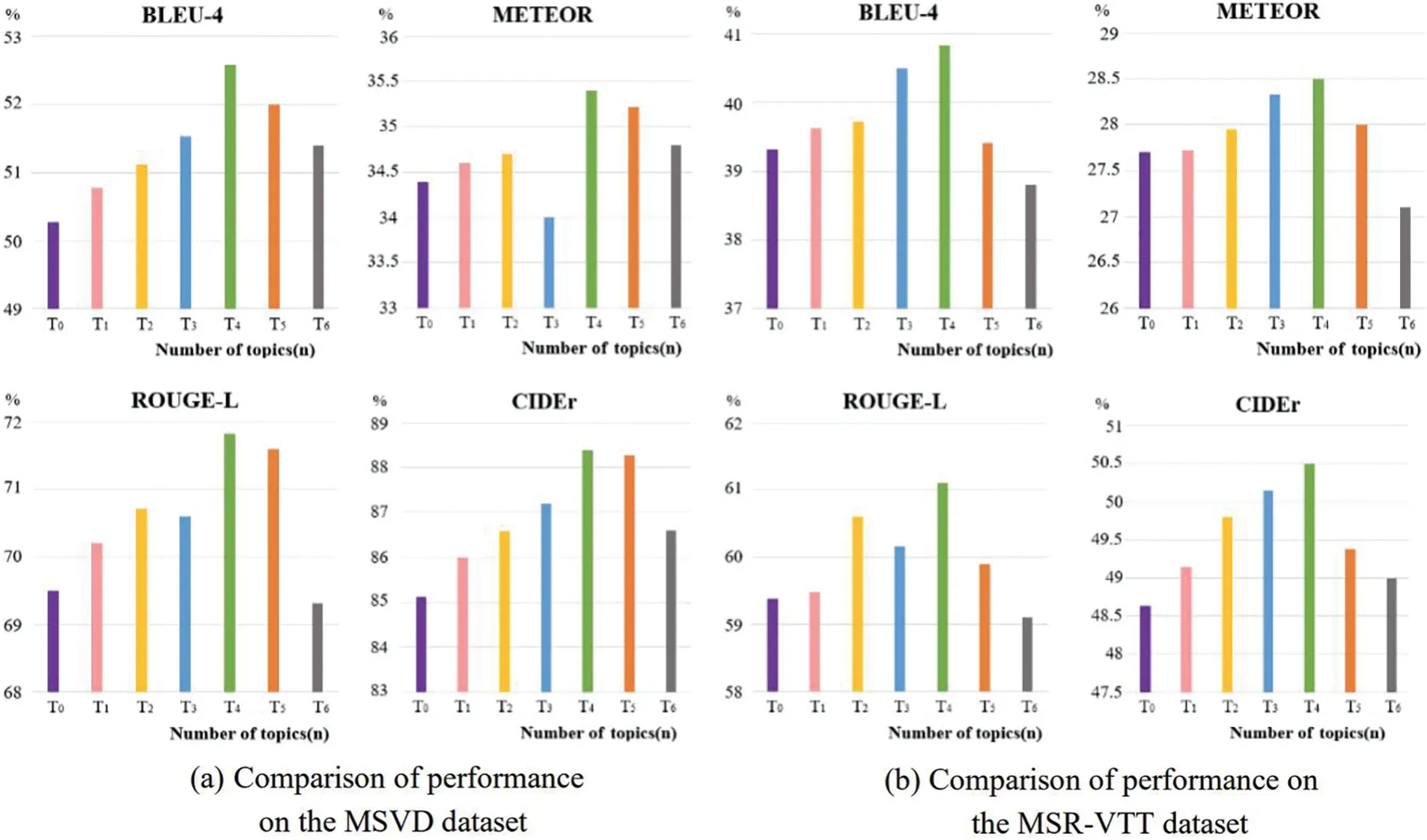

The proposed method learns additional semantic information by embedding the semantic topics of the video content when predicting the next word. In this process, the number of semantic topic words is an important parameter. To choose an appropriate value, the number of topic words per video is varied from 0 to 6 and the performance of the method is measured. The experimental results are shown in Fig. 7.

Figure 7: Comparison of performance metrics for different numbers of topic words on the MSVD and MSR-VTT datasets

To evaluate the impact of the number of topic words on the performance of the proposed method, comparative experiments with different numbers of topic words are conducted in Experiment-2. Fig. 7 shows that all indicators of the proposed method reach their best values on both datasets when the number of topic words is 4. A likely explanation is that with too few topic words, the EGPT-2 decoder misses some key objects and actions in the video content, whereas with too many, irrelevant noise enters the EGPT-2 decoder and the generated captions deviate from the video content. For example, when the target sentence is “A monkey is grasping the dog’s tail.”, setting the number of topic words to 0 may cause the generated caption to lose key information about the monkey, such as the actions “attack” and “grab”. Setting it to 6 may introduce irrelevant semantic noise such as “animals” and “roadside”, leading to captions inconsistent with the video content, such as “Monkeys are dragging an animal off the road.”. Therefore, based on the results in Fig. 7, the number of topic words is set to 4 for each video.
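How topic words are extracted from the visual labels of retrieved similar videos is described elsewhere in the paper. The sketch below only illustrates fixing the number of topic words at T = 4, under the hypothetical assumption that labels are ranked by how many retrieved neighbor videos carry them. The function name and label lists are invented for illustration.

```python
from collections import Counter


def select_topic_words(neighbor_labels, num_topics=4):
    """HYPOTHETICAL: rank labels by the number of retrieved videos carrying them; keep the top T."""
    counts = Counter()
    for labels in neighbor_labels:
        # Each label is counted at most once per neighbor video.
        counts.update(dict.fromkeys(labels, 1))
    # most_common breaks ties by first appearance, so the result is deterministic.
    return [label for label, _ in counts.most_common(num_topics)]


neighbors = [
    ["monkey", "dog", "grab", "tail"],
    ["monkey", "attack", "dog"],
    ["monkey", "grab", "roadside", "animals"],
]
print(select_topic_words(neighbors, num_topics=4))  # ['monkey', 'dog', 'grab', 'tail']
```

Raising `num_topics` to 6 in this toy example would pull in the single-vote labels “attack” and “roadside”, mirroring how extra topic words can add noise.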

4) Comparative analysis with different video captioning methods

To evaluate the performance of the proposed method, it is compared with existing video captioning methods. Tables 7 and 8 show the comparison results on the MSVD and MSR-VTT datasets.

Tables 7 and 8 show that the proposed method performs better on both datasets than S2VT [20], SAAT [23], PI-LSTM [24], TTA [28], CSA-SR [46], GRU-EVE [47], ADL [48], RecNet [49], STM [50], TDDF [51], PickNet [52], and CoSB [53], with an especially clear advantage on CIDEr. A likely reason is that CIDEr measures how much video-related information the generated captions contain, and the proposed method uses the EGPT-2 decoder to jointly decode the semantic topics and the baseline of the video caption. This alignment of predicted words with the video content enhances semantic consistency, so the generated captions contain more semantic information about the video content. In addition, the Enhance-TopK sampling algorithm improves the accuracy of word prediction in the vocabulary prediction stage, making the generated captions fluent and closer to the semantics of the video content.

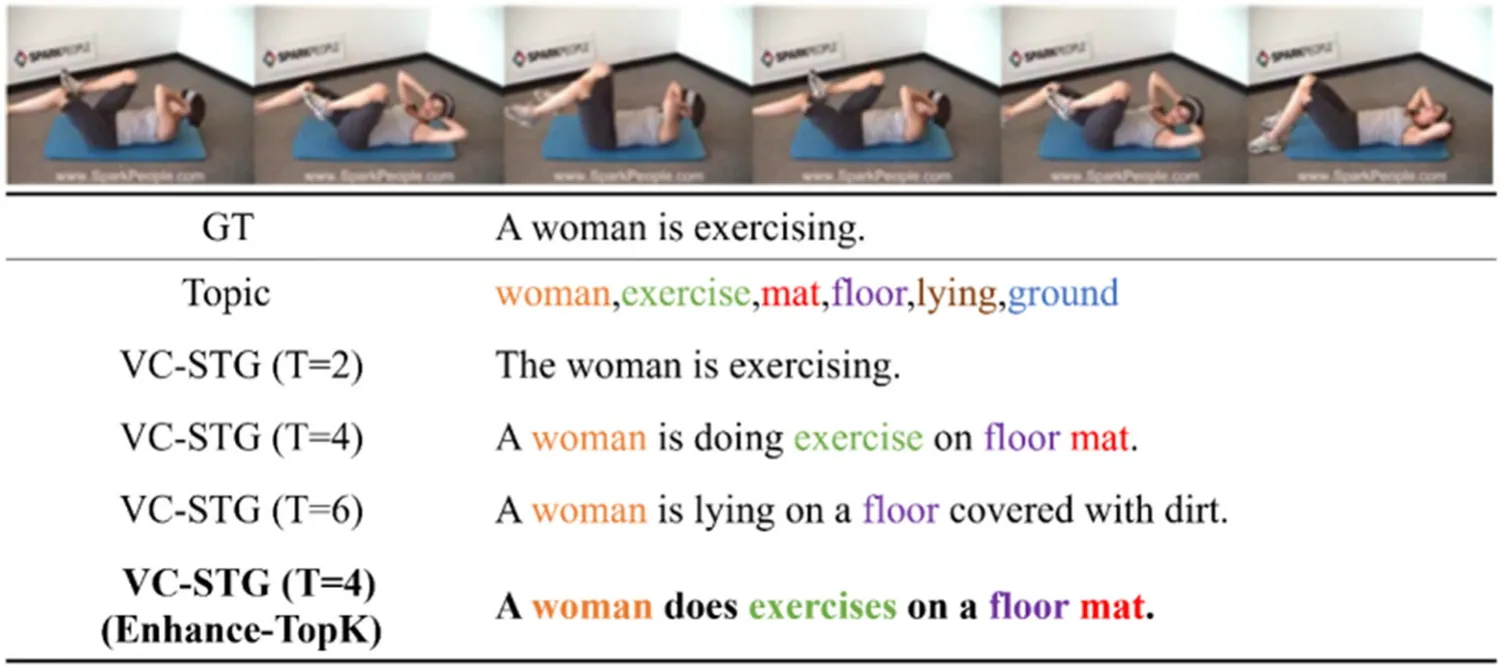

Finally, we use the proposed method (denoted as VC-STG) to generate video captions on the MSVD and MSR-VTT datasets, as shown in Figs. 8 and 9. In Fig. 8, “GT” denotes a reference sentence of a video, and “Topic” denotes the topic words of a video. To show the impact of semantic topics on the performance of the proposed method, captions generated with 2, 4, and 6 topic words are compared. Fig. 8 shows that when the input includes both the baseline and the topics of the video content, the method outputs a relatively complete caption. However, when the number of topic words is large (T = 6), semantic noise may be introduced into the EGPT-2 decoder, causing the generated caption to deviate, as in “through the streets.”. With the optimal number of topic words (T = 4), the Enhance-TopK sampling algorithm helps generate more accurate captions, such as the generated word “quickly” and the phrase “through the forest”.

Figure 8: Example of video captioning on the MSVD test set

Figure 9: Example of video captioning on the MSR-VTT test set

The impact of semantic topics on video captioning is also verified. When the number of topic words is 4 (T = 4), the accuracy of the predicted words improves noticeably, whereas too few or too many topic words do not improve the generated captions. For example, the erroneous phrase “lying on a floor covered with dirt.” may be predicted from the words “lying”, “floor”, and “ground”.

Figs. 8 and 9 show that with 4 topic words, the proposed method generates video captions that conform to the video content. On this basis, using the Enhance-TopK sampling algorithm in the decoding stage makes the generated captions more accurate.

5 Conclusion

To address the issue that existing encoder-decoder video captioning methods rely on a single video input source, this paper proposes a video captioning method based on semantic topic-guided generation to improve the accuracy of video captioning. By introducing the semantic topics of video data, the method enhances the alignment between visual information and natural language and guides the generation of video captions. The proposed method is verified on two public datasets, MSVD and MSR-VTT. The experimental results demonstrate that the proposed method outperforms several state-of-the-art approaches. Specifically, compared with existing video captioning methods, the performance indicators BLEU, METEOR, ROUGE-L, and CIDEr of the proposed method are improved by 1.2%, 0.1%, 0.3%, and 2.4% on the MSVD dataset, and by 0.1%, 1.0%, 0.1%, and 2.8% on the MSR-VTT dataset, respectively. Introducing semantic topics is effective for generating topic-related video captions and improves how well the decoder describes the video content. However, because the baselines of video data in public video datasets are limited, the extraction of video semantic topics is restricted. Future studies will introduce object detection algorithms to capture fine-grained semantic information and combine them with the attention mechanism to eliminate irrelevant and interfering semantic information.

Acknowledgement: The authors would like to express their gratitude to the College of Computer Science and Technology, Xi’an University of Science and Technology (XUST), Xi’an, China, and China Postdoctoral Science Foundation (CPSF) for providing valuable support to carry out this work at XUST.

Funding Statement: This work was supported in part by the National Natural Science Foundation of China under Grant 61873277, in part by the Natural Science Basic Research Plan in Shaanxi Province of China under Grant 2020JQ-758, and in part by the Chinese Postdoctoral Science Foundation under Grant 2020M673446.

Author Contributions: Study conception and design: Ou Ye; data collection: Ou Ye, Xinli Wei; analysis and interpretation of results: Zhenhua Yu, Xinli Wei; draft manuscript preparation: Yan Fu, Ying Yang. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: Datasets used for generating the results reported in Sections 3 and 4 are available at https://opendatalab.com/OpenDataLab/MSVD and https://www.kaggle.com/datasets/vishnutheepb/msrvtt?resource=download.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

Published in Computers Materials & Continua.