Weighted-adaptive Inertia Strategy for Multi-objective Scheduling in Multi-clouds

2022-08-24MazenFaridRohayaLatipMasnidaHussinandNorAsilahWatiAbdulHamid

Mazen Farid, Rohaya Latip, Masnida Hussinand Nor Asilah Wati Abdul Hamid

1Department of Communication Technology and Networks, Universiti Putra Malaysia (UPM), Serdang, 43400, Malaysia

2Institute for Mathematical Research (INSPEM), Universiti Putra Malaysia (UPM), Serdang, 43400, Malaysia

3Faculty of Education-Saber, University of Aden, Aden, 2408, Yemen

Abstract: One of the fundamental problems associated with scheduling workflows on virtual machines in a multi-cloud environment is how to find a near-optimum permutation.The workflow scheduling involves assigning independent computational jobs with conflicting objectives to a set of virtual machines.Most optimization methods for solving non-deterministic polynomial-time hardness (NP-hard) problems deploy multi-objective algorithms.As such, Pareto dominance is one of the most efficient criteria for determining the best solutions within the Pareto front.However, the main drawback of this method is that it requires a reasonably long time to provide an optimum solution.In this paper, a new multi-objective minimum weight algorithm is used to derive the Pareto front.The conflicting objectives considered are reliability, cost, resource utilization, risk probability and makespan.Because multi-objective algorithms select a number of permutations with an optimal trade-off between conflicting objectives, we propose a new decisionmaking approach named the minimum weight optimization (MWO).MWO produces alternative weight to determine the inertia weight by using an adaptive strategy to provide an appropriate alternative for all optimal solutions.This way, consumers’needs and service providers’interests are taken into account.Using standard scientific workflows with conflicting objectives, we compare our proposed multi-objective scheduling algorithm using minimum weigh optimization (MOS-MWO) with multi-objective scheduling algorithm(MOS).Results show that MOS-MWO outperforms MOS in term of QoS satisfaction rate.

Keywords: Multi-cloud environment; multi-objective optimization; Pareto optimization; workflow scheduling

1 Introduction

The cloud environment provides a platformwhere servers in a data center can be accessed in shared mode when users request services [1].Cloud computing architecture is composed of three distinct layers,namely Infrastructureasa Service(IaaS),Softwareasa Service(SaaS)and Platformasa Service(PaaS).SaaS integrates three different organizations: cloud providers, software providers and users.A multi-cloud system involves the collaboration of multiple cloud infrastructure providers (such as Microsoft Azure, 2018; Amazon EC2, 2018 and Google Compute Engine, 2018) that configure their computing needs using a wide range of cloud-based IaaS.They provide their virtual machines at a price using“pay as you go”models which is one of the most popular ways to share resources between cloud providers.[2,3].

The workflow is a common method for modeling most distributed systems used in scientific applications.It is typically represented as an acyclic graph where the relation between tasks is shown on the edges of each node.Usually, the number of tasks is greater than the number of virtual machines(VMs) available.This necessitates the use of an appropriate scheduling policy for task assignments.Recently, workflow scheduling in the cloud has been a subject of focus due to the importance of workflow applications.Therefore, selecting the near-optimal schedules in such scenarios is generally NP-hard.

Most of the recently proposed solutions have only taken one of the qualities of service (QoS)requirements into account.For example, the workflow completion time is considered when investigating makespan.Other important factors related to QoS include cost, cloud security, performance and reliability.Hence, an efficient task scheduling algorithm must strike a balance between several QoS objectives.This is known as multi-objective task scheduling.One way of striking such a balance is the use of Pareto optimal algorithms that allow users to select the best result within an acceptable set of solutions.

A Pareto optimal solution simultaneously optimizes conflicting objectives.If the optimal solutionhas a number of candidates, the set is known as the Pareto front.No solution is considered dominantn has a number of candidates, the set is known as the Pareto front.No solution is considered dominant within the Pareto front.Since heuristic algorithms are generated, it becomes difficult to choose the appropriate permutation for service providers [4].The main drawback of the Pareto optimization method is that it needs a longer time to make a better final decision [5].The authors of [6] used a well-known decision-making process called weighted aggregated sum product assessment (WASPAS).This method enables service providers to communicate their needs and give specificweight to objectives based on consumers’preferences.It selects the best solution from the optimal Pareto set with respect to a given priority.

In this article, we propose a multi-objective workflow scheduling algorithm with minimum weight optimization (MWO) method.A Pareto front is used to reveal all possible solutions and determine an optimal solution.This way, both the service provider and customer needs can be catered for simultaneously.The introduced algorithm utilizes the MWO method to meet the following five requirements.

1.Minimum workflow time (makespan).

2.Minimum cost.

3.Maximum resources utilization.

4.Maximum workflow reliability.

5.Minimum risk probability of workflow.

In real-time applications, some users may be concerned with makespan reduction, some other users may care about the cost of paying VMs while others focus on high workflow reliability.In contrasttotheseusers’concerns,VMproviders aim atmaximizing the utilization of their resources and minimizing risk probability.Therefore, a multi-objective method of optimization is needed to achieve an almost optimum trade-off between these conflicting concerns and satisfy them simultaneously.

In this article, the main contributions are:

1.Presenting a multi-objective method for scheduling workflow in a cloud environment based on the particle swarm optimization (PSO) algorithm.

2.Defining a fitness function that simultaneously considers the interests of users and service providers (by decreasing makespan, cost and risk probability and increasing resource utilization and reliability).

3.Deploying a newly proposed decision-making method called minimum weight optimization to choose a feasible solution and illustrating the solution using the Pareto front based on users’preferences.

4.Using alternative weight values to determine the inertia weight by deploying an adaptive strategy to increase the convergence of the PSO in the first few iterations of the algorithm.

The rest of this paper is organized thus: Section 2 presents an overviewof the relatedworks.Section 3 defines the scheduling model and explains the problem and network model formulation.Section 4 discusses the multi-objective optimization methods.Section 5 presents the proposed algorithm and details its implementation while Section 6 elaborates the experimental setup and simulation results.Section 7 discusses the performance measurement and Section 8 concludes this article.

2 Related Work

Related WorkFinding the most suitable workflow scheduling solution is an NP-hard problem.A workflow scheduling method is generally aimed at achieving one specific or multiple objectives.It balances the trade-off between conflicting objectives.One of such multi-objective scheduling methods is the aggregation method.This strategy tackles a multi-objective scheduling problem by converting it to a single objective.The weight is taken into account for each objective and the number of weighted objectives is optimized.In [7], a multi-objective problem was transformed into a single objective problem using cost-conscious scheduling.The system was designed to maximize makespan and minimize cost.Jeannot et al.[8] proposed a scheduling strategy that increases performance and reliability using the aggregationmethod.During runtime, the dynamic level strategy (DLS) introduced in [9], can changes the task priority.Doan et al.[10] enhanced the DLS method with a genetic algorithm and proposed Bi-objective Dynamic Level Scheduling (BDLS).

The Pareto approach differs from the aggregation approach as it leads to a trade-off between unde-sired objectives that should be reduced (e.g., cost) and desired objectives that should be maximized.Such algorithms are assisted by solutions that are non-dominant in the Pareto front.Adopting this approach, Sagnika et al.[11] introduced a Cat Swarm Improvement algorithm to schedule workflows in the cloud.The algorithm reduces the time consumption, cost and processor’s idle time.The authors compared the proposed method with multi-objective particle swarm optimization (MOPSO)algorithm and the results revealed that the proposed algorithm performs better than MOPSO.

A multi-objective scheduling method was proposed by Udomkasemsub et al.[12] using the Pareto optimizer algorithm with the Artificial Bee Colony (ABC) algorithm to minimize cost and makespan.Similarly, Wu et al.[13] proposed an RDPSO algorithm for managing workflows in the cloud to reduce cost or makespan.In [14], a new multi-objective scheduling approach that uses the Gray Wolf algorithm was introduced with a focus on cost, makespan and resource efficiency.Another multi-objective approach using the MODPSO algorithm was proposed by Yassa et al.[15] to reduce cost, energy and makespan.Dynamic voltage and frequency scaling was used to minimize cost.The proposed scheduling algorithm was compared with the Heterogeneous Earliest Finish Time (HEFT)algorithm.

Considering more than two significant scheduling factors, [16] deployed a heuristic black hole multi-objective Pareto-based algorithm.They proposed a suitable approach for analyzing the problem of cloud scheduling to reduce cost and makespan and increase resource efficiency.Kaur et al.[17] proposed an incremented frog slipping algorithm for scheduling workflows to reduce the execution cost while meeting the task deadline.Simulation, using WorkflowSim, reveals that the proposed scheme outperforms the PSO-based approach with respect to minimizing the overall workflow execution cost.Khalili et al.[14] focused on preserving the QoS requirement for service providers by developing a multi-objective scheduling algorithm using the Grey Wolf and Pareto optimizers to decrease makespan, time and cost.The algorithm increases throughput,which is a fundamental service requirement, compared to the Strength Pareto Evolutionary Algorithm 2 (SPEA2).

An adaptive multi-objective scheduling method for mapping workflow at the IaaS level was proposed by Zhang et al.[18].Their goals are to reduce user resource costs and balance loads on VMs without violating the deadline constraint of the workflow.The Non-dominated Sorting Genetic Algorithm-2 (NSGA2) extension, which uses mutation and crossover operators, was used to achieve Pareto-front and search diversity.The authors deployed Inverted Generational Distance (IGD) which measures theminimum Euclidean distance and calculated the diversity and convergence of a collection of Pareto solutions.In an attempt to reduce costs, makespan time and the use of cloud energy related to the workload deadline, a new approach to workflow scheduling was suggested by Singh et al.[19].The authors grouped users using machine learning technology.Thereafter, time, cost and negotiationbasedpolicies were considered.Simulation results from CloudSim indicated that the proposed strategyis consistent with other methods in relation to the three objectives studied.

Verma et al.[20] proposed Hybrid PSO (HPSO) by incorporating budget and deadline constrained heterogeneous earliest finish time algorithm (BDHEFT) to schedule multi-objective workflows with budget and deadline constraints.MOPSO is used to reduce resources cost and workflow makespan during the scheduling process.The original solution can be generated through BDHEFT and other randomly selected initial solutions.During the MOPSO implementation cycle, optimal (nondominated) solutions are maintained as the Pareto front.The final solution is the ultimate Pareto set.Dharwadkar et al.[21] introduced a new programming method, called Horizontal Reduction (HR), to minimize failure, execution costs, scheduling overhead and makespan by integrating control point, replication and PTE algorithms.The findings revealed that the suggested approach outperforms other methods in terms of the three objectives studied.

In [16], a new multi-objective hyper-volume algorithm that expands the black hole detection algorithm was proposed.The algorithm uses a dominant strategy that increases its flexibility and convergence towards the Pareto optimum.The conflicting objectives optimized are resource utilization, resource cost and makespan (completion time).Durillo et al.[22] presented a list-based multiobjective workflow scheduling algorithm.A single-objective Pareto optimizer was used to minimize the resource utilization cost and makespan.Another new multi-objective scheduling approach called Multi-objective HEFT (MOHEFT) [22] was also presented.[23] further proposed an improvement of[24], called the Pareto Multi-objective List Scheduling Heuristic (PMLSH), for estimating solutions when ranking in HEFT.A consistency metric, Crowding Distance (CD), was deployed to maximize various objectives.CD is a test to assess the solutions around a particular population of solutions.In each iteration of the algorithm, one can choose nearer optimal solutions.Hypervolume was used as a benchmark to test the proposed algorithm.

Yu et al.[25] used a multi-objective evolutionary algorithm (MOEA) to solve issues regarding workflow planning.The strategy was applied to reduce two conflicting issues which are the costs of utilizing services and the time of implementation.In addition to that, they established objective functions that are compatible with the constraints.Similarly, Kalra et al.[26] put forth a workflow scheduling method by integrating Intelligent Water Drop and Genetic Algorithms (IWD-GA).The method reduces makespan and execution cost and offered a variety of solutions with respect to reliability and deadline constraints.IWD-GA helped consumers to flexibly select solutions based on their requirements.

From the above, it is clear that multi-objective workflow scheduling algorithms take many objectives into consideration to provide effective scheduling.In this regard, an accurate selection (of objectives) is required to effectively allocate tasks to the virtual machines.Most of the previous studies in related literature used Pareto optimality approach [14,26].However, increasing the number of conflicting objectives increases the Pareto computation cost and completion time.Other methods like aggregation and hypervolume are not scalable when the number of objectives are increased [8,16].In order to address these limitations, we develop the minimum weight approach that reduces the cost and time of the scheduling process and improves the scalability evenwith a higher number of objectives.Thus, this paper introduces a new multi-objective scheduling minimum weight optimization (MOSMWO) algorithm for scheduling scientific workflow in a multi-cloud environment using the MWO process.This is targeted at reducing workflow costs, risk probability and makespan and increasing reliability and resource utilization within the set reliability constraints.

3 Scheduling Model

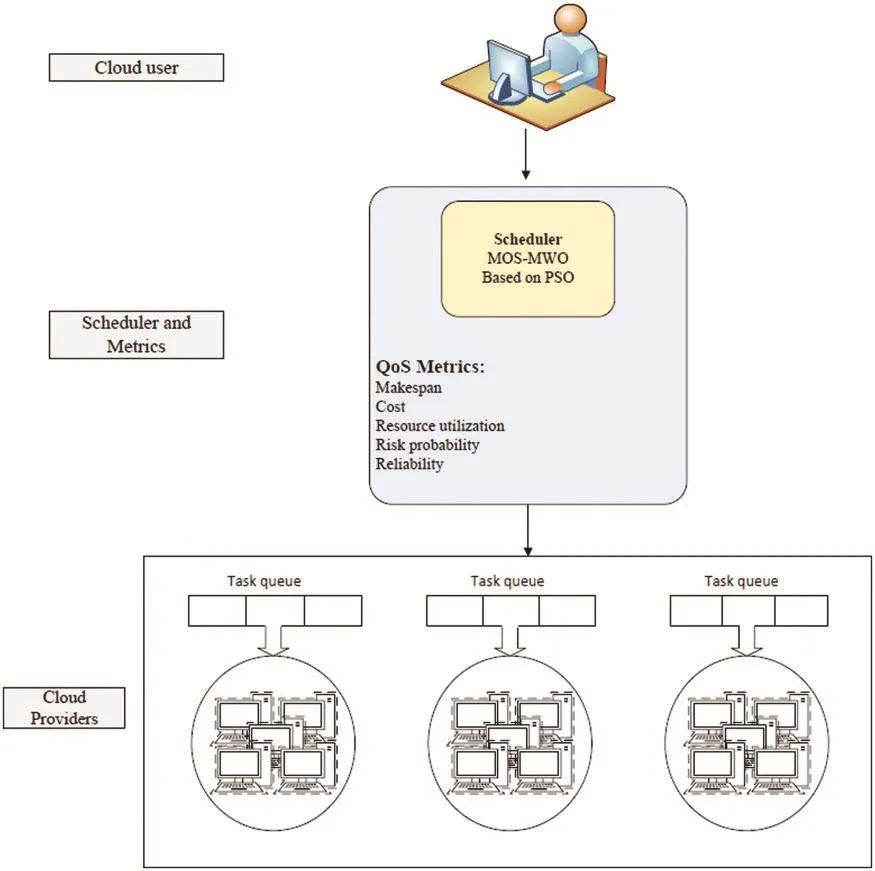

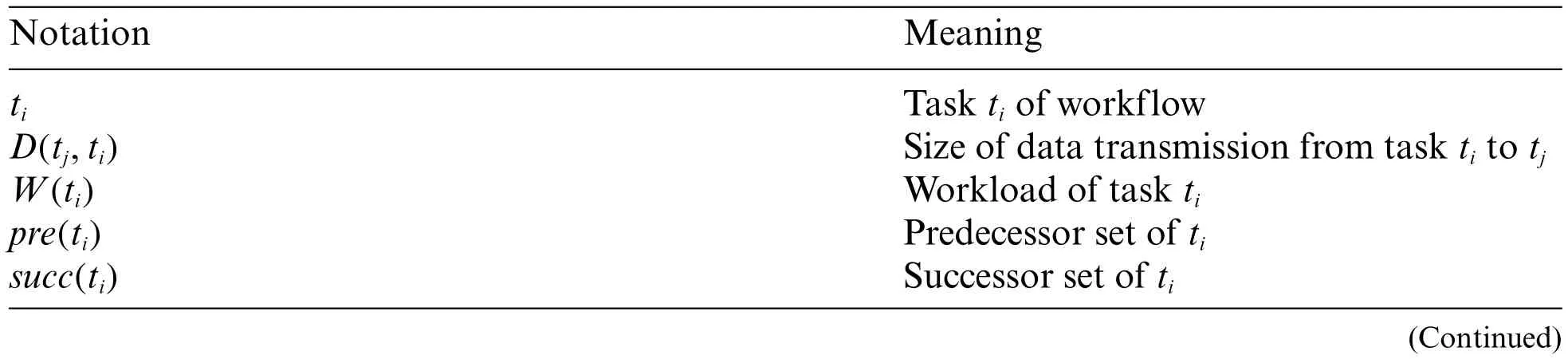

This section discusses the workflow scheduling model and metrics with special attention to reliability, makespan, cost, workflow model, multi-cloud model, resource utilization, risk probability and problem formulation.The proposed MOS-MWO algorithm considers five QoS requirements:cost, risk probability, makespan, resource utilization and reliability.The schedule model is provided in Fig.1 while the notations used in this study are defined in Tab.1.

v

3.1 Workflow Model

A sequence of scheduled activities is necessary to achieve a defined objective.These activities are known as workflows.Workflows provide sets of basic processes designed to address a more complicated problem [27].These processes must follow a standard pattern to ensure coherence and to improve the efficiency of executing the desired tasks.A workflow aims to decide how various tasks can be configured, performed and monitored.

Figure 1: Scheduling model

Table 1: Notations and their meanings

Table 1: Continued

Workflow can be modeled with nodes and edges as a direct acyclic graph (DAG).It can be expressed asW=(T, E), where the task set consists ofT= {t0, t1,..., ti,..., tn-1}.A number of arcsE= {(ti, tj)|ti, tjϵT} represents the task dependencies.Each workflow has the entry taskTentryand exittaskTexit.In addition, each task has a predecessor settpre(ti)and a successor setsucc(ti).Each task is executed after its predecessor has been executed.Tasktihas an assigned weightW(ti)which denotes its workload and is quantified in terms of compute unit(CU).D(ti,tj)denotes the output data size of taskstithat must be transferred to the tasktj.

3.2 Multi-cloud Architecture

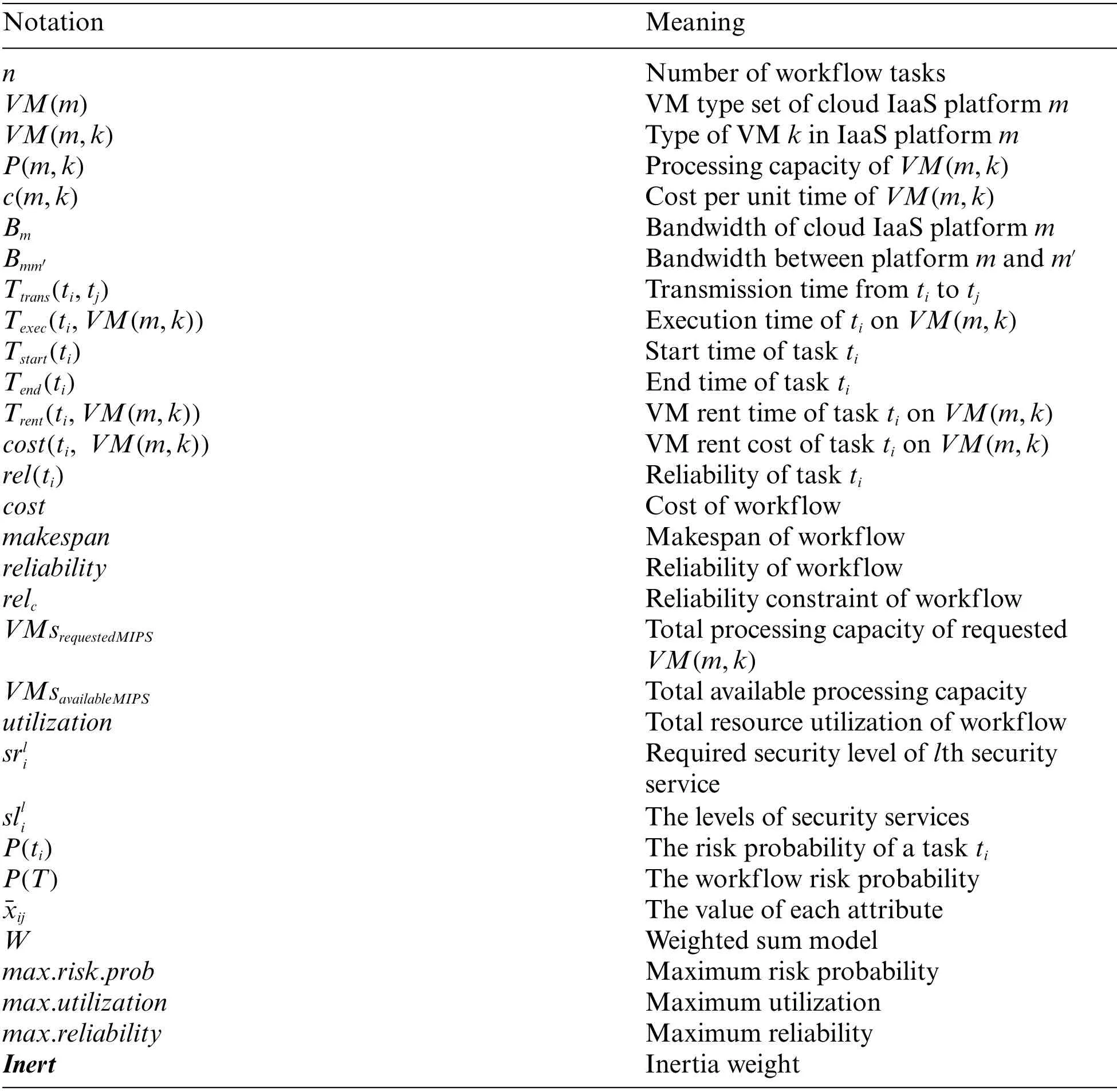

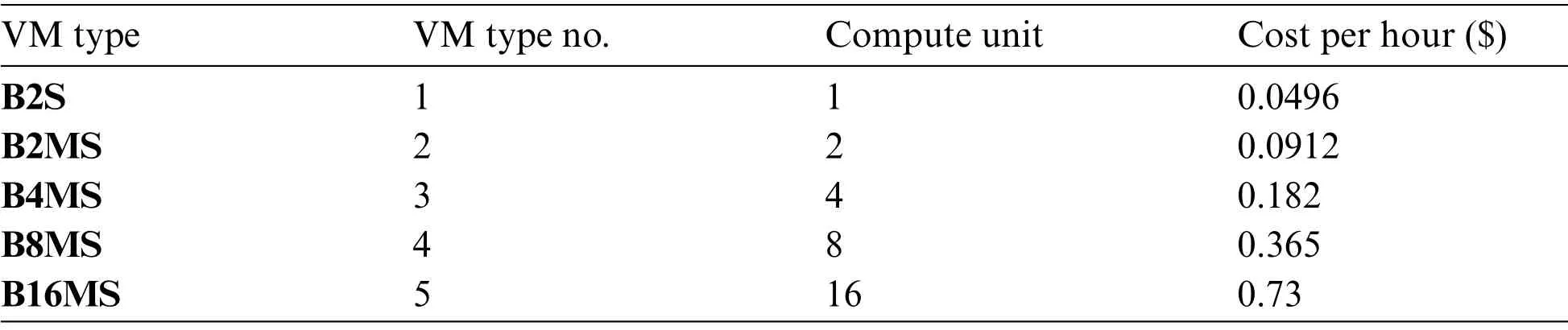

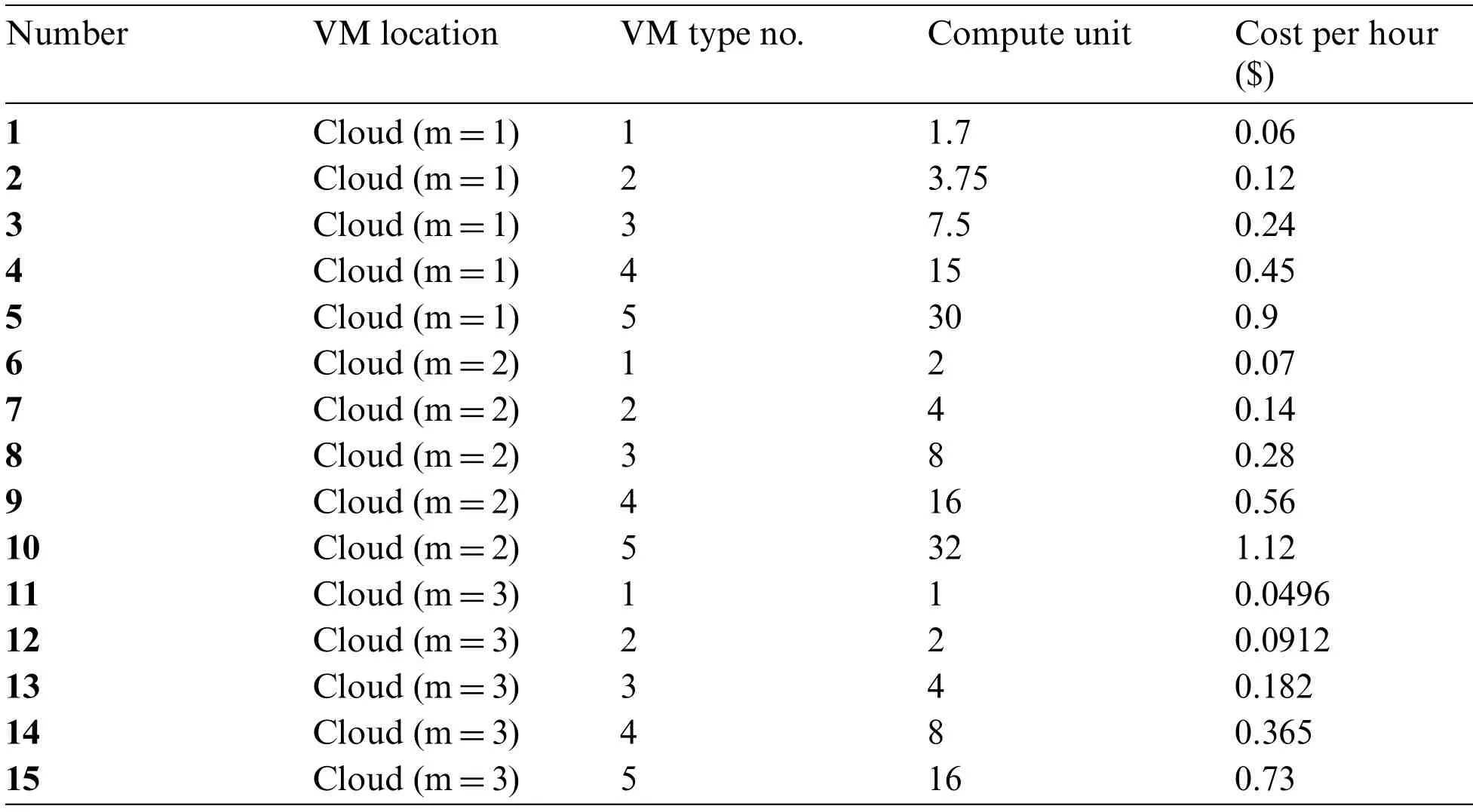

This paper considers three providers of cloud services:Google Compute Engine, Amazon EC2 and Microsoft Azure.Here, we discuss the multi-cloud architecture that gives users access to VMs from multiple cloud providers with different pricingmodels.For example, (Amazon EC2, 2018) rate is based on operating hours.Every partial clock gradually hits full hours.Likewise, (Azure, 2018) consumers pay per minute.(Google Compute Engine, 2018) charges per minute after the first ten minutes the VM instances are launched.The various types of VMs are shown in Tabs.2-4.

Table 3: Different types of VMs in google compute engine

Table 4: Different types of VMs in microsoft azure

Different IaaS platforms can be provided in a multi-cloud environment for a collection of VMs whereVM(m)={VM(m,1),...,VM(m,k),...,VM(m,ks)},m=1,2,...,M.Let(m|m=1,2and3),wheremrepresents various IaaS cloud providers (i.e., Amazon, Microsoft and Google).TheVM(m,k)is the instance VM specified by the IaaS cloud providerm.P(m, k)is its processing power, hourly costs are denoted byC(m, k)and CU refers to the VM CPU computing capacity [28-30].We assume the various cloud providers can supply end users with an unlimited number of VMs.Bmindicates the bandwidth of cloud platformmandBmm’is a bandwidth between two cloud platformsmandm’.

3.3 Makespan Computation

In a multi-cloud environment, workflow/tasks on various IaaS platforms can be delegated and executed.Other VMs need to wait before tasks are received.This is because VMs transfer many copies of their output to other VMs.The nature of the receiver’s performance depends on the task order.Eq.(1) defines the group sorting process of workflow/tasks in set A.

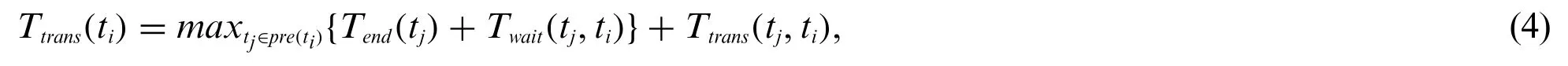

The sequence of the tasks in partial setBis the same as the previous taskstiinA.IfA= {t1, t3, t4,t2}, thenB= {t1, t3} ifti=t4.The start time of the tasktiis represented asTstart(ti)in Eq.(2) and the end time isTend(ti).

whereTwait(tj,ti)iis the waiting time fortitto receive input data from tasktj.This is presented as follows:

Note that ifti=tentry, thenTstart(ti)= 0.

In order to calculate the transmission time, we have

where the transmission time betweentiandtjisTtrans(tj, ti).

As a result, tasktihas a receiving time given by

The time of execution of each tasktidepends on the output data size for each task [31,28].The execution time to perform different tasks on a differentVM(m, k)can be determined using the following equation.

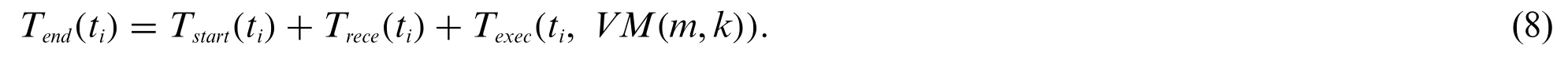

The processing capacity ofVM(m,k)in CU can be taken for the end time of each task.Therefore,

Then,

Makespan is the last task’s end time (texit) and deadline is the time required to finish the scheduling using VMs with minimum processing capacity.

3.4 Cost Computation

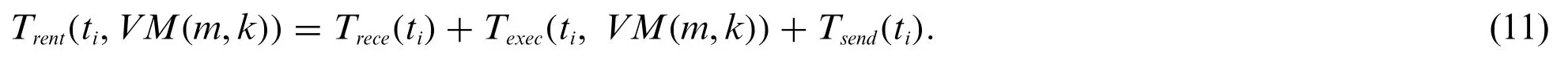

IaaS platforms have unique pricing methods that follow the multi-cloud model.The existing workflow algorithms compute the rental time of the VM by taking the interval from start time to finish time [28,30-32].When the task is done, the VM stops and the task output is transferred to its successor.Data transfer priority depends on the task order.The time of sending tasktican bespecified as:

The rental time of the tasktifor the VM running on VM(m, k) is specified in Eq.(11).

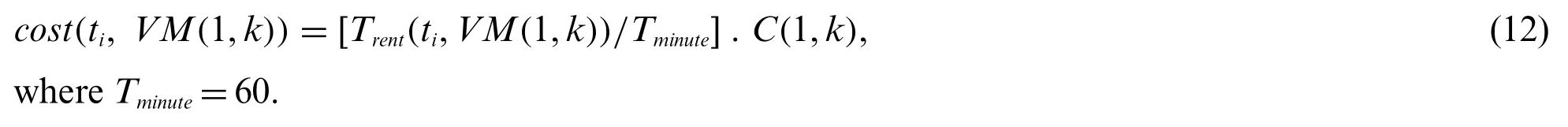

The VM rental cost for tasktion each IaaS platform is calculated below.

For Amazon EC2 which charges per hour, taskticost onVM(1, k)is expressed in Eq.(12).

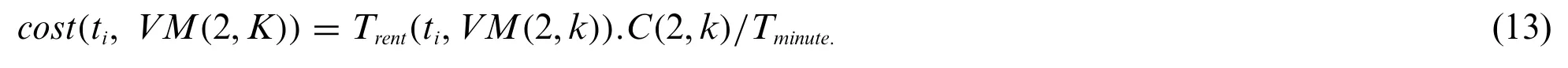

Microsoft Azure charges per minute, tasktiexecution cost onVM(2, k)is stated in Eq.(13).

Google charges the instance per minute after the first ten minutes.This is indicated as the task cost onVM(3, k)in Eq.(14), whereTten=10.

The cost of the workflow can be calculated using Eq.(15).

The budget constraint can be calculated during workflow scheduling using VMs with the maximum price in a critical path.

3.5 Resource Utilization Computation

Scheduling plays a crucial role in efficiently allocating resources for cloud operation.Many scheduling processes are also conducted by assigning tasks to strike a balance between costeffectiveness, resource utilization and makespan [33].It takes more time for cheaper resources to complete task execution than expensive ones.This means that a VM’s CPU achieves better resource efficiency at the expense of cost.The total capacity of a requested VM is calculated in Eq.(16).

The workflow utilization percentage for each workflow can be determined using Eq.(17).

Since users always assume that they areworking with a service provider that provides high resource utilization, the value ofmax.utilizationis set to 100 in Eq.(32).

3.6 Reliability Computation

Cloud computing failures are unavoidable.Failures may result from within (for example, software faults, hardware failures, power defects, etc.) [34,35] or outside (for example, harmful web attacks)[36,37].Short-termfailures also occur thereby causing failure during task workflow.The case of failure can be based on the Poisson distribution [8,38,39].It is determined by computing the exponential of the reliability that tasktiis performed correctly inVM(m, k)as follows:

where the cloud service provider failure coefficient λm>0 (m=1, 2, 3).

Every IaaS platform also has a specificmulti-cloud failure coefficient.If there are problems during the rental period, the mission may fail.So long as the failures are independent, the reliability of the workflow is determined by Eq.(19).

Suppose λmax=max{λm|m=1, 2, 3} indicates the maximum failure coefficient and λmin=min{λm|m=1, 2, 3} refers to the minimum failure coefficient, the resulting scheduling workflow for the reliability can be Maximum (relmax) or Minimum (relmin).In addition, different workflow results are generated in different clouds according to the task scheduling process.Consequently, cloud users must set appropriate reliability constraintrelcfor the scientific workflow application, i.e.,relc∈[relmin,relmax].

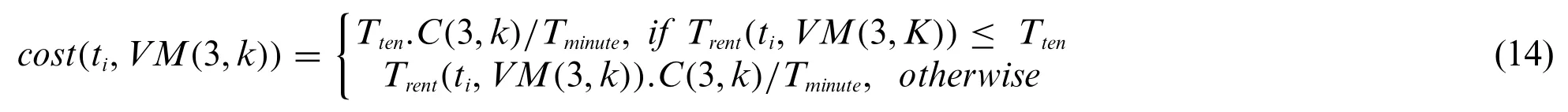

3.7 Workflow Risk Probability

Workflow applications are run in a non-risk-free cloud computing environment and thus, security awareness is critical for quantitative assessment of services.The risk analysis model determines the risk rate throughout the workflow [36].The model assumes that the probability of risks is determined by the level of security and that the distribution of the risk for any fixed interval is based on the Poisson probability distribution.The risk probability of the security service lth for the task can therefore be defined by an exponential distribution as follows [40,41]:

a, g and c represent the services of authentication, integrity and confidentiality, respectively.

Three major cloud threats are snooping, altering and spoofing, and the three security services used to secure scientific workflow applications are authentication service, integrity service and confidentiality service [42].Users flexibly merge these security services to provide a robust defense against different risks and attacks.The designed model assumes that a typical task will involve three types of security services with different user-defined security rates.For example,sriis the set of security requirements of tasktithat can be specified as a q-tuplesli=whererepresents the required security level oflth security service and q=3.

The risk coefficient λldiffers for various cloud environments.For example, 3 snooping attacks,2.5 alterations and 1.8 spoofing attacks can be carried out in the data center within a time interval.The negative exponent shows that a probability of failure increases with the difference between.The risk could be caused by serious network attacks or by security barricade inaccessibility.Therefore,by considering all security services, the risk probability of a tasktimay be obtained below.

The workflow risk probabilityP(T)with task setTcan be calculated according to Eq.(22).

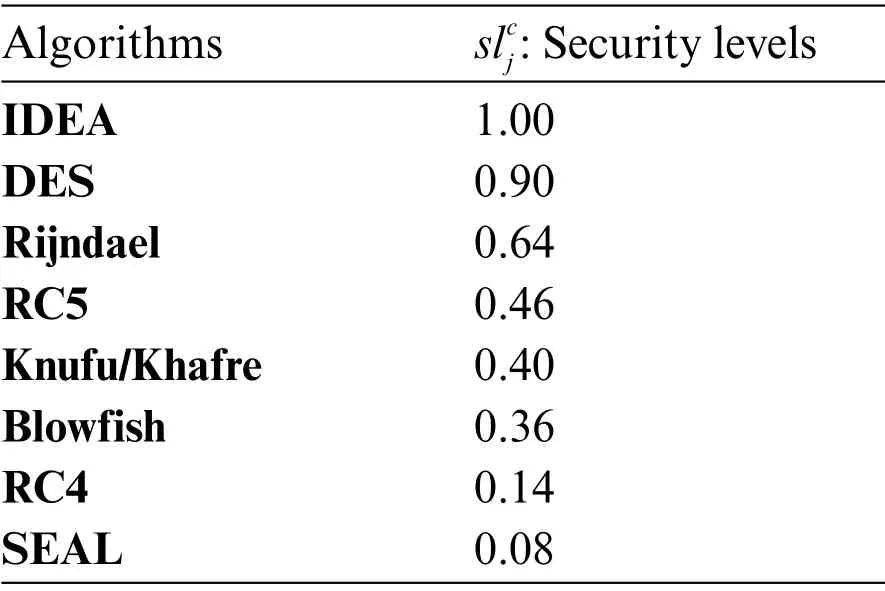

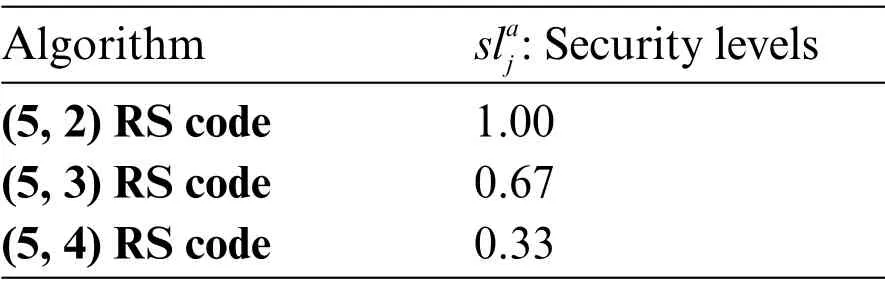

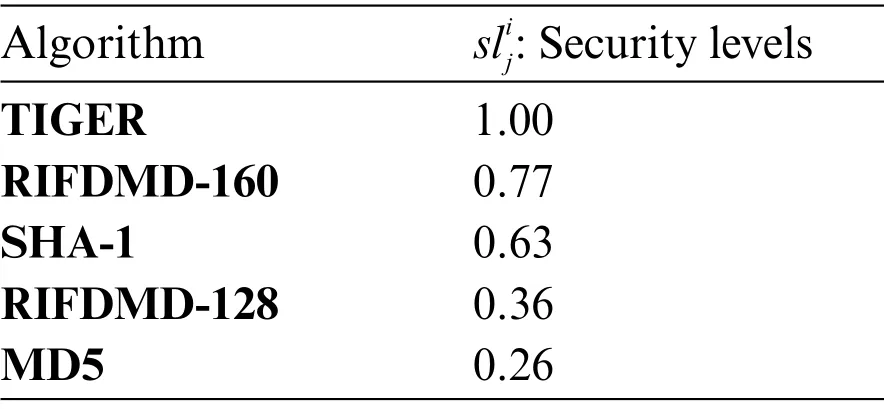

This workflow risk probability will be used as a QoS constraint for problem formulation in the next section.The confidentiality, availability and integrity algorithms are shown in Tabs.5-7[36,42,43].Based on the cryptographic algorithm efficiency, a security level of 0.08 to 1 is assigned to each algorithm.Let the setreflect security services levels for taskti,whereindicates the level of thelth security service that tasktihas received.

Table 5: Cryptographic algorithms for confidentiality [36]

Table 6: Cryptographic algorithms for availability [43]

3.8 Deadline

For each objective, there is a corresponding user-defined scheduling requirement.The constraint is referred to as a hard requirement.Most of the previous studies [2,44] used the deadline constraint as the user-defined hard requirement.But in our experiments, we determined the deadline as the completion time of the workflow scheduling process using a critical path with the lowest processing capacity of VMs.

Table 7: Cryptographic algorithms for integrity [43]

3.9 Budget

Budget constraint is another user-defined hard requirement.Budget is determined in our experiments as the maximum cost of the critical path for scheduling workflow using VMs with the most expensive price.

3.10 Total Reliability

The probability that tasktiwill be performed correctly on any VM is calculated using the exponential distribution as shown in Eq.(18).According to Eq.(19), the reliability of the workflow is calculated as the product of task reliabilities.Thus, workflow reliability becomes smaller.For this reason, we use the mean reliability of each workflow to get the workflow total reliability as shown below.

Since users always assume that they areworking with a service provider that provides high resource Since users always assume that they areworking with a service provider that provides high resourcemax.utilizationandmax_reliabilityaresetto100in Eq.(32).

3.11 Problem Description

The focus of this paper is to maximize resource utilization and reliability and reduce risk probability, makespan and cost.Thus, the workflow is represented asWF=(T, E).The primary objective is scheduling Γ=(Loc,Ord,R),whereLoc={loc(t0),loc(t1),...,loc(tn-1)}istheworkflow task to be executed,Ord= {ord(t0), ord(t1),..., ord(tn-1)} is the task’s data transfer order used primarily to determine the tasks waiting time (the tasks order must also reflect dependence relations),R= {R0, R1,..., Ri,..., Rn-1} is a set of resources for the whole workflow whereRi=(ti, VM(m; k),Tstart(ti), Tend(ti)).Next, we formally describe the multi-objective optimization problem.

Some previous studies have designed scheduling methods for task execution [28,31,36] but the data transmission order has not been prioritized.Meanwhile, this is particularly important in the design of a scheduling strategy.The scheduling results would be different if different tasks are executed in the same location.We, therefore, take into account the priority of data transmission.

4 Multi-Objective Optimization Methods

In this section, two algorithms (MOS [45] and MOS-MWO) by which the Pareto frontier set can be derived are discussed.Similarly, the effectiveness of these multi-objective optimization methods is compared.According to [46], the Pareto optimal solutions must be preferably accurate and uniformly distributed.Thus, three common effectiveness metrics (distance distribution, coverage ratio and maximum distribution ratio) are used for comparing the collections of archives (Pareto front) in the proposed algorithm.

4.1 Particle Swarm Optimization (PSO)

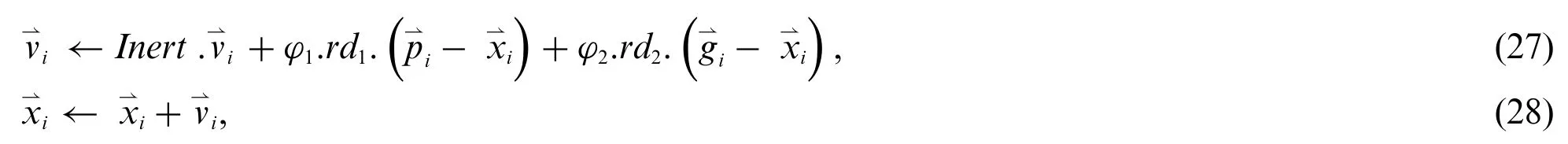

PSO was invented in 1995 by Kennedy and Eberhart.It is a swarm intelligence-based computational method that works by the principle of evolution [47].PSO simulates birds’hunting behaviour.Initially, the PSO algorithm was used to solve single-objective optimization problems.Its great search capacity motivated its exploration for solving multi-objective problems [48-50].PSO’s basic component is the particle moving through the search area.The direction and velocity of the particle are used to determine the movement of the particle.The velocity is generated by integrating the best historical locations with random perturbations.Eqs.(27) and (28) provide the velocity and position update functions, respectively.

whereInertis an inertia weight determined by Eq.(36),φ1and φ2are positive integers andrd1,rd2∈[0,1] are numbers chosen randomly from a uniform distribution [51].Each PSO particle is represented by a three-dimensional vector: the best local position, the current positionand its velocity.Positionspecifies a PSO algorithm-determined filter solution.Whenever the fitness value for the current positionis better than the fitness value of the previous position, the current position is stored in the vector.The best global positionfor all particles is eventually calculated based on how the particles communicate [52].

4.2 Weighted Sum Function

The weighted sum function method is employed to integrate the features of a multi-objective problem into one feature with weighted sum factors.The weighted sum method is especially efficient and easier to apply compared to the Pareto optimality approach.However, a prior understanding of the relationship between the derived objectives is required.Also, it does not include details of the influence of the variable on particular design objectives.

This study deploys the MOS algorithm with a new decision-making method (i.e., MWO).The proposed algorithm can produce solutions showing various cost-makespan trade-offs and reliabilityresource utilization relationships from which cloud users can select.The first step is weight determination of each alternative.We adopt the normalization function for the values of each attribute in accordance with Eq.(29) if xijis considered a beneficial metric and Eq.(30) if it is not.

The weighted sum model (WSM) is calculated using Eq.(31) for all alternatives.

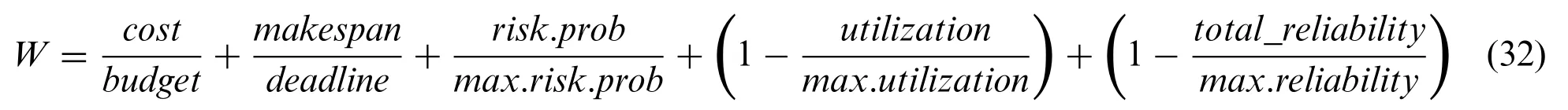

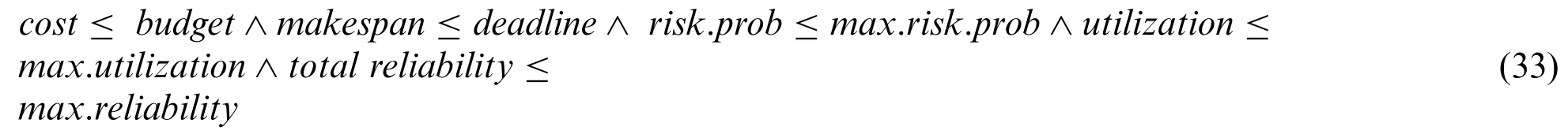

After the candidate alternatives are weighed based on theW-values, the member with the lowestW-value is assigned higher priority among the group members.MWO is advantageous because users or experts do not need to determine the weight for each attribute as required in multi-criteria decision-making(MCDM).Eq.(32)shows how to normalize the makespan, cost, resource utilization,reliability and risk probability.For the case with different constraints, we normalize the execution cost by cost/budget; makespan by makespan/deadline; resource utilization by (1-utilization/maximum utilization); reliability by (1-reliability/maximum reliability) and risk probability by (risk probability/-maximum risk probability).After normalization, all values should not be greater than one if they meet their respective constraints.Then, we can easily determine the alternative particle/schedule with the optimal solution.

Let budget, deadline, max.risk.prob, max.utilization and max.reliability denote budget, deadline,maximum risk probability, maximum utilization and maximum reliability constraints, respectively.These constraints (cost, makespan, risk.prob, utilization, reliability)are defined as the scheduling objectives.The workflow is said to be a feasible schedule if and only if it satisfies Eq.(33):

The MOS-MWO algorithm solves PSO multi-cloud workflow scheduling problems.Therefore,we compare our proposed method with the MOS algorithm and show that the proposed MOS-MWO algorithm outperforms the MOS algorithm.

4.3 QoS Satisfaction Rate (QSR)

The QoS satisfaction rate (QSR) is used to evaluate the performance of multi-objective optimization approaches in a multi-cloud environment.In our study, we calculate the weight of each alternative (workflow) and the algorithm chooses the alternative with the minimum weight which is considered as the best alternative.According to the MWO method, the minimum weight implies a higher performance indicating a higher satisfaction and vice versa.Thus, the key factor that affects satisfaction is the discrepancy between the received and offered performance.Satisfaction is formulated in Eq.(34) based on the Oliver Theory of Expectancy Disconfirmation [53,54].

whereQSRdenotes the QoS satisfaction rate of the alternative for thekthattribute,Rkis the received performance of the alternative forkthattribute andOkis the offered performance forkthattribute.

Similarly, we used the minimum weight to denote high performance.Thus, the above equation is modified to suit the minimum weight.The satisfaction rate is then formulated as Eq.(35).

Example:

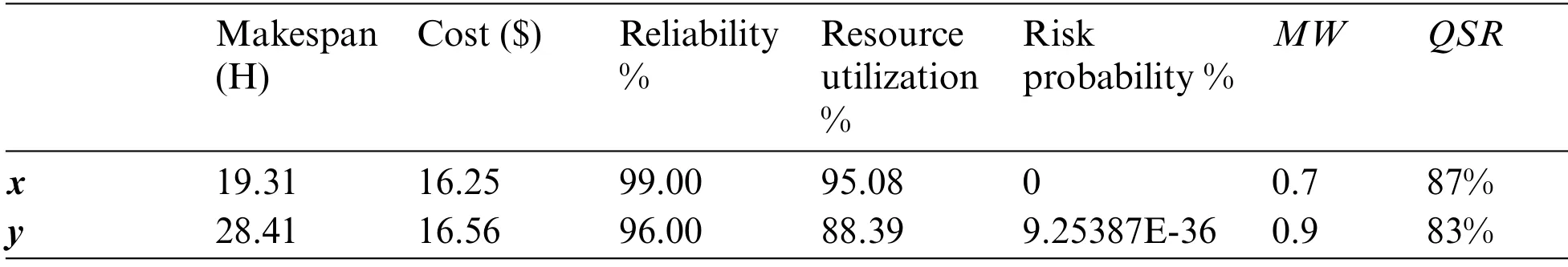

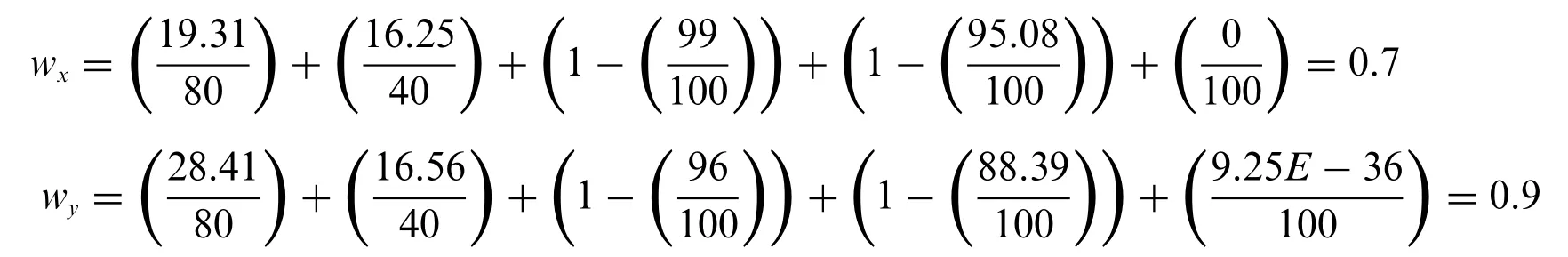

In a five-objective case study, the operation of the proposed algorithm is explained using different values of the attributes of two workflows that are described in Tab.8.The selection of the alternative according to weight and the evaluation of theQSRis shown below.

Table 8: The solution and the evaluation results of the example

Assuming the deadline is 80 and the budget is 40 in the scenario above, the minimum weight valueMWof each workflow is computed using Eq.(32) as shown below.

Workflowxwill be selected because its weightWxis less thanWy.Also, theQSRof the workflow with the minimum weight is high according to Eq.(35).

4.4 Adaptive Inertia Weight Strategy

The inertia weight plays an important role in balancing the exploration and exploitation processes.The contribution rate of a particle’s previous velocity to its velocity at the current time is determined by the inertia weight.There is no inertia weight in the basic PSO presented by Kennedy et al.in 1995[55].Shi et al.[56] later introduced the concept of inertia weight in 1998.They concluded that a large inertia weight makes a global search easier while a small inertia weight makes a local search easier.Other scholars that proposed dynamic inertia weight include Eberhart et al.[57].They suggested a random inertia weight strategy that improves PSO convergence in early iterations of the algorithm.

In our experiment, we used a random inertia weight strategy by considering the weight of the alternative as a random value as shown below.

where

High inertia weight indicates that the solution is not optimal and that more exploration in global search space is required to obtain a good solution.Whereas, low inertia weight indicates that the solution is nearly optimal and that more exploitation in local search space is required to refine the solution and avoid large jumps in the search space.

5 The Proposed Algorithm

In this study, we propose a PSO-based algorithm (MOS-MWO) to optimize the multi-cloudscheduling process while considering different attributes.The algorithm adopts a new decision-making method (MWO) to obtain a better set of solutions in the Pareto front.MOS-MWO involves three main procedures: encoding and initial swarm generation, fitness evaluation and selection and particle updating.In the course of encoding and initial swarm generation procedure, all scheduling solutions are properly represented and the first set of solutions are generated.The fitness evaluation and selection step involves evaluating and selecting best solution fromthe set of solutions generated.Lastly,,the particle updating procedure involves the evolution of the PSO particles.All the procedures are executed using Algorithms 1, 2 and 3 which are integrated to obtain near-optimal multi-objective solutions.

5.1 Coding Strategy

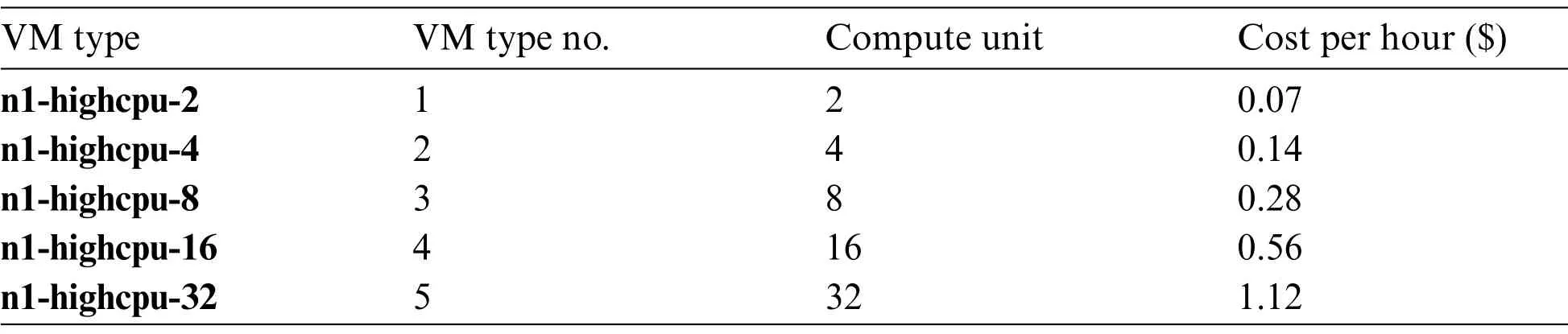

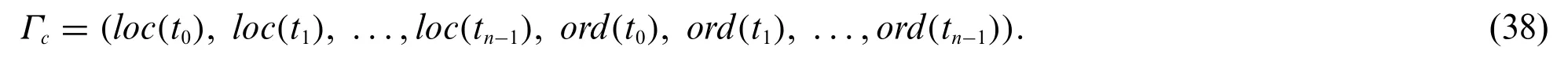

The coding strategy is illustrated in Eq.(38).The order of each task is determined and such a task is assigned to an optimal location for data transmission.This way, the multi-objective scheduling problem can be solved as mentioned earlier.Tab.9 shows the search space for different VM types of the three IaaS platforms considered in this study.

Table 9: Search space for different VM types

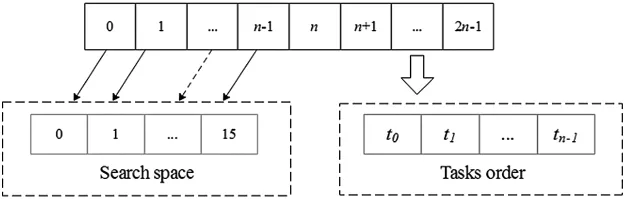

From Eq.(38), the number of parameters in Γcshows the dimension of the particle, i.e.,Ω=2·n.0ton-1positions determine the kinds of VMs that are assigned to the tasks.For every task,loc(ti)takes into account the VM type and the execution location.The order of tasksord(ti)affects the waiting time of the task.Fig.2 shows the workflow encoding plan.

Figure 2: Encoding approach of workflow

5.2 MOS-MWO Algorithm

The proposed MOS-MWO algorithm is described in Algorithm 1.Note that Algorithms 1, 2 and 3 are integrated to get near-optimal multi-objective solutions.

Algorithm 1: MOS-MWO BEGIN 1.Set the number of particles Np;2.Set A =Ø; // initially empty archive, record non dominated solution 3.initializeimages/BZ_1509_729_1441_754_1487.png⇀vi,→xi,→pi,→giimages/BZ_1509_1031_1441_1057_1487.pngN i=1; // random location and velocity 4.initialize {total reliability = makespan = cost = utilization = Random = 0};5.Set {→pi =→xi,→gi = ,→xi}Ni=1;6.calculate {pi, gi}Ni=1;7.While idx<NIT // NITis the number of iteration time 8.for each particle i to NP 9.→vi←inert.→vi+φ1. rd1.(→pi-→xi) +φ2.rd2.(→gi-→xi); // update velocity 10.→xi← →xi+→vi; // update position 11.Call Algorithm (2);12.Define θ(→xi) = max( 0 , relc- reliability)13.If θ(→xi) == 0∧θ(→pi) == 0 //→xiand→piare all feasible solutions 14.If W→xi<W→pi// update personal→pi 15.Set→pi=→xi;16.Set Random = W→xi 17.end if 18.else 19.Set→pi=→x′= argmin{θ(→xi),θ(→pi)};20.Set Random = W→x′;21.end if 22.If θ(→xi) == 0 // only the feasible solution will be added to A 23.for∀→x∈A∧W→xi<W→x // update A 24.A = {→x∈A| W→x>W→xi}; // remove points from A 25.A = A∪→xi; // add→xito A 26.end for 27.end if 28.idx + +(Continued)

Algorithm 1: Continued 29.end for 30.Select global optimal position→gi;31.end while END

MOS-MWO scheduling algorithm involves estimating the fitness of each particle.Scheduling parameters and PSO parameters are initialized (lines 1-6).MOS-MWO calls Algorithm 2 to calculate the QoS parameters (line 11).There are two reliability constraints for selecting the viable solution[58]: 1) The optimal solution within the set of possible solutions (lines 13-17).In this case, a Random variable takes the weight of the optimal solution.2) If not all solutions are feasible, the best solution and the Random value shall be chosen with the least reliability constraint (lines 18-21) [38].So,only feasible solutions are stored (lines 22-27).The selected method is used to evaluate the optimal location(line30)formulti-objective problems according to theweight of the alternative.The algorithm continues until the final condition is fulfilled (line 7).

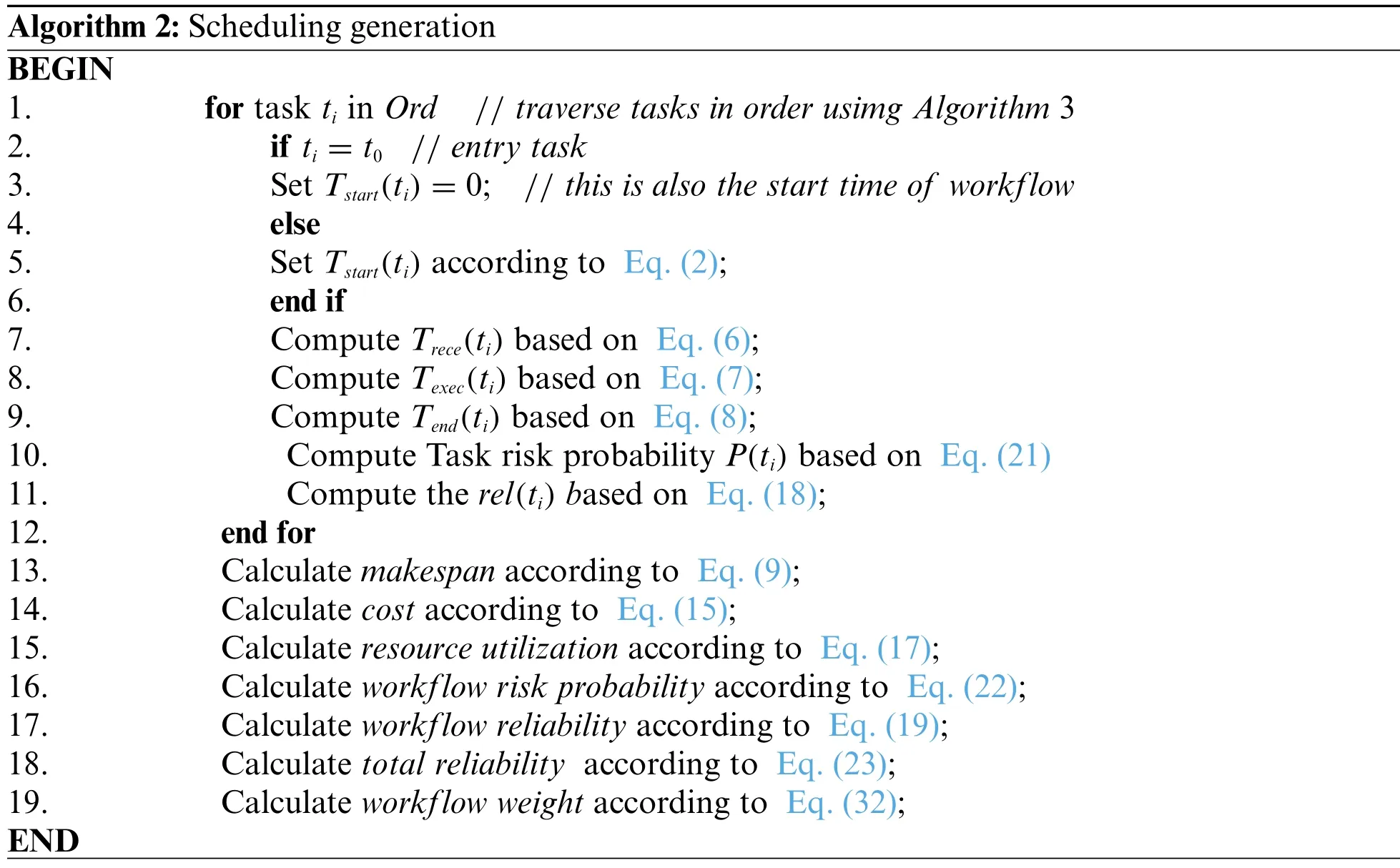

Algorithm 2: Scheduling generation BEGIN 1.for task tiin Ord // traverse tasks in order usimg Algorithm 3 2.if ti= t0 // entry task 3.Set Tstart(ti) = 0; // this is also the start time of workflow 4.else 5.Set Tstart(ti) according to Eq.(2);6.end if 7.Compute Trece(ti) based on Eq.(6);8.Compute Texec(ti) based on Eq.(7);9.Compute Tend(ti) based on Eq.(8);10.Compute Task risk probability P(ti) based on Eq.(21)11.Compute the rel(ti) based on Eq.(18);12.end for 13.Calculate makespan according to Eq.(9);14.Calculate cost according to Eq.(15);15.Calculate resource utilization according to Eq.(17);16.Calculate workflow risk probability according to Eq.(22);17.Calculate workflow reliability according to Eq.(19);18.Calculate total reliability according to Eq.(23);19.Calculate workflow weight according to Eq.(32);END

The output parameters are evaluated by scheduling generation (Algorithm 2) (lines 7-18) and tasks are traversed in order (line 1) using Algorithm 3 during the workflow scheduling process.The start time is calculated to find the makespan for each task (lines 2-6).Next, receiving data timeTrece(ti),task execution timeTexec(ti)and end timeTend(ti)are calculated (lines 7-9).The risk probability and reliability of the task (lines 10-11) then cost, reliability, resources utilization, risk probability and makespan of the workflow are determined (lines 13-18).The total reliability is calculated using Eq.(23) (line 18) and the minimum weight using Eq.(32).Finally, the weight of the workflow is calculated (line 19).

Algorithm 3: Order of tasks BEGIN 1.Initialize 2.α= {ti}; // schedulable entry task t0 3.γ=β=Ø; // the set of scheduled tasks and temporary tasks 4.flag = 0; // record location in search space 5.space = [0,0] // search space 6.end Initialize 7.while α/=Ø 8.flag = flag + |α|;9.for tiin α 10.Put all succers of task tiinto β;11.→vi←inert.→vi+φ1. rd1.(→pi-→xi) +φ2.rd2.(→gi-→xi);12.→xi← →xi+→vi;13.if xi /∈space 14.xi= (x′i: min(|x′i- xi|,xi∈space)15.end if 16.space = space - {xi};17.γ=γ+ {ti}; // add task to the set of scheduled tasks 18.α=α- {ti}; // remove task from α 19.end for 20.for tiin β 21.if pre(ti)∈γ 22.α=α+ {ti}; // add new task to α 23.end if 24.end for 25.Clear β;26.space = [flag , flag + |α| - 1]; // update the search space 27.end while END

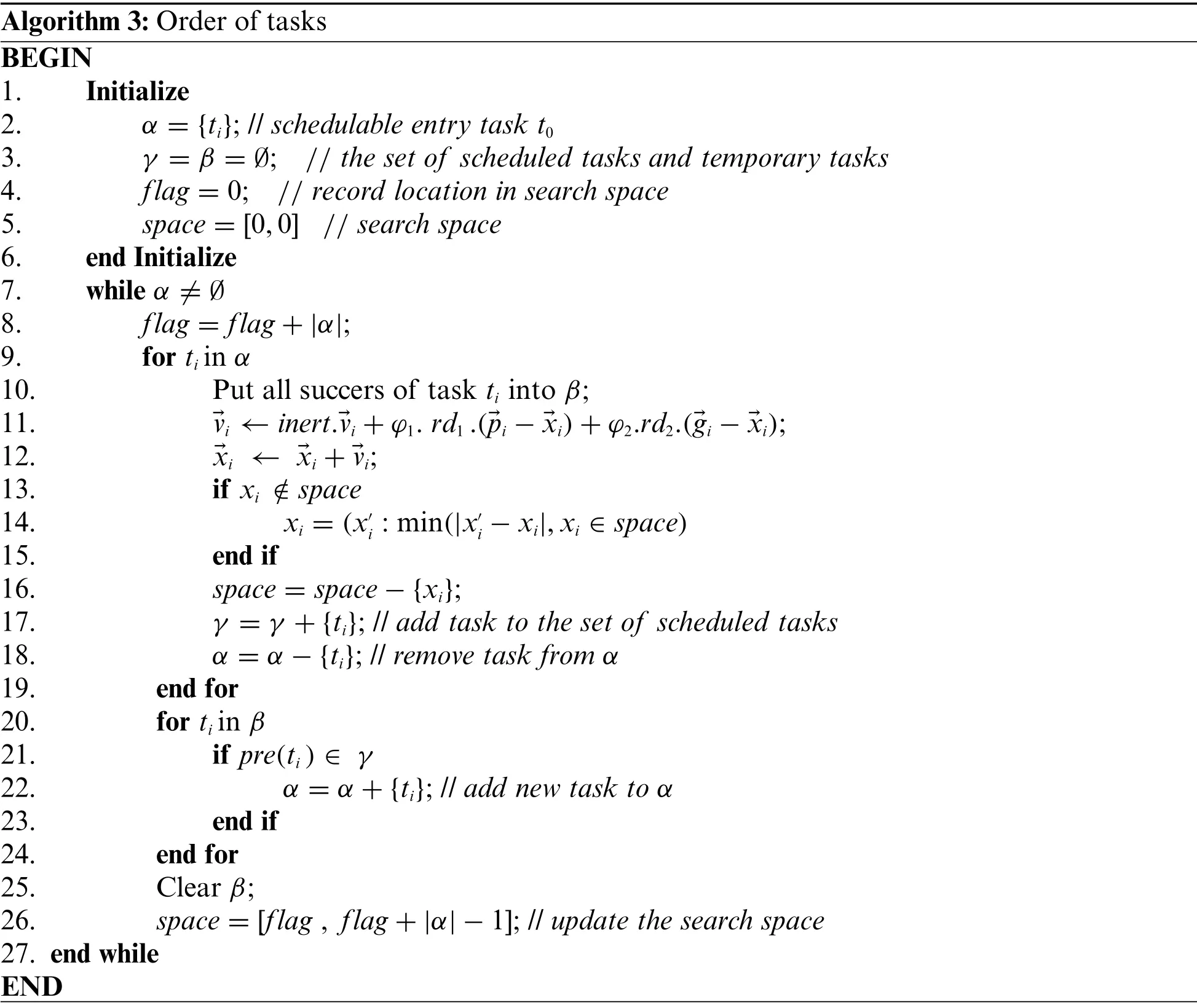

Algorithm 3 identifies task order according to the dependencies between tasks, that is, taskt1must be executed before taskt2if it precedes taskt2in stringOrd.Firstly, the scheduled tasks are initialized, i.e.,α= {t0} (line 2).In line 3, two sets of scheduled tasks are set as γ and β.Waiting tasks are set to empty and the flag records the search area location (line 4).space= [0,0] is used to indicate an unchanged entry task position (line 5).The Euclidean distance is used in lines 11-15 and the correct solution is selected from the search area in line 16.Tasks are finally tested and mapped to the schedulable set α(lines 20-24).The search space is updated sequentially (line 26) to ensure there is a more confined search space for each task in the current schedulable task α.

6 Experimental Setup and Simulation Results

6.1 Experimental Setup

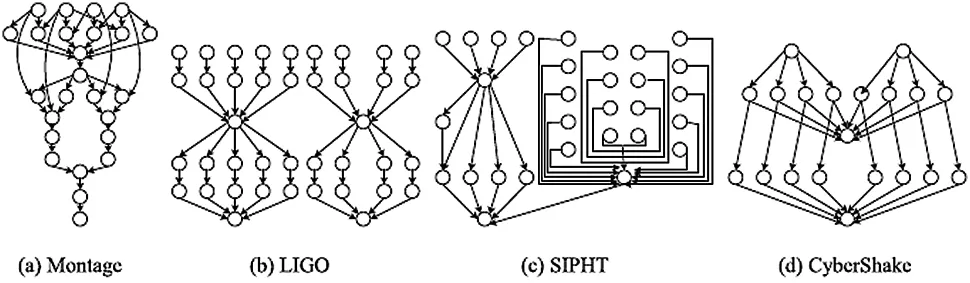

Fig.3 shows the structure of the scientific workflow.Experiments were carried out using an i7 6 cores computer with a 16 GB RAM CPU.The proposed algorithm was implemented in Workflowsim 1.0 using four real-world scientific workflows; SIPHT, montage, LIGO and CyberShake.Randomrd1andrd2values were generated by uniform distribution in the range [0, 1] with [1,32] computing units;respectively.The various cloud failure coefficients for Amazon EC2, Google Compute Engine and Microsoft Azure were identified as λ1=0.001,λ2=0.003 and λ3=0.002, respectively.The bandwidth wassetto0.1 G/sifthe VMs are located within the same cloud while the bandwidth of the VMs was set to 0.05 G/s if they are located in different clouds.In the multi-objective problem, the reliability of the workflow should be equal or greater than the reliability constraint according to Eq.(26).Maximum reliability can be calculated by Eq.(19).

Figure 3: Structure of scientific workflows [45]

In MOS-MWO,φ1=φ2=2.05, and the number of particlesNP=50.For the MOS-MWO algorithm, the number of compensation solutionsNS=15, the repeat timeNIT=1000 and repeat programming is 20 times.Besides the maximum workflow reliability, we also measured the minimum workflow reliability (relmin) to provide sufficient reliability.Users can set the reliability of workflow as follows:

where ρ ϵ[0, 1].

In our experiments, we set the reliability constraint coefficient ρ as 0.2.

6.2 Simulation Results

This section discusses the results obtained from the experiments above with two and five conflicting objectives.The simulations were carried out under reliability constraint on four scientific workflow applications (Montage, LIGO, SIPHT and CyberShake) using MOS-MWO and the original MOS algorithms.The purpose of each algorithm is to optimize the QoS constraints.Feasible solutions were determined according to the minimum weight of the alternatives.

6.2.1 The Two-Objective Case Study

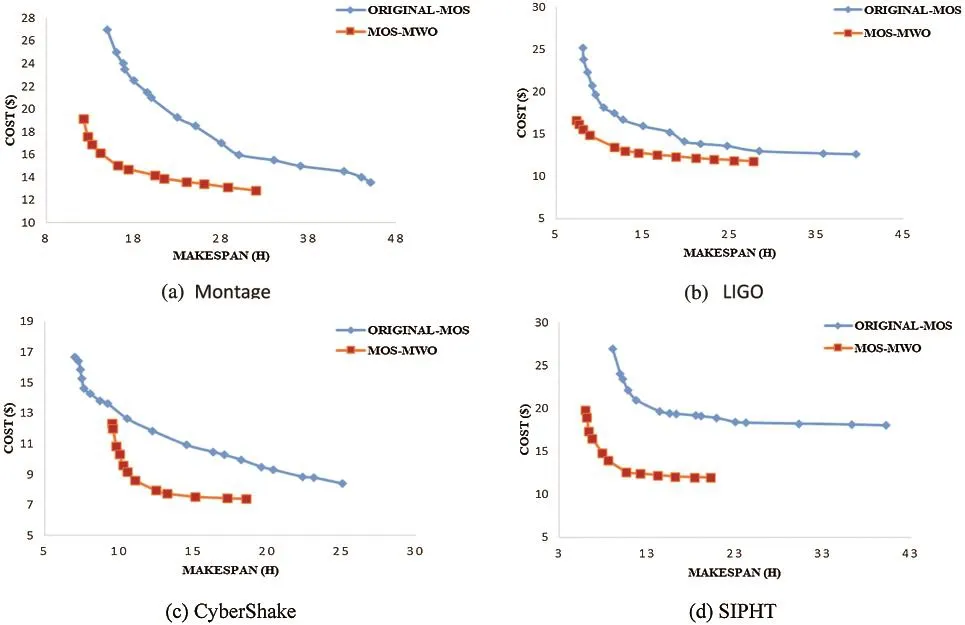

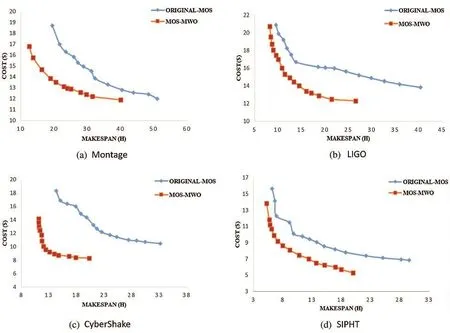

This scenario minimized two objectives, makespan and cost, to get the best trade-off during the scheduling process.The results are shown in Fig.4.Makespan and cost are two non-beneficial metrics so they are normalized according to Eq.(30) and the minimum weight is calculated using Eq.(41)below.

Figure 4: Makespan-cost trade-off on real world scientific workflow in the case of two objectives

By applying our proposed algorithm in Fig.4a, makespan and cost reduced by 26% and 21%,respectively compared to original-MOS.In Fig.4b, it can be observed that MOS-MWO scheduling on LIGO yields better makespan and cost as it achieves 15% and 21% reduction, respectively.Fig.4c shows that when MOS-MWO was applied on CyberShake, the makespan reduced by 12% while cost also reduced by 24% as compared to the original MOS algorithm.The result of the makespan-cost trade-off on SIPHT workflow (Fig.4d) indicates that the makespan is reduced by 39% and the cost reducedby30%using MOS-MWO.Overall,applying our proposed MOS-MWO algorithm minimized makespan and cost and performs better than original-MOS.

6.2.2 The Five-Objective Case Study

We consider a scenario consisting of five important objectives in real-life applications: makespan,cost, resource utilization, reliability and risk probability.Resource utilization and risk probability are vital to the resource provider while the other objectives are particularly of concern to the users.This section discusses the results obtained from the experiments with these five conflicting objectives.Fig.5 shows the results of deploying MOS-MWO and original-MOS algorithms for the different scientific workflows to obtain their optimum solutions.Results from the Montage workflow in Fig.5a show that theMOS-MWO algorithm yields the best set of makespan-cost trade-offs as compared with original-MOS algorithm.With MOS-MWO, makespan is reduced by 28% as compared to the original MOS and cost is also reduced by 4%.Similarly, Fig.5b shows that MOS-MWO produced the best set of alternatives in terms of makespan-cost trade-off on LIGO.It reduced makespan and cost by 35% and 8%, respectively when compared to original-MOS.

Figure 5: Makespan-cost trade-off on real world scientific workflow in case of five objectives

Looking at the two algorithms applied on CyberShake in Fig.5c, results show that MOS-MWO produced solutions with the best makespan-cost trade-off.As compared to original-MOS, MOSMWO reduced makespan and cost by 41% and 20%, respectively.This is also observed in the graphs for the SIPHT workflow(Fig.5d).On applyingMOS-MWOon SIPHT, we get a bettermakespan-cost trade-off and the makespan and cost reduced by 30% and 12%, respectively.Deductively,MOS-MWO outperforms original-MOS that applies the Pareto dominance method.

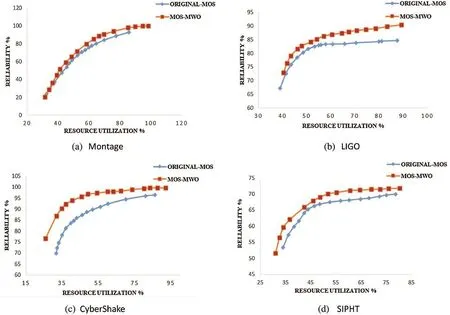

Fig.6 shows the relationships between resource utilization and reliability.MOS-MWO and original-MOS algorithms are applied to various scientific workflows.Similar to Fig.5, the results show that the MOS-MWO achieves the best performance (i.e., higher resource utilization and reliability) in comparison with the original MOS algorithm.This is an indication of the efficiency of our new decision-making method.

Figure 6: The relation between resource utilization and reliability

Fig.6a shows that using MOS-MWO for scheduling Montage scientific workflow can produce better results in terms of reliability and resource utilization than original-MOS.The resource utilization and reliability increased by 13% and 15%, respectively when compared with original-MOS.Applying MOS-MWO on LIGO in Fig.6b increased the resource utilization of MOS by 4%.The reliability also increased by 5% more than the original MOS.Fig.6c shows that working with MOSMWO on CyberShake gives best results in terms of resource utilization and reliability, so the resource utilization is 6% more than the original MOS and the reliability increased by about 4% more than original-MOS.As for SIPHT (Fig.6d), we can see that MOS-MWO produced better results in terms of resource utilization with a 3%increase.Also, the reliability increased by 3% as compared to original- MOS.

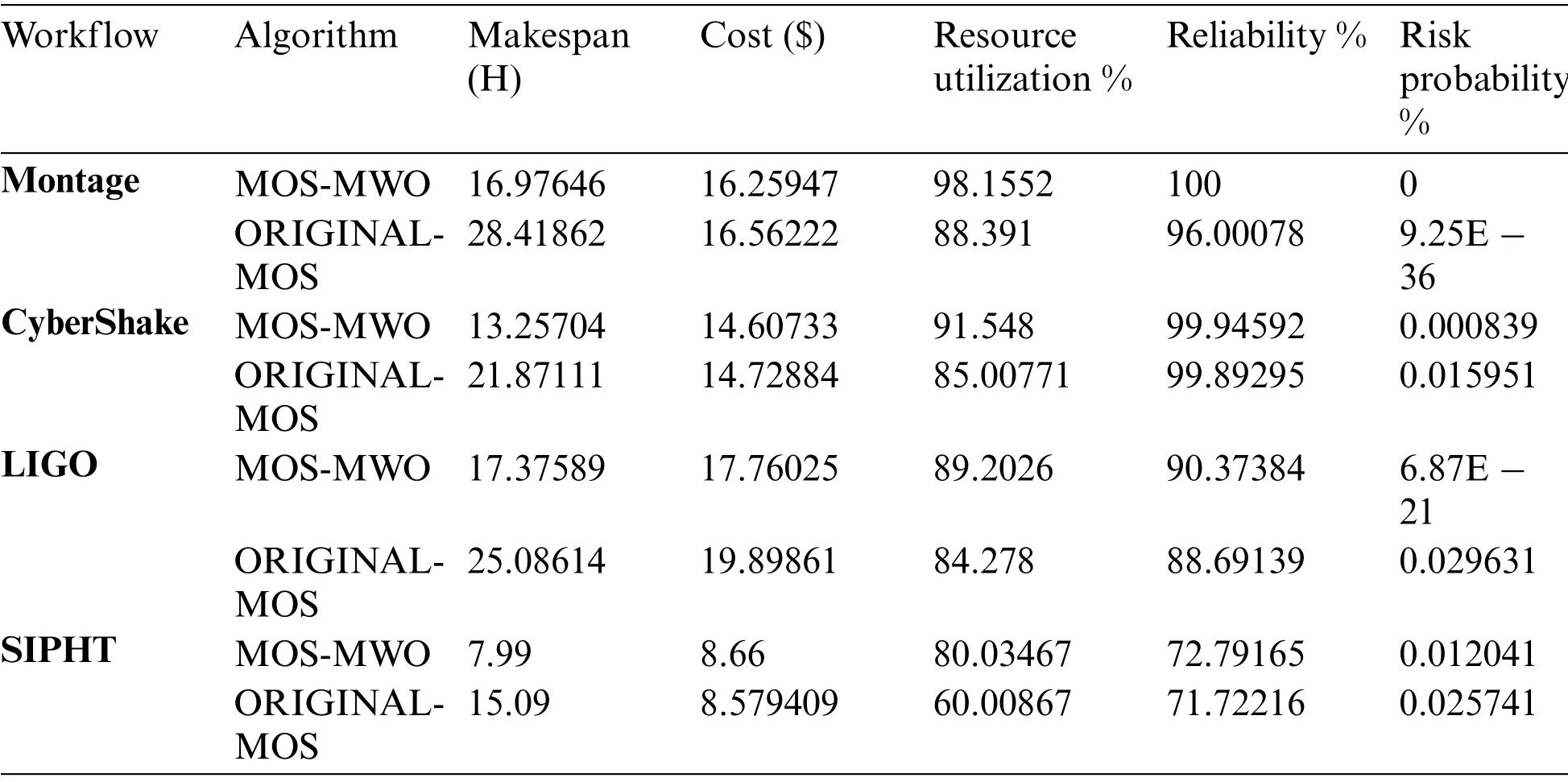

Tab.10 shows the results for the two multi-objective scheduling algorithms studied in this article.Both algorithms were executed in 1000 iterations to obtain the solutions with the highest reliability.With the conflicting attributes (makespan, cost, risk probability, reliability and resource utilization),the table shows that MOS-MWO yields the best results while working with all workflows.MOS-MWO performs better than the original MOS algorithm for all attributes.We noticed in Tab.10 that in the case of SIPHT, original-MOS produces less cost as compared with MOS-MWO.This does not affect the overall performance because we are concerned about the general optimization of the scheduling process considering all the existing attributes.Nevertheless, MOS-MWO yields an optimal solution when compared with the original MOS algorithm.

Table 10: The scheduling results of different objectives

7 Performance Measurement

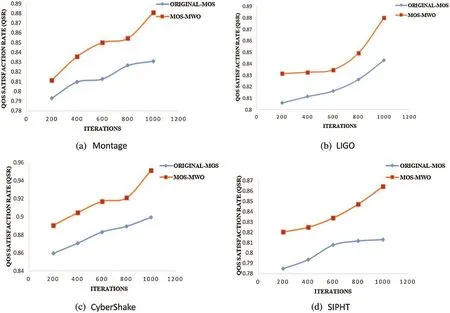

Fig.7 shows the QoS satisfaction rate achieved by both MOS-MWO and original-MOS for all scientific workflows used in this study.As observed in Fig.7a, MOS-MWO achieves the bestQSRwhen dealing with Montage compared to original-MOS algorithm as it uses a number of iterations under the reliability constraint.MOS-MWO achieves a 2% improvement with respect toQSRwhile using 200 iterations and it achieved around 4% improvement ofQSRwhen the number of iterations is 600.With1000iterations,MOS-MWO improved the satisfaction rate by 5% as compared with original-MOS.While increasing the number of iterations, we can get better QSR by using MOS-MWO.

Fig.7b illustrates the results of scheduling LIGO scientific workflow by using both MOS-MWO and original-MOS under the reliability constraint.With 200 iterations, MOS-MWO yields about 3%QSRimprovement as compared to MOS.By increasing the number of iterations to 1000,QSRincreased by 4% when compared with original-MOS.Using MOS-MWO to schedule CyberShake in 200 iterations, theQSRimproved by 3% more than original-MOS as shown in Fig.7c.When the number of iterations increased to 1000, MOS-MWO improvedQSRby 5% more than original-MOS.

Fig.7d illustrates the results of scheduling SIPHT scientific workflow using both MOS-MWOandoriginal-MOS algorithms under the reliability constraint.As compared to original-MOS,MOS-MWO achieves about 3%QSRimprovement with 200 iterations.By increasing the number of iterations to 1000, theQSRincreased to 5% in comparison with original-MOS.

Figure 7: QoS satisfaction rate with five studied objectives

Examining just one of the aspects related to efficiency is unlikely to give a decisive test on multipurpose solutions.Thus, three metrics are used in this study: Q-metric, S-metric and FS-metric.These metrics are used to evaluate the quality of the Pareto fronts obtained by different algorithms[49].Q-metric can be deployed [59,60] as shown in Eq.(42) to determine the particular degree of convergence of multi-objective algorithms A and B.

Ψ=ϒ∩SA and Y is the set of SA∪SB.Two sets of Pareto optimum results for two multiobjective algorithms A and B are indicated by SA and SB.Algorithm A is better than Algorithm B only if Q(A, B)>Q(B, A) or Q(A, B)>0.5.The Pareto front space size is determined by the FS metric as computed in Eq.(43) [61].

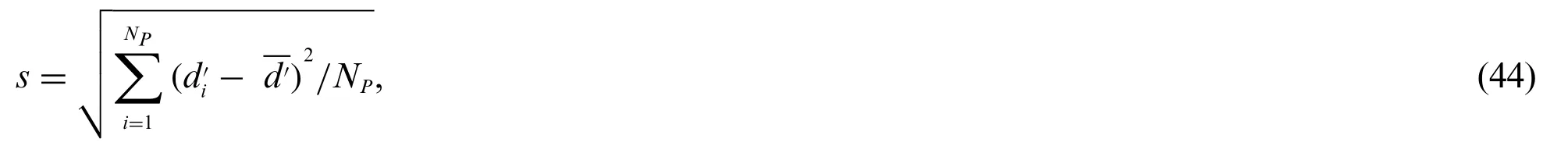

wherefi(x0)andfi(x1)are two values of one objective function.A larger FS value means a greater diversity in the Pareto front.We adopt the S-metric as calculated in Eq.(44) in order to determine the level of uniformity of solutions [30].

where the number of Pareto solutions is NPandcalculates the distance between the members of Pareto front set.

A smaller S-metric implies that the algorithm has found a uniform solution, as opposed to FSmetric and Q-metric, where a greater value is preferred.

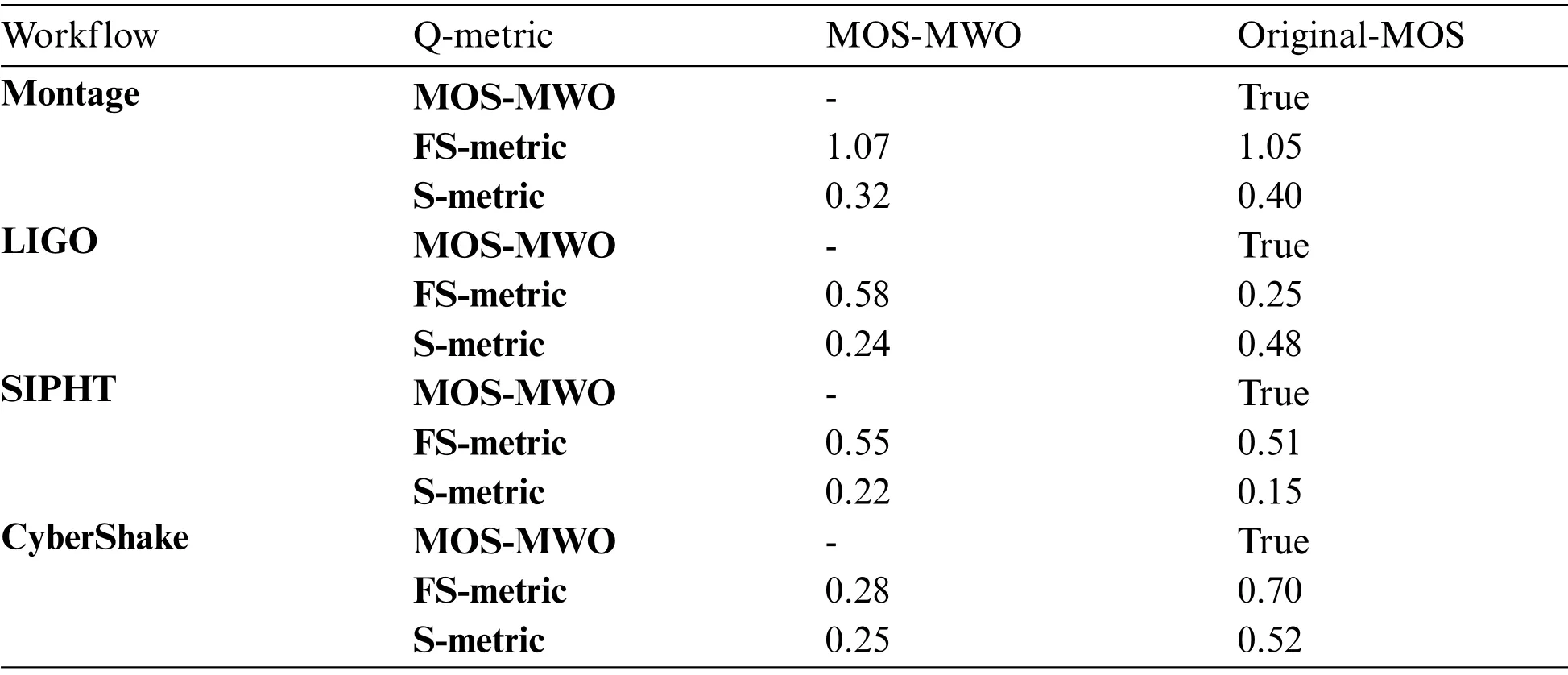

Figs.5 and 6 show the trade-off between makespan and cost and the relation between reliability and resource utilization for MOS-MWO and original-MOS algorithms.Obviously, the MOS-MWO algorithm yields the optimal results for all objectives considered.The multi-objective performance metrics in Figs.5 and 6 are illustrated in Tab.11.If the Q-metrics of MOS-MWO is set to true, that implies that its performance is better than the original-MOS algorithm.Tab.11 shows that the value of Q-metrics is true Q(MOS-MWO, MOS) for all scientific workflows.This shows that the solutions of MOS-MWO are always better than the original MOS, which means that MOS-MWO provides the best result in terms of multi-objective convergence.

Table 11: Multi-objective performance metrics

With regards to the Montage workflow, the FS-metric value for MOS-MWO is 1.07 which is greater than that of original-MOS algorithm.This means MOS-MWO achieves a higher diversity when compared to the original MOS algorithm.The S-metric for MOS-MWO is smaller than that of original-MOS algorithm by 0.02, which means MOS-MWO produces better uniformity in the Pareto front than the original MOS algorithm.As for the LIGO workflow, the FS-metric of MOS-MWO is 0.58 and that is higher than original-MOS algorithm thus, indicating that the MOS-MWO is better than original-MOS algorithm in terms of diversity.The value of the S-metric of MOS-MWO is lesser than that of the MOS algorithm by 0.24, which indicates the uniformity in the Pareto front of MOSMWO is better than the original MOS algorithm.

In Tab.11 we can see that applying MOS-MWO on SIPHT produced an FS-metric value of 0.55 which is better than the original MOS.In contrast, the S-metric of MOS-MWO is 0.22 which is higher than the original MOS algorithm.This implies that the uniformity produced by the original MOS is better than MOS-MWO algorithm.Looking at the results from the CyberShake, it can be observed that the FS-metric of MOS-MWO is 0.28 which is less than the original MOS algorithm that has 0.70 FS-metric value.This implies that original MOS produced a better diversity compared to the MOSMWO algorithm.The S-metric value of MOS-MWO is 0.25 which is smaller than the original MOS.As such, MOS-MWO produces better uniformity when compared to original-MOS algorithm.

8 Conclusion

In this article, the MOS-MWO algorithm is presented and its efficiency in handling scheduling problems with multiple QoS constraints and objectives in a multi-cloud environment is demonstrated.The proposed MOS-MWO algorithm uses a novel decision-making method(MWO) to solve workflow scheduling problems by considering both users’ and service providers’ QoS requirements in multicloud environments.The proposed approach is modeled to handle QoS objective optimization with multiple constraints.MWO is used to evaluate and select the best solutions according to the weights of the specified alternatives.Such weights are also used to establish the inertia weight by using an adaptive strategy.High inertia weight means that the solution is not optimum thus, there is a need to increase exploration in the global search space to get good solutions.A low inertia weight means that the solution is near-optimal.Hence, we increase the exploitation in local search space to refine the solution and prevent big jumps in the search space.Extensive simulations were conducted on four scientific workflow applications to evaluate the performance of the proposed MOS-MWO algorithm.The simulation results obtained indicated that MOS-MWO outperformed the original MOS with respect to the QoS constraints.It also remarkably outperformed the original MOS in term of QoS satisfaction rate.

Acknowledgement:Throughout the study, the authors gratefully appreciate all those who contributed academically, technically, administratively and financially to this study.

Funding Statement:This work was supported by Putra Grant,University Putra Malaysia,under Grant 95960000 and in part by the Ministry of Education (MOE) Malaysia.

Conflicts of Interest:The authors declare that they have no conflicts of interest to report regarding the present study.

杂志排行

Computers Materials&Continua的其它文章

- Constructing Collective Signature Schemes Using Problem of Finding Roots Modulo

- Modeling and Simulation of Two Axes Gimbal Using Fuzzy Control

- Artificial Monitoring of Eccentric Synchronous Reluctance Motors Using Neural Networks

- An Optimal Scheme for WSN Based on Compressed Sensing

- Triple-Band Metamaterial Inspired Antenna for Future Terahertz (THz)Applications

- Adaptive Multi-Cost Routing Protocol to Enhance Lifetime for Wireless Body Area Network