Progress in high-order methods for industrial large eddy simulation

2021-06-23WANGZhijian

WANG Zhijian

(Department of Aerospace Engineering, University of Kansas, Lawrence KS 66045)

Abstract:It is now well-known that high-order methods can be orders of magnitude faster than 2nd order methods to achieve the same accuracy for scale resolving simulations. Because of that potential, much progress has been made in “industrializing” these high-order methods for real world applications. The use of large eddy simulation in industry especially in turbomachinery has seen a significant increase in the past decade, perhaps because of the relatively low to medium Reynolds numbers in gas-turbines and the high-impact of such simulations in understanding key flow physics. In this article, we review recent progress made in enabling LES using high-order methods in the last decade, and identify the most profitable areas of research in the near future to push LES into the design process.

Keywords:industrial large eddy simulation; high-order methods; Navier-Stokes; unstructured meshes

0 Introduction

The current design tools in aerospace engineering are mostly based on the Reynolds averaged Navier-Stokes (RANS) approach because of its low cost, and reasonable accuracy for attached flow. In an assessment of the state-of-the-art CFD tools, NASA’s CFD Vision 2030 Study[1]states.

“TheuseofCFDintheaerospacedesignprocessisseverelylimitedbytheinabilitytoaccuratelyandreliablypredictturbulentflowswithsignificantregionsofseparation.AdvancesinReynolds-averagedNavier-Stokes(RANS)modelingaloneareunlikelytoovercomethisdeficiency,whiletheuseoflarge-eddysimulation(LES)methodswillremainimpracticalforvariousimportantapplicationsfortheforeseeablefuture,barringanyradicaladvancesinalgorithmictechnology.HybridRANS-LESandwall-modeledLESofferthebestprospects…”

The vision study explicitly calls for scale-resolving large eddy simulation (LES) to be further developed for vortex-dominated separated flow, either with or without a wall-model or in the context of hybrid RANS-LES approaches. The concept of LES was developed over half a century ago[2]and has been applied over many decades to low Reynolds number problems to study fundamental turbulent flow physics. The major obstacle in its wider application is the computational cost especially for high-Reynolds number flows. Relative to wall-resolved LES (WRLES), wall-modeled LES (WMLES) is much less expensive because the small turbulence scales near solid walls are not fully resolved on a computational mesh. For WMLES, Chapman[3]estimated that the number of degrees of freedom (nDOF) scales with the Reynolds number according toRe2/5, which is considered too optimistic. Choi & Moin[4]provided the following estimate: nDOF~Re. Taking into account of the cost for time integration, Slotnick et al[1]offered the following cost estimateC~Re4/3. In addition, an estimate of computational time was also provided for an explicit, 2ndorder numerical method.

The resolution of a computational simulation depends not only on nDOF, but also the order of accuracy of the numerical method. For example, a spectral method and a 2nd-order finite volume (FV) method with the same nDOF have dramatically different resolution power for turbulence scales.

Because of the disparate length and time scales in a turbulent flow, spectral methods[5]was the method of choice for earlier direct numerical simulation (DNS) investigations involving relatively simple geometries, such as fully developed channel flow[6]. Such studies have been invaluable in understanding fundamental turbulent physics, and in developing turbulence models for RANS approaches.

Although spectral methods possess the high accuracy, and have achieved considerable success in DNS, their potential for industrial applications are limited due to the difficulty in handling complex geometries. The present design tools in industry are almost always based on unstructured meshes. The cost to maintain a separate tool handling structured meshes far out-weighs the efficiency benefit in memory and speed. Therefore, it is a common practice to handle structured meshes as if they are unstructured with the associated benefits in modularity, maintainability and load-balance in a parallel implementation.

In an effort to reduce the cost of scale-resolving simulations, the international CFD community has invested significantly in high-order methods, which are at least 3rdorder accurate, in the last two decades. This effort has spurred the further development of many high-order methods. Interested readers can refer to many review articles[7-14]. Five International Workshops on High-order CFD Methods have been organized in both the US and Europe to provide a forum to evaluate the status of high-order methods and identify pacing items. One conclusion from these workshops is that high-order methods are indeed much more efficient/accurate than 2ndorder methods for scale resolving simulations. To achieve the same accuracy, high-order methods are orders of magnitude faster.

Since the publication of the review article of the first high-order CFD workshop (HOCFDW)[10], significant progress has been made in many pacing items. The progress has enabled applications of LES based on high-order methods by many research groups in academia, industry and government research labs[15-24]. To narrow the scope of the present paper, we review major advancements in the development of high-order methods for scale-resolving simulation in the last decade. We start our review by examining the pacing items listed in[10]:

·High-order mesh generation

·Solution-based hp-adaptations

·Scalable, low memory efficient time integrators for RANS and hybrid RANS/LES approaches

·Robust, accuracy-preserving, and parameter-free shock capturing.

Note that in[10], the emphasis was on the development of high-order CFD methods for both RANS and LES applications. In the present one, we focus only on LES to limit the scope of the present paper. Therefore, in the following sections, we will review the challenges in using high-order methods for LES, progress in high-order mesh generation, space discretization, time integration, hp-adaptation. The paper will conclude with an outlook for further development in the near future.

1 Challenges in using high-order methods for LES

The benefits of high-order methods for LES and DNS were well-known since some of the earliest DNS studies used spectral methods[6]. Spectral methods represent the ultimate high-order methods in term of accuracy. However, the global nature of the methods limited the application to simple geometries, and to flow problems without discontinuities. High-order methods for structured meshes have achieved far more success for more complex geometries than spectral methods[9]. However, high-order methods capable of handling unstructured meshes are preferred for industrial LES because the cost for mesh generation is significantly reduced, and the parallel implementation is more straightforward.

Although high-order methods can be orders of magnitude faster than 2ndorder methods for LES to achieve the same accuracy, many challenges still remain. Some are related to intrinsic challenges for LES, and some others are unique for high-order methods. In the following paragraphs, we briefly describe some of the challenges.

1.1 Flow at high Reynolds numbers

This is an intrinsic challenge for LES. At a high Reynolds number, such as 10 million or above, the range of scales that must be captured increases, and the cost becomes prohibitively high. The smallest scales near a solid wall make WRLES very expensive. To reduce cost, wall models have been developed for such simulations. In particular, non-equilibrium wall models capable of handling flow separations are needed. Progress made in developing robust and accurate non-equilibrium wall models will impact the range of Reynolds numbers that can be tackled. Meanwhile, fast implicit methods which can be implemented on GPU clusters will further increase the range of Reynolds numbers that can be tackled.

1.2 High-order mesh generation

This is a challenge unique to adaptive high-order methods. The need for high-order meshes has been demonstrated by many researchers with the DG and related methods. Because of the high accuracy of such methods, the nDOFs to achieve an engineering level of accuracy is dramatically reduced. Many studies have shown that even a 3rdorder scheme can save over an order of magnitude in nDOFs comparing with a 2ndorder scheme[25]. Since DG and related methods add internal DOFs, the computational meshes are therefore much coarser than those used in a FV method. For example, let’s assume that a 2ndorder FV method needs a billion hexahedral cells to achieve an acceptable level of accuracy. For a FR/CPR code running at 3rdorder accuracy at solution polynomial degree of 2 (or p2), the number of DOFs to achieve a similar accuracy is about 1 billion/16, which is 62.5 million DOFs/equation. Since each element has 33=27 DOFs, the mesh needs only 2.3 million elements! This is a very coarse mesh comparing with one having 1 billion elements. At such a coarse resolution, the wall boundaries must be represented with at least degree 2 polynomial patches. Otherwise, the error near a linear wall-representation will destroy the high-order accuracy.

1.3 Robustness of high-order LES

High-order methods have much less dissipation and dispersion errors than 2ndorder methods. For under-resolved LES, scales that cannot be resolved on a given mesh alias into high-wave number/high frequency modes. Energy from these modes can often accumulate because of a lack of dissipation from the numerical scheme or the sub-grid scale stress model. At high Reynolds numbers, the physical dissipation is too low to damp these aliased modes, which can drive under-resolved LES divergent.

Many remedies have been developed to improve the robustness of under-resolved LES:

1.4 Efficiency, accuracy and memory requirement of time integration approaches

Large eddy simulations are time-accurate 3D computations with a time step small enough to capture the dominant turbulent dynamics. The efficiency and accuracy of the time marching scheme is therefore a very important factor in determining the simulation cost. Both explicit and implicit schemes of various order of accuracy have been used successfully for LES. The time step used in explicit schemes is determined by the smallest element size usually located near solid walls and the speed of the fastest acoustic wave. This time step is often orders of magnitude smaller than the dominant turbulent time scale, making explicit schemes inefficient. On the other hand, implicit schemes do allow time steps many orders larger. However, the cost per time step is often many times of that of explicit schemes. In addition, implicit schemes often need to store the Jacobian matrices, whose size increases dramatically with the order of accuracy as p6. Much research is still needed to develop efficient low memory time integration schemes.

2 High-order mesh generation

Research into high-order mesh generation started at least as early as 2000[26]. There is a reason why high-order mesh generation was the top pacing item identified in the first HOCFDW. By that time, it was well-known that high-order accuracy could not be achieved with linear meshes. In addition, linear meshes can cause stability problems and possibly induce flow separations. However, the capability to generate high-order meshes was not available in commercial mesh generators simply because no commercial CFD software can handle high-order meshes, and only research codes in the small high-order CFD community needed such meshes. As a result, each research group had their own ad-hoc solutions to produce high-order meshes. In fact, there was not even a standard format for high-order meshes.

At the time of the first HOCFDW, the most well-known high-order mesh generator was gmsh[27], which also established the first public-domain high-order mesh format. Because of that, the high-order meshes supplied by the first HOCFDW used the gmsh format. There was no alternative. Unfortunately, gmsh was a research code geared towards mesh generation researchers, not necessarily high-order CFD practitioners. Ultimately, in order for industry to used high-order LES solvers, it is necessary to convince commercial mesh generators to produce production-level high-order mesh generators. As a precondition for that to become a reality, there must be a standard mesh format for both mesh generators and flow solvers.

Soon after the first HOCFDW ended,there was an effort to standardize a high-order mesh format. Although gmsh was very popular in the high-order CFD community, it was not an international standard. The international standard for CFD meshes is the CGNS format[28], widely used in the CFD community, not only for meshes, but also for flow solvers and flow visualization packages. It appears the only possible route to standardize a high-order mesh format is to extend CGNS to high-order meshes. A graduate student from the University of Kansas (KU) (M. Yu) wrote the proposals to formally extend the CGSN standard to handle cubic and quartic meshes. The quadratic mesh format was already part of CGNS. The proposals were quickly approved by the CGNS committee, and these formats were later implemented.

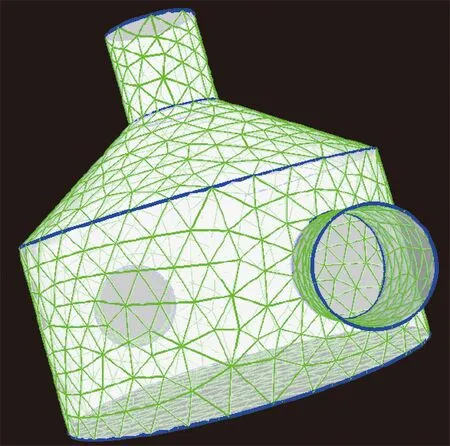

After the 1stHOCFDW, there was a lot of interest in developing high-order meshing tools which made high-order CFD simulations feasible. Gmsh continued to develop its high-order meshing capability. Many university groups also took part in producing tools converting linear meshes into high-order ones because producing high-order meshing tools directly for CAD geometries was beyond the capability of academic research groups, and it was completely unnecessary. At University of Stuttgart, Prof. Munz’s group produced a tool called HOPR[29], which can translate linear meshes into high-order ones, and also convert structured meshes into high-order unstructured meshes. Under the support of NASA, University of Kansas produced a meshing tool called meshCurve[30]to convert unstructured linear meshes into high-order meshes in the CGNS format. In meshCurve, it was assumed that the mesh is generated by an external mesh generator, and the access to the geometry is not possible. Therefore, the geometry is reconstructed from scratch only using the surface meshes. To make the process user-friendly, a graphical user interface was developed, which allows the user to select wall surfaces, detect important geometric features such as sharp edges and critical points. These features are then preserved in the geometry-reconstruction phase. After that, high-order surface meshes are generated, followed by high-order volume meshes. Finally, the entire mesh is examined for possible negative Jacobians, which are removed through smoothing iterations. A sample reconstructed high-order mesh from a linear mesh is shown in Fig.1. Note the blue sharp edges have also been upgraded to high-order curves. After meshCurve was announced in 2016, it has been downloaded by more than 220 unique users from all over the world.

Fig.1 A sample reconstructed high-order mesh by meshCurve with feature preservation

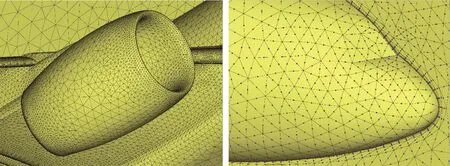

In the past few years, research on high-order mesh generation has been supported all over the world, see references[31-36]. The main breakthrough came when Pointwise[32]and GridPro announced the capability for high-order mesh generation. Pointwise can generate mixed high-order meshes including tetrahedral, prismatic, hexahedral and pyramidic elements, while GridPro is capable of producing unstructured hexahedral meshes. Both mesh generators first generate linear meshes, which are then elevated to high-order ones. Both support the CGNS high-order mesh format. An example high-order mesh for the NASA Common Research Model high-lift configuration is displayed in Fig.2. Ultimately the availability of high-order commercial mesh generators is a sure sign that the CFD community now embraces high-order CFD, and industrial high-order LES is becoming a reality[24].

Fig.2 Sample high-order meshes for the NASA common research model high-lift configuration generated by pointwise (image courtesy of Steve Karman)

3 Space-Discretization

As described by many review papers, there has been considerable research interest on high-order methods in the past two decades. Many methods have seen implementation and application in production-level LES solvers. We divide methods capable of handling unstructured meshes into the following main categories:

·High-order finite volume (FV) or WENO methods[37-38];

·Continuous finite element methods such asstreamline upwind Petrov-Galerkin (SUPG)[39];

·Discontinuous finite element and related methods such as discontinuous Galerkin (DG)[40], spectral difference (SD)[41]and flux reconstruction (FR)[42-43];

·Hybrid FV/DG type or PnPm methods[44-45].

As reviewed in[14], most modern super-computers are mixed CPU/GPU/vector-processor clusters. On a GPU card, the motto is “communication is everything” because the GPU performance hinges on the cost of communication. Computations with continuous local data and coalesced memory access are orders of magnitude faster than computations with remote data and non-coalesced memory access. This property immediately makes discontinuous finite element methods such as the DG, SD and FR methods stand out for maximum potential efficiency.

Let’s illustrate this point in a FV and FR context. We assume inviscid flow for simplicity. In a FV method, one time-marching step consists of the following major operations:

1) Reconstruct solution distributions from cell-averaged state variables;

2) Compute the Riemann fluxes at face quadrature points;

3) March one time step.

Linear reconstructions need at least face-neighbor solution data, as shown in Fig.3, and high-order reconstructions usually need data from neighbor’s neighbors. For the reconstruction step, the higher the polynomial order, the more the communication penalty. This operation incurs a significant penalty on a GPU because the data required by the reconstruction is scattered all over the place in the data array. Since the mesh is unstructured, the solution data is stored in the memory without any fixed patterns to exploit. Furthermore, to compute the Riemann flux on the face quadrature point, more data communication is needed to obtain the solution from the neighboring element. Any communication carries a penalty to performance. Note that once the residual is obtained, explicit time marching does not involve data communication.

Fig.3 Reconstruction stencil and flux point locations in a finite volume scheme. Different colors indicate discontinuous data in the solution array. The flux always needs data from neighboring elements

In a DG or FR method, on the other hand, the solution data is stored in an element-wise manner. The state variables on an element are stored continuously in the data array, as illustrated in Fig.4. One time-marching step consists of the following major operations:

Fig.4 Reconstruction stencil and flux point locations in a DG/FR method. Same color indicates continuous data in the solution array. The flux always needs data from neighboring elements

1) Evaluate the flux diverge based on the local solution data on each element;

2) Compute the Riemann fluxes at the flux points and correct the local flux divergence;

3) March one time step.

The 1ststep uses completely local data on the element. It involves computing the solution gradients at the solution points, and the solutions at the face flux points. The higher the polynomial degree is, the higher the computational intensity. Communication is only needed when the Riemann flux is evaluated at the face flux points. Only immediate neighbors are included in the stencil no matter how high the polynomial degree is. Once the Riemann flux is computed, the correction operation is again local. This communication pattern gives the discontinuous high-order FE method the best chance in maximizing performance on GPUs. Depending on how data is stored in SUPG, the reconstruction operator may need to access remote data. Therefore, we expect the GPU performance of SUPG and PnPm methods to be between the FV and DG/FR methods.

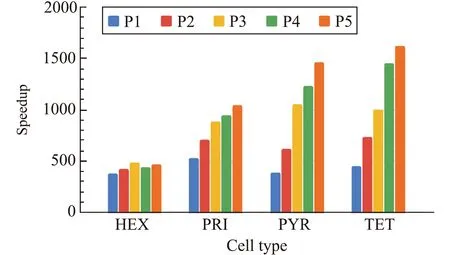

Indeed,recently the FR/CPR solver, hpMusic[24,46], was successfully ported to one of the fastest supercomputers in the world, Summit. On a single NVIDIA V100 card, the speedups of one GPU card over a single Intel Xeon CPU E5-2620 processor for different polynomial degrees and element types are shown in Fig.5. The speedup ranges from 400 to over 1 600. Note the clear trend of increasing GPU performance with increasingporders. This is because of the higher local computational intensity with increasingporders. In addition, the performance reaches the highest on tetrahedral elements because stride-one local memory access is achieved on the local solution array for tetrahedral elements. An astonishing speedup of 1 600 was achieved atp=5 on tetrahedral elements[47].

Fig.5 Speedups of a single NVIDIA V100 card over an Intel Xeon CPU E5-2620 processor for the FR/CPR method running different p orders on four types of elements, in double precision

To deal with shock-waves, the most popular approaches include artificial viscosity[48-50]or with solution limiters[51-53]. Shock-fitting has also seen a new life in the moving discontinuous Galerkin context[54]with promising results for 3D unsteady flow problems. Although progress has been made in shock-capturing, we have not witnessed a major breakthrough. For example, user-specified parameters are still needed in artificial viscosity. Limiters continue to hinder convergence to steady state and can degrade solution accuracy. The vision of robust, parameter free and accuracy-preserving and convergent shock computing has not been realized yet. However, enough progress has been made that high-order LES from transonic to hypersonic speeds has been successfully performed regularly.

Shock-capturing is first order accurate since the position within an element is undecided. For a stationary shock wave, the characteristic wave originating from the shock always carries a first-order error. Based on this argument, strict accuracy-preserving shock-capturing is difficult or impossible to realize. Perhaps shock-fitting is necessary to strictly preserve accuracy away from the shock-wave. Nevertheless, much higher resolution has been obtained with high-order methods than with 2ndorder ones using shock-capturing when shock-waves are present.

4 Time integration

Scale-resolving simulations are expensive because time accurate computations must be performed with a time-step small enough to capture the dominant turbulent dynamics. Such computations need to be sufficient long so that the flow reaches a statistically steady state. To complicate things even further, some turbulent flows appear to oscillate between different states. In this case, the problem may have multiple statistically “steady” states. Finding a time-invariant mean solution is even more challenging in such a situation.

Both explicit and implicit approaches have been used in high-order LES. The advantage of explicit schemes is the ease to implement on parallel computers and its usually excellent parallel efficiency[55-56]. The major disadvantage is that the global time step is limited by the smallest element size due to the CFL condition. This problem is particularly severe for unstructured meshes generated for complex geometries, which often have a few bad elements with diminishing cell volumes. Such bad cells can make explicit schemes prohibitively expensive.

To remedy this problem, time-accurate local time-stepping approaches were developed to overcome the time step limit[57-58,17]. Each element advances in time based on its own time step, and only synchronizes with other elements for post-processing purposes. Orders of magnitude in speedup were demonstrated comparing with a global time stepping approach. The challenge in implementing the time-accurate local time-marching approach is how to maintain load balance in an extreme-scale parallel environment.

The most-often used approach to improve time-marching efficiency is to adopt an implicit approach. There are two major components in an implicit approach which affect the accuracy and efficiency: the time discretization scheme and the numerical algorithm to solve the resulting time-discretized system. The order of accuracy is determined by the time discretization scheme, while the efficiency is mostly dictated by the nonlinear equation solver (or just solver when there is no ambiguity), and the convergence tolerance of the unsteady residual. To illustrate the point, let’s consider the semi-discretized system written as

whereUis the global DOFs, andRes(U) is the residual after space discretization. Various time-marching schemes have been developed for high-order spatial discretizations in the past decade. Popular choices include backward difference formulas (BDF)[59], implicit Runge-Kutta (IRK)[60-62], diagonally implicit Runge-Kutta (DIRK)[63], and its variant, explicit singly diagonally implicit Runge-Kutta (ESDIRK) and implicit-explicit Runge-Kutta[64-66], linearly implicit Rosenbrock-type method[67-68], and high-order space-time method[69]. Recently an IRK scheme called Radau IIA was studied by several researchers because of its high-accuracy, being L-stable, and having fewer stages than other DIRK schemes. However, a fully coupled system of all the stages needs to be inverted. The BDF schemes need to solve one non-linear system per time step, but not L-stable for higher than 2ndorder accuracy. All other schemes need to solve multiple non-linear systems, or a fully coupled nonlinear system at each time step.

Several popular schemes are compared in[70-72]for efficiency and accuracy. To illustrate the two main components, we consider the 2nd-order backward difference formula

In solving (2), we often define the unsteady residual as

Eq. (3) is sometimes solved with a dual-time approach with a pseudo-timeτ[44]defined in

Any iterative solvers for steady-state problems can be used to decrease the unsteady residual by the convergence toleranceεN(Nmeaning the non-linear residual), including explicit local time-marching (in pseudo-time), hp-multigrid solvers or implicit Newton-like solvers. Let the initial guess forUn+1be the solution at the previous time step,Un. The unsteady residual is considered converged if

This convergence tolerance does indeed influence efficiency and accuracy. Through a comparative study in[72], it was shown a two-order convergence (εN=0.01) is sufficient in capturing both the mean flow quantities such as mean pressure and skin-friction and the power spectral density, which highlights turbulence dynamics.

The well-known Newton’s method can be used to solve (3), i.e.,

wherekis the iteration index, and ΔU=U(k+1)-U(k).Normally multiple Newton steps are necessary to satisfy (5). This Newton loop is sometimes called the outer loop. The linear equation (6) is a large sparse system, which is again solved using an iterative solver such as Gauss-Seidel, or the Jacobian-free Newton-Krylov (JFNK) method[73]with various preconditioners, e.g., ILU(n), element Jacobi, line[74],p-multigrid, or no preconditioner. The iterative loop for this linear equation is called the inner loop with a convergence tolerance ofεL. The inner loop usually does not need to converge very far (withεL~0.1) as long as the outer loop satisfies the convergence tolerance.

The inclusion of the unsteady term with a small time-step to capture the turbulence makes the non-linear system less stiff than the corresponding steady system. As an alternative to this dual loop approach, the non-linear LU-SGS approach[75-76]avoids the dual loop by directly updating and converging the unsteady residual in a single loop. Let the update equation for elementibe

whereDiis the element Jacobian matrix,U(*)represents the latest global solution. Symmetric forward and backward Gauss-Seidel loops over the elements are then used to solve (7) until the unsteady residual satisfies (5). The requirement to store the element Jacobian matrix makes the LU-SGS approach unattractive for higherp>3, as the size of this matrix goes up according top6. In addition, LU-SGS is not a very strong implicit solver, and the time step size is often smaller than the one dictated by flow physics.

For steady flow computations, a JFNK approach needs a good preconditioner to converge. The most used preconditioners are usually based on the Jacobian matrix, for example, the incomplete LU decomposition with various levels of fill-in, ILU(n), or the element Jacobi preconditioner. Unfortunately, for DG-type methods at higherporders >3, matrix-based preconditioners are out of the questions. Other preconditioners such as p-multigrid have been developed, and demonstrated various levels of success[77-79]. Fortunately, weaker-preconditioners which do not need to store the large Jacobian matrix such as p-multigrid have a chance to perform well. The search for low-memory and efficient time integration is still ongoing.

5 Solution-based hp-adaptations

Various adaptation criteria have been used for mesh adaptations in CFD[80-81]. There are four main types of error indicators in the literature[82].

1) Feature based indicators. Such indicators use the gradient, curvature or smoothness of certain flow variables. They can be directional to enable anisotropic mesh adaptations.

2) Solution error or discretization error based indicators. To apply this indicator, the solution error needs to be estimated. Since it is the discretization error that we wish to reduce with mesh adaptation, on the surface it might appear the discretization error would serve as an appropriate driver for the adaption process. Reference[82]showed discretization error is not an appropriate mesh adaption criterion.

3) Truncation error or residual based indicators. For complex non-linear equations, the truncation error is not easy to compute. Instead, the numerical solutions or residuals on coarse and fine meshes or with different polynomial orders are used to approximate the truncation error. Furthermore, the transport of the discretization error is driven by the local truncation error[83].

4) Output adjoint based indicators[84]. Adjoint-based error indicators relate a specific functional output directly to the local residuals by the adjoint solution, which can be used to construct a very effective error indicator to drive an adaptive procedure towards any engineering output. Such indicators can target elements which contribute the most to the output, such as the lift or drag forces.

For steady RANS flow computations, output adjoint based mesh adaptation approaches appear to be the most efficient[85]in obtaining mesh-independent lift, drag and moment coefficients. In addition, they can also provide a reasonable error estimate, which can further increase the accuracy. Comparisons with fixed grid approaches indicate that orders of magnitude in CPU time can be saved to achieve the same accuracy[85-86].

For unsteady flow problems, however,a similar output-adjoint approach is very expensive because an extra dimension in time needs to be traversed. For example, if the drag at a final time needs to be predicted, an unsteady output adjoint problem needs to be solved in reverse time. In addition, the mesh needs to be adapted at different times to produce the most accurate output[87]. Furthermore, for turbulent flow problems the instantaneous quantities are usually very irregular and chaotic[88]. Design engineers are interested in mean quantities, such as the mean lift, drag and moment coefficients. The mean quantities can take many time steps to achieve convergence in time averaging. All these challenges make output adjoint-based adaptation impractical for LES.

Instead,physics-based adaptation criteria have been developed for LES[89-92], e.g., solution features (steep gradients or curvature), smoothness of the numerical solution either within an element or cross element boundaries, truncation errors, the smallest resolved scales. Instead of mesh adaptations, several papers used p-adaptation to increase the resolution[89,93-95]and achieved significant cost-savings.

In particular, an adaptation criterion based on the smallest resolved scales in any coordinate direction is developed in Ref.[96]. The criterion allows a directional indicator in any direction to enable anisotropic mesh adaptations for mixed unstructured meshes. Further-more, by equalizing error distributions in all coordinate directions, an “optimal” computational mesh can be obtained, which is capable of producing a LES solution of a given resolution with minimum cost. Promising results have been demonstrated for hexahedral meshes. The basic idea of the approach is reviewed here.

Let the LES solution at elementibeUi. An implicit low pass test filter.^in 1D is defined as

whereΔis the test filter size, which can be the same as the element size. The implicit filter can be approximated by the following explicit filter

After that, the smallest resolved scales are extracted as

This idea can be generalized to compute the smallest resolved scales in any direction of unit vectorn

∇∇TUi)n

(11)

Finally the anisotropic error indicator in directionnis defined as

where (·,·) denotes an inner product, while 〈·〉 indicates time or phase averaging. With this adaptation criterion, nearly “optimal” LES meshes have been obtained for well-known benchmark test cases[96]. Figure 6 shows an “optimal” mesh for the backward facing problem.

Fig.6 “Optimal” LES mesh obtained for flow over a backward facing step by Toosi and Larsson[96] (image courtesy of Johan Larsson)

6 Conclusions and outlook

Significant progress has been made in the past decade in advancing LES as a simulation tool for mission critical applications in industry. In fact, a team in France just accomplished an important milestone: simulating an entire jet engine operation using LES[97]with 20 billion grid points. At present, such computations can only be accomplished on some of the biggest computers owned by government agencies, and the cost is prohibitive for industrial use. However, at least in turbomachinery, the progress has dramatically increased the use of LES as an analysis tool. Scale-resolving simulations which took months to compute on a small cluster only 5 years ago can now be completed in just a few days.

In the next decade, I believe overnight turnaround time can be achieved on small GPU clusters (~100 GPU cards) for problems of moderate Reynolds numbers (a few million) encountered in turbomachinery. It is expected that mixed CPU, GPU and vector accelerators will continue to be the main computer architecture in the not too distant future. Further progress in the following pacing items will have the highest payoffs:

1) Low memory efficient time integration approaches for higher p orders. P-multigrid, JFNY approaches with dual time stepping are strong candidates. Perhaps new ideas will emerge which will significantly improve the current state-of-the-art.

2) Efficient implementation on mixed CPU/GPU/accelerator clusters[23,98]. Such architectures (such as GPU cards) have limited amount of memory, and cannot store even the element Jacobi matrices from high-order finite elements methods. Progress made in 1 can significantly influence the performance of large scale simulations.

3) The development of non-equilibrium wall models for high Reynolds number flows. Wall-resolved simulations at such Reynolds numbers are still prohibitively expensive. New ideas in wall-modeling including machine-learning may spur significant progress, especially for highly separated flow. In the past few years, many equilibrium wall-models have been developed and demonstrated[99-101]. These need to be extended to the non-equilibrium flow regime.

4) Improvement in the robustness of high-order methods, which include shock-capturing and improved non-linear stability for under-resolved flow features. Ideas such as entropy stability, kinetic energy and entropy preserving schemes[21,102-103]have already shown promise, and will continue to play an important role.

5) Multi-physics and multi-disciplinary LES. The resolved scales embedded in a LES solution can significantly affect other physical phenomenon such as combustion and fluid structure interactions since fluids mechanics, chemical reaction and structural mechanics are all tightly coupled. Flow simulations with moving boundaries will also mature for industrial applications. It is only natural that more and more physics will be considered in high-fidelity LES with high-order methods.

Acknowledgements:The author gratefully acknowledges the support from AFOSR under grant FA9550-20-1-0315 with Dr. Fariba Fahroo being the Program Manager.