Adaptive level of autonomy for human-UAVs collaborative surveillance using situated fuzzy cognitive maps

2020-12-09

Zhe ZHAO, Yifeng NIU, Lincheng SHEN

College of Intelligence Science and Technology, National University of Defense Technology, Changsha 410073, China


Abstract Collaborating with a squad of Unmanned Aerial Vehicles (UAVs) in a cooperative surveillance task is challenging for a human operator. In this paper, we propose a cognitive model that dynamically adjusts the Levels of Autonomy (LOA) of the human-UAVs team according to changes in task complexity and in the operator's cognitive state. Specifically, we use the Situated Fuzzy Cognitive Map (SiFCM) to model the relations among tasks, situations, human states and LOA. A recurrent structure is used to learn the strategy for adjusting the LOA, while the collaboration task is separated into a perception routine and a control routine. Experimental results show that the workload of the human operator is well balanced against task efficiency.

1. Introduction

Unmanned Aerial Vehicles (UAVs) have been deployed in military and civil applications, including but not limited to counter-terrorism activities, forest-fire prevention and agricultural chemical spraying.1–3 In search-and-rescue operations, UAVs play an important role in surveying disaster areas that are hard for humans to reach. However, given its limited payload capacity and endurance, a single UAV can hardly accomplish complicated missions. A squad of UAVs has been shown to achieve higher performance than a single UAV in tasks such as multi-target tracking.4,5 In this paper, we mainly deal with collaborative multi-target surveillance in a complex environment, carried out by a single human operator working with a squad of UAVs.

Due to the differences in task-oriented cognitive capabilities between humans and UAVs, collaborating with a team of UAVs remains a challenge. Cognitive human–machine interfaces and interactions can incorporate human factors engineering by introducing adaptive functionalities into the design of the operator's command, control and display functions.6 However, the UAVs in such systems lack initiative. Johnson et al. present a “Coactive Design” concept that translates high-level teamwork into interdependence between humans and robots and enables robots to fulfill their envisioned role as teammates.7 Strenzke and Schulte apply mixed-initiative planning to bring together cognitive skills so as to cooperatively execute a mission planning task without decomposing it in advance.8 Their work focuses mainly on task planning, which is not comprehensive enough to bridge the gap between the cognition of humans and that of UAVs. Chen et al. present a human/unmanned-aerial-vehicle collaborative decision-making mechanism with limited intervention, based on the combination of the Agent-based Fuzzy Cognitive Map (ABFCM) and the Dynamic Fuzzy Cognitive Map (DFCM).9 Their mechanism lacks learning ability, so the biases of the experts cannot be corrected with historical data.

Situation Awareness (SA) is a cognitive theory of human decision making in complex, dynamic environments, especially in human-controlled and -monitored mobile systems.10 Mental models are the main carriers for integrating SA into intelligent systems.11 For example, Kokar and Endsley present a cognitively plausible model, the SA-FCM model, to analyze the SA of a mission.12 The Fuzzy Cognitive Map (FCM) has been used in many cases to deal with highly dynamic and uncertain complex systems.4 The SA-FCM has two submaps, i.e., the goal submap and the SA requirements network. The goal submap is a qualitative model, whereas the FCM is a strong soft computing method with adaptive ability.13–15 Moreover, the SA requirements network contains no feedback to model the system dynamics. In this paper, we improve the SA-FCM model by designing a learning mechanism that makes full use of network feedback, based on the structural characteristics of the FCM.

Autonomy has been considered an extension of machine automation because it involves higher levels of cognitive capability to solve goal-directed tasks.16 The Level of Autonomy (LOA) has been used as a measure of machine autonomy in tasks such as collaborative target recognition,17 shared control18 and mixed-initiative task planning.19 A higher LOA typically indicates a higher cognitive competence of the machine. However, full autonomy is not only difficult to realize, but also undesirable with regard to ethical issues.20 There have been many definitions of the LOA. For example, according to the definition of the U.S. Air Force Research Laboratory, the autonomous control capability of UAVs can be divided into 10 levels, from a single remotely guided UAV to fully autonomous swarms.21 The Autonomous Control Level (ACL) maps 11 levels of autonomy onto the 4 descriptors represented by the steps of the Observe-Orient-Decide-Act (OODA) loop.22 However, as pointed out by Johnson,23 these definitions do not provide any implementation guidelines for solving actual human-UAV collaboration tasks. In this paper, we propose a new definition for designing human-UAVs teamwork in surveillance missions.

Further research has been done on contextual LOA, which has several axes and scales. For instance, the LOA of Mobility, Acquisition, and Protection (MAP) defined by Los Alamos National Laboratory has 3 metrics and 6 levels,24 and the Draper 3D Intelligence Space has 3 metrics, each with 4 levels.25 However, these versions of contextual LOA are not designed for human control of unmanned systems such as UAVs. As an alternative, Autonomy Levels for Unmanned Systems (ALFUS) can quantify the contextual LOA for adaptive control of unmanned systems.26 Nevertheless, supporting collaborative decision making within the ALFUS framework remains an open problem.

In addition, adjustable LOA can potentially enhance the human operator's task efficiency, and thus improve the safety and efficiency of operations.27 Adjustable autonomy divides the work between the human operator and the agents to manage the performance of the human-agents team in real time.28 Adjustable autonomy has been shown to allocate workload, improve performance, and enhance situation awareness. However, it remains a challenge to deal with both subjective and objective situations during human-UAVs cooperation. Besides, it is more challenging for a human to cooperate with a squad of distributed UAVs than with a centralized team, because the LOA of each UAV may change independently.

The main contributions of this paper are as follows. We propose a cognitive model named Situated FCM (SiFCM) that can adaptively adjust the LOA of human-UAVs systems within the SA and ALFUS frameworks. With its hierarchical and recurrent structure, the SiFCM can predict future LOA decisions, and its parameters can be learned with the Truncated Back-Propagation Through Time (TBPTT) algorithm. We also compare centralized adaptive LOA with distributed adaptive LOA, in which each UAV has its own cognitive model and adjusts its LOA accordingly.

The rest of the paper is organized as follows. In Section 2, we formulate the autonomy adaptation problem. In Section 3, the LOA metric of human-UAVs cooperative surveillance is proposed. The structure of the cognitive model and the fundamentals of the FCM are introduced in Section 4. In Section 5, the details of the SiFCM are presented, and its learning ability is verified. In Section 6, three simulation experiments are used to illustrate the effectiveness of the proposed method. In Section 7, the results of the simulation experiments are shown. In Section 8, the effectiveness of our human-UAVs cooperative method is discussed. In Section 9, we conclude our work and outline future work.

2. Problem statement and motivation

In our problem, a single human operator collaborates with a squad of UAVs in multi-target surveillance in complex environments. More specifically, the targets pop up randomly within the search areas, and a team of UAVs is sent to the areas to allocate and identify the targets. Our goal is to identify as many targets as possible within a limited time. A possible objective function of human-UAVs collaborative performance is:

The values of $w_h$ and $w_{UAV}$ are determined by the dynamic LOA of the UAVs. In a centralized UAV team, all the UAVs change their LOAs synchronously, i.e., $w_1=w_2=\dots=w_l$. If the UAVs are distributed, their LOAs can be adjusted asynchronously, and the weights of different UAVs may differ.

where $\Lambda$ is the operator utilization, $X$ is the cognitive consumption of the operator, and $\chi$ is the penalty caused by the loss of SA. When the operator is over- or under-utilized, $\chi$ increases and the performance decreases. $\chi$ can be adjusted by tuning $w_k$ in Eq. (1).

As shown in Fig. 1, the operator utilization should be maintained in interval I to maximize the situation awareness of the human-UAVs team. In this article, we propose an adaptive LOA method to optimize the efficiency of the human-UAVs team during multi-target surveillance. Each LOA has specific properties that define a UAV's authority over operations. The adaptive LOA varies with the situation, as shown in Fig. 2, to maintain the situation awareness of the human operator and the UAV agents. Team-level situation awareness helps the operator and the UAVs interpret information in the same way and make accurate projections regarding each other's state.

The motivation of this paper is to optimize the objective in Eq. (1) through adaptive LOA, a human-UAVs collaborative method in which the human operator and the UAVs reshape their cognitive ranges as the situation varies during the mission. When the LOA rises adaptively, the UAVs take over more work from the human operator and $w_k$ in Eq. (1) increases, and vice versa. The adaptive LOA may prevent the operator from being overwhelmed by information when the task is complex, or from becoming absent-minded when the task is monotonous.

Collaboration requires mutual intelligibility between the operator and the UAVs. To achieve common goals, the operator and the UAVs need a shared understanding of the situation. The cognitive model imitates the perception, information processing and reasoning of the human operator, which makes the decision-making process of the UAV more convincing. Specifically, the cognitive model needs to provide the following features to realize the adaptive LOA:

Fig.1 Operator utilization.

Fig.2 Adaptive LOA.

(1) Data integration management.

The situation information usually comes from different sources and thus has to be fused for a decision. In reactive agent architectures, the decision-making process merely selects relevant stimuli from the current data, so such models do not provide intelligent analysis of the data. The cognitive model should incorporate human cognition into data fusion. For example, the cognitive model can process data deliberately and learn how future states evolve.

(2) Long- and short-term memory.

Memory stores knowledge, and it also enables learning and adaptation to a changing environment. In a cognitive model, memory is described in terms of its duration (short- and long-term). Long-term memory stores the innate knowledge that enables system operation, so it is usually referred to as a knowledge base of facts and problem-solving rules. Short-term memory maintains knowledge that is up to date and acquired at the right time; hence it requires the capability of learning to improve the accuracy of the knowledge.

(3) Regulation of decision making processes with a loop.

For UAV decision making, feedback from the environment should be collected. The top-down process focuses on perceiving the situation, comprehending the information, and making appropriate decisions. The bottom-up predictive process can include shifts among possible actions and forecasts of their effects.

To fulfill the demands above, we need a well-defined, highly organized yet dynamic knowledge structure developed over time from experience. The Fuzzy Cognitive Map (FCM) is a graph-based knowledge representation in which nodes represent concepts and the weights assigned to edges represent the associations between concepts. Although the FCM does not have separate representations for different kinds of knowledge or for short- versus long-term memory, it uses a unified structure to store all information in the system: the long-term memory corresponds to the initial FCM designed from the fuzzy rules of the system, and the short-term memory corresponds to the tuning of the weights by learning. The FCM thus provides a way to structure knowledge according to accepted cognitive processes such as perception, comprehension and projection. As a result, the operator utilization can be maintained within interval I in Fig. 1.

3. LOA metric of human-UAVs cooperative surveillance

There is still a long way to go before full autonomy is achieved on UAVs. Therefore, “human in the loop” is still the main method for human-UAVs cooperation, in which the UAVs adjust their LOA to keep a balance between human-controlled and autonomous behaviors. Two issues are connected with the LOA of human-UAVs cooperative surveillance:

(1) Which functionalities of the UAVs need to be autonomous in the human-UAVs cooperative surveillance mission?

(2) Which level of autonomy is required for these functionalities?

To answer these questions, we develop a mission-specific definition of the LOA and a quantitative metric of the LOA.

3.1. LOA of human-UAVs cooperative surveillance

Human-UAVs collaborative surveillance requires a variety of autonomous capabilities, for example, energy management and self-protection, but searching and identification are the most representative features of the problem. A UAV team with high autonomy can compute optimal search schemes and optimal trajectories, while identification involves multi-sensor fusion, image processing, pattern recognition, etc.

Therefore, we develop a contextual definition of LOA with two scales. The scale of searching is related to the autonomous functions of target allocation and trajectory planning. The scale of identification indicates the autonomous functions of perception and target recognition. Each scale is defined by 4 levels, and the autonomous capabilities increase with the level.

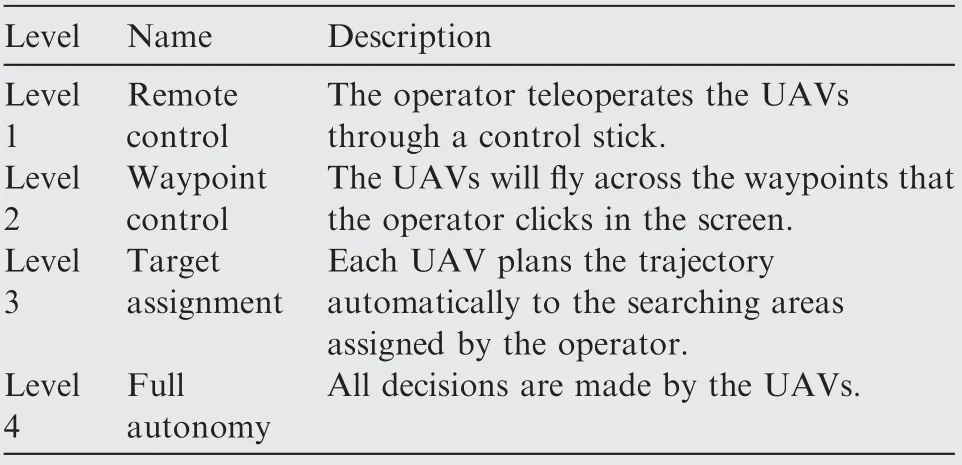

The LOA of trajectory planning is shown in Table 1. Level 1 is remote control, where the operator teleoperates the UAVs through a control stick. At Level 2, the operator clicks waypoints for the UAV on the screen, and the UAV flies through the waypoints. At Level 3, the operator assigns the search areas to each UAV sequentially, and the UAVs plan their trajectories automatically. At Level 4, i.e., under full autonomy, the UAVs are autonomously allocated to the search areas and plan their trajectories.

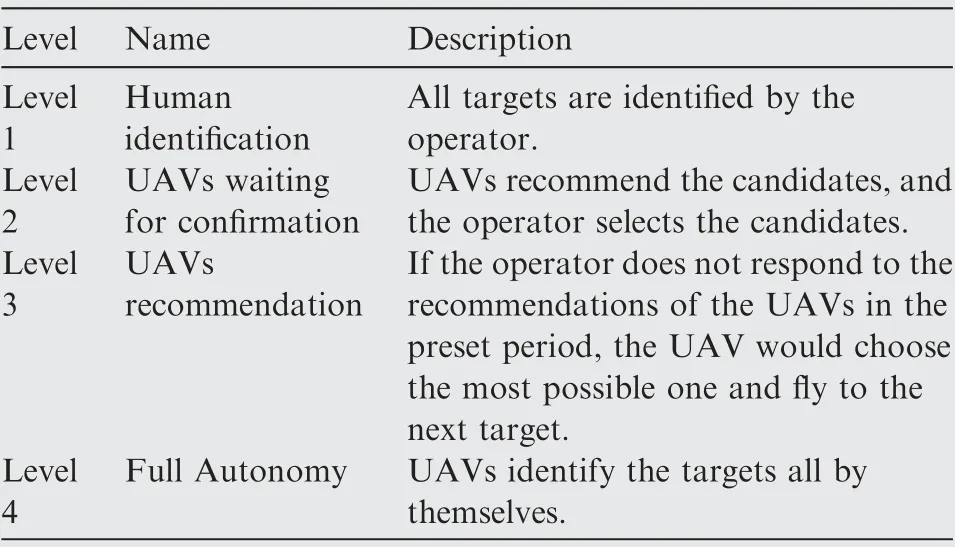

Similar to the LOA of perception,31 the second metric is also defined by 4 levels, as illustrated in Table 2. At Level 1, the UAVs transmit real-time surveillance videos to the control station, while the operator looks for the targets without assistance. At Level 2, suspicious targets are framed, and the operator determines which is the true target. At Level 3, the targets are framed and await the operator's decision; if the operator does not make a decision within the preset period, the UAV selects the most probable candidate as the target. At Level 4, the UAVs identify all the targets by themselves.
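The identification levels above, in particular the Level 3 rule in which the operator's decision takes precedence but the UAV falls back to its most probable candidate after a preset period, can be sketched as a small decision function. This is a minimal Python sketch under stated assumptions: the function name `resolve_target`, the candidate-confidence dictionary and the timeout arguments are hypothetical and not part of the described system.

```python
from typing import Dict, Optional


def resolve_target(level: int, candidates: Dict[str, float],
                   operator_choice: Optional[str],
                   elapsed_s: float, timeout_s: float) -> Optional[str]:
    """Return the target chosen for one identification request, or None if pending.

    candidates maps hypothetical candidate IDs to recognition confidences;
    operator_choice is None while the operator has not decided.
    """
    if level not in (1, 2, 3, 4):
        raise ValueError("identification LOA must be 1, 2, 3 or 4")
    most_probable = max(candidates, key=candidates.get) if candidates else None
    if level in (1, 2):
        # Levels 1-2: only the operator decides (Level 2 merely frames suspects).
        return operator_choice
    if level == 3:
        # Level 3: the operator's decision wins; once the preset period has
        # elapsed without one, the UAV falls back to its most probable candidate.
        if operator_choice is not None:
            return operator_choice
        return most_probable if elapsed_s >= timeout_s else None
    # Level 4: the UAV identifies the target by itself.
    return most_probable


c = {"t1": 0.2, "t2": 0.7, "t3": 0.1}
print(resolve_target(3, c, None, elapsed_s=12.0, timeout_s=10.0))  # t2
```

Returning None for a pending request keeps the waiting state explicit, so a caller can poll the function until the operator answers or the preset period expires.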

Table 1 LOA metric of human-UAVs cooperative searching.

3.2. Quantitative LOA adjustment metric

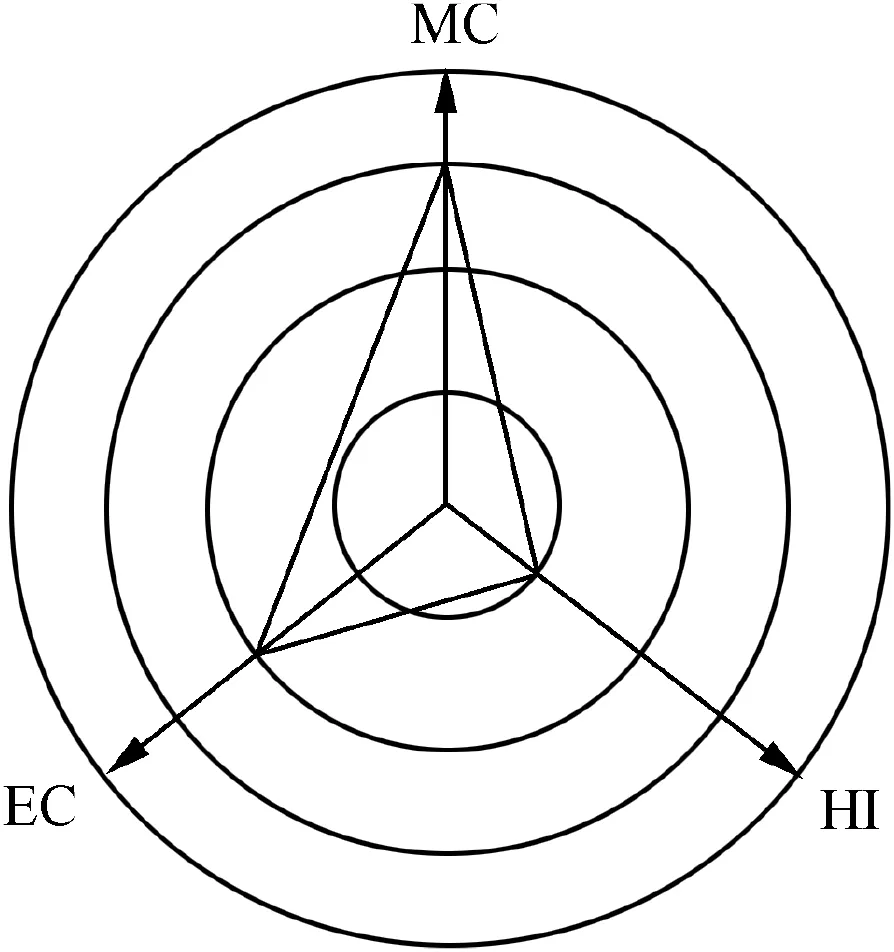

To realize the adaptive LOA, we need to characterize the LOA of UAVs quantitatively according to the autonomy required for task execution. As shown in Fig. 3, the LOA metric of Autonomy Levels For Unmanned Systems (ALFUS) is used to adjust the LOA. ALFUS is a general framework for autonomy levels with three axes: Human Independence (HI), Environment Complexity (EC), and Mission Complexity (MC). Each axis is implemented in the cognitive model to facilitate the dynamic analysis and measurement of the LOA.

(1) HI indicates the degree of independence from the human operator that is allowed in the performance of the missions.

(2) EC indicates the complexity of environments in which the missions are performed.

(3) MC relates to the missions that the system is capable of performing.

The ALFUS considers not only the subjective factors but also the objective factors of human-UAVs cooperation. After the LOA is determined by ALFUS, the corresponding autonomous functionalities of the UAVs are chosen to execute the mission.

4. Cognitive model of adaptive LOA

We develop a cognitive model of the adaptive LOA that integrates SA and ALFUS through the FCM. A cognitive model can be described as a set of well-defined, highly organized yet dynamic knowledge structures developed over time from experience.32 Since the performances of the human operator and the UAVs are highly nonlinear and uncertain, the cognitive model provides a computational method for deciding on the optimal LOA during mission execution.

Table 2 LOA metric of human-UAVs cooperative identification.

Fig.3 LOA metric of ALFUS.

As shown in Fig. 4, our cognitive model integrates the knowledge structure named SiFCM to guide the adaptive LOA. An advantage of modeling SA with an FCM is that it allows higher-level cognitive processes to be expressed explicitly. First, the situation is fuzzified and input into the cognitive model. Second, the information is fed to the SiFCM, which performs the cognitive processes. Third, the SiFCM decides on the appropriate LOA. Finally, the UAVs vary their action ranges to cooperate with the operator.

The Fuzzy Cognitive Map (FCM) is a well-established computational intelligence method that combines fuzzy logic and neural networks. With fuzzy values and recurrent edges, the FCM can be used for knowledge representation and dynamic causal reasoning. Meanwhile, thanks to its recurrent structure, the weights of the FCM can be trained from historical data.

Fig.4 Cognitive model of adaptive LOA.

Typically, the FCM is a directed weighted graph given by the three-tuple G(C, W, f), where C, W and f denote the values of the concept nodes, the weights of the directed edges, and the activation function, respectively. The SiFCM sample shown in Fig. 4 includes 6 nodes. C = [C1, C2, ..., C6] is the value vector of the nodes. The value of each node is normalized in [0, 1] and denotes its activation degree. W is the weight matrix of the graph G, composed of all the weights:

$$W=\begin{bmatrix} w_{11} & w_{12} & \cdots & w_{16}\\ w_{21} & w_{22} & \cdots & w_{26}\\ \vdots & \vdots & \ddots & \vdots\\ w_{61} & w_{62} & \cdots & w_{66} \end{bmatrix}$$

The elements of W represent the weights of the edges in the FCM. The sign (+ or –) of a weight indicates whether the effect is promoting or inhibiting. The weights range over [–1, 1]. If there is no edge between two nodes, the weight is 0.
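As a minimal sketch of the weight-matrix conventions just described, the following Python snippet (using NumPy) assembles W from a list of edges and rejects weights outside [–1, 1]. The helper name `build_weight_matrix`, the edge list and the direction convention (W[i, j] is the edge from concept i to concept j) are assumptions made for illustration.

```python
import numpy as np


def build_weight_matrix(n_concepts, edges):
    """Assemble an FCM weight matrix from (source, target, weight) triples.

    W[i, j] is the weight of the edge from concept i to concept j (0-based);
    pairs without an edge keep the weight 0.
    """
    W = np.zeros((n_concepts, n_concepts))
    for src, dst, w in edges:
        if not -1.0 <= w <= 1.0:
            raise ValueError(f"weight {w} on edge {src}->{dst} is outside [-1, 1]")
        W[src, dst] = w
    return W


# A 6-concept map like the sample in Fig. 4, with hypothetical edges:
# positive weights promote the target concept, negative ones inhibit it.
W = build_weight_matrix(6, [(0, 4, 0.6), (1, 4, -0.3), (2, 5, 0.5), (4, 5, 0.8)])
print(W[0, 4], W[1, 4], W[3, 2])  # 0.6 -0.3 0.0
```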

The transformation function f is an activation function of the kind used in neural networks, for example, the sigmoid function $f(x)=\frac{1}{1+e^{-x}}$.

The initial FCM is constructed by experts. First, the experts determine the concepts of the system according to their own knowledge. Second, the experts weight the edges using a set of linguistic terms expressed as semantic symbols, such as Very Small (VS), Small (S), Medium (M), Large (L), and Very Large (VL). For instance, when C1 has a strong influence on C2, the edge is assigned a term expressing strong influence, such as VL. The linguistic variables are then translated into numeric values.

After every expert constructs his/her own FCM, all the FCMs are synthesized. The linguistic terms are integrated using a sum combination method, and the Center of Gravity (CoG) defuzzification method produces a weight value in the interval [–1, 1]. To account for credibility, each expert m is given a belief $b_m$.
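To illustrate the sum combination and CoG defuzzification described above, the sketch below fuses several experts' linguistic opinions on a single edge into one crisp weight. The triangular membership functions for VS to VL, the scaling of each membership function by the expert belief $b_m$, and the mirroring of negative influences onto [–1, 0] are assumptions made for this example; they are not necessarily the exact fuzzy sets or the exact form of Eq. (4).

```python
import numpy as np

# Hypothetical triangular fuzzy sets (a, b, c) for the influence magnitude in [0, 1].
TERMS = {
    "VS": (0.0, 0.0, 0.25),
    "S": (0.0, 0.25, 0.5),
    "M": (0.25, 0.5, 0.75),
    "L": (0.5, 0.75, 1.0),
    "VL": (0.75, 1.0, 1.0),
}


def triangle(x, a, b, c):
    """Triangular membership function; a == b or b == c gives a shoulder."""
    left = np.ones_like(x) if b == a else (x - a) / (b - a)
    right = np.ones_like(x) if c == b else (c - x) / (c - b)
    mu = np.clip(np.minimum(left, right), 0.0, 1.0)
    return np.where((x >= a) & (x <= c), mu, 0.0)


def combine_edge(opinions, n_grid=2001):
    """Fuse expert opinions on one edge into a crisp weight in [-1, 1].

    opinions: list of (sign, term, belief), with sign = +1 or -1.
    """
    x = np.linspace(-1.0, 1.0, n_grid)
    aggregated = np.zeros_like(x)
    for sign, term, belief in opinions:
        # Sum combination: add each belief-scaled membership function;
        # a negative influence mirrors the term onto [-1, 0].
        aggregated += belief * triangle(sign * x, *TERMS[term])
    if aggregated.sum() == 0.0:
        return 0.0
    # Center of Gravity defuzzification on the uniform grid.
    return float((x * aggregated).sum() / aggregated.sum())


print(round(combine_edge([(+1, "M", 0.8)]), 3))  # 0.5
print(round(combine_edge([(+1, "L", 0.6), (+1, "VL", 0.4)]), 2))
```

Summing the scaled membership functions before defuzzifying lets a more credible expert pull the centroid toward his/her term, while opposing opinions of equal belief cancel out to a weight near 0.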

Eq. (4) shows how the expert FCMs are combined. The inference process of the FCM is:

Since the FCM is constructed by experts, their biases and insufficient understanding sometimes make the FCM inaccurate. Historical data objectively reflect the true behavior of a system, so the FCM can be corrected by learning from historical data.
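The FCM inference process described in this section can be sketched as a fixed-point iteration. This minimal NumPy sketch assumes one common update rule, $C_j(t+1)=f\big(\sum_i w_{ij}C_i(t)+C_j(t)\big)$ with the sigmoid f, where the self-memory term $C_j(t)$ can be switched off; the paper's own inference equation may differ in detail, and the function name, tolerance and iteration cap are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def fcm_infer(W, c0, max_iter=100, tol=1e-5, self_memory=True):
    """Iterate the FCM until the concept values settle.

    Assumed update rule: C_j(t+1) = f(sum_i w_ij C_i(t) [+ C_j(t)]), where
    W[i, j] is the weight from concept i to concept j and the bracketed
    self-memory term is optional. Returns (concept vector, iterations run).
    """
    c = np.asarray(c0, dtype=float)
    for k in range(1, max_iter + 1):
        z = c @ W
        if self_memory:
            z = z + c
        c_next = sigmoid(z)
        if np.max(np.abs(c_next - c)) < tol:
            return c_next, k
        c = c_next
    return c, max_iter


W = np.array([[0.0, 0.7, 0.0],
              [0.0, 0.0, -0.5],
              [0.4, 0.0, 0.0]])
state, steps = fcm_infer(W, [0.8, 0.2, 0.5])
print(state.round(3), steps)
```

The iteration stops when no concept changes by more than `tol`; an FCM may also oscillate, which is why the loop is capped at `max_iter`.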

5. SiFCM

In this section, we elaborate on an FCM-based knowledge structure named SiFCM to implement the adaptive LOA. A specific SiFCM is constructed to solve the problem of human-UAVs collaborative surveillance, and the learning ability of the SiFCM is verified.

5.1. Structure of SiFCM

The SiFCM integrates SA with the FCM into the UAV's internal representation of the world, as shown in Fig. 5. SA is a theory about human decision making in complex and dynamic environments, especially in human-controlled and -monitored mobile systems. It supports a better understanding of the situations humans face and of their decision-making tasks. In SA theory, the decision-making process is graded into three cognitive levels: perception, comprehension and projection. Perception means collecting information from the external environment; comprehension refers to decision making based on the collected information and the agents' knowledge, and action in line with those decisions; projection means projecting the current decision onto the future environment to make better decisions.

5.1.1. Perception level

Accurate perception of the situation is achieved at the perception level. Here the SiFCM-based cognitive model monitors the operator's state, senses the working environment of the UAVs, and supervises task processing.

(1) Operator state

There are various psycho-physiological parameters that can reflect the operator's state. Brain-activity-based workload indices rely on the principle that activity in different brain regions changes dynamically as cognitive tasks of different difficulty are performed. For example, Electroencephalography (EEG) records the electrical activity of the brain, and functional Near-Infrared Spectroscopy (fNIRS) records local hemodynamic changes caused by neural activity. However, due to technological limitations, users of EEG and fNIRS systems are often tethered by wires and fibers. Recently, smart body sensors have become available on the consumer market, and a growing number of researchers suggest that body sensors will become even more lightweight and unobtrusive in the future. These smart devices provide us with a superior substitute for EEG and fNIRS devices.

Fig.5 Structure of SiFCM.

Eye trackers record the characteristics of human eye movements during visual information processing, from which data such as gaze points, fixation duration and count, saccade distance and pupil size can be extracted. Eye movements reflect how visual information is selected, which is of great significance for revealing the psychological mechanism of cognitive processing. For example, if the mental workload of an operator increases, the fixation time and pupil diameter increase.33

The smart band is a wearable wristband that records real-time data in daily life. Many sensors are integrated into a smart band, including an optical heart rate sensor, an ambient light sensor, a skin temperature sensor and a galvanic skin response sensor. The measured values can be uploaded wirelessly via Bluetooth. These physical indexes are valuable for measuring the operator state. For instance, it has been found that the Heart Rate (HR) increases with negative emotions but decreases during rest. The skin temperature increases along with the stress of the operator and decreases during relaxation.

(2) Operation environment.

The operation environment of UAVs has a significant impact on the computational burden of the system. For example, the processing load of navigating in a sparsely occupied environment differs distinctly from that in a heavily cluttered environment. The environment can be categorized into static elements such as terrain, buildings and static radars, and dynamic elements such as traffic, climate and dynamic obstacles.

For collaborative searching and identification, environment information is detected and delivered to the operator by wireless image transmission. In this case, environment complexity refers to the inherent difficulty of finding or extracting a real target in a given image. Image entropy is a statistical feature of an image that reflects the average amount of information it contains; increasing entropy values indicate a higher degree of complexity.34

The one-dimensional entropy of the image is:

$$H=-\sum_{o=0}^{255} p_o \log_2 p_o$$

where H is the entropy of the image, $p_o$ is the probability of gray value o in the whole image, and o ranges over the 256 gray levels 0 to 255.

Moreover, the local entropy of the image considers the complexity contained in the neighborhood of each pixel:

$$H=-\sum_{q=0}^{255}\sum_{r=0}^{255} p_{qr} \log_2 p_{qr}$$

where $p_{qr}$ is the probability that gray values q and r emerge as a pair in the whole image, obtained by normalizing g(q, r), the frequency of the pixel pair (q, r), and q and r range over the 256 gray levels of the image.
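Both entropy measures can be computed directly from gray-level histograms. The NumPy sketch below is illustrative only: it assumes 8-bit images and, for the local entropy, takes r to be the mean gray value of each pixel's 3×3 neighbourhood, a common choice for two-dimensional image entropy that is an assumption here. Logarithms are base 2, so the entropies are in bits.

```python
import numpy as np


def entropy_1d(img):
    """One-dimensional entropy (bits) of an 8-bit grayscale image."""
    counts = np.bincount(img.ravel(), minlength=256)  # histogram over gray levels 0..255
    p = counts / counts.sum()
    p = p[p > 0]  # terms with p = 0 contribute nothing
    return float(np.sum(p * np.log2(1.0 / p)))  # equals -sum p log2 p


def local_entropy(img):
    """Two-dimensional entropy over (gray value, 3x3 neighbourhood mean) pairs."""
    img = img.astype(np.int64)
    h, w = img.shape
    padded = np.pad(img, 1, mode="edge")
    window_sum = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))
    r = window_sum // 9  # neighbourhood mean gray value
    g = np.bincount((img * 256 + r).ravel(), minlength=256 * 256)  # pair counts g(q, r)
    p = g / g.sum()  # p_qr
    p = p[p > 0]
    return float(np.sum(p * np.log2(1.0 / p)))


rng = np.random.default_rng(0)
noisy = rng.integers(0, 256, size=(64, 64), dtype=np.uint8)
flat = np.full((64, 64), 128, dtype=np.uint8)
print(entropy_1d(flat), entropy_1d(noisy), local_entropy(noisy))
```

A uniform view yields zero entropy, while a cluttered view approaches the 8-bit maximum; since each pixel contributes one (q, r) pair whose first component is its gray value, the local entropy is never smaller than the one-dimensional entropy.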

(3) Mission execution process.

Task complexity measures the demands placed on an autonomous system that executes the task at the desired level of performance. First, different tasks consume different amounts of cognitive resources, and the required skills are associated with the types of tasks. For example, ISR (Intelligence, Surveillance, and Reconnaissance) involves fewer tactical behaviors than SEAD (Suppression of Enemy Air Defense), and air combat is more lethal than air-to-ground attack. Second, the number of targets affects the decisions and choices and their couplings. Finally, multiple UAVs involve more complex and riskier collision avoidance, conflict resolution and negotiation than a single UAV,35 so the number of UAVs also has a significant impact on task complexity.

The ALFUS provides a method for computing task complexity. In collaborative searching and identification, the tasks include trajectory planning and target identification, and the complexity of each task is evaluated from the subjective experience of experts. The mission complexity equals the product of the number of targets and the task complexity. The collaboration complexity is related to the ubiquity and transparency of the collaborative system.

5.1.2. Comprehension level

The comprehension level facilitates the specification, analysis, evaluation, and measurement of the activation of data from different sources. According to the ALFUS framework, the factors influencing the LOA can be summarized as three axes, which correspond to three concepts at the comprehension level.

5.1.3. Projection level

Dynamics are an important feature of the SiFCM. Since the situation is always changing, the operator's SA must also be constantly adjusted to avoid outdated and inaccurate decisions. Projection means mapping the current situation to the near future to understand what will happen, and it introduces temporal dependence into the cognitive model. Time dynamics are built into the SiFCM through feedback from the Desired Output Concept (DOC) to the perception concepts.36 The recurrent edges in Fig. 6 represent the projection level.

5.2. SiFCM of human-UAVs cooperative surveillance

In the FCM, knowledge is represented implicitly by the concepts and weights. The initial FCM is determined by experts; however, it may incorporate some subjective errors and biases of the experts. Historical data are a sequence of states that illustrate the system's characteristics over time. By learning from historical data, the FCM can perform time series forecasting. Many FCM learning algorithms have been developed,37–39 which can make the FCM more accurate.

The SiFCM has a hierarchical and recurrent structure similar to that of Recurrent Neural Networks (RNN). Fig. 6 shows the SiFCM constructed to implement the adaptive LOA.

Concepts C1–C4 in Fig. 6 are related to the state of the operator: C1 denotes fixation, i.e., the duration of a gaze on an area; C2 denotes the pupil diameter of the operator; C3 denotes the HR of the operator; and C4 denotes the skin temperature of the operator. Concepts C5–C8 denote the perception of the working environment, each corresponding to the image entropy of the camera view shot by a UAV. Concept C9 denotes the task of target allocation, C10 denotes the task of target identification, and C11 denotes the complexity of collaboration. At the second layer of the SiFCM, C12 denotes human independence, C13 denotes environment complexity and C14 denotes mission complexity.

Fig.6 SiFCM of human-UAVs cooperative surveillance.

Unlike neural networks, which may use several decision nodes, the FCM makes decisions through only one decision node, called the DOC. Suppose the DOC takes values in the range $\mathrm{DOC}\in[\mathrm{DOC}_{\min},\mathrm{DOC}_{\max}]\subseteq[0,1]$. Each decision class is enclosed in an interval of the activation space of the DOC, as shown in Fig. 7. If DOC ∈ [0, 0.25), the LOA decision is level 1; if DOC ∈ [0.25, 0.5), level 2; if DOC ∈ [0.5, 0.75), level 3; and if DOC ∈ [0.75, 1], level 4.
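The interval rule above amounts to a threshold lookup on the DOC activation. A minimal Python sketch follows; the function and constant names are hypothetical.

```python
from bisect import bisect_right

# Interval boundaries of Fig. 7: [0, .25) -> 1, [.25, .5) -> 2, [.5, .75) -> 3, [.75, 1] -> 4
DOC_THRESHOLDS = (0.25, 0.5, 0.75)


def doc_to_loa(doc: float) -> int:
    """Map the DOC activation to an LOA level between 1 and 4."""
    if not 0.0 <= doc <= 1.0:
        raise ValueError("DOC activation must lie in [0, 1]")
    # bisect_right counts the thresholds <= doc, so a boundary value such as
    # 0.25 falls into the upper interval, matching the half-open intervals.
    return bisect_right(DOC_THRESHOLDS, doc) + 1


print([doc_to_loa(v) for v in (0.1, 0.25, 0.62, 0.75, 1.0)])  # [1, 2, 3, 4, 4]
```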

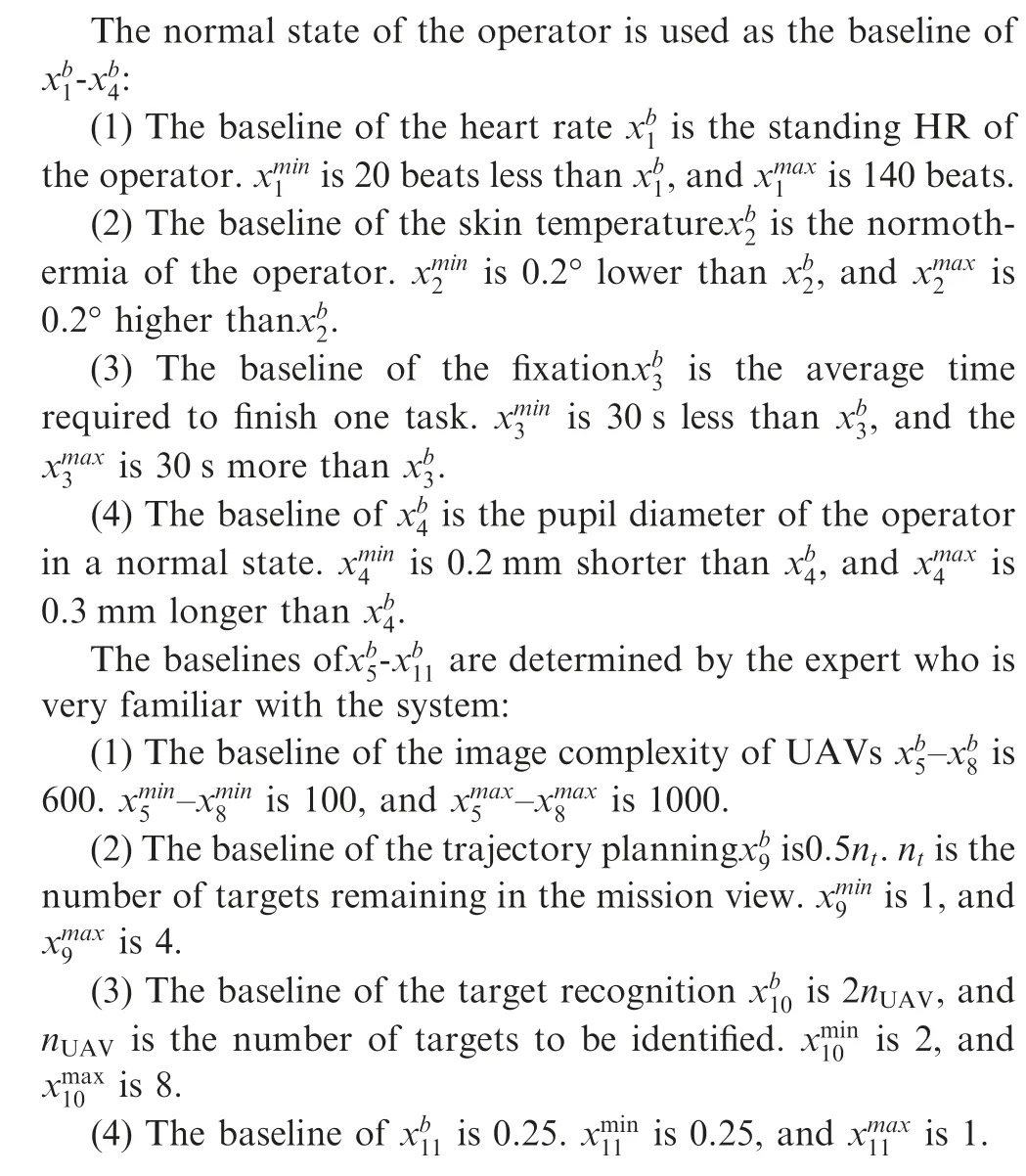

The inputs of the SiFCM are the mission execution situations. According to the ALFUS, there are three situation elements to be perceived: the operator state, the working environment and the tasks. The values of the input concepts are obtained by normalization as:
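Assuming the normalization is min-max scaling with clipping (an assumption; the bounds below are hypothetical placeholders, not the Table 3 values), mapping raw sensor readings into [0, 1] might look like this:

```python
# Hypothetical (min, max) bounds standing in for the Table 3 parameters.
INPUT_RANGES = {
    "fixation_s": (0.1, 1.0),
    "pupil_diameter_mm": (2.0, 8.0),
    "heart_rate_bpm": (50.0, 120.0),
    "skin_temperature_c": (28.0, 36.0),
}


def normalize(name: str, value: float, ranges=INPUT_RANGES) -> float:
    """Min-max normalize a raw reading into [0, 1], clipping out-of-range values."""
    lo, hi = ranges[name]
    if hi <= lo:
        raise ValueError(f"invalid range for {name}: ({lo}, {hi})")
    return min(max((value - lo) / (hi - lo), 0.0), 1.0)


print(normalize("heart_rate_bpm", 85.0))  # 0.5
```

Clipping keeps every input concept inside the FCM's activation range even when a sensor reading falls outside the assumed bounds.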

Fig.7 DOC intervals.

5.3. Time series learning of SiFCM

In practice, even in identical situations the operator could make different decisions. For example, in the same complex situation, an operator who has just started working may choose a low LOA to try the task personally, whereas an operator who has worked for a long time would probably raise the LOA to share more responsibility. Time series data about operations contain a great deal of information: they can reveal the evolution of an operator's experience or help identify the operator's habits in a particular situation. With time series data, context-dependent predictions can accurately predict the immediate behavior of the operator. With a sequence prediction approach, operators are represented by trajectories in the feature space, which allows the evolution of their decisions to be predicted more accurately by following the same trajectory.

The SiFCM is trained on time series data about mission operation. First, the operator completes the human-UAVs cooperative surveillance mission while switching the LOA manually. Meanwhile, the input data and the LOA decisions are recorded with a time log. Then, the SiFCM is trained on the recorded operation data. Finally, the trained SiFCM can be used in the next mission to adjust the LOA adaptively.

Table 3 Parameters of inputs normalization.

Let s = {s(1), s(2), ..., s(n)} denote a set of situations, where s(t) = [c1(t), c2(t), ..., c11(t)] is the vector of the concepts at level 1 of the SiFCM at timestep t. Let D = [D(1), D(2), ..., D(n)] denote a set of LOA decisions, where D(t) is the LOA at timestep t.

For example, a sequence length of 3 means that each input sequence covers 3 min of data sampled at 1 min intervals. The inputs are the sequences {s(t), s(t+1), s(t+2)}, {s(t+1), s(t+2), s(t+3)}, {s(t+2), s(t+3), s(t+4)}, ..., and the corresponding targets are D(t+3), D(t+4), D(t+5), ....
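The sliding-window construction in the example above can be sketched as follows; `make_windows` is a hypothetical helper, and the string placeholders stand in for the 11-dimensional situation vectors s(t).

```python
from typing import List, Sequence, Tuple


def make_windows(situations: Sequence, decisions: Sequence[int],
                 length: int = 3) -> Tuple[List[list], List[int]]:
    """Pair every run of `length` consecutive situations with the next LOA decision.

    Window t holds [s(t), ..., s(t+length-1)] and its target is D(t+length),
    following the example in the text.
    """
    if len(situations) != len(decisions):
        raise ValueError("situations and decisions must be aligned in time")
    inputs, targets = [], []
    for t in range(len(situations) - length):
        inputs.append(list(situations[t:t + length]))
        targets.append(decisions[t + length])
    return inputs, targets


s = ["s1", "s2", "s3", "s4", "s5", "s6"]  # one situation vector per minute
D = [1, 1, 2, 2, 3, 3]                    # logged LOA decisions
X, y = make_windows(s, D)
print(X[0], y[0])  # ['s1', 's2', 's3'] 2
```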

The learning objective of the SiFCM is to minimize the squared error over the training set:

where y(t) is the output of the SiFCM given s(t), and $t_f$ is the number of timesteps. The SiFCM can be unrolled as in Fig. 8.

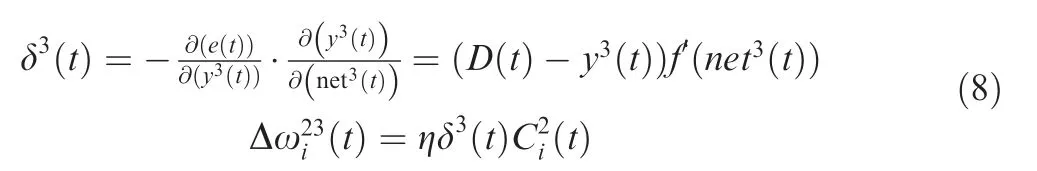

The error propagated from L3(t) to L2(t) is:

Fig.8 Unrolled SiFCM with 3 timesteps.

The learning process is shown in Algorithm 1.

An experiment is carried out to evaluate the model's ability to learn from time series data. First, the operator designs a set of target weights for the SiFCM, as shown in Table 4.

Then, a random weight matrix is generated, as shown in Table 5.

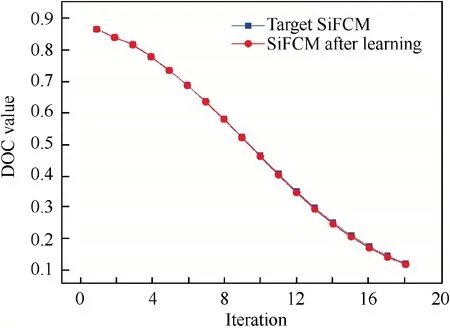

The outputs of the SiFCM with the target weight matrix and the initial weight matrix are shown in Fig.9.

The learning curve of mean square error is shown in Fig.10.

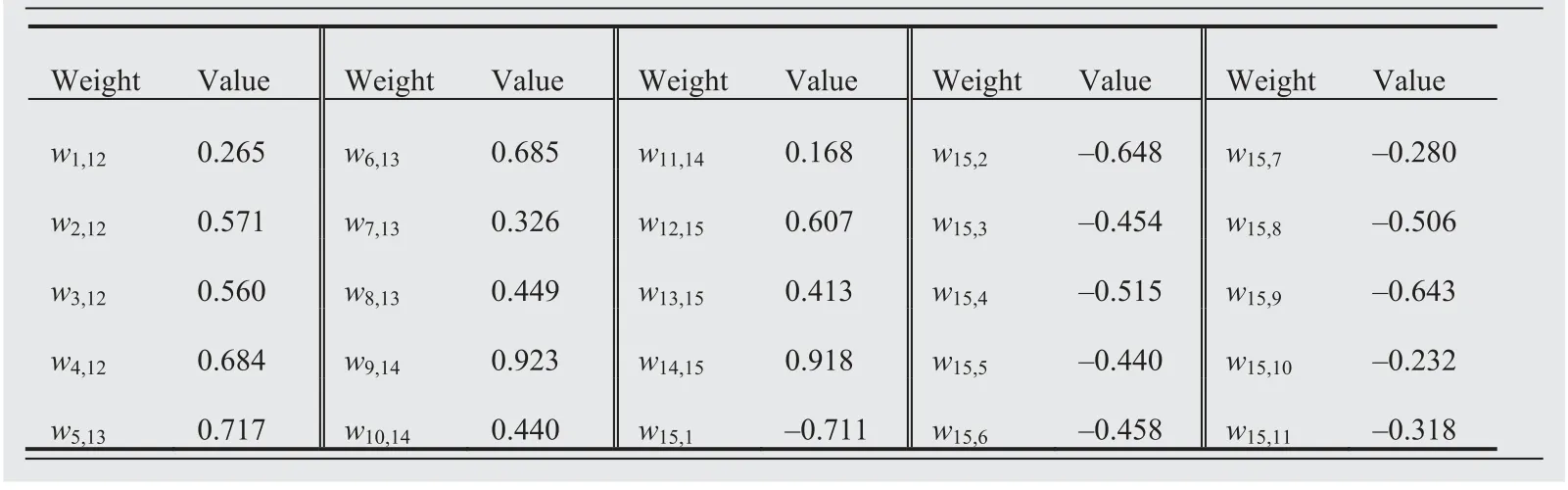

After learning, the weight matrix of the SiFCM is as shown in Table 6.

The outputs of the trained SiFCM and the target SiFCM are shown in Fig.11.

The result of the experiment demonstrates the time series learning ability of the SiFCM. The information contained in the sequences can be of great importance for producing better decisions: the SiFCM can tune its weights based on historical data and make more accurate decisions about the LOA.
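Algorithm 1 and the target-versus-initial-weights experiment can be illustrated with a deliberately tiny model. The sketch below is not the SiFCM: it assumes a single recurrent concept $h(t)=\sigma(w_{in}\cdot s(t)+w_{rec}\,h(t-1))$ in place of the SiFCM's levels, truncates backpropagation at the window length $K$, and sums gradients over all windows before each update (one reading of "adding the epoch gradient"). Each window's label is simply the output of a map with hypothetical target weights at the end of that window. The names `forward`, `tbptt_grads` and `train`, the target weights and the learning rate are all hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(w_in, w_rec, window, h0=0.0):
    """Run the toy map over one window of input vectors.

    Returns [h0, h1, ..., hK]; hK is the output compared with the label.
    """
    hs = [h0]
    for s in window:
        z = sum(w * x for w, x in zip(w_in, s)) + w_rec * hs[-1]
        hs.append(sigmoid(z))
    return hs


def tbptt_grads(w_in, w_rec, window, target):
    """Error 0.5*(hK - target)^2 and its gradients, backpropagated only
    through the K steps of this window (the truncation in TBPTT)."""
    hs = forward(w_in, w_rec, window)
    err = hs[-1] - target
    g_in, g_rec = [0.0] * len(w_in), 0.0
    delta = err                                # dE/dh_k, starting at the last step
    for k in range(len(window), 0, -1):
        dz = delta * hs[k] * (1.0 - hs[k])     # back through the sigmoid
        g_in = [g + dz * x for g, x in zip(g_in, window[k - 1])]
        g_rec += dz * hs[k - 1]
        delta = dz * w_rec                     # error passed back to h_{k-1}
    return g_in, g_rec, 0.5 * err ** 2


def train(windows, targets, n_inputs, lr=0.5, epochs=300, seed=0):
    """Batch gradient descent: sum gradients over all windows, update once per epoch."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]  # random initial weights
    w_rec = rng.uniform(-1.0, 1.0)
    history = []
    for _ in range(epochs):
        sum_in, sum_rec, sum_err = [0.0] * n_inputs, 0.0, 0.0
        for win, d in zip(windows, targets):
            g_in, g_rec, e = tbptt_grads(w_in, w_rec, win, d)
            sum_in = [a + b for a, b in zip(sum_in, g_in)]
            sum_rec += g_rec
            sum_err += e
        m = len(windows)
        w_in = [w - lr * g / m for w, g in zip(w_in, sum_in)]
        w_rec -= lr * sum_rec / m
        history.append(sum_err / m)            # mean error before this epoch's update
    return w_in, w_rec, history


# Toy replay of the target-vs-initial-weights experiment (all values hypothetical).
rng = random.Random(1)
N_INPUTS, K = 3, 3
TARGET_W_IN, TARGET_W_REC = [1.5, -2.0, 0.8], 0.6
states = [[rng.random() for _ in range(N_INPUTS)] for _ in range(60)]
windows = [states[t:t + K] for t in range(len(states) - K + 1)]
targets = [forward(TARGET_W_IN, TARGET_W_REC, w)[-1] for w in windows]
w_in, w_rec, history = train(windows, targets, N_INPUTS)
print(f"mean error: first epoch {history[0]:.5f}, last epoch {history[-1]:.5f}")
```

Running it prints the mean error in the first and last epochs. As with the learning curve in Fig.10, the error falls as the randomly initialized weights are adjusted toward values that reproduce the target outputs.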

6. Three simulation experiments

To evaluate the effectiveness of the adaptive LOA method and the cognitive model, we conducted three simulation experiments. The first experiment aimed to validate the effect of the cognitive model, the second was designed to validate the effect of the distributed adaptive LOA of the UAVs, and the third demonstrated the benefits of the cognitive model in a dynamic situation.

6.1. Apparatus

Thirty-five postgraduate students (13 females and 22 males, aged between 22 and 30) were invited to take part in the experiments.

Algorithm 1 SiFCM learning by the TBPTT algorithm

For time τ from 1 to t:

1. Set the inputs of the SiFCM.
2. Do a feed-forward pass to compute the concept values of all nodes.
3. Unroll the SiFCM.
4. Measure the deviation between the DOC outputs and the operator's LOA decision.
5. Backpropagate the errors across each timestep of the unrolled SiFCM.
6. Perform weight updates by adding the epoch gradient as in Eqs.(11)–(13).
7. Roll up the SiFCM.

The experiments were carried out on the prototype of our ground UAVs control station, which was attached to a simulated UAVs operational system. The platform consisted of one PC (i7, 8 G, GTX1080), two 24 inch (1 inch = 25.4 mm) screens, one flight control stick, one eye tracker (Tobii X120) and one smart band (Microsoft Band 2).

Table 4 Target weights of SiFCM.

Table 5 Initial weights of SiFCM.

Fig.9 Outputs of the target SiFCM and initial SiFCM.

Fig.10 Learning curve of SiFCM.

The interfaces of the collaborative system were displayed on two screens, as shown in Fig.12. The first screen displayed the manual control interface. Its left part showed the map of the whole working area, including the positions of the targets and UAVs, and its right part showed the flight control panels, in which variables such as direction, throttle, height and speed were arranged. The upper part of the second screen displayed the camera views of the UAVs, and the target images and LOA indicators were arranged in the lower part. To see the details of a camera view, the operator could click the corresponding view box to magnify it.

6.2. Task and procedure

The participants were instructed to control the UAVs so that they flew to the detection areas and found the targets indicated. There were 40 targets in each period, including buildings, warehouses, ships, playgrounds, etc. At most 8 detection areas containing targets were given at the same time. Once a target was identified, it was replaced by another one in the target boxes, until all targets were found or the period timed out. Each mission period lasted 30 min, and the operators could take a 10 min break between two missions.

Table 6 Final weights of trained SiFCM.

Fig.11 Outputs of target SiFCM and SiFCM after learning.

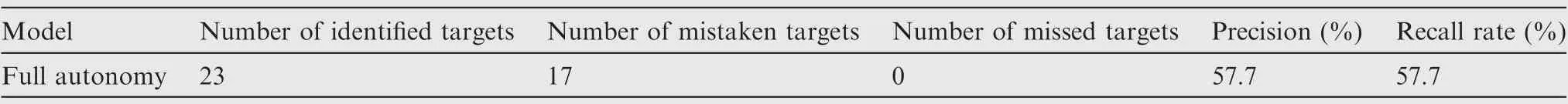

We employed the hierarchical autonomous trajectory planning algorithm40,41 for task allocation and the adaptive human-in-the-loop multi-target recognition algorithm17 for target identification. All training data sets were obtained from aerial image data sets. The UAV team was also tested under full autonomy, and the result is shown in Table 7.

7. Results of simulation experiments

In the following, we present a statistical analysis of the study data to test the effects of the SiFCM and the adaptive LOA.

7.1. Experiment 1

Ten participants performed Experiment 1. In the first period, they could switch the LOA manually during mission execution. The experimental data from the first period were recorded and used to train the SiFCM with the TBPTT algorithm. In the second period, the participants executed the mission with the adaptive LOA driven by the trained SiFCM.

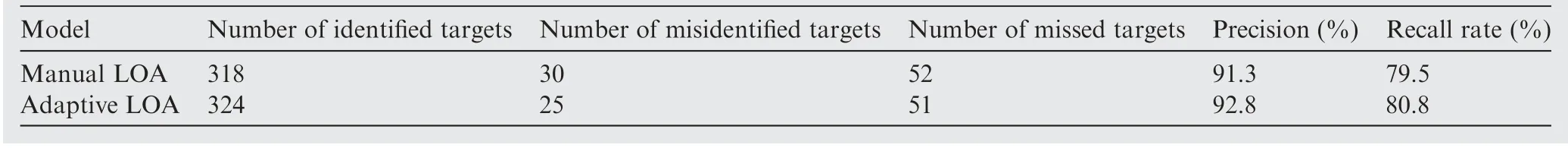

The numbers of identified, misidentified and missed targets were counted for all operators. The results are shown in Fig.13, and the statistical results in Table 8. The recall rate of the adaptive LOA with SiFCM (80.8%) is slightly higher than that of switching the LOA manually (79.5%). However, the t-test result (p = 0.569 > 0.05) shows no significant difference in the number of targets found.

We designed a Likert scale questionnaire, and each item was scored by all participants. The maximum score was 5 points: 1 point means strongly disagree, 2 disagree, 3 neutral, 4 agree, and 5 strongly agree. The contents of the questionnaire and a summary of the scores are shown in Table 9. The average scores were high for all questions except question 4, for which a lower score indicates a better result. The variation was generally low, so the participants' reception was highly positive. Most participants agreed that the adaptive LOA with SiFCM could improve their efficiency in the human-UAVs collaboration.

Fig.12 Control interfaces of simulation experiments.

Table 7 Results of full autonomy model.

Fig.13 Statistical analysis of Experiment 1.

Table 8 Results of Experiment 1.

7.2. Experiment 2

Fifteen participants took part in Experiment 2, in which three control models were compared. In the first period, the participants executed the mission by teleoperation. In the second period, the control model was switched to the adaptive LOA. Finally, in the third period, the participants controlled the UAVs under the distributed adaptive LOA model.

Table 9 Questionnaire.

The results of Experiment 2 are shown in Fig.14: Fig.14(a) shows the number of identified targets, Fig.14(b) the number of misidentified targets, and Fig.14(c) the number of missed targets.

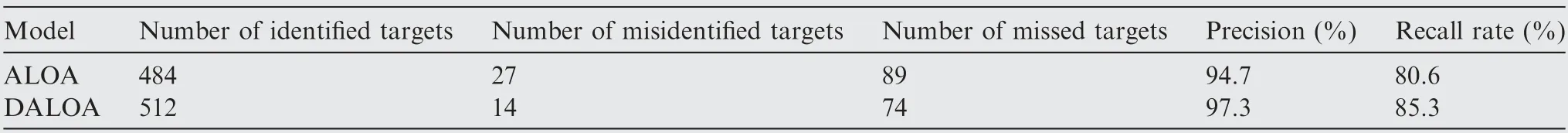

The statistical results of Experiment 2 are shown in Table 10. The recall rate of the Adaptive LOA (ALOA) (80.6%) was lower than that of the Distributed Adaptive LOA (DALOA) (85.3%). A t-test comparing the distributed and the centralized adaptive LOA (p = 0.0372 < 0.05) shows that the distributed adaptive LOA was significantly more efficient.

Wait time is an important performance metric in human-agent collaboration.42 It is caused by the loss of SA: it accrues when a UAV needs attention but the operator does not intervene. The wait time was measured from the system log, and the results are shown in Fig.15. The wait time under the adaptive LOA model was longer than under the distributed adaptive LOA model, which indicates that the opacity between the human and the UAVs decreased when the distributed LOA model was used.
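A minimal sketch of how such a wait time could be accumulated from a log. It assumes a hypothetical log format of `(time in seconds, UAV id, event)` tuples, where a UAV raises a `"needs_attention"` event and the operator later produces an `"operator_action"` event for the same UAV; the actual format of the system log is not described in the text, and `total_wait_time` is a hypothetical name.

```python
def total_wait_time(events):
    """Sum, over all UAVs, the time between a UAV requesting attention and
    the operator's next action on that UAV (hypothetical log schema)."""
    pending = {}      # uav_id -> time at which it started waiting
    total = 0.0
    for time_s, uav_id, kind in sorted(events):
        if kind == "needs_attention":
            pending.setdefault(uav_id, time_s)   # keep the earliest unanswered request
        elif kind == "operator_action" and uav_id in pending:
            total += time_s - pending.pop(uav_id)
    return total


log = [
    (10.0, "uav1", "needs_attention"),
    (30.0, "uav2", "needs_attention"),
    (25.0, "uav1", "operator_action"),
    (32.0, "uav2", "operator_action"),
    (40.0, "uav3", "operator_action"),   # action without a pending request: no wait
]
print(total_wait_time(log))  # 17.0
```

In this sketch, requests still pending when the log ends are not counted; a real analysis would need a policy for those, such as charging them up to the end of the mission.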

7.3. Experiment 3

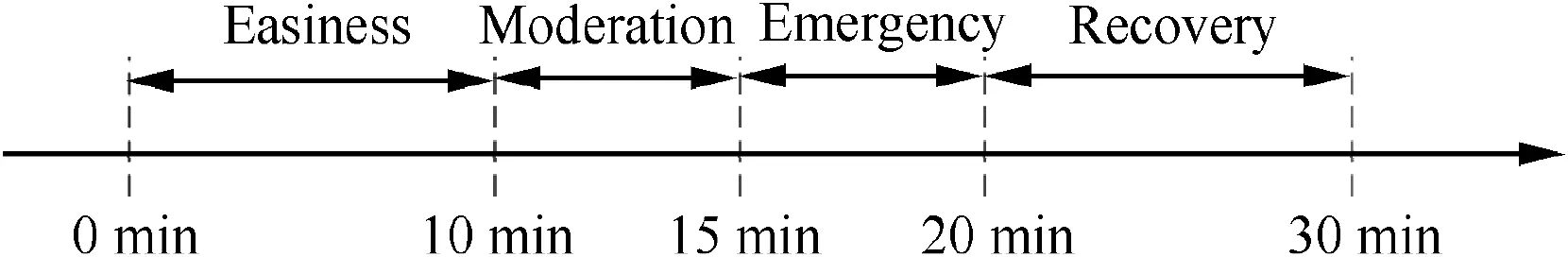

To verify the benefits of the cognitive model, we designed an experiment with dynamic tasks. During the execution of human-UAVs cooperative surveillance, four scenarios occurred along the timeline, as shown in Fig.16.

Fig.14 Statistical analysis of Experiment 2.

Table 10 Results of Experiment 2.

(1) Easiness: 10 min, a new target popped up every 30 s.

(2) Moderation: 5 min, a new target popped up every 15 s.

(3) Emergency: 5 min, a new target popped up every 5 s.

(4) Recovery: 10 min, a new target popped up every 30 s.
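The four phases add up to the 30 min mission period. The sketch below, assuming each phase releases its first target at the start of the phase and then one target per interval (the exact release times are not specified in the text), counts how many targets each phase produces; `PHASES` and `popup_schedule` are hypothetical names.

```python
# (phase name, duration in minutes, seconds between target pop-ups), from the list above
PHASES = [("Easiness", 10, 30), ("Moderation", 5, 15), ("Emergency", 5, 5), ("Recovery", 10, 30)]


def popup_schedule(phases):
    """Return (time_s, phase) for every target release over the whole mission."""
    schedule, start = [], 0
    for name, minutes, interval_s in phases:
        duration_s = minutes * 60
        for offset in range(0, duration_s, interval_s):
            schedule.append((start + offset, name))
        start += duration_s
    return schedule


schedule = popup_schedule(PHASES)
counts = {name: sum(1 for _, p in schedule if p == name) for name, _, _ in PHASES}
print(counts)  # {'Easiness': 20, 'Moderation': 20, 'Emergency': 60, 'Recovery': 20}
print(len(schedule), sum(m for _, m, _ in PHASES))  # 120 30
```

Under this assumption, the emergency phase releases three times as many targets as the moderation phase in the same 5 min.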

Ten participants performed Experiment 3. For comparison with our adaptive LOA method, we used a triggered method,28 which adjusts the LOA of the UAVs when the following criteria are satisfied: (A) more than 8 outstanding targets, or an average response time exceeding 60 s; (B) more than 15 outstanding targets, or an average response time exceeding 90 s; (C) more than 25 outstanding targets, or an average response time exceeding 120 s; and (D) more than 35 outstanding targets, or an average response time exceeding 180 s. In other words, the automation scales up when the task complexity and/or response time increase, and scales down when they decrease.
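The trigger rule can be written as a small function. This sketch assumes that each satisfied criterion raises the LOA by one step, so the returned value counts the satisfied criteria (0 to 4); because the thresholds are nested, this equals the highest criterion met. How the triggered method maps criteria to specific LOA values is not stated here, and `triggered_level` is a hypothetical name.

```python
# (criterion, outstanding-target threshold, average-response-time threshold in s)
CRITERIA = [("A", 8, 60.0), ("B", 15, 90.0), ("C", 25, 120.0), ("D", 35, 180.0)]


def triggered_level(outstanding_targets, avg_response_s):
    """Number of criteria met; each is 'more than N outstanding targets OR
    average response time above T seconds'."""
    level = 0
    for _, max_targets, max_response_s in CRITERIA:
        if outstanding_targets > max_targets or avg_response_s > max_response_s:
            level += 1
    return level


print(triggered_level(5, 30.0))    # 0: no criterion met
print(triggered_level(20, 130.0))  # 3: A and B are met, C via response time only
```

Because the level is recomputed from the current counts, it also falls again when the workload eases, matching the scale-up and scale-down behavior described above.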

Fig.15 Wait time of centralized adaptive LOA and distributed adaptive LOA.

Fig.16 Timeframes of the mission.

The performance of the triggered method and the adaptive LOA was measured by logging the number of identified targets. Fig.17 illustrates the number of identified targets in the different timeframes. In the easy scenario, there was no significant difference between the triggered method and the adaptive LOA: both the triggered method (mean M=18.7, standard deviation SD=1.70) and the adaptive LOA (M=19.4, SD=0.84) performed well. In the moderate timeframe, the F-test result (F=3.3, p=0.01<0.05) shows that the two methods began to diverge: the performance of the triggered method started to degrade (M=11.2, SD=4.13), whereas the adaptive LOA remained comparatively stable (M=17.1, SD=2.28). In the emergency timeframe, the performance gap widened further: with the adaptive LOA (M=20, SD=2.87), the operators performed much better than with the triggered method (M=11.8, SD=3.19). Moreover, under the triggered method there was no significant performance difference between the moderate and emergency timeframes (F=1.67, p=0.23>0.05).
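For reference, a variance-ratio F statistic (larger sample variance over smaller) can be computed directly from the standard deviations reported above. Assuming this is the statistic the F values refer to, the results land close to the reported F=3.3 and F=1.67; the function name `variance_ratio` is hypothetical, and the p-values are not recomputed here.

```python
def variance_ratio(sd_a, sd_b):
    """F statistic for comparing two variances: larger variance over smaller."""
    var_a, var_b = sd_a ** 2, sd_b ** 2
    return max(var_a, var_b) / min(var_a, var_b)


# Moderate timeframe: triggered (SD=4.13) vs adaptive LOA (SD=2.28)
print(round(variance_ratio(4.13, 2.28), 2))  # 3.28
# Triggered method: moderate (SD=4.13) vs emergency (SD=3.19)
print(round(variance_ratio(4.13, 3.19), 2))  # 1.68
```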

In summary, the performance of the adaptive LOA (M=81.3, SD=7.2) was much better than that of the triggered LOA (M=61, SD=10.8) in the dynamic situation. With the cognitive model, the adaptive LOA takes a more comprehensive set of situational factors into account than traditional autonomy adjustment methods such as the triggered method. Additionally, the learning capability of the cognitive model can help it make more appropriate decisions in dynamic situations.

8. Discussion

The results of the experiments have demonstrated the learning ability of the cognitive model and the cooperative efficiency of the adaptive LOA method. In our experiments, performance was indexed by the total number of identified targets under the different cooperation methods. In Experiment 1, the operators kept their utilization at a 'comfortable' level while shifting their responsibilities by adjusting the LOA, and the learning ability of the cognitive model was verified: the model could learn the operator's operation trajectory from historical operational data. In Experiment 2, the adaptive LOA method could take over work that would normally require attentional resources, which helped the operator focus on the important issues. Both the centralized and the distributed adaptive LOA methods were tested, and the results showed that the distributed adaptive LOA could enhance the efficiency of human-UAVs cooperation. In Experiment 3, the benefit of the adaptive LOA was verified in dynamic situations. Compared with traditional human-agent cooperation methods such as triggered autonomy, the adaptive LOA improved performance in the high-workload situation and significantly increased the total number of identified targets.

9. Conclusions and future work

Fig.17 Average number of identified targets in different LOA adjustment methods.

This article presents human-UAVs collaboration in target search and identification with an adaptive LOA. A cognitive model is developed to adjust the LOA adaptively. The SiFCM is the knowledge structure of the cognitive model, which synthesizes the operator's state, the working environment of the UAVs, and the task processing. A TBPTT algorithm is implemented to learn from time series of human decisions. Two cases are studied on the prototype of our ground UAVs control station. In the first case, the adaptive LOA with SiFCM achieves a slightly higher recall rate than manual LOA adjustment, although the difference in the number of identified targets is not statistically significant; a questionnaire shows that the participants prefer the adaptive LOA to manual LOA adjustment. In the second case, the distributed adaptive LOA is compared with the centralized adaptive LOA. The results indicate that the distributed adaptive LOA is more flexible, since each UAV decides its LOA based on the situation it faces. The shorter wait time under the distributed adaptive LOA indicates that it can decrease the opacity between the human operator and the UAVs. Our study can help guide future applications of human-UAVs collaboration.

Acknowledgements

The authors are grateful to Chang WANG, Lizhen WU and Shuangpeng TAN for discussions. This study was supported by the National Natural Science Foundation of China (No.61876187).
