Estimating growth related traits of winter wheat at seedling stages based on RGB images and convolutional neural network

2019-04-26 Ma Juncheng, Liu Hongjie, Zheng Feixiang, Du Keming, Zhang Lingxian, Sun Zhongfu


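Ma Juncheng1, Liu Hongjie2, Zheng Feixiang1, Du Keming1※, Zhang Lingxian3, Hu Xin2, Sun Zhongfu1

(1. Institute of Environment and Sustainable Development in Agriculture, Chinese Academy of Agricultural Sciences, Beijing 100081, China; 2. Wheat Research Institute, Shangqiu Academy of Agriculture and Forestry Sciences, Shangqiu 476000, China; 3. College of Information and Electrical Engineering, China Agricultural University, Beijing 100083, China)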

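Computer-vision methods for estimating growth parameters of winter wheat at the seedling stage are susceptible to noise and depend heavily on hand-crafted features. To address these problems, this study combined image processing and deep learning and proposed an estimation method based on a convolutional neural network (CNN). Using visible-light canopy images of winter wheat at the seedling stage as input, a CNN model suited to growth parameter estimation was built. The relationship between canopy images and growth parameters was learned from data, and accurate field-scale estimation of canopy leaf area index (LAI) and above ground biomass (AGB) at the seedling stage was achieved. To validate the method, a linear regression model with canopy cover (CC) as the independent variable, and random forest (RF) and support vector machine regression (SVM) models with image features as input, were used for comparison. The coefficient of determination (R²) and the normalized root mean square error (NRMSE) were used to quantify estimation accuracy. The results showed that the proposed method was more accurate than all compared methods: for AGB, R² was 0.791 7 and NRMSE was 24.37%; for LAI, R² was 0.825 6 and NRMSE was 23.33%. The study can provide a reference for growth monitoring and precise field management of winter wheat at the seedling stage.

crops; growth; parameter estimation; winter wheat; seedling stage; leaf area index; above ground biomass; convolutional neural network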

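0 Introduction

Leaf area index (LAI) and above ground biomass (AGB) are two important parameters characterizing the growth of winter wheat[1]. Field-scale estimation of LAI and AGB is important for growth monitoring and precise field management of winter wheat at the seedling stage. Traditional LAI and AGB measurement requires destructive field sampling and manual analysis, which is inefficient and labor-intensive and cannot meet the needs of high-throughput, automated plant phenotyping[2-4]. Remote sensing is currently one of the main methods for non-destructive measurement of winter wheat growth parameters: vegetation indices computed from canopy spectral data are regressed against measured growth parameters, which allows LAI and AGB to be measured non-destructively[1,5-8]. However, because spectral data acquisition requires dedicated equipment, this approach has shortcomings in cost and convenience[2,9].

Visible-light images are inexpensive and easy to acquire[10-14]. With computer vision techniques, numerical features extracted from visible-light images can be used to fit LAI and AGB accurately[11,15-18]. For example, Chen et al.[4] developed a rapid LAI measurement system for winter wheat on the Android mobile platform. The system segments the canopy using the H and V components of the HSV canopy image and then calculates LAI from the segmented canopy image. Their results showed a good linear relationship between the system output and the measured LAI. Cui et al.[19] extracted canopy cover and several color features from visible-light images and estimated the above ground biomass of winter wheat with stepwise regression and a BP neural network. Their results showed that canopy cover combined with a BP neural network could estimate above ground biomass accurately. Although computer-vision methods have achieved some success, two problems remain[20-21]: 1) they are susceptible to noise, because winter wheat images captured in the field contain a large amount of noise caused by uneven illumination and complex backgrounds, which seriously affects the accuracy of image segmentation and feature extraction; 2) they depend heavily on image features, and hand-crafted image features usually generalize poorly, which makes the methods difficult to extend to other settings.

The convolutional neural network (CNN) is currently one of the most effective deep learning methods. It takes images directly as input and achieves high recognition accuracy[22-24], and it has been widely applied to weed and pest identification[25-26], plant disease and stress diagnosis[20,24], agricultural image segmentation[27-29] and other fields. This study investigates CNN-based estimation of growth parameters of winter wheat at the seedling stage. Visible-light canopy images are used as input, the CNN learns features automatically from the canopy images, and the relationship between canopy images and growth parameters is established by learning, so that LAI and AGB at the seedling stage can be estimated rapidly at field scale. The aim is to provide effective support for growth monitoring and precise field management of winter wheat at the seedling stage.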

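1 Materials and methods

1.1 Image acquisition and dataset construction

The experiment was conducted from October 2017 to June 2018 at the field experiment station of Shangqiu Academy of Agriculture and Forestry Sciences, Henan Province. The winter wheat cultivar was Guomai 301, sown on 14 October 2017. Twelve plots of 2.4 m×5 m were established, and three 1 m×1 m image sampling areas were set up in each plot. Each image sampling area was photographed with a Canon 600D digital camera (18 million effective pixels, maximum image resolution 5 184×3 456 pixels). During acquisition, the camera was mounted on a tripod 1.5 m directly above the image sampling area with the lens pointing vertically downward, without optical zoom and with the flash off. Images were acquired 17 times during the experiment, yielding 612 visible-light canopy images of winter wheat at the seedling stage. The acquisition dates are listed in Table 1.

Table 1 Acquisition dates of winter wheat canopy images at the seedling stage

The images were stored in JPG format at the original resolution of 5 184×3 456 pixels. After acquisition, the parts of each image outside the image sampling area were removed by manual cropping.

The canopy image dataset was divided into a training set, a validation set and a test set. To enlarge the dataset and avoid overfitting, the image dataset was augmented: the original images were first rotated by 90°, 180° and 270°, and then flipped horizontally and vertically. To make the estimation model robust to illumination noise in the field, the canopy images were converted to HSV space and the image brightness was changed by adjusting the V channel, which simulates changes of illumination in the field and further enlarges the dataset[27]. Augmentation enlarged the original dataset by a factor of 26. The augmented dataset contained 15 912 winter wheat canopy images, with 8 486, 2 122 and 5 304 images in the training, validation and test sets, respectively (the training and test sets were split at a ratio of 7:3, and the validation set accounted for 20% of the training set). After augmentation, considering the network structure, practical efficiency, training time, computational cost and hardware, the images were resized to 96 pixels×96 pixels to reduce the number of parameters of the CNN model.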
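The augmentation steps described above (rotation, flipping and V-channel brightness adjustment) can be sketched in a few lines of standard-library Python. This is an illustrative sketch only: the function names and the brightness factors 0.8 and 1.2 are assumptions, the study itself was implemented in Matlab, and the exact recipe that yields the 26-fold enlargement is not given in the text.

```python
import colorsys

def rotate90(img):
    """Rotate a row-major image (list of rows of pixels) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def flip_vertical(img):
    """Reverse the order of the rows."""
    return [list(row) for row in img[::-1]]

def scale_brightness(img, factor):
    """Scale the V channel in HSV space; pixels are (r, g, b) floats in [0, 1]."""
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r, g, b)
            new_row.append(colorsys.hsv_to_rgb(h, s, min(1.0, v * factor)))
        out.append(new_row)
    return out

def augment(img, brightness_factors=(0.8, 1.2)):
    """Return the original image plus rotated, flipped and re-lit variants.

    The brightness factors are hypothetical values chosen for illustration.
    """
    variants = [img]
    rotated = img
    for _ in range(3):  # 90, 180 and 270 degrees
        rotated = rotate90(rotated)
        variants.append(rotated)
    variants.append(flip_horizontal(img))
    variants.append(flip_vertical(img))
    variants.extend(scale_brightness(img, f) for f in brightness_factors)
    return variants

# Dataset sizes reported in the text: 612 originals enlarged 26-fold.
assert 612 * 26 == 15912
assert 8486 + 2122 + 5304 == 15912
```

With these assumed settings each image yields 8 variants; more brightness levels, or brightness changes applied to the rotated and flipped copies, would be needed to reach the reported factor of 26.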

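1.2 Collection of winter wheat growth parameters

LAI and AGB data at the seedling stage were collected at the same time as the images. AGB was measured by destructive sampling: in each plot, 5 winter wheat plants were selected at random, oven-dried and weighed (none of the 5 plants was inside an image sampling area). The mean dry mass of the 5 plants was multiplied by the corresponding plant density to obtain the measured AGB of the plot. LAI was calculated with the specific leaf weight method[30].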
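The AGB calculation described above is a simple product of mean plant dry mass and plant density. A minimal sketch, assuming dry mass in grams per plant and density in plants per square metre (the units, the function name and the sample values are illustrative assumptions, not data from the study):

```python
def above_ground_biomass(dry_masses_g, plants_per_m2):
    """Mean dry mass of the sampled plants times plant density (g per m^2)."""
    mean_mass = sum(dry_masses_g) / len(dry_masses_g)
    return mean_mass * plants_per_m2

# Hypothetical example: five oven-dried plants and a density of 300 plants per m^2.
sample = [0.10, 0.12, 0.08, 0.11, 0.09]
agb = above_ground_biomass(sample, 300)  # about 30 g per m^2
```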

1.3 Construction of the convolutional neural network model

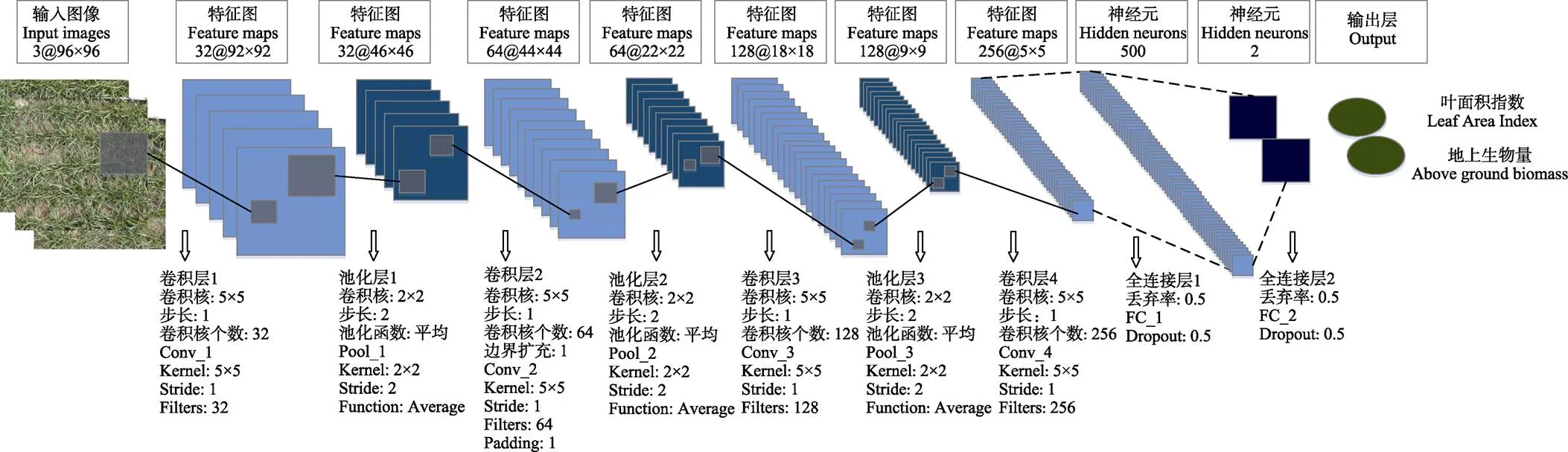

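The structure of the CNN model is shown in Fig. 1. The model takes a winter wheat canopy image at the seedling stage as input, with an input size of 96×96×3 (width×height×color channels), and contains 4 convolutional layers, 3 pooling layers and 2 fully connected layers. The convolutional layers use 5×5 kernels to extract image features, with 32, 64, 128 and 256 kernels in the four layers, respectively[23]. To keep the feature map sizes integral, padding (Padding=1) is applied in convolutional layer 2. The pooling layers use a 2×2 window with a stride of 2 and average pooling. Fully connected layer 1 has 500 hidden neurons and a dropout rate of 0.5; fully connected layer 2 has 2 neurons, matching the number of parameters estimated at the output layer, with a dropout rate of 0.5. The output layer gives the canopy LAI and AGB of winter wheat at the seedling stage.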
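The role of the padding in convolutional layer 2 can be checked with simple size arithmetic. The sketch below assumes a convolution stride of 1 and that each of the first three convolutional layers is followed by one pooling layer; neither detail is stated explicitly in the text, and the function names are illustrative.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Output width of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, window=2, stride=2):
    """Output width of a pooling layer; rejects sizes that do not tile evenly."""
    if (size - window) % stride != 0:
        raise ValueError(f"feature map of width {size} is not evenly divisible")
    return (size - window) // stride + 1

def trace_sizes(input_size=96, conv2_padding=1):
    """Feature map widths after each layer: conv1, pool1, conv2, pool2, conv3, pool3, conv4."""
    sizes = [input_size]
    size = input_size
    paddings = [0, conv2_padding, 0, 0]
    for i, pad in enumerate(paddings):
        size = conv_out(size, kernel=5, padding=pad)
        sizes.append(size)
        if i < 3:  # assumed: pooling follows the first three convolutional layers
            size = pool_out(size)
            sizes.append(size)
    return sizes

print(trace_sizes())  # [96, 92, 46, 44, 22, 18, 9, 5]
```

Under these assumptions the last convolutional layer produces 5×5 feature maps with 256 channels, so fully connected layer 1 receives 5×5×256 = 6 400 inputs. Calling `trace_sizes(conv2_padding=0)` raises a `ValueError`, because the width would reach 17 before the third pooling layer and a 2×2 window with stride 2 cannot tile it evenly.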

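The CNN model was trained with stochastic gradient descent (SGD). The momentum was set to 0.9 and kept constant during training. The learning rate and the mini-batch size were determined by grid search, choosing the combination with the highest estimation accuracy. The initial learning rate was set to 0.001 and was reduced to 10% of the original learning rate after every 20 epochs; the mini-batch size was set to 32, and the maximum number of epochs was set to 300.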
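The training settings above can be sketched as a step-decay schedule plus a momentum update. Reading the learning-rate drop as a multiplicative factor of 0.1 applied every 20 epochs is an assumption (it is the usual behavior of step-decay schedules); the text could also be read as a single drop. The function names are illustrative and a single scalar weight stands in for the network parameters.

```python
def learning_rate(epoch, initial=0.001, drop_factor=0.1, drop_period=20):
    """Step decay: multiply the rate by drop_factor once every drop_period epochs."""
    return initial * drop_factor ** (epoch // drop_period)

def sgd_momentum_step(weight, velocity, grad, lr, momentum=0.9):
    """One SGD-with-momentum update for a single scalar weight."""
    velocity = momentum * velocity - lr * grad
    return weight + velocity, velocity

# Epochs 0-19 use 0.001, epochs 20-39 use 0.0001, and so on (up to rounding).
schedule = [learning_rate(e) for e in (0, 19, 20, 40)]
```

With a drop factor of 0.1 the rate becomes very small well before the 300-epoch limit, so under this reading most of the learning happens in the first few tens of epochs.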

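1.4 Comparison experiments and evaluation metrics

To validate the proposed estimation method, conventional estimation methods were used for comparison. Previous studies have shown that canopy cover (CC) has a good linear relationship with winter wheat growth parameters[12,15,19,31-32]. A linear regression (LR) model with CC as the independent variable (LR-CC) was therefore used as one of the compared methods. CC was calculated as the proportion of vegetation pixels among all pixels of the canopy image[12]. Random forest (RF) and support vector machine regression (SVM), two conventional machine learning methods combined with feature extraction, were also used for comparison.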
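Canopy cover as defined above is the share of vegetation pixels in the image. The sketch below classifies pixels with color-ratio and excess-green thresholds of the kind used by color-based canopy tools such as Canopeo; the specific threshold values (0.95 and 20) and the function names are assumptions for illustration, not settings reported in this study.

```python
def is_green(r, g, b, ratio_max=0.95, excess_green_min=20):
    """Classify one 8-bit RGB pixel as vegetation using ratio and excess-green rules."""
    if g == 0:
        return False
    return (r / g < ratio_max
            and b / g < ratio_max
            and 2 * g - r - b > excess_green_min)

def canopy_cover(img):
    """Fraction of vegetation pixels in a row-major image of (r, g, b) tuples."""
    pixels = [p for row in img for p in row]
    green = sum(1 for r, g, b in pixels if is_green(r, g, b))
    return green / len(pixels)

# Hypothetical 2x2 image: two leaf-like pixels and two soil-like pixels.
toy = [[(40, 120, 30), (120, 100, 80)],
       [(50, 140, 40), (130, 110, 90)]]
cc = canopy_cover(toy)  # 0.5
```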

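Because the canopy images contain background noise, canopy segmentation must be performed to remove the background before image features are extracted for the compared methods. Canopeo[15,17] was used for canopy segmentation, and image features were then extracted from the segmented canopy images. For each of the 9 color components of 3 color spaces (RGB, HSV and L*a*b*), the extracted features comprised 2 color features, the first moment (Avg) and the second moment (std), and 4 texture features, namely energy, correlation, contrast and homogeneity, giving 54 image features in total. After feature extraction, Pearson correlation analysis was used to select the features most strongly correlated with the estimated parameters for model construction.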
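The two color features per component (mean and standard deviation) can be sketched as follows for the RGB and HSV components. The third color space and the four texture features are omitted here for brevity, and the function name and the use of the population standard deviation are assumptions.

```python
import colorsys
import statistics

def color_moments(pixels):
    """Mean and standard deviation of the R, G, B, H, S and V components.

    pixels: list of (r, g, b) floats in [0, 1], e.g. the vegetation pixels
    that remain after canopy segmentation.
    """
    channels = {name: [] for name in "RGBHSV"}
    for r, g, b in pixels:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        for name, value in zip("RGBHSV", (r, g, b, h, s, v)):
            channels[name].append(value)
    return {name: (statistics.fmean(vals), statistics.pstdev(vals))
            for name, vals in channels.items()}

# Hypothetical vegetation pixels.
features = color_moments([(0.2, 0.6, 0.2), (0.4, 0.8, 0.4)])
```

Each component contributes an (Avg, std) pair, so 9 components give 18 color features; the remaining 36 of the 54 features are the 4 texture features computed per component.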

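Note: 3@96×96 denotes 3 feature maps of 96×96 pixels; the same notation applies below. Convolutional layers 1 to 4 all use 5×5 kernels, with 32, 64, 128 and 256 kernels, respectively. Fully connected layer 1 has 500 neurons and fully connected layer 2 has 2 neurons. Local connections use the ReLU activation function.

Linear regression between the growth parameters estimated by the model and the measured growth parameters was used to evaluate the accuracy of the estimation model quantitatively. The coefficient of determination (R²) and the normalized root mean square error (NRMSE) were used as evaluation metrics.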
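The two evaluation metrics can be sketched as below. R² is computed as the squared Pearson correlation between measured and estimated values, which equals the coefficient of determination of a simple linear regression between them. Normalizing the RMSE by the mean of the measured values is an assumption, since the text does not state the normalization used.

```python
import math

def r_squared(measured, estimated):
    """R^2 of the simple linear regression between measured and estimated values."""
    n = len(measured)
    mx = sum(measured) / n
    my = sum(estimated) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, estimated))
    sxx = sum((x - mx) ** 2 for x in measured)
    syy = sum((y - my) ** 2 for y in estimated)
    return sxy ** 2 / (sxx * syy)

def nrmse(measured, estimated):
    """RMSE divided by the mean of the measured values (assumed normalization)."""
    n = len(measured)
    rmse = math.sqrt(sum((x - y) ** 2 for x, y in zip(measured, estimated)) / n)
    return rmse / (sum(measured) / n)

# Hypothetical values: a constant offset keeps R^2 at 1 but still costs NRMSE.
measured = [1.0, 2.0, 3.0, 4.0]
estimated = [1.5, 2.5, 3.5, 4.5]
```

The example illustrates why both metrics are reported: a systematic bias leaves the regression R² untouched, while NRMSE (0.2, or 20%, in this toy case) still penalizes it.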

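2 Results and analysis

The CNN model was implemented in Matlab 2018a. The software environment was Windows 10 Professional, and the hardware was an Intel Xeon E5-2620 CPU at 2.1 GHz with 32 GB of memory and an NVIDIA Quadro P4000 GPU.

2.1 Estimation results for winter wheat growth parameters at the seedling stage

The training process of the CNN model with SGD is shown in Fig. 2. The losses on the training and validation sets decreased gradually as the number of iterations increased. The model converged within a small number of iterations, indicating that training was effective. The trained CNN model was used to estimate canopy AGB and LAI of winter wheat at the seedling stage; the results are shown in Figs. 3 and 4.

Fig. 2 Training and validation loss curves

Fig. 3 Above ground biomass estimation results based on the CNN

The results show a good linear relationship between the growth parameters estimated by the CNN model and the measured growth parameters. For AGB, the CNN model achieved high accuracy on the training and validation sets, with R² above 0.9 and NRMSE below 5% on both. On the test set, accuracy dropped relative to the training and validation sets but remained good, with an R² of 0.791 7 and an NRMSE of 24.37%. The LAI results were similar: on the training and validation sets R² exceeded 0.98 and NRMSE was below 25%, and on the test set R² was 0.825 6 and NRMSE was 23.33%. The test results indicate that the CNN-based model can estimate growth parameters of winter wheat at the seedling stage accurately.

Fig. 4 Leaf area index estimation results based on the CNN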

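2.2 Method comparison and analysis

2.2.1 Comparison with the LR-CC method

Before canopy segmentation with Canopeo, the canopy images were resized to 1000 pixels×1000 pixels to reduce computation and improve efficiency. Because 3 image sampling areas were set up in each plot, the CC value of a plot was calculated as the mean of the CC values of its 3 image sampling areas. A CC dataset was built in this way for constructing the LR-CC model. After outlier detection (removing CC values with large deviations caused by excessive illumination), the CC dataset was divided into a training set of 144 samples and a test set of 48 samples. The LAI and AGB estimation results of the LR-CC model are shown in Fig. 5.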
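The plot-level averaging and the LR-CC fit described above can be sketched as follows. The ordinary least-squares fit is standard; the function names and the toy numbers are illustrative assumptions and are not data from the study.

```python
def plot_cc(sampling_area_ccs):
    """Plot-level canopy cover: mean over the plot's image sampling areas."""
    return sum(sampling_area_ccs) / len(sampling_area_ccs)

def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx

# Hypothetical plot-level CC values and a made-up growth parameter.
cc_values = [plot_cc([0.08, 0.10, 0.12]), plot_cc([0.18, 0.20, 0.22]), plot_cc([0.28, 0.30, 0.32])]
slope, intercept = fit_line(cc_values, [1.0, 2.0, 3.0])

# 612 images from 3 sampling areas per plot give 204 plot-level CC values,
# of which 144 + 48 = 192 are used after outlier removal.
assert 612 // 3 == 204 and 144 + 48 == 192
```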

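Fig. 5 Growth parameter estimation results based on the linear regression model

For LR-CC, the R² for AGB was 0.724 6 with an NRMSE of 29.31%, and the R² for LAI was 0.794 9 with an NRMSE of 35.18%. Overall, LR-CC was less accurate than the CNN model.

2.2.2 Comparison with the RF and SVM methods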

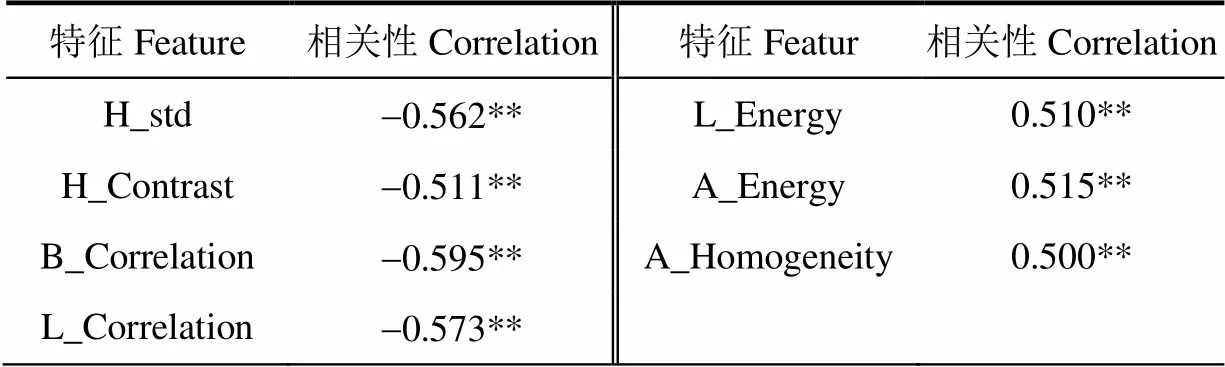

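Before RF and SVM were used to estimate growth parameters, image features were selected with the Pearson correlation coefficient. The 54 extracted image features were correlated with the measured AGB and LAI data, and the features with higher correlations were selected to build the estimation models. The feature selection results are shown in Tables 2 and 3.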
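Pearson-based feature selection can be sketched as below. The study selects features by the significance of the correlation (the table notes refer to the 0.01 level); the absolute-correlation threshold of 0.5 used here is a simplifying assumption, as are the function and feature names.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def select_features(features, target, threshold=0.5):
    """Keep the features whose absolute correlation with the target reaches the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]

# Hypothetical feature table: one informative feature and one uninformative one.
features = {"G_mean": [1.0, 2.0, 3.0, 4.0], "noise": [1.0, -1.0, -1.0, 1.0]}
selected = select_features(features, target=[2.0, 4.0, 6.0, 8.5])  # ["G_mean"]
```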

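Table 2 Feature selection results for winter wheat canopy images at the seedling stage (correlation with AGB)

Note: ** represents significance at the 0.01 level. The same below.

Table 3 Feature selection results for winter wheat canopy images at the seedling stage (correlation with LAI)

The correlation analysis shows that 16 image features in the original feature set were highly correlated with the AGB data and 7 image features were highly correlated with the LAI data. A dataset with 16 features was therefore built for AGB estimation and a dataset with 7 features for LAI estimation. The two datasets were divided into training and test sets in the same proportion as the CC dataset, and RF and SVM models were used to estimate AGB and LAI at the seedling stage. The results are shown in Fig. 6.

Fig. 6 shows that, for AGB, RF and SVM performed similarly to the LR-CC model. RF estimated AGB with an R² of 0.773 8 and an NRMSE of 28.85%, slightly more accurate than SVM, which had an R² of 0.645 5 and an NRMSE of 53.73%. For LAI, neither RF nor SVM was accurate: R² was 0.18 with an NRMSE of 29.65% for RF, and R² was 0.189 4 with an NRMSE of 74.68% for SVM, far worse than the LR-CC model.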

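2.2.3 Discussion

The comparison shows that the proposed CNN-based method estimates field-scale AGB and LAI of winter wheat at the seedling stage more accurately than the conventional methods. The CNN-based method does not require segmentation of the winter wheat images and is therefore a more direct estimation method. It takes canopy images directly as input and learns and selects features automatically from the training data, which avoids the image segmentation and manual feature extraction steps of conventional methods. The features learned by the CNN model also generalize better[20,21,33], which further increases the potential for practical use in the field. In contrast, the three compared methods, LR-CC, RF and SVM, require image segmentation to extract the winter wheat canopy before image features can be extracted. Illumination and background noise in the field strongly affect image segmentation, and winter wheat leaves are long and narrow, so canopy segmentation often fails to give satisfactory results. Canopeo[15,17] is one of the most widely used canopy segmentation methods, but it relies on color information, which is easily affected by illumination and background noise[21]; this lowers the accuracy and robustness of the compared methods. In addition, all three compared methods require low-level image features to be designed and extracted manually. Because hand-crafted image features generalize poorly, these methods are difficult to apply in real field conditions.

3 Conclusions

Based on image processing and deep learning, this study proposed a CNN-based method for estimating growth parameters of winter wheat at the seedling stage. The main conclusions are as follows:

1) Using visible-light canopy images as input, a CNN model suited to growth parameter estimation of winter wheat at the seedling stage was proposed, and accurate field-scale estimation of AGB and LAI was achieved. For AGB, R² was 0.791 7 and NRMSE was 24.37%; for LAI, R² was 0.825 6 and NRMSE was 23.33%.

2) A linear regression model with canopy cover as the independent variable, random forest and support vector machine regression were used for a quantitative comparison of estimation accuracy. The linear regression model with canopy cover as the independent variable gave an R² of 0.724 6 and an NRMSE of 29.31% for AGB, and an R² of 0.794 9 and an NRMSE of 35.18% for LAI. Random forest gave an R² of 0.773 8 and an NRMSE of 28.85% for AGB, and an R² of 0.18 and an NRMSE of 29.65% for LAI. Support vector machine regression gave an R² of 0.645 5 and an NRMSE of 53.73% for AGB, and an R² of 0.189 4 and an NRMSE of 74.68% for LAI. Compared with these methods, the proposed CNN-based method is more accurate and better suited to estimating growth parameters of winter wheat at the seedling stage under real field conditions.

The proposed CNN-based method achieved accurate field-scale estimation of winter wheat growth parameters and can support growth monitoring and precise field management of winter wheat at the seedling stage.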

[1] 徐旭,陈国庆,王良,等. 基于敏感光谱波段图像特征的冬小麦LAI和地上部生物量监测[J]. 农业工程学报,2015,31(22):169-175. Xu Xu, Chen Guoqing, Wang Liang, et al. Monitoring leaf area index and biomass above ground of winter wheat based on sensitive spectral waveband and corresponding image characteristic[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2015, 31(22): 169-175. (in Chinese with English abstract)

[2] Zhang L, Verma B, Stockwell D, et al. Density weighted connectivity of grass pixels in image frames for biomass estimation[J]. Expert Systems with Applications, 2018, 101: 213-227.

[3] Walter J, Edwards J, McDonald G, et al. Photogrammetry for the estimation of wheat biomass and harvest index[J]. Field Crops Research, 2018, 216: 165-174.

[4] 陈玉青,杨玮,李民赞,等. 基于Android手机平台的冬小麦叶面积指数快速测量系统[J]. 农业机械学报,2017,48(增刊):123-128. Chen Yuqing, Yang Wei, Li Minzan, et al. Measurement system of winter wheat LAI based on android mobile platform[J]. Transactions of the Chinese Society for Agricultural Machinery, 2017, 48 (Supp): 123-128. (in Chinese with English abstract)

[5] 高林,杨贵军,于海洋,等. 基于无人机高光谱遥感的冬小麦叶面积指数反演[J]. 农业工程学报,2016,32(22):113-120. Gao Lin, Yang Guijun, Yu Haiyang, et al. Retrieving winter wheat leaf area index based on unmanned aerial vehicle hyperspectral remoter sensing[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2016, 32(22): 113-120. (in Chinese with English abstract)

[6] Schirrmann M, Hamdorf A, Garz A, et al. Estimating wheat biomass by combining image clustering with crop height[J]. Computers and Electronics in Agriculture, 2016, 121: 374-384.

[7] Rasmussen J, Ntakos G, Nielsen J, et al. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots?[J]. European Journal of Agronomy, 2016, 74: 75-92.

[8] 苏伟,张明政,展郡鸽,等. 基于机载LiDAR数据的农作物叶面积指数估算方法研究[J]. 农业机械学报,2016,47(3):272-277. Su Wei, Zhang Mingzheng, Zhan Junge, et al. Estimation method of crop leaf area index based on airborne LiDAR data[J]. Transactions of the Chinese Society for Agricultural Machinery, 2016, 47(3): 272-277. (in Chinese with English abstract)

[9] 李明,张长利,房俊龙. 基于图像处理技术的小麦叶面积指数的提取[J]. 农业工程学报,2010,26(1):205-209. Li Ming, Zhang Changli, Fang Junlong. Extraction of leaf area index of wheat based on image processing technique[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2010, 26(1): 205-209. (in Chinese with English abstract)

[10] Hamuda E, Mc Ginley B, Glavin M, et al. Automatic crop detection under field conditions using the HSV colour space and morphological operations[J]. Computers and Electronics in Agriculture, 2017, 133: 97-107.

[11] González-Esquiva J M, Oates M J, García-Mateos G, et al. Development of a visual monitoring system for water balance estimation of horticultural crops using low cost cameras[J]. Computers and Electronics in Agriculture, 2017, 141: 15-26.

[12] Casadesús J, Villegas D. Conventional digital cameras as a tool for assessing leaf area index and biomass for cereal breeding[J]. Journal of Integrative Plant Biology, 2014, 56(1): 7-14.

[13] 马浚诚,杜克明,郑飞翔,等. 可见光光谱和支持向量机的温室黄瓜霜霉病图像分割[J]. 光谱学与光谱分析,2018,38(6):1863-1868. Ma Juncheng, Du Keming, Zheng Feixiang, et al. A segmenting method for greenhouse cucumber downy mildew images based on visual spectral and support vector machine [J]. Spectroscopy and Spectral Analysis, 2018, 38(6): 1863-1868. (in Chinese with English abstract)

[14] Ma J, Li X, Wen H, et al. A key frame extraction method for processing greenhouse vegetables production monitoring video[J]. Computers and Electronics in Agriculture, 2015, 111: 92-102.

[15] Chung Y S, Choi S C, Silva R R, et al. Case study: Estimation of sorghum biomass using digital image analysis with Canopeo[J]. Biomass and Bioenergy, 2017, 105: 207-210.

[16] Neumann K, Klukas C, Friedel S, et al. Dissecting spatiotemporal biomass accumulation in barley under different water regimes using high-throughput image analysis[J]. Plant, Cell and Environment, 2015, 38(10): 1980-1996.

[17] Patrignani A, Ochsner T E. Canopeo: A powerful new tool for measuring fractional green canopy cover[J]. Agronomy Journal, 2015, 107(6): 2312-2320.

[18] Virlet N, Sabermanesh K, Sadeghi-Tehran P, et al. Field scanalyzer: An automated robotic field phenotyping platform for detailed crop monitoring[J]. Functional Plant Biology, 2017, 44(1): 143-153.

[19] 崔日鲜,刘亚东,付金东. 基于可见光光谱和BP人工神经网络的冬小麦生物量估算研究[J]. 光谱学与光谱分析,2015,35(9):2596-2601. Cui Rixian, Liu Yadong, Fu Jindong. Estimation of winter wheat biomass using visible spectral and bp based artificial neural network[J]. Spectroscopy and Spectral Analysis, 2015, 35(9): 2596-2601. (in Chinese with English abstract)

[20] 马浚诚,杜克明,郑飞翔,等. 基于卷积神经网络的温室黄瓜病害识别系统[J]. 农业工程学报,2018,34(12):186-192. Ma Juncheng, Du Keming, Zheng Feixiang, et al. Disease recognition system for greenhouse cucumbers based on deep convolutional neural network[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(12): 186-192. (in Chinese with English abstract)

[21] Ma J, Du K, Zhang L, et al. A segmentation method for greenhouse vegetable foliar disease spots images using color information and region growing[J]. Computers and Electronics in Agriculture, 2017, 142: 110-117.

[22] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[J]. Advances in Neural Information Processing Systems, 2012, 25(2): 1‒9.

[23] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[C]// International Conference on Learning Representations, 2014: 1-14.

[24] Ghosal S, Blystone D, Singh A K, et al. An explainable deep machine vision framework for plant stress phenotyping[J]. Proceedings of the National Academy of Sciences of the United States of America, 2018, 115(18): 4613-4618.

[25] Ferreira A D S, Freitas D M, Silva G G D, et al. Weed detection in soybean crops using ConvNets[J]. Computers and Electronics in Agriculture, 2017, 143: 314-324.

[26] Ding W, Taylor G. Automatic moth detection from trap images for pest management[J]. Computers and Electronics in Agriculture, 2016, 123: 17-28.

[27] Xiong X, Duan L, Liu L, et al. Panicle-SEG: A robust image segmentation method for rice panicles in the field based on deep learning and superpixel optimization[J]. Plant Methods, 2017, 13(1): 1-15.

[28] 段凌凤,熊雄,刘谦,等. 基于深度全卷积神经网络的大田稻穗分割[J]. 农业工程学报,2018,34(12):202-209. Duan Lingfeng, Xiong Xiong, Liu Qian, et al. Field rice panicles segmentation based on deep full convolutional neural network[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(12): 202-209. (in Chinese with English abstract)

[29] 刘立波,程晓龙,赖军臣. 基于改进全卷积网络的棉田冠层图像分割方法[J]. 农业工程学报,2018,34(12):193-201. Liu Libo, Cheng Xiaolong, Lai Junchen. Segmentation method for cotton canopy image based on improved fully convolutional network model[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(12): 193-201. (in Chinese with English abstract)

[30] 刘镕源,王纪华,杨贵军,等. 冬小麦叶面积指数地面测量方法的比较[J]. 农业工程学报,2011,27(3):220-224. Liu Rongyuan, Wang Jihua, Yang Guijun, et al. Comparison of ground-based LAI measuring methods on winter wheat[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2011, 27(3): 220-224. (in Chinese with English abstract)

[31] Baresel J P, Rischbeck P, Hu Y, et al. Use of a digital camera as alternative method for non-destructive detection of the leaf chlorophyll content and the nitrogen nutrition status in wheat[J]. Computers and Electronics in Agriculture, 2017, 140: 25-33.

[32] Ma J, Li Y, Du K, et al. Estimating above ground biomass of winter wheat at early growth stages using digital images and deep convolutional neural network[J]. European Journal of Agronomy, 2019, 103: 117-129.

[33] Ma J, Du K, Zheng F, et al. A recognition method for cucumber diseases using leaf symptom images based on deep convolutional neural network[J]. Computers and Electronics in Agriculture, 2018, 154: 18-24.

Estimating growth related traits of winter wheat at seedling stages based on RGB images and convolutional neural network

Ma Juncheng1, Liu Hongjie2, Zheng Feixiang1, Du Keming1※, Zhang Lingxian3, Hu Xin2, Sun Zhongfu1

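(1. Institute of Environment and Sustainable Development in Agriculture, Chinese Academy of Agricultural Sciences, Beijing 100081, China; 2. Wheat Research Institute, Shangqiu Academy of Agriculture and Forestry Sciences, Shangqiu 476000, China; 3. College of Information and Electrical Engineering, China Agricultural University, Beijing 100083, China)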

Leaf area index (LAI) and above ground biomass (AGB) are two critical traits indicating the growth of winter wheat. Currently, non-destructive methods for measuring LAI and AGB are limited in that they are susceptible to environmental noise and depend heavily on manually designed features. In this study, an easy-to-use method for estimating growth-related traits of winter wheat at early growth stages was proposed, using digital images captured under field conditions and a convolutional neural network (CNN). RGB images of the winter wheat canopy in 12 plots were captured at the field station of Shangqiu Academy of Agriculture and Forestry Sciences, Henan, China. The canopy images were captured with a low-cost camera at the early growth stages. Using these canopy images as input, a CNN structure suitable for estimating growth-related traits was explored and then trained to learn the relationship between the canopy images and the corresponding growth-related traits. With the trained CNN, LAI and AGB of winter wheat at early growth stages were estimated. To provide a comparison for the CNN, conventionally adopted methods for estimating LAI and AGB were used in conjunction with a collection of color and texture feature extraction techniques. The conventional methods included a linear regression model using canopy cover as the predictor variable (LR-CC), random forest (RF) and support vector machine regression (SVR). Because the canopy images were captured at early growth stages, they contained pixels representing non-vegetation elements such as soil. It was therefore necessary to segment the vegetation before feature extraction for the compared methods. The segmentation was performed with Canopeo. Linear regression between estimated and measured values was used to compare the accuracy of the methods. The normalized root mean square error (NRMSE) and the coefficient of determination (R²) were used as the criteria for model evaluation. The results showed that the CNN outperformed the compared methods on both metrics. Strong correlations were observed between the measured traits and those estimated by the CNN. For LAI, R² was 0.825 6 and NRMSE was 23.33%; for AGB, R² was 0.791 7 and NRMSE was 24.37%. Compared with the other methods, the CNN was a more direct method for AGB and LAI estimation. Image segmentation of vegetation was not necessary because the CNN was able to use the important features to estimate AGB and LAI and ignore the unimportant ones, which both reduced the computational cost and increased the efficiency of the estimation. In contrast, the performance of the compared methods depended greatly on the results of image segmentation, since accurate segmentation provides accurate data for feature extraction. However, canopy images captured under real field conditions suffer from uneven illumination and complicated backgrounds, which makes robust image segmentation of vegetation a major challenge. The study showed that robust estimation of AGB and LAI of winter wheat at early growth stages can be achieved with a CNN, which can support growth monitoring and field management of winter wheat.

crops; growth; parameter estimation; winter wheat; seedling stages; leaf area index; above ground biomass; convolutional neural network

2018-09-27

2019-01-17

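National Natural Science Foundation of China (31801264); National Key Research and Development Program of China (2016YFD0300606 and 2017YFD0300402)

Ma Juncheng, Assistant Researcher, PhD, mainly engaged in research on computer-vision-based crop information acquisition and analysis. Email: majuncheng@caas.cn

Du Keming, Assistant Researcher, PhD, mainly engaged in research on the agricultural Internet of Things. Email: dukeming@caas.cn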

10.11975/j.issn.1002-6819.2019.05.022

S512.1+1;TP391.41

A

1002-6819(2019)-05-0183-07

马浚诚,刘红杰,郑飞翔,杜克明,张领先,胡 新,孙忠富. 基于可见光图像和卷积神经网络的冬小麦苗期长势参数估算[J]. 农业工程学报,2019,35(5):183-189.doi:10.11975/j.issn.1002-6819.2019.05.022 http://www.tcsae.org

Ma Juncheng, Liu Hongjie, Zheng Feixiang, Du Keming, Zhang Lingxian, Hu Xin, Sun Zhongfu. Estimating growth related traits of winter wheat at seedling stages based on RGB images and convolutional neural network [J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(5): 183-189. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2019.05.022 http://www.tcsae.org