Software defect prevention based on human error theories

2017-11-20FuqunHUANGBinLIU

Fuqun HUANG,Bin LIU

aInstitute for Dependability Engineering,Seattle,WA 98115,USA

bSchool of Reliability and System Engineering,Beihang University,Beijing 100083,China

Software defect prevention based on human error theories

Fuqun HUANGa,*,Bin LIUb

aInstitute for Dependability Engineering,Seattle,WA 98115,USA

bSchool of Reliability and System Engineering,Beihang University,Beijing 100083,China

*Corresponding author.

E-mail address:huangfuqun@gmail.com(F.HUANG).

Peer review under responsibility of Editorial Committee of CJA.

Production and hosting by Elsevier

http://dx.doi.org/10.1016/j.cja.2017.03.005

1000-9361©2017 Chinese Society of Aeronautics and Astronautics.Production and hosting by Elsevier Ltd.

This is an open access article under the CC BY-NC-ND license(http://creativecommons.org/licenses/by-nc-nd/4.0/).

Software defect prevention is an important way to reduce the defect introduction rate.As the primary cause of software defects,human error can be the key to understanding and preventing software defects.This paper proposes a defect prevention approach based on human error mechanisms:DPeHE.The approach includes both knowledge and regulation training in human error prevention.Knowledge training provides programmers with explicit knowledge on why programmers commit errors,what kinds of errors tend to be committed under different circumstances,and how these errors can be prevented.Regulation training further helps programmers to promote the awareness and ability to prevent human errors through practice.The practice is facilitated by a problem solving checklist and a root cause identification checklist.This paper provides a systematic framework that integrates knowledge across disciplines,e.g.,cognitive science,software psychology and software engineering to defend against human errors in software development.Furthermore,we applied this approach in an international company at CMM Level 5 and a software development institution at CMM Level 1 in the Chinese Aviation Industry.The application cases show that the approach is feasible and effective in promoting developers’ability to prevent software defects,independent of process maturity levels.

©2017 Chinese Society of Aeronautics and Astronautics.Production and hosting by Elsevier Ltd.This is an open access article under the CC BY-NC-ND license(http://creativecommons.org/licenses/by-nc-nd/4.0/).

Human factor;

Human error;

Programming;

Root cause analysis;

Software defect prevention;Software design;

Software quality;

Software psychology

1.Introduction

With the increasing dependence on software to realize complex functions in the modern aeronautic systems,software has become the major determinant of the systems’reliability and safety.1Software defect prevention(DP)is an important strategy to improve software quality and reduce development costs by preventing defects from reoccurring.2,3Defect prevention can be employed at the early stages of software development to reduce defect introduction rates and thus cut down the effort of defect detection and fixing.4

Existing DP emphasizes software process improvement based on learning from historic failures.It proceeds as a specialized team chooses defect samples from a database,identifies causes of these defects and then produces strategies of process improvement for the current project.2,5–9This paradigm works effectively in preventing defects that are caused by process f l aws,e.g.insufficient requirement tracking.However,software developers’cognitive failures are insufficiently addressed.10

An approach for preventing software developers’cognitive failures is urgently required in the Chinese Aviation Industry.One of the authors’previous studies,11which investigated a total of 3747 defects from 70 software systems developed by 29 Chinese aviation organizations,shows that individual cognitive failures cause 87%of the severe residual defects.This study also shows that the process maturity levels of most software development institutions in the Chinese aviation industry are below CMM Level 3;under this situation,the conventional DP process is hard to implement due to the lack of root-cause data and a systematic root-cause taxonomy.10Cognitive errors are the primary cause of software defects,12since software is the representation of human thoughts.13,14If software developers understand why,how,and when they are prone to commit errors,they could prevent errors more effectively.15It is scientifically interesting and an urgent demand to promote software developers’cognitive abilities to prevent software defects.

This paper proposes a human-centered defect prevention approach to improve software developers’cognitive abilities with respect to human error prevention.This approach emphasizes learning from software errors based on their underlying causal mechanisms.For convenience,we name this approach brief l y as ‘defect prevention based on human error theories”(DPeHE).In the next section,we review the concepts and related work.In Section 3,we present the design of the approach.Section 4 presents two application cases.Section 5 presents the discussions and conclusion.

2.Related work

2.1.Traditional defect prevention

The DP processes reported in the existing literature are generally similar to the traditional defect prevention process2or the corresponding defect causal analysis process(D.N.Card,2006).The typical process of conventional DP includes the following major steps6:(1)select defect samples from historical database,(2)hold causal analysis meeting to identify defect causes and develop prevention action proposals,and(3)implement preventative actions.Therefore,to implement DP,three resource elements are required:(1)a database and tool for defect sample selection and action tracking,(2)a cause classification method for identifying root causes,and(3)an action team with good expertise of root cause analysis.DP has shown to be effective in preventing software defects,especially from a perspective of process improvement.4,5Despite the great progress made in past years,some aspects require further study.

The key of conventional defect prevention is how to identify root causes.However,this process strongly depends on expert experience.Root cause taxonomies are proposed to aid root cause analysis,which are summarized in Table 1.Unfortunately,these taxonomies seem too abstract to be helpful for those organizations with little experience.Root causes are generally classified into four categories:method,people,tool,and requirement;detailed causes are analyzed by brainstorming with cause-effect diagrams.7–9Generally,brainstorming is a good method for gathering new ideas,but it is not asreliable for repeated use.For example,defect causes analyzed by different people may fall into different categories,be overlapped or even be omitted.This problem reduces the possibility of recurring good effects across different organizations.It is no accident that defect causal analysis is often misunderstood and misapplied in the software industry.7

Table 1 Summary of existing root cause classifications.

The other problem is how to choose defect samples.The common method is choosing according to defect type,which is generally defined by the orthogonal defect classification(ODC).17However,there is a lack of scientific criteria to help people make decisions about what historical defects have heuristic meanings for the current project.Again,this process strongly depends on the experience of the team members.As a result,it is hard for those organizations with scarce data to implement DP,as there are hardly any defect samples for reference.

In the authors’previous study,10a structural taxonomy of root causes has been recently proposed to address these problems.More efforts are required to provide software developers with explicit knowledge strategies,as well as a training program to improve their awareness and abilities to prevent human errors.DPeHE is proposed to address these needs.

2.2.Human errors

Human errors have been studied along with developments in cognitive psychology since the 1970s.In terms of fundamental theories,Reason’s work(1990)is regarded as the most systematic theory that is widely accepted and applied in safety critical domains.However,these theories tend to be ‘theoretical and less analytical”.18,19Only after being adapted and integrated to the application contexts can these basic human error modes be very powerful in practice.

In nuclear power industry,human errors are mainly addressed in human reliability analysis(HRA),which is employed as a part of probabilistic risk assessment(PRA).20,21The main aim of these approaches is to assess error probability,as part of system risk probability.Well-known HRAs include technique for human error rate prediction(THERP),22Human error assessment and reduction technique(HEART)23,and cognitive reliability and error analysis method(CREAM).24The common idea of these HRAs is to quantify human error probability(HEP)by assessing the factors that influence human errors.Such factors are called performance shaping factorsin THERP,error-producing conditions(EPCs)in HEART,error-forcing context(EFC)in ATHEANA,and common performance conditions(CPCs)in CREAM.They can generally be classified into four categories:human,task,system,and environment.25The differences between various techniques lie in what factors to choose,and how to model the relationships between the influence factors and HEPs.

In aviation domain,studies have focused on identifying factors that link to pilot-error accidents through accident investigations.These approaches aim to discover causes for the accidents and to derive prevention strategies.Such approaches pertain to retrospective analysis by the assistance of error classifications,26such as the technique for the retrospective analysis of cognitive errors(TRACER-Lite)and the human factor analysis and classification system(HFACS).27The outcome of these prospective analyses can then be utilized for generating possible accidental scenarios useful for error prevention and management.Recently,with the realization that the effects of external error management approaches(e.g.,error classification schemes,error prevention through interface design and error tolerant systems)are limited in complex situations,Kontogiannis and Malakis argue that28proactive and selfmonitoring strategies are also important,which should become part of error management training in aviation and air traffic control.

Approaches in Aviation have been extended to other transportation domains,such as railway,roadway and shipping transportation,e.g.,HFACS was applied to conduct investigations into train accidents29and shipping accidents.30Though subjects involved in these domains are operators of vehicles,the contexts are different.As a result,domain specific error taxonomies were developed.31–33Furthermore,human error approaches in the above areas have also contributed to the understanding and management of human errors in health care domain.34,35However,notwithstanding the many similarities,important context difference sare required to be addressed.36

Although human error research has a long tradition in these safety-critical domains,they are quite domain-specific.The application contexts of the existing approaches are greatly different from that of software development,which make these approaches hardly applicable in the latter context.

Errors examined in the above areas are generally machine operation errors,whereas software development errors are design errors.Skill-based or rule-based performance constitutes a large proportion of machine operations.Errors at these two levels are much easier to be predicted37and prevented by external devices,e.g.,improving operation procedures and designing a better human-machine interface.In comparison,software development activities mainly involve problem solving performance on ill-defined problems,38,39which pertain to knowledge-based behavior.Knowledge-based errors are difficult to be anticipated and almost impossible to be prevented by external devices.Software development errors are strongly dependent on the developer’s internal ability to monitor and diagnose his/her own cognitive process.

In the computer science domain,Ko and Myers40first introduced Reason’s theories to observe programmers’performance and identify causes for software errors,with a very positive effect found in improving programming system design.Other studies relevant to human errors in the programming context concentrate on error handling of application users41,42and human computer interface design.43–45To the best of the authors’knowledge,little research focuses on software defect prevention by improving programmers’ability to self-regulate.

3.DPeHE approach

3.1.DPeHE framework

Generally,software defects are fixed and forgotten.Few developers explicitly know about the cognitive mechanisms underlying erroneous behavior.DPeHE provides a process to understand these mechanisms,learn from historic errors in a systematic way,and improve developers’awareness and ability to prevent human errors.

The framework of DPeHE is based on meta-cognitive theories.Meta-cognition refers to the ‘cognition about cognition”.46Meta-cognition includes two essential components47,48:(1)knowledge of cognition,referring to knowledge about cognitive processes and knowledge that can be used to control cognitive processes and,(2)regulation of cognition,as activities regarding self-regulatory mechanisms during an ongoing attempt to learn or solve problems,including planning activities,monitoring activities,and evaluating outcomes.

参照组行CT检查,患者仰卧于病床上,双臂向上举,运用GE16排螺旋CT,扫面患者髋臼上缘至股骨颈处,设置扫描层厚度为4毫米,间隔设置为5毫米,扫描患者病灶处。实验组行核磁共振检查,仪器运用飞利浦1.5T,患者仰卧,在体线圈旋转,获取图像。

Meta-cognition helps people to perform cognitive tasks more effectively.It is a salient feature of high-performance individuals in learning,problem solving,and engineering design.49–52There is a great deal of research demonstrating that those who are flexible and perseverant in problem solving,and who consciously apply their intellectual skills,are those who possess well-developed meta-cognitive abilities.49Since meta-cognition involves monitoring and regulating cognitive activities,it is also assumed to be closely related to cognitive failures.53,54

Researchers use meta-cognition training to improve cognitive performance,e.g.,learning ability,55,56math problem solving53,57–59and engineering design.52,60Meta-cognitive knowledge and regulation can be improved using a variety of instructional strategies.For example,Schoenfeld59elaborated a regulatory strategy consisting of heuristics to be used in mathematical problem solving.He divided problem solving into three stages:analysis,exploration,and verification.For example,strategies used in problem analysis include drawing a diagram,examining special cases of the problem,and trying to simplify the problem.Schraw61proposed a strategy evaluation matrix(SEM)in classroom settings,which includes strategies of skimming,slowing down,activating prior knowledge,mental integration and diagrams.

Based on the above evidence,it is reasonable to believe that providing meta-cognitive training for error prevention can be a helpful way of improving the software developers’ability to prevent errors,since it is widely accepted that proper metacognitive training can significantly improve problem solving performance.53,58,60,62,63Meta-cognition theory provides us with a reliable DPeHE framework from a theoretical view-point.However,only after directing the knowledge and regulations at the specific purpose(human error prevention)and the specific context(software development)does the approach become practical.In the DPeHE,the meta-cognitive knowledge concerns the cognitive process of software problem solving,such as why software developers commit errors,what kinds of errors they tend to commit under certain scenarios,and what strategies they can use.DPeHE regulation enables developers to become aware of error-prone scenarios when solving software design problems,to employ strategies to avoid committing errors,and to make appropriate evaluations of the outcomes.

The DPEHE framework is shown in Fig.1.The DPeHE consists of three stages.The first stage is called ‘knowledge training”.Software developers are trained to know why humans commit errors,what kinds of errors they might commit during programming,under what scenarios humans tend to commit errors,and how to prevent human errors during programming.

The second stage is called ‘regulation training”.Regulation training is the process to help software developers promote the awareness and self-regulation of human error prevention.Two DPeHE checklists are distributed to software developers.Before and during the course of programming tasks,software developers are asked to plan and monitor their problem solving processes assisted by the problem solving regulation list.After defects are found by debugging or testing,developers are required to identify causes for each defect they introduced,assisted by the root cause identification checklist.This stage continues until developers reflects that they can be consciously aware of some symptoms under error-prone situations.

At the final stage,software developers have acquired the ability to self-regulate under error-prone situations.They become consciously aware of error-prone situations and use strategies to prevent errors.Developers are encouraged to improve such abilities continuously with the accumulation of experience.

3.2.Knowledge training

Software development is a complex and flexible cognitive activity.Systematic knowledge about human errors is the basis to prevent errors;moreover,the amount of such knowledge deposited in programmers’long-term memory is an important determinant of their meta-cognitive monitoring ability.64Such systematic knowledge should contain not only error modes,but also the underlying process that governs human thoughts and actions.Understanding the cognitive mechanisms involved in software development can be helpful for comprehending error mechanisms,as ‘correct performance and systematic errors are two sides of the same coin”.37

Therefore,the knowledge training of DPeHE contains two aspects:cognitive model of the software design and human errors.The cognitive model of software design,which provides the cognitive mechanism that governs human errors,is the other side of the ‘coin”.Knowledge about human errors are designed in the frame of ‘why,when,what and how”:why and when software developers tend to commit errors,what patterns of errors they might commit under different scenarios,and ‘how”to prevent errors by using heuristic strategies.

Though software developers solve software problems all the time,few of them are consciously aware of their thought processes because many cognitive activities happen unconsciously.Before software developers understand error mechanisms,they should first explicitly know the general cognitive process involved in software development.

In software development,performance includes both routine and designing activities.Routine activities are those which do not require efforts of problem solving,such as typing or pressing a button in the programming environment to compile the program.However,the main part of software development is designing and coding.What makes coding different from design is that coding has some implementation characteristics such as using a familiar rule to define a variable.However,coding is considered to be routine problem solving as it also has problem-solving features,whereas software design is considered to be non-routine problem solving.65

We generalized these activities as an integrated cognitive model of software development(Fig.2).The model was built by integrating the most significant research results obtained in software psychology,such as Brooks66,Adelson and Soloway67,De´tienne38and Visser.65The cognitive performance of software development contains two main stages:problem representation and solution generation.

Problem representation38,39involves understanding the exterior representation material,e.g.software requirement specification.Through this process,the information embedded in the exterior document is converted to the developer’s mental model.68The accuracy of the mental model is greatly affected by the format of the exterior representation material12,39as well as the developer’s knowledge base.14,38,65–67

Solution generation contains the iterative processes of schema retrieving and matching,67,69solution evaluation,67and new schema construction through learning.70Each of these process is related to different error patterns.10,71All these processes are constrained by the limited working memory capacity and meta-cognition,70which involves monitoring and regulating the cognitive processes.

3.2.2.Why human commits errors:Human error mechanism

Errors are the manifestations of brain bottlenecks.37The first bottleneck is the capacity limitation of working memory,which leads to errors in ‘the attentional mode”;the other bottleneck lies in the human knowledge base,which leads to errors in ‘the schematic control mode”.37

(1)Attentional mode

Errors in the attentional mode originate from the limited capacity of working memory.Working memory is the system that actively holds information in the mind to do verbal and non-verbal tasks such as reasoning and comprehension,and to make it available for further information processing.Working memory is characterized as limited in terms of capacity and the time to sustain information.

The limitation of working memory confers the important benefits of two cognitive mechanisms: ‘selectivity” of inputs and ‘automatic processing” of information.In the real world,information is extremely abundant.With limited attention resource,humans only pay attention to several features of information.In such selective processes,if one has allocated attention to wrong features or ignored some important features,errors occur.Meanwhile,humans tend to ‘save” attention in familiar situations,leaving the task in automatic processing.In the course of such automatic processing,if one has omitted proper checks or performed mistimed checks,errors may occur.

Another group of error modes is also originated from working memory limitation.If one’s working memory is overloaded that the available attention is not sufficient for the task on hand,errors are prone to occur.

‘Working memory overload” is a cognitive state,which appears when cognitive load exceeds the individual’s working memory capacity.A diagram(Fig.3)is extracted from Schnotz and Ku¨rschner’s cognitive load theory70to visually demonstrate the main mechanism of working memory overload.Working memory load consists of three kinds of loads:intrinsic load,extraneous load and germane load.70Intrinsic load is imposed by the intrinsic aspects of a task.It is due to the natural complexity of the information that must be processed.However,complexity varies with subjects’proficiency levels.With the practice level enhanced,experts can ‘chunk”their schema12,14,69–A large number of interacting elements for one learner may only be a single element for another learner with better expertise.Accordingly,intrinsic load can be determined only with reference to a particular level of expertise.Extraneous load is caused entirely by the format of the problem representation material,e.g.,requirement specifications.Germane load is cognitive load caused by cognitive activities in working memory.It aims at intentional learning and goes beyond simple task performance,which is caused when the subject is engaged in schemata construction.Activities such as restructuring of problem representations and metacognitive processes are typical cognitive activities that increase germane load.

In summary,working memory overload is a mental state when working memory load exceeds the limitation of working memory capacity.Under this situation,people are liable to commit errors.Error modes called ‘informational overload”,‘workspace limitations” and ‘problems with complexity”by Reason37are all associated with working memory overload.

(2)Schematic control mode

The generic cognitive structure underlying all aspects of human knowledge and skill is schema,which was described as ‘an active organization of past reactions,or of past experiences,which is supposed to be operating in any well-adapted organic response”.37Through using schemata,most everyday situations do not require effortful processing–automatic processing is all that is required.People can quickly organize new perceptions into schemata and act effectively without effort.Under most circumstances,the ‘default” behavior is sufficient;however,under exceptional or novel circumstances,they may lead to errors.Reason calls this ‘the schematic control mode”.

In the ‘schematic control mode”,one tends to ignore countersigns indicating that the previous rules are inapplicable to the current situation,especially on the first occasion when the individual encounters a significant exception to a general rule.Humans tend to use those rules that have been successfully and frequently used in past.When it comes to solution construction,one tends to f i t his/her previous experience.This tendency manifests itself as ‘illusory correlation” and ‘halo effects”.37When it comes to solution checking,one tends to seek evidence to verify his/her hypotheses rather than to refute the hypotheses (e.g., ‘confirmation bias” and ‘over confidence”).Furthermore,humans tend to believe that all possible courses of an action have been checked,when in fact very few have been considered(‘biased review”).37

3.2.3.What and when errors are committed:Error mode base

Though human errors appear in different ‘guises” in different contexts,they take a limited number of underlying modes.37Understanding such recurring error modes is essential to improving the cognitive ability of error prevention.Therefore,we built an error mode base that contains common error modes committed by software developers.

Being the basis for DPeHE training,the error modes included in the base must be very reliable.Therefore,the error modes included in DPeHE are those that have been widely validated and accepted,e.g.,classical modes summarized by Reason,37‘post completion error”,72lack of knowledge,10and problem representation errors.12,73,74Furthermore,we adapted,tailored,and reorganized these modes to make a balance between usability,consistency and completeness.For instance, ‘perceptual confusions” proposed by Reason were not included in the base as we think that this error mode rarely occurs in software development.‘Countersigns and nonsigns”and ‘rule strength”37share the same underlying mechanism,‘apply strong but now wrong rules”mode,so they are integrated.Error modes such as ‘repetitions”37are excluded as they are hard to understand by engineers.

Furthermore,to enable the error modes to be practically used,explanations,scenarios and programming error examples are provided with each error mode(Table 2).These scenarios and examples demonstrate how the general psychological error modes manifest themselves as programming errors.Knowing such scenarios is essential to building conscious awareness of error-prone situations.The examples were obtained from the controlled experiments12,75or historical data in the Chinese aviation industry.11They are recurrent error patterns committed by different people in the same ways and governed by the same human error mechanisms.

3.2.4.How to prevent error:Strategy heuristics

Based on the knowledge about the cognitive mechanisms of problem solving and error committing,we then provide strategies to guide software developers to prevent errors.Strategy heuristics have proven to be an effective and idiomatical way to enhance self-regulation in education domain.59,76

In meta-cognitive training,heuristics are proposed as powerful general strategies to support learner in selecting the right strategy to solve a problem.General heuristics can be applied in a variety of problems;they are usually content-free and thus applied across many different situations.Researchers agree that students who know about the different kinds of heuristics will be more likely to use them when confronting different classroom tasks.59,77,78

Schoenfeld59and Schraw61have done a lot of work on meta-cognitive regulatory strategies in problem solving education.Schoenfeld59elaborated a regulatory strategy teaching method consisting of heuristics to be used in the stages of analysis,exploration and verification.Strategies used in problem analysis include drawing a diagram,examining special cases of the problem,and simplifying the problem.Strategies used in exploration include replacing conditions by equivalent ones,decomposing the problem in sub-goals,and looking for a related problem where the solution method may be useful with a view to solving the new problem.Strategies used in verification contain checking whether all pertinent data are used,and checking whether the solution can be obtained differently.

Schraw61has proposed a strategy evaluation matrix(SEM)in classroom settings,which includes strategies of skim,slow down,activate prior knowledge,mental integration and diagrams.SEMs were found to be useful by teachers who used them.61One strength of SEMs is that they promote strategy use(i.e.,a cognitive skill),which is known to significantly improve performance.A second strength is that SEMs promote explicit meta-cognitive awareness,even among younger students.A third strength is that SEMs encourage students to actively construct knowledge about how,when,and where to use strategies.

Integrating the above strategies,59,61we design the error mechanisms described in Table 2 with the problem solving model described in Section 3.2.1,and a list of strategy heuris-tics shown in Table 3.For instance,the strategy ‘slow down”is borrowed from Schraw61,while ‘searching countersigns” is proposed based on mechanisms of the targeted error mode:‘apply ‘strong but now wrong’rules”described in Table 2.To prevent this type of errors,one needs to delicately allocate attention to searching the signs that may contradict to the retrieved strong schema.

The strategies are arranged according to the problemsolving stages under a systematic framework of ‘when to use,how to use,and why to use”.We divide the problemsolving process into three stages:problem representation,solution generation and solution evaluation. ‘When to use”describes the error-prone situations under which the strategy applies.The classification on problem-solving stages combined with the error-prone situations aim to promote one’s awareness of error prevention. ‘How to use”,which describes the ways to use the strategies,is proposed for improving one’s self-regulation under error-prone situations. ‘Why to use”explains the researchers’motivation underlying the proposed strategies–error modes that the strategies are used to prevent.

Table 3 Strategy heuristics for error prevention.

3.3.Regulation training

Knowing error mechanisms and prevention strategies does not mean that software developers will use them in practice.Therefore,simply providing knowledge without experience or vice versa does not seem to be sufficient for the development of cognitive control.Only when a software developer is consciously aware of the error-prone situations and applies the strategies he/she learned in real programming practice,has the error-prevention ability been obtained.

Self-questioning is an effective way to promote cognitive regulation for self-directed learners and it is widely used in various domains.79For example,Schraw61proposed a regulatory checklist(RC),which provides a heuristic with three main categories:planning,monitoring,and evaluating.Questions like‘what is my goal?” at the planning stage, ‘am I reaching my goals?” in monitoring and ‘what didn’t work” are proposed to learners.Such self-questioning strategies can guide the learner’s performance before,during,and after task performance,thus improve his/her self-awareness and control over thinking and thereby improve performance.

We designed two regulation checklists to promote the ability to prevent errors.One checklist called problem solving regulation checklist(PSRC)is used before and in the course of software development.The other checklist,root cause identif ication checklist(RCIC),is used after defects are found in debugging or testing.

3.3.1.Problem-solving regulation checklist(PSRC)

The awareness of the problem-solving process is essential to preventing errors.Different stages of problem solving involve different mental activities along with different error modes.The problem regulation checklist is designed to help developers monitor their problem-solving process and promote awareness under error-prone scenarios.The checklist can be used before and during the course of the developers’task(Table 4).

Each scenario is relevant to a specific error mode,shown in the right column of Table 4.The PSRC and the strategy heuristics in Table 3 are closely related:error prevention is logically part of problem solving.Each error mode in Table 4 can be tracked to corresponding strategy heuristics in Table 3.PSRC focuses on arousing an individual’s awareness of error-prone scenarios during his or her problem solving in a real task,whereas strategy heuristics focus on what strategies can be used when the individual is aware of the error-prone scenarios.The strategy heuristics can be presented to individuals in the knowledge training report beforehand.

3.3.2.Root cause identification checklist(RCIC)

The evaluation of the outcomes is also important for the development of meta-cognitive regulation.47Learners who observe and evaluate their performance accurately may react appropriately by keeping and/or changing their study strategies to achieve the goal of,for example,maximizing their grade in a course.Thus,it is desirable to engage learners in activities that will help them assess themselves explicitly.56

For software development,generally,not all errors can be prevented during the problem-solving processes.Some errors may be introduced into the program and reveal themselves as defects,which may be found in debugging or testing.It is desirable to engage developers to assess and explain explicitly why these defects are introduced and what types of errors they have committed.Through such self-checking process,they can enhance their awareness of error committing mechanism and prevention heuristics.

In order to assist developers in identifying their defect causes,a retrospective checklist designed and validated in a previous study10was provided.In addition to human error modes explained above,the checklist integrated other factors leading to software defects such as process and tools.

3.4.Application criteria

The above sections present the DPeHE approach(compared to a Class in object-oriented programming):Section 3.1 gives an overview of the approach,and Sections 3.2 and 3.3 present the major processes and materials required to apply the approach.The above sections provide the essential content that should be used in DPhHE training,and meanwhile,DPhHE retains a trainer’s flexibility to conduct the training in the formats he/she favors(e.g.,the detailed forms of the slides used in knowledge training can be different from trainer to trainer)in a certain context.Section 4 will illustrate how to use the approach in a certain context(compared to an Object that is an initiation of the Class)in our case studies.

DPeHE can be commonly used by any software developers or organizations who wish to prevent software development errors,since DPeHE focuses on promoting individual softwaredevelopers’ability to prevent human errors but does not depend on the maturity level of software development process.

Table 4 Sample of problem-solving regulation checklist.

DPeHE does require the users to be trained with interdisciplinary knowledge presented in Sections 3.1 and 3.2.This training would require the trainers to possess interdisciplinary background in software engineering and cognitive psychology,as well as the ability to link the abovematerials to softwaredevelopers’programming practice in an easy-to-understand way.

4.Case studies

We conducted a case study to get feedback from the industry users.The case study is the most suitable paradigm to investigate DPeHE.It examines phenomena in its natural context,providing a deeper understanding of the phenomena under the study.A case study is particularly suitable when the analytical research paradigm is not sufficient for investigating complex real life issues,involving humans and their interactions with technology.80

In this study,we were concerned with whether an ordinary company in the Chinese aviation industry can apply DPeHE.Therefore,we conducted the study in two extreme cases.One case was conducted in an international organization at CMM Level 5,where the subjects had already used the traditional DP.The other case was conducted at a software development institution in the Chinese Aviation Industry at CMM Level 1,where the subjects had not known about the concept of defect prevention.

The case study proceeded with a mixed collection of quantitative and qualitative data.A combination of qualitative and quantitative data often provides a better understanding of the studied phenomenon.80Quantitative data were collected through the participants’assessment and defect tracking in order to explore the effectiveness of DPeHE.Qualitative data were collected through open questions in order to better understand how DPeHE affected programmers’ability to prevent errors.

4.1.Participants

Two groups of software developing engineers participated in the study.Participants in Group A came from a development center of a large international company whose process maturity was at CMM Level 5.The participants in Group B were from a software development institution in the Chinese aviation industry whose process maturity was at CMM Level 1.The institutions preferred to remain anonymous for confidentiality.

Group A consisted of eight software developers:five had more than 5 years of experience in software development,while three had 1–4 years of experience.The average experience of participants in Group A was 5.4 years.Group B included six developers,three with more than 5 years of experience and three with less than 5 years of experience.The average experience of participants in Group B was 4.5 years.

4.2.DPeHE application

The two application cases were conducted separately by following the same steps with the same material.Each group of training consisted of two stages:Knowledge training and regulation training.The knowledge training was conducted in the form of an interactive report.For both groups,the reports followed the same steps and were presented by the first author with the same content.The presentation was organized as follows:

·Introduction,presenting the significance of defect prevention and emphasizing that human errors were the critical causes of software defects with the material abstracted from Section 1.To capture the interests of participants,the reporter played the video of the selective attention test designed by Simons and Chabris available from Youtube.81

·Framework of DPeHE,presenting the concept and the process model of DPeHE.The aim of this step was to build a whole picture of the approach in the audiences’minds.The material was abstracted from Section 3.1.

·The thinking process of software development,presenting the cognitive model of software development.The material was abstracted from Section 3.2.1.

·Human error mechanisms,presenting why humans commit errors(with the material from Section 3.2.2),and what and when errors are committed(with the material from Section 3.2.3).This was an essential step to enable the audiences to understand human error mechanisms.To involve the participants in a deeper understanding of human error mechanisms,the best way is making them experience human error-proneness.Two psychological tests were used for this purpose.A revised working memory capacity test(operation span)82was carried out,as the interactive part of the report,to allow the audiences to experience the limits of working memory capacity.Furthermore,an adapted Wason’s confirmation bias test was conducted to witness the error mode of ‘confirmation bias” (Appendix A).

·How to prevent human errors in software development,presenting the strategy heuristics for error prevention with material from Section 3.2.4.

·Questions and discussions.Participants were encouraged to ask questions about the approach.Then,the examples of programming defect patterns in Table 2 were provided to the participants.These defects were called ‘coincident faults”collected in Ref.12.A coincident fault was a fault introduced by multiple programmers at the same place in the same way.The participants were asked to identify the possible error modes underlying these coincident faults by choosing error modes from the first column in Table 2.The participants were encouraged to discuss with the reporters.This step was designed to make sure that the audiences had understood the knowledge presented in the previous steps.

After the knowledge training,we provided the PSRC(Section 3.3.1)and RCIC(Section 3.3.2)to the participants.The developers were asked to use PSRC to monitor and regulate their cognitive activities in the course of software development.After defects were detected by debugging or independent tests,software developers were required to use RCIC to identify root causes.

4.3.Participant assessment

4.3.1.Participant assessment data collection

The training program,which included knowledge training and regulation training,lasted for a total of six months.The sub-jective data of this study concerns how participants evaluated DPeHE.Evaluations were obtained using self-assessment questionnaires designed in the form of Likert-scale questions.Though other methods exist to assess meta-cognition(e.g.,on-line computer log f i les76,eye-movement registration83,and think-aloud protocols55),the Likert-scale questionnaire remains the most popular58,84–88given the belief that the ‘inherently subjective nature of meta-cognitive processes”suggests that ‘the individual has the most direct and reliable access to cognitive experiences”.84Therefore,we used selfassessment questionnaires to collect subjective data from the developers.

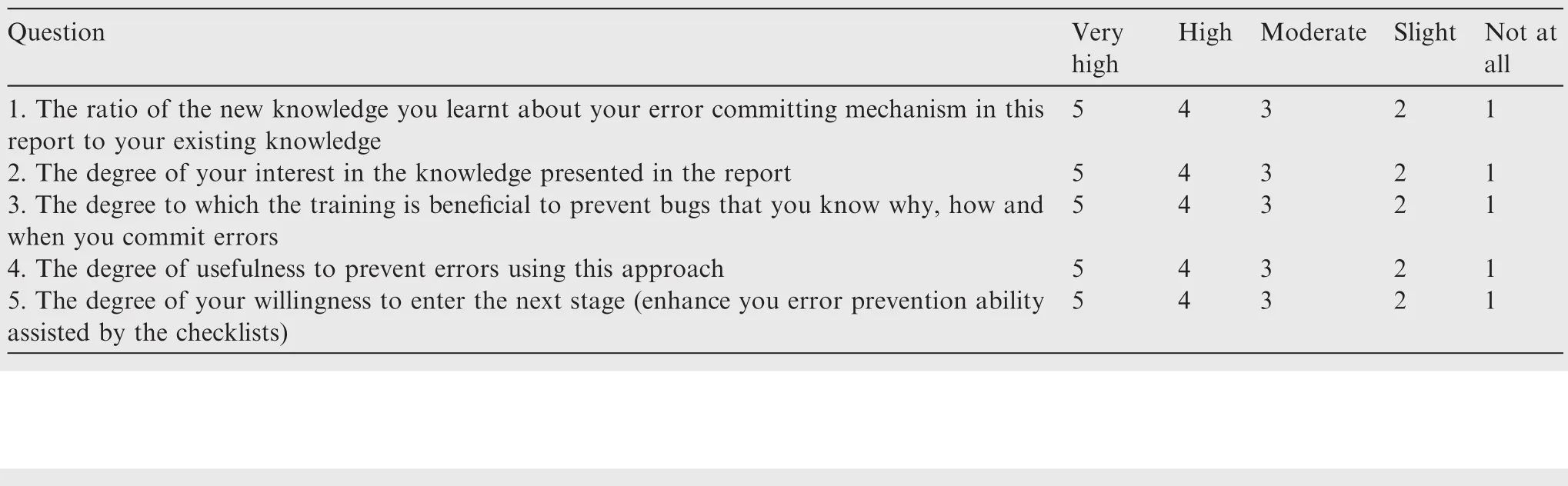

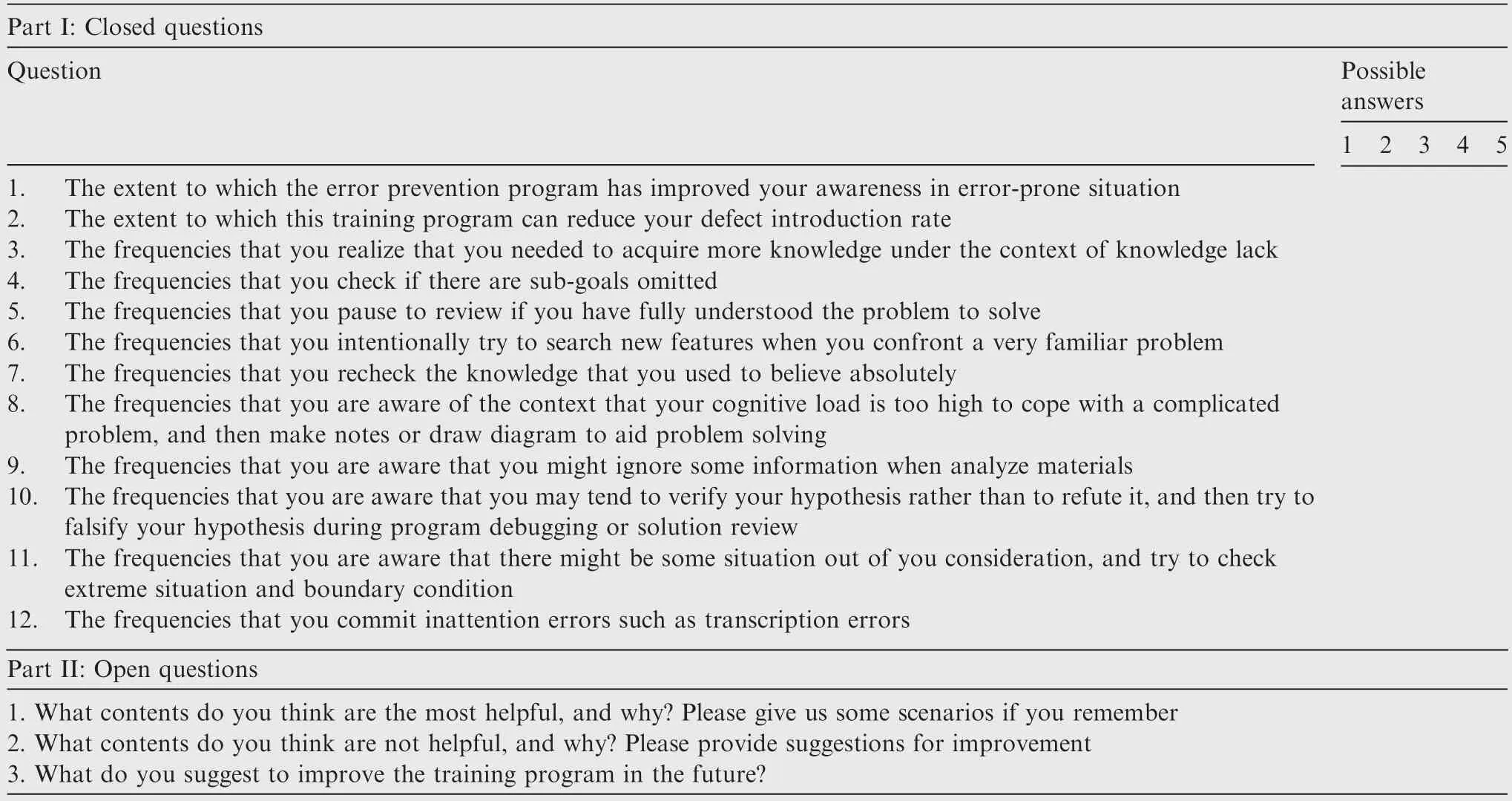

The data collection included two rounds.The first round focused on the evaluation of the knowledge training,which immediately followed the knowledge training report.The questions in this round concerned whether the knowledge and strategies provided by DPeHE were considered useful and interesting by the participants.Appendix B shows a sample of the questions used in the first round.The second round of self-assessment focused on whether participants thought they had made progress after the DPeHE training program.The questionnaire was completed by the participants at the end of six-month training program.A sample of the questionnaire is shown in Appendix C.

4.3.2.Participant assessment results

We were concerned with whether the users considered DPeHE effective.The effectiveness of DPeHE perceived by the users is measured by an average evaluation score in a five point Likert scale,which is one of widely accepted methods for assessing the effectiveness of an approach to promoting users’cognitive ability.58,84–88This effectiveness measure is defined in Eq.(1):

whereiindicates the question number,Ithe total number of questions,jthe subjects’sequence number,Jthe total number of subjects,andSjithe score of the questionigiven by the subjectj.

The results are shown in Table 5,whereKjindicates the mean score of all the questions given by the participantj.From Table 5,we can see that the mean scores given by both groups are above 4 points in both rounds.The results suggest that the participants considered the DPeHE beneficial for enhancing their knowledge and ability associated with error prevention.

Making a comparison between the mean score given by Group A and Group B,we can see that the knowledge training received slightly higher scores from Group A,while no difference was found after the regulation training.This result implies that the approach is not only feasible for organization at high process maturity level(i.e.Group A),but also can be applied by organizations at low process maturity level(i.e.Group B).

Furthermore,the answers to the open questions provided us with more details on the programmers’using experience.These feedbacks provided significant implications for other researchers or project managers to apply DPeHE effectively in other industrial contexts:

(1)The programmers(all of the subjects in the case study)remembered especially well the error modes and related strategies that were enhanced with video or cognitiveerror tests in the training.This implies that video or interactive cognitive tests are very effective in enhancing participants’memory,arousing their interests and motivating them to engage themselves in the training program.Instead of ‘telling” how an error is committed,the video and cognitive-error tests allow the participants to ‘experience” the errors.This is the first strength of DPeHE,which should particularly be emphasized when one applies DPeHE in his/her team contexts.

(2)The key to an effective DPeHE training lies in trans

forming the mechanisms of general human error modes in psychology to programming scenarios and corresponding prevention strategies.DPeHE has successfully linked human error modes to the recurrent software fault patterns found in the authors’previous experiments12,89and the historic database in the Chinese Aviation Industry.11After the DPeHE training,eight subjects reported that they became especially aware of designing extreme testing cases in unit testing.This was associated with the training on the error mode ‘bias review”and corresponding prevention strategies.Five subjects formed a habit of using notebook and breaking big problems into smaller sub-problems to cope with problem complexity.Though the strategy ‘writing down in the notebook”seems obvious,a programmer would not remember nor want to use this strategy if he was simply told by someone: ‘it is a good strategy based on my experience.”The programmers experienced their working memory limitations in the training,and knew why and how this strategy worked,i.e.as a way of externally expanding working memory capacity.Such experience is effective in enabling programmers to use a good strategy continuously.Some subjects also reported the experience related to the ‘post-completion error.” For instance,one subject said that he was impressed with the fact that about half of the programmers in the experiment had committed ‘post-completion error” at the same place in the same way.He often used the‘sub-goal checking” strategy after the training.The evidence provided by these participants indicates the other important strength of DPeHE:linking each human error mode to software defect examples is essential to improving programmers’awareness under error-prone scenarios and using corresponding preventive strategies.

Table 5 Participants’assessment results.

(3)Difficulties exist during the application of DPeHE.Seven subjects reported that DPeHE involves programmers’uncomfortable feelings,since DPeHE requires programmers to reveal their errors and analyze why they committed these errors.Such self-examination requires one’s mental effort and time,and a self-reflection process in conflict of one’s ego.Such obstacles could be even stronger when DPeHE is implemented at the organizational level,in which circumstances programmers are required by other people such as project managers to analyze how they commit errors.

4.4.Defect tracking

4.4.1.Defect data collection

Defects found in independent tests for both groups were tracked as the objective evidence.The data was recorded originally in the organizations’databases.We only needed to use the data to perform an analysis for the measurements defined in this study.

The data of Group A was recorded in a defect management system.It was a mature system containing abundant information such as defect description,defect type,the function component where the defect was located,the time when the function component was built,the time when the defect was found,and when the defect was fixed.

The situation of Group B is a bit different.The defects were in the form of Microsoft Word f i les.However,it did not affect our analysis as all the information we required were available,such as the description of a defect,the component where the defect was located,the size of the component,the time when the defect was found,and when it was fixed.

4.4.2.Defect tracking results

Generally,the environments of different projects can be very diverse.Factors such as project management,technical maturity of the objects,and software development models can greatly affect defect rates.In order to reduce the interferences of these factors,we compare the defects introduced by the team members who received the training of DPeHE with the defects introduced by those who did not,in the same project team.

Even in the same project team,the expertise and tasks for each participant were different.Therefore,we cannot simply compare the absolute defects committed by different people.We use relative measures for the purposes of comparison.We compare the relative progress of defect rate(RPDR)made by the participants of DPeHE with the RPDR made by those who did not participate in the training.The relative progress of defect rate is defined in Eq.(2):

wheremis the sequence number of a person,Δta fixed duration of time,RPDRmthe relative progress of defect rate made by the personm,DRmΔtBeforeTrainingthe defect rate of this person for a fixed time duration before the training,and DRmΔtAfterTrainingthe defect rate of the same person for the fixed time duration after the training.

Furthermore,we propose a measure that can exclude the influence of task and individual differences.Assuming that the project management factors were the same for the subjects from the same group(e.g.the same manager,the same rules of task assigning,and the same project schedule),the work time required for an individual to spend on a task represents the cognitive efforts he/her devoted to the task.In other words,cognitive effort can synthetically represent the difficulty level of a certain task to a certain developer,70and the difficulty level in turn significantly influences the proneness of a software developer introducing software defects.37,90Therefore,we use average defects per workday in a fixed time interval to measure defect rate,shown in Eq.(3):

whereDmΔtis the total defects introduced by the personmduring the fixed time Δt,and Δtis measured by workdays.It is notable that an assumption is made during the proposal of Defect Rate measure:a software developer is doing software development-relevant work in a normal workday.However,it is possible in reality that the software developer just sits in front of his desk and does nothing.This issue is suffered by any measure.A measure,which attempts to capture a property of an entity,can approach a property but never be equal to the property.For instance,the widely used measure,defect density(Number of Defects/Lines of Code)can hardly be used to capture a developer’s error-proneness.Considering the situation in which two developers design two program versions according to the same piece of requirement and they introduce a same defect,the error-proneness of these two developers are logically the same.However,the LOC for these two versions can be highly different.If we use defect density to describe human error-proneness,the error-proneness of these developers will be illusively different.

In our application cases,we track the defects introduced before and after the training with the same duration of Δt.Substituting Eq.(3)into Eq.(2),we get

We have tracked the defects introduced 150 workdays before the training and 150 workdays after the training.The data is summarized in Table 6.

We then performed Mann–WhitneyUTest to examine whether the RPDR of team members who received training of DPeHE was different from the RPDR of those who did not.Mann–WhitneyUTest is a nonparametric test based on the ranks of observations.It does not require assumptions such as normal distribution of data.It has greater efficiency than thet-test on non-normal distributions and for the small samples.

Significant difference was found in Organization A.RPDR of members who received training of DPeHE was significant higher than the RPDR of those who did not(mean difference=27.7%),the statisticU=44.0,the significance valuep<0.01.In Organization B,the significant difference was also found:RPDR of members who received training of DPeHE was significant higher than the RPDR of those who did not(mean difference=30.6%),U=16.0,p<0.01.

Table 6 Descriptive statistics of RPDR.

The results suggest that individuals who had received the training of DPeHE made more relative progress than those who had not in the same project context.Group B made less absolute progress than Group A,but they made better relative progress compared to the individuals in the same organization.These results imply that DPeHE is effective and applicable for both organizations independent of the process maturity levels.

5.Discussions

5.1.Contributions

This paper proposes an approach called DPeHE to prevent software defects proactively by promoting software developers’cognitive ability of human error prevention.Compared to the conventional DP(the rectangle enclosed by the dotted line in Fig.4)that focuses on organizational software process improvement,DPeHE focuses more on software developers’personal ability to prevent cognitive errors,shown as bolded diagrams outside of the dotted rectangle in Fig.4.

DPeHE promotes software developers’ability to prevent errors through two stages.In the first stage,DPeHE provides developers with explicit knowledge of human error mechanisms and prevention strategies.Such knowledge includes the cognitive processes underlying the software problem solving performance,why software developers commit errors,what types of errors programmers tend to commit under different circumstances and what strategies can be used to prevent errors.The knowledge has integrated fundamental theories in cognitive psychology and the problem domain contexts of software development,e.g.,how each general cognitive error mode manifests itself as defect patterns in programming.Making such knowledge explicit to software developers is essential to promoting their error prevention ability.

In the second stage,software developers use the provided strategies and devices to perform root cause analysis by themselves during their real programming performance.Through this self-checking experience,software developers gain better awareness and ability to self-regulate under error-prone situations.

Case studies show that DPeHE training is capable of building programmers’mental links between error modes(erroneous patterns originated from the generalcognitive limitation and mechanisms),error-prone scenarios(the contexts that likely trigger an error mode),programming defect examples(manifestations of error modes in the speci fi c task contexts)and error prevention strategies,thus promoting their software defect prevention capability.Furthermore,the human error modes provided in DPeHE can also be used as a detailed human error taxonomy to improve root cause analysis under the traditional DP framework,shown in the grey background in Fig.4.As discussed in Section 2.1,root cause taxonomy is essential to traditional DP.However,there is still a lack of detailed taxonomy under the category ‘people” in the conventional root cause taxonomies.7–9This gap has been fi lled by the error modes provided in DPeHE.

5.2.Lessons learnt and future studies

Software development is a knowledge-intensive activity where cognitive failures are the primary causes of software defects.Due to the cognitive nature of software development,improving software developers’ability to prevent errors proactively is a promising direction for software defect reduction.This paper proposes an approach called DPeHE to prevent software development defects through the cognitive training on software developers’ability to prevent human errors.The evidence from two case studies suggests that DPeHE is effective in promoting software developers’abilities to prevent software defects,and it can be widely applied in organizations independent of their process maturity levels.To the best of our knowledge,this paper has made the first attempt to address this problem systematically.

The training framework and materials used in DPeHE have implications to prevent errors in other stages of the software lifecycle(e.g.,requirement errors)as well as in other industries.Furthermore,the systematic knowledge summarized in this paper integrates human error theories with software psychology theories and explains the error mechanisms underlying software development defects.Such knowledge may also inspire other studies related to human errors in software engineering,such as defect prediction,risk management,defect detection,and programming education.

The evidence from the case studies suggests that DPeHE is effective in promoting software developers’abilities to prevent software defects.DPeHE was feasible and effective for an international organization at CMM Level 5 and an organization in the Chinese aviation industry at CMM Level 1.The key to an effective DPeHE training lies in the process and format of the training.An influential knowledge training combined with programmers’succeeding practice was demonstrated to be an effective process.Cognitive bias tests have been shown to be influential formats for knowledge training.Cognitive bias tests let the programmers ‘experience”error modes.Such tests are very powerful for capturing programmers’interests,enhancing their understanding of error modes,and increasing their motivations for the later application practice.

However,a successful DPeHE application is never easy.For instance,DPeHE training requires the trainers to possess interdisciplinary knowledge of psychology and software engineering.DPeHE also requires a programmer to check his own errors.Such self-checking is an uncomfortable process that introduces resistance from programmers.These different aspects of evidence provide valuable implications for further research related to human errors in software engineering.

This paper leads to two avenues for future research.One direction is to conduct more case studies and widely apply DPeHE in the software industry.DPeHE was proven to be applicable at two companies,one at the highest CMM Level and the other the lowest CMM level.Future studies can be extended to users in organizations at other process maturity levels.

The other interesting direction is using the human error mechanisms provided in this paper to design new methods for software defect detection.The human error mechanisms can be used to design checklists for enhancing software static testing such as requirement review and code inspection.For instance,the error mode ‘post-completion error” has specific triggering contexts:when a sub-requirement that is carried out at the end of a requirement but is not a necessary condition for the achievement of the main sub-requirement,the subrequirement tends to be omitted by software developers.89Knowledge of such error patterns can help software requirement reviewers identify erroneous locations in requirement documents.Secondly,the human error mechanisms have the potential to be used for detecting some software faults that have no abnormal symptoms in the syntactic and semantic aspects but involve problem-solving errors(the errors associated with exponential model development),and improving test case generation strategies(e.g.the ‘confirmation bias” error).

6.Conclusion

Appendix A.Confirmation bias test

There are four cards,which have a letter on one side and a number on the other.These cards are placed on a table showing C,P,5 and 8 respectively as shown in Fig.A1.

Which card(s)should you turn over to test whether the following hypothesis is true or false respectively:

(1) ‘If a card has a P on one side,then it has a 5 on the other side”

(2) ‘If a card has a P on one side,then it has not a 5 on the other side”

(3) ‘If a card has not a P on one side,then it has a 5 on the other side”

(4) ‘If a card has not a P on one side,then it has not a 5 on the other side”

Table B1 User-assessment questionnaire for DPeHE knowledge training.

Table C1 User-assessment questionnaire for DPeHE regulation training.

Appendix B.Questionnaire for the assessment of DPeHE knowledge training

Thank you for participating in the defect prevention training based on human errors.Please provide feedbacks to the report by choosing the scales beside each question in Table B1.Your feedback will remain anonymous.

Appendix C.Questionnaire for assessment of DPeHE regulation training

Thank you for participating in the defect prevention training based on human errors.The survey in Table C1 aims to seek your opinions on what aspects of the training might be helpful,and what aspects might require to be improved.Your feedback will remain anonymous.All the questions below are compared to the situations before you participated this training program.For questions on ‘extent”,1—Not at all,2—Slight,3—Moderate,4—High,5—Very high.For questions on ‘frequency”,1—Never,2—Rarely,3—Occasionally,4—Frequently,5—Very frequently.

1.Huang F,Smidts C.Causal mechanism graph─A new notation for capturing cause-effect knowledge in software dependability.Reliab Eng Syst Saf2017;158:196–212.

2.Jones CL.A process-integrated approach to defect prevention.IBM Syst J1985;24(2):150–67.

3.Mays RG,Jones CL,Holloway GJ,Studinski DP.Experiences with defect prevention.IBM Syst J1990;29(1):4–32.

4.Kalinowski M,Card DN,Travassos GH.Evidence-based guidelines to defect causal analysis.IEEE Softw.2012;29(4):16–8.

5.Mays RG.Applications of defect prevention in software development.IEEE J Sel Areas Commun1990;8(2):164–8.

6.Card DN.Learning from our mistakes with defect causal analysis.IEEE Softw1998;15(1):56–63.

7.Card DN.Myths and strategies of defect causal analysis.Proceedings:Pacific northwest software quality conference;2006.p.469–74.

8.Kalinowski M,Travassos GH,Card DN.Towards a defect prevention based process improvement approach.Proceedings of the 2008 34th Euromicro conference software engineering and advanced applications;2008 Sep 3–5;Parma.Washington D.C.:IEEE Computer Soiety;2008.p.199–206.

9.Moll JHV,Jacobs JC,Freimut B,Trienekens JJM.The importance of life cycle modeling to defect detection and prevention.Proceedings of the 10th international workshop on software technology and engineering practice;2002 Oct 6–8;Montreal.Washington D.C.:IEEE Computer Society;2002.p.144–55.

10.Huang FQ,Liu B,Huang B.A taxonomy system to identify human error causes for software defects.Proceedings of the 18th international conference on reliability and quality in design;2012 July 26–28;Boston.Piscataway(NJ):International Society of Science and Applied Technologies;2012.p.44–9.

11.Huang FQ,Liu B,Wang SH,Li QY.The impact of software process consistency on residual defects.J Software:Evolut Process2015;27(9):625–46.

12.Huang FQ,Liu B,Song Y,Keyal S.The links between human error diversity and software diversity:Implications for fault diversity seeking.Sci Comput Program2014;89(9):350–73.

13.Weinberg GM.The psychology of computer programming.New York:VNR Nostrand Reinhold Company;1971.

14.Huang FQ,Liu B,Wang YC.Review of software psychology.Comput Sci2013;40(3):1–7[Chinese].

15.Huang FQ.Software fault defense based on human errors.Beijing:Beihang University Press;2013[Chinese].

16.Leszak M,Perry DE,Stoll D.A case study in root cause defect analysis.Proceedings of the 22nd international conference on software engineering;2000 June 4–11;Limerick.New York:ACM;2000.p.428–37.

17.Chillarege R.Orthogonal defect classification.In:Lyu MR,editor.Handbookofsoftwarereliabilityengineering. Hightstown(NJ):McGraw-Hill Inc.;1996.p.359–400.

18.Baker DP,Krokos KJ.Development and validation of aviation causal contributors for error reporting systems.Hum Factors2007;49(2):185–99.

19.Salmon PM,Lenne´MG,Stanton NA,Jenkins DP,Walker GH.Managing error on the open road:the contribution of human error models and methods.Saf Sci2010;48(10):1225–35.

20.Vaurio JK.Human factors,human reliability and risk assessment in license renewal of a nuclear power plant.Reliab Eng Syst Saf2009;94(11):1818–26.

21.Jou YT,Yenn TC,Lin CJ,Tsai WS,Hsieh TL.The research on extracting the information of human errors in the main control room of nuclear power plants by using performance evaluation matrix.Saf Sci2011;49(2):236–42.

22.Swain AD,Guttmann HE.Handbook of human reliability analysis with emphasis on nuclear power plant applications.Washington D.C.:US Nuclear Regulatory Commission;1983.Report No.: NUREG/CR-1278; SAND-80-0200; ON:DE84001077.

23.Williams JC.A data-based method for assessing and reducing human error to improve operational performance.Proceedings of the IEEE 4th conference on human factors in power plants;1988 June 5–9;New York.Piscataway(NJ):IEEE Press;1988.p.436–50.

24.Hollnagel E.Cognitive reliability and error analysis method(CREAM).Oxford:Elsevier Science;1998.

25.Kim JW,Jung W.A taxonomy of performance influencing factors for human reliability analysis of emergency tasks.J Loss Prev Process Ind2003;16(6):479–95.

26.Netjasov F,Janic M.A review of research on risk and safety modelling in civil aviation.J Air Transport Manage2008;14(4):213–20.

27.Shappell SA,Wiegmann DA.The human factors analysis and classification system(HFACS).Washington D.C.:Federal Aviation Administration;2000.Report No.:DOT/FAA/AM-00/7.

28.Kontogiannis T,Malakis S.A proactive approach to human error detection and identification in aviation and air traffic control.Saf Sci2009;47(5):693–706.

29.Reinach S,Viale A.Application of a human error framework to conduct train accident/incident investigations.Accid Anal Prev2006;38(2):396–406.

30.Celik M,Cebi S.Analytical HFACS for investigating human errors in shipping accidents.Accid Anal Prev2009;41(1):66–75.

31.Stanton NA,Salmon PM.Human error taxonomies applied to driving:a generic driver error taxonomy and its implications for intelligent transport systems.Saf Sci2009;47(2):227–37.

32.Baysari MT,Caponecchia C,Mclntosh AS,Wilson JR.Classif ication of errors contributing to rail incidents and accidents:A comparison of two human error identification techniques.Saf Sci2009;47(7):948–57.

33.Young KL,Salmon PM.Examining the relationship between driver distraction and driving errors:a discussion of theory,studies and methods.Saf Sci2012;50(2):165–74.

34.Cacciabue PC,Vella G.Human factors engineering in healthcare systems:the problem of human error and accident management.Int J Med Informatics2010;79(4):1–17.

35.Kao LS,Thomas EJ.Navigating towards improved surgical safety using aviation-based strategies.J Surg Res2008;145(2):327–35.

36.Ricci M,Panos AL,Lincoln J,Salerno TA,Warshauer L.Is aviation a good model to study human errors in health care?Am J Surg2012;203(6):798–801.

37.Reason J.Human error.Cambridge,UK:Cambridge University Press;1990.

38.De´tienne F.Softwaredesign–cognitiveaspects. New York:Springer-Verlag New York,Inc.;2002.

39.Visser W,Hoc JM.Psychology of programming.London:Caister Academic Press Limited;1990.p.235–49.

40.Ko AJ,Myers BA.A framework and methodology for studying the causes of software errors in programming systems.J Visual Lang Comput2005;16(1–2):41–84.

41.Brodbeck FC,Zapf D,Pru¨mper J,Frese M.Error handling in off i ce work with computers:a field study.J Occup Organ Psychol1993;66:303–17.

42.Kay RH.The role of errors in learning computer software.Comput Educ2007;49(2):441–59.

43.Maxion RA,Reeder RW.Improving user-interface dependability through mitigation of human error.Int J Hum Comput Stud2005;63(1–2):25–50.

44.Kesseler E,Knapen EG.Towards human-centred design:Two case studies.J Syst Softw2006;79(3):301–13.

45.Krovi R,Chandra A.User cognitive representations:the case for an object oriented model.J Syst Softw1998;43(3):165–76.

46.Flavell JH.Metacognitvie aspects of problem solving.Nat Intel1976;12:231–5.

47.Brown AL.Metacognition,executive control,self-regulation and other more mysterious mechanisms.In:Weinert FE,Kluwe RH,editors.Metacognition,motivation,andunderstanding.New Jersey:Lawrence Erlbaum Associates;1987.p.65–116.

48.Schraw G,Moshman D.Metacognitive theories.Educ Psychol Rev1995;7(4):351–71.

49.Swanson HL.Influence of metacognitive knowledge and aptitude on problem solving.J Educ Psychol1990;82(2):306–14.

50.Bryant S.Rating expertise in collaborative software development.In Romero P,Good J,Acosta E,Bryant B,editors.Proceedings of the 17th workshop on the psychology of programming interest group;2005.p.19–29.

51.Berardi-Coletta B,Buyer LS,Dominowski RL,Rellinger ER.Metacognition and problem solving:a process-oriented approach.J Exp Psychol Learn Mem Cogn1995;21(1):205–23.

52.Hargrove RA.Assessing the long-term impact of a metacognitive approach to creative skill development.Int J Technol Des Educ2013;23(3):489–517.

53.Pennequin V,Sorel O,Nanty I,Fontaine R.Metacognition and low achievement in mathematics:the effect of training in the use of metacognitive skills to solve mathematical word problems.Think Reason2010;16(3):198–220.

54.Efklides A,Sideridis GD.Assessing cognitive failures.Eur J Psychol Assess2009;25(2):69–72.

55.Veenman MVJ,Van Hout-Wolters BHAM,Aff l erbach PM.Metacognition and learning:Conceptual and methodological considerations.Metacognition Learn2006;1(1):3–14.

56.Gama CA.Integrating metacognition instruction in interactive learning environments[dissereation].Brighton,UK:University of Sussex;2004.

57.Teong SK.The effect of metacognitive training on mathematical word-problem solving.J Comput Assist Learn2003;19(1):46–55.

58.Kramarski B,Mevarech ZR.Enhancing mathematical reasoning in the classroom:the effects of cooperative learning and metacognitive training.Am Educ Res J2003;40(1):281–310.

59.Schoenfeld AH.Learning to think mathematically:problem solving,metacognition,and sense-making in mathematics.In:Grouws DA,editor.Handbook for research on mathematics teaching and learning.New York:MacMillan;1992.p.334–70.

60.Lawanto O.Metacognition changes during an engineering design project.39th IEEE frontiers in education conference;2009 Oct 18–21;San Anonio.Piscataway(NJ):IEEE;2009.p.1–5.

61.Schraw G.Promoting general metacognitive awareness.Instr Sci1998;26(3):113–25.

62.Chinnappan M,Lawson MJ.The effects of training in the use of executive strategies in problem solving.Learn Instruct1996;6(1):1–17.

63.Kapa E.Transfer from structured to open-ended problem solving in a computerized metacognitive environment.Learn Instruct2007;17(6):688–707.

64.Nelson TO,Narens L.Metamemory:a theoretical framework and new findings.Psychol Learn Motiv1990;26:125–73.

65.Visser W.Dynamic aspects of design cognition:elements for a cognitive model of design.Paris:INRIA;2004.

66.Brooks R.Towards a theory of the cognitive processes in computer programming.Int J Man Mach Stud1977;9(6):737–51.

67.Adelson B,Soloway E.A model of software design.Int J Intell Syst1986;1:195–213.

68.Storey MA,Fracchia FD,Mu¨ller HA.Cognitive design elements to support the construction of a mental model during software exploration.J Syst Softw1999;44(3):171–85.

69.De´tienne F.Expert programming knowledge:a schema-based approach.In:Hoc JM,Green TRG,Samurcay R,Gilmore D,editors.Psychology of programming.London:Academic Press;1990.p.205–22.

70.Schnotz W,Ku¨rschner C.A reconsideration of cognitive load theory.Educ Psychol Rev2007;19(4):469–508.

71.Guindon R,Krasner H,Curtis B.Breakdowns and processes during the early activities of software design by professionals.In:Olson GM,Sheppard S,Soloway E,editors.Empirical studies of programmers:second workshop.Norwood(NJ):Ablex Publishing Corp;1987.p.65–82.

72.Byrne MD,Bovair S.A working memory model of a common procedural error.Cogn Sci1997;21(1):31–61.

73.Tang A,Lau MF.Software architecture review by association.J Syst Softw2014;88:87–101.

74.Meyer B.On formalism in specifications.IEEE Softw1985;2(1):6–26.

75.Huang FQ,Liu B.Study on the correlations between program metrics and defect rate by a controlled experiment.J Softw Eng2013;7(3):114–20.

76.Veenman MVJ,Wilhelm P,Beishuizen JJ.The relation between intellectual and metacognitive skills from a developmental perspective.Learn Instr2004;14(1):89–109.

77.Whimbey A,Lochhead J,Narode R.Problem solvingamp;comprehension.7th ed.New York:Routledge;2013.

78.Bransford JD,Brown AL,Cocking RR.How people learn:brain,mind,experience,and school.Washington D.C.:National Academy Press;1999.

79.Lin XD,Lehman JD.Supporting learning of variable control in a computer-based biology environment:effects of prompting college students to reflects on their own thinking.J Res Sci Teach1999;36(7):837–58.

80.Runeson P,Ho¨st M.Guidelines for conducting and reporting case study research in software engineering.Empirical Softw Eng2009;14(2):131–64.

81.Simons D,Chabris C.Selective attention test[Internet].Youtube;2010[cited March 16,2016].Available from:https://www.youtube.com/watch?v=vJG698U2Mvo.

82.Unsworth N.Examining variation in working memory capacity and retrieval in cued recall.Memory2009;17(4):386–96.

83.Kinnunen R,Vauras M.Comprehension monitoring and the level of comprehension in high-and low-achieving primary school children’s reading.Learn Instruct1995;5(2):143–65.

84.Nett UE,Goetz T,Hall NC,Frenzel AC.Metacognitive strategies and test performance:an experience sampling analysis of students’learning behavior.Educ Res Int2012;2:1–16.

85.O’Neil HF,Abedi J.Reliability and validity of a state metacognitive inventory:potential for alternative assessment.J Educ Res1996;89(4):234–45.

86.Boekaerts M,Corno L.Self-regulation in the classroom:a perspective on assessment and intervention.ApplPsychol2005;54(2):199–231.

87.Sperling RA,Howard BC,Miller LA,Murphy C.Measures of children’s knowledge and regulation of cognition.Contemp Educ Psychol2002;27(1):51–79.

88.Price SM.How perceived cognitive style,metacognitive monitoring,and epistemic cognition indicate problem solving confidence[dissertation].Raleigh,North Carolina:North Carolina State University;2009.p.193.

89.Huang FQ.Post-completion error in software development.Proceedings of the 9th international workshop on cooperative and human aspects of software engineering;2016 May 14–22;Austin,TX.New York:ACM;2016.p.108–13.

90.Fritz T,Begel A,Mu¨ller SC,Yigit-Elliott S,Zu¨ger M.Using psycho-physiological measures to assess task difficulty in software development.Proceedings of the 36th international conference on software engineering;2014 May 31–June 07;Hyderabad.New York:ACM;2014.p.402–13.

16 March 2016;revised 5 July 2016;accepted 23 December 2016 Available online 20 April 2017

猜你喜欢

杂志排行

CHINESE JOURNAL OF AERONAUTICS的其它文章

- Review on signal-by-wire and power-by-wire actuation for more electric aircraft

- Real-time solution of nonlinear potential flow equations for lifting rotors

- Suggestion for aircraft flying qualities requirements of a short-range air combat mission

- A high-order model of rotating stall in axial compressors with inlet distortion

- Experimental and numerical study of tip injection in a subsonic axial flow compressor

- Dynamic behavior of aero-engine rotor with fusing design suffering blade of f