Toward a Scalable SDN Control Mechanism via Switch Migration

2017-05-08

Guozhen Cheng, Hongchang Chen, Hongchao Hu, Zhiming Wang

National Digital Switching System Engineering & Technological R&D Center, Zhengzhou 450002, China

* The corresponding author, email: chc@ndsc.com.cn

I. INTRODUCTION

In the past few years, the performance of controllers has attracted increasing attention because of their key role in Software-Defined Networking (SDN) [1]. Controllers manage all the switches in the network and make decisions based on a logically centralized network view. However, the current static mapping between controllers and switches cannot adapt to variations in network traffic; it is prone to imbalanced controller loads and degraded application performance.

In the first stage, SDN deployed only one central controller responsible for all the switches [2, 3]. This architecture readily achieves network state consistency and avoids communication among different controllers. However, a single resource-limited centralized controller confines SDN to small-scale networks, since a large network can easily overload it with frequent, resource-intensive events such as OpenFlow (OF) PACKET-IN events [4]. For example, the flow setup time¹ in an overloaded controller increases significantly, and application performance degrades.

¹ Whenever a switch receives a flow, it searches its flow table for a matching entry. If no entry matches, it requests the controller to calculate the flow path and install the appropriate rules. The time required to complete this operation is known as the flow setup time.

Afterwards, several attempts have been made to tackle this problem with distributed schemes, which fall into two major groups: those that treat all controllers as peers, i.e., a flat architecture [4, 5], and those that layer controllers vertically from a root controller to leaf controllers, i.e., a hierarchical architecture [6]. Both architectures improve the scalability of the SDN control plane so that SDN can be deployed in larger networks, but the static mapping between controllers and switches leads to load imbalance among controllers. For instance, real measurements of network traffic show a difference of 1-2 orders of magnitude between the peak and median flow arrival rates at a switch [7]. Under current static configurations, some controllers can easily become overcommitted hot spots while others remain underutilized cold spots. Hence, workloads have to be offloaded from the hot spots, since they lack the resources to meet Service Level Agreements (SLAs). Conversely, cold spots should serve more switches to improve network utility, since we believe that under-utilized resources waste energy.

The recent version of the OpenFlow protocol [8] provides a basis for addressing the problem caused by such static configurations: it allows each switch to be controlled by controllers in three different roles, namely master, equal, and slave. A switch has at most one master controller. The master can not only read the switch's state but also write rules to the switch to instruct the data plane. Equal controllers are introduced to share the load with the master and have the same authority as the master. Slaves can only read state from their switches. Each switch can have more than one equal or slave controller. If the master fails because of overload or other exceptions, an equal controller, or even a slave, can be promoted to master as soon as possible. However, the OF specification defines no mechanism for switch migration or controller role changes, because its authors consider choosing a master to be the responsibility of the controllers themselves.
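The role semantics described above can be modeled from a single switch's point of view with the following Python sketch. The class and method names are hypothetical. The rule that granting the master role demotes the previous master to slave follows the role-request behavior of OpenFlow 1.2 and later, while the decision of which controller should request the master role is left to the caller, since the specification does not define it.

```python
from enum import Enum
from typing import Dict, Optional


class Role(Enum):
    MASTER = "master"
    EQUAL = "equal"
    SLAVE = "slave"


class SwitchRoleTable:
    """Roles of the controllers connected to one switch (simplified sketch)."""

    def __init__(self) -> None:
        self.roles: Dict[str, Role] = {}

    def set_role(self, controller: str, role: Role) -> None:
        if role is Role.MASTER:
            # Granting master demotes any other master to slave,
            # so the switch never has more than one master.
            for other, current in list(self.roles.items()):
                if current is Role.MASTER and other != controller:
                    self.roles[other] = Role.SLAVE
        self.roles[controller] = role

    def can_modify_state(self, controller: str) -> bool:
        # Master and equal controllers have full access; slaves are read-only.
        return self.roles.get(controller) in (Role.MASTER, Role.EQUAL)

    def master(self) -> Optional[str]:
        # Return the current master, or None if the switch has none.
        return next((c for c, r in self.roles.items() if r is Role.MASTER), None)
```

In this model, migrating a switch from controller c1 to c2 corresponds to `table.set_role("c2", Role.MASTER)`, which leaves c1 as a slave; choosing when and to whom to do this is exactly the question the specification leaves open.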

Recently, several works have tried to solve this problem by dynamically changing the number of controllers [9, 10] or by periodically reassigning controllers in the network [13]. In fact, however, many imbalanced cases could be eliminated by migrating switches from hot spots to cold spots without adding new controllers. Yet few works have formally considered this Switch Migration Problem (SMP), i.e., which switches should be migrated, and to which controllers, to obtain a more scalable control plane.

In this paper, we focus on designing a scalable control mechanism by solving the SMP. To the best of our knowledge, this is the first work to solve the SMP in SDN. The main contributions of this paper are as follows.

· We first give an SDN model that describes the relation between controllers and switches, and then define the SMP as a Network Utility Maximization (NUM) problem whose objective is to serve more load with the available control resources.

· Based on the Markov approximation framework [14], we approximate our optimization objective with a log-sum-exp function and design a synthesizing distributed algorithm, the Distributed Hopping Algorithm (DHA), to approach the optimal solution of the SMP. We prove that the gap between the approximate solution and the optimal one is bounded, and that the solution search path of DHA is a Markov chain with a stationary probability distribution.

· We implement a scalable control mechanism based on DHA and validate its performance on real network topologies.


The remainder of this paper is organized as follows. Section II reviews related work. Section III discusses the intuition behind and the details of our SDN model. In Section IV, we formulate the SDN model as a network utility maximization problem. Section V presents the design of the distributed hopping algorithm. Section VI describes an implementation of the scalable control mechanism. Section VII validates DHA, and Section VIII concludes the paper.

II. RELATED WORK ON ELASTIC CONTROL

The current static mapping between controllers and switches prevents controllers from exchanging load as network traffic varies. To address this problem, ElastiCon [9] dynamically grows or shrinks the controller pool and migrates switches among controllers as traffic changes. That is, ElastiCon provides a framework for implementing dynamic configuration between controllers and switches. Similarly, V. Yazici et al. [10] proposed a coordination framework for the scalability and reliability of a distributed control plane. However, these works do not properly solve the SMP, i.e., how to select the switches to migrate and their target controllers.

B. Heller et al. [11] studied how to place controllers based on propagation latency, but this work only addresses where to place multiple controllers statically. Guang Yao et al. [12] considered the controller placement problem from the perspective of controller load.

To achieve better performance and scalability in large-scale WANs, Md. Faizul Bari et al. [13] provided a dynamic controller provisioning framework that adapts the number of controllers and their geographical locations. The framework minimizes flow setup time and communication overhead by solving an integer linear program. However, it has to reassign the entire control plane based on the collected traffic statistics. This operation can easily lead to network instability, because it incurs massive state synchronization. Furthermore, both its greedy knapsack (DCP-GK) and simulated annealing (DCP-SA) approaches are centralized algorithms that do not adequately utilize the resources of distributed controllers.

To sum up, existing solutions to the Dynamic Controller Provisioning Problem (DCPP) change the number of controllers and their locations by reassigning switches to controllers. This operation is likely to cause network instability because of the large number of state synchronizations among controllers.

This article differs from existing works in two respects. First, our focus is on solving the SMP, so that controller load imbalance can be eliminated with a few switch migrations and without additional controllers. Second, building on the architecture of distributed controllers, we design a synthesizing distributed algorithm in which each controller runs its own algorithmic procedure independently.

III. SYSTEM MODEL

3.1 The motivations

The objective of switch migration, i.e., of adjusting the mappings between controllers and switches, is to serve more network flows with the available resources and to maximize resource utility. In practice, several cases require changing the mappings. First, some controllers can become nearly idle when the number of network flows through their switches decreases. A possible reconfiguration is then to consolidate the switches of many lightly loaded controllers onto fewer controllers, so that the vacated controllers can be shut down or put to sleep to save power and communication cost. This curbs controller sprawl; we call this operation switch consolidation.

Second, when some controllers are hot spots and others are cold spots, the flow setup time at the hot spots increases and network performance deteriorates sharply. Such load imbalance may be caused by traffic fluctuation or by new switches being added under some controllers. It is then necessary to rebalance the load between hot spots and cold spots so that the load difference among controllers is eliminated; that is, some switches should be moved from hot spots to cold spots. We call this operation load balancing.

Third, if all active controllers become hot spots, the hot spots cannot be eliminated by switch migration alone, and the operator must deploy new controllers. This operation involves two stages: first, a new controller is incrementally placed; second, switches in the hot spots are migrated to the new controller. DHA can also be applied in the second stage to find an optimal migration path.

All three cases can be detected by a load estimation application on the controller, and DHA can solve each of them with a different optimization objective. In the first case, we design a control power function so that the SMP minimizes the power consumed by the controllers. In the second case, we design a utility function so that the SMP maximizes the number of flow requests served with the available resources. The third case is essentially a special case of the second. In the rest of this paper, we discuss the load balancing case; we will explore the first case in future work.

3.2 SDN model

Our focus in this paper is the switch migration problem toward more balanced controllers. We therefore assume that the SDN controllers have already been optimally placed in the distributed topology.

As stated in [12], the load of an SDN controller consists of many factors, such as processing PACKET_IN events, maintaining the local domain view, communicating with other controllers, and installing flow entries. The proportions of these factors differ greatly across scenarios, but processing PACKET_IN events is generally regarded as the most prominent part of the total load [15]. Accordingly, we measure a controller's load by the arrival rate of PACKET_IN events at that controller.
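To make this load model concrete, the following Python sketch represents switches and controllers with hypothetical classes. A switch's load is its PACKET_IN arrival rate, a controller's load is the sum over the switches it masters, and utilization is load divided by capacity. The per-switch utilization ratio (load over the switch's upper limit) is an assumed definition used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Switch:
    sid: str
    load: float         # PACKET_IN arrival rate generated by this switch (requests/s)
    upper_limit: float  # upper load limit of this switch (requests/s)

    def utilization_ratio(self) -> float:
        # Assumed definition: measured load relative to the switch's upper limit.
        return self.load / self.upper_limit


@dataclass
class Controller:
    cid: str
    capacity: float  # PACKET_IN rate the controller can serve (requests/s)
    domain: Dict[str, Switch] = field(default_factory=dict)  # switches it masters

    def load(self) -> float:
        # Controller load: total PACKET_IN arrival rate from its switches.
        return sum(s.load for s in self.domain.values())

    def utilization(self) -> float:
        return self.load() / self.capacity
```

Migrating a switch then amounts to moving one entry from one controller's `domain` to another's.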

We therefore define our SDN model as follows. We consider an SDN consisting of a set of controllers and a set of switches. For each switch, we define the control load it generates and its upper load limit. Accordingly, each switch can be denoted as

IV.NETWORK UTILITY MAXIMIZATION PROBLEM IN SDN

Our primary objective is to determine how switch migration policies should be employed so that network utility is maximized. We assume that the more events a controller handles with its available resources, the higher the utility it produces. Based on our SDN model, we formulate the switch migration problem as a centralized joint network utility maximization problem in SDN,

We believe that once a controller is powered on, the more of its resources it puts to use, the more utility it produces, provided that all resource consumers are legitimate. In addition, we assume that the network utility function is twice differentiable, increasing, and strictly concave. Hence, we define the network utility function with log(·),

Then the objective function can be reformulated as,

V.DISTRIBUTED HOPPING ALGORITHMS

Theoretically, the SMP can be reformulated as a 0-1 integer linear program, which is a typical combinatorial network optimization problem and very difficult to solve. Although we could approach the optimal solution through Lagrangian relaxation with quadratic equality constraints and solve its dual problem, or decompose it into several knapsack problems, these approaches are time-consuming [16].
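For illustration, the 0-1 structure can be written in an assumed notation: $x_{ij} = 1$ if switch $j$ is mastered by controller $i$, $l_j$ is the load of switch $j$, $C_i$ is the capacity of controller $i$, and each controller's utility is the logarithm of one plus its served load. These symbols and this utility form are assumptions used by the examples in this section, not the paper's own equations; with a log objective this particular form is a nonlinear 0-1 program, but it has the same combinatorial search space of $M^N$ assignments for $M$ controllers and $N$ switches.

```latex
\begin{aligned}
\max_{x}\quad & \sum_{i=1}^{M} \log\Big(1 + \min\Big\{\sum_{j=1}^{N} x_{ij}\, l_j,\; C_i\Big\}\Big) \\
\text{s.t.}\quad & \sum_{i=1}^{M} x_{ij} = 1, \qquad j = 1, \dots, N, \\
& x_{ij} \in \{0, 1\}, \qquad i = 1, \dots, M,\; j = 1, \dots, N.
\end{aligned}
```

The equality constraint says that every switch has exactly one master, which is what makes the feasible set discrete.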

In fact, many important network design problems can be formulated as combinatorial network optimization problems, and a surge of studies has made significant progress in solving them. However, many of these approaches are designed for centralized implementation [17] or become time-consuming as the network grows [18][19]. In our scenario, we need an approach that can run concurrently in a distributed manner, because each SDN controller manages its local switches and interacts with its neighbors. Moreover, distributed algorithms are more robust to network dynamics (e.g., switch migration and controller sleep).

In this article, we adopt the Markov approximation framework, which uses the log-sum-exp function to approximate the optimal value of the SMP. Based on it, we provide a distributed hopping algorithm in a synthesizing form. In the following subsections, we first describe the log-sum-exp approximation of the SMP and then present the detailed design of DHA.

5.1 Log-sum-exp approximation

Then the SMP can be rewritten as follows,

Besides all the constraints of the problem, the equation is satisfied for the problem.

To solve this problem, we use the log-sum-exp function to approximate the optimal value as follows,

This additional entropy term opens a new design space for exploration.
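A quick numerical illustration of the log-sum-exp approximation follows, using made-up utilities for four hypothetical configurations. It checks the standard bounds $\max_f U_f \le \frac{1}{\beta}\log\sum_f e^{\beta U_f} \le \max_f U_f + \frac{1}{\beta}\log|\mathcal{F}|$ and the entropy identity behind the additional term: the log-sum-exp value equals $\sum_f p_f U_f - \frac{1}{\beta}\sum_f p_f \log p_f$ evaluated at $p_f \propto e^{\beta U_f}$. The function names and values are illustrative assumptions.

```python
import math
from typing import List, Sequence


def log_sum_exp(values: Sequence[float], beta: float) -> float:
    """(1/beta) * log(sum_f exp(beta * U_f)), shifted by the max for stability."""
    m = max(values)
    return m + math.log(sum(math.exp(beta * (v - m)) for v in values)) / beta


def gibbs(values: Sequence[float], beta: float) -> List[float]:
    """Distribution p_f proportional to exp(beta * U_f)."""
    m = max(values)
    w = [math.exp(beta * (v - m)) for v in values]
    z = sum(w)
    return [x / z for x in w]


def entropy_objective(values: Sequence[float], beta: float) -> float:
    """sum_f p_f U_f - (1/beta) sum_f p_f log p_f at the Gibbs distribution."""
    p = gibbs(values, beta)
    expected = sum(pf * v for pf, v in zip(p, values))
    neg_entropy = sum(pf * math.log(pf) for pf in p if pf > 0)
    return expected - neg_entropy / beta


utilities = [3.0, 2.5, 2.9, 1.0]  # hypothetical system utilities of four configurations
for beta in (1.0, 10.0, 100.0):
    approx = log_sum_exp(utilities, beta)
    bound = math.log(len(utilities)) / beta
    print(f"beta={beta:>5}: gap={approx - max(utilities):.4f} <= bound={bound:.4f}")
```

As `beta` grows, the gap shrinks toward zero and the Gibbs distribution concentrates on the best configuration.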

Since the objective function of the problem is twice differentiable, increasing, and strictly concave, and all the constraints are linear, the Karush-Kuhn-Tucker (KKT) conditions are necessary and sufficient for optimality. We can conclude the following.

Theorem 1. The optimal solution of the problem satisfies the following:

· The optimality gap between the approximate and the optimal values is bounded by

We refer the reader to our technical report [20] for details of the proof.

5.2 Distributed hopping algorithm design based on Markov chain

As stated in Lemma 1 of [14], there exists at least one continuous-time, time-reversible, ergodic Markov chain with the stationary distribution. Such a Markov chain has to satisfy two conditions. First, by ergodicity, any two states in the state space can reach each other through at least one path. Second, the Markov chain must satisfy the detailed balance equation, which relates the stationary probability of each strategy to its transition rate toward every other strategy.
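For reference, these conditions can be written out as below. The notation (configuration $f$ in the feasible set $\mathcal{F}$, system utility $U_f$, transition rate $q_{f,f'}$, constant $\alpha > 0$) is assumed here rather than taken from this paper, and the symmetric rate on the last line is only one choice that satisfies detailed balance, which can be checked by substitution.

```latex
\begin{aligned}
p_f^{*} &= \frac{\exp(\beta U_f)}{\sum_{g \in \mathcal{F}} \exp(\beta U_g)}, \qquad f \in \mathcal{F},\\
p_f^{*}\, q_{f,f'} &= p_{f'}^{*}\, q_{f',f} \qquad \text{(detailed balance)},\\
q_{f,f'} &= \alpha \exp\!\Big(\tfrac{1}{2}\beta\,(U_{f'} - U_f)\Big)
\;\Rightarrow\; p_f^{*}\, q_{f,f'} \propto \alpha \exp\!\Big(\tfrac{1}{2}\beta\,(U_f + U_{f'})\Big).
\end{aligned}
```

Because the last expression is symmetric in $f$ and $f'$, detailed balance holds. The time spent in $f$ is then exponentially distributed with parameter $\sum_{f'} q_{f,f'}$, summed over all configurations reachable from $f$ by a single switch migration.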

In SDN, there is a logically centralized global view in which all controllers share information. Therefore, each controller can collect fresh values from the view to calculate the quantities it needs. The network then stays in each state for an exponentially distributed period. Based on the second conclusion of Theorem 1, we can deduce that,

Algorithm 1 Distributed Hopping Algorithm

We briefly describe the distributed hopping algorithm (DHA) as follows.The following procedure runs on each controller independently, and we focus on a particular controller.

Stage 1: Initially, given an SDN topology with distributed controllers, each controller is allocated a switch domain under the initial configuration.

Stage 2: The controller randomly selects a switch from its domain and a controller from its neighbor set. It then counts down a timer whose duration is a random number drawn from an exponential distribution with mean

which is expressed as follows,

Stage 3: If the timer expires and no ongoing switch migration is sensed among its neighbors, the controller broadcasts the upcoming migration between itself and the selected neighbor to its neighbors. It then migrates the selected switch to that neighbor. After the migration, the controller updates the utilization ratios of the switches in its domain in the global network view and broadcasts them to its neighbors.

Stage 4: Otherwise, if such a migration activity is sensed among its neighbors, the controller resets its timer, and the algorithm returns to Stage 2.
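The four stages can be illustrated with a small, centralized Python simulation of the Markov chain they are meant to realize. This is a sketch under stated assumptions, not the authors' implementation: the utility $\sum_i \log(1 + \min(L_i, C_i))$ (served load capped at capacity), the rate $\alpha \exp(\tfrac{1}{2}\beta(U_{f'} - U_f))$, and all names (`utility`, `rate`, `dha_simulate`) are hypothetical. Instead of each controller picking one random (switch, neighbor) pair as in Stage 2, every candidate migration gets its own exponential timer; the earliest timer fires and the others reset, mirroring Stages 3-4, and migrations happen one at a time.

```python
import math
import random
from typing import Dict, List, Tuple

Assignment = Dict[str, int]  # switch id -> index of its master controller


def utility(assign: Assignment, loads: Dict[str, float], caps: List[float]) -> float:
    """Hypothetical system utility: sum_i log(1 + min(L_i, C_i))."""
    per_ctrl = [0.0] * len(caps)
    for sw, c in assign.items():
        per_ctrl[c] += loads[sw]
    return sum(math.log(1.0 + min(l, cap)) for l, cap in zip(per_ctrl, caps))


def rate(u_from: float, u_to: float, beta: float, alpha: float = 1.0) -> float:
    """Rate q_{f,f'} = alpha * exp(beta/2 * (U_f' - U_f)); satisfies detailed
    balance with respect to p_f proportional to exp(beta * U_f)."""
    return alpha * math.exp(0.5 * beta * (u_to - u_from))


def dha_simulate(assign: Assignment, loads: Dict[str, float], caps: List[float],
                 neighbors: Dict[int, List[int]], beta: float, steps: int,
                 seed: int = 0) -> Tuple[Assignment, Assignment, float, float]:
    """Simulate the hopping process as a continuous-time Markov chain.

    Each (switch, neighbor controller) pair is a candidate migration with its own
    exponential timer. The earliest timer fires and the rest reset, which is
    equivalent to waiting Exp(total rate) and picking a move proportionally to
    its rate. Returns (final, best, best utility, elapsed time).
    """
    rng = random.Random(seed)
    current = dict(assign)
    best, best_u = dict(current), utility(current, loads, caps)
    clock = 0.0
    for _ in range(steps):
        u = utility(current, loads, caps)
        moves: List[Tuple[str, int]] = []
        rates: List[float] = []
        for sw, c in current.items():
            for k in neighbors[c]:
                trial = dict(current)
                trial[sw] = k  # candidate: migrate switch sw from controller c to k
                moves.append((sw, k))
                rates.append(rate(u, utility(trial, loads, caps), beta))
        if not moves:
            break
        clock += rng.expovariate(sum(rates))  # sojourn time in the current state
        sw, k = rng.choices(moves, weights=rates, k=1)[0]
        current[sw] = k
        u_new = utility(current, loads, caps)
        if u_new > best_u:
            best, best_u = dict(current), u_new
    return current, best, best_u, clock


if __name__ == "__main__":
    loads = {"s1": 120.0, "s2": 100.0, "s3": 90.0, "s4": 80.0, "s5": 60.0, "s6": 50.0}
    caps = [300.0, 300.0, 300.0]  # hypothetical capacities, kilo-requests per second
    neighbors = {0: [1, 2], 1: [0, 2], 2: [0, 1]}
    start = {sw: 0 for sw in loads}  # every switch on controller 0: a hot spot
    final, best, best_u, _ = dha_simulate(start, loads, caps, neighbors, beta=10.0, steps=200)
    print("initial utility:", round(utility(start, loads, caps), 3))
    print("best utility:", round(best_u, 3), best)
```

With a symmetric neighbor relation every migration can be undone, so the simulated chain is reversible; a larger `beta` concentrates the time spent near high-utility assignments, typically at the cost of slower mixing.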

The pseudocode of DHA, which runs independently on each individual controller, is shown in Algorithm 1.

We then have the following result.

Theorem 2. The process of the distributed hopping algorithm implements a time-reversible Markov chain with the stationary distribution

The proof can be found in our technical report [20].

VI. IMPLEMENTATION

6.1 DHA-CON

In this section, we implement a control mechanism prototype based on DHA, called DHA-CON, which consists of a load estimation module, a DHA decision module, and a distributed data store.

Load Estimation. A load estimation module runs as a control application. It tracks controller loads and predicts the average message arrival rate from each switch. We set two thresholds, an upper limit and a lower limit, to decide whether to start the DHA module. If the load stays below the lower limit or above the upper limit for one minute, the load estimation module triggers the DHA module to perform switch migration.

DHA Decision. Each controller installs a DHA module to decide on switch migration. The module has two working modes, balance and green. First, if a controller is a hot spot, i.e., its load exceeds the upper limit for one minute, DHA works in balance mode and offloads part of the load to restore equilibrium. Second, if a controller is a cold spot, i.e., its load is below the lower limit, DHA works in green mode, offloads all of its load, and shuts down the controller.
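The threshold logic of the load estimation and DHA decision modules can be sketched as below. The class name, the sampling interface, and any threshold values are hypothetical, since the text gives no concrete thresholds; only the two modes and the one-minute persistence requirement come from the description above.

```python
from typing import Optional


class LoadTrigger:
    """Hypothetical load-estimation trigger: fires when a controller's load stays
    above `upper` (balance mode) or below `lower` (green mode) for `hold` seconds."""

    def __init__(self, lower: float, upper: float, hold: float = 60.0) -> None:
        if not lower < upper:
            raise ValueError("lower threshold must be below upper threshold")
        self.lower, self.upper, self.hold = lower, upper, hold
        self._state: Optional[str] = None    # "balance", "green" or None
        self._since: Optional[float] = None  # time at which _state was entered

    def update(self, t: float, load: float) -> Optional[str]:
        """Feed one load sample taken at time t (seconds). Returns the DHA mode
        to start, or None if no migration should be triggered yet."""
        if load > self.upper:
            state = "balance"  # hot spot: offload part of the switches
        elif load < self.lower:
            state = "green"    # cold spot: offload all switches, then sleep
        else:
            state = None
        if state != self._state:
            self._state, self._since = state, t  # condition changed: restart clock
        if state is not None and t - self._since >= self.hold:
            self._since = t  # re-arm, so a persistent condition fires once per hold period
            return state
        return None
```

Requiring the condition to persist filters out short traffic spikes, so a brief excursion above the upper limit does not start a migration.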

Distributed Data Store. A distributed data store provides a logically centralized view for the controller cluster. It stores information about all switches, including the data from the load estimation module.

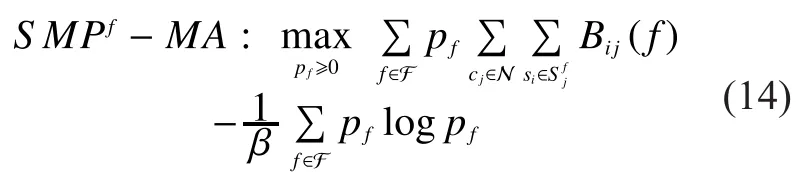

Beacon [26] is a high-performance, multi-threaded controller built on Equinox, an implementation of the OSGi framework specification, so that we can easily implement our module services. We implement a scalable and intelligent control mechanism based on Beacon, called DHA-CON. We develop an IDHAService interface and implement it with the service DHAImpl, as shown in Fig.1 (a). We then implement the load estimation module, shown in Fig.1 (b), which triggers DHAImpl. DHAImpl registers several existing listeners, such as IOFSwitchListener and IOFMessageListener, to receive OpenFlow messages. We invoke IBeaconProvider to interact with OF switches.

6.2 Controller-to-controller interface

We need to extend the east-westbound interface so that controllers can communicate with each other during the DHA process. As shown in Fig.2, suppose a controller has several neighbors, each of which runs a DHA thread. When the controller's countdown timer expires and there are no ongoing migration activities among its neighbors, it sends a migration request message to its selected destination controller. This message includes the ID of the switch to be migrated. The destination then replies with an ACK message. The controller broadcasts a notification message to its neighbors, indicating that a migration is under way between itself and the destination. Finally, the switch migration starts. We refer the reader to [9] for details of the messages needed during switch migration.

After the switch migration, the source and destination controllers update their utilization ratios in the central data store and broadcast updates to their respective neighbors.
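The message sequence above can be sketched in Python with an in-memory message log. All names (`Message`, `Ctrl`, `run_migration`) are hypothetical, delivery is synchronous with no loss or delay, the actual switch handover from [9] is abstracted into a domain update, and the data store records only the new switch-to-controller mapping as a placeholder for the utilization data.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Message:
    kind: str  # "request", "ack", "notification" or "update"
    src: str
    dst: str
    switch_id: str


@dataclass
class Ctrl:
    name: str
    neighbors: List[str]
    domain: Set[str]
    busy: bool = False  # True while a neighbor's migration is in progress
    inbox: List[Message] = field(default_factory=list)


def send(net: Dict[str, Ctrl], log: List[Message], msg: Message) -> None:
    """Deliver a message synchronously and record it (no loss or delay modeled)."""
    net[msg.dst].inbox.append(msg)
    log.append(msg)


def run_migration(net: Dict[str, Ctrl], store: Dict[str, str], log: List[Message],
                  src: str, dst: str, switch_id: str) -> bool:
    """Called when src's countdown timer expires; returns False if it must back off."""
    a, b = net[src], net[dst]
    if a.busy:
        return False  # a migration is already under way nearby: reset the timer
    send(net, log, Message("request", src, dst, switch_id))
    send(net, log, Message("ack", dst, src, switch_id))
    for n in a.neighbors:  # announce the coming migration to all neighbors
        send(net, log, Message("notification", src, n, switch_id))
        net[n].busy = True
    # The role handover itself (see [9]) is abstracted into a domain update.
    a.domain.discard(switch_id)
    b.domain.add(switch_id)
    store[switch_id] = dst  # placeholder for the per-switch data in the store
    for ctrl in (a, b):  # both sides broadcast updates to their neighbors
        for n in ctrl.neighbors:
            send(net, log, Message("update", ctrl.name, n, switch_id))
            net[n].busy = False  # simplification: an update clears the busy flag
    return True
```

A real implementation would also need timeouts and a way to distinguish concurrent migrations in different neighborhoods; the single busy flag here is deliberately minimal.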

VII. THE NUMERICAL EVALUATION

7.1 Simulation setup

Fig.1 Service registration based on Beacon

Fig.2 The message interactions among controllers

Fig.3 The experimental topology

In this section, we evaluate the performance of our prototype in the experimental environment shown in Fig.3. To avoid performance interference between Mininet and the controllers, we deploy Mininet [21], the Beacon controller, and DHA-CON on different physical machines. Each physical machine runs Ubuntu 12.04 LTS with JDK 1.7. Mininet is used to emulate different network topologies. The machine running the Beacon controller emulates a centralized control environment with a single controller, while DHA-CON provides a distributed control environment with several controllers in the network.

Instead of artificial topologies generated by the emulator, we use two real network topologies from the Internet Topology Zoo: Chinanet [22] (38 nodes and 59 links) and Cernet [23] (36 nodes and 53 links). Chinanet is a real ISP topology from China Telecom, one of the three largest ISPs in China. Cernet is the largest education and research network in China. In addition, we install the Beacon controller on an individual machine to emulate a single centralized controller. Five other physical machines run DHA-CON instances. All physical machines have the same configuration: a 3.4 GHz Intel Core i7 processor, 4 GB of DDR3 RAM, and a 1 Gbps NIC. They are connected by an H3C S5500 switch. We use iperf [24] to generate TCP flows between hosts. To emulate realistic traffic, all flows are generated according to the traffic characteristics described in [25], such as the flow size distribution and arrival rate.

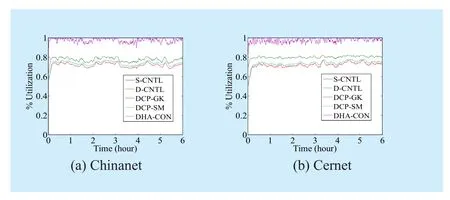

We focus on verifying whether DHA can improve the performance and scalability of the distributed control plane. We compare DHA-CON (4 controllers) with a single controller (S-CNTL), static distributed controllers (D-CNTL, 4 controllers), and the Dynamic Controller Provisioning solutions based on Greedy Knapsack and Simulated Annealing (DCP-GK and DCP-SA) [13]. In DCP-GK and DCP-SA, we initially deploy four controllers and add a new one when one of the controllers is overloaded. Specifically, we first compare the average flow setup time as the traffic varies. Second, we evaluate the migration cost caused by DHA. Third, we compare the average utilization ratios of the controllers. Finally, we evaluate the utility gap of DHA. In our experiment, we define the utility gap as the difference between the system utility achieved by DHA and the optimal utility obtained by an exhaustive search over the feasible configuration space.
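The exhaustive-search baseline and the utility gap can be sketched in Python as follows, again assuming the hypothetical utility $\sum_i \log(1 + \min(L_i, C_i))$ rather than the paper's exact function, and expressing the gap as a non-negative loss (optimal minus achieved). The enumeration covers all $M^N$ assignments of $N$ switches to $M$ controllers via `itertools.product`, so this sketch is practical only for small instances.

```python
import itertools
import math
from typing import Dict, List, Optional, Tuple


def utility(assign: Dict[str, int], loads: Dict[str, float], caps: List[float]) -> float:
    """Hypothetical system utility: sum_i log(1 + min(L_i, C_i))."""
    per_ctrl = [0.0] * len(caps)
    for sw, c in assign.items():
        per_ctrl[c] += loads[sw]
    return sum(math.log(1.0 + min(l, cap)) for l, cap in zip(per_ctrl, caps))


def exhaustive_optimum(loads: Dict[str, float],
                       caps: List[float]) -> Tuple[Optional[Dict[str, int]], float]:
    """Enumerate all M**N switch-to-controller assignments and keep the best."""
    switches = sorted(loads)
    best: Optional[Dict[str, int]] = None
    best_u = -math.inf
    for combo in itertools.product(range(len(caps)), repeat=len(switches)):
        assign = dict(zip(switches, combo))
        u = utility(assign, loads, caps)
        if u > best_u:
            best, best_u = assign, u
    return best, best_u


def utility_gap(assign: Dict[str, int], loads: Dict[str, float], caps: List[float]) -> float:
    """Optimal utility minus the utility of a given (e.g. DHA-produced) assignment."""
    return exhaustive_optimum(loads, caps)[1] - utility(assign, loads, caps)
```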

7.2 Parameter measurement

Before the evaluation, we need the values of some parameters in DHA, namely the controller capacity and the upper limits of the switches. We use a topology in which two physical machines are connected by a switch. One machine runs a Beacon instance, and the other runs Cbench [27], a program for benchmarking OpenFlow controllers. Each machine has one 1 Gbps NIC.

Cbench runs in throughput mode with the command cbench -c 192.168.1.3 -p 6633 -m 10000 -l 10 -s 16 -M 1000 -t. We find that the average throughput of Beacon is about 1500 kilo requests per second with 4 threads.

In our experiment, since the switch bandwidth is limited by the loopback interface of Mininet, it is difficult to overload the distributed controllers. We therefore restrict the controller capacity to a low level, so that the controllers are oversubscribed by less than a given factor (in data center networks, uplinks from ToRs are typically 1:5 to 1:20 oversubscribed [28]). The capacity of each controller in our experiment is limited to 300 kilo requests per second. The upper limit of each switch is calculated by equation (18), which depends on the number of switches under the controller and on the average load generated by the switch.

On the right-hand side of the equation, the first term, called the basic term, is calculated from the controller throughput, the control scale, and the oversubscription ratio. The second term, called the individual term, is a random number not exceeding

7.3 Numerical results

Our objective is to increase network utility so that the controllers can handle as many OF event requests as possible with their available resources. We set during simulations.

We run each simulation for 60 hours. Fig.4 shows the flow counts on Cernet and Chinanet, respectively. Simulations are repeated three times. Each time, we record the flow setup time, the number of packets exchanged between controllers, and the controller utilizations for the different scenarios. We report the average results of the three repeated simulations.

Flow setup time. In the simulation, we use the average flow setup time to measure the effect of DHA-CON, because it reflects the controller load changes caused by switch migration.

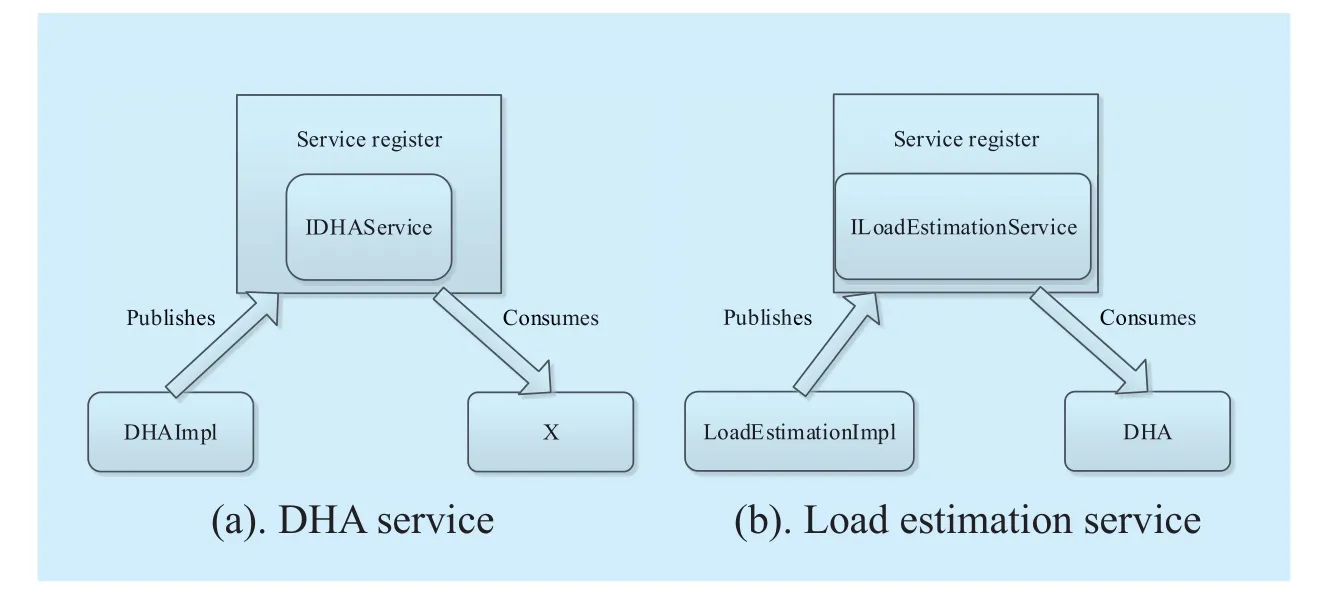

We compare the average flow setup time of DHA-CON (4 controller instances), S-CNTL, D-CNTL (4 controller instances), and DCP-GK and DCP-SA (4-5 controller instances) on two different topologies. Fig.5 shows their time curves. The flow setup time of S-CNTL is constant, since the single controller is overloaded throughout the simulation. However, the flow setup time in the other four scenarios fluctuates with the flow count, within different ranges: D-CNTL has the largest fluctuation, DCP-GK comes second, and DHA-CON and DCP-SA fluctuate less.

Fig.4 Flow count

Fig.5 Average flow setup time

There are several reasons for these results. First, a single controller has the lowest scalability because of its resource-limited architecture. Second, although D-CNTL has a distributed control plane, its static architecture is likely to produce some heavily loaded controllers that suffer from long flow setup times. Third, DCP-GK and DCP-SA eliminate overloaded controllers by adding a new controller, whereas DHA-CON achieves scalability through switch migration. That is, DCP-GK and DCP-SA must reassign the mappings between controllers and switches, while DHA-CON only migrates a few switches from an overloaded controller to a lightly loaded one.

The empirical CDFs in Fig.6 clearly show that the dynamic control planes (i.e., DHA-CON and DCP) are less sensitive to the flow count than D-CNTL.

Fig.6 Empirical CDFs

Fig.7 Summary of overhead and average flow setup time

Fig.8 Average controller utilization

Overhead. We compare the overhead of the five scenarios. Fig.7 presents the communication overhead and average flow setup time on the Chinanet and Cernet topologies. S-CNTL has the lowest communication overhead because there are no controller-to-controller messages. Compared with S-CNTL, D-CNTL has a higher overhead because its controllers need state synchronization for the global network view. DHA-CON generates more packets than D-CNTL, since it must migrate switches in addition to the state synchronization performed in D-CNTL. However, the cost of migrating switches is small, no more than 20% of the D-CNTL overhead. DCP-GK and DCP-SA have the highest communication overhead: twice that of D-CNTL and 1.5 times that of DHA-CON, because DCP, which reassigns the mappings between controllers and switches, incurs more switch migrations than DHA-CON.

As mentioned earlier, the average flow setup time of DHA-CON is the lowest among all scenarios, about 0.2 s. The average flow setup times of DCP-GK and DCP-SA are close to that of DHA-CON, about 0.25 s on both Chinanet and Cernet. In contrast, the average flow setup times of S-CNTL and D-CNTL exceed 0.65 s and 0.35 s, respectively, on both topologies.

Average utilization ratio. To validate the scalability of DHA-CON, we measure the average utilization ratio in each scenario. As shown in Fig.8, S-CNTL has 100% utilization because its controller is overloaded. Each controller in DHA-CON has more than 90% utilization, since DHA-CON balances the load among controllers so that more requests can be served. Controllers in D-CNTL have less than 80% utilization on average because of load imbalance among controllers. DCP-GK and DCP-SA have the lowest average utilization: although DCP can serve the same total load as DHA-CON, it needs more controllers (one more than DHA-CON in our simulation).

We obtain the optimal network configuration by exhaustively searching the feasible network configurations. In our simulations, the average actual utility loss is 0.2331 and 0.2219 for Chinanet and Cernet, respectively. The performance loss bound is thus guaranteed, and the observed utility loss is much smaller than the bound.

VIII.CONCLUSIONS

In this paper, we make the first attempt to explore the SMP for a more scalable control mechanism. We first model the problem as a NUM problem from the perspective of network load. We then design a synthesizing distributed algorithm to solve it. Finally, we implement a prototype of this control mechanism and validate it on two real topologies. Control load and locality are not the only important factors when choosing target controllers; resilience is also important, and we will consider it in future work.

ACKNOWLEDGEMENT

The authors would like to thank the reviewers for their detailed reviews and constructive comments, which have helped improve the quality of this paper.The research reported in this paper was supported by the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (Grant No.2016YFB0800100, No.2016YFB0800101), the National Natural Science Foundation of China (Grant No.61521003), the National Key R&D Program of China (Grant No.61309020).

[1] N.McKeown, T.Anderson, H.Balakrishnan, G.Parulkar,et al., “Openflow: enabling innovation in campus networks,” SIGCOMM CCR, 2008.

[2] N.Gude, T.Koponen, J.Pettit, B.Pfaff, M.Casado, N.Mckeown, and S.Shenker, “NOX: Towards an Operating System for Networks,” in SIGCOMM CCR, 2008.

[3] David Erickson, “The Beacon OpenFlow Controller,” In Proc. 2nd Workshop on Hot Topics in Software Defined Networking (HotSDN 2013), pages 13-18, Hong Kong, 2013. ACM Press.

[4] A.Tootoonchian and Y.Ganjali, “HyperFlow:A Distributed Control Plane for OpenFlow,” in INM/WREN, 2010.

[5] Teemu Koponen, Martin Casado, Natasha Gude,et al., “Onix: a distributed control platform for large-scale production networks,” In Proc.OSDI 2010, pages 351-364, Berkeley, 2010.USENIX Association.

[6] Soheil Hassas Yeganeh and Yashar Ganjali, “Kandoo: a framework for efficient and scalable off-loading of control applications,” In Proc. HotSDN 2012, pages 19-24, New York, 2012. ACM Press.

[7] T.Benson, A.Akella, and D.Maltz, “Network traffic characteristics of data centers in the wild,” in IMC, 2010.

[8] OpenFlow.https://www.opennetworking.org/images/stories/downloads/sdn-resources/onf-specifications/openflow/openflow-specv1.4.0.pdf

[9] A. Dixit, F. Hao, S. Mukherjee, T. Lakshman, R. Kompella, “Towards an Elastic Distributed SDN Controller,” In Proc. 2nd Workshop on Hot Topics in Software Defined Networking (HotSDN 2013), pages 7-12, Hong Kong, 2013. ACM Press.

[10] V. Yazici, M. Oğuz Sunay, Ali Ö. Ercan, “Controlling a Software-Defined Network via Distributed Controllers,” In NEM Summit 2012, arXiv:1401.7651 (2012).

[11] B. Heller, Rob Sherwood, and Nick McKeown, “The controller placement problem,” In Proc. 1st Workshop on Hot Topics in Software Defined Networking (HotSDN 2012), pages 7-12, New York, 2012. ACM Press.

[12] Guang Yao, Jun Bi, Yuliang Li, and Luyi Guo, “On the Capacitated Controller Placement Problem in Software Defined Networks,” IEEE Communications Letters, 2014.

[13] Md. Faizul Bari, Arup Raton Roy, Shihabur Rahman Chowdhury, Qi Zhang, Mohamed Faten Zhani, Reaz Ahmed, and Raouf Boutaba, “Dynamic Controller Provisioning in Software Defined Networks,” In CNSM, pp. 18-25, 2013.

[14] M.Chen, S.Liew, Z.Shao, and C.Kai, “Markov Approximation for Combinatorial Network Optimization”, Proceedings of IEEE INFOCOM 2010, San Diego, CA, US, March, 2010.

[15] A.Tootoonchian, S.Gorbunov, and Y.Ganjali, et al., “On controller performance in software-defined networks,” in Proc.of HotICE, 2012.

[16] Y.Feng, B.Li, and B.Li, “Bargaining towards maximized resource utilization in video streaming datacenters,” in Proc.of INFOCOM, 2012.

[17] P.Laarhoven and E.Aarts, Simulated annealing:theory and applications.Springer, 1987.

[18] S.Rajagopalan and D.Shah, “Distributed algorithm and reversible network,” in Proceedings of CISS, 2008.

[19] J.Liu, Y.Yi, A.Proutiere, M.Chiang, and H.Poor,“Towards Utility optimal Random Access Without Message Passing,” Special issue in Wiley Journal of Wireless Communications and Mobile Computing, Dec, 2009.

[20] DHA-CON-TR-01, http://pan.baidu.com/s/1o67ItiM

[21] B. Lantz, B. Heller, and N. McKeown, “A network in a laptop: rapid prototyping for software-defined networks,” In Proceedings of HotNets 2010, pages 19:1–19:6.

[22] http://www.topology-zoo.org/files/Chinanet.gml

[23] http://www.topology-zoo.org/files/Cernet.gml

[24] http://iperf.sourceforge.net.

[25] S. Gebert, R. Pries, D. Schlosser, and K. Heck, “Internet access traffic measurement and analysis,” In Traffic Monitoring and Analysis, volume 7189 of LNCS, pages 29–42, 2012.

[26] David Erickson, “The Beacon OpenFlow Controller,” In Proc. 2nd Workshop on Hot Topics in Software Defined Networking (HotSDN 2013), pages 13-18, Hong Kong, 2013. ACM Press.

[27] Rob Sherwood and Kok-Kiong Yap, “Cbench: an OpenFlow Controller Benchmarker,” http://www.openflow.org/wk/index.php/Oflops.

[28] Albert Greenberg, James R. Hamilton, Navendu Jain, et al., “VL2: A Scalable and Flexible Data Center Network,” in Proc. of SIGCOMM’09, pages 51-62, Barcelona, Spain, Aug. 2009, ACM Press.

Published in China Communications.