Prospects for improving research communication and the incentive system in science

2015-03-24

Mark Patterson, Randy Schekman

1) eLife, 24 Hills Road, Cambridge, UK CB2 1JP, E-mail: m.patterson@elifesciences.org

2) Dept. of Mol. and Cell Biology, Li Ka Shing Center, UC Berkeley, Berkeley, CA 94720-3370

Much concern has been expressed in recent years about the processes associated with publishing research in high-profile journals, particularly within the life sciences and biomedicine[1-4]. Researchers are frequently subjected to substantial delays in communicating their work, overzealous reviewers make demands for extensive revision, and essential information and data are often relegated to supplementary files with little functionality. More broadly, much of this work is not published open access, and the quality and reliability of the science that is being reported in top-ranking journals have been called into question[5,6].

One of the root causes of these problems is the existing incentive system in science, whereby researchers are frequently judged on the basis of the perceived prestige of the journal in which their work is published. The competition to publish in the most highly selective journals has now become particularly fierce, and the need to reform the incentive and evaluation system is pressing.

In this article, we discuss a new initiative in research communication, eLife, and how this and other developments can help to address existing concerns and build on the possibilities offered by digital media.

1 The origins of eLife

Funding agencies have a clear interest in how the research that they fund is communicated. Limiting the extent, speed or quality of that communication reduces the return on research funds. Over the past decade, scores of agencies have therefore developed policies that require open access to the published outputs of the work that they fund[7].

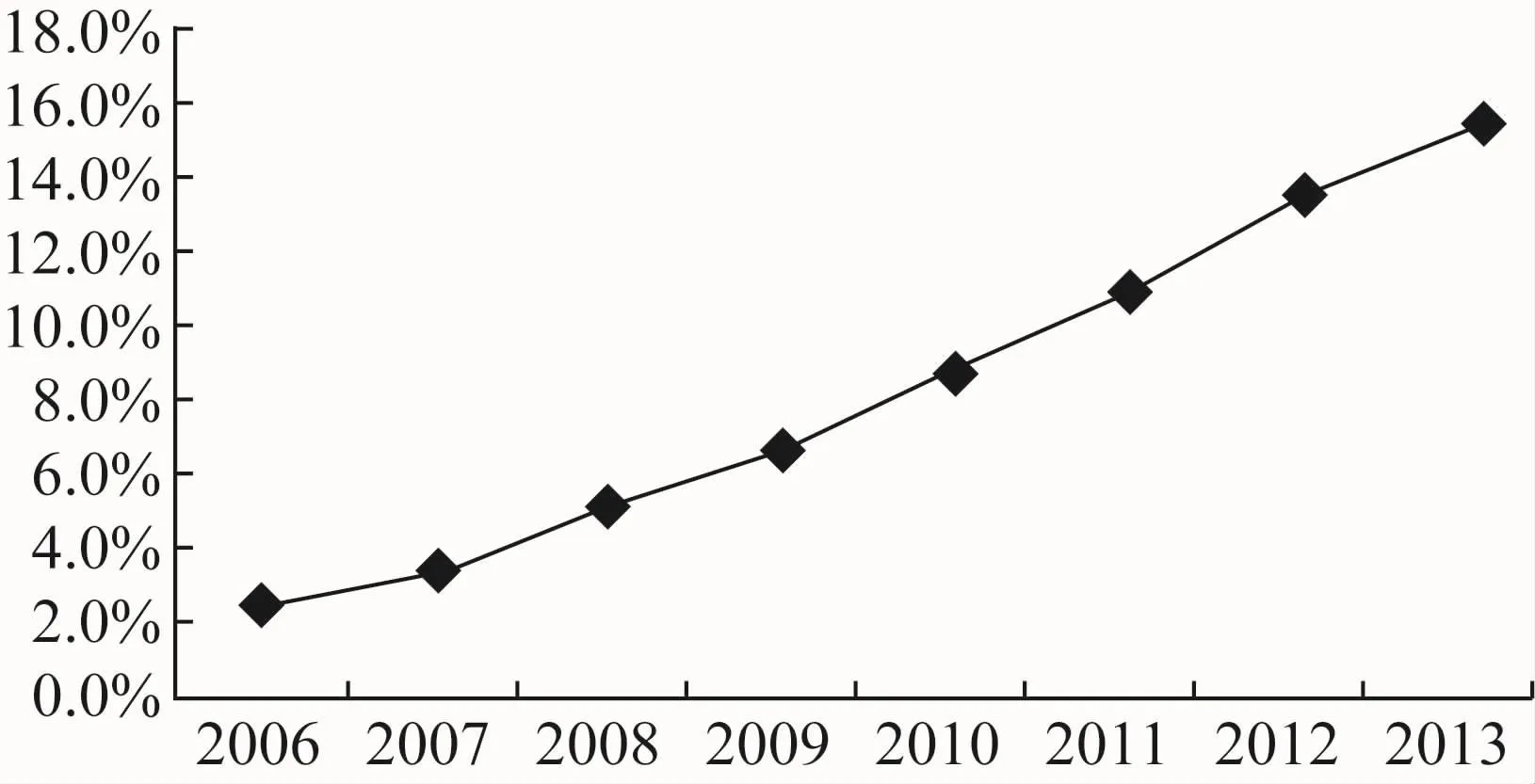

Despite the changing policy environment, progress towards open access has been slow, with the open access subset of PubMed Central representing only about 16% of the total biomedical literature (Figure 1). Once again, one of the chief causes of the slow progress is the strong incentive to publish in established high-impact-factor, generally commercial journals and in the many associated brand-name journals that are launched to capture specialized content. Although there are now many reputable open-access journals (such as those published by BioMed Central and PLOS), most of these journals have been launched in the past 10 years, and it takes time for new journals to establish the prestige necessary to attract a large fraction of content. Nevertheless, the policy environment and the growing volume of open access options (also within the traditional subscription publishers) have now created very strong momentum towards comprehensive open access.

Figure 1 The percentage of articles indexed each year in PubMed that are available in the “open access subset of PubMed Central”

As noted above, however, access is not the only issue that needs to be addressed in biomedical publishing, and it was in light of this broader set of concerns (and the opportunity to do better) that three major biomedical funders, namely the Howard Hughes Medical Institute, the Max Planck Society and the Wellcome Trust, decided to launch eLife. The initial guiding principles were that the journal should be run by an outstanding group of life science researchers committed to improving the publication of new research, should publish high-quality science to provide an alternative to existing high-profile journals, and should be a locus for innovation and experimentation in digital research communication. eLife was launched a little over two years ago.

The journal is supported with generous start-up funds because the agencies also recognised that a journal with such ambitious goals would require substantial investment in outreach and communication, the financial remuneration of senior academic editors who have many calls on their time, and a team of staff to support and develop the initiative.

2 Beginning with the editorial process

The very first step for eLife was to devise a more constructive and efficient editorial process for the journal, one that directly addressed the key editorial problems frequently encountered at other journals. There are two main differences from the more traditional process[8]. First, when reviewers have submitted their peer reviews on an article, they discuss their opinions of the work in an online forum. Their identities are revealed to one another and, guided by an expert academic editor, they arrive at a consensus view on whether and how the article should be revised for publication in the journal. In this way, any disagreements between the reviewers are discussed and usually resolved. Our experience to date suggests that, because reviewers know that this consultation will take place, they are encouraged to be constructive in their approach. The second innovation in the eLife process is that the editor writes a summary letter to the author, after the online consultation amongst the reviewers, so that the author knows exactly what needs to be done in order to get the work published.

The overarching goal is to provide succinct advice to the author, and to eliminate requests for revision that will require many months of work. In cases where the editors and reviewers feel that the authors' conclusions are not adequately supported without substantial additional work, the article is rejected with a summary of the key reasons. For authors who are encouraged to resubmit a revised article, another important consequence of the eLife process is that revised articles can frequently be reassessed by the editor, without going back to the reviewers, and most articles therefore only undergo one round of review and revision. The result is that the research is published more quickly, and any unnecessary revision is eliminated. The popularity of this process has been indicated by authors, editors and reviewers, who often enjoy a thoughtful discussion about the submitted work with their peers.

Importantly, eLife is also not constrained to publish a set number of articles each month (unlike more traditional high-profile journals); therefore, although the journal is selective, the editors judge each paper on its scientific merits and eLife can publish all the articles that meet their standards. If the findings and ideas are judged by the editors to have the potential to drive research forward, the editors will support publication in eLife. Authors are incentivised to tell their story in full, objectively and with a view to maximising the utility of the work for other researchers in their field. Reviewers, in light of the consultation step, are similarly incentivised to engage objectively with their colleagues in a constructive critique of the work that they have been invited to evaluate.

In future, we anticipate that eLife will take additional steps towards greater transparency in the editorial process. Journals such as Atmospheric Chemistry and Physics[9] and the BMJ[10] have demonstrated the benefits of more open approaches to peer review in two very different areas of research. Furthermore, as peer review becomes more transparent, reviewers will be able to gain academic credit for this important (but currently unrecognised) aspect of their work[11]. With recognition will come a greater incentive to engage as constructively as possible in this process. With this in mind, new initiatives have been developed to help researchers gain recognition for peer review activity (e.g. Publons, Ref 12). The pace of developments and experimentation in peer review provides evidence that new approaches to peer review both before and after formal publication are likely to be developed, as scholarly communication adapts to the opportunities offered by digital communication.

3 Digital presentation

Another aspect of eLife's vision is to explore the potential of digital media in the presentation and communication of new research findings and ideas. eLife is a digital product first and foremost. A straightforward first step was therefore to incorporate video files (sometimes including audio) into the body of the online article, much like a figure or a table (e.g. Video 1 in Ref 13).

A second feature of the launch version of eLife was to present supplementary figures in a more usable fashion. Typically, online journals place supplementary files (including tables, text, extra figures, data and so on) at the end of an article in formats such as PDF with limited functionality. At eLife, however, we place any supplementary figures alongside the main figures in the body of the article, so that they can be seen where they are most relevant. eLife also lists specific datasets that have been generated or used in the course of the article. The researchers responsible for these datasets are indicated, so that appropriate credit is given. The locations and access rights associated with the data are also provided so that interested researchers can reuse the data for their own work.

Along with other digital-only journals, eLife is helping to point the way towards more effective use of new technology in the presentation of research findings. However, there is a great deal more that can be done, as demonstrated by the first open-source product that eLife produced, eLife Lens. This article viewer provides an alternative to the conventional linear view of an article, so that users can navigate easily back and forth between the text and other items, such as figures, tables and references. eLife Lens is available for all of the eLife content, and is now also being piloted by several additional journals[14].

4 The evolution of research incentives

As discussed, eLife provides an open-access publication venue for high-quality research where editorial policies and practices are designed to create an objective and constructive experience for all concerned. There are also other open-access journals exploring new editorial practices, such as F1000 Research[15] and PeerJ[16], along with many additional tools and services to share data and resources openly, such as Figshare[17], Zenodo[18], Dryad[19], GitHub[20], and bioRxiv[21]. To complement the increasing range of opportunities to share research outputs, it is important to recognise the researchers who take advantage of these opportunities and adopt the most productive scientific behaviours. Towards this end, it is necessary to move away from assessing researchers by the journal in which their work is published, and focus instead on the full range of contributions that researchers are making to science.

A useful step towards judging research contributions on their own merits is to use information that can be generated online about the extent to which individual articles have been used. PLOS pioneered this approach with the provision of a rich collection of article-level metrics, and such data are now provided by many publishers[22]. New services such as ImpactStory[23], Altmetric[24] and Plum Analytics[25] have also emerged in recent years to support the widespread provision of metrics and indicators, primarily for individual research publications.

It is still early days for these approaches, but much useful data can already be presented to support a greater understanding of the impact and reach of research outputs[26,27]. The evidence provides quantitative as well as qualitative information (such as who is saying what about an individual article). New metrics and indicators are not limited to research articles and can be applied to other research outputs such as datasets, presentations and software. Ultimately, these new approaches have the potential to provide a much richer and more meaningful view of the accomplishments of individual researchers.

Despite the availability of new tools and data to support research assessment, such approaches will only become widely adopted if there are complementary changes in assessment practices and policies at funders and institutions. Signs of shifts in assessment practices can be seen. The San Francisco Declaration on Research Assessment (DORA) provides a set of recommendations to encourage such policy change[28]. As well as gathering the signatures of over 12,000 individuals and 500 institutions, the DORA website is also collecting examples of policies that shift the emphasis. In the UK, HEFCE has initiated an inquiry to look at the ways in which article-based metrics could support processes of research assessment, and has indicated that impact factors should play no part in the Research Excellence Framework[29]. Another example of policy change is provided by the US National Science Foundation, which encourages researchers to include research outputs other than journal publications in their grant applications[30].

As the focus of research assessment shifts towards a broader view of research outcomes, researchers will be incentivised to make their work maximally useful for prospective readers and users. Journals and other web resources will need to compete not in terms of impact factors but in terms of how effectively they ensure that new findings can be discovered and used, and in turn how they can help to build recognition for the researchers responsible for this work.

At eLife, we plan to explore new opportunities and incentives to communicate research more effectively. Recently we have introduced the ability for authors to extend their existing eLife publications with smaller articles describing interesting new findings[31]. Typically, these short Research Advances are assessed by the same editors and reviewers who oversaw the publication of the original article. This approach could also be taken further to allow additional authors to extend the work of others using short articles. The motivation for the introduction of Research Advances is to provide new ways to publish findings that bolster, extend or indeed challenge the original work, and in general to accelerate the communication of new research.

A further experiment that eLife is conducting is to be the publishing partner for the Reproducibility Project: Cancer Biology, along with the Center for Open Science and Science Exchange[32]. This substantial project will result in a large body of evidence examining the reproducibility of 50 high-profile papers in cancer biology. Both of these new projects, Research Advances and the Reproducibility Project, will provide opportunities to open up the scientific process to greater scrutiny, and will offer further incentives to researchers to share their work.

5 Next steps

Scientific journals are just one component in a complex research ecosystem, but they are tightly linked to the current incentive system in science. Given the fierce competition that exists for publication in high-profile journals today and some of the adverse consequences that arise from this competition, there is a pressing need for a new journal to publish work of the highest scientific merit. This is a core part of eLife's mission. However, eLife is also aiming to go much further than just creating an open-access journal. Key goals are to expand the range of options that researchers have to share their work and resources, and to support the broader progress of science by recognising researchers who share their work in the most effective ways.

As we work with the research community to shape the eLife initiative, one of the consistent themes that we have heard is the growing concern about the research environment for the early-career researcher community in particular. We have also found a strong appetite for change in research culture and incentives amongst this group. We have therefore created an advisory board for eLife comprising an outstanding and international group of graduate students, post-doctoral researchers, and early group leaders, so that the early-career researcher community has a strong voice in the policies and practices of this unique initiative[33].

The world of journals and research communication is steadily evolving thanks to the possibilities offered by digital media. With the strong and continuing support of biomedical funders, eLife has quickly established a reputation for publishing high-quality science. Coupled with other new initiatives in research communication, along with changes in policy and practice around research assessment, there is every reason to be optimistic about the prospects for radical improvements in publishing, the associated incentives, and scientific progress in general.

[1] Lawrence P. Lost in publication: how measurement harms science[J]. Ethics in Science and Environmental Politics, 2008, 8, 9-11. doi: 10.3354/esep00079.

[2] Pringle JR. An enduring enthusiasm for academic science, but with concerns[J]. Molecular Biology of the Cell, 2013, 24(21), 3281-4. doi: 10.1091/mbc.E13-07-0393.

[3] Raff M, Johnson A, Walter P. Painful publishing[J]. Science, 2008, 321(5885), 36. doi: 10.1126/science.321.5885.36a.

[4] Vosshall LB. The glacial pace of scientific publishing: why it hurts everyone and what we can do to fix it[J]. FASEB Journal, 2012, 26(9), 3589-93. doi: 10.1096/fj.12-0901ufm.

[5] Brembs B, Button K, Munafò M. Deep impact: unintended consequences of journal rank[J]. Frontiers in Human Neuroscience, 2013, 7, 291. doi: 10.3389/fnhum.2013.00291.

[6] Collins F S, Tabak L. Policy: NIH plans to enhance reproducibility[J]. Nature, 2014, 505(7485), 612-613. doi: 10.1038/505612a.

[7] SHERPA/JULIET website. http://www.sherpa.ac.uk/juliet/ (accessed Dec 9, 2014).

[8] Schekman R, Watt F, Weigel D. The eLife approach to peer review[J]. eLife, 2013, 2, e00799. doi: 10.7554/eLife.00799.

[9] Pöschl U. Multi-stage open peer review: scientific evaluation integrating the strengths of traditional peer review with the virtues of transparency and self-regulation[J]. Frontiers in Computational Neuroscience, 2012, 6, 33. doi: 10.3389/fncom.2012.00033.

[10] Groves T, Loder E. Prepublication histories and open peer review at The BMJ[J]. BMJ, 2014, 349(9), g5394. doi: 10.1136/bmj.g5394.

[11] Fitzpatrick K. Chapter 1 in: Planned obsolescence: Publishing, technology, and the future of the academy[M]. New York University Press, 2011.

[12] Publons website. https://publons.com/about/ (accessed Dec 10, 2014).

[13] Rao R P, Mielke F, Bobrov E, et al. Vocalization-whisking coordination and multisensory integration of social signals in rat auditory cortex[J]. eLife, 2014, 3, e03185. doi: 10.7554/eLife.03185.

[14] eLife website. http://elifesciences.org/elife-news/Lenspioneered-by-eLife-to-be-piloted-by-six-additional-publisherson-HighWire (accessed Dec 11, 2014).

[15] http://f1000research.com/.

[16] https://peerj.com/.

[17] http://figshare.com/.

[18] https://zenodo.org/.

[19] http://datadryad.org/.

[20] https://github.com/.

[21] http://biorxiv.org/.

[22] Fenner M. Letter from the Guest Content Editor: Altmetrics Have Come of Age[J]. Information Standards Quarterly, 2013, 25(2), 3. doi: 10.3789/isqv25no2.2013.01.

[23] https://impactstory.org/.

[24] http://www.altmetric.com/.

[25] http://www.plumanalytics.com/.

[26] Chamberlain S. Consuming Article-Level Metrics: Observations and Lessons[J]. Information Standards Quarterly, 2013, 25(2), 4-13. doi: 10.3789/isqv25no2.2013.02.

[27] Taylor M. Exploring the Boundaries: How Altmetrics Can Expand Our Vision of Scholarly Communication and Social Impact[J]. Information Standards Quarterly, 2013, 25(2), 27. doi: 10.3789/isqv25no2.2013.05.

[28] Schekman R, Patterson M. Reforming research assessment[J]. eLife, 2013, 2, e00855. doi: 10.7554/eLife.00855.

[29] REF2014 website. http://www.ref.ac.uk/faq/all/ (accessed Dec 11, 2014).

[30] Piwowar H. Value all research products[J]. Nature, 2013, 493(7431), 159. doi: 10.1038/493159a. Free version available at http://researchremix.wordpress.com/2013/07/12/full-text-value-all-research-products/.

[31] Patterson M, Schekman R, Watt F M, et al. Advancing research[J]. eLife, 2014, 3, e03980. doi: 10.7554/eLife.03980.

[32] Morrison S J. Time to do something about reproducibility[J]. eLife, 2014, 3, e03981. doi: 10.7554/eLife.03981.

[33] eLife website. http://elifesciences.org/elife-news/Workingtogether-eLife-and-early-career-researchers (accessed Dec 11, 2014).