Adaptive deployment optimization for a multi-layer surveillance network

2014-11-26HeYaobinZhangDianQiLiFengShengzhongMingZhongandFanJianping

He Yaobin,Zhang Dian,Qi Li,Feng Shengzhong,Ming Zhong,and Fan Jianping

1)Shenzhen Institute of Advanced Technology,Chinese Academy of Sciences,Shenzhen 518055,P.R.China

2)College of Computer Science and Software Engineering,Shenzhen University,Shenzhen 518060,P.R.China

3)The Third Research Institute of Ministry of Public Security,Shanghai 201204,P.R.China

It is well known that the cameras for surveillance are widely installed for public security,especially in the metropolitan zones.For example,it is reported that Shenzhen in China has equipped 2×105cameras in 2007[1], and Chongqing in China has launched the plan of installing 5×105cameras since 2011[2].Therefore,the surveillance videos become an important tool and data source in the field of public security.

However,“how to manage and utilize surveillance data”is a challenge issue for a large surveillance network system.One of the critical reasons is that data sources are widely spread and hard to be predict.Usually,surveillance monitors do not transmit the video data to the manager side.They send the data back when triggered.However,few research so far focus on understanding and exploring how to deploy the sufficient equipment so as to fully exploit the surveillance data.

The paper proposes a large surveillance data system,which is able to manage enormous monitors and computing nodes efficiently.We make a theoretical analysis on the equilibrium demand of the system,introduce a new queueing network model to characterize the dynamic video stream uploading behaviors from surveillance network,and derive the server capacity to optimize its realistic processing.To the best of our knowledge,this paper makes the first attempt to address the issue on how to quantify the dynamic incoming data flows.

Based on the quantitative model,we can optimize configuration ofcomputing nodes to achieve the bestapplication performance at a reduced cost.Furthermore,we will utilize history data records to manipulate the execution environment of computing nodes in the network.We formulate and deal with two optimization problems,which are the minimal server deployment and the dynamic routing for a satisfied QoS(quality of service).We propose an algorithm that can dynamically redirect the bursty requests flow to the sufficient nodes to avoid the overflow of the queueing and keep the system responding in time.Experimental results show thatitcan dramatically improve the overall performance of our system.

1 Queueing network and resource provision

Queueing network is a theory widely applied to many fields,such as processing control,communication,etc.Samari et al[3]describes an analytic model for performance studies of distributed computer networks.The model factors each node of a network into processing and channel components,and models each one separately using M/D/r or M/M/l queues.Recently,queueing network is utilized for enhancing the QoS of video application on the internet.Wu et al[4]leverages Jackson queueing network[5]with infinite-server queues to model the channel churns,though it has an impractical assumption that server resource are unlimited.Kumar et al[6]proposed a simple stochastic fluid model that seeks to expose the fundamental characteristics and limitations of P2P streaming systems.Wu et al[7]put forward a Jackson queueing network model to derive the demand for server capacity in VOD application.Jagannathan et al[8]designed scheduling policies that are robust to busty traffic on a simple queueing network consisting of two links only.Yang et al[9]used Jackson's network theorem to characterize the performance of mobile application servers among different cloud computing environments.Unlike previous research,to the best of our knowledge,our study is the first one to investigate and optimize the surveillance video network by using a queueing network model,which is more challenging than live streaming providing.

As more distributed systems are widely adopted for various applications,recent works trend to investigate work patterns and resource utilization in data centers.These investigations of real workload have revealed a vast of amount of wastage in data centers.Logothetis et al[10]addresses the need for“stateful dataflow”programs that can rapidly sift through huge,evolving data sets and presents a generalized architecture for continuous bulk processing that raises the level of abstraction for building incremental applications.Resource provision is another related topic in this field.Researchers mainly focused on predicating sufficient resource for the quality of service.Most of them are applied in cloud platform or grid networks[11-12].

2 System architecture

The surveillance data storage and processing system for public security(SDSPS2)can be sketched to be tree-structure distributed system as Fig 1.It consists of 4 layers for now,which are monitor,local station,area center,and great terminal in the bottom-up order.It can be extended to a higher tree structure by inserting more middle layers as the need of the real world grows.Monitor layer is the data source,and other layers are responsible for the data storage and processing.Higher layer nodes are more powerful in capability and performance than the lower ones.We note those nodes of the system in the following order:the great terminal is marked as node T,area centers are noted as node i,i=1,2,…,Ⅰ,whereⅠis the number of nodes in level 2;local stations under node i are node(i,j),j=1,2,…,Ji,where Jiis the child-nodes number of node i,and the number of monitors is ki,junder node(i,j)correspondingly.

2.1 Level 0:monitors

Monitor is the endpoint in our system.Each monitor has a sending buffer to store a short time of video.Nevertheless,it does not always send the video stream to its higher level stations unless by special orders.There are some triggers,in the form of many types,preset in the monitors level:it could be a pressure sensor to indicate a car over the traffic line in the wrong time,or a temperature sensor for fire alarm,or a smart video detector chip integrated in cameras to find out the wanted scenes automatically.Only if incidents fulfill the condition of a trigger,video stream in a certain time slice is pushed to its higher-level station for further analysis and storage.Based on the observation,we may assume that the time slice of each sent video stream has an averaged value T0,and its size could be r0T0,where r0(unit:byte/s)is the standard streaming playback rate.

Fig.1 The architecture of surveillance data storage and processing system for public security图1 公共治安监控数据存储和处理系统体系

2.2 Level 1:local stations

Each local station connects and manages monitors in its territory.Its topological structure is shown in Fig 2.Inside each local station,several servers are deployed to provide service for ongoing video streams.The tasks of those servers are mainly includ:① Storage,they are the interface of local multimedia database for upcoming video streaming.② First-round analysis,they will do the first round analysis,get themeta-data,and build the initial index for each video chuck.

Due to the shortage of availability or the low utilization rate,those servers may not have enough capacity to respond all the uploading requests to the same time.Therefore,those requests are buffered as a waiting queueing before they can get the service.

Specially,in local station node(i,j),the number of processed servers for ongoing video streams is noted as si,j.In addition,we can assume that the performance factor of each processing server is averagely equal to Pi,j,which is related to its bandwidth and executing ability(e.g.CPU,I/O).

Fig.2 Topological structure of a level-1 node图2 一级节点的拓扑结构

Furthermore,not all the upcoming videos are processed in this layer.Some of them are directly routed to the higher level nodes due to several reasons,so that the higher node needs to aggregate all live video in its territory to do a comprehensive analysis,etc.For similar reasons,video chucks in storage may be selected and pushed to its upper node for further analysis.As a result,we note these two kinds of uploading are“direct transfer”and“selective transfer”,respectively.

2.3 Other levels

Area center is a powerful processing center built upon lots of local stations.Its structure is similar to that of its child nodes.We can mark the number of its processing servers and the performance factor of each server as siand Pi,i=1,2,…,Ⅰ,correspondingly.

The great terminal is the most high-performance data center in our system.It can collect and analyze data from all its lower level nodes.It has sTprocessing servers to deal with upcoming videos,each of which has the performance factor PT.

3 System modeling analysis:a queueing network approach

3.1 Queueing network modeling

A Poisson process is a continuous-time stochastic process,which meets the following conditions:①The time between each pair of consecutive events has an exponential distribution with parameter λ,a.k.a.intensity,which is the expected number of events that occur per unit time.② The number of events in different time intervals is independent from each other.From recent research,the Poisson process is considered a good model for web services among many phenomena[13].

From observations,we can safely assume that the external arrival of uploading requests of the video streaming from monitors,or lower level nodes,follows a Poisson process with an average arrival rate of λ(its value may vary in different cases),and the service time of a job in the queue is exponentially distributed.

Considering the case of a level-1 node(i,j),storing and processing videos is regarded as a job in the queue.The arrival and accomplishment of video streams correspond to the job joining the queue and finishing from the queueing,while other important factors in the queueing model,such as service time and waiting time of a job,can mostly find a good mapping to our real system.It has si,jprocessing servers for incoming video streaming.We can model it as a queue Qi,jwith si,jservers and arrival rate λi,j.We can also get its service rate μi,j=Pi,j/(r0T0)and its service time 1/μi,j,where Pi,jis its performance factor and r0T0is the size of each video chuck.

As the theory of Poisson process,the sub-flows from stochastically splitting a Poisson flow,or the aggregation of multiple Poisson flows,are still Poisson flows,we can safely infer that the arrival to each queue in each node is Poisson process.Meanwhile,a Jackson network[8]is a network of queues where the arrivals at each queue form a Poisson process,and the job service times are exponentially distributed.An open Jackson network is one with external job arrivals into or departures from the system.As a result,we could model our system as the queueing network,which is also an open Jackson queueing network with several M/M/s/∞ queues,the number of which is equal to the number of datacenter nodes in our system.

We will do a detailed analysis of our system via the following network models.

3.2 Single-source external arrival model

We firstly consider the case that external data source are only from monitors under a single local station,node(i,j).We can build a data model representing this case as Fig 3.

In node(i,j),external arrivals with average arrival rate Λ may join the queue or directly be routed to the higher level node.Let α denote the probability of external arrivals joining the queue in current node.Therefore,for the M/M/si,j/∞ queue Qi,j,we have

where λi,jis the arrival rate,μi,jis the service rate of a single server,Pi,jis its performance factor and r0T0is the size of each video chuck.To avoid the waiting requests of the queue becoming infinity,we must have enough capability to deal with those requests to keep this system in the stable state.Mapping it into the Jackson network,given the constant factor Pi,j,we must have a sufficient number of processing servers si,jin the queue Qi,jin the equilibrium state,so that the expected sojourn time of each request Wsis no longer than T0,the average time slice of each video streaming.In other words,this condition also means the server utilization ρi,j< si,j.

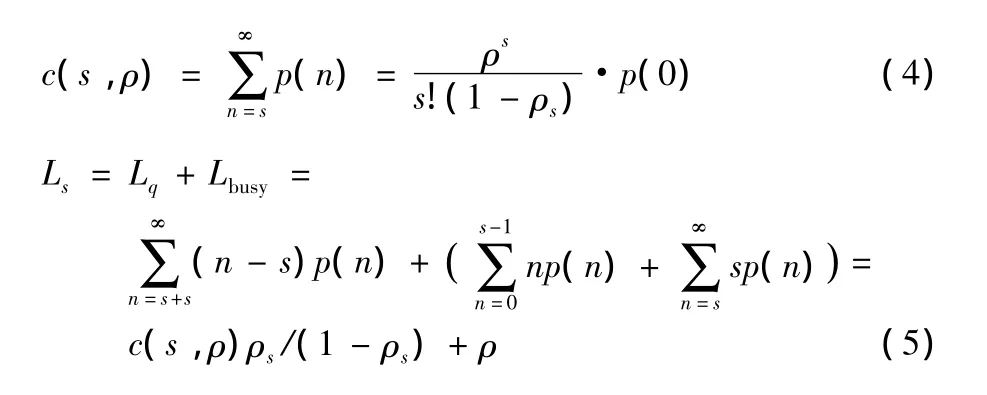

In the equilibrium of a Jackson network,each of its queue also stays equilibrium,thus we can have the following derivation:

where λ'iand λ'Tis the arrival rate of node i and node T in this single-source case.We note β as the probability of the occurrence of“selective transfer”.Thus βi,j,1and βi,j,2is the rate of“selective transfer”from node(i,j)to node i and node T respectively,as well as βifor node i to node T.

Fig.3 Single-source external arrival model图3 单一来源外部到达模型

Based on the queueing theory and Erlang-C formula[5],we can derive the equilibrium distribution of requests in the queue Q as where p(n)is the probability of n requests on a stable queue.Then we can infer important factors in a queue,such as c(s,ρ),the probability of waiting occurrence in the queue,and Ls,the expected number of requests in the queue,by the following formulae

In the actual computing,it is necessary to avoid computing a big number such as ρs.Thus,the formula of Lqis transformed as the following when computing

3.3 Real system model

In the above subsection,we discuss the single-source external arrival model,where the data flow only from node(i,j)to node i or node T.It is not the case in the real world.In our system,there are Jilevel-1 nodes under level-2 node i,i=1,2,…,Ⅰ,whereⅠis the number of nodes in level 2.It means the model is a tree-like network and the external sources are widely distributed on the level-0 layer.We describe the data flow and process between level-1 and level-2 by Fig 4.

Fig.4 Level 1 and level 2 system model图4 层1和层2的系统模型

From Fig 4,we can see there is no difference for the level-1 node(i,j)from the single-source external arrival model,but the arrival data flow of the level-2 node Ⅰbecomes multifold.Thus,we have to modify the arrival formula of λi.We can find that the arrival flow of node i is the aggregation of multiple flows from its child-nodes node(i,j),j=1,2,…,Ji.Therefore,we have λi.Similarly,the arrival flow of node T is the summary of ones from all its sub-nodes,λT=As a result,we can derive the arrival rate of different nodes by the following array of formulae:where αi,j,1and αi,j,2are the probability of the external data flows under node(i,j)joining the queue Qi,jand Qi;βi,j,1and βi,j,2are the rates of“selective transfer”from node(i,j)to node i and to node T respectively,as well as βifor node i to node T.Meanwhile,(1- αi,j,1) and(1- αi,j,2) are known as the“transparent rate”of external flow in queue Qi,jand Qi,respectively.In conclusion,the factors of our system with equilibrium can be conducted and inferred by Eq(1),(3)—(5),and(7).

4 System optimization

4.1 Server optimization

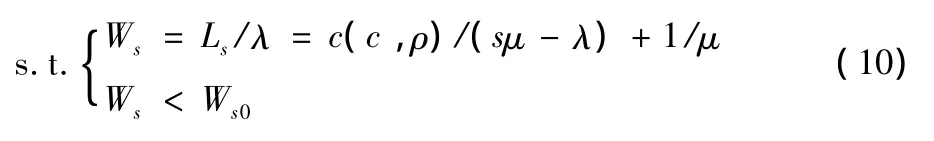

We now discuss how to find an appropriate number of processing servers in each datacenter with reduced investment.According to Little's Law,we have

Wsas the average sojourn time.As shown in the above discussion,the expected sojourn time of each request Wsshould be no longer than T0in a queue with equilibrium.Thus,given λ and T0,we can compute the minimal expected number of server s in queue Q,which satisfies the sojourn time and arrival rate condition at the same time.We can firstly get the value of λ by Eq(7),and Ls0=λT0by Eq(8),if all other parameters(i.e.α,β,Λ,P)are known.Then we could substitute all known parameters to Eq(5)with condition ρ< s,to derive the minimal value of s:We increase s one by one from an initial value(e.g.[ρ])to get Lsuntil we satisfy the condition Ls< Ls0.Therefore,given an expected sojourn time Ws0,we can perform this optimization to find out the minimal s to suffice requirement min(s)

4.2 Optimization on traffic bursts

The case we discussed in the above subsection is for normal situation,while its assumption is the arrival rate of λ is invariant.Actually,it is not the case in the real world.Concerning the application of public security,it is a key problem to quickly gather information as much as possible in certain circumstances.It is also one of the most important motivations to build our sys-tem.When emergency affairs occur,the requirements of data processing will be greatly increased suddenly.We have to study a mechanism to avoid the traffic bursts.

In the case of requirements booming,we cannot acquire more processing servers instantly in a datacenter.We have to consider taking the advantage of the networking system.In other word,we would increase the transparent rate of arrival data flow for a low-level node to its higher-level datacenter.The impact of manipulating the transparent rate to our network will be discussed below.

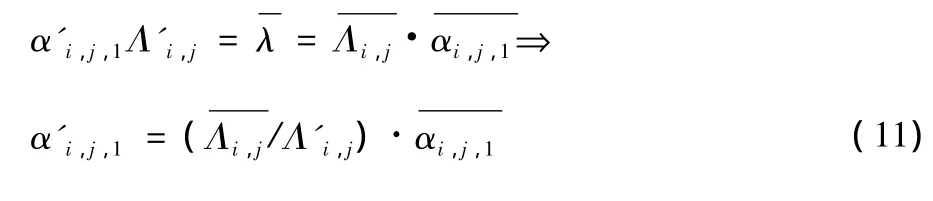

At first,we will find out the maximal arrival rate that a queue can afford in a given sojourn time Ws0in equilibrium state.It can be derived by the similar way as Eq(9)so that we have min(λ)

Thus we can compute the maximal arrival rate for each queue as a constant. Let Λ'denote the bursty external arrival rate of the system in emergency,we have ΔΛi,j=Λ'i,j-in the queue Qi,j,whereis the maximal external arrival rate that queue Qi,jcan afford with the original joining rate(where.In order to keep the arrival rate no more thanλ in Qi,j,we have to decrease the joining rate.Therefore,we have

where αi,j,1is the new joining rate of queue Qi,j.The amount of transferred workload is Δλi,j=ΔΛi,j

Suggested that all the transferred workload Δλi,jis accepted by the level-2 node i,if it still keeps the arrival rate lower thani,we can simply manipulate the new joining rate α'i,j,2by increasing the value of Δαi,j,1on the original one.Otherwise,we need to decrease the selective rate βi,j,1from node(i,j)temporally until it come to zero.If the data flow or its priority exceeds a guard line,the following strategies are executed depending on situations:①request and route the requests to the level-3 node;② decrease the arrival rates from other child-nodes..

Based on the discussion above,we setup a smart router in front of each node,which utilizes a dynamic routing algorithm to avoid the overflow of incoming requests.We log down the request arrivals and record the average value for each time interval(e.g.per hours,or other values based on the size of buffering).Thus,we can leverage the arrival patterns in the previous time to predict the stress of the incoming dataflow and dynamic change of the transparent rate of the local node.Another advantage of keeping the arrival log file is that we will study its history trace and do data mining.If the trace is found to be abnormal,a notice or warning will be given to the local node or its higher layer and the dynamic routing strategy may be changed.This design achieves implementation simplicity and has a good practical evaluation in the real world.

5 Evaluation

The overall system is a huge project,which is still under construction.The workload trace we archived for evaluation is from part of the system,which are 10 nodes under a level-2 node for 30 d.

The system parameters are listed as following.Each level-1 node is connected with several hundred monitors by high-speed fiber channel.The video rate is around 4 Mbit/s.Each time slice of the uploading video is set to 10 s.Thus its size is 4 Mbit/s×10 s=5 Mbyte.The configuration of servers in each node is Intel Xeon Processor E5540,4 Gbyte DDR memory,7 200 r/min SAS hard disk.It can handle average 2 Mbyte video data per second based on experiments.Thus we could have the average service rate μ =P/(r0T0)=2/5=0.4.The number of servers in level-1 for each node are listed

Table1.

Table1 Summary of nodes表1 各计算节点的服务器数量

The external arrivals are summed up and made an average value Λ(times/s)for each hour in our evaluation for now.The joining rate of queues in level-1 nodes and level-2 nodes are α1=0.95 and α2=0.05 for normal state.After applying the optimization algorithm,to manipulate the joining flow in the next interval we will change their value for each level-1 nodes based on its statistical result of Λ in current interval.As shown above,the guideline of average sojourn time in the queue Ws0is set to the time slice of the uploading video,which is equal to 10 s,to keep this system running smooth.Whenever it does not meet this standard within the time interval,we will record it as a“unsatisfied time interval”.The“unsatisfied rate”will be the ratio of“unsatisfied time interval”to the time elapsed for stating the health of the system,which is the lower the better.Fig 5 shows that the improvement of the“unsatisfied rate”of all level-1 nodes after applying the optimization.Those data are collected in 30 days for 720 intervals.We can infer that it would get a better result when the iterative interval is shorter.

Fig.5 Optimization vs raw of unsatisfied rate in level-1 nodes图5 一层节点优化后和原始不满意率对比图

The external arrivals are summed up and made an average value Λ(unit:times/s)for each hour in our evaluation for now.The joining rate of queues in level-1 nodes and level-2 nodes are α1=0.95 and α2=0.05 for normal state.After applying the optimization algorithm,we will change their value for each level-1 node based on its statistical result of Λ in current interval in order to manipulate the joining flow in the next interval.

Although the number of servers in a certain node is not easy to increase instantly,we can also use the data trace to estimate its optimized number at a reduced cost.The current server number of the level-2 node is 60,while its unsatisfied rate is reduced to 9.7×10-3under optimization.Based on the historical data collected,we can infer the relationship of server number and unsatisfied rate.Fig 6 plots its relationship varying the number of servers from 40 to 100 before and after the optimization.It can both help us to select an appropriate number of servers,and reveal the efficiency of our optimization.

Fig 7 reveals that the optimization can further change the distribution of the arrival rate in level-2 node.The level-2 node is more powerful than level-1 nodes in case of emergency,while it may be a relative low utilization rate averagely for a long time.The prediction optimization helps the level-1 nodes to export overloaded flows to the level-2 node.Fig 7 shows that the arrival rate will trend to distribute more equally in lower value zones,and the high arrival rate occurs much less.It proves that the optimization can greatly increase the utilization of the level-2 node and decrease the impact of bursty traffic.

Fig.6 Optimization vs raw of unsatisfied rate varying on the number of servers in level-2 node图6 二层节点在不同服务器下的不满意率优化对比图

Fig.7 Arrival rate distribution on level-2 node before/after optimization图7 二层节点优化前后数据到达率分布

Conclusions

In this paper,we propose a profound design of a large distributed surveillance network system that can be widely applied.We make a theoretical analysis on the equilibrium demand of the system,introduce a new queueing network model to characterize the dynamic video stream uploading behaviors from surveillance network,and derive the required server capacity to support the smooth processing.We then formulate two optimization problems for our system,including minimal server deployment,and dynamic routing for a satisfied QoS.We propose a practical algorithm,which can dynamically redirect the bursty requests flow to the sufficient nodes to avoid the overflow of the queueing and keep the system response in time.With this algorithm and utilizing instantaneous network statistics,the service provider periodically derives the incoming workload in the next interval,makes the decision for its routing strategies,and communicates with its higher layer for overall management.

We verify our analysis and evaluate our algorithm design in a realistic environment.The results show that it can archive a good performance under reduced equipments.

Since the system is still under construction,the future work may come from many fields.For example,we may consider the optimization of the price cost of utility and the distribution of computing servers.Furthermore,it is an important issue for us to archive more data logs from system for further data mining.It would both help the system working more efficient and discovering more interest information for research or for the benefit of civil affairs.

/参考文献:

[1]Peng Peng.200 Thousand Monitors has been Built in Shenzhen[N/OL].Xinhua Net,2007-03-21.http://news.xinhuanet.com/legal/2007-03/21/content_5876578.htm.(in Chinese)彭 蓬.深圳20万摄像头“上岗”[N/OL].新华社,2007-03-21.http://news.xinhuanet.com/legal/2007-03/21/content_5876578.htm.

[2]Cheng Wei.500 thousand monitors guarding Chongqing [N/OL].CBN,2010-11-23.http://www.yicai.com/news/2010/11/608181.html.(in Chinese)程 维.重庆50万个摄像头全城盯防 [N/OL].第一财经日报,2010-11-23.http://www.yicai.com/news/2010/11/608181.html.

[3]Samari N,Schneider G.A queueing theory-based analytic model of a distributed computer network [J].IEEE Transactions on Computers,1980,29(11):994-1001.

[4]Wu D,Liu Y,Ross K W.Queuing network models for multi-channel p2p live streaming systems[C]//IEEE International Conference on Computer Communications.Rio de Janeiro(Brazil):IEEE Press,2009:73-81.

[5]Bertsekas,D,Gallager R.Data networks[M].2nd ed.Upper Saddle River(USA):Prentice Hall,1992:221-233.

[6]Kumar R,Liu Y,Ross K.Stochastic fluid theory for P2P streaming systems[C]//IEEE International Conference on Computer Communications.Anchorage(USA):IEEE Press,2007:919-927.

[7]Wu Yu,Wu Chuan,Li Bo,et al.Cloudmedia:when cloud on demand meets video on demand[C]//The 31st International Conference on Distributed Computing Systems(ICDCS).Minneapolis(USA):[s.n.],2011:268-277.

[8]Jagannathan K,Jiang L,Naik P L,et al.Scheduling strategies to mitigate the impact of bursty traffic in wireless networks[C]//The 11th International Symposium on Modeling& Optimization in Mobile,Ad Hoc& Wireless Networks(WiOpt).Tsukuba(Japan):IEEE Press,2013:468-475.

[9]Yang W P,Wang L C,Wen H P.A queueing analytical model for service mashup in mobile cloud computing[C]//IEEE Wireless Communications and Networking Conference(WCNC).Shanghai(China):IEEE Press,2013:2096-2101.

[10]Logothetis D,Olston C,Reed B,et al.Stateful bulk processing for incremental analytics[C]//Proceedings of the 1st ACM Symposium on Cloud Computing,SoCC'10.New York(USA):Association for Computing Machinery,2010:51-62.

[11]Peixoto M L M,Santana M J,Estrella J C,et al.A metascheduler architecture to provide QoS on the cloud computing[C]//The 17th International Conference on Telecommunications(ICT).Doha(Qatar):IEEE Press,2010:650-657.

[12]Wu C, LiB C, Zhao S Q. Multi-channellive P2P streaming:refocusing on servers[C]//IEEE International Conference on Computer Communications.Phoenix(USA):IEEE Press,2008:1355-1363.

[13]Arlitt M F,Williamson C L.Internet web servers:workload characterization and performance implications[J].IEEE/ACM Transactions on Networking,1997,5(5):631-645.