YOLOv5ST: A Lightweight and Fast Scene Text Detector

2024-05-25

Yiwei Liu, Yingnan Zhao⋆, Yi Chen, Zheng Hu and Min Xia

1 School of Computer and Science, Nanjing University of Information Science and Technology, Nanjing, 210044, China

2 School of Automation, Nanjing University of Information Science and Technology, Nanjing, 210044, China

ABSTRACT Scene text detection is an important task in computer vision. In this paper, we present YOLOv5 Scene Text (YOLOv5ST), an optimized architecture based on YOLOv5 v6.0 tailored for fast scene text detection. Our primary goal is to enhance inference speed without sacrificing significant detection accuracy, thereby enabling robust performance on resource-constrained devices like drones, closed-circuit television cameras, and other embedded systems. To achieve this, we propose key modifications to the network architecture to lighten the original backbone and improve feature aggregation, including replacing standard convolution with depth-wise convolution, adopting the C2 sequence module in place of C3, employing Spatial Pyramid Pooling Global (SPPG) instead of Spatial Pyramid Pooling Fast (SPPF), and integrating Bi-directional Feature Pyramid Network (BiFPN) into the neck. Experimental results demonstrate a 26% improvement in inference speed compared to the baseline, with only marginal reductions of 1.6% and 4.2% in mean average precision (mAP) at the intersection over union (IoU) thresholds of 0.5 and 0.5:0.95, respectively. Our work strikes a balance between speed and accuracy in scene text detection, making it well-suited for performance-constrained environments.

KEYWORDS Scene text detection; YOLOv5; lightweight; object detection

1 Introduction

Scene text detection is an important application of object detection in computer vision. Traditionally, scene text detection methods tend to be characterized in terms of the text itself, such as considering the text as connected components [1], or considering the sequential orientation of the text [2]. However, due to the complex and diverse morphology of scene text, it is difficult to design an accurate detection method based on its characteristics alone. Therefore, it is more efficient to treat it as a general object detection task. In recent years, with the advancements in deep learning, significant progress has been made in this field. In particular, the advent of one-stage high-performance object detection models like the you only look once (YOLO) model [3] has enabled lightweight, real-time, and efficient scene text recognition networks.

Recently, numerous lightweight enhancement methods have been developed for YOLO, featuring low parameter counts and floating-point operations (FLOPs), examples of which include MobileNet [4], ShuffleNet [5], and GhostNet [6]. However, experiments show that lower parameter numbers and FLOPs do not always lead to a higher inference speed [7]. Therefore, our main motivation is to enable fast and practical scene text detection, and our work emphasizes the practical application of lightweight scene text detection models, making inference speed the focal point.

In this paper, we take the recent v6.0 version of YOLOv5 [8] as the basis for lightweight improvement. The YOLOv5 series has various models of different sizes; the smallest is YOLOv5s, which offers a small model size and fast inference speed and is suitable for scenarios where computational resources are limited. Therefore, we choose YOLOv5s for optimization, aiming to improve the inference speed while keeping an acceptable detection accuracy, so that the model is more friendly to low-performance devices. Our work mainly aims to reduce the computational cost by simplifying the network architecture, and to preserve accuracy by implementing lightweight feature aggregation methods that do not add much computational burden.

In our proposed YOLOv5ST (YOLOv5 Scene Text) model,the main contributions are as follows:

• Introducing depth-wise convolution to reduce computational complexity and cost, and removing unnecessary parts of the network to achieve faster inference speed.

• Improving the feature aggregation in the network to keep the detection accuracy as much as possible.

• Training the model with the SynthText scene text image dataset [9], and conducting detection experiments on the RoadText scene text video dataset [10].

The experimental results demonstrate the model’s ability to attain high inference speed while maintaining a relatively high level of accuracy. Additionally, we employ the Convolutional Recurrent Neural Network (CRNN) [11] scene text recognition model to realize the comprehensive functionality spanning efficient scene text detection through recognition.

2 Related Work

Traditional scene text detection methods are usually based on connected component analysis [1]. This approach employs various properties, including color, edge, stylus (density calculation and pixel gradient), and texture, to determine and examine the candidate areas of the text. Candidate components are first detected by color clustering, edge clipping, or extra area extraction (text candidate extraction). Then, non-text components are filtered through manually designed rules or automatically trained classifiers (candidate correction by deleting non-text candidates) [12]. For example, Stroke width transform (SWT) [13] is a feature-extracting method that examines the density and thickness of characters to determine the edges. Stroke feature transform (SFT) [14] extends SWT to enhance performance on component separation and connection. Maximally stable extremal region (MSER) [15] detects stable regions in an image by analyzing their stability under varying thresholds and then extracting connected components. These methods are efficient because the number of connected components is usually small. However, clutter and thickening of texts change the connected components, which reduces the accuracy and recall. To overcome this problem, the sliding window method [16] detects texts by shifting a window to all locations at several different scales to achieve a high recall; however, it has a high computational cost due to scanning all windows [12].

In recent years, neural networks, especially Convolutional Neural Network (CNN)-based networks, have shown great power in object detection. Therefore, it is feasible to consider scene text detection as a general object detection task. Object detection methods can be categorized into two-stage and one-stage methods. Two-stage methods divide the object detection task into two stages, where the first stage usually generates candidate object regions, and the second classifies and validates the candidate regions. Although two-stage methods enhance accuracy and robustness with the cooperation of two stages, they usually require more computational resources and time. On the other hand, one-stage methods detect objects directly from the input image; they are generally simpler and more efficient, but may have lower detection accuracy and recall in complex scenes.

The two-stage Regions with CNN features (R-CNN) series are typical early object detection models based on neural networks. R-CNN [17] adopts selective search to select anchor boxes (candidate regions), then extracts features for each anchor box using a CNN network and applies support vector machines (SVM) to classify the objects. Fast R-CNN [18] directly extracts the feature of the whole image, improving the efficiency. Faster R-CNN [19] introduces a region proposal network (RPN) to generate anchor boxes and predict object bounds and objectness scores at each position, leading to high-quality region proposals. Mask R-CNN [20] proposes a fully convolutional network (FCN) for precise pixel-to-pixel prediction, enabling finer alignment and more accurate segmentation. There are also CNN-based methods designed for scene text detection [12], such as TextBoxes [2] and Rotational Region CNN (R2CNN) [21]. To detect lined text, TextBoxes designs a series of text-box layers that utilize convolution and pooling operations on multi-scale feature maps to predict text bounding boxes. R2CNN generates axis-aligned bounding boxes for text using RPN. For each axis-aligned text box proposed by RPN, R2CNN extracts its pooled features using different pooling sizes and simultaneously predicts text/non-text scores, axis-aligned boxes, and tilted minimum area boxes. Finally, the detection candidates are post-processed using tilted non-maximum suppression to obtain the final detection results, achieving better performance with inclined anchor boxes. Nonetheless, these detection methods still have relatively high computational costs and struggle to run at high speed.

Real-time performance is crucial in numerous practical object detection applications, such as autonomous driving and real-time surveillance. Meanwhile, in terms of the trade-off between precision and recall, two-stage methods usually have certain misdetections and omissions in the first stage and need correction by the subsequent processing in the second stage. In contrast, through end-to-end training, one-stage methods offer a better balance between precision and recall, which reduces these issues. Therefore, one-stage object detection methods have gained more popularity. One typical example is SSD (Single Shot MultiBox Detector) [22], known for its fast speed, high accuracy, and adaptability to various object sizes. It converts the bounding boxes into a set of default boxes with different shapes and scales over different feature map locations, generates scores for each object category in each default box, and adjusts the boxes to better match the object shapes. TextBoxes++ [23] specializes in detecting texts with arbitrary orientations, small sizes, and significantly variant aspect ratios. This specialization is achieved through adaptations on SSD, including the generation of arbitrarily oriented bounding boxes and the incorporation of multi-scale inputs.

As a one-stage object detection model, YOLO was proposed in [3] with good accuracy and fast speed. YOLO divides the input image into grids, with each grid cell responsible for detecting objects within it. For each cell, YOLO predicts whether an object is contained and the bounding box coordinates. It also predicts the class probability of the object for each bounding box. In contrast to traditional two-stage methods, YOLO makes direct predictions on the whole image, greatly reducing the computational cost. Additionally, YOLO benefits from global contextual information, improving detection accuracy. Over time, the YOLO model has been continuously optimized and improved. YOLOv2 [24] introduces anchor boxes to increase recall and employs the Darknet-19 network to improve accuracy, leading to faster speed and better performance. YOLOv3 [25] extracts features from three different scales using a concept similar to feature pyramid networks (FPN) [26], enabling more accurate detection of objects of various sizes, and replaces the feature extraction network with Darknet-53 for better accuracy. YOLOv4 [27] combines numerous advanced features including Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT), Mish activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss. For example, a CSPDarknet [28] backbone is utilized to achieve a larger receptive field and better multi-scale detection capability. YOLOv5 [8] is more deeply optimized in activation functions and optimizers and shows better performance. In the recent version YOLOv5 v6.0, BottleneckCSP [28] modules have been replaced by C3 [14], the Focus module in the backbone has been replaced by a Convolution-BatchNormalization-Sigmoid (CBS) block [29], and Spatial Pyramid Pooling (SPP) [30] has been replaced by SPPF [8]. These modifications aim at enhancing detection speed and accuracy, making YOLO more suitable for real-time object detection, including scene text detection.

Most recent approaches are driven by deep network models [31]. For example, Fast convergence speed and Accurate text localization (FANet) [32] is a scene text detection network that achieves fast convergence speed and accurate text localization by combining transformer feature learning with normalized Fourier descriptor modeling. Despite this, we still use YOLO in this paper because it is easy to use in real applications and can be extended to other object detection tasks.

3 Methodology

3.1 Network Architecture

The overall network of YOLOv5ST is based on YOLOv5s, a small version in the YOLOv5 v6.0 series. As depicted in Fig. 1 (modifications are in red), with the main objective of increasing detection speed while maintaining detection accuracy as much as possible, four key modifications are made to the original YOLOv5 network architecture:

(1) Replacing standard convolution with depth-wise convolution. This substitution significantly reduces computational complexity by decreasing the count of convolution kernels.

(2) Adopting the C2 sequence module in place of C3. This streamlined module involves fewer convolutional operations and incorporates depth-wise convolution, thereby increasing detection speed.

(3) Employing SPPG instead of SPPF. SPPG is purposefully designed to fuse global features from varying scales. Consequently, the backbone’s output incorporates global information for subsequent stages in achieving higher accuracy.

(4) Integrating BiFPN into the neck. This integration facilitates the aggregation of a more extensive array of features within the feature pyramid, ultimately contributing to higher accuracy.

Modifications (1) and (4) are existing approaches, and modifications (2) and (3) are our contributions in this research work. Meanwhile, we integrate these modifications into the specific network architecture. Overall, the network architecture of our proposed YOLOv5ST model is depicted in Fig. 1.

Figure 1: Overall architecture of YOLOv5ST model

3.2 Depth-Wise Convolution

DWConv (depth-wise convolution) is used in our model to reduce the computational cost of standard convolutions.

In [33], the proposed convolutional operation, also known as DSC (depth-wise separable convolution), consists of two parts: depth-wise convolution and point-wise convolution. In depth-wise convolution, the convolutional filters are divided into N groups, where N equals the number of input channels, and point-wise convolution is a 1 × 1 convolution that maps the depth-wise output to the output channels.

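In a convolution operation, we denote the input feature map size as $D_F \times D_F$, the kernel size as $D_k \times D_k$, the number of input channels as $C_{in}$, and the number of output channels as $C_{out}$. For a standard convolution, the computation cost is

$$D_k \cdot D_k \cdot C_{in} \cdot C_{out} \cdot D_F \cdot D_F$$

For depth-wise convolution, the computation cost is

$$D_k \cdot D_k \cdot C_{in} \cdot D_F \cdot D_F$$

For point-wise convolution, the computation cost is

$$C_{in} \cdot C_{out} \cdot D_F \cdot D_F$$

Therefore, the overall computation cost of DSC is

$$D_k \cdot D_k \cdot C_{in} \cdot D_F \cdot D_F + C_{in} \cdot C_{out} \cdot D_F \cdot D_F$$

Different from the depth-wise separable convolution, we can let N equal the greatest common divisor of $C_{in}$ and $C_{out}$. Thus, the computation cost of DWConv is

$$D_k \cdot D_k \cdot \frac{C_{in}}{N} \cdot C_{out} \cdot D_F \cdot D_F$$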

DWConv can be applied in the CBS (Conv-BatchNorm-SiLU) block, which consists of a convolution layer, a batch norm layer, and a SiLU activation function [27], to form a DWCBS (DWConv-BatchNorm-SiLU) block.
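The cost comparison in this section can be checked numerically. The following is a minimal sketch, not the authors' code: it counts multiply-accumulate operations for a grouped convolution under the assumptions of stride 1 and same-size output, and the function names (`conv_cost`, `dsc_cost`, `dwconv_cost`) and the example layer sizes are hypothetical choices made for illustration.

```python
from math import gcd


def conv_cost(d_f: int, d_k: int, c_in: int, c_out: int, groups: int = 1) -> int:
    """Multiply-accumulate count of a convolution with `groups` groups.

    Assumes stride 1 and 'same' padding, so the output map is d_f x d_f.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("groups must divide both c_in and c_out")
    return d_k * d_k * (c_in // groups) * c_out * d_f * d_f


def dsc_cost(d_f: int, d_k: int, c_in: int, c_out: int) -> int:
    """Depth-wise separable convolution: depth-wise pass plus 1x1 point-wise pass."""
    depth_wise = conv_cost(d_f, d_k, c_in, c_in, groups=c_in)
    point_wise = conv_cost(d_f, 1, c_in, c_out)
    return depth_wise + point_wise


def dwconv_cost(d_f: int, d_k: int, c_in: int, c_out: int) -> int:
    """Single grouped convolution with N = gcd(c_in, c_out) groups."""
    return conv_cost(d_f, d_k, c_in, c_out, groups=gcd(c_in, c_out))


if __name__ == "__main__":
    # Illustrative layer: 40x40 map, 3x3 kernel, 128 -> 256 channels (assumed values).
    args = (40, 3, 128, 256)
    print("standard:", conv_cost(*args))
    print("DSC:     ", dsc_cost(*args))
    print("DWConv:  ", dwconv_cost(*args))
```

For this assumed layer, the single grouped convolution is cheaper than both the standard convolution and the two-step DSC, because it drops the point-wise pass entirely; the price is that channels are only mixed within each group.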

3.3 C2 Sequence Module

The C2seq (C2 sequence) module is inspired by C2f [34] and simplifies the original C3. These modules are depicted in Fig. 2.

Figure 2: (a) Bottleneck module used in C3; (b) C3 module used in YOLOv5; (c) C2f module used in YOLOv8; (d) Our proposed C2seq module with specific c_in and c_out parameters

As shown in Fig. 2a, the Bottleneck in C3 is designed to enhance feature extraction and information flow across different stages or layers of the network. As shown in Fig. 2b, C3 is a CSP block with 3 CBS blocks that passes the input through two parallel branches. As shown in Fig. 2c, C2f decreases the number of CBS blocks from 3 to 2 and uses a split operation for parallel computation. As shown in Fig. 2d, we propose C2seq, which further simplifies C2f. Experiments show that the split operation in C2f has a negative impact on the inference speed of the model, so we remove this operation. Then, to compensate for the lack of residual connections [28], we keep some C3 modules in our network, as shown in Fig. 1. In addition, we apply DWCBS to further simplify the module. In C2seq, the kernel size of the 2 CBS blocks in the Bottleneck is set to 3. Because the numbers of input and output channels of the Bottleneck are set to different values in C2seq, the shortcut in the Bottleneck is not used. This is acceptable because C2seq modules are mainly used in the neck, where the original C3 does not use the shortcut either; at the same time, it allows a higher channel compression ratio inside the Bottleneck to achieve better performance.

3.4 SPPG Module

In the YOLOv5s network, before feature aggregation in the neck, the output feature maps are sent to the SPPF (Spatial Pyramid Pooling Fast) module [8] to increase the receptive field and separate the most important features by pooling multi-scale versions of themselves [29].

However, multi-scale pooling need not be restricted to feature maps at a single scale. In the feature extraction process in the backbone, we have a sequence of feature maps at different scales. By considering those feature maps in one multi-scale pooling module, we can better aggregate global features, which is beneficial for accuracy. Therefore, in our network, we propose the SPPG (Spatial Pyramid Pooling Global) module, which is depicted in Fig. 3. In the PANet [35] of YOLOv5, there are three scales: P3, P4, and P5. The output feature maps of P3, P4, and P5 in the backbone are sent to SPPG simultaneously to fuse global features with different scales and take advantage of multi-scale features [36]. For each input feature map, a max pool operation and an average pool operation are applied in parallel. Max pooling facilitates the extraction of salient features, while average pooling retains background information. The collaborative use of max pooling and average pooling ensures an accurate and seamless fusion of global features. The kernel size and padding for each pooling operation are set at 3 (in contrast to 5 in SPPF), as smaller kernels enhance the detection of smaller objects, thereby improving overall detection performance [29]. The stride values for the two pooling operations are 4, 2, and 1 for the P3, P4, and P5 input feature maps, respectively. Meanwhile, the CBS blocks that are used in SPPF are removed for simplification.
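To see why strides of 4, 2, and 1 suit the three backbone scales, the pooled output sizes can be worked out with the usual floor-mode pooling formula. This is a hedged sketch rather than the released implementation: the 640-pixel input is an assumption, the down-sampling factors 8, 16, and 32 are the standard YOLOv5 values for P3, P4, and P5, and the padding of 1 is an assumption made here because it is a value for which the three pooled maps come out the same size with a kernel of 3. All names are hypothetical.

```python
def pool_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a pooling layer (floor mode, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


BACKBONE_STRIDES = {"P3": 8, "P4": 16, "P5": 32}  # standard YOLOv5 down-sampling factors
POOL_STRIDES = {"P3": 4, "P4": 2, "P5": 1}        # pooling strides stated in the text
KERNEL = 3                                        # pooling kernel size stated in the text
PADDING = 1                                       # ASSUMPTION: chosen so the outputs align


def sppg_output_sizes(input_size: int = 640) -> dict:
    """Pooled feature-map side length per scale for a square input image."""
    sizes = {}
    for level, factor in BACKBONE_STRIDES.items():
        feature_map = input_size // factor
        sizes[level] = pool_out_size(feature_map, KERNEL, POOL_STRIDES[level], PADDING)
    return sizes


if __name__ == "__main__":
    print(sppg_output_sizes(640))
```

Under these assumptions the P3, P4, and P5 maps are all reduced to the P5 resolution, which is what allows their pooled features to be fused in a single module.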

3.5 BiFPN Feature Aggregation

Based on the PANet [35] architecture in YOLOv5, there are 3 different scale features (P3, P4, and P5). Multi-scale feature fusion aims to aggregate features at different resolutions [37]. To fuse more features without adding much cost, we can implement the BiFPN architecture by adding an extra jump connection edge from the original input node to the output node if they are at the same level, thus reusing the feature in the original input node [37]. Specifically, in YOLOv5, the P4 output in the backbone can be concatenated in the top-down process in the neck with a jump connection edge. As shown in Fig. 4, the red edge represents the additional connection in BiFPN.

Figure 3: (a) The SPPF module used in YOLOv5; (b) The proposed SPPG module

Figure 4: YOLOv5 with BiFPN feature aggregation

4 Experiments

4.1 Data Set

We use a large image dataset to train the model for better precision and a video dataset to test scene text detection performance. Therefore, in our experiments, the SynthText dataset [9] and the RoadText dataset [10] are used. The SynthText dataset is an image dataset, and the RoadText dataset is a video dataset. SynthText is used as training data because, as a synthetic dataset, it has notable advantages due to its abundant data volume and precise annotations, making it highly suitable for training. RoadText serves as test data because it includes annotated ground truth information concerning the texts found on the road, which reflects a realistic working environment for the model.

SynthText contains synthetic text in diverse scene shapes and fonts, with 858,750 images, 7,266,866 word instances, and 28,917,487 characters in total. The images have various resolutions, mostly 600×450 pixels. Some examples are shown in Fig. 5.

Figure 5: Some examples of SynthText dataset

The RoadText dataset comprises 500 real-world driving videos. Each video is recorded at 30 FPS, is approximately 10 s long, and has a resolution of 1280×720 pixels. The dataset includes annotated ground truth information concerning the texts found on the road. Because it reflects a realistic working environment for the model, we chose this dataset for testing. Some examples are shown in Fig. 6.

Figure 6: Some examples of RoadText dataset

The RoadText dataset covers text in various complex scenarios that the model may encounter in real applications, as shown in Fig. 7.

Figure 7: Some examples of the RoadText dataset in different scenarios. (a) Light (normal) environment; (b) Dark environment; (c) Raining environment

4.2 Implementation Details

Our detection network implementation is based on YOLOv5s v6.0 and the PyTorch framework. Training runs for 11 epochs with a batch size of 64, a learning rate of 0.01, and a momentum of 0.937, using the SGD optimizer with a weight decay of 0.0005. We conduct an ablation study to verify the validity of each modification, as listed in Table 1. We also implement a series of lightweight detection networks with the same settings for comparison, as listed in Table 2. These tests are run on an NVIDIA GeForce RTX 2060 GPU.
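For readers reproducing the setup, the stated hyperparameters can be collected in one place. The sketch below is illustrative only: the class name, field names, and helper function are hypothetical and do not correspond to the YOLOv5 configuration files, and the iteration count assumes that every SynthText image is used for training, which the text does not state explicitly.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class TrainConfig:
    """Training settings as reported in the text (field names are hypothetical)."""
    epochs: int = 11
    batch_size: int = 64
    learning_rate: float = 0.01
    momentum: float = 0.937
    weight_decay: float = 0.0005
    optimizer: str = "SGD"


def total_iterations(cfg: TrainConfig, num_images: int) -> int:
    """Number of optimizer steps, counting the last partial batch of each epoch."""
    return cfg.epochs * ceil(num_images / cfg.batch_size)


if __name__ == "__main__":
    cfg = TrainConfig()
    # ASSUMPTION: all 858,750 SynthText images are used for training.
    print(total_iterations(cfg, 858_750))
```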

Table 1: Ablation study results

Table 2: Detection performance comparison

4.3 Ablation Study

In this section, we present the impact of various modifications applied to our proposed model. The experimental environment and datasets remain consistent throughout. The performance of the original YOLOv5s v6.0 model is listed in the first row of Table 1, and models with different modifications are listed in other rows.

For DWConv and C2seq, the number of parameters (params) and floating-point operations (FLOPs) decrease significantly, leading to a substantial increase in inference speed. Particularly noteworthy is the case of C2seq, which exhibits a slight increase in mean Average Precision (mAP). In the case of SPPG and BiFPN, there is a notable increase in the number of parameters, FLOPs, and mAP, while the drop in inference speed is insignificant. These results indicate that the modifications have the intended effect.

4.4 Scene Text Detection

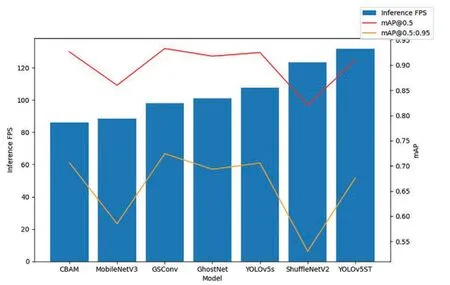

To evaluate scene text detection performance, we compare our proposed YOLOv5ST model with other YOLO-based object detection models. The results are listed in Table 2.

From Table 2, it can be seen that among the listed models, our proposed YOLOv5ST model has the best inference speed at 135.58 FPS, an increase of 26.09% compared to YOLOv5s. As a relatively small and light model, its number of parameters and FLOPs are reduced by 38.66% and 42.41% compared to YOLOv5s, respectively. Although other models like GhostNet, MobileNet v3, and ShuffleNet v2 have fewer parameters and FLOPs than ours, they do not achieve an inference speed as fast as ours. At the same time, our proposed YOLOv5ST model keeps a good mAP, with an acceptable decrease of only 1.63% and 4.19% in mAP 0.5 and mAP 0.5:0.95 compared to YOLOv5s, respectively. In conclusion, our proposed YOLOv5ST model has better overall performance in scene text detection. The performance comparison is also shown in Figs. 8 and 9. Since YOLOv5ST is the closest to the upper left corner of Fig. 9, it has the best overall performance.
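The two accuracy metrics differ only in the IoU threshold at which a predicted box counts as a match: mAP 0.5 uses a single threshold of 0.5, while mAP 0.5:0.95 averages over the ten thresholds from 0.5 to 0.95 in steps of 0.05. The following minimal sketch illustrates the IoU computation and the threshold set; the box format `(x1, y1, x2, y2)` and the helper names are assumptions for illustration, and the full mAP computation (ranking by confidence, precision-recall integration) is deliberately omitted.

```python
def iou(box_a, box_b) -> float:
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Thresholds 0.50, 0.55, ..., 0.95 used by the mAP 0.5:0.95 metric.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred, gt) -> list:
    """Thresholds at which `pred` would count as a correct detection of `gt`."""
    value = iou(pred, gt)
    return [t for t in IOU_THRESHOLDS if value >= t]


if __name__ == "__main__":
    prediction = (0, 0, 100, 100)   # hypothetical predicted text box
    ground_truth = (0, 0, 100, 72)  # hypothetical annotated text box
    print(iou(prediction, ground_truth), matched_thresholds(prediction, ground_truth))
```

A loosely localized box can therefore count as correct at the 0.5 threshold but fail at the stricter ones, which is consistent with the drop in mAP 0.5:0.95 being larger than the drop in mAP 0.5.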

In addition, to implement a concrete application of an end-to-end scene text detection and recognition system, YOLOv5ST integrates the CRNN [11] model to recognize the detected text. To demonstrate the joint performance of our scene text detection model and the CRNN scene text recognition model in a practical application, some examples are shown in Fig. 10. The models can detect and recognize most of the texts in the dataset, so many applications can be built on our models with good performance, especially on performance-limited devices like drones, closed-circuit television cameras, and other embedded devices.

Figure 8: Detection performance comparison

Figure 9: Detection performance comparison

Figure 10: Some examples of scene text detection and recognition

Compared to the original YOLOv5, YOLOv5ST has fewer false detections, as shown in Fig. 11. We attribute this to the optimized feature extraction and increased feature aggregation in our model, which lead to better accuracy.

Also, compared to the original YOLOv5, YOLOv5ST has better performance in handling various complex scenarios. Fig. 7 shows different scenario examples in the RoadText dataset. In those scenarios, precision and recall are shown in Table 3. In most scenarios, YOLOv5ST has better recall and precision than YOLOv5s.

Table 3: Detection performance comparison

Figure 11: Some examples of YOLOv5 and YOLOv5ST

However, the model still has some false detections, as shown in Fig. 12. For instance, the model occasionally misclassifies crosswalks and other objects that have visual patterns similar to text as textual elements. These misclassification cases show that the complexity of real-world scenes still presents a challenge for the model. To solve this, we need a more precise approach to training that specifically handles complex environments, by discovering deeper intrinsic connections of visual features and using more training data. Furthermore, the current model still has the potential for optimization and refinement through additional training.

Figure 12: Some examples of false detections

5 Conclusions and Future Work

Fast real-time scene text detection is an important task in object detection. In this paper, we propose a lightweight and fast scene text detection model based on the YOLOv5 v6.0 network. Our proposed YOLOv5ST model implements four key modifications on YOLOv5s to reduce the computational cost, increase inference speed, and keep detection accuracy as much as possible. The proposed model uses DWConv and C2seq to simplify and lighten the network and uses SPPG and BiFPN to enhance feature aggregation. We conduct experiments to demonstrate the feasibility of these modifications and compare YOLOv5ST with other YOLO-based lightweight object detection models. The results show an increase in inference speed of over 26% with less than 5% mAP decrease, supporting the validity of our model. We also combine the model with a CRNN model to implement an end-to-end scene text detection and recognition system, so that practical applications can be developed based on our models, especially on performance-limited devices like drones, closed-circuit television cameras, and other embedded devices.

In summary, this paper improves the YOLOv5s network architecture to reduce the burden on the original backbone network and improve feature aggregation, and verifies the resulting performance by experiments.

We believe our research will produce positive results and influence the object detection field. In lightweight object detection tasks, how to better balance accuracy and inference speed has long been a key research question. This research shows that it is possible to achieve both fast and accurate text detection, and these optimizations can also be combined with other object detection algorithms, such as newer versions of YOLO, or applied to other object detection tasks.

In future work, we will attempt to further enhance both the accuracy and efficiency of our model. One key direction is to consider more data scenarios, for example different text types and occlusion situations, as well as more kinds of weather conditions like foggy and snowy scenarios, to improve the robustness of the model in complex scenes. Another key direction is to combine our approach with the latest versions of YOLO, building lighter models by optimizing the network architecture, fine-tuning hyperparameters, and redesigning the backbone networks or feature extractors to achieve better performance. Additionally, we will conduct more experiments to investigate optimization algorithms and loss functions that best suit our specific problem domain.

Acknowledgement: The authors would like to express their gratitude for the valuable feedback and suggestions provided by all the anonymous reviewers and the editorial team.

Funding Statement: This work is supported in part by the National Natural Science Foundation of PR China (42075130) and Nari Technology Co., Ltd. (4561655965).

Author Contributions: Study conceptualization, Yiwei Liu, Yi Chen and Yingnan Zhao; Data collection, Yiwei Liu and Yi Chen; Investigation, Yiwei Liu, Yi Chen, Zheng Hu and Min Xia; Methodology, Yiwei Liu, Yi Chen and Yingnan Zhao; Model implementation and training, Yiwei Liu and Yi Chen; Data analysis, Yiwei Liu and Yi Chen; Manuscript draft, Yiwei Liu and Yingnan Zhao; Manuscript revision, Yiwei Liu and Yingnan Zhao. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The code is available at https://github.com/lyw02/YOLOv5ST.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.
