An image dehazing method combining adaptive dual transmissions and scene depth variation

2024-01-08

LIN Lei, YANG Yan

(School of Electronic and Information Engineering, Lanzhou Jiaotong University, Lanzhou 730070, China)

Abstract: To address imprecise transmission estimation and color cast in single-image dehazing algorithms, an image dehazing method combining adaptive dual transmissions and scene depth variation is proposed. Firstly, the haze image is converted from RGB color space to Lab color space, morphological processing and filtering are performed on the luminance component, and the atmospheric light is estimated in combination with the maximum channel. Secondly, a Gaussian-logarithmic mapping of the haze image is used to estimate the dark channel of the haze-free image, and the bright channel of the haze-free image is obtained from an inequality relation of the atmospheric scattering model; the dual transmissions are thus obtained. Finally, an adaptive transmission map that jointly optimizes the dual transmissions is constructed according to the relationship between the depth map and the transmission. A high-quality haze-free image can then be recovered directly with the atmospheric scattering model. Experiments show that the recovered results have natural color, thorough dehazing, rich detail and high visual contrast, and that good dehazing is achieved in different scenes.

Key words: Lab color space; scene depth; function mapping; adaptive dual transmission; night image dehazing

0 Introduction

Outdoor images captured in foggy environments lose contrast and color fidelity because light is absorbed and scattered by turbid media (e.g., particles and water droplets) in the atmosphere during propagation. In addition, since most automatic systems require high image clarity, heavily degraded haze images prevent them from working properly. Obtaining clear images therefore benefits subsequent image processing and computer vision applications such as image classification, image/video detection, remote sensing, video analysis, and recognition [1-2].

Currently, image dehazing methods can be broadly divided into three categories: image enhancement [3-4], machine learning [5-8], and image restoration [9-13]. Representative enhancement-based methods are the wavelet transform algorithm [3] and the Retinex algorithm [4]. Such algorithms are widely applicable and were commonly used in early research. However, they neglect the nature of image degradation and only perform simple processing to improve visual appearance, so they cannot achieve true dehazing. Algorithms based on machine learning mainly apply neural networks to image dehazing. Cai et al. [5] devised an end-to-end network model by combining assumptions and prior information in the recovery algorithm, and the restoration results were ideal. Li et al. [6] proposed an all-in-one network model that rewrote the atmospheric light and transmission into a new function, used a multi-layer convolutional network to train and optimize them, and finally obtained the haze-free images. Ren et al. [7] proposed a multi-scale convolutional neural network to train the transmission and used a fine-scale network to optimize it. Li et al. [8] further improved network accuracy by gradually filtering network residuals, so that the detail of haze images was well preserved and the dehazing effect was apparent. This type of algorithm usually requires numerous training samples to attain good network performance. However, large datasets of real haze images are not currently available, and most training images are synthetic. Therefore, this kind of algorithm is also limited.

Recently, single-image restoration algorithms based on priors or hypotheses have made great progress. These algorithms establish an atmospheric scattering model based on the essence of image degradation and restore a single image by estimating the atmospheric light and transmission. Because the restoration effect is realistic and natural, this kind of algorithm has received extensive attention. After statistical analysis of a large number of outdoor haze-free images, He et al. [9] found that, in non-sky areas, most pixels of a haze-free image have at least one color channel with a low value. Based on this finding, they proposed the dark channel prior method, which recovers the haze-free image with an obvious dehazing effect. However, this algorithm fails in sky regions. Wang et al. [10] proposed a dehazing method based on linear transformation, which estimated the minimum channel of the haze-free image through a linear transformation of the minimum channel of the haze image and used a quadtree subdivision method to estimate the atmospheric light value. The dehazing of this method was thorough, but the restored image was generally dark. Yang et al. [12] presented a dehazing algorithm in which a segmentation function replaced the minimum filtering operation. It effectively alleviated the halo artifacts that the dark channel prior algorithm tends to produce at abrupt changes in depth of field. Zhu et al. [13] proposed a dehazing method based on the color attenuation prior (CAP), which used the relationship between brightness and saturation to obtain the parameters of a scene depth model through supervised learning and then estimated the transmission. However, this method could not adjust its parameters adaptively, and the restored image often left residual fog.

In this paper, an image dehazing method combining adaptive dual transmissions and scene depth variation is proposed. Firstly, following the CAP, scene variation information is obtained from the relationship among brightness, saturation and haze concentration, and a depth map is constructed. Then, the dark channel and the bright channel of the haze-free image are estimated by using a Gaussian-logarithmic mapping and the inequality relationship between the haze image and the haze-free image, and an adaptive transmission is obtained according to the scene variation information. Finally, the luminance component in Lab color space and the maximum color channel of the haze image are combined to estimate the local atmospheric light for restoring the haze-free image. The experimental results show that the proposed algorithm obtains a more precise transmission and is suitable for dehazing in a variety of scenes.

1 Related work

1.1 Atmospheric scattering model

The atmospheric scattering model widely used in the field of image dehazing is

$$I(x) = J(x)\,t(x) + A\,(1 - t(x)), \tag{1}$$

where $I(x)$ is the observed hazy image; $J(x)$ is the restored haze-free image; $t(x)$ is the medium transmission, and $A$ is the global atmospheric light.

Assuming that the haze is homogeneous, $t(x)$ can be defined as

$$t(x) = e^{-\beta d(x)}, \quad 0 \le t(x) \le 1, \tag{2}$$

where $\beta$ is the medium extinction coefficient, and $d(x)$ is the scene depth (i.e., the distance between the scene point and the imaging equipment).
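As a minimal sketch of Eqs.(1) and (2), the following NumPy function synthesizes a hazy image from a haze-free image and a depth map. The function name `synthesize_haze` and the default values of `A` and `beta` are illustrative assumptions, not values taken from the paper.

```python
import numpy as np

def synthesize_haze(J, depth, A=0.9, beta=1.0):
    """Apply Eqs.(1)-(2): I = J*t + A*(1 - t) with t = exp(-beta * d).

    J     : haze-free image in [0, 1], shape (H, W, 3)
    depth : scene depth d(x) >= 0, shape (H, W)
    A     : global atmospheric light (scalar, assumed value)
    beta  : medium extinction coefficient (assumed value)
    """
    J = np.asarray(J, dtype=np.float64)
    depth = np.asarray(depth, dtype=np.float64)
    t = np.exp(-beta * depth)          # Eq.(2): transmission in (0, 1]
    t3 = t[..., None]                  # broadcast over the color channels
    I = J * t3 + A * (1.0 - t3)        # Eq.(1)
    return I, t
```

With increasing depth the transmission decays toward 0, so the synthesized pixel values approach the atmospheric light, which matches the loss of contrast described for distant scenes.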

1.2 Dark channel prior and bright channel prior

He et al. [9] put forward the dark channel prior through statistical observation of a large number of haze-free images. In most areas excluding the sky region, the intensity of some pixels tends to 0 in at least one color (RGB) channel, that is

$$J^{dark}(x) = \min_{y \in \Omega_1(x)} \Big( \min_{c \in \{r,g,b\}} J^{c}(y) \Big) \to 0, \tag{3}$$

where $\Omega_1(x)$ is a filter window centered on $x$; $J^{c}(y)$ is a color channel of image $J$; and $J^{dark}(x)$ is the dark channel map.

Inspired by the dark channel prior, Bi et al. [14] proposed a bright channel prior, which states that pixels in light source or sky areas usually contain at least one high-intensity color channel. The formula is

$$J^{bright}(x) = \max_{y \in \Omega_2(x)} \Big( \max_{c \in \{r,g,b\}} J^{c}(y) \Big), \tag{4}$$

where $\Omega_2(x)$ is the filter window centered on $x$ and $J^{c}(y)$ is a color channel of image $J$. Eq.(4) describes the bright channel map of any image.
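The dark and bright channel maps can be sketched in NumPy as a per-pixel minimum (or maximum) over the color channels followed by a minimum (or maximum) filter over a square window. The helper `_window_filter`, the edge padding and the default radius of 7 are assumptions made for illustration; the paper does not specify the window size here.

```python
import numpy as np

def _window_filter(channel, radius, reduce_fn):
    """Reduce (np.min or np.max) over a (2r+1) x (2r+1) window with edge padding."""
    h, w = channel.shape
    size = 2 * radius + 1
    padded = np.pad(channel, radius, mode="edge")
    # Collect every shifted view of the padded map, then reduce across shifts.
    shifts = [padded[dy:dy + h, dx:dx + w]
              for dy in range(size) for dx in range(size)]
    return reduce_fn(np.stack(shifts, axis=0), axis=0)

def dark_channel(img, radius=7):
    """Minimum over color channels, then minimum over the local window."""
    img = np.asarray(img, dtype=np.float64)
    return _window_filter(img.min(axis=2), radius, np.min)

def bright_channel(img, radius=7):
    """Maximum over color channels, then maximum over the local window."""
    img = np.asarray(img, dtype=np.float64)
    return _window_filter(img.max(axis=2), radius, np.max)
```

The stacked-shift approach keeps the example short and dependency-free; for large images a dedicated morphological filter would be considerably faster.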

Assuming that the atmospheric light is known, the transmission can be derived from Eq.(1) as

$$t(x) = \frac{A - I^{c}(x)}{A - J^{c}(x)}. \tag{5}$$

Once the transmission $t(x)$ is known and the atmospheric light $A$ is estimated, the haze-free image can be recovered by inverting Eq.(1):

$$J(x) = \frac{I(x) - A}{\max(t(x),\, t_0)} + A, \tag{6}$$

where $t_0$ is a lower bound on the transmission. To avoid a denominator of 0, it is usually taken as 0.1.
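The recovery step, that is, inverting Eq.(1) with the transmission clamped from below by $t_0$, can be sketched as follows. The function name `recover` and the final clipping to [0, 1] are illustrative assumptions.

```python
import numpy as np

def recover(I, t, A, t0=0.1):
    """Invert the scattering model: J = (I - A) / max(t, t0) + A.

    I  : hazy image in [0, 1], shape (H, W, 3)
    t  : transmission map, shape (H, W)
    A  : atmospheric light, scalar or array broadcastable to I
    t0 : lower bound on the transmission (0.1 as stated in the text)
    """
    I = np.asarray(I, dtype=np.float64)
    t_safe = np.maximum(np.asarray(t, dtype=np.float64), t0)[..., None]
    J = (I - A) / t_safe + A
    return np.clip(J, 0.0, 1.0)   # assumption: keep the result displayable
```

When $t(x)$ falls below $t_0$, the clamp deliberately leaves some haze in the result rather than amplifying noise by dividing by a value near zero.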

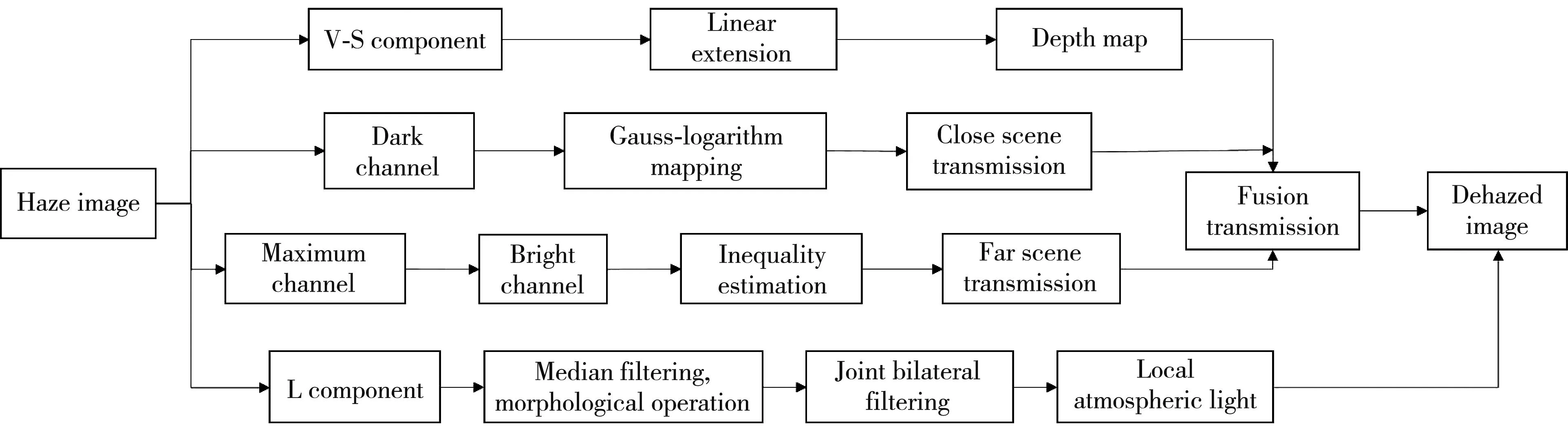

According to atmospheric imaging theory, estimating the transmission is a prerequisite for obtaining a clear image. However, the degree of image degradation differs between far and close scenes, so the transmission should be estimated differently in each. On this basis, an image dehazing method combining adaptive dual transmissions and scene depth variation is proposed. The flow chart is shown in Fig.1.

Fig.1 Algorithm flow chart

Firstly, a rough scene depth map of the haze image is obtained according to the statistical relationship among haze concentration, brightness and saturation. To combine the dual transmissions for accurate transmission estimation, the rough scene depth map is linearly stretched to obtain a depth map. Secondly, according to the dual channel prior theory, the dark and bright channels of the haze image are obtained, and the dark channel of the haze image is mapped by a function to obtain the dark channel of the haze-free image, which gives the close scene transmission. The far scene transmission is obtained according to the bright channel prior and the inequality relationship of atmospheric imaging theory. An adaptive fused transmission is then obtained by combining the depth map and the dual transmissions. Finally, the local atmospheric light is estimated by using the inequality relationship between the luminance component and the maximum channel, and a haze-free image is recovered with the atmospheric scattering model.

2 Proposed method

2.1 Atmospheric light estimation

The atmospheric light $A$ is a crucial parameter in the atmospheric scattering model and largely determines the visual quality of the recovered haze-free image. In Ref. [9], the average of the highest-intensity pixel values in the haze image that correspond to the brightest 0.1% of pixels in the dark channel image is used as the atmospheric light value. This method is vulnerable to white areas (e.g., snowy ground or a white wall), so the estimated atmospheric light value is prone to errors. Wang et al. [10] used a quadtree subdivision algorithm to estimate the atmospheric light value, but it was also susceptible to interference from large white areas. Kumari et al. [15] used morphological operations to eliminate the effect of partially white areas in haze images on the estimation of atmospheric light. Duan et al. [16] pointed out that there is no globally uniform atmospheric light value in night or multi-source haze images. To estimate the atmospheric light more accurately and make the dehazing algorithm applicable to more scenes, the local atmospheric light method [17] is improved. The specific steps are as follows.

1) The haze image is converted from RGB color space to Lab color space. The luminance component is extracted and normalized, and median filtering is performed on it to eliminate noise and weaken the impact of highlight pixels.

2) Morphological processing is applied to the result of step 1) to avoid the influence of white areas on the atmospheric light estimation. The size of the morphological filter kernel is min(W, H)/15, where W and H are the width and height of the original image.

3) Joint bilateral filtering is applied between the luminance component $L(x)$ and the result of step 2), so that the block effect is eliminated and the primary local atmospheric light $A_0(x)$ is estimated.

4) In general, the values of the local atmospheric light $A(x)$ are not greater than the pixel values of the maximum channel of the haze image, while the primary local atmospheric light is too small in local areas, as shown in Fig.2(c). Thus, there is an inequality relationship, that is

$$A_0(x) \le A(x) \le I'(x), \tag{7}$$

where $I'(x)$ denotes the maximum channel of the haze image. Accordingly, the local atmospheric light is taken as

$$A(x) = \alpha A_0(x) + \beta I'(x), \tag{8}$$

where $\alpha$ and $\beta$ are the atmospheric light fusion parameters satisfying $\alpha + \beta = 1$. To make the local atmospheric light adaptive, the grayscale average of the primary local atmospheric light $A_0(x)$, computed pixel by pixel, is used as the value of $\alpha$.

Fig.2 Comparison of local atmospheric light improvement process and restoration results
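The fusion in Eq.(8) can be sketched as below. Two points are assumptions made for this sketch: $I'(x)$ is taken to be the per-pixel maximum over the color channels of the haze image, and the "grayscale average" that defines $\alpha$ is interpreted as the mean of $A_0(x)$ over all pixels. The function name `fuse_atmospheric_light` is hypothetical.

```python
import numpy as np

def fuse_atmospheric_light(A0, I):
    """Eq.(8): A(x) = alpha * A0(x) + beta * I'(x), with alpha + beta = 1.

    A0 : primary local atmospheric light in [0, 1], shape (H, W)
    I  : hazy image in [0, 1], shape (H, W, 3)
    """
    A0 = np.asarray(A0, dtype=np.float64)
    I_max = np.asarray(I, dtype=np.float64).max(axis=2)  # assumed I'(x)
    alpha = float(A0.mean())      # assumed reading of the adaptive weight
    beta = 1.0 - alpha
    return alpha * A0 + beta * I_max
```

Because the result is a convex combination, it lies between $A_0(x)$ and $I'(x)$ wherever $A_0(x) \le I'(x)$, which agrees with the inequality relationship stated in the text.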

The improvement process of the local atmospheric light and the final result are shown in Fig.2. Compared with the proposed result, the local atmospheric light of Ref. [17] is too high in local areas, which causes partial over-brightness or color cast in the recovered image. The improved local atmospheric light gives a better restoration. Fig.3 shows the comparison of local atmospheric light values and their recovery results in a scene without a strong light source.

Fig.3 Comparison of local atmospheric light and recovery results between this paper and Ref.[17]

2.2 Adaptive dual transmissions

2.2.1 Depth map

Zhu et al. [13] conducted experiments and analysis on a large number of haze images and found that the haze concentration varies with the scene depth in most outdoor haze images. At the same time, the higher the haze concentration, the greater the difference between brightness and saturation (i.e., $v(x)-s(x)$) in HSV color space. Thus, the haze concentration is linearly related to the difference between brightness and saturation. Combined with the relationship between scene depth and haze concentration, it follows that the scene depth increases with the difference between brightness and saturation.

The relationship is

$$d(x) \propto c(x) \propto v(x) - s(x), \tag{9}$$

where $v(x)$ is the brightness component of the scene and $s(x)$ is the saturation component, both obtained after the haze image is converted from RGB color space to HSV color space; $c(x)$ represents the haze concentration; $d(x)$ is the scene depth. Fig.4 shows the relationship between $c(x)$ and $d(x)$.

Fig.4 Relationship between haze concentration and scene depth

It can be seen that the haze concentration and the difference between brightness and saturation are lowest in misty ranges (i.e., close scene) and highest in dense-haze ranges (i.e., far scene). That is, as the haze concentration increases, the difference between brightness and saturation gradually increases, and the scene depth also becomes larger.
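The depth cue in Eq.(9) can be computed directly from an RGB image, since the HSV value is the maximum channel and the HSV saturation is (max - min)/max. The following is a minimal sketch; the function name `brightness_minus_saturation` is an assumption for illustration.

```python
import numpy as np

def brightness_minus_saturation(img):
    """Return v(x) - s(x) for an RGB image in [0, 1], shape (H, W, 3)."""
    img = np.asarray(img, dtype=np.float64)
    v = img.max(axis=2)                    # HSV value (brightness)
    mn = img.min(axis=2)
    safe_v = np.where(v > 0, v, 1.0)       # avoid division by zero on black pixels
    s = (v - mn) / safe_v                  # HSV saturation; 0 where v == 0
    return v - s
```

A bright, washed-out gray pixel (typical of dense haze) yields a large value, while a vivid, saturated pixel (typical of a nearby haze-free surface) yields a value close to zero.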

The result of $v(x)-s(x)$ is taken as a rough scene depth, as shown in Fig.5(b). However, it fails for white objects. The difference between brightness and saturation of a white object is usually large, and a large $v(x)-s(x)$ is interpreted as far scene in the scene depth information. Therefore, a white object in the close scene makes the estimated scene depth incorrect, so the detail information of white objects should be removed.

Fig.5 Scene depth information and depth map optimization process

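Minimum filtering of the difference between brightness and saturation is used to approximate the scene depth, and the result is then linearly stretched. The specific formulas are

$$d'(x) = \min_{y \in \Omega(x)} \big( v(y) - s(y) \big), \tag{10}$$

$$D(x) = \frac{d'(x) - \mathrm{Min}(d'(x))}{\mathrm{Max}(d'(x)) - \mathrm{Min}(d'(x))}, \tag{11}$$

where $\Omega(x)$ is the filter window centered on $x$; $\mathrm{Max}(d'(x))$ is the maximum of $d'(x)$; $\mathrm{Min}(d'(x))$ is the minimum of $d'(x)$. To eliminate the block effect and improve continuity where the scene depth changes abruptly, $D(x)$ is optimized by using cross-bilateral filtering with Eqs.(12)-(13).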

Gσs(‖x-y‖)[1-g(y)],

(12)

(13)

After cross-bilateral filtering, the output depth map is smooth overall. As the scene depth changes from close to far, the pixel value of the depth map gradually increases without block effect. Therefore, the optimized depth map reflects the depth information effectively.
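The construction of the rough depth map before cross-bilateral filtering can be sketched as a minimum filter followed by a linear stretch. This sketch assumes that the linear stretch is a min-max normalization to [0, 1] and that the window is square with edge padding; the function name `rough_depth_map` and the default radius are hypothetical, and the cross-bilateral refinement is intentionally left out.

```python
import numpy as np

def rough_depth_map(diff, radius=7):
    """Minimum-filter v(x) - s(x), then stretch linearly to [0, 1].

    diff   : brightness minus saturation, shape (H, W)
    radius : half-width of the square filter window (assumed value)
    """
    diff = np.asarray(diff, dtype=np.float64)
    h, w = diff.shape
    size = 2 * radius + 1
    padded = np.pad(diff, radius, mode="edge")
    shifts = [padded[dy:dy + h, dx:dx + w]
              for dy in range(size) for dx in range(size)]
    d_min = np.min(np.stack(shifts, axis=0), axis=0)   # suppress small white objects
    span = d_min.max() - d_min.min()
    if span == 0:                                      # flat input: no depth variation
        return np.zeros_like(d_min)
    return (d_min - d_min.min()) / span                # assumed min-max stretch
```

The minimum filter is what removes an isolated white object in the close scene: its large $v(x)-s(x)$ value is replaced by the smaller values of its neighbors, so it no longer reads as far scene.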

2.2.2 Estimation of dual transmissions

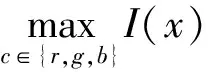

Currently, the dark channel prior algorithm [9] is the most representative algorithm in the field of dehazing. It assumes that the dark channel value of the haze-free image (normalized by the atmospheric light) is approximately zero. However, the dark channel value in white or sky areas is much greater than 0. Such regions do not satisfy the dark channel prior, so $J^{c}(x)$ in Eq.(5) is unknown, and the transmission $t(x)$ cannot be estimated accurately. To cope with this situation, Ref. [18] introduces a confidence value based on human vision assumptions to recalculate the dark channel of the haze image. This decreases the contour effect by reducing the dark channel of the sky area, but it also reduces the dark channel of non-sky areas, leading to overestimation of the transmission map. Here, new assumptions are made about the dark pixel values of haze-free images. Since only the haze image is known, its dark channel is used to approximate the dark pixel values of the haze-free image in the close scene range, while the dark pixel values of brighter regions of the haze-free image (i.e., the sky and light source ranges) are raised to avoid overly small values of $t(x)$. The calculation formulas are

(14)

(15)

(16)

where $J^{d1}(x)$ and $J^{d2}(x)$ are two function mappings of the dark channel of the haze image (i.e., $I^{d}(x)/A(x)$); $\delta$ is the variance, set to 0.15; $\varepsilon$ is an adjustment parameter, set to 0.95. $J^{d1}(x)$ is a Gaussian mapping whose result is lower in the close scene and higher in the far scene. According to the dark channel theory, however, the dark pixel values in close scene ranges only tend to 0. To accurately estimate the dark pixel values in the close scene of the haze-free image, the geometric average of the Gaussian mapping and the logarithmic mapping is used as the output. This also avoids the situation where the Gaussian mapping makes the dark channel value of the haze image (i.e., $I^{d}(x)/A(x)$) equal to the dark channel value of the haze-free image (i.e., $J^{d}(x)/A(x)$) in the far scene. In this way, the geometric average (i.e., $J^{d\prime}(x)$) suppresses $J^{d1}(x)$ where its values are too large in the far scene and compensates it where its values are too small in the close scene, as shown in Fig.6.

Fig.6 Function mapping process

The transmission calculation formula of close scene is

(17)

where $\omega_1$ is an adjustment coefficient, set to 0.95.

Using only the close scene transmission does not give a thorough recovery, as shown in Fig.7(c). To improve the dehazing effect, a far scene model is further used to estimate the far scene transmission. A large number of experiments have shown that the transmission estimated by the bright channel works better in sky or light source areas, and such regions are mostly distributed in the far scene. Therefore, the bright channel is used to obtain the far scene transmission. To make the transmission change more slowly at the junction of the far scene and the close scene, the bright channel is improved. In the atmospheric scattering model, the dark channel of the haze-free image ($J^{d}(x)$), the bright channel of the haze-free image ($J^{b}(x)$) and the bright channel of the haze image ($I^{b}(x)$) satisfy the inequality

$$J^{d}(x) \le J^{b}(x) \le I^{b}(x). \tag{18}$$

Fig.7 Comparison of single transmission, dual transmissions, and their restoration results

The bright channel map of the haze-free image is improved, and the formula is

$$J^{b}(x) = (1 - \varphi)\,J^{d}(x) + \varphi\,I^{b}(x), \tag{19}$$

where $\varphi$ is an adjustment parameter in the range [0, 1]. Because the bright channel value of the haze-free image is more closely related to the bright channel of the haze image, $\varphi$ is taken as the average of $I^{b}(x)$. Then the transmission of the far scene can be calculated by

(20)

where $\omega_2$ is an adjustment coefficient, empirically set to 0.95.
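The interpolation in Eq.(19) can be sketched as follows, with $\varphi$ computed as the mean of the bright channel of the haze image as described above. The function name `haze_free_bright_channel` is hypothetical, and the inputs are assumed to be normalized to [0, 1] so that $\varphi$ stays in [0, 1].

```python
import numpy as np

def haze_free_bright_channel(J_d, I_b):
    """Eq.(19): J_b = (1 - phi) * J_d + phi * I_b, with phi = mean(I_b).

    J_d : estimated dark channel of the haze-free image, shape (H, W)
    I_b : bright channel of the haze image, shape (H, W)
    """
    J_d = np.asarray(J_d, dtype=np.float64)
    I_b = np.asarray(I_b, dtype=np.float64)
    phi = float(I_b.mean())                 # adaptive weight in [0, 1]
    return (1.0 - phi) * J_d + phi * I_b
```

Since the output is a convex combination of $J^{d}(x)$ and $I^{b}(x)$, it automatically satisfies the inequality in Eq.(18) wherever $J^{d}(x) \le I^{b}(x)$.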

2.2.3 Adaptive fusion coefficient

Since $t_d$ is more suitable for close scene ranges and $t_b$ is more suitable for far scene ranges, the scene depth information of the haze image is used to construct a weight $\lambda$ for fusing the close scene and far scene transmissions. To make the transmission change slowly at the junction of close scene and far scene, and to give $t_d(x)$ a larger weight in the close scene and $t_b(x)$ a larger weight in the far scene, a Gaussian function is used to fit the depth map. The specific equation is

(21)

where $\delta_\lambda$ is the variance of the fusion coefficient $\lambda(x)$. After extensive experiments, $\delta_\lambda$ is taken as 0.25.

Then the fused transmission is

$$t(x) = t_b(x)\,\lambda(x) + t_d(x)\,(1 - \lambda(x)). \tag{22}$$

As can be seen from Fig.7, the dehazing of the sky region is not thorough when only the close scene transmission is used. After the fusion of dual transmissions, a more thorough dehazing effect is obtained in both far scene and close scene ranges.
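The per-pixel fusion of Eq.(22) can be sketched as below. The weight map is passed in as an argument because the exact Gaussian form of Eq.(21) is not reproduced here; the function name `fuse_transmissions` and the clipping of the weight to [0, 1] are assumptions for illustration.

```python
import numpy as np

def fuse_transmissions(t_d, t_b, lam):
    """Eq.(22): t = t_b * lambda + t_d * (1 - lambda), evaluated per pixel.

    t_d : close scene transmission, shape (H, W)
    t_b : far scene transmission, shape (H, W)
    lam : fusion weight from the depth map, shape (H, W); near 0 in the
          close scene and near 1 in the far scene
    """
    t_d = np.asarray(t_d, dtype=np.float64)
    t_b = np.asarray(t_b, dtype=np.float64)
    lam = np.clip(np.asarray(lam, dtype=np.float64), 0.0, 1.0)  # assumed safeguard
    return t_b * lam + t_d * (1.0 - lam)
```

A smooth weight map gives a gradual handover from $t_d(x)$ to $t_b(x)$ across the junction of close and far scenes, which is the behavior the Gaussian fit of the depth map is intended to provide.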

2.3 Image restoration

After the transmission $t(x)$ is obtained, it is refined by guided filtering with $I_{gray}$ as the reference image. Combined with the local atmospheric light $A(x)$, a haze-free image is obtained by using Eq.(6), in which the global atmospheric light $A$ is replaced by the local atmospheric light $A(x)$. Fig.8 compares the transmissions and recovery results obtained by different algorithms.

Fig.8 Comparison of transmissions and restoration results of different algorithms

As can be seen from Fig.8, the transmission estimated by the proposed method is more accurate, particularly in the light source range, and is more consistent with the dual channel prior theory; the recovery result is clearer and more natural.

3 Experiment

The operating system is 64-bit Windows 10, the program running environment is Matlab 2016a, and the hardware is an Intel(R) Core(TM) i7-6500U CPU @ 2.50 GHz with 8 GB RAM. A large number of images are compared and analyzed in both subjective and objective aspects.

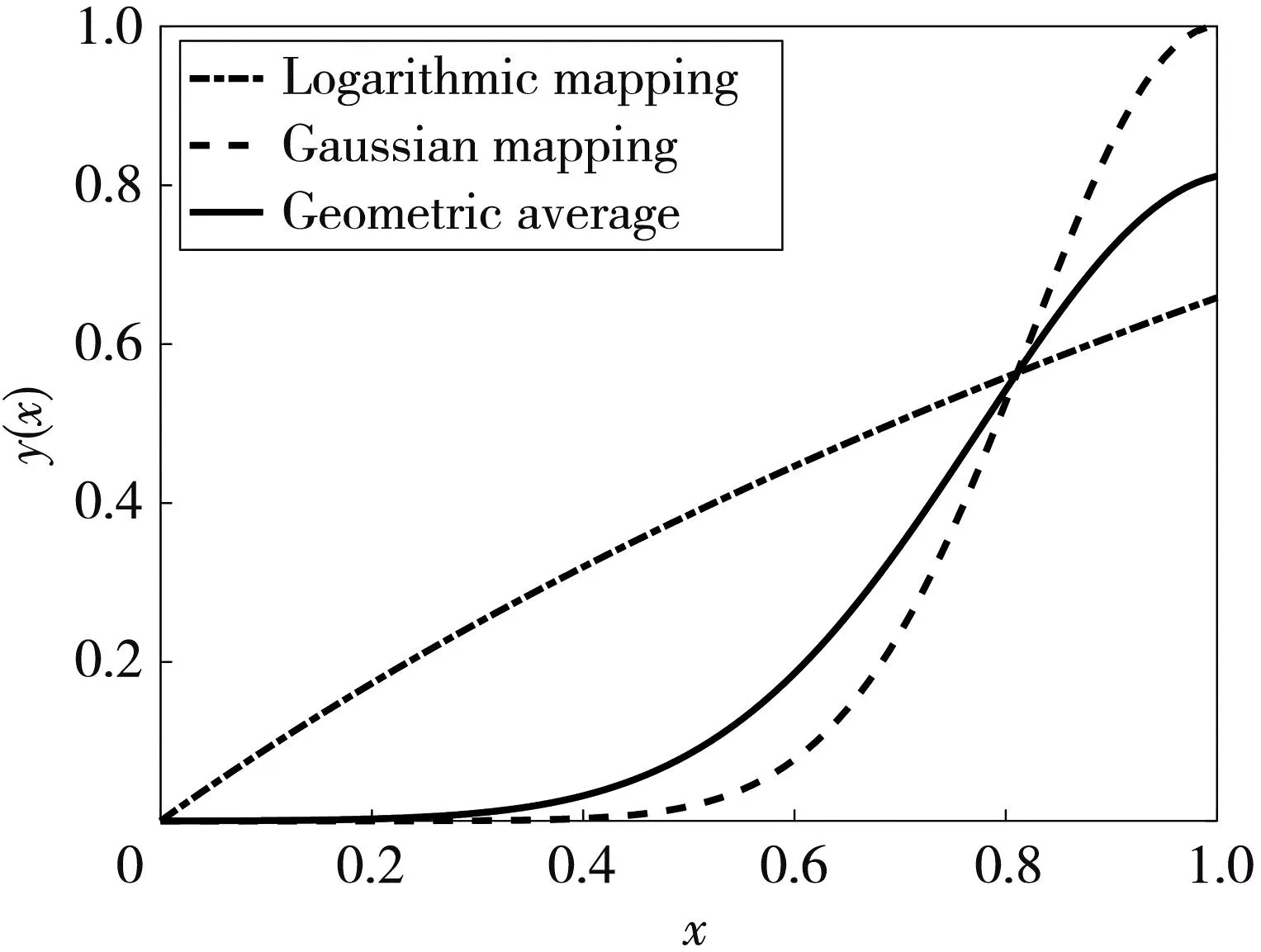

3.1 Comparison of dehazing effects

At present, the classical dehazing methods primarily include the dark channel prior (DCP) algorithm [9], the nonlinear compression (NC) algorithm [12], the color attenuation prior (CAP) algorithm [13], the multi-scale convolutional neural network (MSCN) algorithm [7], and the end-to-end network dehazing (EEND) algorithm [5]. These algorithms are compared with the proposed algorithm in seven different scenes. The experimental results are shown in Figs.9 and 10.

As can be seen from Fig.9, all six algorithms can effectively remove haze. The DCP algorithm has an obvious advantage in misty scenes, where the recovered image is clear and natural (Fig.9(a)). However, when haze images contain sky or strong light source ranges, the restored images are not ideal, as shown in Fig.9(b)-9(f), and there is an obvious halo effect in the sky area. The NC algorithm restores the detail of the haze-free image well because it replaces the minimum filtering with a fine piecewise function and uses nonlinear compression to improve transmission accuracy. However, this algorithm sacrifices the color information of the image, leading to color cast (e.g., the far scene in Fig.9(a) and 9(e)), and some haze images are not thoroughly dehazed, as shown in Figs.9(d) and 10(c). The CAP algorithm removes most of the haze, but residual haze remains (e.g., the far scene in Fig.9(a)) and is more obvious at sudden changes in scene depth, as shown in Fig.9(d). The MSCN algorithm uses a multi-scale convolutional neural network to remove haze, but the color of some images is distorted, as shown in Fig.9(b)-9(e), and the recovery result of the misty scene is oversaturated, as shown in Fig.9(a). The EEND algorithm gives excellent, clear and natural recovery results for haze images containing sky ranges, as shown in Fig.9(b), 9(c) and 9(e), but its recovery result is too dark for misty scene images, as shown in Fig.9(a).

Fig.9 Comparison results of real hazy images restoration effect

Compared with these algorithms, the proposed algorithm achieves better dehazing in close scenes, far scenes and night multi-light-source scenes. The haze-free images retain the characteristics of distant and near scenes in the original image and have high contrast and clear structure. Compared with the DCP algorithm, the proposed algorithm removes haze better in far scenes and strong light source scenes (Fig.9(b), 9(c), 9(e) and 9(f)). Compared with the NC and MSCN algorithms, the results of the proposed algorithm show no apparent color cast in misty scenes or in images with abrupt changes in scene depth (Fig.9(a) and the transition between far scene and close scene in Fig.9(d)). Compared with the CAP algorithm, the proposed algorithm removes haze more thoroughly. The EEND algorithm also gives fairly ideal recovery results, but the proposed algorithm performs better in misty scenes, as shown in Fig.9(a). The proposed algorithm outperforms the other algorithms on both single light source haze images and night haze images, as shown in Figs.9(f) and 10. In Fig.10, the recovery results of the proposed algorithm are clear and natural, with higher visual contrast and more thorough haze removal than the other algorithms.

3.2 Objective index evaluation

To avoid the one-sidedness of subjective evaluation, further analysis is conducted with objective evaluation. A no-reference image quality assessment method [19-20] is used to objectively evaluate Figs.9 and 10. Image contrast ($C_G$), normalized average gradient ($r$), visual contrast ($VCM$), universal quality index ($UQI$), image visibility check-and-balance standard ($IVM$) and running time ($T$) are used as objective evaluation indexes for the recovered images. Among them, $C_G$ represents the color degree of the recovered image; $r$ denotes the ability of the algorithm to recover edge and texture information, measured by the increase in image gradient; $VCM$ indicates the visibility of the image; $UQI$ reflects the structural index of the image itself; and $IVM$ is the evaluation index of visible edges. The larger these five indexes, the better. $T$ reflects the running speed of the algorithm; the smaller it is, the better. The definitions of image contrast, normalized average gradient, visual contrast, and image visibility check-and-balance standard are

$$C_G = C_j - C_i, \tag{23}$$

(24)

(25)

(26)

where $C_j$ and $C_i$ are the average contrast of the clear image and the haze image, respectively; $r_i$ is the average gradient ratio at $P_i$ between the recovered image and the haze image; $X$ is the set of visible edges of the recovered haze-free image; $R_t$ is the number of local regions; $R_V$ is the area of local regions where the standard deviation is larger than the specified threshold; $n_{total}$ is the number of all edges; $C(x)$ is the mean contrast, and $Y$ is the area size of the visible region.

Tables 1 and 2 give the average values of the objective evaluation indexes for Figs.9 and 10, respectively. The proposed algorithm attains good results in image contrast, normalized average gradient, visual contrast, universal quality index, and image visibility check-and-balance standard. In particular, the normalized average gradient and the visual contrast show significant advantages. The proposed algorithm also has a shorter running time. The objective evaluation indexes further demonstrate the effectiveness and real-time performance of the proposed algorithm.

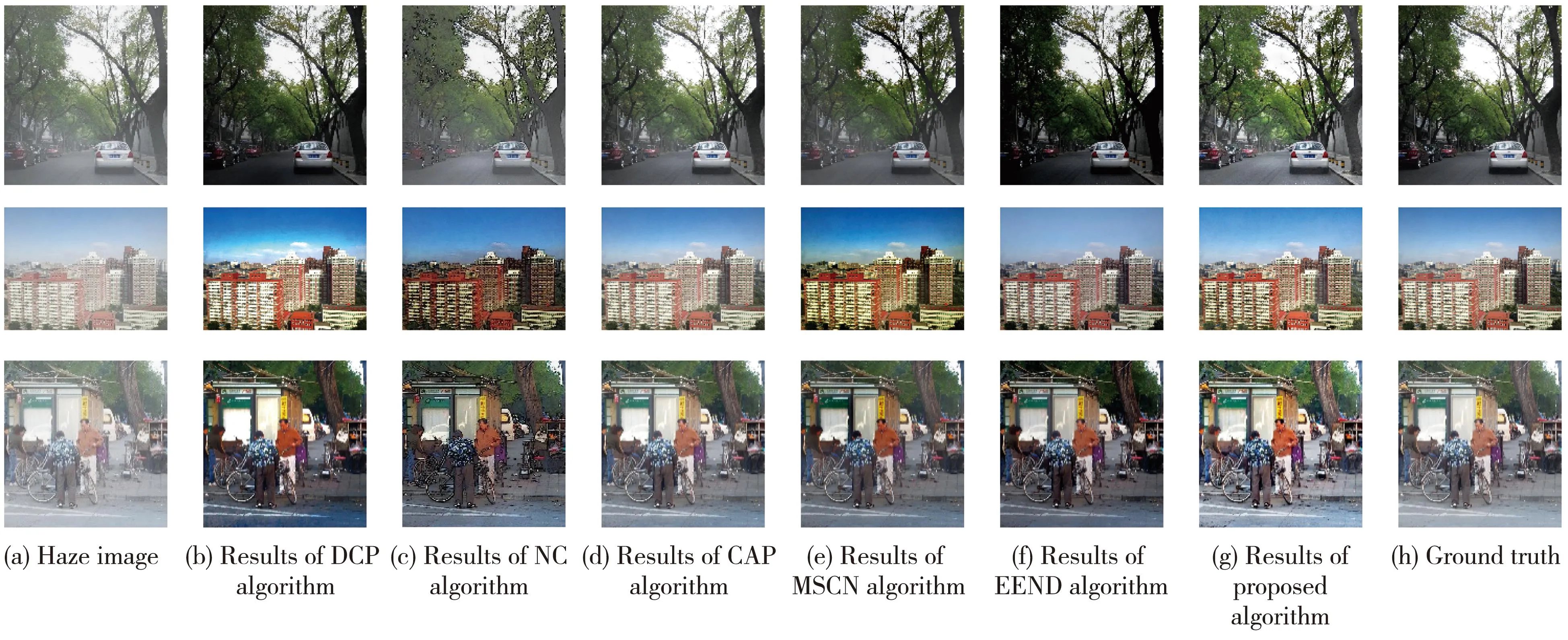

3.3 Test set validation

To illustrate the generalizability of the proposed algorithm, it is also validated on synthetic images that are widely used in the field of machine learning. Three images are randomly selected from the test set for comparative analysis, and the results are shown in Fig.11. The visual effects of the MSCN and EEND algorithms are better than those of the other algorithms because both are trained network methods applied to the test set images. Nevertheless, the proposed algorithm also achieves a good overall recovery effect for synthetic haze images.

Fig.11 Comparison of restoration results of test set haze images

The mean values of the objective evaluation indexes for Fig.11 are shown in Table 3. The peak signal-to-noise ratio (PSNR) and the structural similarity (SSIM) are selected as objective indexes. SSIM indicates the degree of similarity between the recovered haze-free image and the real image, and PSNR indicates the ratio of useful signal to noise. The larger the PSNR value, the smaller the distortion of the restored image. The greater the SSIM value, the closer the recovered image is to the standard haze-free image. Owing to the large advantage of the CAP algorithm in training samples, its objective evaluation indexes are superior to those of the other algorithms, but the proposed algorithm has obvious advantages over all the remaining algorithms. The proposed algorithm therefore performs better in terms of dehazing effect.
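For reference, PSNR can be computed from the mean squared error between the ground-truth image and the restored image. The sketch below uses the standard definition and assumes images normalized to [0, 1]; the function name `psnr` and the `peak` parameter are illustrative, and SSIM is not included because it requires a windowed computation.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    mse = np.mean((reference - restored) ** 2)
    if mse == 0:
        return float("inf")      # identical images: no distortion
    return float(10.0 * np.log10(peak ** 2 / mse))
```

For example, a uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB.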

Table 3 Comparison of objective evaluation indexes of test set haze images

4 Conclusions

To address the problem of inaccurate transmission estimation in image dehazing, an image dehazing method combining adaptive dual transmissions and scene depth variation is proposed. Firstly, an approximate depth-of-field model is established by using the relationship among image depth, saturation and brightness, and the depth map is constructed by linear stretching. Meanwhile, a mapping function is constructed from the dark channel prior to obtain the close scene transmission, and the far scene transmission is obtained by interval estimation. Finally, an adaptive fusion of the dual transmissions is realized by using the depth map, and the recovered image is obtained with the improved local atmospheric light algorithm. The experimental results show that the proposed algorithm obtains a more thorough dehazing result and can be widely applied to haze images of various scenes. In addition, its objective evaluation indexes on real images are better than those of the other algorithms, and its time complexity is low.
