Arabic Fake News Detection Using Deep Learning

2022-08-24 Khaled Fouad, Sahar Sabbeh and Walaa Medhat

Khaled M. Fouad, Sahar F. Sabbeh and Walaa Medhat

1 Faculty of Computers & Artificial Intelligence, Benha University, Egypt

2 University of Jeddah, College of Computer Science and Engineering, Jeddah, 21493, Saudi Arabia

3 Information Technology and Computer Science, Nile University, Egypt

Abstract: An unprecedented number of users now interact through social media platforms and generate a massive amount of content. Because user-generated content is unregulated, it may contain offensive content such as fake news, insults, and harassment. Identifying fake news and rumors and their dissemination on social media has become a critical requirement, since they have adverse effects on users, businesses, enterprises, and even political regimes and governments. State-of-the-art work has mostly tackled English-language news and used feature-based algorithms. This paper proposes a model architecture to detect fake news in the Arabic language using only textual features. Both machine learning and deep learning algorithms were used. The deep learning models are based on convolutional neural networks (CNN), long short-term memory (LSTM), bidirectional LSTM (BiLSTM), CNN+LSTM, and CNN+BiLSTM. Three datasets were used in the experiments, each containing the textual content of Arabic news articles; one of them is real-life data. The results indicate that the BiLSTM model outperforms the other models in accuracy when both simple data split and recursive training modes are used in the training process.

Keywords: Fake news detection; deep learning; machine learning; natural language processing

1 Introduction

The rise of social networks has considerably changed the way users around the world communicate. Social networks and user-generated content (UGC) platforms allow users to generate, share, and exchange their thoughts and opinions via posts, tweets, and comments. Thus, social media platforms (e.g., Twitter, Facebook) are considered powerful tools through which news and information can be rapidly transmitted and propagated. These platforms have become a primary source of information and news for individuals on the WWW [1]. However, social media and UGC platforms are a double-edged sword. On the one hand, they allow users to share their experiences, which enriches web content. On the other hand, the absence of content supervision may lead to the intentional or unintentional spread of false information [2], threatening the reliability of information and news on such platforms.

False information can be classified as intention-based or knowledge-based [3]. Intention-based false information can be further classified into misinformation and disinformation. Misinformation is the unintentional sharing of false information based on the user's beliefs, thoughts, and point of view, whereas the intentional spread of false information to deceive, mislead, and harm users is called disinformation. Fake news is considered disinformation, as it consists of news articles that are confirmed to be false or deceptive and are published intentionally to mislead people. Another categorization of false information [4] is based on the severity of its impact on users. According to this study, false information is classified as a) fake news, b) biased/inaccurate news, and c) misleading/ambiguous news. Fake news has the highest impact and uses tools such as content fabrication, propaganda, and conspiracy theories [5]. Biased content is considered less dangerous and mainly uses hoaxes and fallacies. The last group is misleading news, which has a minor impact on users. Misleading content usually comes in the form of rumors, clickbait, and satire/sarcasm news. Disinformation results in biased, deceptive, and decontextualized information, on the basis of which people make emotional decisions, react impulsively, or stop actions in progress. It negatively impacts users' experience and decisions, for example in online shopping and stock markets [6].

The bulk of research on fake news detection is based on machine learning techniques [7]. Those techniques are feature-based, as they require identifying and selecting features that can help assess the fakeness of a piece of information or text. Those features are then fed into the chosen machine learning model for classification. In various languages, deep learning models [8] have recently proven efficient in text classification tasks and fake news detection [9]. They have the advantage that they can automatically adjust their internal parameters until they identify, on their own, the best features to differentiate between labels. However, as far as we know from the literature, no research uses deep learning models [10] for fake news detection in the Arabic language.

Fake news can have harmful consequences for social and political life. Detecting fake news is very challenging, particularly in languages other than English. Arabic is one of the most widely spoken languages in the world. There are many sources of news in Arabic, including official news websites. These sources are considered the primary source of Arabic datasets. Our goal is to detect rumors and measure the effect of fake news detection in the Middle East region. We have evaluated many algorithms to achieve the best results.

The main objective of this work is to explore and evaluate the performance of different deep learning models in improving fake news detection for the Arabic language. Additionally, we compare the performance of deep learning with traditional machine learning techniques. Eight machine learning algorithms, including probabilistic and vector space algorithms, are evaluated with cross-fold validation. We have also tested five deep learning models built from CNN and LSTM components.

The paper is organized as follows. Section 2 reviews the literature in some detail. The proposed model architecture is presented in Section 3. Section 4 presents the experiments and the results with discussion. The paper is concluded in Section 5.

2 Literature Review

There are many methods used for fake news detection and rumor detection. The methods include machine learning and deep learning algorithms, as illustrated in the following subsections.

2.1 Fake News Detection Methods

Fake news detection has been investigated from different perspectives, each utilizing different features for information classification. These features include linguistic, visual, user, post, and network-based features [5,11]. Linguistic-based methods try to find irregular styles within text based on a set of features such as the number of words, word length, word frequencies, unique word count, psycho-linguistic features, and syntactic features (e.g., TF-IDF, question marks, exclamation marks, hashtags, etc.) to discriminate between genuine and fake news [11]. Visual-based systems attempt to identify and extract visual elements from fabricated photos and videos [12] using deep learning approaches. User-based methods analyze user-level features to identify likely fake accounts. It is believed that fake news is probably created and shared by fake accounts or automated bots created for this purpose. User-based features are used to evaluate source/author credibility. Those features include, among others, the number of tweets, tweet repetition, number of followers, account age, account verifiability, user photo, demographics, user sentiment, topical relevancy, and physical proximity [13,14]. Post-based methods analyze users' feedback, opinions, and reactions as indicators of fake news. These features include comments, opinions, sentiment, user rating, tagging, likes, and emotional reactions [14]. Because social networks enable the rapid spread of fake news, network-based methods construct and analyze networks from different perspectives. Friendship networks, for instance, explore the user-follower relationship. In comparison, stance networks represent post-to-post similarities. Another type is the co-occurrence network, which evaluates user-topic relevancy [14].

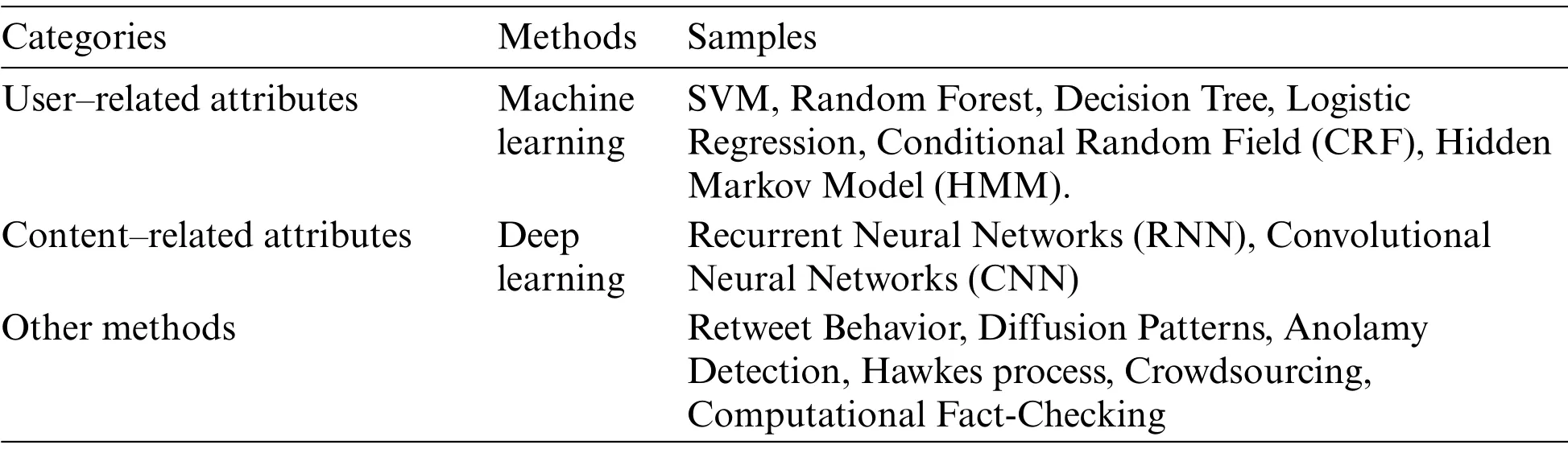

Many methods treat rumor or fake news detection as a classification problem. These methods aim to associate a label, such as rumor or non-rumor, true or false, or fake or genuine, with a specific piece of text. Researchers have utilized machine learning methods and achieved promising results. Other researchers have utilized methods based on data mining techniques. They depend on external resources, such as knowledge bases, either to predict the class of social media content or to examine its truthfulness. Many rumor detection methods have concentrated on content features for classification. Few have depended on social context. Rumor detection and verification methods otherwise predominantly utilize a combination of content and context features. This combination is used because the social context of rumors may significantly enhance detection performance [14]. Some of the method categories that may be considered in rumor detection analysis are shown in Tab. 1. These methods can be categorized into classification methods and other methods.

Table 1: Different attributes that participate in the start and growth of rumors on social media

2.2 Machine Learning-Based Fake News Detection

Most fake news detection work formulates the problem as a binary classification problem. The literature falls under three main classes [15]: feature-based machine learning approaches, networking approaches, and deep learning approaches. Feature-based machine learning approaches aim at learning features from data using feature-engineering techniques before classification. Textual, visual, and user features are extracted and fed into a classifier, which is then evaluated to identify the best performance given those sets of features. The most widely used supervised learning techniques include logistic regression (LR) [16], ensemble learning techniques such as random forest (RF) and adaptive boosting (AdaBoost) [16–18], decision trees [18], artificial neural networks [18], support vector machines (SVM) [16,18], naïve Bayes (NB) [16,18,19], k-nearest neighbor (KNN) [16–18], and linear discriminant analysis (LDA) [16,20,21]. However, feature-based machine learning models require feature engineering ahead of classification, which is cumbersome. Networking approaches evaluate user/author credibility by extracting features such as the number of followers, comment/reply content, and timestamps, and by using graph-based algorithms to analyze the structure of social networks [22,23].

2.3 Deep Learning for Fake News Detection

Deep learning (DL) [9,15] approaches learn features from data automatically during training, without feature engineering. Deep learning models have shown a substantial performance improvement and eliminated the need for a separate feature extraction process. As stated earlier, they remove the burden of feature engineering and use the training data to identify discriminatory features for fake news detection. Deep learning models have shown remarkable performance in general text classification tasks [24–27] and have been widely used for fake news detection [28]. Due to their efficiency in text classification, deep learning models have been applied to fake news detection from the NLP perspective using only text. For instance, in [29], deep neural networks were used to predict real/fake news using only the textual content of the news. Different machine learning and deep learning algorithms were applied in that work, and the results showed the superiority of gated recurrent units (GRUs) over the other tested methods.

In [30], text content was preprocessed and input to recurrent neural networks (vanilla RNN, GRU, and long short-term memory (LSTM)) for classifying fake news. Their results showed that GRU performed best, followed by LSTM and then the vanilla RNN. Text-only classification was performed in [31] using tanh-RNNs, LSTM with a single hidden layer, GRU with one hidden layer, and GRU enhanced with an extra hidden layer. In [32], bidirectional LSTMs together with convolutional neural networks (CNN) were used for classification. The bidirectional LSTMs considered contextual information in both directions of the text, forward and backward. In [33], the feasibility of applying a deep learning architecture of CNN with LSTM cells to text-only classification was examined. RNNs, LSTMs, and GRUs were used in [34] for text-based classification. A deep CNN with multiple hidden layers was used in [35].

Other attempts used text together with one or more other features. In [36], Arjun et al. utilized an ensemble of CNN and BiLSTM for multi-label classification of fake news based on textual content and features related to the behavior of the source (speaker). In their model, a news item is assigned to one of six classes: Pants-fire, False, Barely-true, Half-true, Mostly-true, and True. In [37], a neural ensemble architecture (RNN, GRN, and CNN) used content-based and author-based features to detect rumors on Twitter. Textual and propagation-based features were used in [38,39] for classification. The former used RNN, CNN, and recursive neural networks (RvNN): the CNN and RNN used only the text and the sentiment polarity of the tweet's responses, and the RvNN model was applied to the text and the propagation. The latter study constructed RvRNN models based on top-down and bottom-up tree structures. These models were compared with traditional state-of-the-art models such as decision trees (tree-based ranking DTR, decision-tree classifier DTC), RFC, different variations of SVM, and GRU-RNN. Their results showed that TD-RvRNN gave the best performance.

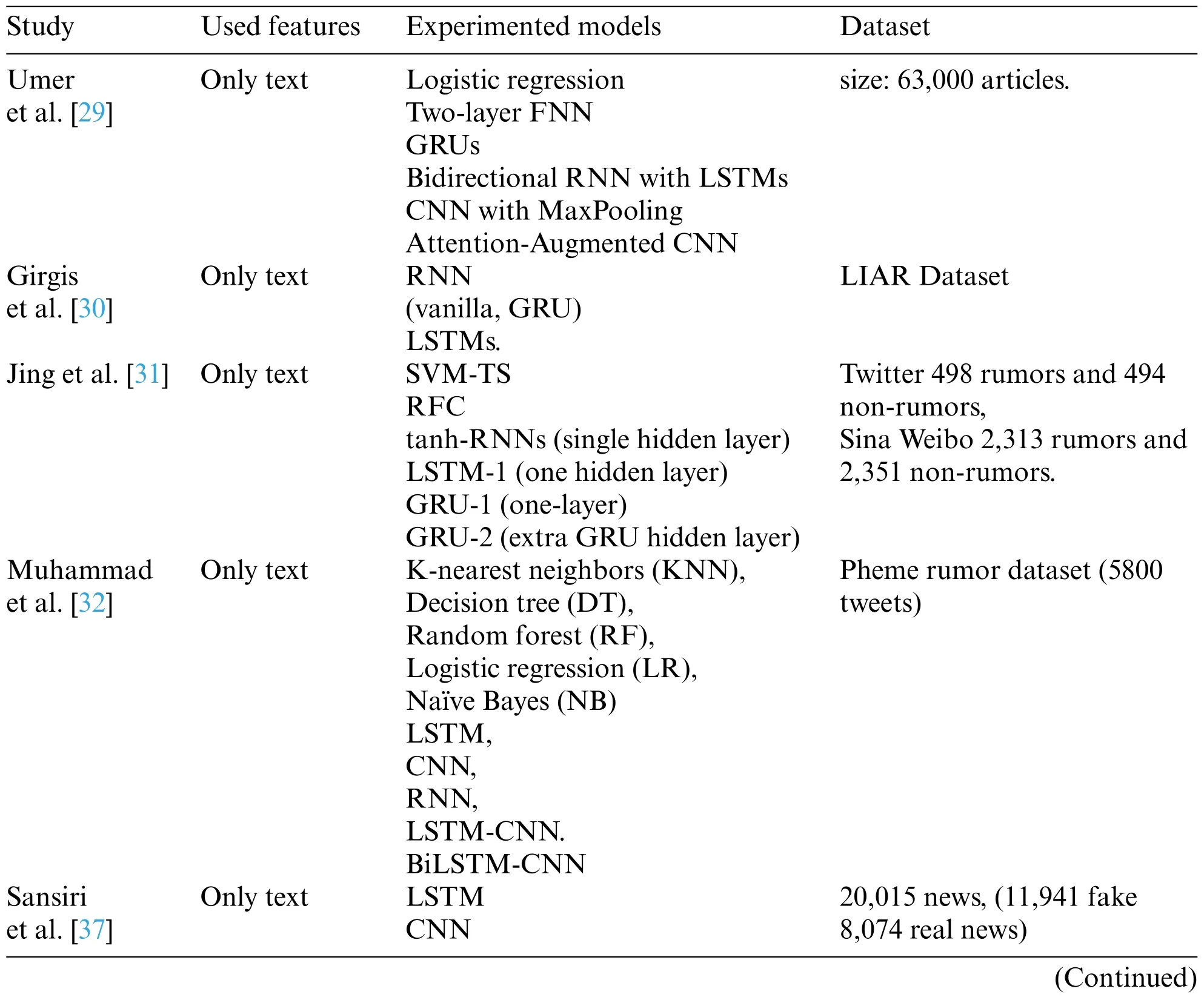

Post-based features, together with user-based features, were used for fake news prediction in [40]. The authors applied RNN, LSTMs, and GRUs and found that LSTMs outperformed the other two models. In [41], text, user-based, content-based, and signal features were used for prediction with a hierarchical recurrent convolutional neural network. Their experiments included decision trees (tree-based ranking DTR, decision-tree classifier DTC), SVM, GRUs, and BLSTMs. Tab. 2 summarizes the surveyed works in the literature.

Table 2: Summary of the related works


2.4 Arabic Rumor Detection

The Arabic language has a complex structure that poses challenges, in addition to the lack of datasets. Thus, research on rumor detection in Arabic social media is scarce and requires more attention and effort to achieve optimal results. The studies that focus on Arabic rumor detection are summarized in Tab. 3.

Table 3: Research concerning Arabic rumor detection

3 Proposed Architecture

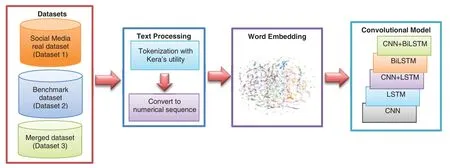

The proposed methodology investigates the best-known state-of-the-art deep learning algorithms for Arabic text classification; as deep learning techniques, they have the advantage of capturing semantic features from textual data automatically [47]. Five deep neural network models are used for classification, namely CNN, LSTM, BiLSTM, CNN+LSTM, and CNN+BiLSTM. The proposed methodology is shown in Fig. 1.

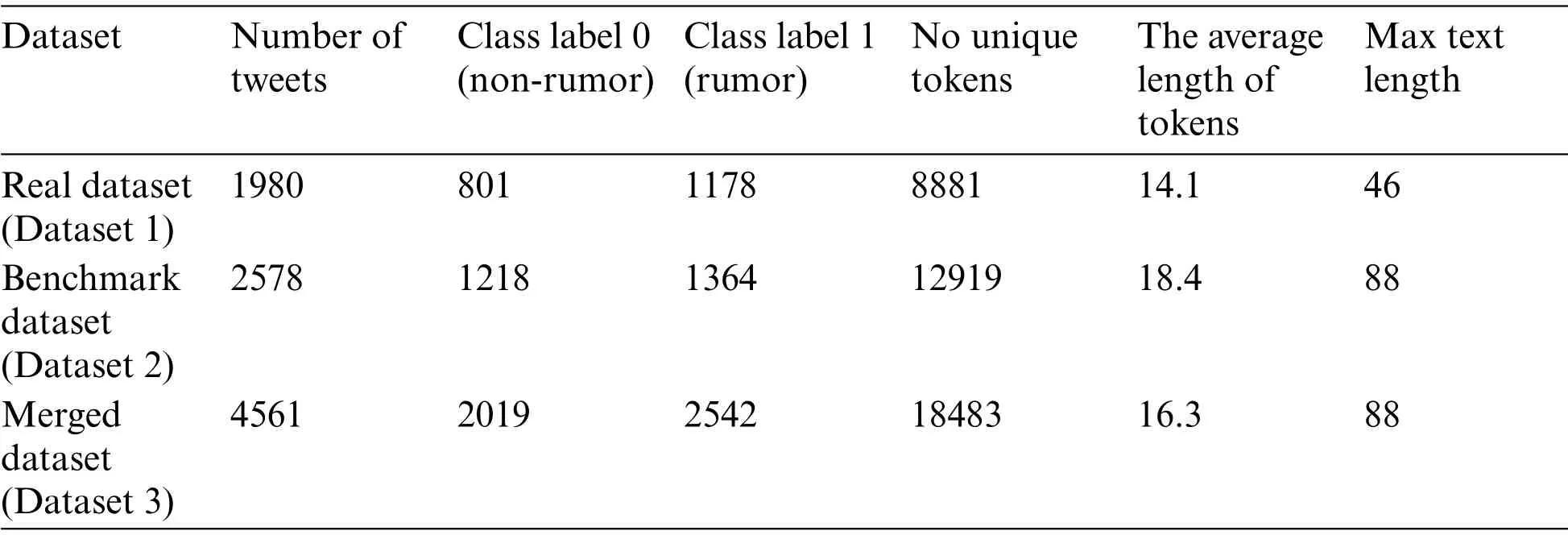

3.1 Datasets

The first dataset consists of news and tweets that were manually collected and annotated as rumor/non-rumor. This real-life dataset was collected from Arabic news portals such as Youm7, Akhbarelyom, and Ahram. The fake news items in it were announced publicly to make people aware that the news is not true. This effort is the responsibility of the Information and Decision Support Center of the Egyptian Cabinet.

Figure 1: System architecture

The second dataset is a benchmark dataset published in [48]. The two datasets are then merged into one combined dataset to test deep learning performance on a larger dataset. The details of each dataset are shown in Tab. 4.

Table 4: Description of the datasets

Tab. 5 shows samples of the real dataset collected from Arabic news websites.

3.2 Preprocessing

Preprocessing the text before it is fed into the classifier is very important and affects the overall performance of the classification model. In this step, the text is cleaned using filters that remove punctuation and all non-Unicode characters. Afterward, stop words are removed, sentences are tokenized, and tokens are stemmed. The resulting sentences are then encoded as numerical sequences, and the number of unique tokens and the maximum sentence length are calculated. All sentences are padded to this maximum length so that they have the same size. Labels are then encoded using one-hot encoding.
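The steps above can be sketched in plain Python. This is a minimal illustration, not the authors' pipeline: the stop-word list is a hypothetical placeholder, stemming is omitted, and the function names (`tokenize`, `build_vocab`, `encode_and_pad`, `one_hot`) are assumptions introduced here.

```python
import re

# Hypothetical, tiny stop-word list used only for illustration.
STOP_WORDS = {"في", "من", "على", "the", "a", "of"}

def clean(text):
    # Replace every character that is neither a word character nor whitespace
    # with a space. In Python 3, \w is Unicode-aware, so Arabic letters are kept.
    return re.sub(r"[^\w\s]", " ", text)

def tokenize(text):
    # Whitespace tokenization followed by stop-word removal (no stemming here).
    return [tok for tok in clean(text).split() if tok not in STOP_WORDS]

def build_vocab(token_lists):
    # Map each unique token to an integer index; 0 is reserved for padding.
    vocab = {}
    for tokens in token_lists:
        for tok in tokens:
            if tok not in vocab:
                vocab[tok] = len(vocab) + 1
    return vocab

def encode_and_pad(token_lists, vocab):
    # Encode tokens as indices and right-pad with 0 up to the longest sentence.
    max_len = max(len(tokens) for tokens in token_lists)
    sequences = []
    for tokens in token_lists:
        ids = [vocab[tok] for tok in tokens]
        sequences.append(ids + [0] * (max_len - len(ids)))
    return sequences, max_len

def one_hot(labels, num_classes):
    # Turn integer class labels into one-hot vectors.
    return [[1 if i == y else 0 for i in range(num_classes)] for y in labels]
```

In this sketch the vocabulary size (`len(vocab)`) and `max_len` correspond to the number of unique tokens and the maximum sentence length mentioned in the text.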

Table 5: Samples of the real dataset

3.3 Word Embedding

Recently, word embeddings have been shown to outperform traditional text representation techniques. They represent each word as a real-valued vector in a vector space while preserving the semantic relationships between words, so that the vectors of words with similar meanings are placed close to each other. Word embeddings can be learned from the text while fitting a deep neural model on text data. For our work, the TensorFlow Keras embedding layer was used. It takes the numerically encoded text as input. It is implemented as the first hidden layer of the deep neural network, and the word embeddings are learned while training the network. The embedding layer stores a lookup table that maps the words, represented by numeric indexes, to their dense vector representations.
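The lookup-table behavior of an embedding layer can be illustrated without any deep learning library. The sketch below is an assumption-laden toy: the embedding dimension, the initialization range, and the names `make_embedding_table` and `embed` are chosen here for illustration, and the training step that would update the rows is not shown.

```python
import random

def make_embedding_table(vocab_size, dim, seed=0):
    # One row per token index, plus row 0 for the padding index.
    # Rows start as small random values; in a real network they would be
    # updated by backpropagation during training.
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)]
            for _ in range(vocab_size + 1)]

def embed(sequence, table):
    # Map each integer token index to its dense vector by row lookup.
    return [table[idx] for idx in sequence]
```

A padded sequence of length T therefore becomes a T x dim matrix, which is the input consumed by the subsequent layers.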

3.4 Proposed Models

Our system explores the use of three deep neural networks, namely CNN, LSTM, and BiLSTM, and two combinations, CNN+LSTM and CNN+BiLSTM, as illustrated in Fig. 2.

The CNN model consists of one convolutional layer, which learns to extract features from sequences represented using a word embedding and to derive meaningful and useful sub-structures for the overall prediction task. It is implemented with 64 filters (parallel fields for processing words) and a rectified linear ('relu') activation function. The second layer is a pooling layer that reduces the output of the convolutional layer by half. The 2D output from the CNN part of the model is flattened into one long 1D vector that represents the 'features' extracted by the CNN. Finally, two dense layers are used to scale, rotate, and transform this vector through matrix-vector multiplication.
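To make the data flow through the convolution, pooling, and flattening stages concrete, here is a small pure-Python sketch of a forward pass. It is illustrative only: the kernel size and the use of 'valid' padding are assumptions not stated in the text, and only two filters are used in the example instead of 64.

```python
def conv1d_relu(seq, kernels, biases):
    # seq: list of T input vectors, each of length D (e.g. word embeddings).
    # kernels: list of F filters, each a list of K weight vectors of length D.
    # Returns a (T - K + 1) x F feature map ('valid' padding) after ReLU.
    k = len(kernels[0])
    out = []
    for t in range(len(seq) - k + 1):
        row = []
        for kern, b in zip(kernels, biases):
            s = b
            for i in range(k):
                s += sum(w * x for w, x in zip(kern[i], seq[t + i]))
            row.append(max(0.0, s))  # ReLU activation
        out.append(row)
    return out

def max_pool1d(feature_map, pool=2):
    # Keep the maximum of each filter over non-overlapping windows of `pool`
    # time steps, which halves the sequence length when pool=2.
    pooled = []
    for start in range(0, len(feature_map) - pool + 1, pool):
        window = feature_map[start:start + pool]
        pooled.append([max(col) for col in zip(*window)])
    return pooled

def flatten(feature_map):
    # Concatenate all rows into a single 1D feature vector.
    return [v for row in feature_map for v in row]
```

The flattened vector is what the dense layers would receive as input.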

Figure 2: Deep learning model

In the LSTM model, the output of the word embedding layer is fed into one LSTM layer with 128 memory units. The output of the LSTM is fed into a dense layer of size 64, which adds capacity on top of the LSTM's output. The output activation function is chosen to suit binary classification. Because this is a binary classification problem, binary cross-entropy is used as the loss function.
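For reference, binary cross-entropy averages, over the samples, the negative log-probability that the model assigns to the true class. The function below is a minimal sketch of that definition; the clipping constant `eps` is an assumption added here to avoid taking the logarithm of zero.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    # y_true: list of 0/1 labels; y_pred: predicted probabilities of class 1.
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)
```

Confident correct predictions give a loss near zero, while an uninformative prediction of 0.5 for every sample gives a loss of ln 2.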

The third model combines CNN with LSTM: two convolutional layers are added with max-pooling and dropout layers. The convolutional layers act as feature extractors for the LSTM on the input data. The CNN layer takes the output of the word embedding layer as input. Afterward, the pooling layer reduces the features extracted by the CNN layer. A dropout layer is added to help prevent the neural network from overfitting. One LSTM layer with a hidden (state output) size of 128 is then added. Note that, because return_sequences is False by default, only one output is obtained, i.e., the last state of the LSTM. The output of the LSTM is connected to a dense layer of size 64 to produce the final class label by calculating class probabilities from the LSTM output. The softmax activation function is used to generate the final classification.

The BiLSTM model uses recurrent cells that read the sequence in both directions. The output of the word embedding layer is fed into a bidirectional LSTM. Afterward, dense layers are used to find the most suitable class based on probability.

The CNN+BiLSTM model architecture uses a combination of convolutional and recurrent neurons for learning. The output of the embedding layer is fed into two convolutional layers, which learn features for the BiLSTM layer. The features extracted by the CNN layers are max-pooled and concatenated. The fully connected dense layer predicts the probability of each class label.

The deep learning models were trained with the Adam optimizer, using a learning rate of 0.01, a weight decay of 0.0005, and a batch size of 128. A dropout value of 0.5 is used to avoid overfitting and speed up learning. The output layer uses a softmax activation function.

The experiments used Python with the TensorFlow and Keras libraries for the machine learning and deep learning models. A Windows 10 machine with a Core i7 processor and 16 GB of RAM was used.

4 Results and Discussion

Two experiments have been performed on three different datasets. The first experiment utilizes the proposed deep learning algorithms. The second experiment utilizes machine learning algorithms with n-gram feature extraction and compares their results with the deep learning algorithms.
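As an illustration of n-gram feature extraction, the sketch below counts word n-grams over a tokenized text. It rests on assumptions: the text does not say whether word or character n-grams were used, which n ranges were tested, or how the counts were weighted, and the function names are introduced here.

```python
from collections import Counter

def word_ngrams(tokens, n_min=1, n_max=2):
    # Collect all contiguous word n-grams for n in [n_min, n_max].
    grams = []
    for n in range(n_min, n_max + 1):
        for i in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[i:i + n]))
    return grams

def ngram_counts(tokens, n_min=1, n_max=2):
    # Bag-of-n-grams representation: each n-gram mapped to its frequency.
    return Counter(word_ngrams(tokens, n_min, n_max))
```

The resulting counts (or a weighted variant of them) form the feature vector passed to a classical classifier.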

The experiments included two phases. First, the best-known machine learning algorithms were applied for classification with different n-grams. Machine learning techniques were evaluated using accuracy, F1-measure, and AUC (area under the curve). The second phase applied deep learning models for classification. Deep learning algorithms were first trained using a simple data split with 80% of the data for training and 20% for testing. Then the same algorithms were trained using 5-fold cross-validation [49].
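The evaluation protocol can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions: folds are contiguous (indices would normally be shuffled first), AUC is computed through the pairwise-ranking definition, and all function names are introduced here rather than taken from the paper.

```python
def k_fold_indices(n_samples, k=5):
    # Split indices 0..n_samples-1 into k contiguous folds; each fold serves
    # once as the test set while the remaining folds form the training set.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        folds.append((train, test))
        start += size
    return folds

def accuracy(y_true, y_pred):
    # Fraction of predictions that match the true labels.
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_score(y_true, y_pred, positive=1):
    # Harmonic mean of precision and recall for the positive class.
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def auc(y_true, scores, positive=1):
    # Probability that a random positive sample is scored above a random
    # negative one (ties count as one half).
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

With `k=5`, each fold holds out 20% of the samples, which matches the test proportion of the simple 80/20 split.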

4.1 Machine Learning Experiments

The experiments are conducted using eight machine learning algorithms: LinearSVC, SVC, MultinomialNB, BernoulliNB, stochastic gradient descent (SGD), decision tree, random forest, and k-neighbors. Each algorithm is evaluated using accuracy, F-score, and area under the curve (AUC). The results of the first dataset experiment are shown in Tab. 6. The table shows that the SGD classifier gives the best results. It also shows that SVC, decision tree, and random forest give lower performance than the other algorithms.

Table 6: Results of the first dataset experiment

The results of the second dataset experiment are shown in Tab. 7. Each algorithm is evaluated using accuracy, F-score, and area under the curve (AUC). Tab. 7 shows that the LinearSVC classifier gives the best results. The table also shows that SVC, decision tree, and random forest give lower performance than the other algorithms.

Table 7: Results of the second dataset experiment

The results of the third dataset experiment are shown in Tab. 8. Each algorithm is evaluated using accuracy, F-score, and area under the curve (AUC). The table shows that the LinearSVC classifier gives the best F-score and AUC, while MultinomialNB gives the best accuracy. The table also shows that SVC, decision tree, and random forest give lower performance than the other algorithms.

Table 8: Results of the third dataset experiment

It can be concluded that SVC, decision tree, and random forest are not suitable for this problem. The graphs in Figs. 3a–3h show the performance of each ML algorithm applied to each dataset. Fig. 3a shows the performance of the BernoulliNB method with the first dataset. Fig. 3b shows that MultinomialNB gives its lowest performance for the third dataset. Fig. 3c shows that k-neighbors gives its best performance for the first dataset. Fig. 3d shows that random forest gives its best performance for the first dataset. Fig. 3e shows that the decision tree performs best for the first dataset. Fig. 3f shows that the SGD classifier gives almost equivalent performance for all datasets. Fig. 3g shows that SVC gives its best performance for the first dataset. Fig. 3h shows that LinearSVC gives almost equivalent performance for all datasets. The first dataset is the manually collected and annotated data, which is the real-life data. Therefore, the machine learning algorithms give excellent performance on real-life data.

Figure 3: Performance of each ML algorithm with each dataset

4.2 Deep Learning Algorithms

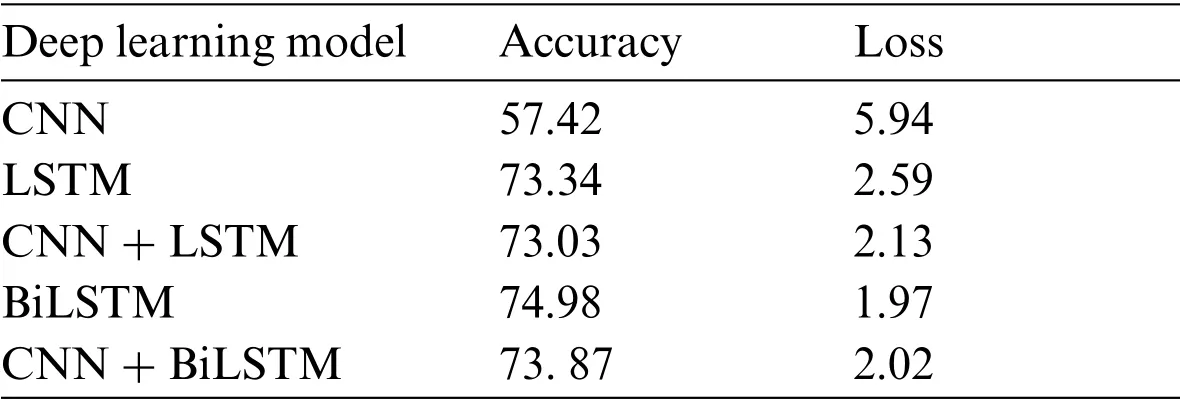

Five deep learning models are evaluated: CNN, LSTM, CNN+LSTM, BiLSTM, and CNN+BiLSTM. Accuracy, loss, and AUC are used as the evaluation metrics.

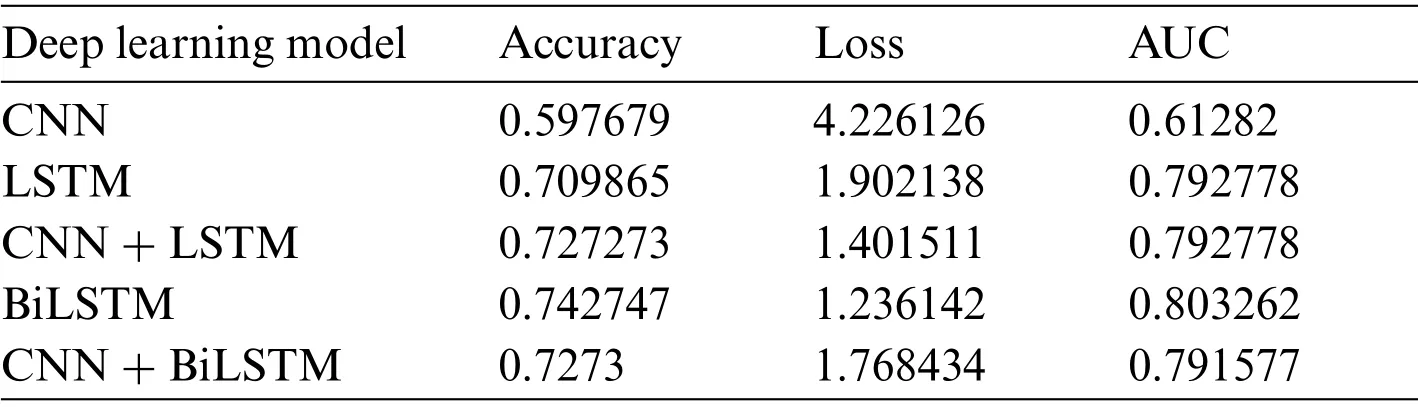

Tab. 9 shows the performance of each algorithm applied to the first dataset. BiLSTM gives the lowest loss, the best accuracy, and the best AUC. Thus, BiLSTM gives good performance with reasonable loss compared to the other algorithms, which are close to each other in performance.

Table 9: Performance of each algorithm applied to the first dataset

Tab. 10 shows the performance of each algorithm on the second dataset. Results on the second dataset also show that BiLSTM gives the lowest loss, the best accuracy, and the best AUC. Additionally, CNN performs poorly, with a significant loss and the lowest accuracy. The other algorithms give almost similar performance.

Table 10: Performance of each algorithm applied to the second dataset

Tab. 11 shows the performance of each algorithm on the third dataset. CNN gives the lowest loss, while BiLSTM gives the best accuracy and the best AUC. Tab. 11 also shows that LSTM and CNN+BiLSTM have a significant loss, while their accuracy and AUC are almost similar to those of the other algorithms.

Table 11: Performance of each algorithm applied to the third dataset

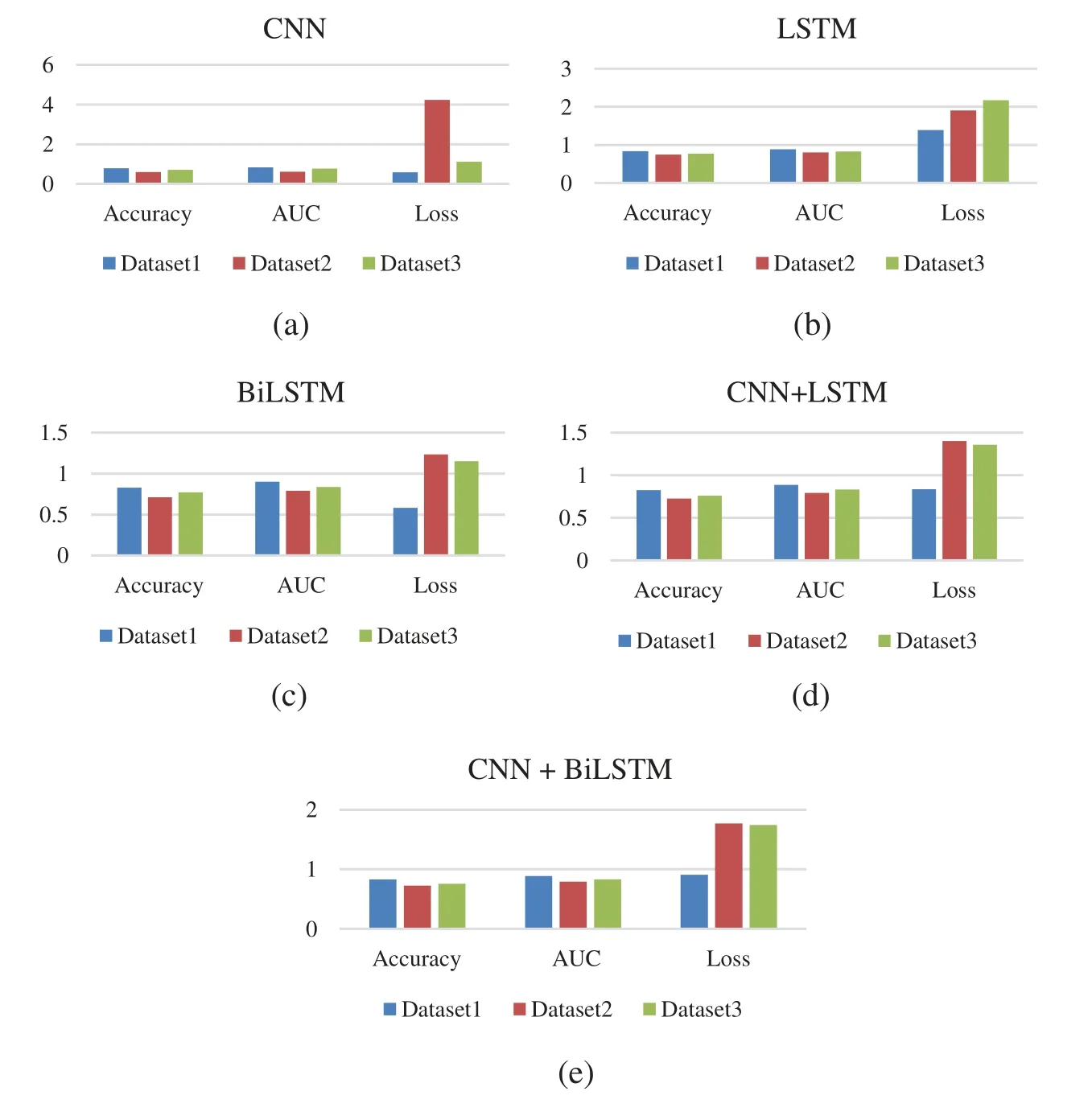

The graphs in Figs. 4a–4h show the performance of each deep learning algorithm with each dataset. The BiLSTM method is more suitable for the first and second datasets because it shows a significant loss on the third dataset, as shown in Fig. 4a. Fig. 4b shows that CNN is not suitable for this problem, as it gives low performance for all datasets and a considerable loss on the third dataset. Fig. 4c shows that CNN+BiLSTM is more suitable for the first and second datasets, as it shows a significant loss on the third dataset. Fig. 4d shows that CNN+LSTM is more suitable for the first and second datasets, as it shows a significant loss on the third dataset. Fig. 4e shows that LSTM provides acceptable performance for the first and second datasets but encounters a significant loss on the third dataset. Therefore, deep learning algorithms give better performance when used with real-life data.

Figure 4: Performance of each deep learning algorithm with each dataset

4.3 Cross-Validation

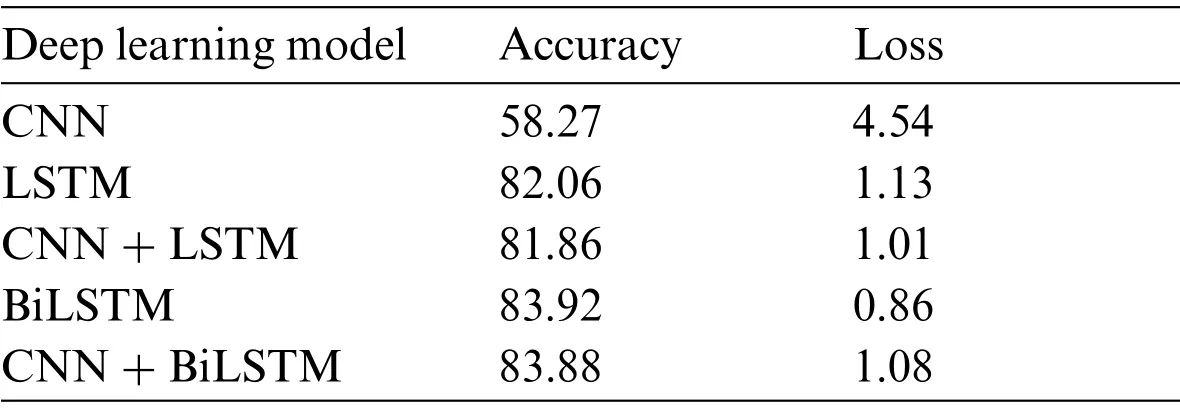

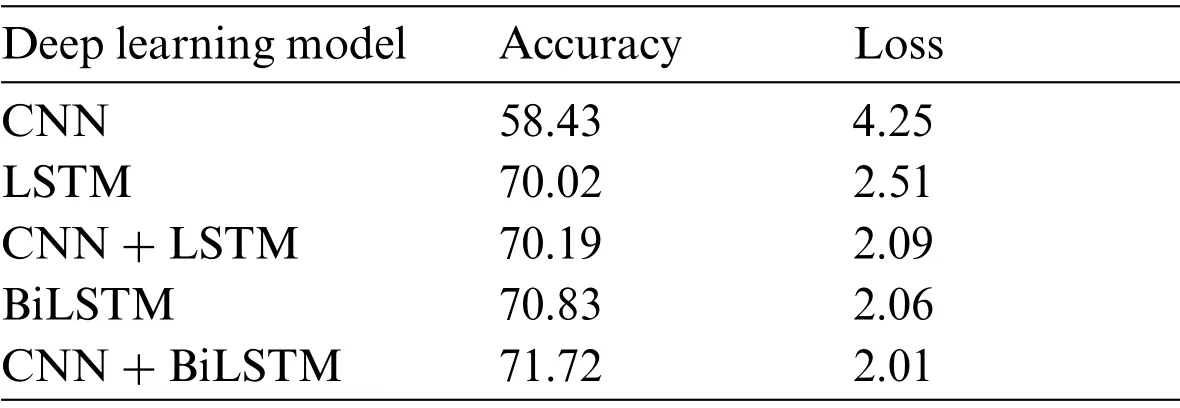

To verify the experiments done with the deep learning algorithms, five-fold cross-validation was performed on the three datasets. The results for each dataset are shown in Tabs. 12–14. Results show that BiLSTM and CNN+BiLSTM give the highest accuracy and the lowest loss for all three datasets. On the other hand, CNN achieved the worst performance among all the models tested.

Table 12: Five-fold cross-validation on the first dataset

Table 13: Five-fold cross-validation on the second dataset

Table 14: Five-fold cross-validation on the third dataset

5 Conclusions and Future Works

This paper investigates machine learning and deep learning models for content-based Arabic fake news classification. A series of experiments was conducted to evaluate the task-specific deep learning models. Three datasets were used in the experiments to assess the best-known models in the literature. Our findings indicate that machine learning and deep learning approaches can identify fake news using text-based linguistic features. Among the machine learning algorithms, no single model performed optimally across all datasets. On the other hand, our results show that the BiLSTM model achieves the highest accuracy among all models assessed across all datasets.

As part of our future work, we intend to thoroughly examine existing architectures that combine various layers. Furthermore, we plan to examine the effect of various pre-trained word embeddings on the performance of deep learning models.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.
