E-mail Spam Classification Using Grasshopper Optimization Algorithm and Neural Networks

Computers, Materials & Continua, 2022-08-23

Sanaa A. A. Ghaleb, Mumtazimah Mohamad, Syed Abdullah Fadzli and Waheed A. H. M. Ghanem

1 Faculty of Informatics and Computing, Universiti Sultan Zainal Abidin, Kuala Terengganu, 22200, Malaysia

2 Faculty of Ocean Engineering Technology and Informatics, Universiti Malaysia Terengganu, Kuala Terengganu, 21030, Malaysia

3 Faculty of Engineering, University of Aden, Aden, Yemen

4 Faculty of Education (Aden-Saber), University of Aden, Aden, Yemen

Abstract: Spam has become a serious problem: the volume of spam email keeps growing, and recipients regularly receive piles of unwanted messages. Spam not only wastes users' time and bandwidth but also consumes mailbox storage and disk space. Spam detection is therefore a challenge for individuals and organizations alike. To advance spam email detection, this work proposes a new approach that uses the grasshopper optimization algorithm (GOA) to train a multilayer perceptron (MLP) classifier for categorizing emails as ham or spam. Combining the MLP with GOA yields an artificial neural network (ANN) model referred to as GOAMLP. The approach is evaluated on two corpora, Spam Base and UK-2011 Web spam. The findings provide evidence that the proposed approach achieves better spam detection performance than the state of the art.

Keywords: Grasshopper optimization algorithm; multilayer perceptron; artificial neural network; spam detection approach

1 Introduction

Despite the popularity of social networking services for spreading messages over the Internet, email remains at the forefront of social, academic, and business communication [1]. Email is a major means of disseminating information around the world at almost no cost via smartphones and computers, which has made it increasingly popular [2]. It is a quick means of communication for companies, government departments, and universities, and it is also used to store documents and circulate them among employees, facilitating communication and the completion of work. Notwithstanding these substantial benefits, email is accompanied by a huge number of unsolicited and sometimes deceptive messages, known as spam email (SE). SE is among the most irritating phenomena on the Internet and challenges individuals as well as global firms such as Yahoo, Google, and Microsoft [3]. Two basic methods can be used for spam detection: machine learning (ML) and knowledge filtering (KF) [4]. In a KF method, different elements of the messages are analyzed using rule-based filtering in order to create patterns and populate the detection database. When a pattern matches one of the detection rules, the message is labelled as spam. In comparison, an ML method is more effective than KF and does not require hand-written rules [5]; instead, specific algorithms are used to learn the classification rules from email messages. Owing to the efficacy of ML methods, many algorithms have been used to detect spam [6].

Systematic literature reviews have shown that ML methods achieve effective categorization in mail filtering. These methods include ANNs [7], support vector machines (SVM) [8], and naive Bayes (NB) [9]. Some researchers have combined or hybridized ML algorithms to obtain an accurate detection and pattern recognition method [10]. However, traditional training methods that depend on gradient algorithms have drawbacks compared with swarm intelligence methods that can be applied to ANNs specifically [11–13]. Gradient descent is a local search algorithm that uses the existing solution to generate a new solution; it lacks good exploration and tends to become trapped in local minima of the search space [14–16]. In contrast, metaheuristic algorithms are well suited to this task because they balance intensification and diversification and can address the simultaneous adaptation of ANN components. Among the techniques that are becoming popular in neural network (NN) training are the nature-inspired metaheuristic algorithms (NIMAs). Popular algorithms in this category include the genetic algorithm (GA) [17], the grasshopper optimization algorithm (GOA) [18], ant colony optimization (ACO) [19], particle swarm optimization (PSO) [20], and the bat algorithm [21]. This research introduces a modified ML technique in which the MLP is trained by GOA. The major benefits of GOA are its small number of control parameters, its adaptive intensification and diversification search patterns, and its gradient-free mechanism. Our approach is referred to as GOAMLP, where GOA is adopted for MLP training. The application of these algorithms to NN training for spam classification is extensively evaluated, and their performance is compared with that of current and traditional metaheuristic algorithms.

Several stochastic global optimization (SGO) approaches demonstrate higher accuracy and computational efficiency than trajectory-driven approaches such as backpropagation (BP). When SGO techniques are applied to train NNs, problems related to BP are resolved [22]. SGO methods are normally inspired by physical and biological phenomena, as in PSO, ACO, and GA. For example, some studies used PSO to determine the structure of the MLP model for addressing real-world challenges [23]. The authors of [24] applied GA to tune the variables of an ANN.

Some studies used several techniques concurrently. For example, [25] uses GA to adjust the weights of the studied model, which in turn improves the model's performance. However, the authors used a special dataset to evaluate the model. Reference [26] designed a detection model by training a BP neural network (BPNN) via GA, which optimizes the weights of the BPNN and enhances accuracy. However, GA cannot guarantee an optimal solution. Additionally, Reference [27] introduced the negative selection algorithm (NSA) to tune the variables of the BPNN, classifying emails as self and non-self. The dataset used in that work was Spam Base, with MLP and SVM classifiers. However, the model did not offer better performance. Reference [28] introduced the memetic algorithm (MA), an extension of the conventional GA, to optimize the weights of an NN for spam detection (SD). The dataset used was Spam Base, with a feedforward neural network (FFNN) classifier. However, the MA handles local optima poorly. Reference [29] presented the krill herd (KH) algorithm to classify ham and spam. The results demonstrated higher accuracy and faster convergence than the conventional BP paradigm. The dataset used was SpamAssassin, with FFNN classifiers. However, this model does not achieve better overall performance. Reference [30] proposed a spam detection model in which an ANN is trained by the biogeography-based optimization (BBO) algorithm. The datasets used were Spam Base and SpamAssassin, with ANN classifiers. However, BBO is weak at exploiting solutions. Reference [31] used GA to modify a standard ANN together with an artificial immune system for spam detection, and the SpamAssassin corpus was used for simulations. Lastly, Reference [32] proposed a new SD approach in which ANNs are trained by the EBAT algorithm. The datasets used were Spam Base and UK-2011 Web spam. This research introduces a new spam detection approach in which the promising GOA trains an MLP, addressing the challenges faced by traditional MLP training algorithms. The approach is applied to two corpora, Spam Base and UK-2011 Web spam, with better performance.

2 Methodology

The implementation of the SD approach based on ANNs trained through GOA is presented in Fig. 1. The aim of the new GOAMLP model is to achieve promising results in terms of detection accuracy, global convergence, low false positive prediction, and identification of SE, with the help of the new metaheuristic GOA for training the ANNs.

Figure 1: The GOAMLP-SD approach

MLPs are prevalent in spam detection owing to their efficiency in classifying mail as wanted or spam. This sort of tool considers the system's common components, the electronic mailing environment, and statistically significant departures from expected user behaviour. These tools have open and extendable architectures that aid in the creation of intelligent character models in the environment. Training consists of determining the structure and variables of the ANN so that it learns the relationship between the incoming patterns and the target outputs. By decreasing the value of the mean squared error (MSE), the ANN structure and its weights ($w$) and biases ($B$) are obtained. Then, the knowledge base (structure, $w$, and $B$) is updated. The maximum number of iterations parameter (given in Fig. 1) determines when the training process ends. The final stage is carried out after obtaining the most suitable model in terms of the best ANN structure, $w$, and $B$, built using the training dataset.

2.1 Grasshopper Optimization Algorithm(GOA)

The GOA is a recently proposed swarm-based metaheuristic [33]. Although grasshoppers are usually seen individually in nature, they form some of the largest swarms of all insects. These swarms are pests that harm farmers and agriculture. The grasshopper's life cycle includes two stages: the nymph moves slowly over small distances, while the adult jumps high and travels great distances. These two forms of movement correspond to exploitation and exploration, respectively. A model of the swarming behaviour of grasshoppers was presented in [34] and is repeated here:

$X_i$ denotes the position of the $i$-th grasshopper, $S_i$ is the social interaction (SI) given in Eq. (2), $G_i$ is the gravitational force on the $i$-th grasshopper, and $A_i$ is the wind advection:

$N$ is the number of grasshoppers, $d_{ij} = |x_j - x_i|$ is the distance between the $i$-th and $j$-th grasshoppers, the function $s$ defines the strength of the social forces and is given by Eq. (3), and $\hat{d}_{ij} = (x_j - x_i)/d_{ij}$ is the unit vector from the $i$-th to the $j$-th grasshopper.

$f$ and $l$ are the attraction intensity and the attraction length scale, respectively. In this algorithm, the SI is divided into three regions: stable (comfort), attraction, and repulsion. The function $s$ returns values close to 0 for distances greater than 10, so it cannot exert strong forces between grasshoppers that are far apart. This problem can be resolved by the $G_i$ component using Eq. (4):

where $g$ is the gravitational constant and $\hat{e}_g$ is the unit vector toward the centre of the earth. The wind advection $A_i$ in Eq. (1) is computed by Eq. (5).

$u$ is a constant drift and $\hat{e}_w$ is the unit vector in the wind direction; thus, the motion of the grasshoppers is closely related to the wind direction. Substituting $S$, $G$, and $A$ into Eq. (1) gives Eq. (6):

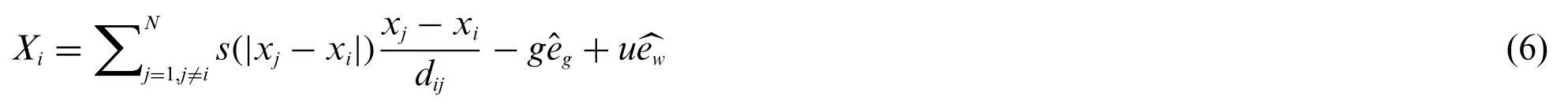
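For reference, the symbol definitions above match the standard GOA model of [34]. The block below is a reconstruction from those definitions rather than a verbatim copy of the original typesetting; the hat notation for unit vectors and the summation limits are assumptions.

```latex
\begin{align}
X_i &= S_i + G_i + A_i \tag{1}\\
S_i &= \sum_{j=1,\, j \neq i}^{N} s(d_{ij})\,\hat{d}_{ij},
      \qquad d_{ij} = |x_j - x_i|,\quad \hat{d}_{ij} = \frac{x_j - x_i}{d_{ij}} \tag{2}\\
s(r) &= f\,e^{-r/l} - e^{-r} \tag{3}\\
G_i &= -g\,\hat{e}_g \tag{4}\\
A_i &= u\,\hat{e}_w \tag{5}\\
X_i &= \sum_{j=1,\, j \neq i}^{N} s\left(|x_j - x_i|\right)\frac{x_j - x_i}{d_{ij}}
      - g\,\hat{e}_g + u\,\hat{e}_w \tag{6}
\end{align}
```

Eq. (6) is simply Eq. (1) with Eqs. (2), (4), and (5) substituted term by term.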

Eq. (6) cannot be used directly to solve optimization problems, because the grasshoppers quickly reach the comfort zone and the swarm does not converge to a target location, as noted by Saremi et al. An enhanced version, Eq. (7), is given as:

where $ub_d$ and $lb_d$ are the upper and lower bounds in the $d$-th dimension, respectively, $\hat{T}_d$ is the best solution found so far in the $d$-th dimension, and $c$ is a decreasing coefficient that shrinks the stable (comfort), attraction, and repulsion zones. The coefficient $c$ shrinks the comfort zone in proportion to the iteration count and is given by Eq. (8):

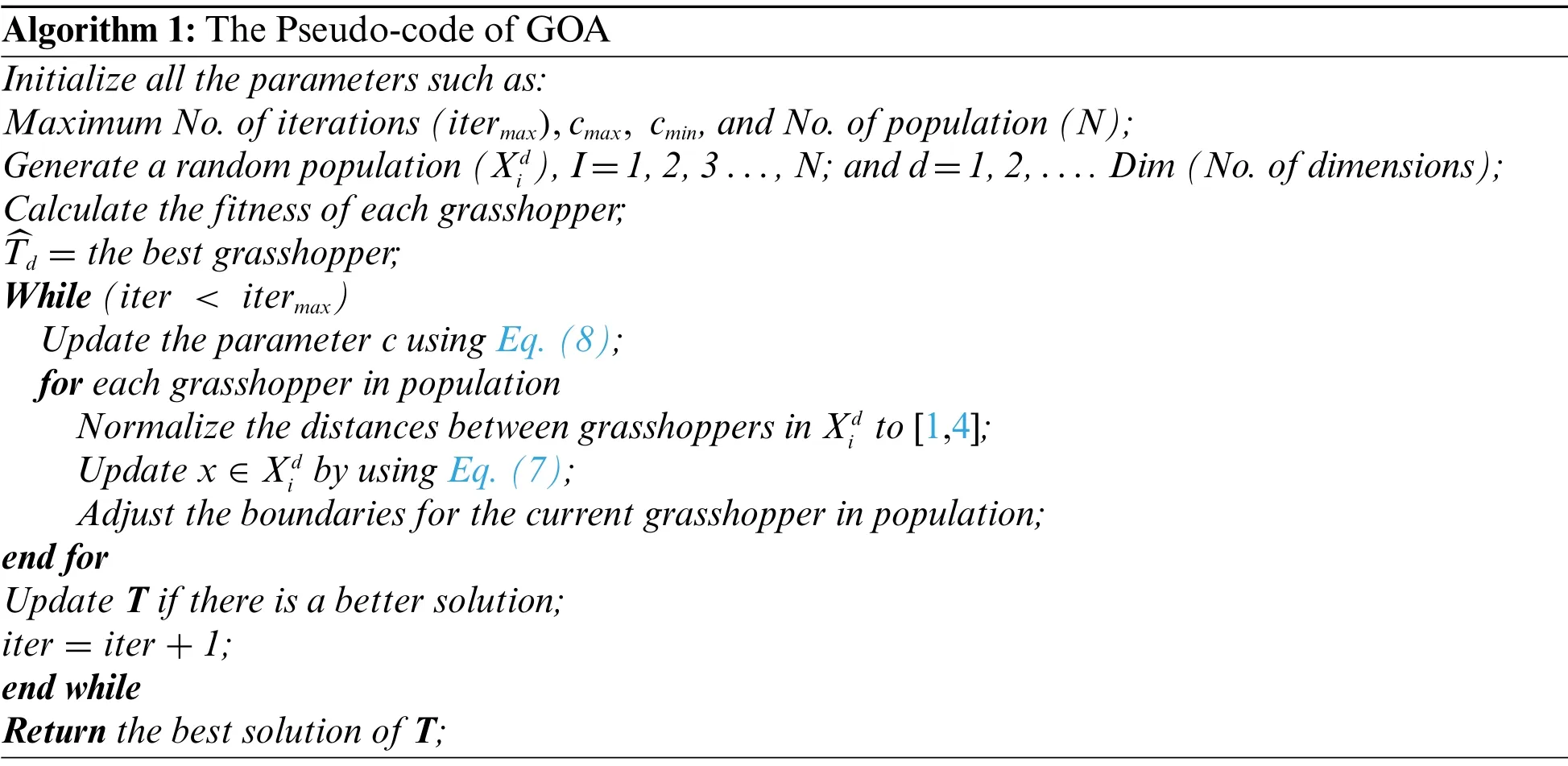
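As a reading aid, the enhanced update and the decreasing coefficient are usually written as follows in the GOA literature. This is a reconstruction that assumes the standard formulation; the symbols follow the definitions given above.

```latex
\begin{align}
X_i^{d} &= c \left( \sum_{j=1,\, j \neq i}^{N} c\,\frac{ub_d - lb_d}{2}\,
          s\left(|x_j^{d} - x_i^{d}|\right) \frac{x_j - x_i}{d_{ij}} \right) + \hat{T}_d \tag{7}\\
c &= c_{\max} - iter\,\frac{c_{\max} - c_{\min}}{iter_{\max}} \tag{8}
\end{align}
```

Under Eq. (8), $c = c_{\max}$ at $iter = 0$ and $c = c_{\min}$ at $iter = iter_{\max}$, so the coefficient decreases linearly over the run.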

Algorithm 1: The pseudo-code of GOA

    Initialize all the parameters: maximum no. of iterations (itermax), cmax, cmin, and population size (N);
    Generate a random population X_i^d, i = 1, 2, 3, ..., N and d = 1, 2, ..., Dim (no. of dimensions);
    Calculate the fitness of each grasshopper;
    T̂_d = the best grasshopper;
    While (iter < itermax)
        Update the parameter c using Eq. (8);
        for each grasshopper in the population
            Normalize the distances between grasshoppers in X_i^d to [1, 4];
            Update x ∈ X_i^d by using Eq. (7);
            Adjust the boundaries for the current grasshopper in the population;
        end for
        Update T if there is a better solution;
        iter = iter + 1;
    end while
    Return the best solution T;

The coefficient $c$ appears twice in Eq. (7). The outer occurrence, denoted $c_1$, is similar to the inertia weight $w$ in PSO: it reduces the movement of the grasshoppers around the target and balances intensification and diversification of the whole swarm around the best solution. The inner occurrence, denoted $c_2$, shrinks the repulsion, comfort, and attraction zones among grasshoppers. In addition, the component $c\,(ub_d - lb_d)/2$ linearly decreases the space in which the grasshoppers intensify and diversify.

The term $s(|x_j - x_i|)$ in Eq. (7) determines whether a grasshopper is repelled from the target (exploration) or attracted to it (exploitation). In Eq. (8), $c_{max}$ is the maximum value, $c_{min}$ is the minimum value, $iter$ is the current iteration, and $iter_{max}$ is the maximum number of iterations. The GOA pseudo-code is given in Algorithm 1. GOA begins by initializing all the parameters, such as the maximum number of iterations, the coefficient bounds $c_{max}$ and $c_{min}$, and the population size. It then generates a random population of grasshoppers $x_i^d$, calculates the fitness function (FF) of each, and selects the grasshopper with the best FF as the target $\hat{T}_d$. In each iteration, three steps are performed for every grasshopper: 1) normalizing the distances between grasshoppers into [1, 4]; 2) updating the current grasshopper $x_i$ using Eq. (7); and 3) adjusting the boundaries for the updated grasshopper. Position updates are repeated until the end criterion is reached. Finally, the position and fitness of the best target are returned as the best estimate of the global optimum.
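The loop of Algorithm 1 can be sketched in a few lines of plain Python. This is an illustrative sketch, not the authors' MATLAB implementation: the function names, the defaults `f = 0.5`, `l = 1.5`, `c_max = 1.0`, `c_min = 1e-5`, the scalar bounds, and the modulo trick that folds distances into [2, 4) (a sub-interval of the [1, 4] stated in the text) are all assumptions.

```python
import math
import random

def s_func(r, f=0.5, l=1.5):
    """Social force: negative means repulsion, positive means attraction."""
    return f * math.exp(-r / l) - math.exp(-r)

def sphere(x):
    """Toy objective used only for demonstration."""
    return sum(v * v for v in x)

def goa_minimize(fitness, dim, lb, ub, n=20, iter_max=100,
                 c_max=1.0, c_min=1e-5, seed=0):
    rng = random.Random(seed)
    # Random initial population inside [lb, ub]
    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    fit = [fitness(x) for x in pop]
    best_i = min(range(n), key=fit.__getitem__)
    target, target_fit = pop[best_i][:], fit[best_i]

    for it in range(1, iter_max + 1):
        # Linearly decreasing coefficient c
        c = c_max - it * (c_max - c_min) / iter_max
        new_pop = []
        for i in range(n):
            step = [0.0] * dim
            for j in range(n):
                if j == i:
                    continue
                diff = [pop[j][d] - pop[i][d] for d in range(dim)]
                dist = math.sqrt(sum(v * v for v in diff)) + 1e-12
                r = 2.0 + dist % 2.0          # assumed distance normalization
                s = s_func(r)
                for d in range(dim):
                    # Inner c scales the social force along the unit vector
                    step[d] += c * (ub - lb) / 2.0 * s * diff[d] / dist
            # Outer c scales the social term; then move relative to the
            # target and clip the result to the bounds
            new_pop.append([min(ub, max(lb, c * step[d] + target[d]))
                            for d in range(dim)])
        pop = new_pop
        for x in pop:
            fx = fitness(x)
            if fx < target_fit:               # keep the best solution so far
                target, target_fit = x[:], fx
    return target, target_fit
```

Calling `goa_minimize(sphere, dim=3, lb=-10.0, ub=10.0)` returns the best position and its fitness. Because the target is replaced only by strictly better solutions, the returned fitness never exceeds that of the best initial grasshopper.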

2.2 The GOA Adaptation Process

Metaheuristic algorithms have already shown great potential in solving classification and prediction problems with ANNs by tuning the $w$ and $B$ of the NN. In these approaches, training involves choosing an appropriate ANN architecture, a representation of $w$ and $B$, termination condition(s), and an FF. These four aspects are adapted in GOA so that it can serve as a training method for ANNs; the resulting training method is named GOAMLP in this work. The grasshopper algorithm's capacity to work with NNs has already been tested and compared with other algorithms, with encouraging results [35], and our approach is motivated by these recent developments. There are three basic techniques for using a metaheuristic algorithm to train NNs. In the first stage, the algorithm is used to find a suitable ANN architecture during the learning process. Modifying the architecture can involve changing the connections among the neurons, the number of hidden layers, and the number of hidden neurons. In the second stage, the algorithm is used to find the $w$ and $B$ that minimize the MSE, which serves as the cost function of the NN. The training algorithm finds appropriate values for all connection $w$ and $B$ to reduce the overall error of the ANN.

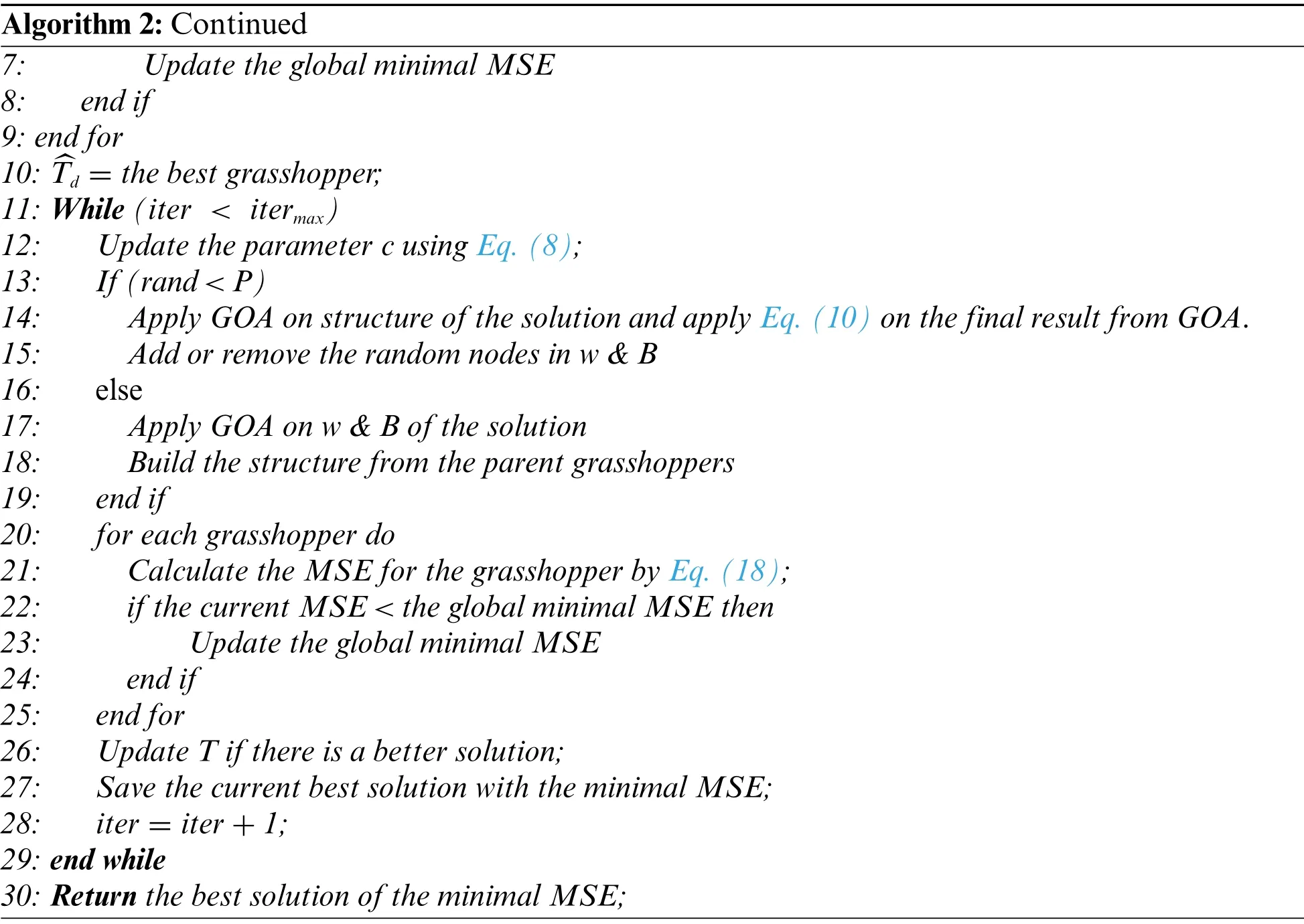

In the final stage, the algorithm is applied to tune the parameters of the gradient descent learning algorithm. Reference [36] used the method of finding the optimal $w$ and $B$ throughout training. In contrast, this research uses the recently proposed GOA to find the optimal ANN. The procedure is shown in Algorithm 2. At the beginning of Algorithm 2, all the variables of the GOA and the NN model are initialized, namely $c_{max}$, $c_{min}$, $iter_{max}$, and the lower and upper bounds; then a set of solutions is created at random. The GOA has several parameters, such as the solution vector size (SVS), which denotes the number of solutions in the solution vector (SV). Each solution in the SV is a $D$-dimensional vector, where $D$ is the number of decision variables; the dimension of the solutions is described by Eq. (9). The ranges are given by the vectors $X_L$ and $X_U$, which indicate the lower and upper bounds, and the solutions are initialized uniformly within these bounds. The SV also keeps the best solution obtained. Eq. (9) represents a matrix of size SVS × $D$. The SV dimension is set before the algorithm is run. Each solution is also associated with a quality value given by the objective function $f(x)$. Algorithm 2 shows that GOAMLP, like other algorithms, starts by initializing the solution memory vector that encodes the MLP weights. The initial fitness value of every grasshopper (solution) is calculated in line 3. In lines 4–9, the MSE of each grasshopper (solution) in the SV is evaluated and the global minimum MSE is derived. In line 10, the GOA parameter $\hat{T}_d$ is set to the best grasshopper, and the loop up to the maximum number of iterations begins in line 11. Then the parameter $c$ of the GOA is updated.

Algorithm 2: The pseudo-code of GOAMLP

    1: Initialize all the parameters: training parameters; maximum no. of iterations (itermax), cmax, cmin, and population size (N); probability (P) of applying the GOA operator on the ANN structure or w & B;
    2: Generate a random population X_i^d (i = 1, 2, 3, ..., N) and (d = 1, 2, ..., Dim → no. of dimensions);
    3: Calculate the fitness of each grasshopper;
    4: for each grasshopper do
    5:     Calculate the MSE for the grasshopper by Eq. (18);
    6:     if the current MSE < the global minimal MSE then
    7:         Update the global minimal MSE
    8:     end if
    9: end for
    10: T̂_d = the best grasshopper;
    11: While (iter < itermax)
    12:     Update the parameter c using Eq. (8);
    13:     If (rand < P)
    14:         Apply GOA on the structure of the solution and apply Eq. (10) on the final result from GOA.
    15:         Add or remove the random nodes in w & B
    16:     else
    17:         Apply GOA on w & B of the solution
    18:         Build the structure from the parent grasshoppers
    19:     end if
    20:     for each grasshopper do
    21:         Calculate the MSE for the grasshopper by Eq. (18);
    22:         if the current MSE < the global minimal MSE then
    23:             Update the global minimal MSE
    24:         end if
    25:     end for
    26:     Update T if there is a better solution;
    27:     Save the current best solution with the minimal MSE;
    28:     iter = iter + 1;
    29: end while
    30: Return the best solution with the minimal MSE;

Lines 13 to 19 introduce a small modification to the GOA operator: the probability parameter P is tested first, and one of two alternative branches is chosen, which balances the application of the GOA operator between the ANN structure and its $w$ and $B$.

The probability of selecting a solution as a parent is proportional to the amount by which its fitness is lower than that of the competing solutions. In each iteration, the GOA optimization process is applied to the ANN structure with a probability of 50% and to $w$ and $B$ with a probability of 50%. If the GOA optimization is applied to the parent structure, neurons are randomly added to or removed from $w$ and $B$ to fit the ANN architecture of the solution. The number of neurons in the $w$ and $B$ part of the SV is computed using Eqs. (11)–(13). This technique allows GOAMLP to obtain a wider variety of high-quality solutions with optimized ANN structures, $w$, and $B$. An efficient FF that considers both the number of $w$ and $B$ connections and the error to be minimized helps the algorithm favour small-scale models. New solutions are found by the GOA update. If the number of iterations exceeds the maximum number of generations, the iterations stop. Moreover, this process requires reviewing the active $w$ and $B$ connections to determine the ANN architecture. Responsibility for selecting the ANN architecture rests with the GOA when obtaining a solution. The GOAMLP pseudo-code is given in Algorithm 2. Each solution in the population has two components. The first component encodes the ANN architecture. When the random test against P decides that the structure of the MLP is to be modified by the new location of the grasshopper, SS is determined as a binary pattern in {0, 1} that denotes the grasshopper's location as a binary vector, obtained with the sigmoid function in Eq. (10).

Eq. (10) is applied to the output of Eq. (9) whenever GOA operates on the ANN architecture. When the result of Eq. (10) is less than a specific number in the range [0, 1], it is set to 0; otherwise, it is set to 1. The second component specifies the $w$ and $B$ of the ANN model. The motion of every grasshopper in the ss direction lies in [-1, 1]. The architecture of each solution in the initial population is assigned at random, and the length of $w$ and $B$ is set to match each structure. Finally, the $w$ and $B$ values are randomly generated. In lines 20–25, the MSE of each grasshopper (solution) in the SV is evaluated and the global minimum MSE is updated. In line 26, the GOA target $T$ is updated. Line 27 saves the best solution with the minimum MSE. The $iter$ parameter is increased by 1 in line 28. Lastly, the best solution, with the minimum MSE, is returned in line 30.
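The binarization step described for the structure vector can be sketched as follows. The logistic form of the sigmoid and the fixed threshold of 0.5 are assumptions; the text only states that a sigmoid is used and that the cut-off is some number in [0, 1]. The function name is hypothetical.

```python
import math

def binarize_structure(position, threshold=0.5):
    """Map a real-valued grasshopper position to a 0/1 structure vector."""
    bits = []
    for x in position:
        sig = 1.0 / (1.0 + math.exp(-x))      # logistic sigmoid (assumed form)
        # Below the threshold -> 0 (element switched off), otherwise -> 1
        bits.append(0 if sig < threshold else 1)
    return bits

# Hypothetical position of one grasshopper after a GOA update
print(binarize_structure([-2.0, 0.0, 3.0]))
```

Some binary metaheuristics compare the sigmoid output with a fresh random number instead of a fixed threshold; the fixed value here simply keeps the sketch deterministic.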

2.2.1 Solution Representation of ANN Structure by Using GOAMLP

Biases are associated with every neuron in the hidden and output layers. The GOAMLP solution is represented by two one-dimensional vectors: 1) the ANN structure SV, which indicates the number of inputs, the number of hidden layers, and the number of neurons in every hidden layer of the ANN; and 2) the $w$ and $B$ SV, which encodes the trained MLP. Each of these SVs has a different representation: each value in the structure SV is 0 or 1, whereas each value in the $w$ and $B$ SV is a real number in [-1, +1]. The dimension of the $w$ and $B$ SV equals the number of $w$ in each layer of the MLP model plus the number of $B$ in each layer. This length is computed using Eq. (11). The totals of $w$ and $B$ depend on the numbers of nodes and hidden layers, as shown in Eqs. (12) and (13).

$w$ denotes the weights, $B$ the biases, $I$ the number of nodes in the input layer, $N$ the number of nodes in every hidden layer, $H$ the number of hidden layers, and $O$ the number of nodes in the output layer. With regard to choosing the number of hidden nodes in an MLP, several rules have been suggested in the literature, but there is no consensus among researchers on the best rule to apply.
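To make the vector length concrete, the sketch below counts the weights and biases of a fully connected MLP with $H$ hidden layers of $N$ nodes each. The counting formulas are the usual ones for such a network and are assumed, not copied, to correspond to Eqs. (11)–(13); the function name is hypothetical.

```python
def count_weights_and_biases(I, N, H, O):
    """Return (weights, biases, total) for a fully connected MLP.

    I: input nodes, N: nodes per hidden layer,
    H: number of hidden layers, O: output nodes.
    """
    # input->hidden, hidden->hidden (H - 1 times), hidden->output
    weights = I * N + (H - 1) * N * N + N * O
    # one bias per hidden neuron and per output neuron
    biases = H * N + O
    return weights, biases, weights + biases

# 57 inputs as in Spam Base; 10 hidden nodes is a hypothetical choice
print(count_weights_and_biases(57, 10, 1, 1))
```

For a network with 57 inputs, one hidden layer of 10 nodes, and one output, this gives 580 weights and 11 biases, so each grasshopper would carry a 591-dimensional $w$ and $B$ vector.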

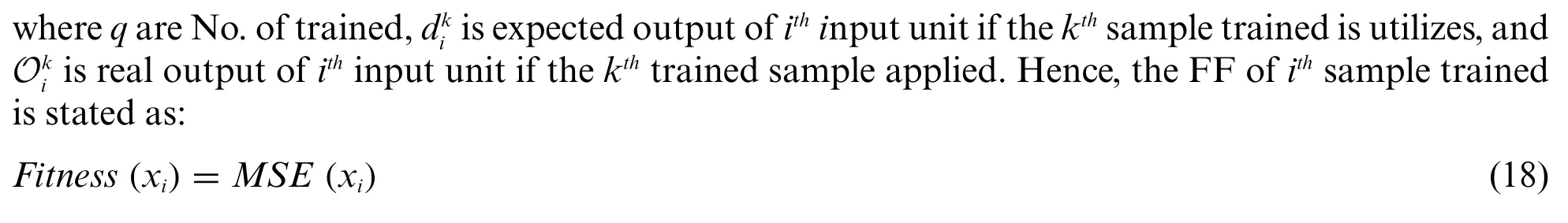

2.2.2 Fitness Function(FF)

The FF is used to assess the quality of the solutions, with the aim of minimizing the values obtained. In essence, this training method is similar to that of previous studies [37,38]. Supposing that $N$ is the number of input nodes, $H$ the number of hidden nodes, and $O$ the number of output nodes, the output of the $i$-th hidden node is computed as:

$w_{kj}$ is the weight connecting the $j$-th hidden node to the $k$-th output node, and $\beta_k$ is the bias (threshold) of the $k$-th output node. Lastly, the learning error $E$ (the FF) is:
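A minimal sketch of such a fitness evaluation is shown below for a single hidden layer. Several details are assumptions made for illustration: the sigmoid activation in both layers, biases that are added (some formulations subtract a threshold instead), the layout of the flat solution vector, and the averaging of the squared error over samples.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mlp_mse(vector, X, Y, n_in, n_hidden, n_out):
    """Decode a flat w-and-B vector into an MLP and return its MSE on (X, Y)."""
    k = 0
    # Assumed layout: input->hidden weights, hidden biases,
    # hidden->output weights, output biases
    w_ih = [[vector[k + i * n_hidden + j] for j in range(n_hidden)]
            for i in range(n_in)]
    k += n_in * n_hidden
    b_h = vector[k:k + n_hidden]
    k += n_hidden
    w_ho = [[vector[k + j * n_out + o] for o in range(n_out)]
            for j in range(n_hidden)]
    k += n_hidden * n_out
    b_o = vector[k:k + n_out]

    total = 0.0
    for x, y in zip(X, Y):
        hidden = [sigmoid(sum(x[i] * w_ih[i][j] for i in range(n_in)) + b_h[j])
                  for j in range(n_hidden)]
        out = [sigmoid(sum(hidden[j] * w_ho[j][o] for j in range(n_hidden)) + b_o[o])
               for o in range(n_out)]
        total += sum((out[o] - y[o]) ** 2 for o in range(n_out))
    return total / len(X)             # mean squared error over the samples
```

With an all-zero vector, every neuron outputs 0.5, so two samples with targets 0 and 1 yield an MSE of 0.25; an optimizer such as GOA would then search the vector space to push this value down.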

3 Validation of the Proposed

In this section, the experiments for the compared models were performed on a laptop with a Core i5 2.4 GHz CPU and 8 GB RAM, using MATLAB R2014a. The GOAMLP classifier is evaluated on two datasets. The first dataset is Spam Base, obtained from the UCI repository [39]; it consists of 4601 instances with 57 features, of which 1813 are spam and 2788 are legitimate emails. The second dataset is UK-2011 Web spam, which consists of 3766 instances with eleven features, of which 1998 are spam and 1768 are legitimate emails. The features are described as follows [40].

3.1 Parameters and Algorithms

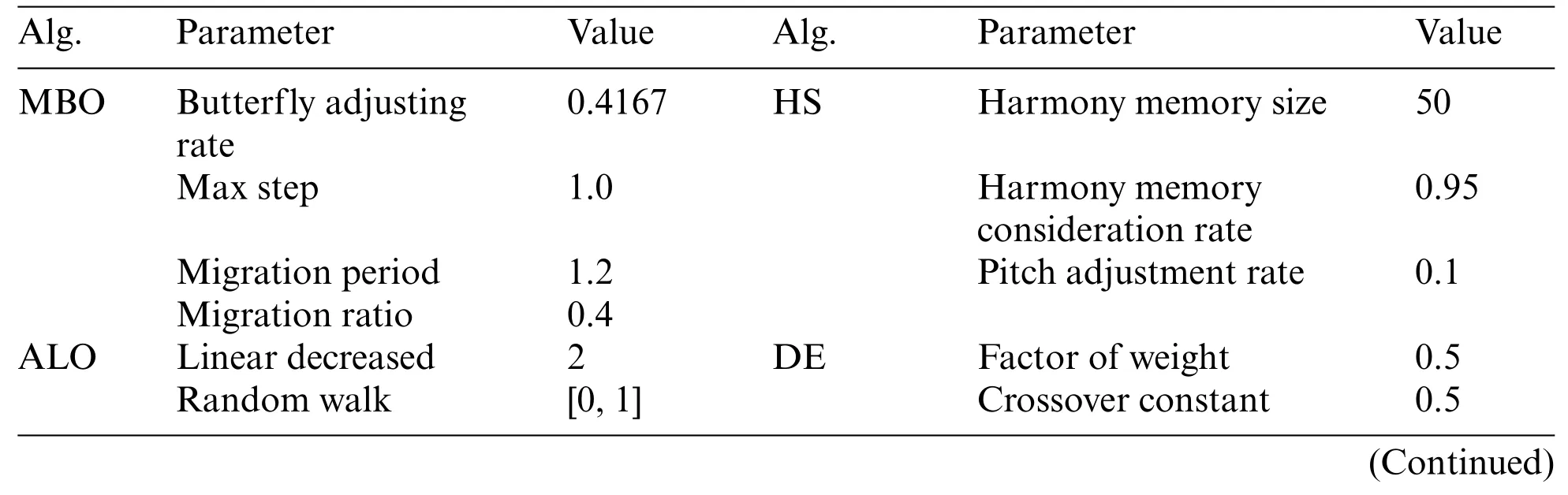

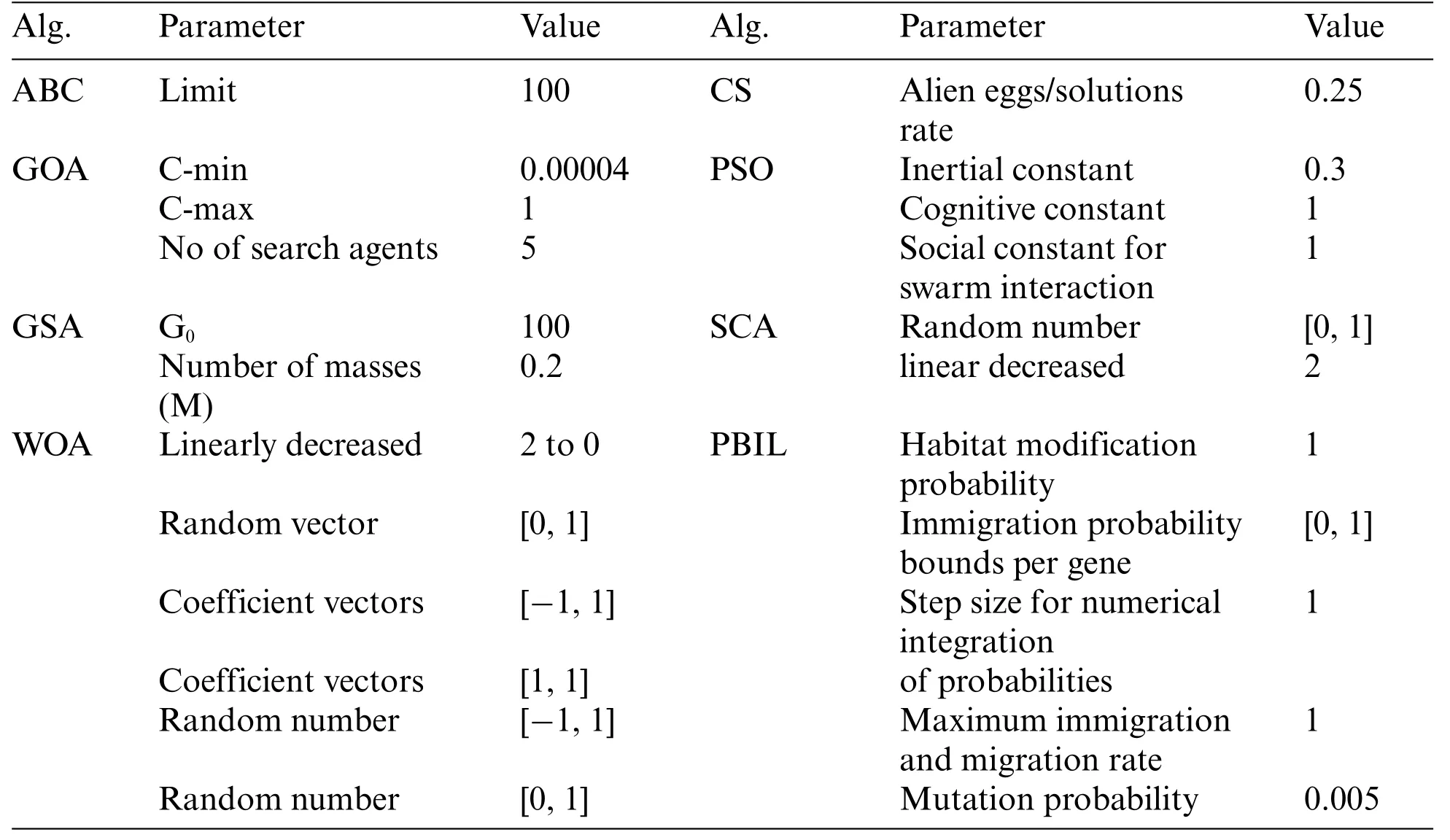

Several algorithms were studied to analyse the reliability of the new model. All control variables of the algorithms were set to the same values, including the SV size and the dimensionality of the SD problem, which corresponds to the number of features in the dataset. Tab. 1 shows the parameters of the models used in the performance analysis.

Table 1: Parameters and algorithms

3.2 Criteria for Performance Evaluation for GOAMLP

The GOAMLP SD approach was evaluated using ten basic measures that are widely applied in performance evaluation. The confusion matrix consists of four values: true positive (TP), true negative (TN), false positive (FP), and false negative (FN). Tab. 2 lists the performance metrics.

Table 2: Comparison measures
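Three of the measures reported later, accuracy (ACC), detection rate (DR), and false alarm rate (FAR), can be computed from the four confusion-matrix values as sketched below. The formulas are the conventional definitions and are assumed to agree with Tab. 2; the counts in the example are made up for illustration.

```python
def spam_metrics(tp, tn, fp, fn):
    """Conventional ACC, DR and FAR from confusion-matrix counts."""
    total = tp + tn + fp + fn
    return {
        "ACC": (tp + tn) / total,   # share of all messages classified correctly
        "DR": tp / (tp + fn),       # share of spam that is detected
        "FAR": fp / (fp + tn),      # share of ham wrongly flagged as spam
    }

# Hypothetical counts, not results from the paper
print(spam_metrics(tp=90, tn=95, fp=5, fn=10))
```

With these hypothetical counts, ACC is 0.925, DR is 0.9, and FAR is 0.05, which shows why ACC and DR are reported as percentages while FAR is reported as a small fraction.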

4 Results and Discussion

As mentioned, this study uses two standard datasets to measure performance on data from different domains. Because feature values must be normalized for effective MLP training, min-max normalization was applied. The results for each dataset are described in the following subsections.
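Min-max normalization rescales each feature column to [0, 1]. A minimal sketch is given below; mapping constant columns to 0.0 is an assumption added to avoid division by zero, and the function name is hypothetical.

```python
def min_max_normalize(rows):
    """Rescale every feature (column) of a list of rows to [0, 1]."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0   # constant column -> 0.0
         for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]

# Tiny made-up feature matrix: 3 samples, 2 features
print(min_max_normalize([[1, 10], [3, 10], [5, 10]]))
```

In practice the minimum and maximum would be computed on the training split and reused for the test split, so that no information from the test data leaks into training.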

4.1 Scenario 1 the Spam Base Dataset

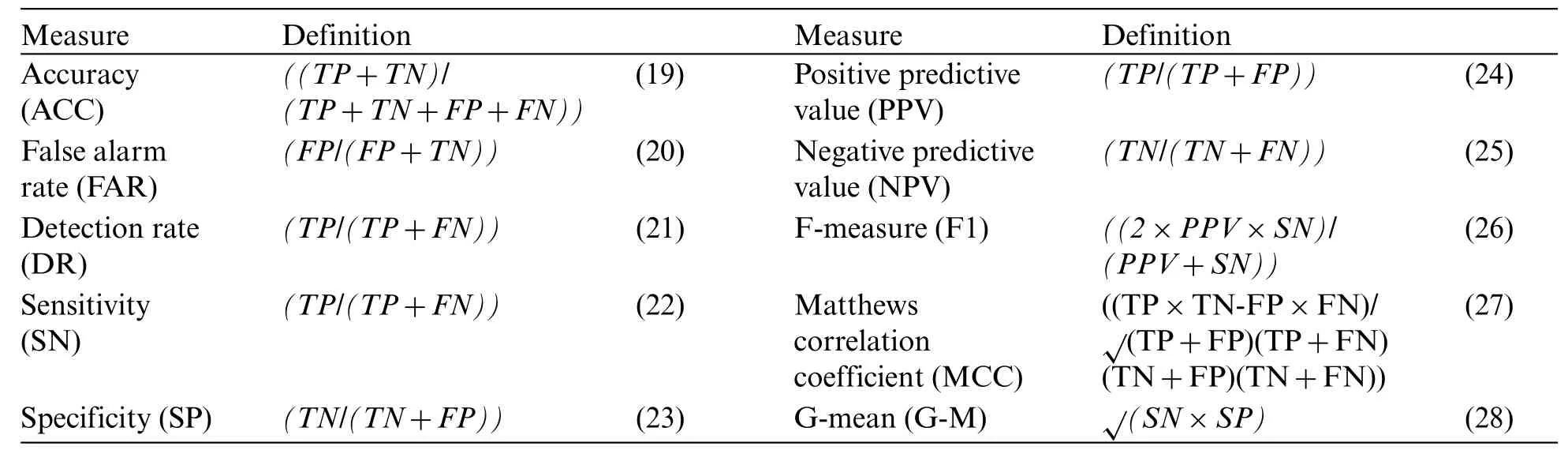

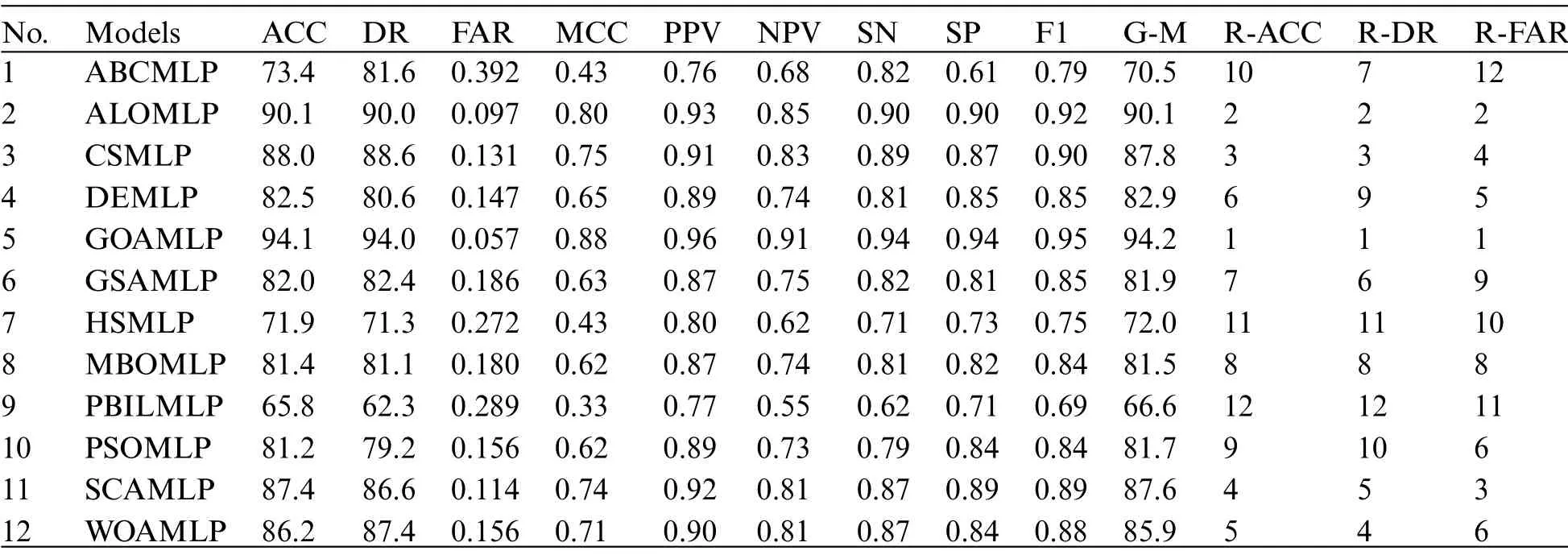

The results of the GOAMLP SD approach and the related models are computed using Eqs. (19)–(28) in Tab. 2. Tab. 3 summarizes these results; its last three columns give the rank by ACC (R-ACC), DR (R-DR), and FAR (R-FAR). In terms of accuracy, GOAMLP is the most accurate algorithm, while ALOMLP and CSMLP give nearly the same, lower percentages.

Table 3: The measurements of the performance for 12 algorithms vs. the Spam Base dataset

The results recorded by the ALOMLP algorithm were roughly similar to those of GOAMLP, with an ACC of 90.1%, a DR of 90.0%, and a FAR of 0.097. The CSMLP algorithm was ranked third for ACC and DR and fourth for FAR, with 88.0%, 88.6%, and 0.131, respectively. SCAMLP was ranked third for FAR (0.114) but fourth for ACC (87.4%). WOAMLP was ranked fifth for ACC, fourth for DR, and sixth for FAR, with 86.2%, 87.4%, and 0.156, respectively. On the other hand, the PBILMLP algorithm had inferior performance.

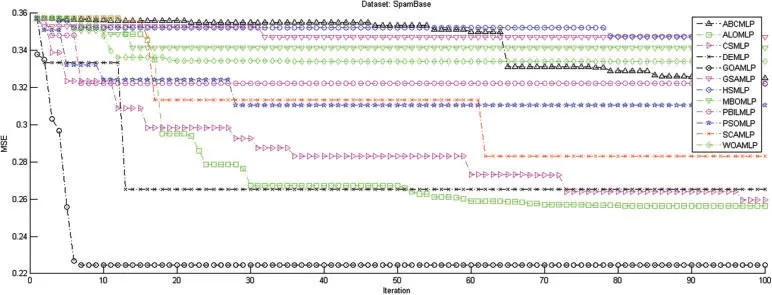

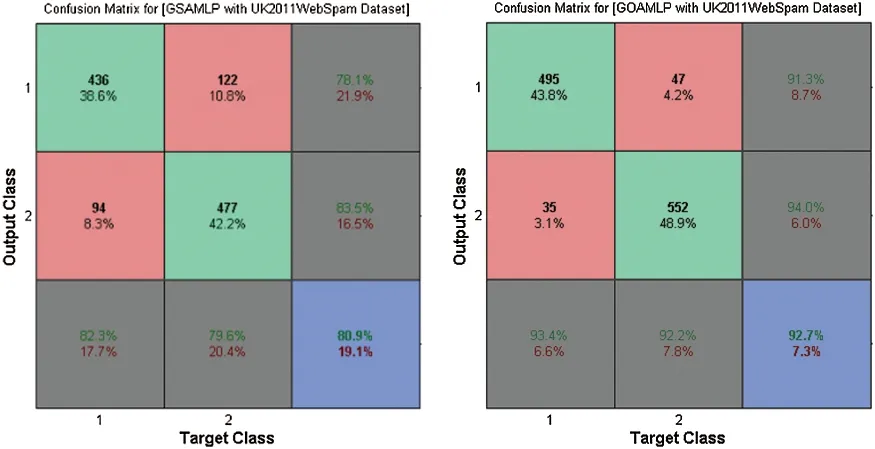

Fig. 2 shows the results of GOAMLP and the other MLP training algorithms on this dataset, in terms of both the convergence speed of the MSE and the final result of each algorithm. Examining the convergence curves, we observe that GOAMLP significantly outperforms the other algorithms in convergence speed, which indicates the goodness of fit of the model trained by the suggested algorithm. Fig. 3 shows the confusion matrix (CM) for the new model together with that of another algorithm. Owing to limited space, only 2 of the 12 algorithms, selected at random, are shown to illustrate GOAMLP's performance against the other algorithms.

Figure 2: The measurements of the performance for 12 algorithms vs. the Spam Base dataset

Figure 3: The confusion matrix for GOAMLP and PBILMLP vs. the Spam Base dataset

4.2 Scenario 2 the UK-2011 Web Spam Dataset

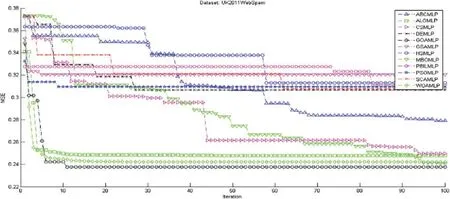

Tab. 4 and Figs. 4 and 5 summarize the results of the GOAMLP SD approach. The GOAMLP SD approach achieved the highest ranking, with an ACC, DR, and FAR of 92.7%, 93.4%, and 0.078, respectively. It is followed by these models: WOAMLP is ranked second for ACC (91.6%), second for DR (93.0%), and third for FAR (0.097); MBOMLP is ranked third for ACC (88.9%), sixth for DR (86.4%), and second for FAR (0.088); and ALOMLP is ranked fourth for ACC (87.5%), third for DR (89.2%), and seventh for FAR (0.140).

Table 4: The measurements of the performance for 12 algorithms vs. the UK-2011 Web spam dataset

Figure 4: The measurements of the performance for 12 algorithms vs. the UK-2011 Web spam dataset

In terms of convergence speed, Fig. 4 shows that the GOAMLP SD approach achieved a faster convergence rate. Fig. 5 shows that the GOAMLP SD approach generally performed better in ACC, DR, and FAR.

Figure 5: The confusion matrix for GOAMLP and GSAMLP vs. the UK-2011 Web spam dataset

4.3 Scenario 3 Performance Comparison of Proposed Approaches and Other Methods

Tab. 5 compares the results of the proposed model with those of other SD methods.

Table 5: Testing results comparison of proposed approaches and other methods of SD

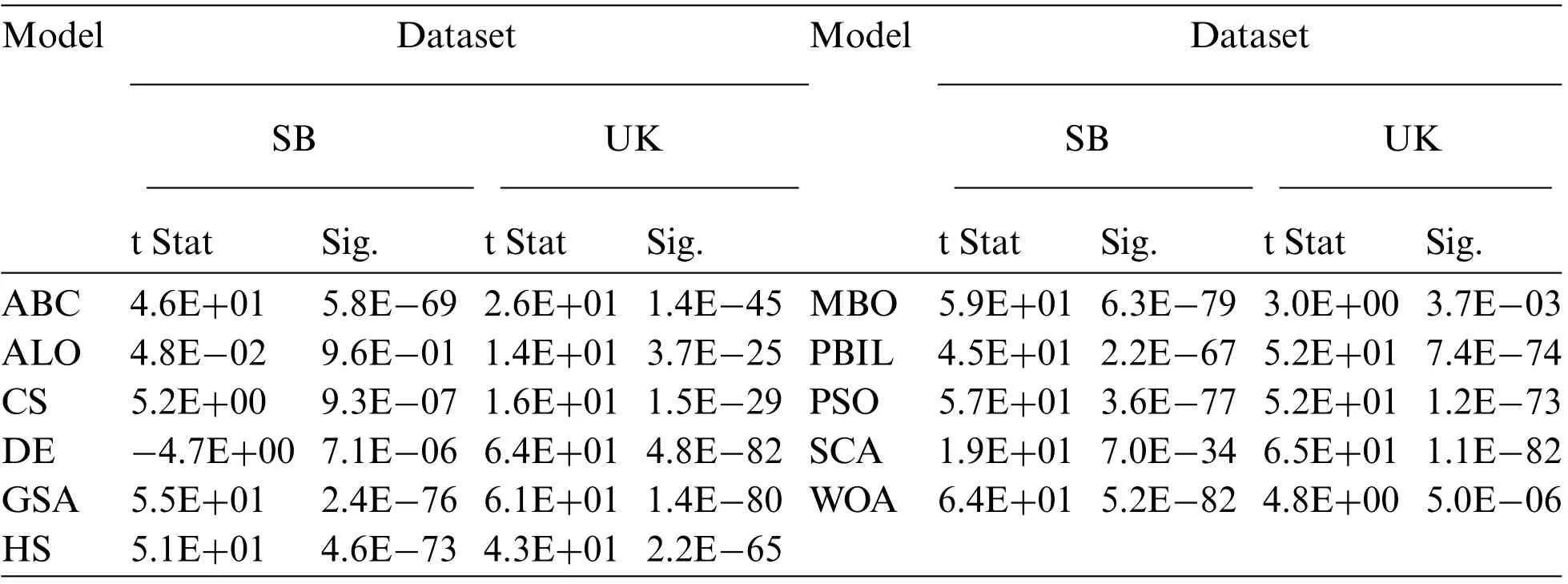

4.4 Statistical T-Test

The differences between the models were tested for statistical significance using a t-test. The analysis in Tab. 6 compares the mean results of the GOAMLP model with those of the other models at the 0.05 alpha level. The null hypothesis ($H_0$) is that there is no difference between model 1 (M1) and model 2 (M2); M1 is GOAMLP in all the tests. The significance level was set at 0.05; that is, the alternative hypothesis is accepted when the $p$-value is less than 0.05, at the 95% confidence level. Tab. 6 shows the $p$-values of the paired t-tests between GOAMLP and the other models for ACC, DR, and FAR. In Tab. 6, the $p$-values below 0.05 indicate that the hypothesis of GOAMLP superiority can be accepted: the GOAMLP model achieved significantly better results than the other models in all cases except for the test between ALOMLP and GOAMLP on the Spam Base dataset.
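For readers unfamiliar with the paired t-test, the statistic can be computed from per-run results of two models as sketched below. The accuracy values are made up for illustration and are not taken from Tab. 6; the function name is hypothetical.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """Return (t, degrees of freedom) for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)            # sample standard deviation (n - 1)
    return mean_d / (sd_d / math.sqrt(n)), n - 1

# Hypothetical accuracies of two models over five paired runs
m1 = [0.94, 0.93, 0.95, 0.94, 0.92]
m2 = [0.90, 0.91, 0.90, 0.89, 0.90]
t, df = paired_t_statistic(m1, m2)
print(t, df)
```

The resulting $t$ is compared with the critical value of the t distribution for the given degrees of freedom (2.776 for 4 degrees of freedom in a two-tailed test at alpha = 0.05), or converted to a $p$-value with a statistics package.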

Table 6: T-test for GOAMLP vs.the other models

5 Conclusion

This work introduced a novel approach for SD, namely GOAMLP. The focus was on the applicability of GOA to training an MLP. The performance of the proposed GOAMLP was compared with that of recent SD approaches; in total, 12 algorithms were used to train the MLP. GOAMLP was evaluated on the benchmark datasets Spam Base and UK-2011 Web spam, where it achieved classification accuracies of 94.1% and 92.7%, detection rates of 94.0% and 93.4%, and false alarm rates of 0.057 and 0.078, respectively. These results are better than those of the other models tested on the same datasets. The outcomes show the adequacy of the proposed approach for spam detection. All approaches were measured with regard to the features of the SD datasets.

Funding Statement:The authors received no specific funding for this study.

Conflicts of Interest:The authors declare that they have no conflicts of interest to report regarding the present study.
