Blockchain-Based Architectural Framework for Vertical Federated Learning

2022-08-08QIANChenZHUWenjing朱雯晶

QIAN Chen(钱 辰) , ZHU Wenjing(朱雯晶)

1 School of Computer Science and Technology, Donghua University, Shanghai 201620, China 2 Institute of Scientific and Technical Information of Shanghai, Shanghai Library, Shanghai 200031, China

Abstract: The introduction of blockchain to federated learning(FL)is a promising solution to enable anonymous clients to collaboratively learn a shared prediction model using local data while avoiding the risk caused by the central server. However, the current researches only apply a shallow convergence between the two technologies. The aroused problems, such as the unsuitable consensus, the lack of incentive mechanism, and the incompetence of handling vertically partitioned data, make the blockchain-based FL exist in name only. This paper puts forward a novel blockchain-based framework for vertical FL with a specified consensus and incentive. Moreover, a real-world example is demonstrated to prove the practicability of our work.

Key words: vertical federated learning(FL); blockchain; smart contract; incentive mechanism

Introduction

With the advancements of machine learning(ML), various intelligent applications have been widely adopted across all areas of industries, especially those demanding knowledge and insights extracted from big data, such as healthcare and financial service[1-2].Undoubtedly, ML brings a new opportunity to traditional industries.As is well known, the quality of ML systems highly depends on the data,i.e., the more informative data we input, the more accurate model the ML system brings up.As a direct consequence, the continuous pursuit of data has inevitably exacerbated the privacy-preserving problem.In order to reduce the risks, two-thirds of countries indicate that they provide data protection by policy or legislation[3].It is a massive blow to ML research and industry despite the good intention.

A new ML paradigm, namely federated learning(FL), has been regarded as the most promising solution[4].The main idea is to allow multiple clients(e.g., mobile devices)to train a model in a collaborative fashion, whereas the training data are kept decentralized,i.e., deposited in the local databases.FL quickly succeeded in the cross-device development of simple applications, such as keyword spotting and emoji prediction[5-6].However, to release its full potential, we need to upgrade the traditional FL methodologies.

In this paper, we put forward a novel FL framework with three advantages.First, it allows multiple parties that own different attributes(e.g., features and labels)of the same data entity to jointly train a model.Second, it enhances the security of the data transfers taking place by introducing blockchain technology.Third, it promotes data sharing by an additional incentive mechanism based on an ad-hoc consensus algorithm.

The remainder of this paper is organized as follows.Section 1 elaborates on the background of our work, including the potential issues of FL and the benefit of using blockchain.Section 2 presents the proposed framework in detail.Section 3 illustrates the experiment and a corresponding evaluation.Conclusions are drawn in section 4.

1 Reviews

The training process of traditional FL is orchestrated by a central server, whereas the training data are kept decentralized,i.e., deposited in the local databases.At present, most FL approaches rely on a conventional client-server network, whose architecture and behavior are demonstrated in Fig.1.Hence, FL is ordinarily considered a partial decentralized ML setting,i.e., the data are decentralized while the control is centralized.At present, it has been proved helpful in trivial real-world cases.

Fig.1 Overview of FL framework

However, as the research progresses, problems keep surfacing.Firstly, a hub in the network undoubtedly has the possibility to cause a single point of failure[7].Secondly, finding a third party that can be trusted by every single client is merely impossible in practice.Not even mentioning the third party anyway increases the risk of data breach and the complexity of system design[8].Thirdly, and most importantly, it has been proved that a central server may become a bottleneck of computation and communication when the number of clients is sizeable[9].

To tackle the problems mentioned above, trending researchers investigated how to specify the FL algorithms within a peer-to-peer(P2P)framework, such as SimFL and SecureBoost[10-11].Both can build federated decision trees in a decentralized manner.Although the P2P network is not equivalent to the blockchain, it still shows that fully decentralization is the most promising strategy.

1.1 Rationale of using blockchain in FL

Blockchain, as the name implies, is a growing list of blocks linked by a virtual chain, where each block denotes an electronic record, and the chain symbolizes the cryptography methodology[12].The characteristics of blockchain are seemingly advantageous to FL[13]as follows.

(1)Decentralization.The underlying infrastructure of blockchain systems can allow the transaction to be processed without authentication by a central server.Hence, blockchain is applicable for the P2P-based FL framework.

(2)Persistency.Before a new block is added to the blockchain, it must be broadcast across the network and validated by other nodes.Thus, using blockchain can improve the robustness of FL, and data falsification can be detected easily.

(3)Immutability.It is impossible to tamper with the on-chain data since the transactions are deposited in every node of the distributed network.So it certainly can make FL applications more secure.

(4)Anonymity.The trust mechanism of blockchain allows users to interact with each other via generated addresses without exposing their real identities.Therefore, introducing blockchain in the FL domain fundamentally preserves the privacy of data owners.

(5)Auditability.Blockchain can be considered a singly linked list, in which every block is marked with a timestamp.As a natural consequence, any transaction can be verified and traced.In other words, we can monitor the transactions after every training epoch in FL.

The rise of the blockchain technology considerably promotes the development of smart contracts, which is defined as computerized transaction protocol to execute the term of an agreement[14].Ideally, establishing smart contracts in a blockchain environment takes over from third parties that have been essential to establish trust in the past.The ability to automatically interfere with the transaction process in a cost-effective, transparent, and secure fashion[15]is potentially beneficial for FL[16].It can be utilized to restrain the behavior of participants and automate the execution in FL workflows.Therefore, the interference of malicious behavior can be prevented while reducing the cost-efficiency in the training process.

In addition, the incentive mechanism of blockchain may provide the last piece of the puzzle in FL[17].The conventional FL paradigms do not consider any incentive scheme, which becomes a well-known problem that is urgently needed to be addressed.In other words, the clients who offer more data or better data in FL deserve more rewards than a global model.Otherwise, they will train their own models to take advantage of market competition.Consequently, data silos are further created, which is exactly the opposite of what FL aims to achieve.

To sum up, the aforementioned benefits of blockchain clearly express why we make a convergence of FL and blockchain technology in this paper.

1.2 Horizontal FL versus vertical FL

FL is categorized into two types in terms of the distribution characteristics of the data, namely horizontal FL and vertical FL, respectively[18].We hereby use a real-world example to explain their differences.

Alzheimer’s disease is a progressive brain disorder that gradually destroys a person’s memory, thinking, and behavior.Normally, it can be diagnosed through the biomarkers(e.g., amyloid beta(ABETA), Tau protein(Tau))or neuropsychological examination(e.g., mini-mental state examination(MMSE), Alzheimer’s Disease Assessment Scale-Cognitive Subscale 13(ADAS-Cog13).The former can only be obtained via medical tests in hospitals, whereas the latter can be sat with apps nowadays.Figure 2(a)shows two hospitals have different patient groups from their respective regions, but they identify similar biomarkers, so the feature spaces are the same.Therefore, a global model can be established by a horizontal FL solution.However, Fig.2(b)illustrates another scenario in which the same patients receive medical data via different resources,i.e., the biomarker results from the hospital and the neuropsychological examination grades from a mobile application.Apparently, the feature spaces are different, but on the basis of both parties, we can get joint training in a vertical FL framework.The comparison in Fig.2 gives rise to a conclusion that horizontal FL should only be used in specific scenarios, in which data may significantly differ in sample space but must have a certain amount of overlap among features.Contrariwise, in vertical FL, the data differ in feature space but must partially overlap on sample ID.

PTau-hyperphosphorylation of Tau; HV-hippocampal volume; ICV-intracerebral volume; WBV-whole brain volume.

On one hand, horizontal FL algorithms are primarily implemented on an enormous number of intelligent devices or Internet of Things(IoT)equipment.The whole training process is orchestrated by an authoritative organization, while the clients are usually assumed honest.On the other hand, vertical FL demands fewer clients with homogenous data, while some might lack labels.Apparently, vertical FL has more potential for wider application prospects,e.g., drug discovery and intelligent manufacturing[19-20], despite it being more challenging from a technical point of view.This paper shows how to realize a vertical FL system in the proposed framework.

2 Proposed Framework

The problems that occur in current FL scenarios and the solution that may overcome the natural defects of FL are investigated in the previous section.So, a blockchain framework for vertical FL is introduced hereby.

2.1 Architectural design

The first step to undertake is to establish the underlying architecture of the proposed framework.As demonstrated in Fig.3, our framework is realized based on a fundamental structure of three layers, which can be described from bottom to top as follows.

Fig.3 Architecture of proposed framework

(1)Network layer.This layer embodies a fully decentralized P2P network connected by a number of nodes.To start a learning mission, one of the nodes becomes the task publisher, while the others become clients.It is worth noting that each client plays twofold roles,i.e., the trainer and the miner.The former performs the training with its data locally, whereas the latter is responsible for verifying the newly-issued transactions and further producing new blocks according to the verification results.

(2)Ledger layer.The middle layer represents a distributed ledger.The data deposited in the ledger are constantly shared and synchronized across clients.Thereby, the events involved in the FL procedure,e.g., publishing a task, broadcasting learning models, and aggregating learning results, can be traced by any participant.

(3)Service layer.The top layer is constituted by blockchain applications.In our framework, the smart contract and the FL system are deployed here, which can be adopted to invoke the events of FL.

2.2 Smart contract

Smart contracts are a significantly crucial piece in our framework.Typically, they are defined, programmed, and deployed on the blockchain by the task publisher while the FL system starts initialization.However, the smart contract has its defects[21].The contracts are coded by humans, so errors are unavoidable.Worse yet, it is impossible to make corrections directly on a contract registered on the blockchain.The only remedy requires creating a new smart contract altogether, which anyway increases the risk of introducing more mistakes into the system.

To alleviate the problem, our framework uses a visualization modeling language, called BOX-MAN, to design and implement smart contracts in a compositional manner[22].

The BOX-MAN model defines two types of services: atomic service and composite service, as shown in Fig.4(a).The services are connected by a composition connector, a adaptation connector, and a parallel connector(PAR), as shown in Fig.4(b).An atomic service encapsulates a set of methods in the form of an input-output function with a purpose that different services can access.A composite service consists of sub-services(atomic service or composite service), which are composed of exogenous composition connectors.Such connectors coordinate control flows between sub-services from the outside.Data flows are coordinated by horizontal data routing and vertical data routing.The former links the input and the output of individual services, whereas the latter is regarded as data propagation between the services and their sub-services.

SEQ-sequencer; SEL-selector; LOP-loop; GUD-guard; PAR-parellel connector.

The composition connector includes a sequencer(SEQ)and a selector(SEL).The former defines sequencing, while the latter defines branching.In contrast, the adaptation connectors do not compose services.Instead, they are applied to individual services with the aim of adapting the received controls.For instance, the guard allows control to reach a service only if the condition is satisfied, and the loop(LOP)repeats control to a component until the condition is no longer fulfilled.Additionally, the parallel connector is defined to handle the parallel invocation of sub-services.As a matter of fact, these connectors altogether can simulate any(nested)statement in computer programming,e.g., if-else statement, while statement, parallel statement, and block statement,etc.

In order to manage the behavior of the proposed framework, three types of smart contracts have been designed as follows.

(1)At the very beginning of learning, the task publisher must draw up a contract to clarify the task requirements.For example, the contract should define the data size so that the data owners who do not have enough samples are aware that they are not qualified to join the training.Other important criteria include training accuracy, latency, aggregation rules, reward program and so forth.This smart contract is accessible to all nodes in the blockchain network.

(2)The first contract is essentially an initiative proposed by the task publisher.On the one hand, whoever is dissatisfied with the contract has the right to refuse the training request.On the other hand, interested participants must reply with their costs and capabilities.Such replies are also recorded using smart contracts and further registered on the blockchain.Meanwhile, every applicant must stake a deposit in the smart contract.For instance, every training node must prepare its data in a repository, whose directory path has to remain unchanged during the entire learning process.The path will be encrypted by the public key of the node and then be recorded in a smart contract, along with the hash of all data inside this directory.Therefore, if a dishonest client modifies its training data in the middle of the FL process, it will easily be spotted and hence lose the deposit.Contrariwise, the deposits will be automatically refunded after the entire learning is accomplished.

(3)The smart contract is also immensely beneficial in aggregating results after every learning epoch.In section 2.1, we introduce how a central server collects the updates from all training nodes and performs an algorithm to aggregate them.However, the aggregation becomes a lot more complex in vertical FL on a P2P network.The workaround will be presented later, and hereby the smart contract guarantees the results are automatically aggregated without human intervention.Moreover, according to the reward program written in the contract, the miner can earn a bonus every time a new block is added to the blockchain.Finally, the smart contract can be automatically triggered to end the learning process by comparing the actual training accuracy with the expected one.

2.3 Workflow process

Based on the static architecture defined in Fig.1, the dynamic behavior of the proposed framework can be defined.Figure 5 shows the overall workflow.We hereby elaborate on the details of the activities in each step.

2.3.1Step1—InitiatingtheFLsystem

It is worth noting that this activity is conducted only once at the beginning of the FL process.With the aid of smart contracts, any node in the network is allowed to publish a learning task, which includes selecting the learning algorithm, implementing the initial model, determining the hyperparameters, establishing the reward program, selecting the participants, and so on.Qualified clients will receive the learning settings at last.

Fig.5 Workflow of proposed framework

2.3.2Step2—Localtraining

After all, the gradient will be sent toJ, and accordingly,Jcan achieve the update, too.

Figure 6 shows an example of the implementation of the training process using linear regression with gradient descent methods.The underlying algorithm adapted from Yangetal.[18]defines a training objective, which is

(1)

Fig.6 Training process of vertical FL using linear regression

(2)

(3)

(4)

It becomes obvious that the encrypted loss can be formulated as

(5)

(6)

(7)

2.3.3Step3—Uploadingthemodelupdate

2.3.4Step4—Verificationandaggregation

2.3.5Step5—Blockgenerationandaddition

Although every node has its own model, only one can be deposited into the blockchain.In our framework, we use an FL empowered consensus called proof of training quality(PoQ)protocol, which seeks widespread agreement in terms of training quality among clients in the network[24].The consensus process starts with a broadcast of the new models, each of which is encrypted by the miner’s secret key.As a result, for any clientK, all models are collected and stored locally as candidate blocks.In order to conclude the proof of training,Kmust verify all received models by calculating the accuracyVKas

(8)

whereMkdenotes the model ofK, andωis considered as a weight parameter that indicates the contribution ofMkto the global model.Naturally,ωcan be determined by the size of training data

(9)

whereNkis the size ofK’s training data.Eventually, the node with minimumVKwill be automatically selected as the leader, and hence takes the responsibility for driving the consensus process with all other miners.

As illustrated in Fig.7, every client creates a transactiont(Mk)that sends its modelMkto the leader, so the leader can accordingly create a global modelMby following the aggregation rule defined in the smart contract.After that, the leader constructs the candidate blocks, which can be described asBk=(Hk,t(Mk),M), whereHkdenotes the block header of a blockBk.The blocks will be further broadcast in the network for approval.For example, each verifying node receivesBiand starts the regular verification,e.g., examining the header format, checking the timestamp, and so on.Then, afterBiis proved to be valid, the node computes the values ofV(Mi)andV(M).If|V(Mi)-V(M)|is within a certain range, the node sends a reply to the leader for approval.IfBiis approved by all nodes, the lead will sign the block and add it to the blockchain.

Fig.7 Consensus process

2.3.6Step6—Blockbroadcast

After a new block is added to the blockchain, it will be broadcast to every node in the network.However, in order to prevent blockchain forks, each node must send a confirmation signal to the leader if no fork is spotted.If the leader receives the signals from all nodes, the consensus process continues.Otherwise, the process starts over from step 2.

2.3.7Step7—Updatingthelocalmodel

Now clients can update their local models.Notably, the training can terminate here, and step 8 will be arrived next, depending on whether the end condition defined in the smart contract is triggered or not.Otherwise, the training process repeats from step 2.

2.3.8Step8—Offeringtherewards

The smart contract automatically rewards the trainers and miners according to their contributions to the FL process.

3 Experiments

FL is regarded as one of the most promising techniques to be associated with medical imaging[25].In this section, to verify the effectiveness of our framework, we conduct an experiment on real-world datasets.

3.1 Introduction of dataset

The data used in this paper are extracted from the Alzheimer’s Disease Neuroimaging Initiative(ADNI)dataset, in particular selected from structural magnetic resonance imaging(sMRI)data, which acquired using T1-weighted in-phase fast gradient-echo imaging at 1.5 T.These labeled 3D structural images can show the cross-sectional, coronal, and sagittal planes of the subject’s brain.We manually segment the images of the hippocampus in a total of 130 sets.

3.2 Data pre-processing

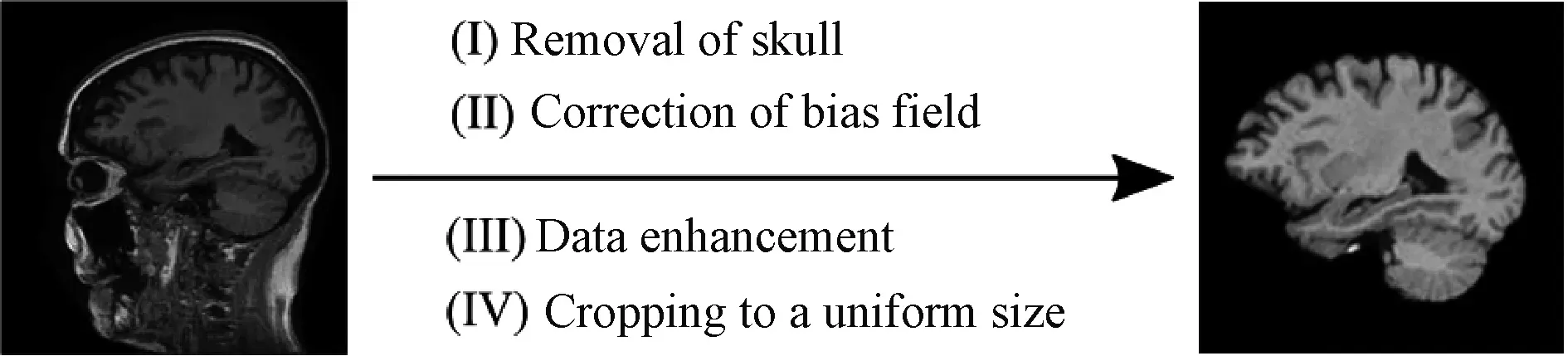

The pre-processing of the sMRI data before the experiment consists of four steps:(I)removal of the skull,(II)correction of the bias field,(III)data enhancement, and(IV)cropping to a uniform size.

Firstly, the skull is relatively distant from the hippocampus.Thus, it contains irrelevant voxel information that needs to be removed.Secondly, some unpredictable factors(e.g., position)may affect the image brightness during the scan operation.This is also known as a bias field.It can result in an inhomogeneous distribution of the magnetic field of the MRI machine, and therefore must be corrected.Thirdly, because the size of annotated data is small, we have to enhance the data by flipping and rotating the images in order to overcome the overfitting problem and improve the performance of the model.Finally, the sMRI images are cropped uniformly into 128×128×128 pixels to fit the model input requirements.Figure 8 shows the result of data pre-processing.

Fig.8 Comparison of sMRI data and pre-processed data

Eventually, the pre-processed images are split into left and right halves, providing a dataset of each half to different data owners.In each half, the pre-processed data were divided into two parts: the training set and the test set.The former contains 80% of the data, while the latter includes the rest 20%.

3.3 Experimental setup

The underlying model in our experiment is called the 3DUnet-CBAM based hippocampal segmentation model, which is the former research of ours[26].As the name implies, this model uses a 3DUnet convolutional network to avoid information loss between two-dimensional slices while incorporating the CBAM attention mechanism in the last layer of downsampling.The model successfully captures and combines the deep and superficial features of the hippocampus using monolithic ML.We hereby regard it as the initial model in our FL experiment.The total number of iterations of the model is 10, the batch training size is set to 1, and the initial learning rate is 0.000 1.

3.4 Experimental results

In order to quantitatively evaluate the effectiveness of the proposed framework with the 3DUnet-CBAM model, we adopt three evaluation metrics: Dice similarity coefficient, precision rate, and recall rate.

The Dice similarity coefficient is a measure of ensemble similarity, which is usually used to calculate the similarity of two samples.The value range is 0 to 1.The formula is

(10)

whereurepresents the hippocampus image after model segmentation,vrepresents the hippocampus manually labeled,|u∩v|is the intersection betweenuandv.|u|and|v|represent the number of voxels inuandv, respectively.

The segmentation of the hippocampus in effect is a binary classification task, because the segmentation result of the hippocampus is binarized,i.e., the gray area with a value of 0 is considered as the background, while the one with a value of 255 is the segmented hippocampus extracted from 3D sMRI image.The precision rate indicates how many of the samples that are predicted to be positive are truly positive samples, whereas the recall rate indicates how many of the positive examples in the sample are predicted correctly.They can be calculated byTP/(TP+FP)andTP/(TP+FN), whereTPandFPdenote the numbers of true-positive and false-positive samples, respectively;FNdenotes the number of a false-negative result.

The decision threshold set in this paper is 0.5.Table 1 shows the Dice similarity coefficient, precision rate, and recall rate of hippocampus segmentation using different ML paradigms.It becomes obvious that the proposed framework can perform an FL task and outcome a high-quality model, just like traditional ML can do.

Table 1 Comparison of evaluation results using different training methods

4 Conclusions

In this paper, a novel framework that enables FL systems to be executed based on a blockchain network is presented.Based on the framework, a vertical FL approach to train a global model with heterogeneous data based on our framework is introduced.The data security in the FL process by providing a security mechanism of blockchain is also enhanced.Moreover, an incentive mechanism based on the consensus algorithm for our framework is successfully designed, which has the potential to promote data sharing between unknown clients.

However, there are many remaining challenges.Some of them have originated from FL itself, while the rest is brought by blockchain.The former includes non-identically independently distributed(non-IID)data, threat models, poisoning attacks, and some algorithmic challenges.The latter contains scalability, inefficiency, and sometimes excessive transparency in the context of FL.Addressing the aforementioned issues is our future work.Although it is a long-term task, the ultimate goal of our research is to create a universal blockchain-based framework that is powerful and secure enough to execute any kind of FL training.

杂志排行

Journal of Donghua University(English Edition)的其它文章

- Three-Dimensional Model Reconstruction of Nonwovens from Multi-Focus Images

- Toughness and Fracture Mechanism of Carbon Fiber Reinforced Epoxy Composites

- Three-Dimensional Metacomposite Based on Different Ferromagnetic Microwire Spacing for Electromagnetic Shielding

- Optimal Control of Heterogeneous-Susceptible-Exposed-Infectious-Recovered-Susceptible Malware Propagation Model in Heterogeneous Degree-Based Wireless Sensor Networks

- Enhanced Arsenite Removal Using Bifunctional Electroactive Filter Hybridized with La(OH)3

- Students’ Feedback on Integrating Engineering Practice Cases into Lecture Task in Course of Built Environment