Malaria Parasite Detection Using a Quantum-Convolutional Network

2022-03-14

Javaria Amin, Muhammad Almas Anjum, Abida Sharif, Mudassar Raza, Seifedine Kadry and Yunyoung Nam

1 University of Wah, Wah Cantt, Pakistan

2 National University of Technology (NUTECH), Islamabad, Pakistan

3 COMSATS University Islamabad, Vehari Campus, Vehari, Pakistan

4 COMSATS University Islamabad, Wah Campus, Pakistan

5 Faculty of Applied Computing and Technology, Noroff University College, Kristiansand, Norway

6 Department of Computer Science and Engineering, Soonchunhyang University, Asan, 31538, Korea

Abstract: Malaria is a severe illness caused by parasites that spread through mosquito bites. In underdeveloped nations, malaria is one of the top causes of mortality, and it is mainly diagnosed through microscopy. Computer-assisted malaria diagnosis is difficult owing to the fine-grained differences in the presentation of some uninfected and infected groups. Therefore, in this study, we present a new approach based on an ensemble quantum-classical framework for malaria classification. The method comprises three core steps: localization, segmentation, and classification. In the first step, an improved FRCNN model is proposed for the localization of the infected malaria cells. Then, the localized RGB images are converted into the YCbCr color space to normalize the image intensity values. Subsequently, the actual lesion region is segmented using a histogram-based color thresholding approach. The segmented images are then classified in two different ways. In the first, a CNN model is developed by selecting the optimum layers after extensive experimentation, and the final computed feature vector is passed to the softmax layer to classify the microscopic malaria images as infected or uninfected. In the second, a quantum-convolutional model is employed for informative feature extraction from microscopic malaria images, and the extracted feature vectors are supplied to the softmax layer for classification. Finally, the classification results of the two models were analyzed, and the quantum-convolutional model achieved higher accuracy than the CNN. The proposed models attain a precision rate greater than 90%, indicating that they perform better than the existing models.

Keywords: Quantum; convolutional; RGB; YCbCr; histogram; Malaria

1 Introduction

Malaria is a blood infection transmitted by the bites of female Anopheles mosquitoes, which introduce malaria parasites into the human body [1]. According to the World Health Organization (WHO), approximately half of the global population is at risk of this infectious disease [2]. Approximately 200 million malaria cases have resulted in 29,000 deaths annually, as per the World Health Report [3]. Although spending has remained steady as of 2021, malaria cases have not declined. In 2016, US$ 2.7 billion were spent by the governments of malaria-endemic countries and foreign countries to monitor malaria [4]. To minimize the prevalence of malaria, the government plans to spend US$ 6.4 billion annually by 2020 [5]. Thick and thin blood smears are usually analyzed by microscopists, and the smears are examined at 100× magnification according to WHO classification [6]. Early diagnostic testing and therapy are sufficient to prevent severe malaria. Owing to the lack of information and analysis by epidemiologists, the health risks associated with the treatment of malaria have not yet been resolved [7]. To monitor deaths caused by malaria, early evaluation of malaria is needed [8,9]. The figures show that many febrile infants received inadequate medical care [10]. Computerized methods have been widely utilized for malaria detection [11-13]. Although much work has been performed on malaria detection, a gap remains in this domain because microscopic malaria images exhibit poor contrast, large variations, and variable shapes and sizes, all of which reduce the precise detection rate [14,15]. Therefore, a novel approach for segmenting and classifying malaria parasites is presented in this article. The contributions of the proposed architecture are as follows.

• An improved FRCNN model was designed and trained on the tuned parameters for more precise localization of malaria lesions.

• RGB images are translated into YCbCr color space after localization, and the appropriate area is segmented using histogram-based thresholding.

• The classification is performed on the segmented images by performing a complex feature analysis using deep CNN and a quantum-convolutional model.

The article is organized as follows: Section 2 discusses related work, Section 3 describes the phases of the proposed methodology, and Section 4 discusses the obtained findings.

2 Related Work

While therapies for malaria are effective, early diagnosis and intervention are necessary for good recovery. Therefore, disease identification is critical [16]. Unfortunately, even when they are acute, malarial symptoms are not distinctive [17]. A blood examination accompanied by an analysis of samples by a pathologist is critical [18]. Artificial intelligence that assists a pathologist in this diagnosis can save clinicians considerable time [19]. In recent decades, many studies have used statistical algorithms to offer solutions that promote interoperable health services for disease prevention [20]. Because it is the least expensive option and can classify all species of malaria, the manual process is commonly used for malaria diagnosis. This technique is widely utilized for detecting malaria severity, evaluating malaria medication, and recognizing parasites that remain after treatment. Two types of blood smears are prepared for biological blood testing: thick smears and thin smears [21]. Compared with a thin smear, a thick smear can detect malaria more quickly and precisely [22]. Despite these advantages, microscopy has the major disadvantage of intensive preparation, and the correctness of the outcome depends solely on the microscopist’s abilities. Other malaria detection techniques exist, such as polymerase chain reaction, microarrays, rapid diagnostic tests, quantifiable blood cells, and immunofluorescence antibody (IFA) testing [23,24]. Almost every automated malaria diagnosis system follows the same primary processing phases. The first phase acquires blood cell images, and the second phase preprocesses them to eliminate noise and artifacts. Features are then computed on the preprocessed images and passed to classifiers that label the blood images as infectious or non-infectious [25]. The mean filter was applied for noise reduction, and blood cells were segmented using histogram thresholding [26]. LBP features were extracted and passed to an SVM for malaria classification [27]. Hung et al. proposed a deep learning system for malaria parasite detection [28]. Faster R-CNN was used for identification and classification, followed by the AlexNet model for better classification. Deep learning has been utilized in malaria detection, as suggested in [29-32], which utilized morphological approaches to distinguish between infected and uninfected microscopic malaria images. Based on texture and morphological features, an SVM was utilized to classify infected and uninfected malaria cells [33]. Das et al. [34] used a geometric mean filter to process and analyze images with light correction and noise reduction. Considerable work has been reported in the literature on the analysis of malaria parasites, but a gap remains for more accurate classification. Hence, we present a modified approach for classifying malaria parasites into infected and uninfected classes based on convolutional and quantum-convolutional models [35-50].

3 Structure of the Proposed Framework

The proposed model comprises three core steps: localization, segmentation, and classification. In the first step, actual lesion images are localized using the FRCNN [51] model. The localized images are then supplied to the segmentation phase, where the original malaria images are converted into the YCbCr [52] color space and histogram-based thresholding is employed to segment the malaria lesions. The segmented malaria images are then supplied for classification. In the classification phase, feature analysis is conducted on the segmented region in two distinct ways: first, deep features are obtained through the proposed seven-layer CNN model with softmax; second, complex features are analyzed using an improved quantum-convolutional model with a 2-qubit quantum circuit and softmax to classify the input images. The overall structure of the proposed steps is shown in Fig. 1.
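Both classifiers end in a softmax layer that turns a feature-derived score vector into class probabilities. The following is a minimal sketch of that final step only; the class order and the example scores are hypothetical and are not taken from the paper.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical class order and scores, for illustration only.
CLASSES = ("infected", "uninfected")
probs = softmax([2.0, 0.5])
predicted = CLASSES[probs.index(max(probs))]
print(predicted, [round(p, 4) for p in probs])
```

The predicted label is simply the class with the largest probability.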

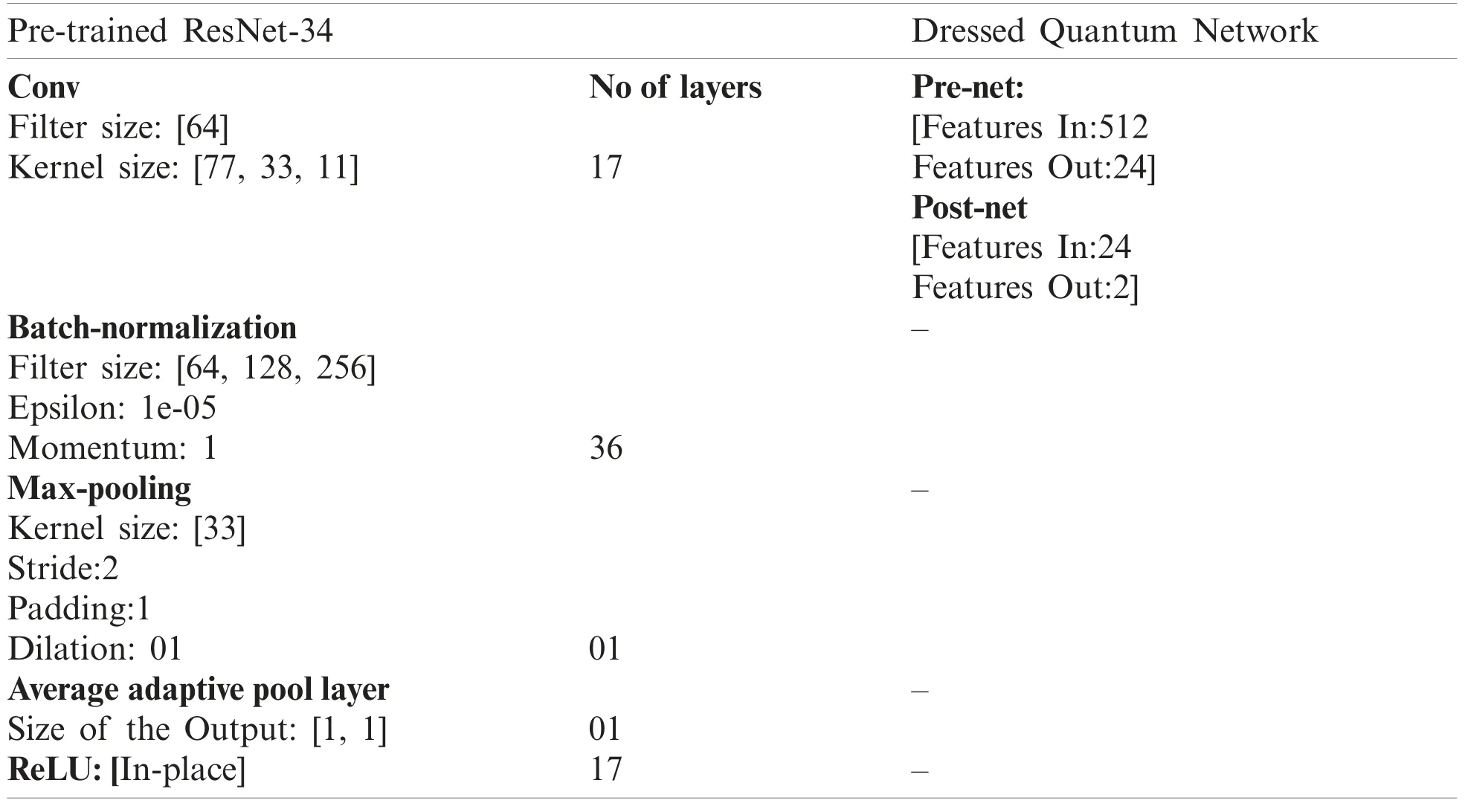

In the proposed model, segmented malaria images are passed to the improved framework of the pretrained ResNet-34 and quantum variational models. ResNet-34 [53] contains four blocks: 17 convolutional, 17 ReLU, 36 batch-norm, 01 pooling, 01 adaptive pool, and pre- and post-networks. Feature analysis is conducted using an average pool layer. The extracted feature vector, of length 1 × 1000, is supplied to the quantum variational circuits for model training/validation.

3.1 Localization of the Actual Malaria Lesions

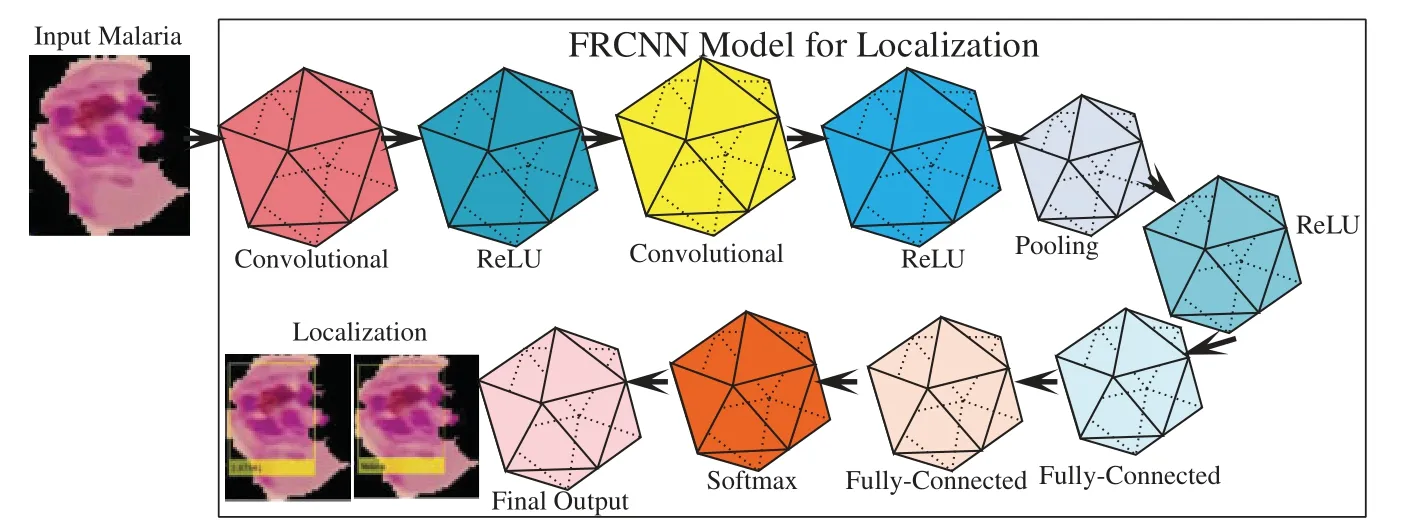

Detection is the task of finding and labeling parts of an image [54-59]. R-CNN (regions with convolutional neural network features) is a computer vision technique that combines rectangular region proposals with the features of convolutional neural networks. The R-CNN method is a two-step detection process. The first phase identifies a subset of image regions that may contain an object; the second classifies the object in each region. Applications of R-CNN object detectors include face recognition and smart surveillance systems. R-CNNs can be divided into three types. Each variant aims to improve the efficiency, speed, and effectiveness of the preceding procedures. Using a technique such as Edge Boxes [60], the R-CNN detector [61] first produces region proposals. The image is then cropped and resized to include only the proposed regions. The R-CNN [62], for example, produces region proposals using an algorithm similar to Edge Boxes. FRCNN pools the CNN features corresponding to each region proposal, whereas an R-CNN detector must classify each cropped region separately. FRCNN is more efficient than R-CNN because the computations for overlapping regions are shared. Instead of an external technique such as Edge Boxes, FRCNN provides a region proposal network (RPN) that creates proposal regions inside the network. The RPN uses anchor boxes for object detection, and generating the proposal regions within the network is faster and better tuned to the input data. Therefore, in this study, a modified FRCNN model is designed for localization of the infected regions of malaria. It comprises 11 layers: 01 input layer for the original malaria images, 02 2D-convolutional, 03 ReLU, 01 2D-pooling, 02 fully connected, 01 softmax, and 01 final output classification layer. The improved FRCNN model is shown in Fig. 2.
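The anchor boxes used by the RPN can be illustrated with a short sketch. It places one box per scale and aspect ratio at the centre of every feature-map cell. The stride, scales, and ratios below are hypothetical values chosen for illustration and are not the settings used in this study.

```python
from itertools import product

def make_anchors(feat_h, feat_w, stride, scales, ratios):
    """Return anchor boxes (x1, y1, x2, y2) centred on every feature-map cell."""
    anchors = []
    for row, col in product(range(feat_h), range(feat_w)):
        # Centre of this feature-map cell in input-image pixel coordinates.
        cx = (col + 0.5) * stride
        cy = (row + 0.5) * stride
        for scale, ratio in product(scales, ratios):
            # ratio = width / height; the box area stays scale ** 2.
            w = scale * ratio ** 0.5
            h = scale / ratio ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors

# Hypothetical settings: a 2 x 3 feature map with stride 16.
anchors = make_anchors(feat_h=2, feat_w=3, stride=16,
                       scales=(32, 64), ratios=(0.5, 1.0, 2.0))
print(len(anchors))  # 6 cells x 2 scales x 3 ratios
```

In a full detector, the RPN scores each anchor for objectness and regresses offsets that refine it into a proposal region; that learned part is omitted here.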

Figure 1: Proposed design of the malaria detection

Figure 2: Proposed FRCNN model for localization

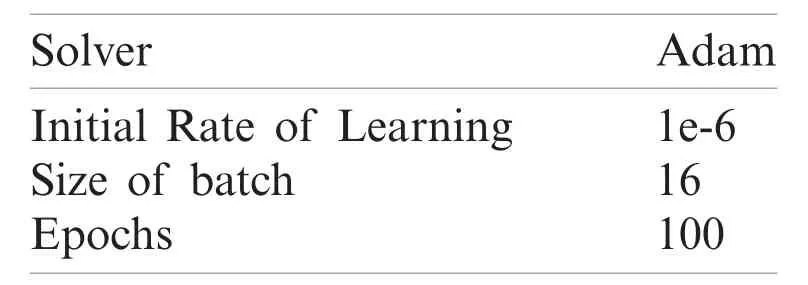

The model is trained with tuned parameters: the Adam optimizer, a mini-batch size of 16, and 100 training epochs. The parameters used for training are given in Tab. 1.

Table 1: Selected parameters for localization model training

As listed in Tab. 1, the FRCNN is trained with the Adam solver, a mini-batch size of 16, and 100 training epochs.

3.2 Malaria Parasite Images Segmentation Using Histogram

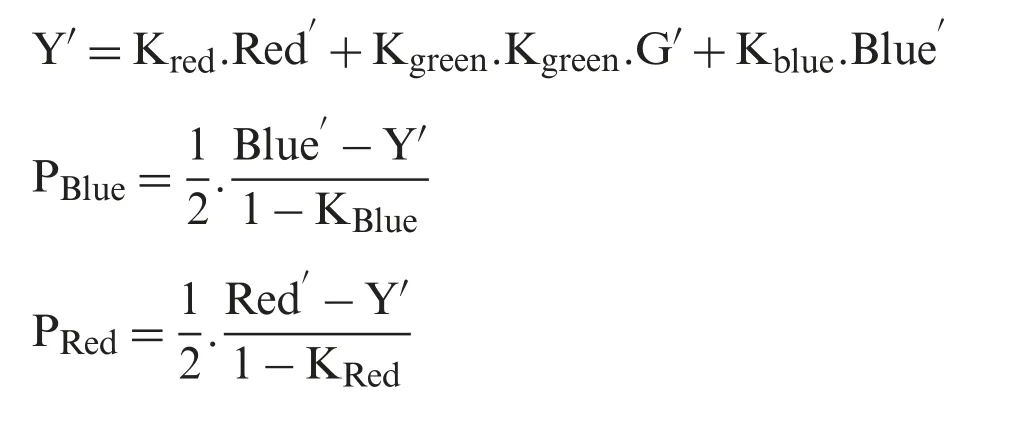

The original RGB malaria images are transformed into the YCbCr color space, where Y denotes the luminance channel, and Cb and Cr denote the blue-difference and red-difference chroma channels. The mathematical representation of the selected color space is shown in (1).

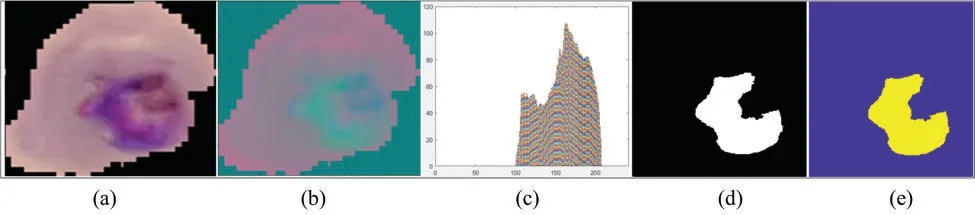
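The RGB to YCbCr transform can be sketched per pixel as below. This sketch assumes the full-range ITU-R BT.601 coefficients used by JPEG/JFIF; other variants exist (for example, a studio-range form with an offset of 16 on Y), so the constants here may differ from those in (1).

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JFIF) conversion for 8-bit RGB values (an assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b               # luminance
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b    # blue-difference chroma
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b    # red-difference chroma
    return y, cb, cr

# A neutral grey pixel carries no chroma, so Cb and Cr sit at the 128 midpoint.
print(rgb_to_ycbcr(120, 120, 120))
```

Separating luminance from chroma in this way is what lets a threshold act on color information with less sensitivity to illumination differences between slides.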

The conversion of RGB images into the YCbCr color space is shown in Fig. 1. The real infected area of the malaria parasite is segmented using histogram-based color thresholding, as shown in Figs. 3 and 4.
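The text does not state how the threshold is chosen from the histogram. As one possible illustration, the sketch below uses Otsu's method, a standard histogram-based rule that picks the level maximising the between-class variance. This is a substituted technique and not necessarily the authors' rule, and the channel values are synthetic.

```python
def otsu_threshold(pixels, levels=256):
    """Return the level that maximises between-class variance (Otsu's method)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(levels):
        w_bg += hist[t]          # number of pixels with value <= t
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0:
            continue
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

# Synthetic chroma values: six background pixels and four lesion pixels.
channel = [100, 100, 100, 100, 100, 100, 180, 180, 180, 180]
t = otsu_threshold(channel)
mask = [p > t for p in channel]  # True marks pixels kept as lesion
print(t, sum(mask))
```

Applied to one channel of the YCbCr image, the resulting mask corresponds to a binary segmentation of the kind shown in the figures.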

Figure 3: Segmentation results (a) original malaria images (b) YCbCr color space (c) binary segmentation (d) 3d-segmentation (e) histogram

Figure 4: Segmented malaria cells (a) input malaria cells (b) 3d-segmented lesions (c) binary lesions

3.3 Malaria Classification

The malaria cells are discriminated into two classes using two proposed models trained from scratch: a convolutional model and a quantum-convolutional model.

3.3.1 Classification Using Improved Quantum-Convolutional Model

The proposed model comprises five blocks of the pretrained ResNet-34 model and two layers of the dressed quantum network, as shown in Tab. 2.

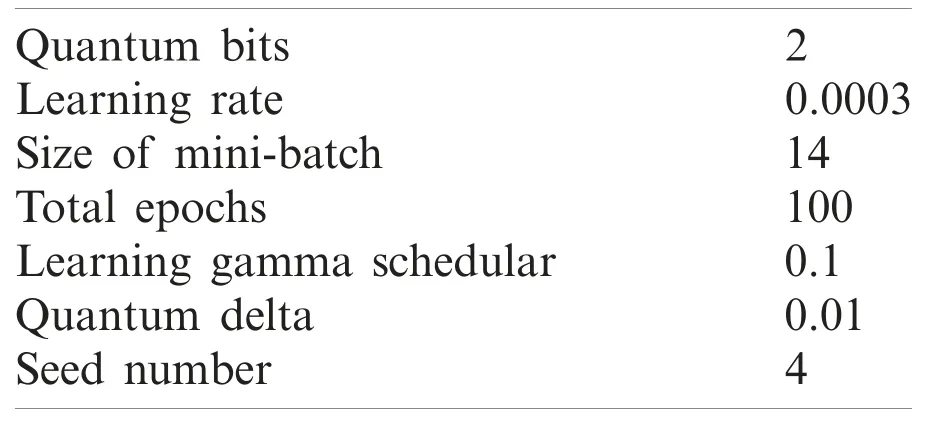

The proposed quantum-convolutional model is trained on a 2-qubit quantum circuit with selected parameters, which are explained in Tab. 3.
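To make the idea of a 2-qubit variational circuit concrete, the sketch below simulates one on a classical state vector. The gate layout (RY angle embedding, one CNOT, one trainable RY layer, Pauli-Z readout) is an assumption made for illustration; the circuit actually used is not specified in the text. It also assumes the ResNet-34 feature vector has already been reduced to two angles, for example by a linear layer.

```python
import math

def apply_ry(state, theta, qubit):
    """Apply an RY(theta) rotation to one qubit of a real 2-qubit state."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    bit = 1 << (1 - qubit)  # qubit 0 is the most significant bit
    new = list(state)
    for i in range(4):
        if not i & bit:
            a0, a1 = state[i], state[i | bit]
            new[i] = c * a0 - s * a1
            new[i | bit] = s * a0 + c * a1
    return new

def apply_cnot(state):
    """CNOT with control qubit 0 and target qubit 1: swaps |10> and |11>."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def expval_z(state, qubit):
    """Expectation value of Pauli-Z on the given qubit."""
    bit = 1 << (1 - qubit)
    return sum((-1 if i & bit else 1) * a * a for i, a in enumerate(state))

def circuit(features, weights):
    """Embed two features, entangle, apply trainable rotations, measure Z."""
    state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
    for q in range(2):
        state = apply_ry(state, features[q], q)  # angle embedding (assumed)
    state = apply_cnot(state)
    for q in range(2):
        state = apply_ry(state, weights[q], q)   # trainable layer (assumed)
    return [expval_z(state, q) for q in range(2)]

# Hypothetical inputs: two reduced features and two trainable angles.
print(circuit(features=[0.3, 1.2], weights=[0.1, -0.4]))
```

The two expectation values lie in [-1, 1] and would feed the final classification layer. Because only RY and CNOT gates appear, all amplitudes stay real, which keeps the simulation short.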

3.3.2 Classification of the Malaria Cells Using Convolutional Neural Network

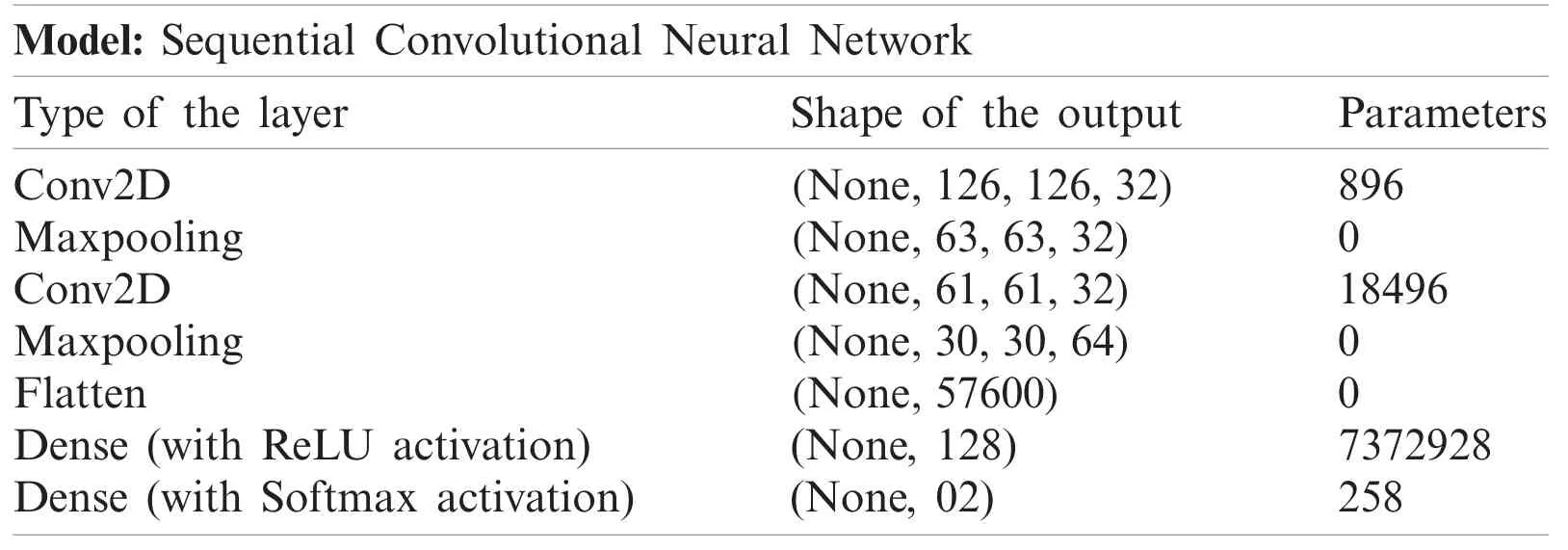

To classify the segmented lesion images into their associated classes, a new CNN model is developed that comprises seven layers: two 2D convolutional layers, two max-pooling layers, one flatten layer, and two dense layers with ReLU and softmax activations. A detailed model description is given in Tab. 4.
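The way feature-map sizes shrink through a seven-layer stack of this kind can be traced with simple shape arithmetic. The layer order, input size, kernel sizes, and unit counts below are hypothetical, since the text gives only the layer types; the sketch shows the bookkeeping, not the authors' configuration.

```python
def conv2d_shape(shape, kernel, filters):
    """Output shape of an unpadded, stride-1 2D convolution."""
    h, w, _ = shape
    return (h - kernel + 1, w - kernel + 1, filters)

def maxpool_shape(shape, size=2):
    """Output shape of non-overlapping max pooling."""
    h, w, c = shape
    return (h // size, w // size, c)

def flatten_shape(shape):
    """Collapse height, width, and channels into one feature vector."""
    h, w, c = shape
    return (h * w * c,)

# Hypothetical configuration: 64 x 64 RGB input, 3 x 3 kernels, 32/64 filters.
shape = (64, 64, 3)
layers = [
    ("conv2d_1", lambda s: conv2d_shape(s, kernel=3, filters=32)),
    ("maxpool_1", maxpool_shape),
    ("conv2d_2", lambda s: conv2d_shape(s, kernel=3, filters=64)),
    ("maxpool_2", maxpool_shape),
    ("flatten", flatten_shape),
    ("dense_relu", lambda s: (128,)),
    ("dense_softmax", lambda s: (2,)),  # infected / uninfected
]
trace = []
for name, fn in layers:
    shape = fn(shape)
    trace.append((name, shape))
    print(f"{name:14s} -> {shape}")
```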

Table 2: Proposed quantum-convolutional model

Table 3: Learning parameters of the quantum-convolutional model

Table 4: Layered architecture of the proposed CNN model

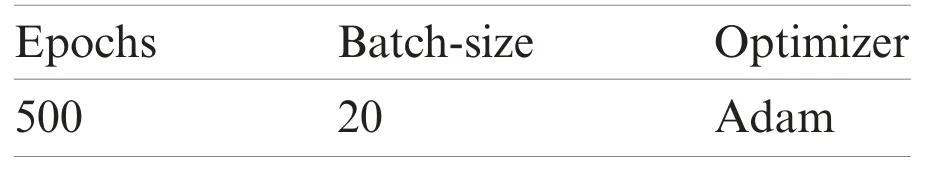

The model is trained using the learning parameters listed in Tab. 5.

Table 5: Learning parameters of the proposed CNN model

Tab. 5 shows the parameters of the proposed CNN model: 500 epochs, a batch size of 20, and the Adam optimizer are used for malaria classification. These learning parameters provide significant improvements in model training, ultimately increasing the testing accuracy.

4 Benchmark Dataset

The malaria benchmark dataset contains two classes [63]. The description of the dataset is presented in Tab. 6. The proposed model was trained on five-, ten-, and fifteen-fold cross-validation for malaria classification.
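The k-fold protocol can be sketched as follows: the sample indices are shuffled once, split into k folds, and each fold serves as the test set exactly once. The sample count and seed are hypothetical, and the sketch does not stratify by class.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k nearly equal folds
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test

# Hypothetical dataset of 30 images, split with the fold counts named above.
for k in (5, 10, 15):
    splits = list(k_fold_indices(30, k))
    print(k, [len(test) for _, test in splits])
```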

Table 6: Malaria images for classification

4.1 Experimentation

In this study, three experiments were implemented for malaria cell classification in terms of metrics such as precision, sensitivity, and specificity. In the first experiment, the input malaria images were localized using the improved FRCNN model. In the second experiment, the localized images were segmented and transferred to the proposed CNN model. Similarly, in the third experiment, classification was performed using the quantum-convolutional model.

4.2 Experiment#1: Localization of Malaria Images Using Improved FRCNN Model

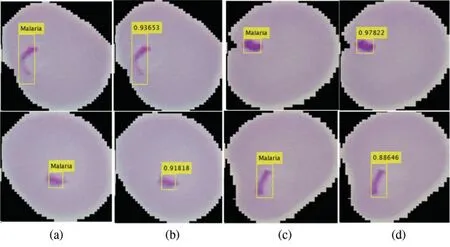

The performance of the localization model is computed using measures such as precision and IoU, as given in Tab. 7. The localization outcomes with the predicted scores are shown in Fig. 5.

Tab. 7 shows the localization results, where the method achieved 0.98 IoU and 0.96 precision scores.
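IoU (intersection over union) measures how well a predicted box overlaps the ground-truth box: the area of their intersection divided by the area of their union. The following is a minimal sketch for axis-aligned boxes; the coordinates are made-up values.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped at zero when boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

# Made-up boxes: the overlap is 25 and the union is 175.
print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```

A value of 1.0 means the boxes coincide and 0.0 means they do not overlap.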

Figure 5: Localization outcomes (a) (c) localized malaria region (b) (d) predicted malaria scores

4.3 Experiment#2: Classification of Malaria Images Using the Proposed CNN Model

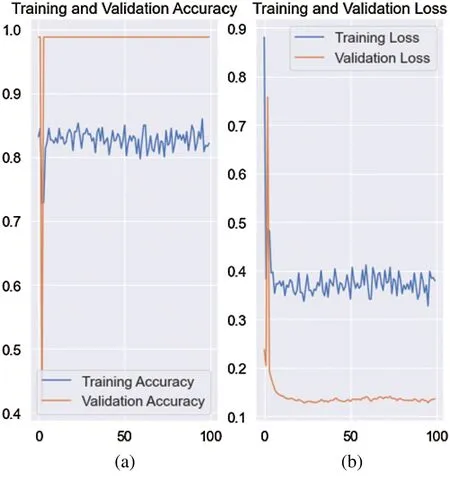

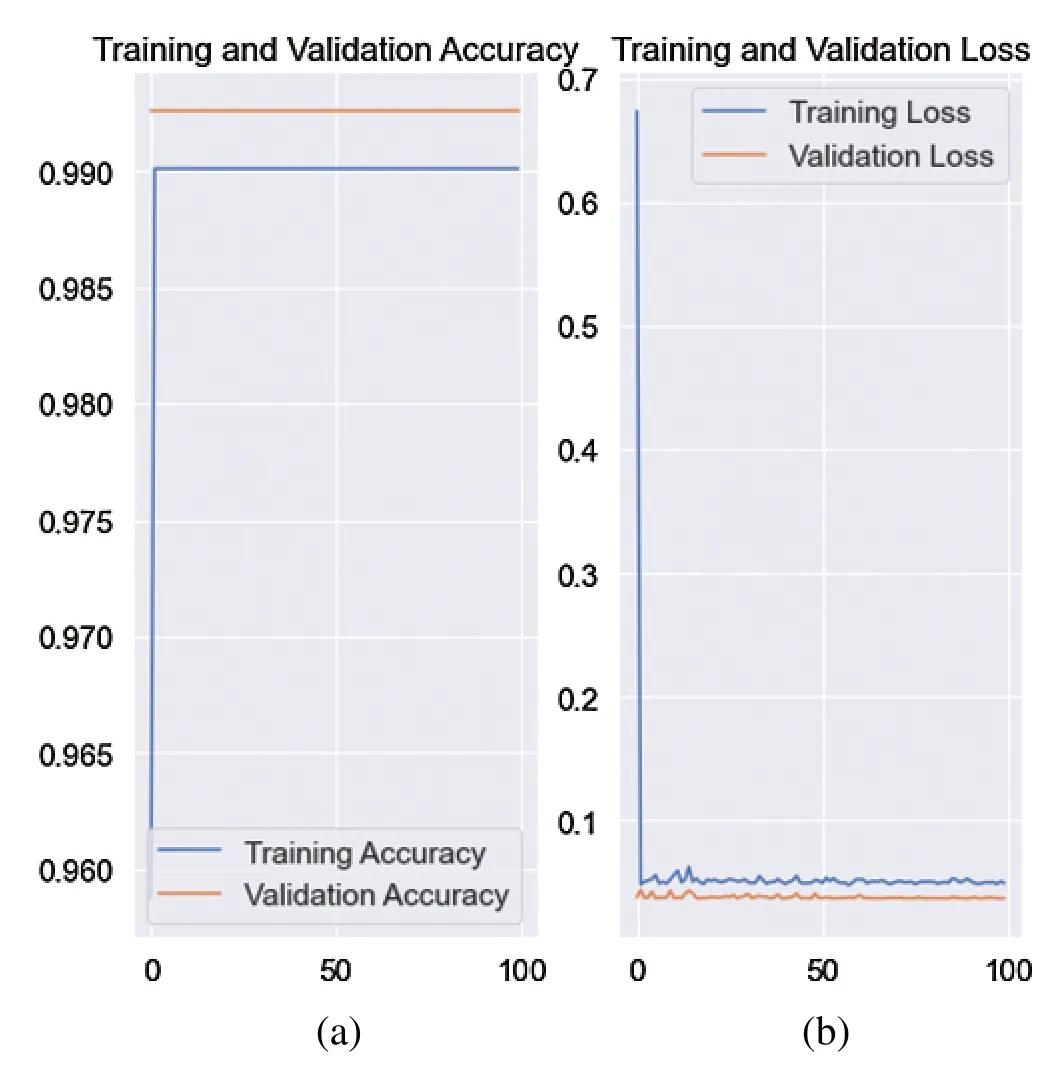

In this experiment, classification was performed on segmented images using the CNN model. The proposed model is trained on different proportions of training and testing images, such as 0.5 and 0.7 cross-validation, as shown in Fig. 6. The quantitative results are presented in Tab. 8.

Figure 6: Training and validation accuracy with loss rate (a) accuracy (b) loss rate

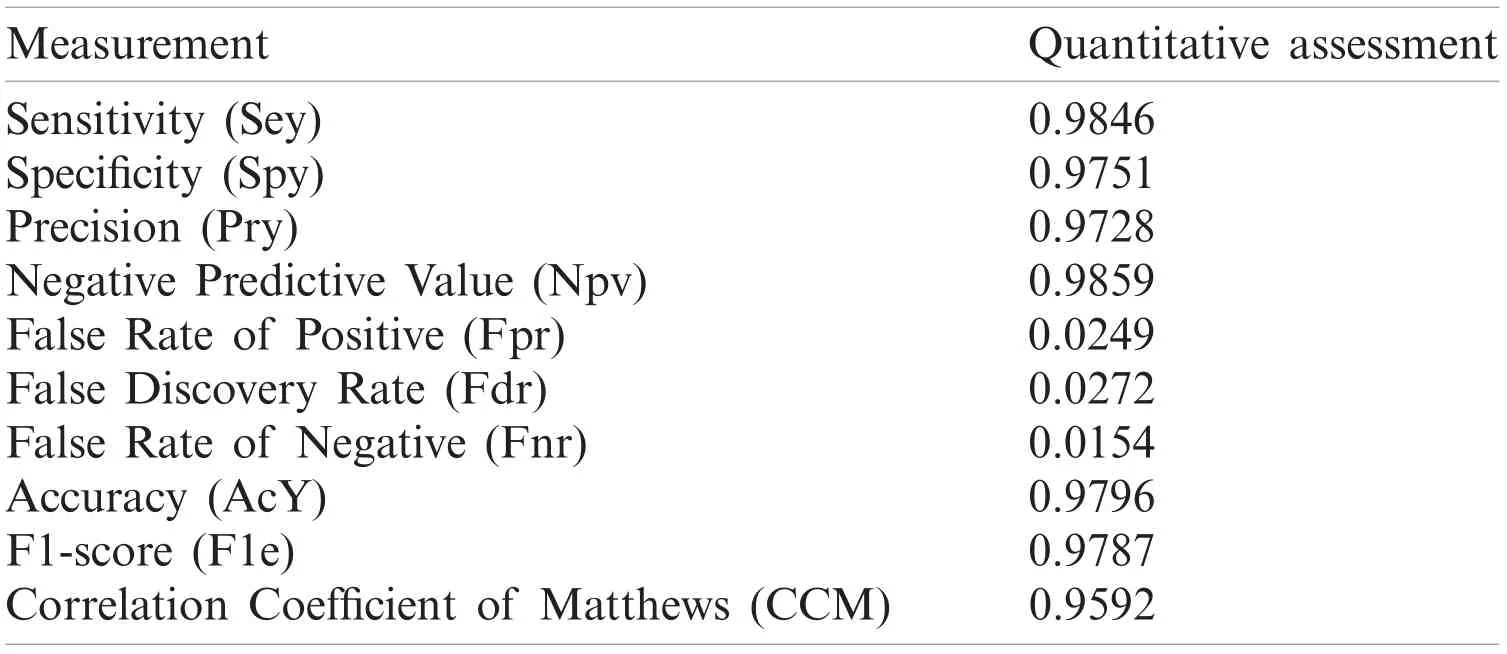

The results in Tab. 8 show that the proposed technique attained scores of 0.9846, 0.9751, 0.9728, 0.9859, 0.0249, 0.0272, 0.0154, 0.9796, 0.9787, and 0.9592 for Sey, Spy, Pry, Npv, Fpr, Fdr, Fnr, AcY, F1e, and CCM, respectively. The classification outcomes for the 0.7 cross-validation are stated in Tab. 9.
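These measures all derive from the four confusion-matrix counts. The sketch below assumes the abbreviations stand for sensitivity, specificity, precision, negative predictive value, false positive rate, false discovery rate, false negative rate, accuracy, F1 score, and Matthews correlation coefficient. That reading is consistent with the reported values (for example, Fnr = 1 − Sey), but it is an interpretation. The counts are hypothetical.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Derive common binary-classification measures from confusion counts."""
    sens = tp / (tp + fn)   # sensitivity (recall)
    spec = tn / (tn + fp)   # specificity
    prec = tp / (tp + fp)   # precision
    npv = tn / (tn + fn)    # negative predictive value
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": sens,
        "specificity": spec,
        "precision": prec,
        "npv": npv,
        "fpr": 1 - spec,
        "fdr": 1 - prec,
        "fnr": 1 - sens,
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "f1": 2 * tp / (2 * tp + fp + fn),
        "mcc": (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0,
    }

# Hypothetical counts, not taken from the paper's tables.
metrics = binary_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name:12s} {value:.4f}")
```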

Table 8: Classification results on 0.5 hold validation using proposed CNN model

Table 9: Infected and uninfected cells classification on 0.7 separability criteria using proposed CNN model

On the 0.7 cross-validation, the model achieved an AcY of 0.9884 and an Fpr of 0.0121. The proposed model achieved 0.980 accuracy on the 0.5 and 0.985 accuracy on the 0.7 separability criteria of the training and testing images.

4.4 Experiment#3: Classification Outcomes Using the Quantum-Convolutional Model

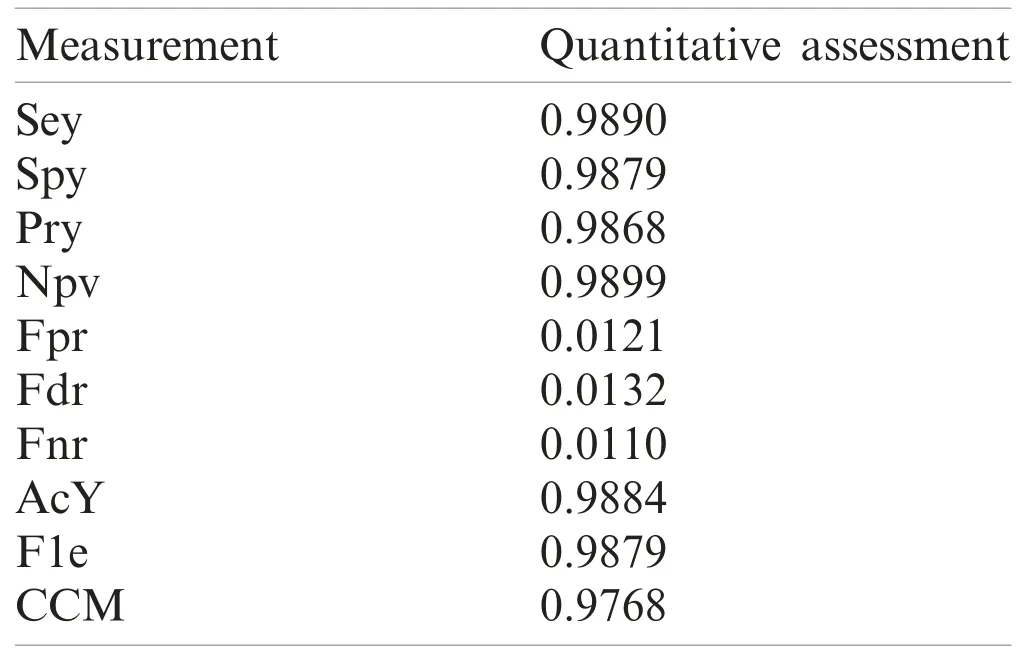

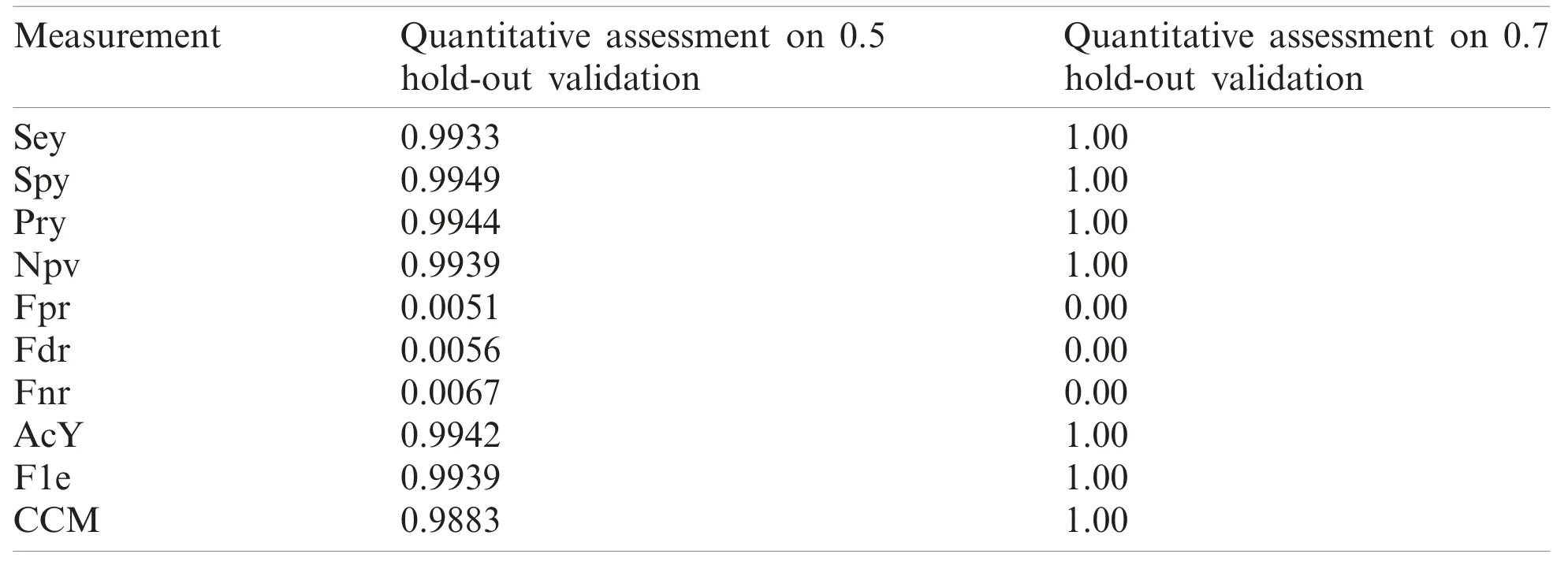

The efficiency of the classification model was calculated using a variety of performance metrics. The accuracy and loss rate of the training with respect to validation are graphically shown in Fig. 7. A numerical assessment of the outcomes is presented in Tab. 10.

Figure 7: Training accuracy with loss rate on quantum-convolutional model (a) accuracy of validation (b) loss rate of the validation

Table 10: Quantitative outcomes using quantum-convolutional model

The model achieved 0.9942 AcY and 0.9883 CCM on a 0.5 hold validation. The classification results for the 0.7 hold validation are listed in Tab. 10.

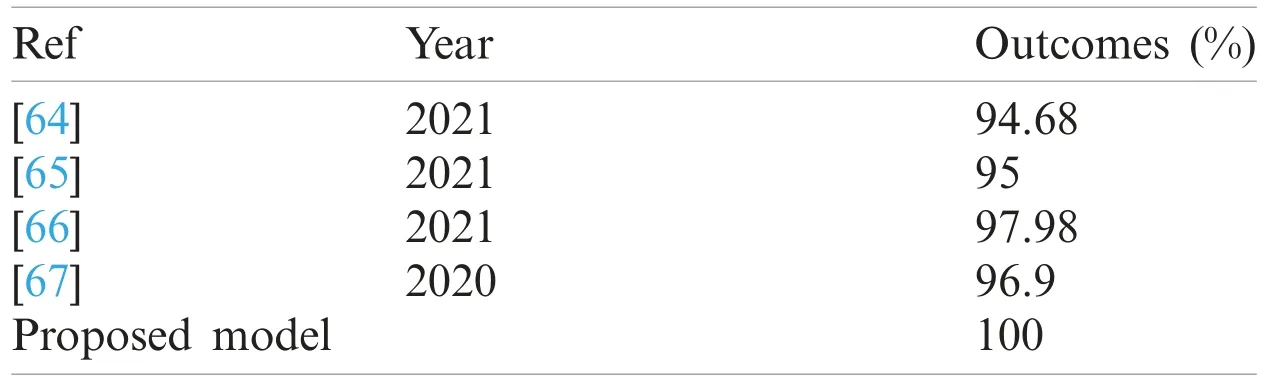

The results in Tab. 10 show that the method achieved a 1.00 score. Finally, the computation results show that the quantum-convolutional model achieved a better outcome than the convolutional model. A comparison is presented in Tab. 11.

The proposed technique outcomes are compared to existing works such as [64-67]. The capsule network has been utilized for discrimination among infected/uninfected cells of malaria with 96.9% accuracy [67]. However, the proposed quantum-convolutional model achieved 100% accuracy.

Table 11: Proposed results comparison

The existing methods used feature extraction with a classifier for malaria classification. In this study, however, the classification of malaria cells was evaluated using a variety of measures.

5 Conclusion

Malaria parasite detection is a great challenge because malaria cells are noisy and exhibit large variations in shape and size. Therefore, this study investigated an improved framework for detection and classification. Malaria parasite cells were localized using an improved FRCNN model, which achieved a 0.96 precision score. The localized cells were then segmented using a histogram-based thresholding approach and passed to two classification models: the CNN and the quantum-convolutional model. The proposed CNN model achieved an accuracy of 0.98 on 0.7 hold and 0.97 on 0.5 hold validation, whereas the quantum-convolutional model obtained 0.99 and 1.00 accuracy on the 0.5 and 0.7 hold validation strategies, respectively.

Funding Statement: This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. NRF-2021R1A2C1010362) and the Soonchunhyang University Research Fund.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.
