MLA:A New Mutated Leader Algorithm for Solving Optimization Problems

2022-03-14FatemehAhmadiZeidabadiSajjadAmiriDoumariMohammadDehghaniZeinabMontazeriPavelTrojovskandGauravDhiman

Fatemeh Ahmadi Zeidabadi,Sajjad Amiri Doumari,Mohammad Dehghani,Zeinab Montazeri,Pavel Trojovskýand Gaurav Dhiman

1Department of Mathematics and Computer Sciences,Sirjan University of Technology,Sirjan,Iran

2Graduate of Department of Electrical and Electronics Engineering,Shiraz University of Technology,Shiraz,Iran

3Department of Electrical and Electronics Engineering,Shiraz University of Technology,Shiraz,Iran

4Department of Mathematics,Faculty of Science,University of Hradec Králové,500 03,Hradec Králové,Czech Republic

5Department of Computer Science,Government Bikram College of Commerce,Patiala,Punjab,India

Abstract:Optimization plays an effective role in various disciplines of science and engineering.Optimization problems should either be optimized using the appropriate method (i.e., minimization or maximization).Optimization algorithms are one of the efficient and effective methods in providing quasioptimal solutions for these type of problems.In this study, a new algorithm called the Mutated Leader Algorithm(MLA)is presented.The main idea in the proposed MLA is to update the members of the algorithm population in the search space based on the guidance of a mutated leader.In addition to information about the best member of the population,the mutated leader also contains information about the worst member of the population, as well as other normal members of the population.The proposed MLA is mathematically modeled for implementation on optimization problems.A standard set consisting of twenty-three objective functions of different types of unimodal,fixed-dimensional multimodal, and high-dimensional multimodal is used to evaluatethe abilityof the proposed algorithmin optimization.Also,the results obtained from the MLA are compared with eight well-known algorithms.The results of optimization of objective functions show that the proposed MLA has a high ability to solve various optimization problems.Also, the analysis and comparison of the performance of the proposed MLA against the eight compared algorithms indicates the superiority of the proposed algorithm and ability to provide more suitable quasi-optimal solutions.

Keywords: Optimization; metaheuristics; leader; benchmark; objective function

1 Introduction

Nowadays, by enhancing information technology, various number of optimization problems arises in several fields such as bioinformatics, operation research, geophysics, and engineering etc [1,2].Hence, optimization has become a fundamental issue that plays a remarkable role in a wide range of programs and daily life.The simple concept of optimization is a method for obtaining the favorable solution for a problem by optimizing (i.e., minimizing or maximizing)a function in terms of some variables.In general, optimization problems consist of three main parts, including objective function, constraints, and optimization variables [3].Generally, methods for solving optimization problems can be divided into two categories, included deterministic and stochastic methods [4].

Deterministic methods, which are gradient-based methods and depending on the nature of the equations and variables, i.e., Simplex, Branch, Bound, Non-linear methods, etc.Deterministic algorithms are able to precisely find the optimal solutions, but they are not suitable for complicated and intractable problems, and their responding time grows exponentially in such complicated problems [5].Unfortunately, most real-life optimization problems include large solution space, non-convex, and non-linear objective functions and constraints.Such optimization problems classified as NP-hard problems and have high computational complexity that couldn’t be solved in a polynomial time and complicated in nature.Should be noted that mathematical methods are only effective in solving small-scale problems, and not efficient and cost-effective for solving complex problems [5,6].To tackle this problem, various approximation methods were proposed by the researchers that able to obtain an acceptable solution in a sensible time.

Compared to the gradient-based methods, they are less likely to be trapped in local optima,the gradient of the objective function is not required and can be used in high-complex and highdimensional problems.Other main advantages of these algorithms can be mentioned as:

Being severely robust, having a high chance to find global or a near-optimum within a reasonable time, being easy to implement, and being well suitable for the discrete type of optimization problems.The big disadvantage related to these algorithms is high computational complexity, week constraint-handling capability, problem-specific parameter tuning, and limited problem size [7].

These stochastic methods can be categorized into two general groups: heuristics and metaheuristics.The base of stochastic methods is repetition, in which they first generate an initial population and in each iteration of the algorithm, they improve their solution until the convergence criterion satisfied [8].

Two major drawbacks of the heuristic algorithms are (1) Stuck with a high probability of local optimum and (2) Severe weakness in practical applications for complex and high dimensional problems [9].Metaheuristic algorithms are presented to overcome these shortcomings associated with heuristic algorithms.The point about stochastic methods and optimization algorithms is that optimization algorithms do not guarantee the proposed solution as a global optimal solution.However, the solutions obtained from the optimization algorithms are close to the global optimal and are therefore called quasi-optimal.For this reason, various optimization algorithms are introduced by scientists to provide better quasi-optimal solutions to optimization problems.In this regard, optimization algorithms are applied in various fields in the literature such as energy [10-13], protection [14], electrical engineering [15-20], and energy carriers [21,22] to achieve the optimal solution.

The contribution and innovative of this study are in introducing a new optimization algorithm called Mutated Leader Algorithm (MLA) in order to solve various optimization problems.Using the mutated leader to update the position of the algorithm population in the search space is the main idea of the proposed MLA.In addition to information about the best member of the population, the mutated leader also contains information about the worst member of the population, as well as ordinary members of the population.The proposed MLA is mathematically modeled and evaluated on a standard set consisting of twenty-three standard objective functions.In addition, the performance of the MLA in solving these objective functions is compared with eight well-known algorithms.

The rest of the article is organized in such a way that in Section 2, a study on optimization algorithms is presented.The proposed MLA is introduced in Section 3.Simulation studies are presented in Section 4.Finally, conclusions and several suggestions for further studies are provided in Section 5.

2 Background

The development of metaheuristic algorithms (such as Particle Swarm Optimization(PSO) [23], Genetic Algorithm (GA) [24], and Gravitation Search Algorithm (GSA) [25]) has attracted more attention from researchers and more emphasis has given to the development of these algorithms.The advantages of metaheuristics algorithms can be mentioned as simplicity and flexibility, avoidance of local optimization, high performance, derivation-free mechanism, and simplicity by nature.Metaheuristic algorithms are basically inspired by real-world phenomena and simple principles in nature to find desired solutions for optimization problems by simulating biological phenomena or physical rules.There are two main categories for Metaheuristic algorithms:evolutionary-based methods and swarm-based techniques.Generally, swarm-based techniques simulate physical phenomena and use mathematical methodologies.On the other hand, 2 originate from the natural process of biological evolution.evolutionary-based methods originate from the process of biological evolution in nature.These methods have attracted more attention from many researchers in the last decades their capability to rapidly explore the feasible space and independence from the nature of the problem.

Based on inspiration source, which is known as one of the most popular classification criteria,optimization algorithms can be categorized into four general categories as (i) swarm-based (SB),(ii) evolutionary-based (EB), (iii) physics-based (PB), and (iv) game-based (GB) methods.

2.1 Physics-Based Algorithms

Physics-based algorithms are a type of algorithm that is inspired by existing physical laws and simulates them.For instance, Simulated Annealing (SA) is inspired by the process of gradual heating and refining of metals [26].Specifically, SA is the metaheuristic algorithm to approximate global solutions in a large search space defined for an optimization problem.This method frequently employed for problems with discrete search space (namely the traveling salesman problem).For problems where finding an approximate global optimum is more significant than obtaining an exact local solution within a specified time, SA may be preferred in comparison to accurate algorithms such as branch and bound or gradient descent and so on.Spring Search Algorithm (SSA)is inspired by Hooke’s law [27].This algorithm can be utilized to solve single-objective constrained optimization problems.The weights that are connected by springs are considered as the search agents of this algorithm in the search space of problem, which according to Hooke’s law, the force of these springs (or equivalently search agents), is proportional to the length of each spring.GSA simulated the law of gravity, mass interactions and motion.A set of masses that interact with each other based on Newtonian gravity force and the laws of motion are considered as searcher agents in this algorithm.This force leads to a global movement of all agents towards objects with weighty masses.Accordingly, masses collaborate using a directly gravitational force.Small World Optimization Algorithm (SWOA) [28], Designed by small-world phenomenon process, Curved Space Optimization (CSO) [29] based on principles of general relativity theory, Ray Optimization(RO) [30] algorithm, Black Hole (BH) [31], Momentum Search Algorithm (MSA) [32], Artificial Chemical Reaction Optimization Algorithm (ACROA) [33], Charged System Search (CSS) [34],Galaxy-based Search Algorithm (GbSA) [35], and Magnetic Optimization Algorithm (MOA) [36]are other examples of this type of algorithms.

2.2 Evolution-Based Algorithms

Evolution-based algorithms are one more type of optimization algorithms that utilize mechanisms inspired by biological evolution, such as mutation, reproduction, selection, and recombination.Algorithms such as GA, Biogeography-based Optimizer (BBO) [37], Differential Evolution(DE) [38], Evolution Strategy (ES) [39], and Genetic Programming (GP) [40] are belong to this group.For instance, GA is one of the well-known evolutionary-based metaheuristic algorithms.This algorithm is inspired by the natural selection process.GA commonly utilized to generate high-quality answers to optimization problems relying on biologically inspired operators namely, mutation, selection, crossover, and so on.BBO is generally employed to optimize multidimensional functions, but it does not apply the gradient of the function, which means that it does not need the function to be differentiable as required by classic optimization algorithms(namely newton and gradient descent algorithms).Hence, BBO can be used on discontinuous functions.Evolutionary Strategy (EA) is inspired by natural selection deals with a division of population-based optimization algorithms.According to natural selection theory individuals with characteristics useful to their survival are able to live through generations and transfer the good features to the next generation.

2.3 Swarm-Based Algorithms

Swarm-based algorithms are another type of optimization algorithm that is the collective intelligence behavior of self-organized and decentralized systems, e.g., artificial groups of simple agents.These algorithms are Inspired by normal processes of plant cycles, insect activities,and animals’social behavior.Examples of Swarm-based algorithms include PSO, Ant Colony Optimization (ACO) [41], Emperor Penguin Optimizer (EPO) [42], Teaching-Learning-Based Optimization (TLBO) [43], Grey Wolf Optimizer (GWO) [44], Following Optimization Algorithm (FOA) [45], Group Mean-Based Optimizer (GMBO) [46], Donkey Theorem Optimization(DTO) [47], Tunicate Swarm Algorithm (TSA) [48], Marine Predators Algorithm (MPA) [49], and Whale Optimization Algorithm (WOA) [50].

For Example, PSO and ACO are inspired by the social movement of the birds and moving ants in order to select the shortest route, respectively.The GWO algorithm simulates the hunting and the leadership hierarchy mechanism of grey wolves in nature.In this regard, four types of grey wolves (namely alpha, beta, delta, and omega) are used to simulate the leadership hierarchy.After that, the three important stages of hunting (i.e., searching, encircling, and attacking to the hunt) are implemented.More specifically, PSO is a meta-heuristic global optimization algorithm based on swarm intelligence.It comes from the investigation on the fish flock and bird movement behavior.This algorithm is frequently used and quickly developed because of its simple implementation and few particles needed to be setting.TLBO includes two main phases: Teacher Phase and Learner Phase, and the mean value of the population was used to update the solution.TLBO algorithm is based on the teaching-learning process in a classroom.In fact, this algorithm is inspired by the effect of a teacher on the performance of learners in a class.EPO is inspired by the social flock behavior of emperor penguins to survive successfully in the depth of the Antarctic winter.this algorithm can be used for both constrained and unconstrained optimization problems.The important steps of EPO are to generate the huddle boundary, compute temperature around the huddle, calculate the distance, and find the effective mover.TSA is a novel metaheuristic algorithm, inspired by the swarm behavior of tunicate during the navigation and foraging process to survive in the depth of the ocean.This method can be used for solving non-linear constrained optimizing problems.WOA mimics the social behavior of humpback whales.This algorithm is based on the bubble-net hunting strategy.

2.4 Game-Based Algorithms

Game-based algorithms were established based on simulating the various individual and group game rules to be used effectively in solving optimization problems.For instance, orientation search algorithm, Hide Objects Game Optimization (HOGO) [51], and Football Game-Based Optimization (FGBO) [52] as an example of Game-based algorithms can be mentioned.More precisely, the Orientation Search Algorithm (OSA) is designed taking into account the law of the orientation game.With this rule, players move on the playing field (i.e., search space) according to the path suggested by the referee [53].FGBO is developed based on simulating the policies and actions of clubs in the football league and population should be updated in four steps: 1) holding the league, 2) training the clubs, 3) transferring the players, and 4) relegation and promotion of the clubs.

3 Mutated Leader Algorithm(MLA)

In this section, the proposed Mutated Leader Algorithm (MLA) is introduced and its mathematical model is presented for use in solving optimization problems.MLA is a population-based method that in a repetition-based process is able to suggest appropriate quasi-optimal solutions to optimization problems.In MLA, a number of random solutions to an optimization problem are first proposed.These solutions are then updated under the guidance of mutated leaders in the search space.After the iterations are over, the MLA offers the best possible solution as a solution to the problem.In designing and modeling the proposed MLA, the following items have been considered:

Each member of the population is actually a vector whose values determine the values of the problem variables.

The member of the population that provides the most appropriate value for the objective function is the best member of the population.

The member of the population that presents the worst value of the objective function is the worst member of the population.

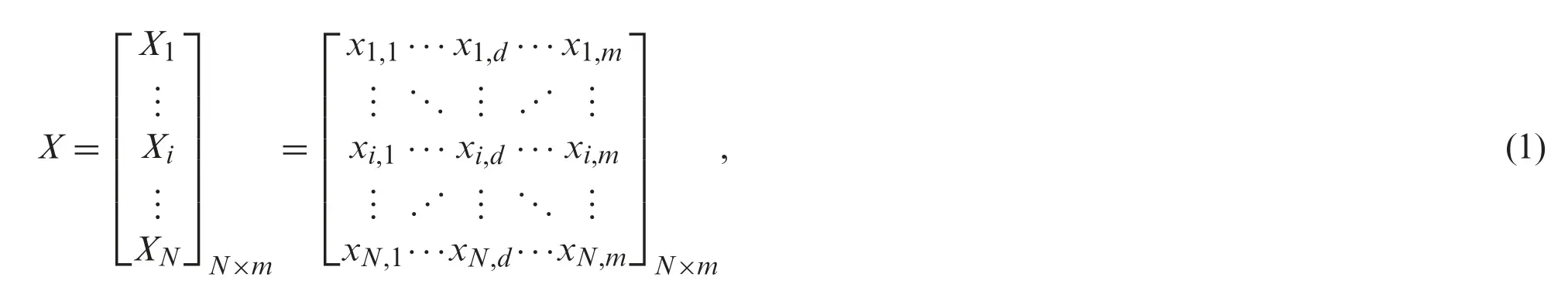

The members of the population in MLA are identified using a matrix called the population matrix in Eq.(1).

whereXis the population matrix of MLA,Xiis theith population member,Nis the number of population members,mis the number of variables,xi,dis thedth variable value suggested by theith population member.

The objective function of the optimization problem can be calculated based on the values suggested by each member of the population.Therefore, the values obtained for the objective function are determined using a vector called the objective function vector in Eq.(2).

whereFis the objective function vector andfiis the objective function value of theith population member.

In each iteration of the algorithm, after calculating the objective function based on population members, the best and worst members of the population are identified.In MLA, a mutated leader is used to update each member of the population.The mutated leader is actually a solution vector whose elements are randomly selected from the best member of the population, the worst member of the population, and other normal members of the population.The mutated leader is constructed for each member of the population and in each iteration of the algorithm based on the mentioned concepts using Eq.(3).

whereMLiis the mutated leader to guide theith population member in search space andmli,dis the itsdth dimension.xbestis the best population member,xworstis the worst population member,xk,dis thedth dimension of thekth population member which is selected randomly,pis a random number with a normal distribution in the range [0-1],Pbis the probability of the best member being elected, andPwis the probability of the worst member being elected as thedth dimension of mutated leader.

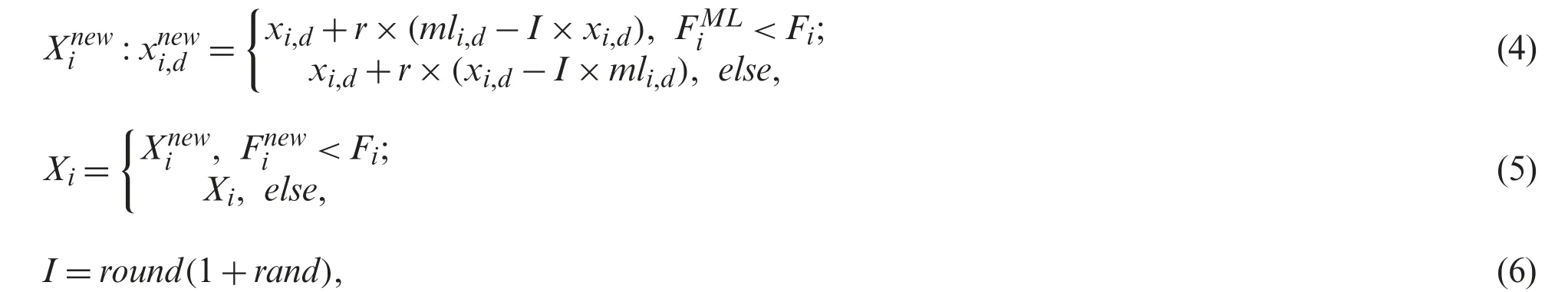

In each iteration of the algorithm, a mutated leader is created for each member of the population.After designing the mutated leader, each member of the population is updated in the search space based on the guidance of their own leader.This concept is simulated using Eqs.(4)-(6).

Well, Mr. Assistant, there must be some mistake. I just fell asleep and stayed on the bus too long. Then the driver made me get off. He wouldn t take me back with him! He talked some nonsense about rules. I m going to call the company and report him!

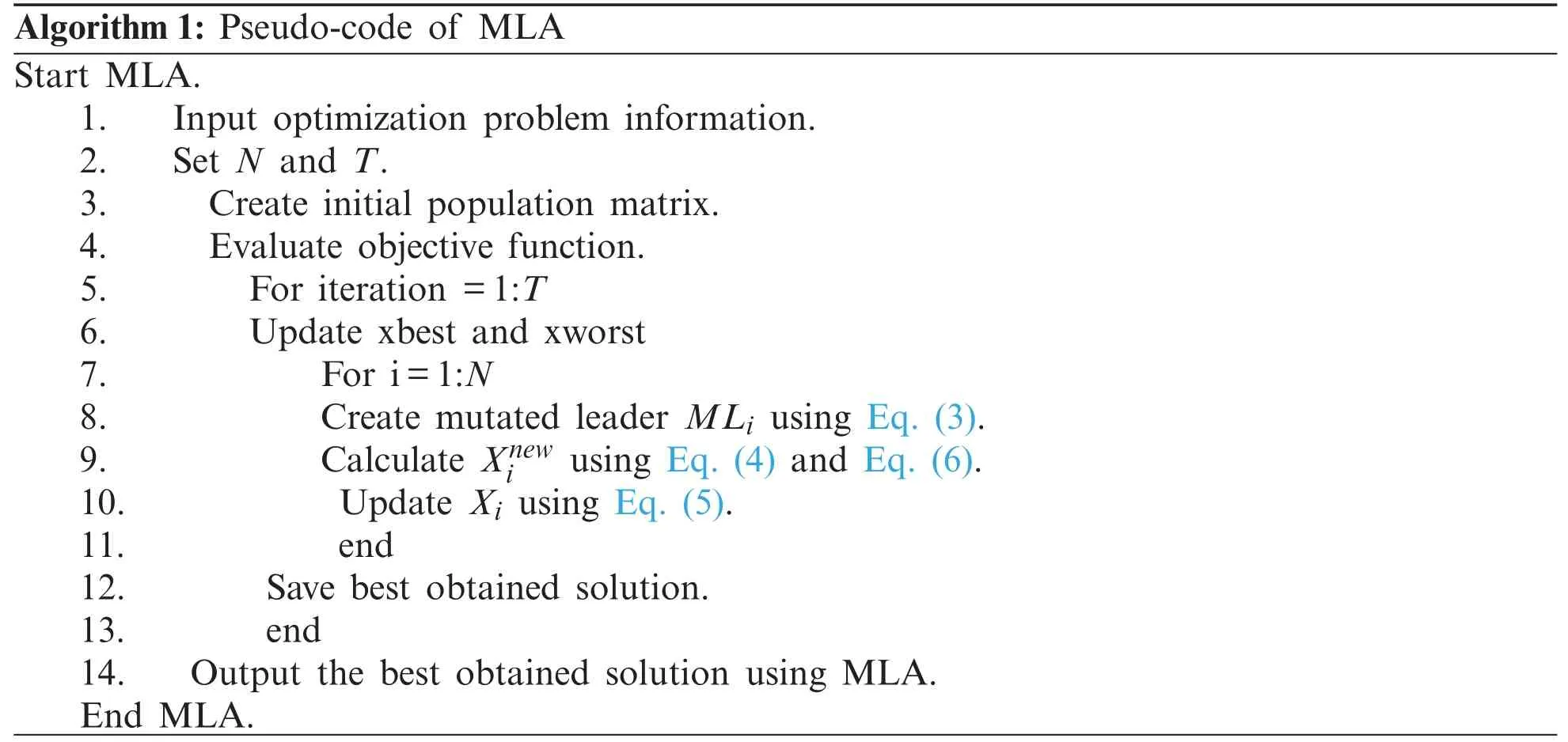

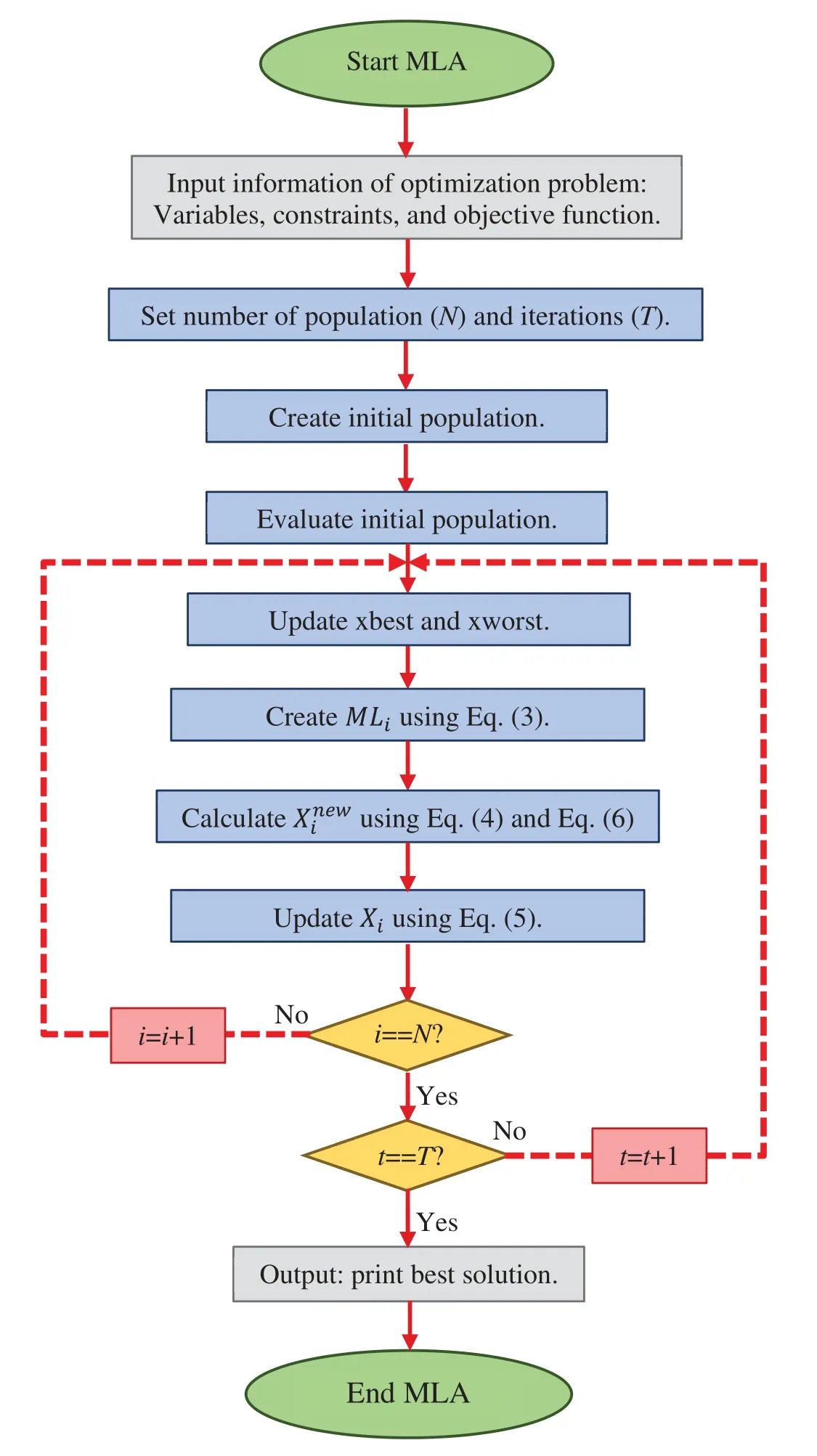

After all members of the population have been updated, the algorithm enters the next iteration.Again, the best member and the worst member are updated based on the new values of the objective function, and then the mutated leaders are created according to Eq.(3).The members of the population are also updated according to Eqs.(4)-(6).This process is repeated until the end of the algorithm execution.After the full implementation of the MLA, the best proposed solution for the considered optimization problem is presented.The pseudo-code of the proposed MLA is presented in Algorithm 1 and its flowchart is shown in Fig.1.

?

4 Simulation Studies

In this section, simulation studies and performance analysis of the proposed MLA in solving optimization problems and quasi-optimal solutions are presented.The capability of the proposed MLA is tested on a standard set of twenty-three objective functions of different types of functions.Information about these standard functions is given in Appendix A in Tabs.A1-A3.Also,the results of the proposed algorithm are compared with the performance of eight optimization algorithms including Particle Swarm Optimization (PSO) [23], Genetic Algorithm (GA) [24],Gravitation Search Algorithm (GSA) [25], Teaching-Learning-Based Optimization (TLBO) [43],Grey Wolf Optimizer (GWO) [44], Tunicate Swarm Algorithm (TSA) [48], Whale Optimization Algorithm (WOA) [50], and Marine Predators Algorithm (MPA) [49].At this stage, each of the optimization algorithms is simulated for 20 independent runs which the stop condition for iterations is reaching the number of iterations of the algorithm to 1000 per run.The results of the implementation of optimization algorithms on the objective functions are reported using the two criteria of the average of the obtained best solutions (ave) and the standard deviation of the obtained best solutions (std).

Figure 1: Flowchart of MLA

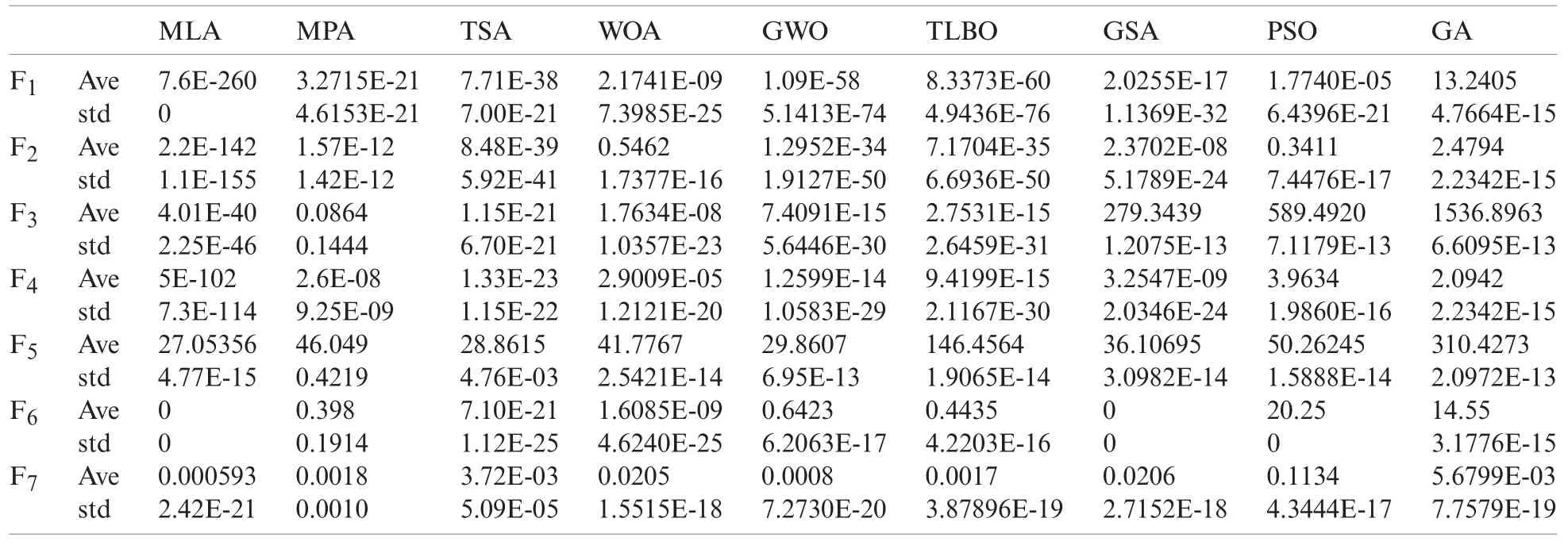

4.1 Evaluation of Unimodal Functions

The first group of functions considered to evaluate the performance of the proposed algorithm is of the type of unimodal functions, including seven functionsF1toF7.The results of optimization ofF1toF7objective functions using the proposed MLA and eight compared algorithms are presented in Tab.1.The proposed MLA is the best optimizer for the objective functions ofF1,F2,F3,F4,F5,F6, andF7by providing more appropriate solutions than the eight compared algorithms.In addition, MLA is able to provide the global optimal solution forF6.Comparison of the performance of optimization algorithms indicates that the proposed MLA has a high ability to solve unimodal optimization problems.

Table 1: Evaluation results of unimodal objective functions

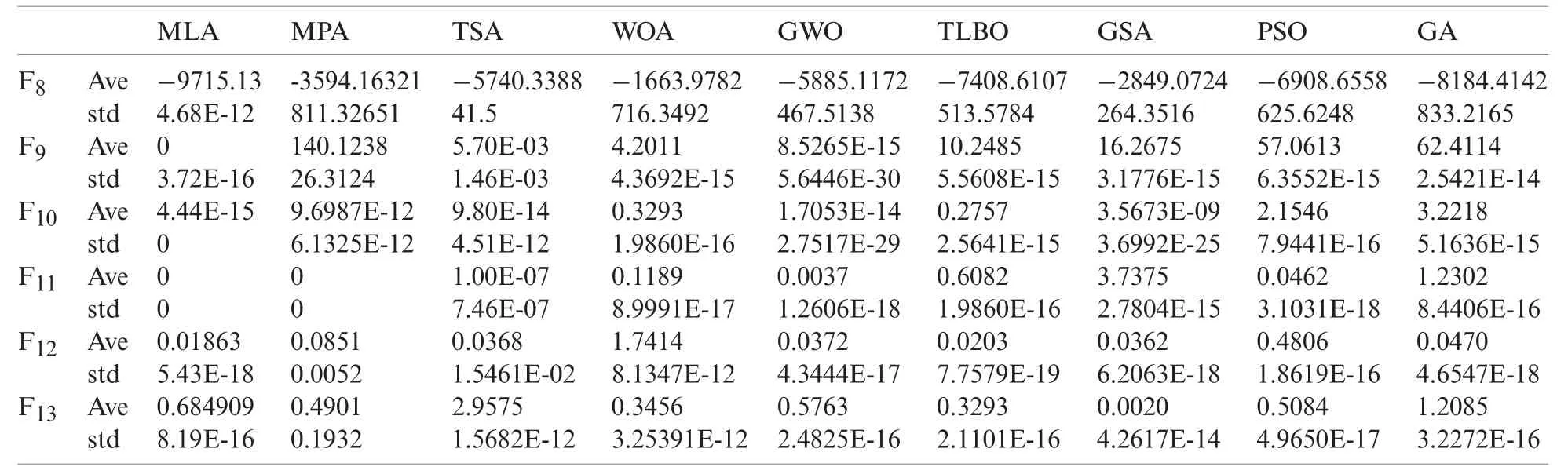

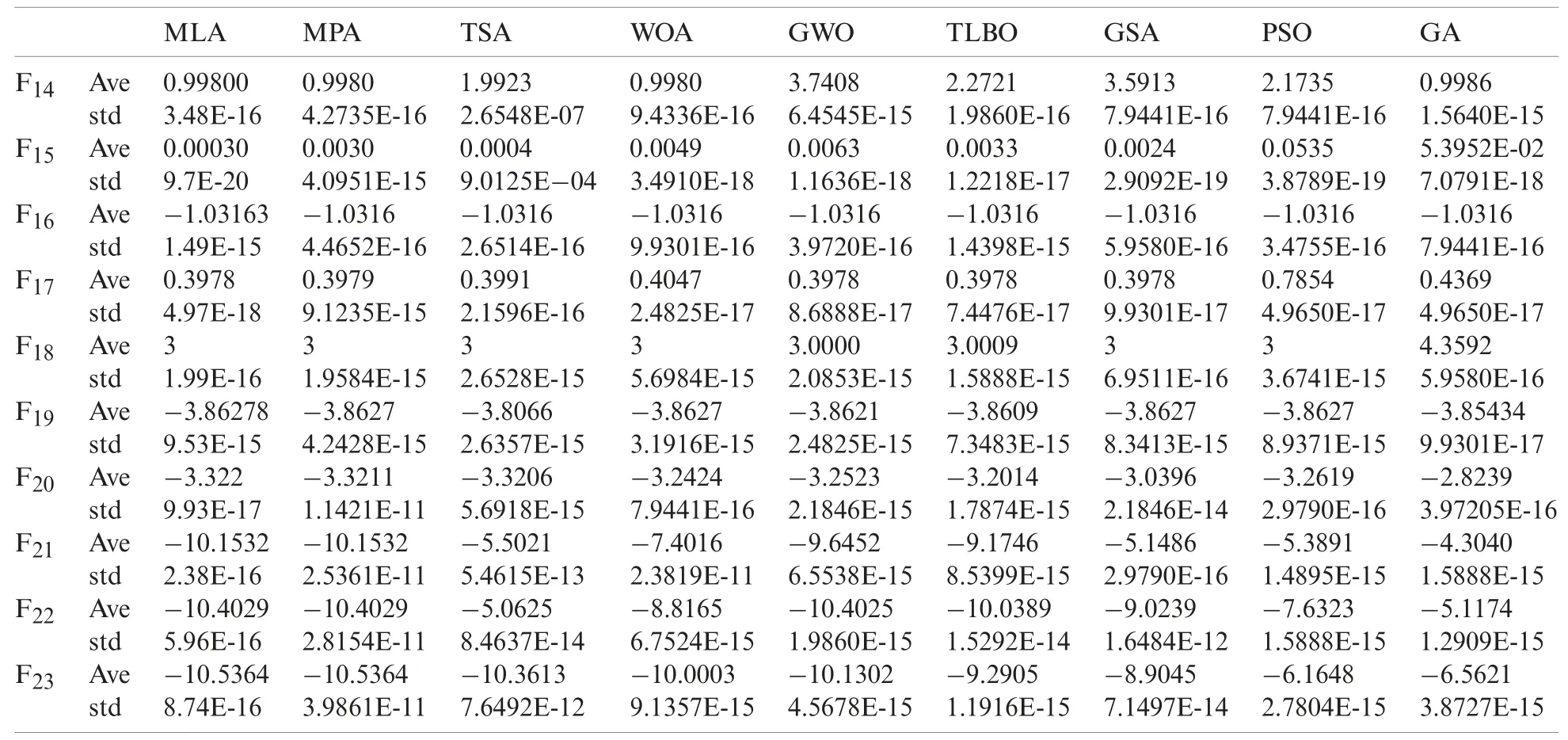

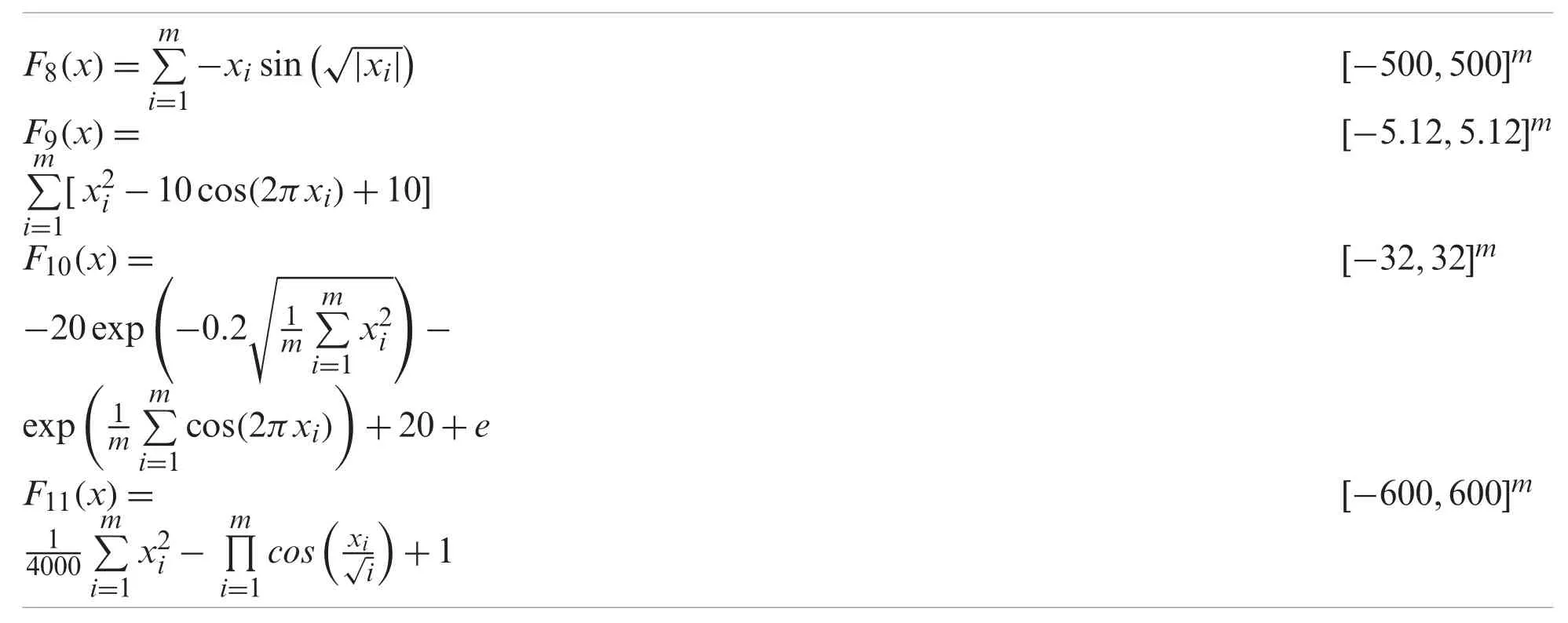

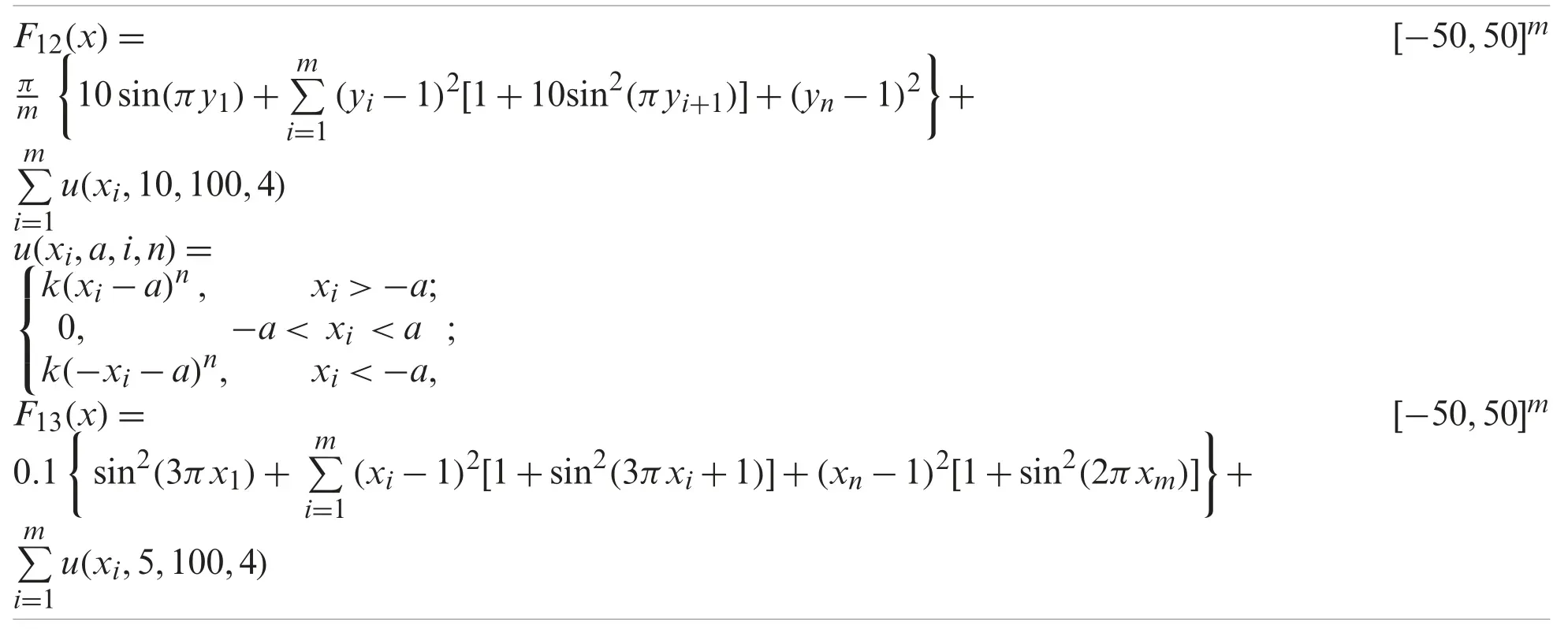

4.2 Evaluation of High-Dimensional Functions

The second group of objective functions intended for analyzing the performance of the proposed MLA is selected from high-dimensional multimodal type, including six functionsF8toF13.The results of the implementation of the MLA and eight compared algorithms on these objective functions are presented in Tab.2.MLA is the best optimizer for the objective functionsF8,F10, andF12.In addition, the proposed MLA is able to provide the global optimal solution forF9andF11.The analysis of the obtained results shows that the proposed MLA has an acceptable ability to optimize high-dimensional multimodal functions.

Table 2: Evaluation results of high-dimensional multimodal objective functions

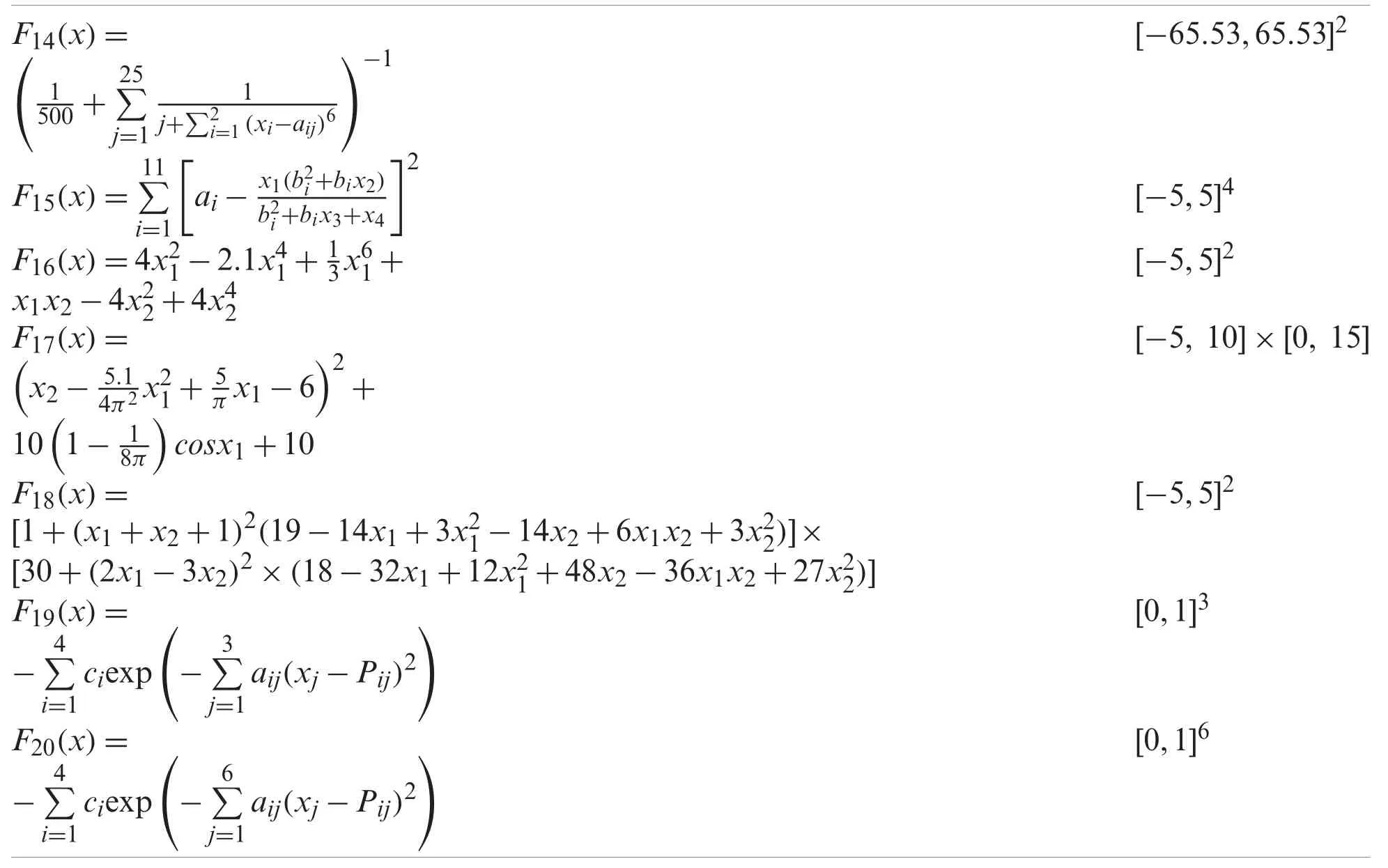

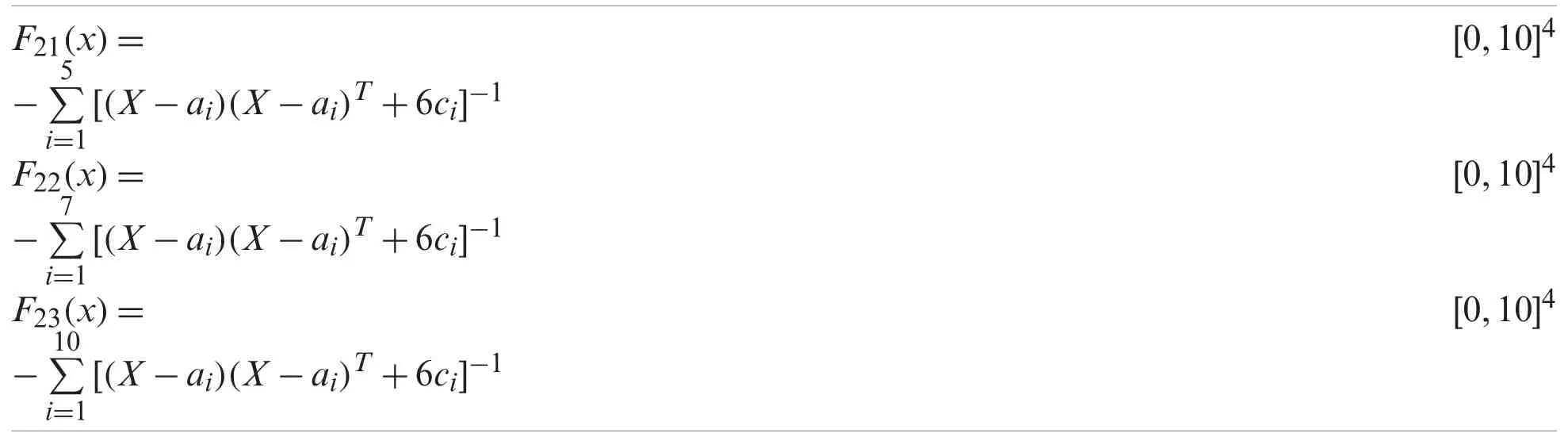

4.3 Evaluation of Fixed-Dimensional Functions

The third group of objective functions is selecte d from fixed-dimensional multimodal type to evaluate the capability of optimization algorithms, including ten functionsF14toF23.The results of optimization of these objective functions using the proposed MLA and eight compared algorithms are presented in Tab.3.The proposed MLA is the best optimizer forF15andF16functions and in other objective functions it has provided quasi-optimal solutions with less standard deviation.Comparison of the performance of the proposed MLA against the eight mentioned algorithms shows that the MLA has a high ability to solve multi-model problems and is more competitive than similar algorithms.

Table 3: Evaluation results of fixed-dimensional multimodal objective functions

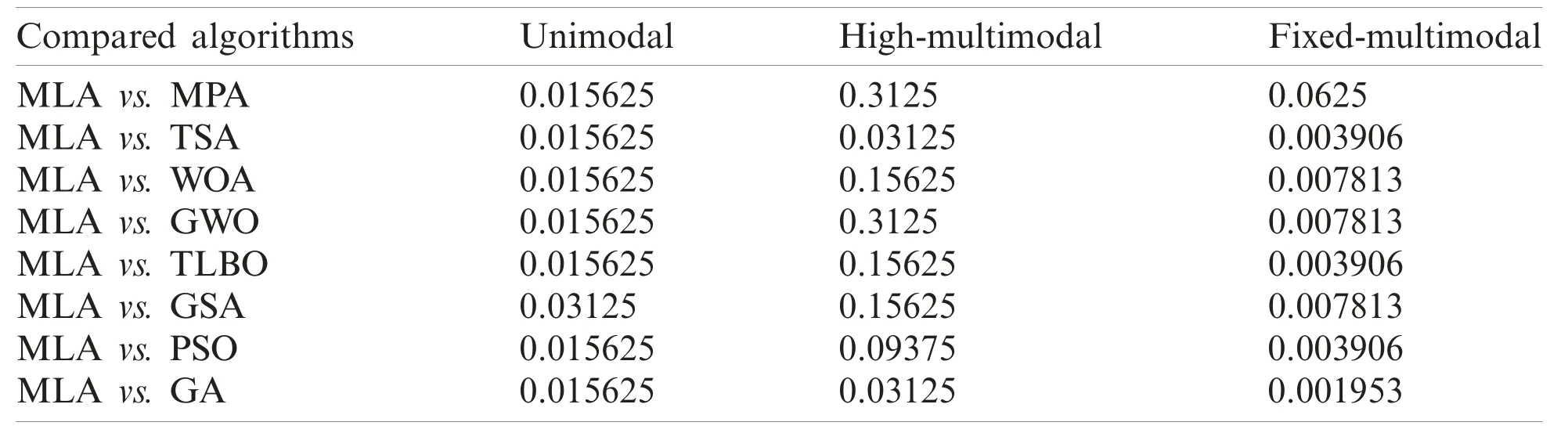

4.4 Statistical Analysis

Presenting the results of implementation of optimization algorithms on optimization problems using two criteria of the average of the best solutions obtained and their standard deviation provides valuable information about the capabilities and quality of optimization algorithms.However,it is possible that even with a very low probability, the superiority of an algorithm occurred by chance.Therefore, in this subsection, in order to further analyze the performance of optimization algorithms, a statistical analysis on the results obtained from them is presented.In this regard,Wilcoxon rank sum test, which is a non-parametric statistical test, is used to specify whether the results obtained by the proposed MLA are different from compared eight algorithms in a statistically significant way.

Ap-value indicates whether the performance difference of the given algorithm is statistically significant or not.The results of the statistical test obtained from the Wilcoxon rank sum test are reported in Tab.4.Based on the analysis of the results presented in Tab.4, it is clear that the proposed MLA in cases where thep-value is less than 0.05 is statistically different from the compared algorithm.

Table 4: Obtained results from the Wilcoxon rank sum test (p ≥0.05)

4.5 Sensitivity Analysis

In this subsection, the sensitivity analysis of the proposed MLA to two parameters of maximum number of iterations and number of population members is discussed.

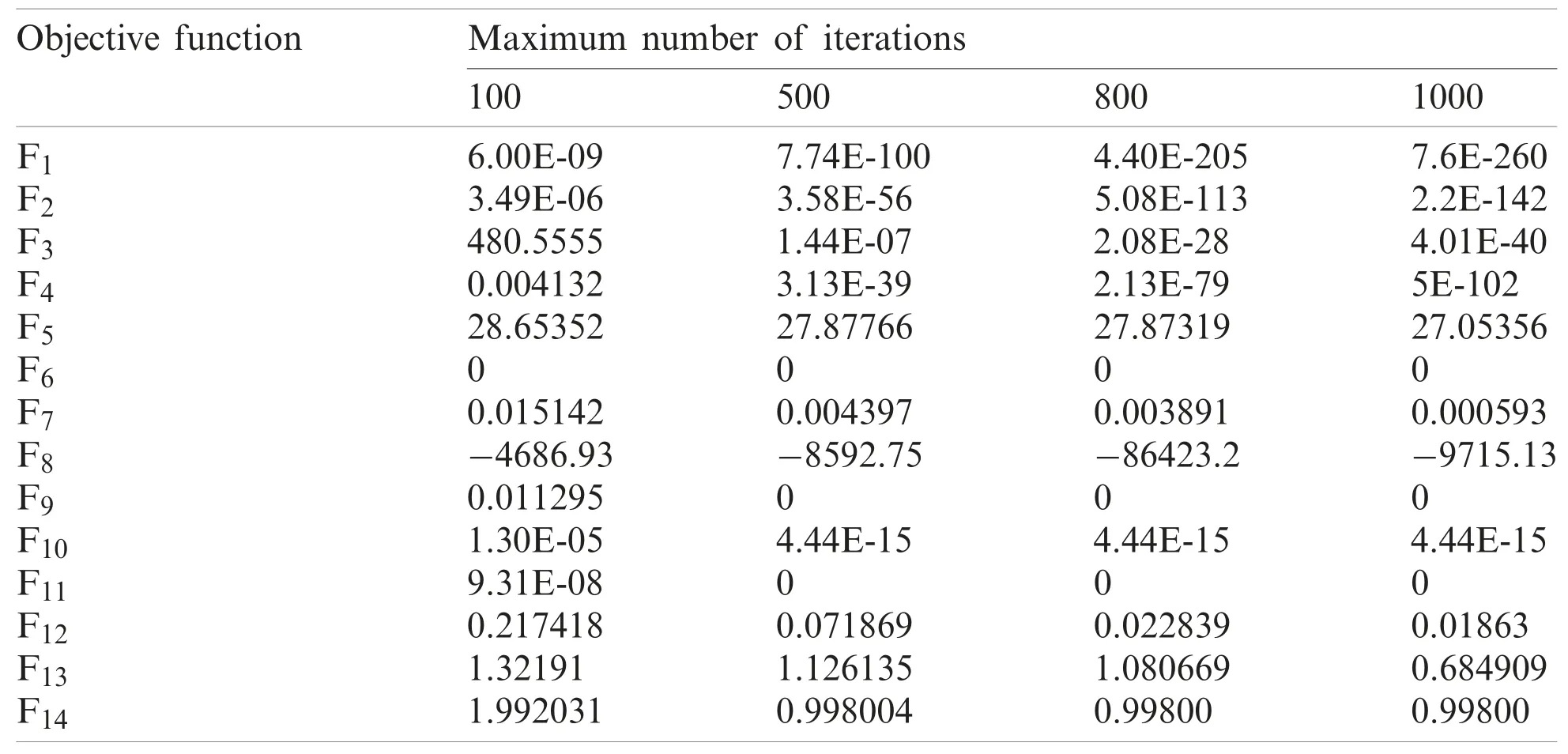

In the first stage, in order to analyze the sensitivity of the proposed MLA to the maximum number of iterations, the MLA in independent performances for the number of iterations of 100,500, 800, and 1000 is implemented on twenty-three objective functionsF1toF23.The results of this simulation for different number of iterations and different functions are reported in Tab.5.Based on the results obtained from this analysis, it is clear that with increasing the maximum number of iterations, the proposed MLA converges to more appropriate solutions and the values of the objective functions are reduced.

Table 5: Results of the algorithm sensitivity analysis to the maximum number of iterations

(Continued)

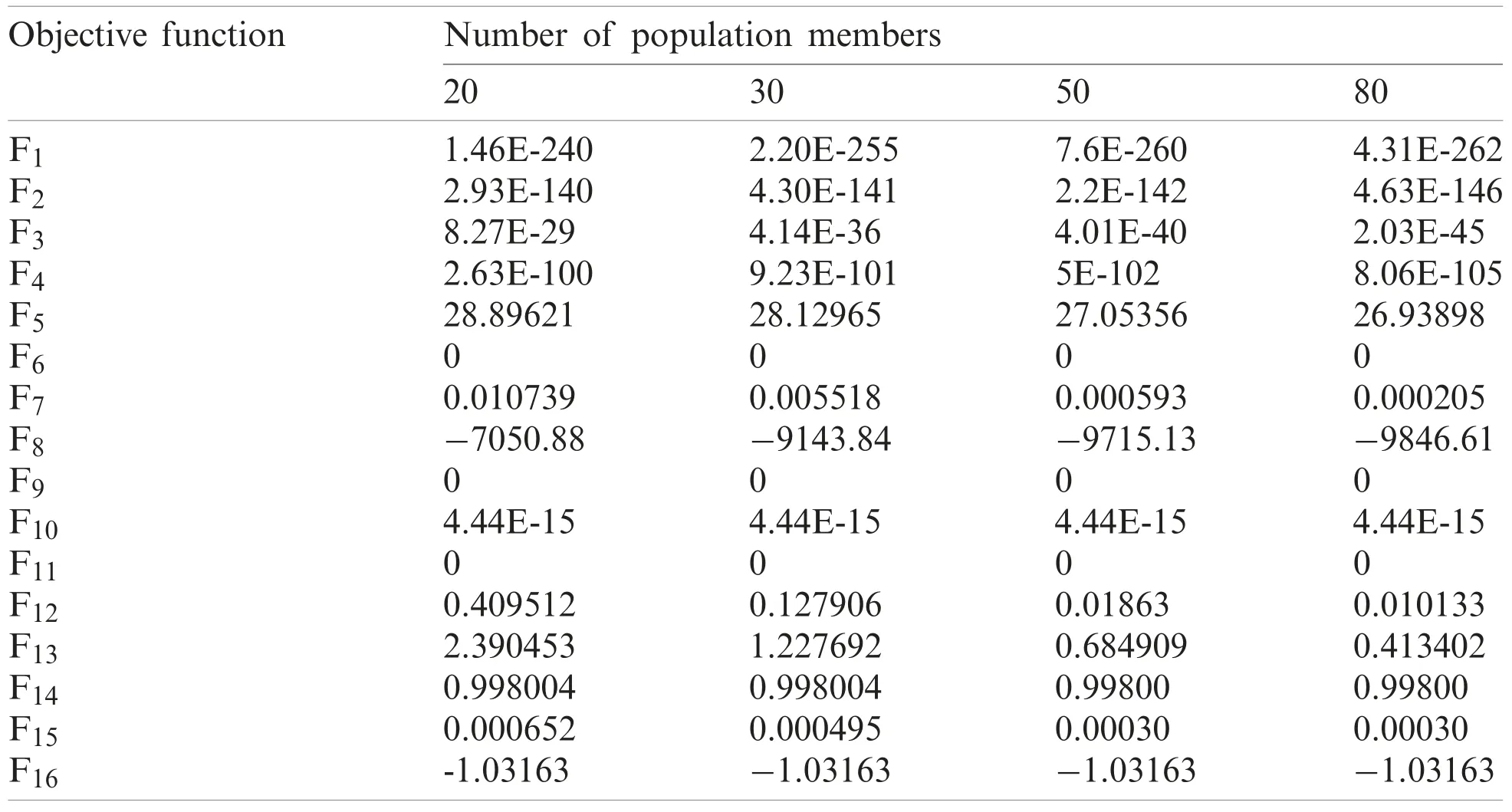

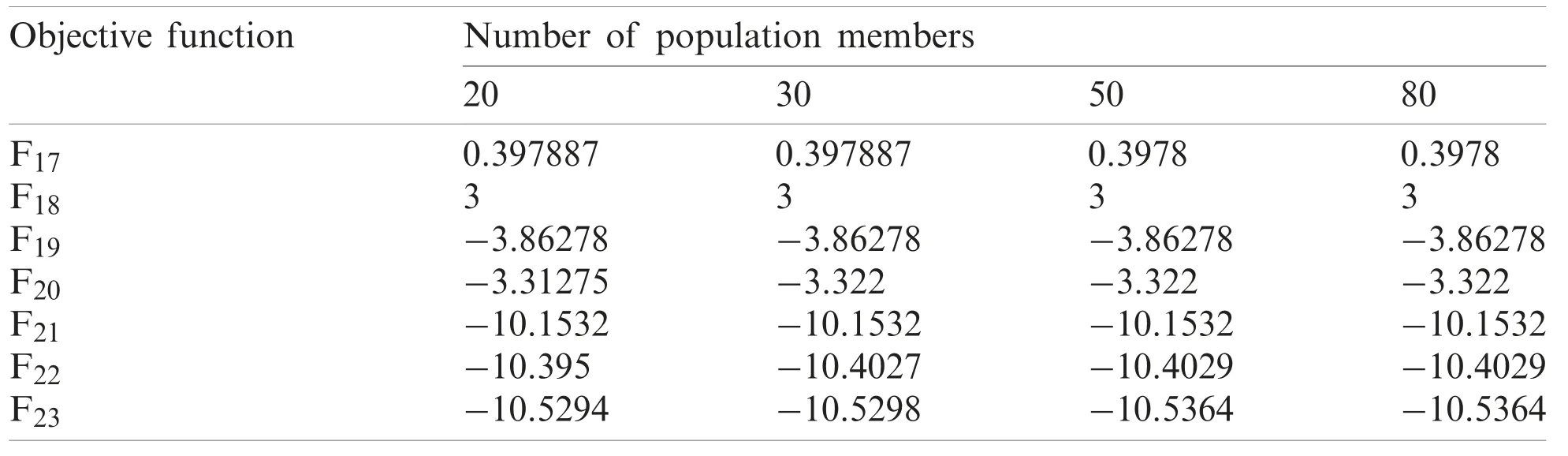

In the second stage, in order to analyze the sensitivity of the proposed MLA to the number of population members, the proposed algorithm in independent performances for different populations with 20, 30, 50, and 80 members is implemented on twenty-three objective functionsF1toF23.The results of the sensitivity analysis of the MLA to the number of population members are presented in Tab.6.What can be deduced from the simulation results of this analysis is that as the population members increase, the values of the objective functions decrease.

Table 6: Results of the algorithm sensitivity analysis to the number of population members

(Continued)

Table 6: continued

5 Conclusions and Future Studies

Numerous optimization problems in science must be solved using appropriate methods.Optimization algorithms is one of the problem-solving methods from the group of stochastic methods that is able to provide appropriate solutions to optimization problems.In this paper,a new optimization algorithm called Mutated Leader Algorithm (MLA) was introduced and designed to solve optimization problems.Updating and guiding members of the population in the problem-solving space based on mutated leaders was the main idea in the proposed MLA design.The proposed MLA was mathematically modeled and its ability to solve optimization problems on twenty-three standard objective functions of various types was evaluated.Also, the results obtained from the MLA were compared with the results of eight algorithms named Genetic Algorithm (GA), Particle Swarm Optimization (PSO), Gravitational Search Algorithm (GSA), Teaching Learning-Based Optimization (TLBO), Grey Wolf Optimizer (GWO), Whale Optimization Algorithm (WOA), Marine Predators Algorithm (MPA), and Tunicate Swarm Algorithm (TSA).The optimization results showed the high ability of the proposed MLA to solve optimization problems.Also, analysis and comparison of the performance of the mentioned optimization algorithms against the MLA, showed that the proposed algorithm has a better performance and is much more competitive than similar algorithms.

For further study in the future, the authors offer several suggestions, including the design the binary and multi-objective versions of proposed MLA.In addition, the use of MLA in solving optimization problems in various fields as well as real life problems are other suggestions for future studies.

Funding Statement:PT (corresponding author) was supported by the Excellence project PˇrF UHK No.2202/2020-2022 and Long-term development plan of UHK for year 2021, University of Hradec Králové, Czech Republic, https://www.uhk.cz/en/faculty-of-science/about-faculty/offic ial-board/internal-regulations-and-governing-acts/governing-acts/deans-decision/2020#grant-competi tion-of-fos-uhk-excellence-for-2020.

Conflicts of Interest:The authors declare that they have no conflicts of interest to report regarding the present study.

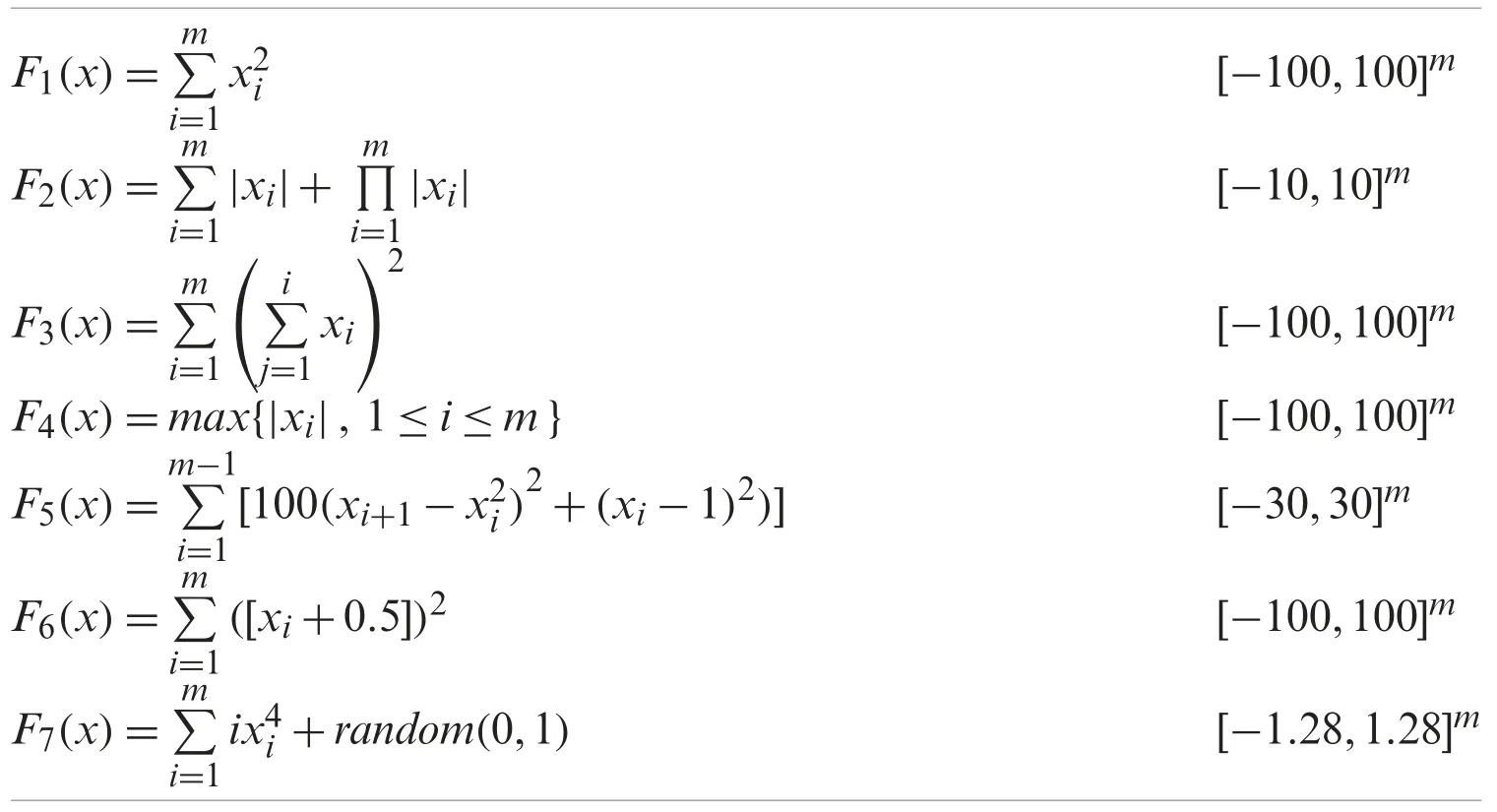

Appendix A.Objective functions

Information and details about the twenty-three standard objective functions used in the simulation section are given in Tabs.A1-A3.

Table A1: Unimodal objective functions

Table A2: High-dimensional objective functions

(Continued)

Table A2: Continued

Table A3: Fixed-dimensional objective functions

(Continued)

Table A3: Continued

杂志排行

Computers Materials&Continua的其它文章

- Polygonal Finite Element for Two-Dimensional Lid-Driven Cavity Flow

- Multi-Step Detection of Simplex and Duplex Wormhole Attacks over Wireless Sensor Networks

- Fuzzy Based Latent Dirichlet Allocation for Intrusion Detection in Cloud Using ML

- Automatic Detection and Classification of Human Knee Osteoarthritis Using Convolutional Neural Networks

- An Efficient Proxy Blind Signcryption Scheme for IoT

- An Access Control Scheme Using Heterogeneous Signcryption for IoT Environments