A Novel Deep Neural Network for Intracranial Haemorrhage Detection and Classification

2021-12-14

D. Venugopal, T. Jayasankar, Mohamed Yacin Sikkandar, Mohamed Ibrahim Waly, Irina V. Pustokhina, Denis A. Pustokhin and K. Shankar

1 Department of ECE, KPR Institute of Engineering and Technology, Coimbatore, 641407, India

2 Department of Electronics and Communication Engineering, University College of Engineering, BIT Campus, Anna University, Tiruchirappalli, 620024, India

3 Department of Medical Equipment Technology, College of Applied Medical Sciences, Majmaah University, Al Majmaah, 11952, Saudi Arabia

4 Department of Entrepreneurship and Logistics, Plekhanov Russian University of Economics, Moscow, 117997, Russia

5 Department of Logistics, State University of Management, Moscow, 109542, Russia

6 Department of Computer Applications, Alagappa University, Karaikudi, 630001, India

Abstract: Data fusion is one of the challenging issues that the healthcare sector has faced in recent years. Proper diagnosis from digital imagery, followed by treatment, is deemed to be the right solution. Intracranial Haemorrhage (ICH), a condition characterized by injury of blood vessels in brain tissues, is one of the important causes of stroke. Images generated by X-rays and Computed Tomography (CT) are widely used for estimating the size and location of hemorrhages. Radiologists use manual planimetry, a time-consuming process, for segmenting CT scan images. Deep Learning (DL) is the most preferred method to increase the efficiency of diagnosing ICH. In this paper, the authors present a multi-modal data fusion-based feature extraction technique with a DL model, abbreviated as FFE-DL, for Intracranial Haemorrhage Detection and Classification, also known as FFEDL-ICH. The proposed FFEDL-ICH model has four stages, namely preprocessing, image segmentation, feature extraction, and classification. The input image is first preprocessed using the Gaussian Filtering (GF) technique to remove noise. Secondly, the Density-based Fuzzy C-Means (DFCM) algorithm is used to segment the images. Furthermore, the Fusion-based Feature Extraction model combines handcrafted features (Local Binary Patterns) and deep features (Residual Network-152) to extract useful features. Finally, a Deep Neural Network (DNN) is used as the classifier to differentiate multiple classes of ICH. The authors used a benchmark Intracranial Haemorrhage dataset and simulated the FFEDL-ICH model to assess its diagnostic performance. The findings revealed that the proposed FFEDL-ICH model outperforms the existing models it was compared with, showing a significant improvement in performance. For future research, the authors recommend improving the performance of the FFEDL-ICH model using learning rate scheduling techniques for the DNN.

Keywords: Intracerebral hemorrhage; fusion model; feature extraction; deep features; classification

1 Introduction

Traumatic Brain Injury (TBI) is one of the most frequently recorded causes of death worldwide [1]. Patients diagnosed with TBI experience functional disabilities in the long run. When a patient is diagnosed with TBI, the possibility of intracranial lesions, for instance Intracranial Hemorrhage (ICH), is high. ICH is one of the most severe of these lesions and is associated with an increased mortality rate. Brain injury is highly fatal and can lead to paralysis if left undiagnosed in the early stages. Based on the position of the brain injury, ICH is classified into five sub-types: Intra-Ventricular (IVH), Intra-Parenchymal (IPH), Subarachnoid (SAH), Epidural (EDH), and Subdural (SDH). Generally, the Computerized Tomography (CT) scanning method is used to diagnose critical conditions like TBI. CT scanning is easily accessible and its acquisition time is short. Considering these advantages, healthcare professionals prefer CT over Magnetic Resonance Imaging (MRI) for early diagnosis of ICH.

During a CT scan, a series of images is generated using X-ray beams. Brain tissues are captured at various intensities according to their X-ray absorbency level, measured in Hounsfield Units (HU). CT scans are displayed using a windowing method, which converts HU values into gray-scale values in [0, 255] depending on the window level and width parameters. Different features of brain tissue appear in the gray-scale image depending on the window parameters selected [2]. In CT scan images, ICH regions are displayed as hyperdense areas without any defined structure. Professional radiologists observe these CT scan images and confirm the presence of ICH along with its type and location. The diagnostic procedure depends entirely on the availability of a subspecialty-trained neuroradiologist. Even so, there are chances of imprecise and ineffective results, especially in remote areas where specialist care is inadequate. Against this backdrop, a need persists to overcome this challenge.
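The windowing step described above can be sketched as a linear mapping from a chosen HU interval to 8-bit gray levels. This is a minimal illustration, not the authors' implementation; the function name `apply_window` and the example window (level 40 HU, width 80 HU) are assumptions made for the example.

```python
import numpy as np

def apply_window(hu, level, width):
    """Map Hounsfield Units to 8-bit gray levels for one window setting.

    Values below (level - width/2) become 0, values above
    (level + width/2) become 255, and values in between scale linearly.
    """
    lo = level - width / 2.0
    hi = level + width / 2.0
    clipped = np.clip(hu, lo, hi)
    scaled = (clipped - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)

# Hypothetical 2x2 patch of HU values (air, water, and two soft-tissue values).
hu_slice = np.array([[-1000.0, 0.0], [40.0, 80.0]])
gray = apply_window(hu_slice, level=40, width=80)
```

Changing `level` and `width` changes which tissues occupy the visible gray range, which is why the choice of window parameters determines what appears in the image.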

Convolutional Neural Network (CNN) is a promising model for automating functions such as image classification and segmentation [3]. Deep Learning (DL) methods are highly capable of automating ICH prediction and segmentation. U-Net, a Fully Convolutional Network (FCN) architecture, is recommended in the literature [4] for segmenting ICH regions in CT scan images. Automated ICH detection and classification are highly helpful for junior radiologists when expert doctors are not available in critical situations, especially in developing countries and remote areas. Furthermore, such a tool reduces both diagnosis time and misdiagnosis, thereby paving the way for quick diagnosis of ICH. It is also important to determine the volume of ICH in order to select an appropriate treatment regimen and surgical procedure, if any, which aids early recovery from brain injury.

Several approaches have been proposed in the past to perform automatic ICH segmentation. ICH segmentation models are classified into classical and DL methodologies. In general, conventional approaches preprocess the CT scan images to remove noise, skull, and artifacts. In this stage, the image has to be registered and the brain tissue segmented so that complex features can be extracted. These approaches depend on unsupervised clustering for the segmentation of the ICH area. Shahangian et al. [5] applied Distance Regularized Level Set Evolution (DRLSE) to segment EDH, IPH, and SDH regions and proposed a supervised model based on Support Vector Machine (SVM) to classify ICH slices. Brain segmentation was the first procedure in this model and removed both the skull and the brain ventricles. ICH segmentation was then carried out based on DRLSE. Next, shape and texture features were extracted from the ICH regions, and ICH was detected from them. The study reported high values of Dice coefficient, sensitivity, and specificity. Additionally, conventional unsupervised models [6] applied the Fuzzy C-Means (FCM) clustering technique to perform ICH segmentation.

Muschelli et al. [7] presented an automated approach in which the authors compared various supervised models in order to propose one that effectively performs ICH segmentation. Brain tissue images were extracted from CT scans that were first registered using a CT brain-extracted template. Several attributes were obtained from every scan. These features include threshold-related data such as CT voxel intensity; local moment details, including Mean and Standard Deviation (STD); within-plane remarkable scores; an initial segmentation from an unsupervised approach; contralateral difference images; distance to brain center; and standardized-to-template intensity. The last of these features compares the diagnostic CT scan with an average CT scan captured from normal brain tissue. The classifiers applied in this model included Logistic Regression (LR), the generalized additive method, and the Random Forest (RF) method. The approach was trained and validated on CT scans. Compared with the other classifiers, RF achieved the highest Dice coefficient.

DL models for ICH segmentation depend upon CNN or FCN models. In an earlier study [8], two approaches based on CNN were considered for ICH segmentation. Alternatively, a second model was deployed by Nag et al., in which the developers selected CT slices with ICH through a trained autoencoder (AE). In this study, ICH segmentation was performed with the Chan-Vese active contour technique [9]. The study used a CT scan dataset; the AE was trained on part of the data, and the remainder was used for performance validation. The study obtained good sensitivity (SE), positive predictive value, and Jaccard index. Furthermore, FCN is capable of detecting ICH at the level of every pixel and can therefore also be applied to ICH segmentation. Numerous FCN structures have been applied earlier in ICH segmentation, including the Dilated Residual Net (DRN), modified VGG16, and U-Net structures.

Kuo et al. [10] proposed a cost-sensitive active learning mechanism which contained an ensemble of Patch-based FCNs (PatchFCN). An uncertainty value was determined for every patch, and the patches were consolidated and prioritized under an estimated time constraint. The authors employed CT scan images for training and validation, while retrospective and prospective scans were used for testing. The study attained high precision in ICH detection on the test datasets, but only average precision for ICH segmentation. Since the study used cost-sensitive active learning, its performance can be improved by annotating new CT scans and thereby increasing the size of the training data.

Cho et al. [11] employed a CNN-cascade approach for ICH prediction and a dual FCN methodology for ICH segmentation. The CNN-cascade approach depends on the GoogLeNet network, whereas the dual FCN approach is based on a pre-trained VGG16 network. The latter was extended and fine-tuned using brain-window and stroke-window CT slices. These models were 5-fold cross-validated using a large number of CT scans. The researchers reported high sensitivity (SE) and specificity (SP) for ICH detection. In ICH sub-type classification, the study obtained its highest accuracy overall, while the lowest accuracy was obtained for EDH detection. For ICH segmentation, the study reported good precision and recall.

Kuang et al. [12] developed a semi-automated technology for regional ICH segmentation and ischemic infarct segmentation. The model followed the U-Net approach for ICH and infarct segmentation, and its output was used to initialize a multi-region contour evolution over the ICH and infarct regions. A collection of hand-engineered features, based on the bilateral density difference between symmetric brain regions in the CT scan, was fed into the U-Net. The researchers weighted the U-Net cross-entropy loss using the Euclidean distance between pixels and the boundaries of positive masks. The proposed semi-automated model with the weighted loss surpassed the classical U-Net and achieved the highest Dice similarity coefficient.

The current research study presents a novel Fusion-based Feature Extraction with Deep Learning (DL) model, abbreviated as FFE-DL, for Intracranial Haemorrhage Detection and Classification, known as FFEDL-ICH. The presented FFEDL-ICH model has four stages, namely preprocessing, image segmentation, feature extraction, and classification. In the beginning, the input image is preprocessed using the Gaussian Filtering (GF) technique to remove noise. The Density-based Fuzzy C-Means (DFCM) algorithm is then used for image segmentation. A fusion-based feature extraction model combines handcrafted features (Local Binary Patterns) and deep features (Residual Network-152) to extract useful features. Finally, a Deep Neural Network (DNN) is applied for classification, in which the different classes of ICH are identified. The authors simulated the model on a benchmark Intracranial Haemorrhage dataset to determine the diagnostic performance of the FFEDL-ICH model.

2 The Proposed ICH Diagnosis Model

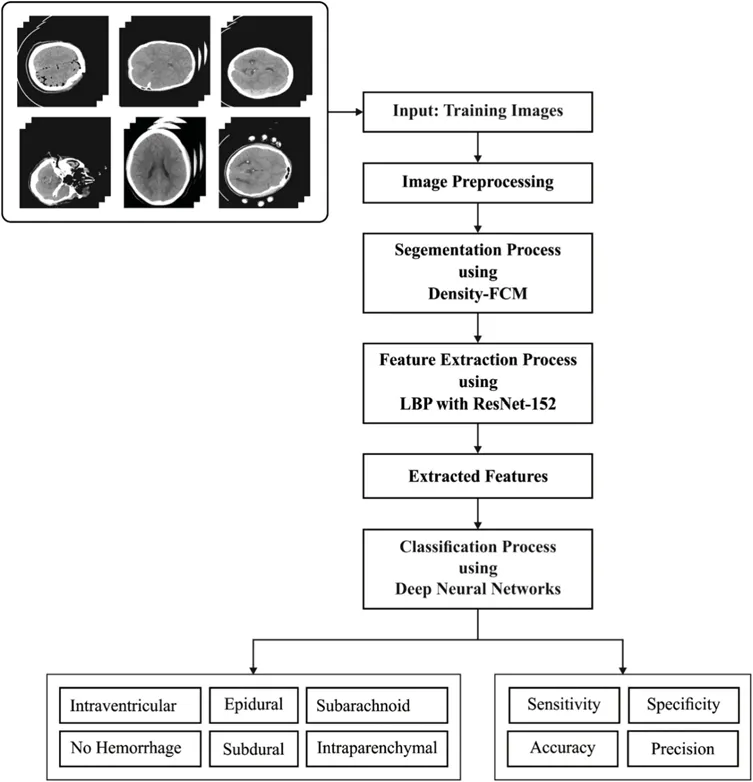

The ICH diagnosis model, FFEDL-ICH, has four stages, namely preprocessing, segmentation, feature extraction, and classification. First, the input data is preprocessed using the GF technique, which removes noise and improves the quality of the image. Secondly, the preprocessed images are segmented using the DFCM technique to identify the damaged regions. Thirdly, the fused features are extracted and classified by the DNN model. During feature extraction, LBP-based handcrafted features are fused with deep features from ResNet-152 to attain better performance. Fig. 1 shows the overall process involved in the proposed model, and its components are discussed in the upcoming sections.

Figure 1: Overall process of FFEDL-ICH

2.1 Image Preprocessing

Digital image processing is applied in a wide range of domains such as astronomy, geography, and medicine. Such applications require effective real-time outcomes. The 2D Gaussian filter is employed extensively to smooth images and remove noise [13], and it demands substantial processing resources that must be used efficiently. The Gaussian is one of the classical operators, and Gaussian smoothing is performed by convolution. The Gaussian operator in 1D is expressed as follows.

$$G(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{x^{2}}{2\sigma^{2}}} \tag{1}$$

An effective smoothing filter is localized in both the spatial and frequency domains, wherein the filter satisfies the uncertainty relation presented in the equation given below.

The Gaussian operator in 2D (circularly symmetric) is given as follows:

$$G(x,y) = \frac{1}{2\pi\sigma^{2}}\, e^{-\frac{x^{2}+y^{2}}{2\sigma^{2}}} \tag{3}$$

where σ (sigma) refers to the standard deviation of the Gaussian function; a larger value of σ yields a stronger smoothing effect. (x, y) are the Cartesian coordinates of the image within the window. The filter consists of multiplication and summation operations between an image and a kernel, where the image is represented as a matrix with values between 0 and 255 (8 bits). The kernel is a normalized square matrix (with values between 0 and 1) and is represented with a fixed number of bits. In the convolution process, the sum of the products of the kernel entries and the image elements is divided by a power of 2.
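The smoothing step can be illustrated with a small NumPy sketch that samples a circularly symmetric 2D Gaussian on a square window, normalizes it, and applies it to an 8-bit image. This is a floating-point illustration under assumed parameters (a 5×5 window, σ = 1, reflective padding); it does not reproduce the fixed-point, logarithmic-multiplier design discussed in this section, and the names `gaussian_kernel` and `gaussian_filter` are chosen for the example.

```python
import numpy as np

def gaussian_kernel(size, sigma):
    """Sample a circularly symmetric 2D Gaussian on an odd-sized square window."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    kernel = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()  # normalize so the weights sum to 1

def gaussian_filter(image, size=5, sigma=1.0):
    """Smooth an 8-bit gray-scale image with the kernel above."""
    kernel = gaussian_kernel(size, sigma)
    half = size // 2
    padded = np.pad(image.astype(np.float64), half, mode="reflect")
    rows, cols = image.shape
    out = np.zeros((rows, cols), dtype=np.float64)
    # The kernel is symmetric, so correlation and convolution coincide.
    for dy in range(size):
        for dx in range(size):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return np.clip(np.round(out), 0, 255).astype(np.uint8)

# Hypothetical noisy input: a flat region with one bright outlier pixel.
noisy = np.full((8, 8), 100, dtype=np.uint8)
noisy[4, 4] = 200
smoothed = gaussian_filter(noisy, size=5, sigma=1.0)
```

Increasing `sigma` spreads the weights over more neighbors, so the outlier is attenuated more strongly at the cost of blurring edges.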

Mean Square Error (MSE) denotes the average squared error between the restored image and the actual image, and is calculated as follows.

$$MSE = \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} \left( O_{image}(i,j) - R_{image}(i,j) \right)^{2} \tag{4}$$

where M × N refers to the size of the image, O_image denotes the actual image, and R_image is the restored image.

Peak Signal-to-Noise Ratio (PSNR) is the peak value of the Signal-to-Noise Ratio (SNR) and is defined as the ratio of the maximum possible power of a pixel value to the power of the distorting noise. PSNR indicates how close the restored image is to the actual image and is expressed below.

$$PSNR = 10 \log_{10} \left( \frac{255 \times 255}{MSE} \right) \tag{5}$$

Here, 255 × 255 is the square of the highest value of a pixel in an 8-bit image, whereas MSE is determined between the actual and restored images of size M × N.
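The two quality measures can be computed directly from their definitions, as in the following sketch. It assumes 8-bit images (peak value 255) and the usual decibel form of PSNR, 10·log10(255² / MSE); the function names are chosen for the example.

```python
import numpy as np

def mse(original, restored):
    """Mean squared error between two images of the same M x N size."""
    o = original.astype(np.float64)
    r = restored.astype(np.float64)
    return float(np.mean((o - r) ** 2))

def psnr(original, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(original, restored)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / err))

# Hypothetical example: every pixel of the restored image is off by 5 gray levels.
original = np.zeros((4, 4), dtype=np.uint8)
restored = np.full((4, 4), 5, dtype=np.uint8)
error = mse(original, restored)      # 25.0
quality = psnr(original, restored)   # 10 * log10(65025 / 25)
```

A lower MSE and a higher PSNR both indicate that the filtered image is closer to the reference image.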

In this approach, convolution is a multiplication-intensive task that can be implemented with logarithmic multiplication. However, a plain logarithmic multiplier does not meet the accuracy requirements. Therefore, a logarithmic multiplier with improved accuracy is deployed for the Gaussian filter.

2.2 Image Segmentation

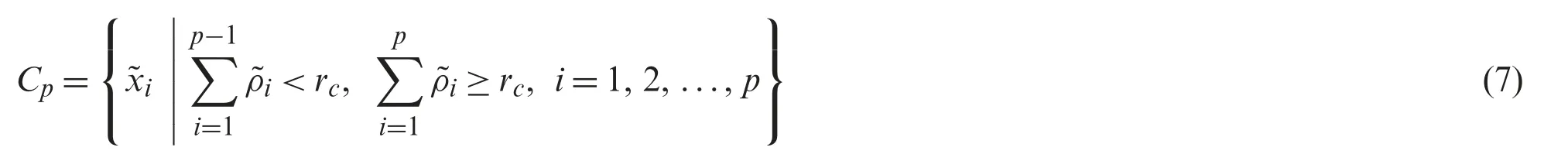

Once the input image is preprocessed, an approach called DFCM is employed for image segmentation [14]. When applying the FCM method, the number of clusters c and the initial membership matrix need to be specified, and the results are sensitive to the choice of these values. Because of these two shortcomings, FCM struggles to compute the optimal clusters, and its final outcomes are not stable. Generally, partition-based clustering converges to a good solution in a minimum number of iterations when the initial centers are close to the true cluster centers; such initial centers are the 'optimal initials.' Hence, a method for selecting initial cluster centers based on a description of the local data is presented. During the initial phase, the density of every sample x_i is computed and denoted by ρ_i. This value reflects the significance of a sample and is used prominently in density-based clustering. The samples are then sorted in decreasing order of density. The sorted samples are denoted by X_s = {x_i^s, i = 1, 2, ..., n} and their corresponding densities by {ρ_i^s, i = 1, 2, ..., n}. Then, a density cutoff r_c is given by,

where σ ∈ (0, 1) is an adjustable parameter named the 'density rate.' Then, the candidate cluster centers are selected as the samples whose densities are higher than those of the other samples. Hence, the collection of candidate cluster centers is given as follows:

From Eqs. (6) and (7), it can be seen that the parameter σ affects the value of r_c, which controls the number of candidate cluster centers. The parameter σ is therefore used to adjust the chosen cluster centers.

As a result, appropriate initial cluster centers are selected from the candidate cluster centers, and the number of clusters c is obtained at the same time. When selecting the initial cluster centers, choosing several centers from the same cluster should be avoided; that is, a candidate is selected only when its distance from the other initial cluster centers is large. It is assumed that the distance between two initial cluster centers has to be higher than the distance threshold given herewith:

The aim is to obtain an optimal initial membership matrix without complex computations. In this methodology, the initial cluster centers and the sample densities are used to build the initial membership matrix, U. Each sample is placed in a cluster whose center has a higher density than the sample itself. For every sample x_i ∈ X_s (X_s refers to the dataset in which the samples are placed in decreasing order of local density), the corresponding membership is defined as follows

where i = 1, 2, ..., n, k = 1, 2, ..., c, and ρ_{v_k} refers to the density of the cluster center v_k, with ρ_{v_{k-1}} ≥ ρ_{v_k}, k = 1, 2, ..., c. Here, the density ρ_i of a sample x_i is defined as given herewith:

where χ(·) is an indicator function, d_c represents the cutoff distance, and d_ij = dist(x_i, x_j) is the Euclidean distance between sample x_i and sample x_j. In other words, ρ_i equals the number of samples that lie within the d_c neighborhood of sample x_i.
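The density-based initialization can be sketched as follows. The sketch counts, for each sample, the neighbors closer than the cutoff distance d_c, then walks through the samples in decreasing order of density and keeps those that are dense enough and far enough from the centers already chosen. The exact formulas for the density cutoff and the distance threshold are not reproduced here, so both are passed in as parameters; `rho_cut`, `min_dist`, and the function names are hypothetical.

```python
import numpy as np

def local_density(X, d_c):
    """rho_i = number of other samples closer than d_c to sample i."""
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    return (dist < d_c).sum(axis=1) - 1  # subtract 1 to exclude the sample itself

def pick_initial_centers(X, rho, rho_cut, min_dist):
    """Greedy selection of initial centers from high-density candidates.

    rho_cut and min_dist stand in for the density cutoff and the distance
    threshold of the method; their formulas are assumptions of this sketch.
    """
    order = np.argsort(-rho, kind="stable")  # decreasing density
    centers = []
    for i in order:
        if rho[i] < rho_cut:
            break  # remaining samples are below the density cutoff
        if all(np.linalg.norm(X[i] - X[j]) > min_dist for j in centers):
            centers.append(int(i))
    return centers

# Hypothetical data: two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
rho = local_density(X, d_c=0.5)
centers = pick_initial_centers(X, rho, rho_cut=2, min_dist=1.0)
```

The number of selected centers gives the cluster count c, so neither c nor the starting centers have to be supplied by hand before FCM is run.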

2.3 Fusion-Based Feature Extraction

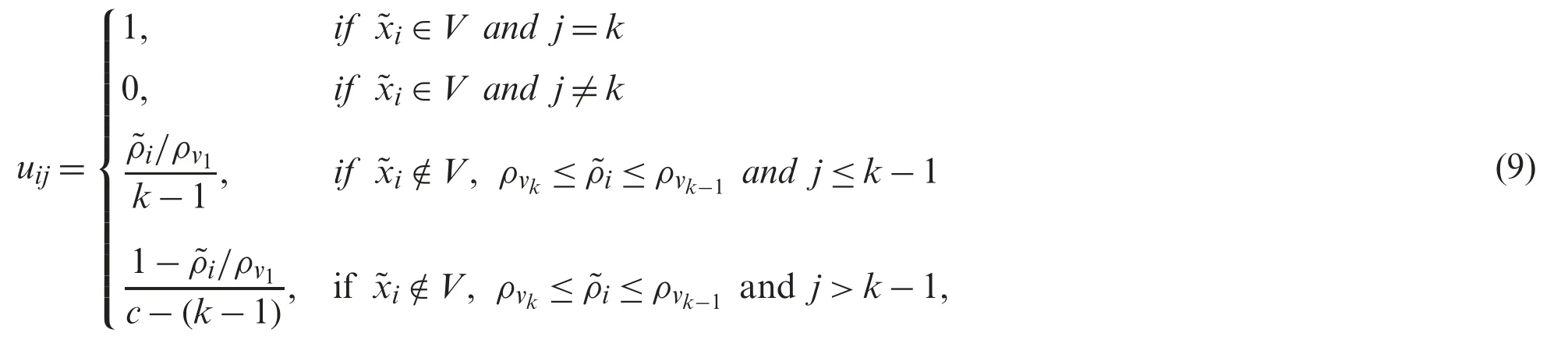

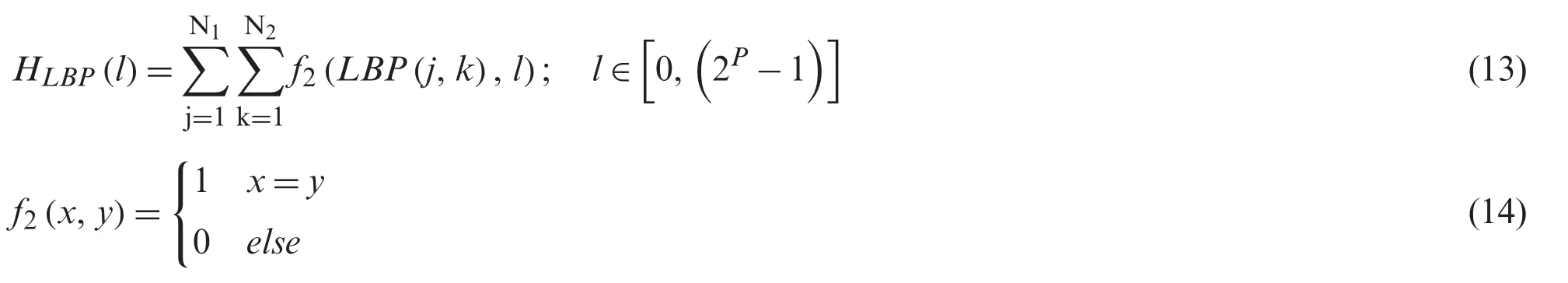

In this section, LBP-based handcrafted features and deep features are fused together for effective classification. LBP is often used in image and texture classification. It is also applied in a number of areas such as facial analysis, Image Retrieval (IR), and palm-print investigation. LBP is computationally efficient and fast. It depends on the relationship between a center pixel and its adjacent neighbors in an image: each neighbor is compared with the center pixel. When the gray value of a neighboring pixel is higher than that of the center pixel, the LBP bit becomes '1'; else it is '0', as given in Eqs. (11) and (12).

where IM(g_c) denotes the gray value of the center pixel, IM(g_p) refers to the gray values of its neighbors, P denotes the number of neighbors, and R represents the radius of the neighborhood. Once the LBP pattern for each pixel (j, k) is determined, the entire image is described by building a histogram, as illustrated in Eq. (13).

where the input image has the size N1 × N2. The LBP estimation for a 3×3 pattern is demonstrated herewith. The histogram of the patterns summarizes the edge information distributed across the image.
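The 3×3 LBP computation and its histogram can be sketched in NumPy as below. Two choices are assumptions of the sketch rather than details given in the text: a neighbor equal to the center is assigned bit 1 (the common LBP convention), and the eight neighbors are visited clockwise starting at the top-left pixel. Border pixels are skipped.

```python
import numpy as np

def lbp_3x3(image):
    """8-neighbor LBP codes (P = 8, R = 1) for the interior pixels of an image."""
    img = image.astype(np.int32)
    h, w = img.shape
    center = img[1:-1, 1:-1]
    # Clockwise neighbor offsets starting at the top-left pixel (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros((h - 2, w - 2), dtype=np.int32)
    for bit, (dy, dx) in enumerate(offsets):
        neighbor = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes += (neighbor >= center).astype(np.int32) << bit
    return codes

def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes, used as the handcrafted feature."""
    codes = lbp_3x3(image)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

# Hypothetical 3x3 patch whose center is brighter than every neighbor.
patch = np.array([[0, 0, 0], [0, 9, 0], [0, 0, 0]], dtype=np.uint8)
code = int(lbp_3x3(patch)[0, 0])  # all bits are 0
```

The resulting 256-dimensional histogram is the handcrafted descriptor that is later combined with the deep features.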

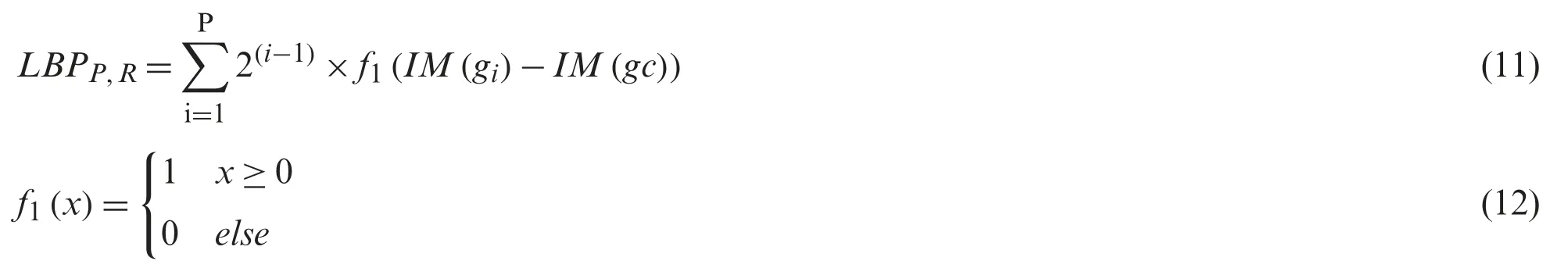

Then, deep features are extracted from ResNet-152. Convolutional Neural Networks (CNN) belong to the family of Deep Learning (DL) methods and can be applied to hierarchical classification tasks, specifically image classification. CNNs were originally developed for image processing and computer vision, with an architecture modeled on the visual cortex, and they are applied effectively in medical image classification. In a CNN, an image tensor is convolved with a set of d×d kernels. The resulting convolutions ("feature maps") are stacked, each representing a feature detected by one of the filters. The feature dimensions of the output may differ from those of the input. The strategy for computing a single output matrix is as follows: every individual matrix I_i is convolved with a corresponding kernel matrix K_{i,j}, and a bias B_j is added. An activation function is then applied to every element. Both biases and weights are adjusted during CNN training by Back-Propagation (BP) so that the filters become effective feature detectors. The study also employed feature map filters over three channels.

In order to reduce the computational complexity, CNNs apply pooling layers, which reduce the size of the output passed from one layer to the next. Several pooling strategies exist for reducing the output while preserving important features. The current study followed the widely used max-pooling approach, in which the highest activation in the pooling window is selected. The CNN is executed as a discriminative model trained with BP using the sigmoid (Eq. (16)) or Rectified Linear Unit (ReLU) (Eq. (17)) activation functions. The final layer consists of a node with a sigmoid activation function for binary classification, or a softmax activation function for multi-class problems, as shown in Eq. (18).
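The activation functions and the max-pooling operation mentioned above can be written compactly in NumPy. The sketch uses the standard definitions of sigmoid, ReLU, and softmax and assumes a 2×2 pooling window with stride 2; the function names and the pooling size are illustrative choices.

```python
import numpy as np

def sigmoid(x):
    """Logistic activation: squashes inputs into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """Rectified Linear Unit: keeps positive inputs, zeroes the rest."""
    return np.maximum(0.0, x)

def softmax(x):
    """Normalized exponential over the last axis, for multi-class outputs."""
    shifted = x - np.max(x, axis=-1, keepdims=True)  # for numerical stability
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

def max_pool_2x2(feature_map):
    """Keep the highest activation in each non-overlapping 2x2 window."""
    h, w = feature_map.shape
    trimmed = feature_map[:h - h % 2, :w - w % 2]
    return trimmed.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# Hypothetical 4x4 feature map.
fm = np.arange(16, dtype=np.float64).reshape(4, 4)
pooled = max_pool_2x2(fm)  # [[5, 7], [13, 15]]
```

Max pooling halves each spatial dimension here while retaining the strongest response in every window, which is how the network reduces computation without discarding the dominant features.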

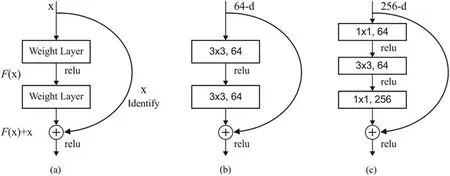

In addition, the Adam optimizer was employed in this study. Adam is a Stochastic Gradient Descent (SGD) method that uses the first two moments of the gradient (m and v, in Eqs. (19)-(22)). Like RMSProp, Adam handles non-stationary objectives, while also overcoming the sparse-gradient issues of RMSProp. Here, m_t denotes the first moment and v_t represents the second moment, both of which are estimated during training. ResNet applies the residual block to overcome the degradation and vanishing-gradient problems found in deep CNNs, and the residual block improves network performance.
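A single Adam update can be sketched as below, using the standard formulation with bias-corrected moment estimates. The hyperparameter defaults (β1 = 0.9, β2 = 0.999, ε = 1e-8) are the commonly used values and are an assumption here, as is the toy objective f(θ) = θ² used to exercise the update.

```python
import numpy as np

def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step counter."""
    m = beta1 * m + (1.0 - beta1) * grad        # first-moment estimate
    v = beta2 * v + (1.0 - beta2) * grad ** 2   # second-moment estimate
    m_hat = m / (1.0 - beta1 ** t)              # bias correction
    v_hat = v / (1.0 - beta2 ** t)
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

# Toy problem: minimize f(theta) = theta^2, whose gradient is 2 * theta.
theta = np.array([1.0])
m = np.zeros(1)
v = np.zeros(1)
for t in range(1, 2001):
    grad = 2.0 * theta
    theta, m, v = adam_step(theta, grad, m, v, t, lr=0.01)
```

Because the step is scaled by the ratio of the two moment estimates, its magnitude stays close to the learning rate regardless of the raw gradient scale.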

Specifically, ResNet networks achieved notable success on the ImageNet classification task. The residual block in ResNet adds its input to the output of the stacked layers within the block, as illustrated in Eq. (23):

$$y = F(x, W) + x \tag{23}$$

where x denotes the input of the residual block, W denotes the weights of the residual block, and y is the final output of the residual block.
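The skip connection of a residual block, y = F(x, W) + x, can be illustrated with a small NumPy sketch. For brevity the residual mapping F is built from two dense layers rather than the convolutional layers used in ResNet-152, and a ReLU is applied after the addition; both are simplifying assumptions of the example.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def residual_block(x, w1, w2):
    """y = relu(F(x, W) + x), where F is two dense layers with a ReLU in between."""
    out = relu(x @ w1)      # first layer of the residual mapping F
    out = out @ w2          # second layer of F
    return relu(out + x)    # identity shortcut adds the block input back

# Hypothetical input and weights; w1 and w2 must preserve the feature size.
rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4))
w1 = rng.normal(scale=0.1, size=(4, 4))
w2 = rng.normal(scale=0.1, size=(4, 4))
y = residual_block(x, w1, w2)
```

When the weights are zero the block reduces to the identity on non-negative inputs, which is why stacking many such blocks does not make the network harder to optimize than a shallower one.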

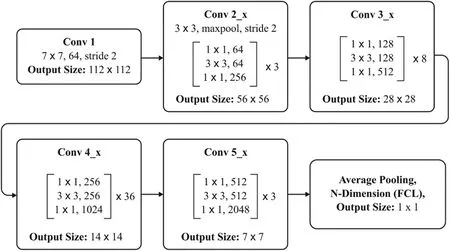

Fig. 2 shows the block diagrams of the residual blocks. A ResNet network is composed of several residual blocks in which the size of the convolutional kernel differs. ResNet is available in several variants, such as ResNet18, ResNet50, and ResNet152. A common architecture of ResNet152 is shown in Fig. 3. The features extracted using the residual network are passed to a Fully Connected (FC) layer to classify the images [15]. Hence, the DNN method is applied for image classification.

Figure 2: (a) Residual block (b) two layer deep (c) three layer deep

Figure 3: ResNet-152 layers

2.4 Fusion Process

Feature fusion plays a vital role in an image classification model, as it integrates at least two feature vectors. Here, features are fused on the basis of entropy. As discussed in earlier sections, the LBP and ResNet-152 features are fused during the fusion process, which is illustrated as follows.

Furthermore, the extracted features are fused into a single vector.

where f is the fused vector. The fused feature vector is used during the DNN-based classification process to detect the existence of ICH.
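The final step of the fusion, combining the two descriptors into one vector f, can be sketched as a serial concatenation. The sketch normalizes each descriptor before concatenating so that neither dominates by scale; this normalization, the function names, and the feature sizes (256 LBP bins, 2048 pooled ResNet-152 activations) are assumptions of the example, and the entropy-based rule mentioned above is not reproduced.

```python
import numpy as np

def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit Euclidean length."""
    v = np.asarray(v, dtype=np.float64)
    return v / (np.linalg.norm(v) + eps)

def fuse_features(lbp_features, deep_features):
    """Serially concatenate handcrafted and deep descriptors into one vector f."""
    return np.concatenate([l2_normalize(lbp_features),
                           l2_normalize(deep_features)])

# Hypothetical descriptors standing in for real LBP and ResNet-152 outputs.
rng = np.random.default_rng(0)
lbp_features = rng.random(256)
deep_features = rng.random(2048)
f = fuse_features(lbp_features, deep_features)
```

The fused vector `f` has one entry per handcrafted bin plus one per deep activation and serves as the input to the classifier.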

2.5 Image Classification

A Feed-Forward Artificial Neural Network (ANN) with several layers of hidden units between its inputs and outputs is a DL model trained with SGD by applying BP [16]. Every hidden unit j typically applies the logistic function (the closely related hyperbolic tangent is also frequently used, and any function with a well-behaved derivative can be applied) to map its total input x_j to its output y_j:

$$y_j = \frac{1}{1 + e^{-x_j}}, \qquad x_j = b_j + \sum_{i} y_i w_{ij}$$

where b_j is the bias of unit j, i is an index over the units in the layer below, and w_ij is the weight on the connection from unit i to unit j.

In the case of multi-class classification, such as hyperspectral image classification, the output unit j transforms its total input x_j into a class probability P_j by applying the normalized exponential function termed 'softmax'

$$P_j = \frac{e^{x_j}}{\sum_{h} e^{x_h}}$$

where h is an index over all the classes. DNNs are trained discriminatively by back-propagating the derivatives of a cost function that measures the discrepancy between the target outputs and the actual outputs produced for each training case. When the softmax output function is applied, the natural cost function C is the cross entropy between the target probabilities d and the softmax outputs P:

$$C = -\sum_{j} d_j \log P_j$$

Here, the target probabilities take the values 1 or 0 and constitute the supervised information provided for training the DNN.
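The forward pass and the cost described above can be sketched in a few lines of NumPy: logistic hidden units, a softmax output layer, and the cross entropy between a one-hot target and the predicted class probabilities. The layer sizes, the random weights, and the six-class output are placeholders chosen for the example, not the configuration used in the study.

```python
import numpy as np

def logistic(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(x):
    e = np.exp(x - np.max(x, axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def forward(features, layers):
    """Logistic hidden layers followed by a softmax output layer."""
    y = features
    for W, b in layers[:-1]:
        y = logistic(y @ W + b)
    W, b = layers[-1]
    return softmax(y @ W + b)

def cross_entropy(target, probs, eps=1e-12):
    """C = -sum_j d_j * log(P_j) for a one-hot (0/1) target vector."""
    return float(-np.sum(target * np.log(probs + eps)))

# Hypothetical network: 8 input features, 5 hidden units, 6 output classes.
rng = np.random.default_rng(0)
layers = [(rng.normal(scale=0.1, size=(8, 5)), np.zeros(5)),
          (rng.normal(scale=0.1, size=(5, 6)), np.zeros(6))]
features = rng.random(8)
probs = forward(features, layers)
target = np.eye(6)[2]          # one-hot target for class index 2
loss = cross_entropy(target, probs)
```

Training would adjust the weights in `layers` by back-propagating the gradient of `loss`; only the forward computation is shown here.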

3 Performance Validation

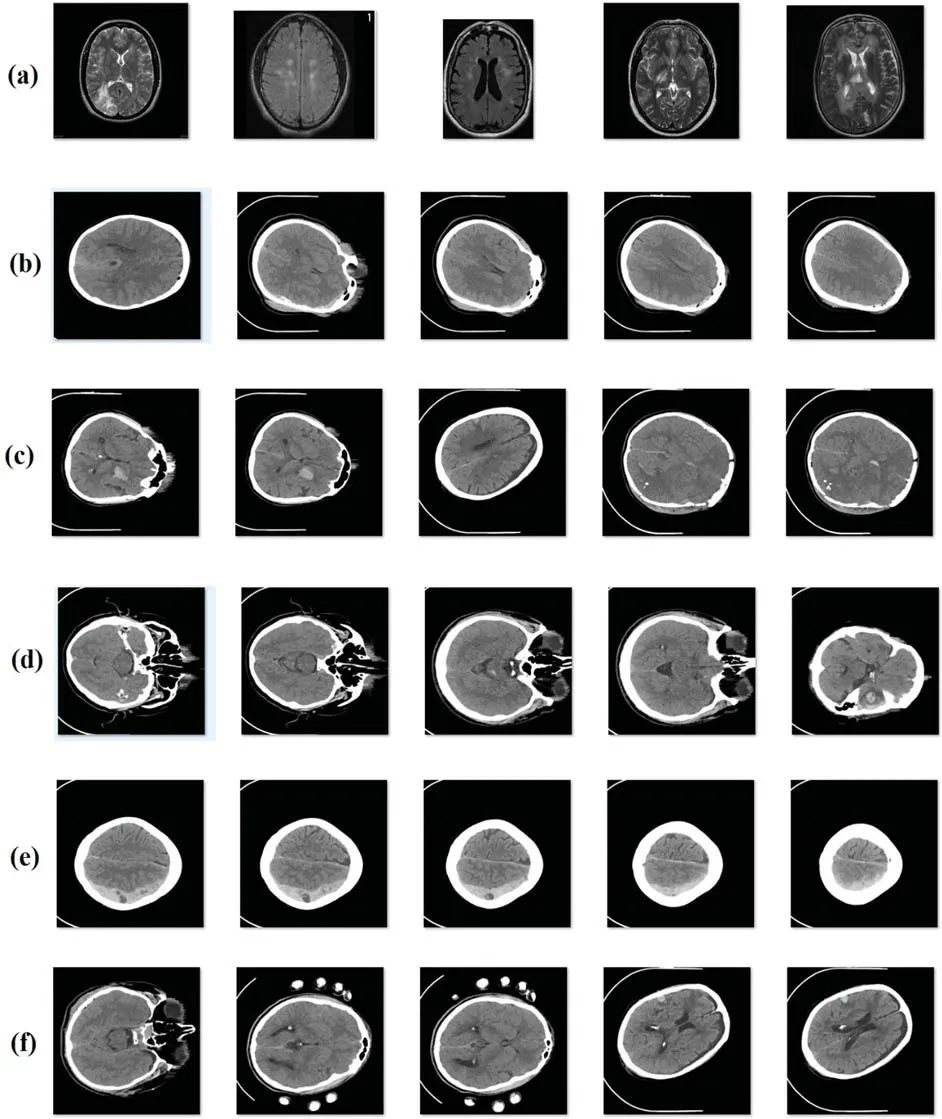

In order to evaluate the performance of the proposed model, an experimental analysis was conducted using a standard ICH dataset [17]. Fig. 4 depicts sample images showing the different classes of ICH. The CT scan image dataset was collected from 82 patients under the age group of 72 years. The dataset is composed of images grouped under six classes (see Fig. 4), including Intraventricular with 24 slices, Intraparenchymal with 73 slices, Subdural with 56 slices, Subarachnoid with 18 slices, and No Hemorrhage with 2173 slices. Simulation outcomes were measured in terms of four parameters: sensitivity (SE), specificity (SP), accuracy, and precision. For the comparative analysis, the following methods were used: U-Net [18], Watershed Algorithm with ANN (WA-ANN) [19], ResNexT [20], Window Estimator Module to a Deep Convolutional Neural Network (WEM-DCNN) [21], CNN, and SVM.

Figure 4: Sample images (a) no hemorrhage (b) epidural (c) intraventricular (d) intraparenchymal (e) subdural (f) subarachnoid
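The four evaluation measures can be computed from the counts of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN), as in the sketch below. The standard definitions are used; the counts in the example are hypothetical and are not taken from the study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard sensitivity, specificity, precision, and accuracy."""
    return {
        "sensitivity": tp / (tp + fn),            # true positive rate (recall)
        "specificity": tn / (tn + fp),            # true negative rate
        "precision": tp / (tp + fp),              # positive predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }

# Hypothetical confusion counts for a single hemorrhage class.
metrics = classification_metrics(tp=90, fp=20, tn=80, fn=10)
```

For a multi-class problem such as ICH sub-type classification, these counts are usually obtained per class in a one-vs-rest fashion and then averaged.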

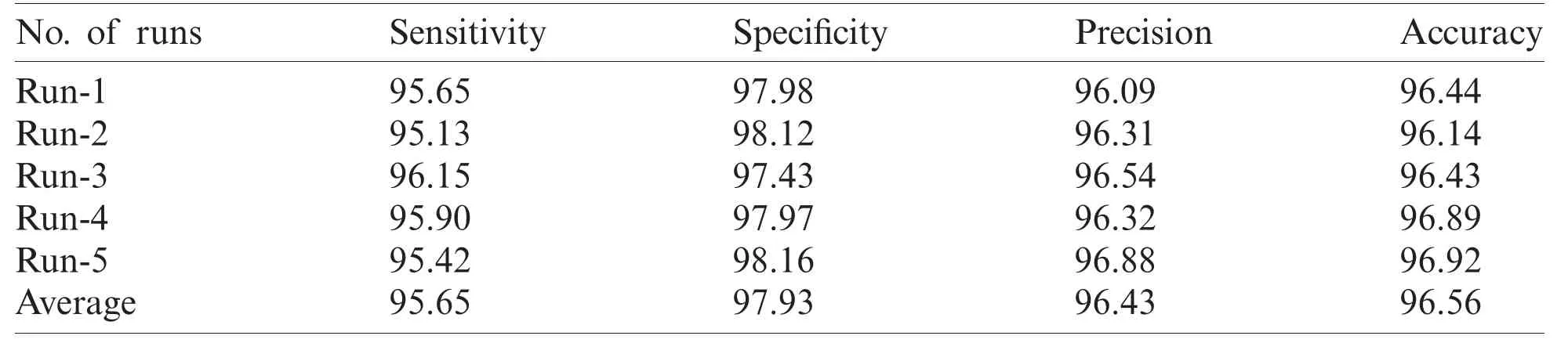

Tab. 1 and Fig. 5 present the performance of the FFEDL-ICH model in diagnosing ICH over different runs. The simulation outcomes show that the FFEDL-ICH model achieved high ICH diagnosis performance in all the runs. For instance, when classifying ICH at run 1, the FFEDL-ICH model reached a sensitivity of 95.65%, specificity of 97.98%, precision of 96.09%, and accuracy of 96.44%. Further, during run 2, ICH was classified by the FFEDL-ICH model with a sensitivity of 95.13%, specificity of 98.12%, precision of 96.31%, and accuracy of 96.14%.

Table 1: Result of FFEDL-ICH method under different number of runs

Figure 5: Results of FFEDL-ICH in terms of sensitivity, specificity, precision and accuracy

Likewise, when classifying ICH at run 3, the proposed FFEDL-ICH method achieved a sensitivity of 96.15%, specificity of 97.43%, precision of 96.54%, and accuracy of 96.43%. At run 4, the FFEDL-ICH approach obtained a sensitivity of 95.90%, specificity of 97.97%, precision of 96.32%, and accuracy of 96.89%. At run 5, the FFEDL-ICH method achieved a sensitivity of 95.42%, specificity of 98.16%, precision of 96.88%, and accuracy of 96.92%.

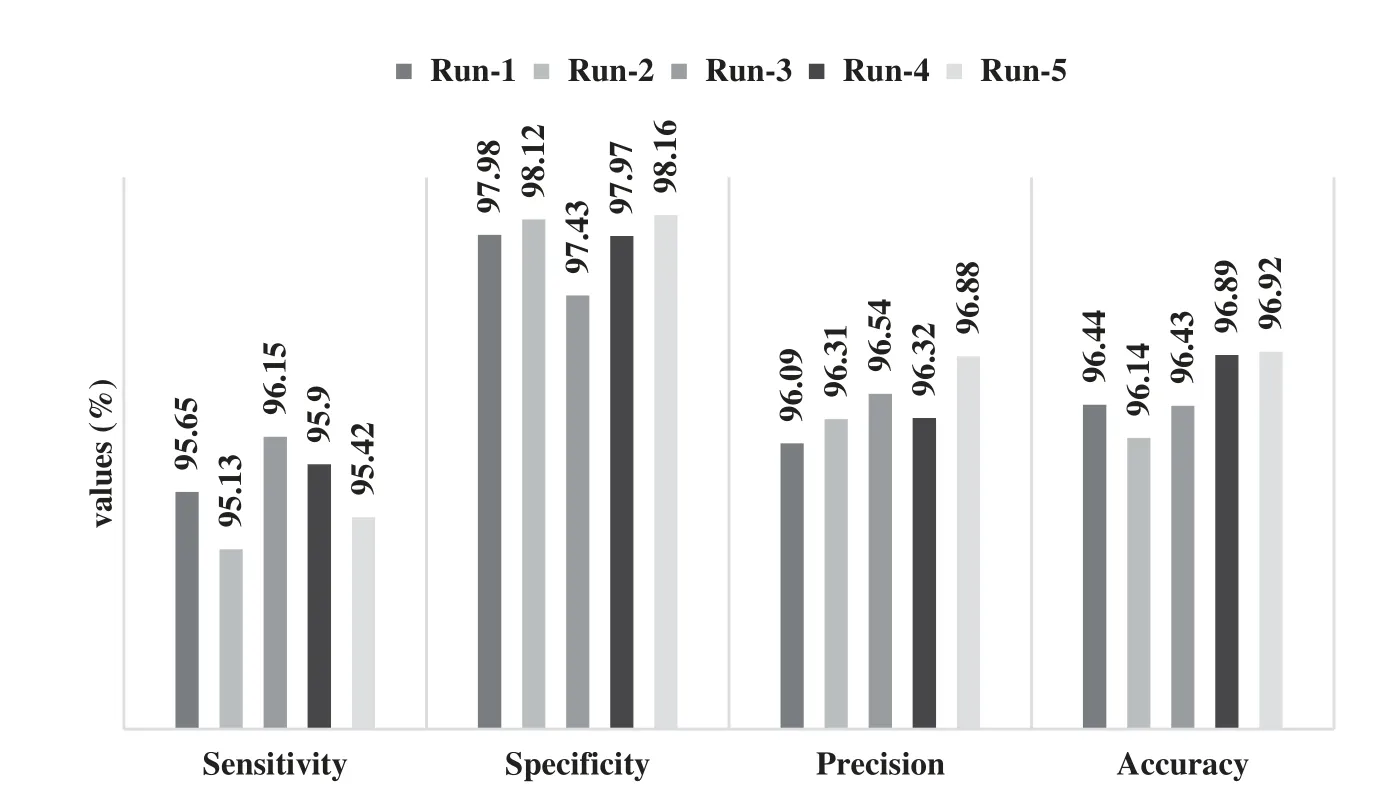

Fig. 6 shows the average results attained by the FFEDL-ICH model on ICH diagnosis. The experimental outcomes show that the FFEDL-ICH model produced superior results, with a high average sensitivity of 95.65%, specificity of 97.93%, precision of 96.43%, and accuracy of 96.56%.

Figure 6: Average analysis of FFEDL-ICH model in terms of sensitivity, specificity, precision and accuracy
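The reported averages can be reproduced from the five per-run values quoted in the text. In the snippet below, the fourth per-run quantity is treated as accuracy, since its mean matches the reported average accuracy of 96.56%; the variable names are chosen for the example.

```python
# Per-run results (%) of FFEDL-ICH for runs 1 to 5, as quoted in the text.
runs = {
    "sensitivity": [95.65, 95.13, 96.15, 95.90, 95.42],
    "specificity": [97.98, 98.12, 97.43, 97.97, 98.16],
    "precision":   [96.09, 96.31, 96.54, 96.32, 96.88],
    "accuracy":    [96.44, 96.14, 96.43, 96.89, 96.92],
}

# Mean over the five runs, rounded to two decimals as in the paper.
averages = {name: round(sum(vals) / len(vals), 2) for name, vals in runs.items()}
```

The computed means agree with the averages shown in Fig. 6: 95.65% sensitivity, 97.93% specificity, 96.43% precision, and 96.56% accuracy.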

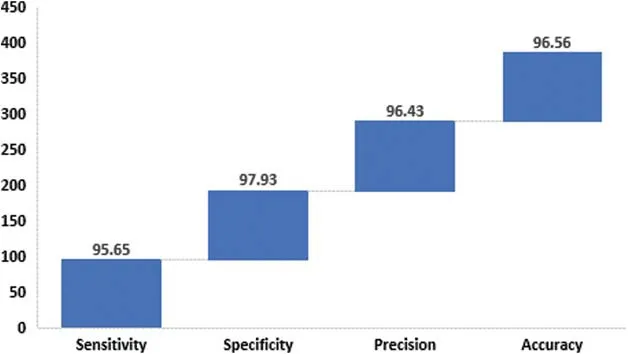

Fig. 7 compares the FFEDL-ICH model with the other methods in terms of sensitivity and specificity. In terms of sensitivity, the WA-ANN-based diagnosis model was the worst classifier, with the lowest sensitivity of 60.18%. The U-Net model was only slightly better, with a sensitivity of 63.1%. The SVM model showed a moderate performance with a sensitivity of 76.38%, whereas the WEM-DCNN model reached a sensitivity of 83.33%. Furthermore, the CNN model exhibited satisfactory results with a sensitivity of 87.06%. The ResNexT model came closest to the proposed model with a sensitivity of 88.75%. The presented FFEDL-ICH model surpassed all the earlier models by achieving the maximum sensitivity of 95.65%.

Figure 7: Sensitivity and specificity analyses of FFEDL-ICH model

In terms of SP, the WA-ANN-based diagnosis model was the least effective classifier, with the lowest SP of 70.13%. The SVM method performed better than the WA-ANN model and achieved a moderate SP of 79.41%. The CNN approach showed a considerable performance with an SP of 88.18%, while the U-Net approach achieved an acceptable SP of 88.60%. Moreover, the ResNexT framework produced an SP of 90.42%, and the WEM-DCNN scheme provided a competitive result with an SP of 97.48%. However, the proposed FFEDL-ICH method outperformed all the compared approaches with the highest SP of 97.93%.

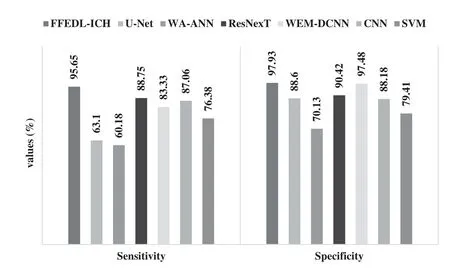

Fig. 8 compares the FFEDL-ICH method with the other methods with respect to precision and accuracy. In terms of precision, the WA-ANN-based diagnosis model was the least effective classifier, with the lowest precision of 70.08%. The SVM approach performed better than the WA-ANN model and achieved a precision of 77.53%. Furthermore, the CNN approach produced a considerable performance with a precision of 87.98%, and the U-Net model showed slightly better results with a precision of 88.19%. The WEM-DCNN technique exhibited reasonable results with a precision of 89.90%. Similarly, the ResNexT approach was competitive with a precision of 95.20%. However, the proposed FFEDL-ICH framework outperformed the other models and achieved the highest precision of 96.43%.

Figure 8: Precision and accuracy analyses of FFEDL-ICH model

With respect to accuracy, the WA-ANN-based diagnosis model ranked last with a low accuracy of 69.78%. The SVM technique performed above the WA-ANN approach and yielded a moderate accuracy of 77.32%. Furthermore, the U-Net approach showed a better performance with an accuracy of 87%, whereas the CNN technique achieved reasonable results with an accuracy of 87.56%. Additionally, the WEM-DCNN method yielded satisfactory outcomes with an accuracy of 88.35%. Likewise, the ResNexT scheme produced competitive results with an accuracy of 89.30%. However, the proposed FFEDL-ICH technique performed better than the compared methods and achieved the best accuracy of 96.56%.

4 Conclusion

The research work presented a DL-based FFEDL-ICH model for detecting and classifying ICH. The proposed FFEDL-ICH model has four stages: preprocessing, image segmentation, feature extraction, and classification. The input image was first preprocessed using the GF technique, which removed noise and increased the quality of the image. Next, the preprocessed images were segmented using the DFCM technique to identify the damaged regions. The fused features were then extracted and classified by the DNN model in the final stage. The study used the Intracranial Haemorrhage dataset to test the effectiveness of the proposed FFEDL-ICH model, and the results were validated under different aspects. The authors infer that the proposed FFEDL-ICH model outperformed all the compared models in terms of sensitivity (SE), specificity (SP), accuracy, and precision. For future research, the authors recommend improving the performance of the FFEDL-ICH model using learning rate scheduling techniques for the DNN.

Funding Statement: The author(s) received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.
